论文阅读笔记(五十一):Understanding Deep Image Representations by Inverting Them

Abstract

Image representations, from SIFT and Bag of Visual Words to Convolutional Neural Networks (CNNs), are a crucial component of almost any image understanding system. Nevertheless, our understanding of them remains limited. In this paper we conduct a direct analysis of the visual information contained in representations by asking the following question: given an encoding of an image, to which extent is it possible to reconstruct the image itself? To answer this question we contribute a general framework to invert representations. We show that this method can invert representations such as HOG and SIFT more accurately than recent alternatives while being applicable to CNNs too. We then use this technique to study the inverse of recent state-of-the-art CNN image representations for the first time. Among our findings, we show that several layers in CNNs retain photographically accurate information about the image, with different degrees of geometric and photometric invariance.

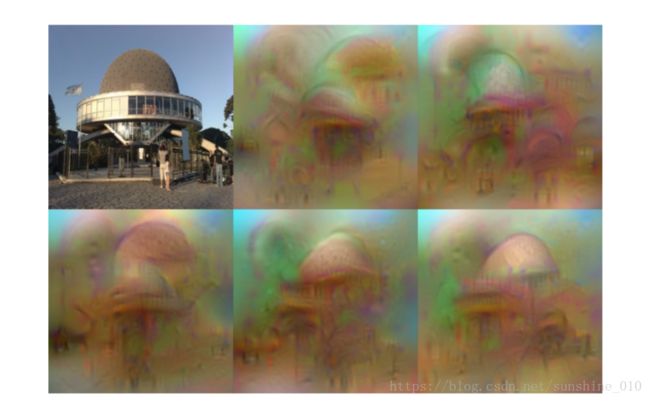

Figure 1. What is encoded by a CNN? The figure shows five possible reconstructions of the reference image obtained from the 1,000-dimensional code extracted at the penultimate layer of a reference CNN[13] (before the softmax is applied) trained on the ImageNet data. From the viewpoint of the model, all these images are practically equivalent. This image is best viewed in color/screen.

Introduction

Most image understanding and computer vision methods build on image representations such as textons [15], histogram of oriented gradients (SIFT [18] and HOG [4]), bag of visual words [3][25], sparse [35] and local coding [32], super vector coding [37], VLAD [9], Fisher Vectors [21], and, lately, deep neural networks, particularly of the convolutional variety [13, 23, 36]. However, despite the progress in the development of visual representations, their design is still driven empirically and a good understanding of their properties is lacking. While this is true of shallower handcrafted features, it is even more so for the latest generation of deep representations, where millions of parameters are learned from data.

In this paper we conduct a direct analysis of representations by characterising the image information that they retain (Fig. 1). We do so by modeling a representation as a function Φ(x) of the image x and then computing an approximated inverse φ−1, reconstructing x from the code Φ(x). A common hypothesis is that representations collapse irrelevant differences in images (e.g. illumination or viewpoint), so that Φ should not be uniquely invertible. Hence, we pose this as a reconstruction problem and find a number of possible reconstructions rather than a single one. By doing so, we obtain insights into the invariances captured by the representation.

Our contributions are as follows. First, we propose a general method to invert representations, including SIFT, HOG, and CNNs (Sect. 2). Crucially, this method uses only information from the image representation and a generic natural image prior, starting from random noise as initial solution, and hence captures only the information contained in the representation itself. We discuss and evaluate different regularization penalties as natural image priors. Second, we show that, despite its simplicity and generality, this method recovers significantly better reconstructions from DSIFT and HOG compared to recent alternatives [31]. As we do so, we emphasise a number of subtle differences between these representations and their effect on invertibility. Third, we apply the inversion technique to the analysis of recent deep CNNs, exploring their invariance by sampling possible approximate reconstructions. We relate this to the depth of the representation, showing that the CNN gradually builds an increasing amount of invariance, layer after layer. Fourth, we study the locality of the information stored in the representations by reconstructing images from selected groups of neurons, either spatially or by channel.

The rest of the paper is organised as follows. Sect. 2 introduces the inversion method, posing this as a regularised regression problem and proposing a number of image priors to aid the reconstruction. Sect. 3 introduces various representations: HOG and DSIFT as examples of shallow representations, and state-of-the-art CNNs as an example of deep representations. It also shows how HOG and DSIFT can be implemented as CNNs, simplifying the computation of their derivatives. Sect. 4 and 5 apply the inversion technique to the analysis of respectively shallow (HOG and DSIFT) and deep (CNNs) representations. Finally, Sect. 6 summarises our findings.

We use the matconvnet toolbox [30] for implementing convolutional neural networks.

Related work. There is a significant amount of work in understanding representations by means of visualisations. The works most related to ours are Weinzaepfel et al. [33] and Vondrick et al. [31] which invert sparse DSIFT and HOG features respectively. While our goal is similar to theirs, our method is substantially different from a technical viewpoint, being based on the direct solution of a regularised regression problem. The benefit is that our technique applies equally to shallow (SIFT, HOG) and deep (CNN) representations. Compared to existing inversion techniques for dense shallow representations [31], it is also shown to achieve superior results, both quantitatively and qualitatively.

An interesting conclusion of [31, 33] is that, while HOG and SIFT may not be exactly invertible, they capture a significant amount of information about the image. This is in apparent contradiction with the results of Tatu et al. [27] who show that it is possible to make any two images look nearly identical in SIFT space up to the injection of adversarial noise. A symmetric effect was demonstrated for CNNs by Szegedy et al. [26], where an imperceptible amount of adversarial noise suffices to change the predicted class of an image. The apparent inconsistency is easily resolved, however, as the methods of [26, 27] require the injection of high-pass structured noise which is very unlikely to occur in natural images.

Our work is also related to the DeConvNet method of Zeiler and Fergus [36], who backtrack the network computations to identify which image patches are responsible for certain neural activations. Simonyan et al. [24], however, demonstrated that DeConvNets can be interpreted as a sensitivity analysis of the network input/output relation. A consequence is that DeConvNets do not study the problem of representation inversion in the sense adopted here, which has significant methodological consequences; for example, DeConvNets require auxiliary information about the activations in several intermediate layers, while our inversion uses only the final image code. In other words, DeConvNets look at how certain network outputs are obtained, whereas we look for what information is preserved by the network output.

The problem of inverting representations, particularly CNN-based ones, is related to the problem of inverting neural networks, which received significant attention in the past. Algorithms similar to the back-propagation technique developed here were proposed by [14, 16, 19, 34], along with alternative optimisation strategies based on sampling. However, these methods did not use natural image priors as we do, nor were applied to the current generation of deep networks. Other works [10, 28] specialised on inverting networks in the context of dynamical systems and will not be discussed further here. Others [1] proposed to learn a second neural network to act as the inverse of the original one, but this is complicated by the fact that the inverse is usually not unique. Finally, auto-encoder architectures [8] train networks together with their inverses as a form of supervision; here we are interested instead in visualising feedforward and discriminatively-trained CNNs now popular in computer vision.

Summary

This paper proposed an optimisation method to invert shallow and deep representations based on optimizing an objective function with gradient descent. Compared to alternatives, a key difference is the use of image priors such as the V β norm that can recover the low-level image statistics removed by the representation. This tool performs better than alternative reconstruction methods for HOG. Applied to CNNs, the visualisations shed light on the information represented at each layer. In particular, it is clear that a progressively more invariant and abstract notion of the image content is formed in the network.

In the future, we shall experiment with more expressive natural image priors and analyze the effect of network hyper-parameters on the reconstructions. We shall extract subsets of neurons that encode object parts and try to establish sub-networks that capture different details of the image.

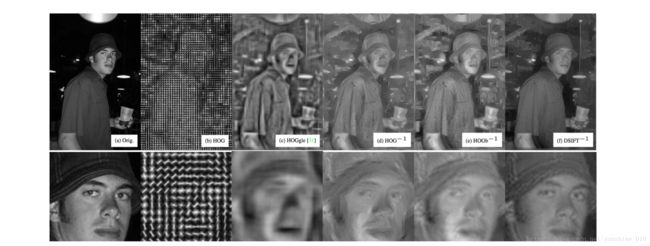

Figure 3. Reconstruction quality of different representation inversion methods, applied to HOG and DSIFT. HOGb denotes HOG with bilinear orientation assignments. This image is best viewed on screen.

Figure 6. CNN reconstruction. Reconstruction of the image of Fig. 5.a from each layer of CNN-A. To generate these results, the regularization coefficient for each layer is chosen to match the highlighted rows in table 3. This figure is best viewed in color/screen.

Figure 7. CNN invariances. Multiple reconstructions of the images of Fig. 5.c–d from different deep codes obtained from CNN-A. This figure is best seen in colour/screen.

Figure 9. CNN receptive field. Reconstructions of the image of Fig. 5.a from the central 5 × 5 neuron fields at different depths of CNN-A. The white box marks the field of view of the 5 × 5 neuron field. The field of view is the entire image for conv5 and relu5.

Figure 10. CNN neural streams. Reconstructions of the images of Fig. 5.c-b from either of the two neural streams of CNN-A. This figure is best seen in colour/screen.

Figure 11. Diversity in the CNN model. mpool5 reconstructions show that the network retains rich information even at such deep levels. This figure is best viewed in color/screen (zoom in).