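Deploying CoreDNS on a Kubernetes Cluster

Original content; please credit the source when reposting.

Author's blog: https://aronligithub.github.io/

Kubernetes v1.11 binary deployment series: table of contents

- Kubernetes v1.11 binary deployment

- (1) Environment overview

- (2) Self-signed TLS certificates with OpenSSL

- (3) Deploying the master components

- (4) Deploying the node components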

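Introduction

After the previous chapter, which covered the full CNI network deployment of Calico on Kubernetes with the self-signed CA enabled, every node in the cluster reports the Ready state. The next step is to solve domain name resolution for the pods in the cluster.

Why does this need solving?

In Kubernetes, a pod reaches another workload by resolving the Service's domain name, and the traffic is then load-balanced across the pods behind that Service. Without DNS resolution, a pod cannot look up the Service for the workload it wants to reach. Here is an example.

1. First, push a CentOS-based tool image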

[root@server81 registry]# docker push 172.16.5.181:5000/networkbox

The push refers to repository [172.16.5.181:5000/networkbox]

6793eb3b0692: Pushed

4b398ee02e06: Pushed

b91100adb338: Pushed

5f70bf18a086: Pushed

479d1ea9f888: Pushed

latest: digest: sha256:0159b2282815ecd59809dcca4d74cffd5b75a9ca5deec8a220080c254cc43ff2 size: 1987

[root@server81 registry]#

2. Create the pods and the Service

[root@server81 test_yaml]# vim networkbox.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: networkbox
  labels:
    app: networkbox
spec:
  replicas: 10
  template:
    metadata:
      labels:
        app: networkbox
    spec:
      terminationGracePeriodSeconds: 60
      containers:
      - name: networkbox
        image: 172.16.5.181:5000/networkbox
---
apiVersion: v1
kind: Service
metadata:
  name: networkbox
  labels:
    name: networkbox
spec:
  ports:
  - port: 8008
  selector:
    # must match the pod template label above, otherwise the Service has no endpoints
    app: networkbox

[root@server81 test_yaml]# vim networkbox.yaml

[root@server81 test_yaml]#

[root@server81 test_yaml]# kubectl apply -f networkbox.yaml

deployment.extensions/networkbox configured

service/networkbox unchanged

[root@server81 test_yaml]#

[root@server81 test_yaml]# kubectl get pod -o wide

NAME                          READY   STATUS        RESTARTS   AGE   IP           NODE
networkbox-85bd85cd54-7hsgp   1/1     Terminating   0          1m    10.1.0.196   172.16.5.181
networkbox-85bd85cd54-7sr5f   1/1     Terminating   0          1m    10.1.0.65    172.16.5.87
networkbox-85bd85cd54-9j8cg   1/1     Running       0          1m    10.1.0.194   172.16.5.181
networkbox-85bd85cd54-btcvz   1/1     Terminating   0          1m    10.1.0.198   172.16.5.181
networkbox-85bd85cd54-btmwm   1/1     Terminating   0          1m    10.1.0.200   172.16.5.181
networkbox-85bd85cd54-dfngz   1/1     Terminating   0          1m    10.1.0.197   172.16.5.181
networkbox-85bd85cd54-gp525   1/1     Terminating   0          1m    10.1.0.66    172.16.5.87
networkbox-85bd85cd54-llrw6   1/1     Running       0          1m    10.1.0.193   172.16.5.181
networkbox-85bd85cd54-psh4r   1/1     Running       0          1m    10.1.0.195   172.16.5.181
networkbox-85bd85cd54-zsg8m   1/1     Terminating   0          1m    10.1.0.199   172.16.5.181

[root@server81 test_yaml]#

[root@server81 test_yaml]# kubectl get svc

NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE
kubernetes   ClusterIP   10.0.6.1     <none>        443/TCP    1h
networkbox   ClusterIP   10.0.6.138   <none>        8008/TCP   1m

[root@server81 test_yaml]#

3. Enter a networkbox container and ping the Service names to see whether an IP address comes back

[root@server81 test_yaml]# kubectl get svc

NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE
kubernetes   ClusterIP   10.0.6.1     <none>        443/TCP    1h
networkbox   ClusterIP   10.0.6.138   <none>        8008/TCP   1m

[root@server81 test_yaml]#

[root@server81 test_yaml]# kubectl exec -it networkbox-85bd85cd54-9j8cg bash

[root@networkbox-85bd85cd54-9j8cg /]#

[root@networkbox-85bd85cd54-9j8cg /]# ping networkbox

ping: unknown host networkbox

[root@networkbox-85bd85cd54-9j8cg /]#

[root@networkbox-85bd85cd54-9j8cg /]# ping kubernetes

ping: unknown host kubernetes

[root@networkbox-85bd85cd54-9j8cg /]#

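As the output shows, without a Kubernetes DNS service the container cannot resolve a Service name to its IP address, so it cannot reach the workloads behind that Service. A DNS service such as CoreDNS therefore has to be installed.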
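For context, once a cluster DNS service is running, a Service is normally published under a name of the form `<service>.<namespace>.svc.<cluster-domain>`, and the short name `networkbox` works from a pod in the same namespace through the search list in its resolv.conf. The sketch below only builds that full name. The helper name `svc_fqdn` is made up for illustration, and the `default` namespace and `cluster.local` domain are assumptions that match the values used later in this post.

```bash
#!/bin/bash
# Hypothetical helper (not part of Kubernetes or CoreDNS): build the DNS name
# under which the cluster DNS publishes a Service.
svc_fqdn() {
    local service=$1
    local namespace=${2:-default}       # assumed namespace
    local domain=${3:-cluster.local}    # assumed cluster domain
    printf '%s.%s.svc.%s\n' "$service" "$namespace" "$domain"
}

svc_fqdn networkbox
svc_fqdn kube-dns kube-system cluster.local
```

Inside a pod, the printed name is what `ping` or any other client would be expected to resolve once DNS is in place.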

Deploying CoreDNS in Kubernetes

1. Check the CoreDNS official website

Visit the official CoreDNS website.

The site lists the available versions and features, and links to the CoreDNS GitHub page.

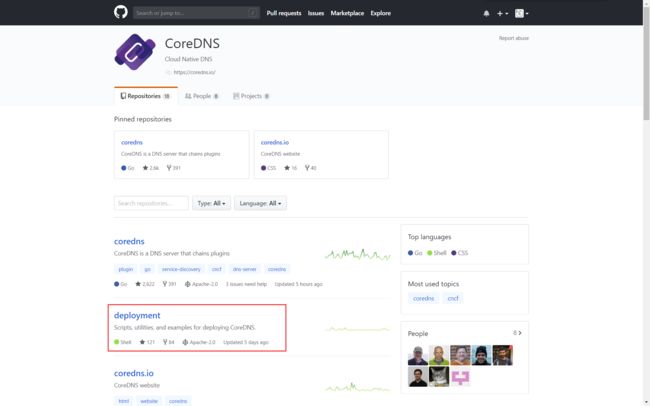

2. Check the CoreDNS GitHub

Visit the CoreDNS GitHub page.

Open the deployment repository to see how the deployment is done.

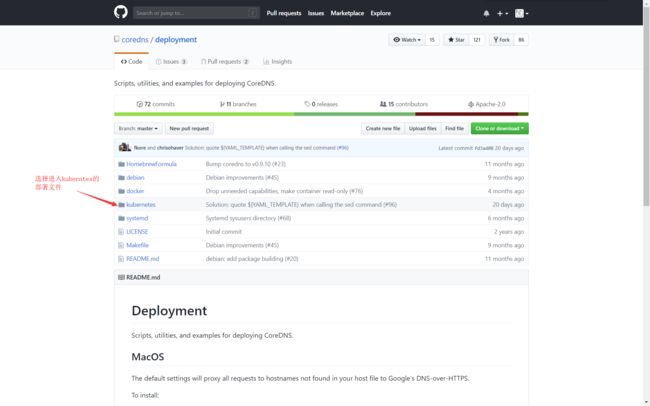

3. Open the deployment repository on GitHub

Go to the deployment page.

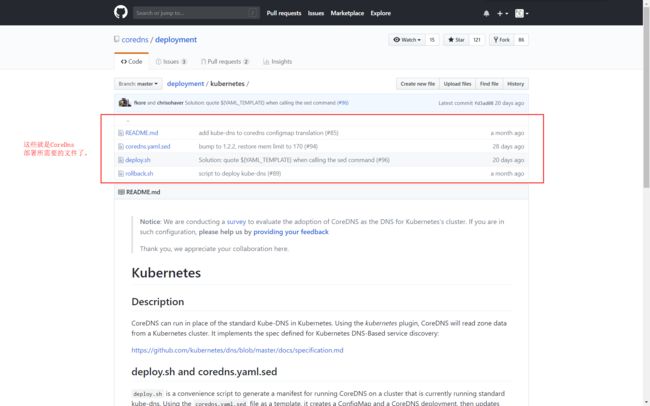

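4. Review the CoreDNS deployment scripts for Kubernetes on GitHub

Go to the page that holds the Kubernetes deployment files for CoreDNS.

The description on GitHub makes it clear that the four files listed there are what the deployment needs, and it explains how to use them. Below I download the files, modify them slightly, and then deploy.

5. Download the deployment files

Download the files from the GitHub page above to the server.

Download coredns.yaml.sed: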

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health
        kubernetes CLUSTER_DOMAIN REVERSE_CIDRS {
          pods insecure
          upstream
          fallthrough in-addr.arpa ip6.arpa
        }FEDERATIONS
        prometheus :9153
        proxy . UPSTREAMNAMESERVER
        cache 30
        loop
        reload
        loadbalance
    }STUBDOMAINS
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  replicas: 2
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      serviceAccountName: coredns
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      containers:
      - name: coredns
        image: coredns/coredns:1.2.2
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
            - key: Corefile
              path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: CLUSTER_DNS_IP
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP

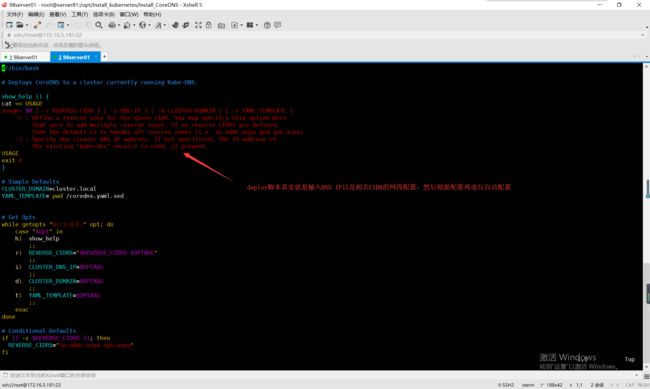

Download the deploy.sh deployment script:

#!/bin/bash

# Deploys CoreDNS to a cluster currently running Kube-DNS.

show_help () {
cat << USAGE
usage: $0 [ -r REVERSE-CIDR ] [ -i DNS-IP ] [ -d CLUSTER-DOMAIN ] [ -t YAML-TEMPLATE ] [ -k KUBECONFIG ]

    -r : Define a reverse zone for the given CIDR. You may specify this option more
         than once to add multiple reverse zones. If no reverse CIDRs are defined,
         then the default is to handle all reverse zones (i.e. in-addr.arpa and ip6.arpa)
    -i : Specify the cluster DNS IP address. If not specified, the IP address of
         the existing "kube-dns" service is used, if present.
    -s : Skips the translation of kube-dns configmap to the corresponding CoreDNS Corefile configuration.

USAGE
exit 0
}

# Simple Defaults
CLUSTER_DOMAIN=cluster.local
YAML_TEMPLATE=`pwd`/coredns.yaml.sed
STUBDOMAINS=""
UPSTREAM=\\/etc\\/resolv\.conf
FEDERATIONS=""

# Translates the kube-dns ConfigMap to equivalent CoreDNS Configuration.
function translate-kube-dns-configmap {
    kube-dns-federation-to-coredns
    kube-dns-upstreamnameserver-to-coredns
    kube-dns-stubdomains-to-coredns
}

function kube-dns-federation-to-coredns {
  fed=$(kubectl -n kube-system get configmap kube-dns -ojsonpath='{.data.federations}' 2> /dev/null | jq . | tr -d '":,')
  if [[ ! -z ${fed} ]]; then
    FEDERATIONS=$(sed -e '1s/^/federation /' -e 's/^/        /' -e '1i\\' <<< "${fed}") # add federation to the stanza
  fi
}

function kube-dns-upstreamnameserver-to-coredns {
  up=$(kubectl -n kube-system get configmap kube-dns -ojsonpath='{.data.upstreamNameservers}' 2> /dev/null | tr -d '[",]')
  if [[ ! -z ${up} ]]; then
    UPSTREAM=${up}
  fi
}

function kube-dns-stubdomains-to-coredns {
  STUBDOMAIN_TEMPLATE='
    SD_DOMAIN:53 {
      errors
      cache 30
      loop
      proxy . SD_DESTINATION
    }'

  function dequote {
    str=${1#\"} # delete leading quote
    str=${str%\"} # delete trailing quote
    echo ${str}
  }

  function parse_stub_domains() {
    sd=$1

    # get keys - each key is a domain
    sd_keys=$(echo -n $sd | jq keys[])

    # For each domain ...
    for dom in $sd_keys; do
      dst=$(echo -n $sd | jq '.['$dom'][0]') # get the destination

      dom=$(dequote $dom)
      dst=$(dequote $dst)

      sd_stanza=${STUBDOMAIN_TEMPLATE/SD_DOMAIN/$dom} # replace SD_DOMAIN
      sd_stanza=${sd_stanza/SD_DESTINATION/$dst} # replace SD_DESTINATION
      echo "$sd_stanza"
    done
  }

  sd=$(kubectl -n kube-system get configmap kube-dns -ojsonpath='{.data.stubDomains}' 2> /dev/null)
  STUBDOMAINS=$(parse_stub_domains "$sd")
}

# Get Opts
while getopts "hsr:i:d:t:k:" opt; do
    case "$opt" in
    h)  show_help
        ;;
    s)  SKIP=1
        ;;
    r)  REVERSE_CIDRS="$REVERSE_CIDRS $OPTARG"
        ;;
    i)  CLUSTER_DNS_IP=$OPTARG
        ;;
    d)  CLUSTER_DOMAIN=$OPTARG
        ;;
    t)  YAML_TEMPLATE=$OPTARG
        ;;
    k)  KUBECONFIG=$OPTARG
        ;;
    esac
done

# Set kubeconfig flag if config specified
if [[ ! -z $KUBECONFIG ]]; then
    if [[ -f $KUBECONFIG ]]; then
        KUBECONFIG="--kubeconfig $KUBECONFIG"
    else
        KUBECONFIG=""
    fi
fi

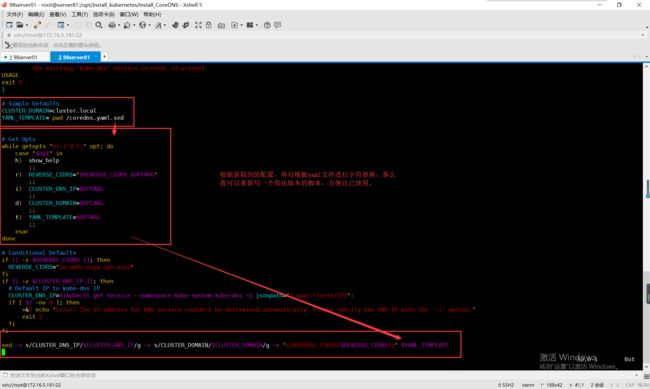

# Conditional Defaults
if [[ -z $REVERSE_CIDRS ]]; then
  REVERSE_CIDRS="in-addr.arpa ip6.arpa"
fi
if [[ -z $CLUSTER_DNS_IP ]]; then
  # Default IP to kube-dns IP
  CLUSTER_DNS_IP=$(kubectl get service --namespace kube-system kube-dns -o jsonpath="{.spec.clusterIP}" $KUBECONFIG)
  if [ $? -ne 0 ]; then
      >&2 echo "Error! The IP address for DNS service couldn't be determined automatically. Please specify the DNS-IP with the '-i' option."
      exit 2
  fi
fi

if [[ "${SKIP}" -ne 1 ]] ; then
    translate-kube-dns-configmap
fi

orig=$'\n'
replace=$'\\\n'
sed -e "s/CLUSTER_DNS_IP/$CLUSTER_DNS_IP/g" \
    -e "s/CLUSTER_DOMAIN/$CLUSTER_DOMAIN/g" \
    -e "s?REVERSE_CIDRS?$REVERSE_CIDRS?g" \
    -e "s@STUBDOMAINS@${STUBDOMAINS//$orig/$replace}@g" \
    -e "s@FEDERATIONS@${FEDERATIONS//$orig/$replace}@g" \
    -e "s/UPSTREAMNAMESERVER/$UPSTREAM/g" \
    "${YAML_TEMPLATE}"
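Reading the option parsing above, deploy.sh can render the template without contacting the cluster: `-i` supplies the DNS IP so no `kubectl` lookup is needed, and `-s` skips the kube-dns ConfigMap translation. As a hedged sketch, the helper below assembles such a command line without running it. The function name `build_deploy_cmd` is hypothetical, and it assumes deploy.sh and coredns.yaml.sed sit in the current directory.

```bash
#!/bin/bash
# Hypothetical helper: assemble a deploy.sh command line from a DNS IP,
# a cluster domain and any number of reverse CIDRs. It only prints the command.
build_deploy_cmd() {
    local dns_ip=$1 domain=$2
    shift 2
    local cmd="./deploy.sh -s -i ${dns_ip} -d ${domain}"
    local cidr
    for cidr in "$@"; do
        cmd+=" -r ${cidr}"    # -r may be repeated, one reverse zone per flag
    done
    printf '%s\n' "$cmd"
}

# Values taken from this post's cluster
build_deploy_cmd 10.0.6.200 cluster.local 10.0.6.0/24
```

Because deploy.sh writes the rendered YAML to standard output, the printed command would typically be run with its output redirected to a file such as coredns.yaml.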

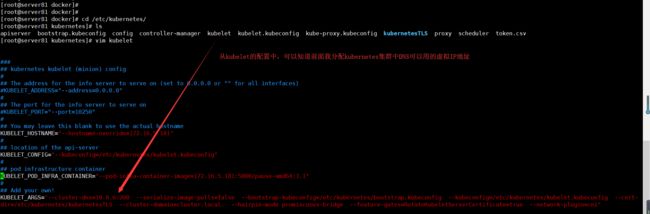

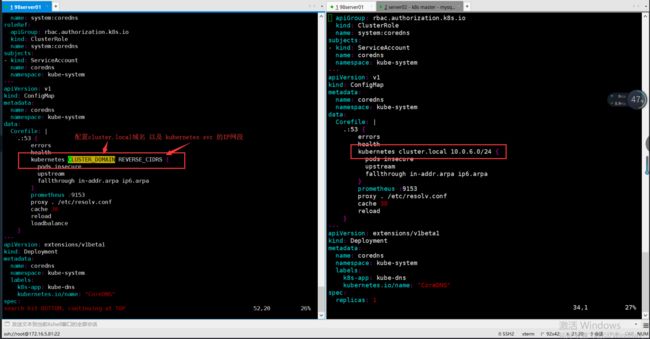

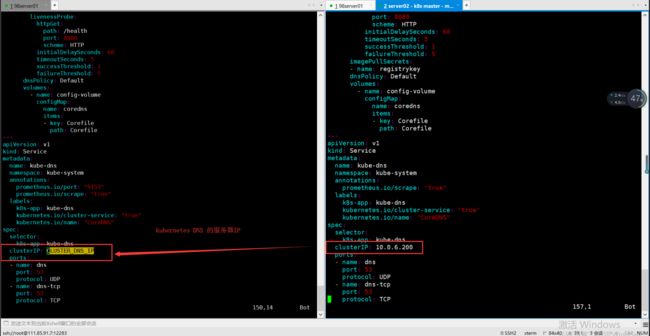

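6. Configure the files and deploy the service

First, find out which DNS IP address the cluster uses

The kubelet configuration shows that the cluster DNS IP address is 10.0.6.200.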
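The kubelet receives this address through its `--cluster-dns` flag. A minimal sketch for pulling the value out of an argument string follows; the helper name `extract_cluster_dns` and the sample arguments are made up for illustration, and where the arguments live on a given node (systemd unit, environment file, process list) is an assumption that depends on the installation.

```bash
#!/bin/bash
# Hypothetical helper: print the value of --cluster-dns found in a kubelet
# argument string. Prints nothing if the flag is absent.
extract_cluster_dns() {
    printf '%s\n' "$1" | sed -n 's/.*--cluster-dns=\([^ "]*\).*/\1/p'
}

# Sample arguments, made up for this sketch; the IP matches the one used in this post.
sample_args='--cluster-dns=10.0.6.200 --cluster-domain=cluster.local'
extract_cluster_dns "$sample_args"
```

On a real node the input could come from, for example, the kubelet process arguments or its service file.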

Read the deploy script to confirm what it does

In summary, the following substitutions need to be configured:

sed

-e s/CLUSTER_DNS_IP/$CLUSTER_DNS_IP/g

-e s/CLUSTER_DOMAIN/$CLUSTER_DOMAIN/g

-e "s?REVERSE_CIDRS?$REVERSE_CIDRS?g"

$YAML_TEMPLATE

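Here REVERSE_CIDRS names the reverse-lookup zones that CoreDNS serves for the cluster; it is not an upstream address. If it is not set, deploy.sh defaults it to: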

in-addr.arpa ip6.arpa
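The third expression uses `?` instead of `/` as the sed delimiter because a CIDR such as 10.0.6.0/24 contains a slash, which would otherwise end the `s/.../.../` expression early. A small sketch on a single template line shows the effect; the helper name `render_line` is made up, and the values are the ones used in this post.

```bash
#!/bin/bash
CLUSTER_DOMAIN="cluster.local"
REVERSE_CIDRS="10.0.6.0/24"

# Hypothetical helper: apply the two substitutions to one line of text.
render_line() {
    printf '%s\n' "$1" | sed -e "s/CLUSTER_DOMAIN/$CLUSTER_DOMAIN/g" \
                             -e "s?REVERSE_CIDRS?$REVERSE_CIDRS?g"
}

# The line below is copied from the Corefile section of coredns.yaml.sed
render_line 'kubernetes CLUSTER_DOMAIN REVERSE_CIDRS {'
```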

Locate these placeholders in the template YAML file

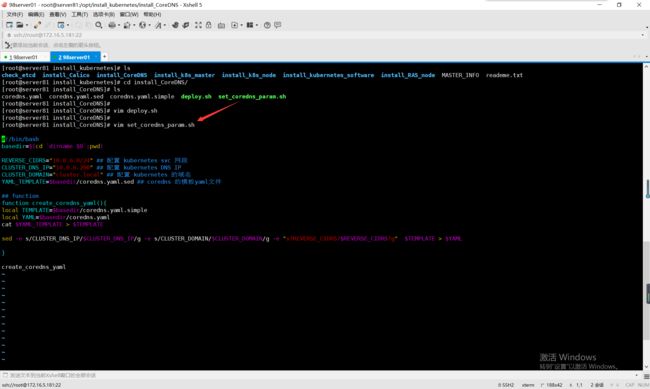

Write a custom configuration script

The code is as follows:

[root@server81 install_CoreDNS]# vim set_coredns_param.sh

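#!/bin/bash

basedir=$(cd "$(dirname "$0")" && pwd)

REVERSE_CIDRS="10.0.6.0/24" ## Kubernetes Service CIDR, used as the reverse-lookup zone
CLUSTER_DNS_IP="10.0.6.200" ## Kubernetes DNS IP
CLUSTER_DOMAIN="cluster.local" ## Kubernetes cluster domain
UPSTREAM="/etc/resolv.conf" ## upstream resolver, the same default as deploy.sh
YAML_TEMPLATE=$basedir/coredns.yaml.sed ## CoreDNS template YAML file

## function
function create_coredns_yaml(){
  local TEMPLATE=$basedir/coredns.yaml.simple
  local YAML=$basedir/coredns.yaml

  cat "$YAML_TEMPLATE" > "$TEMPLATE"
  ## STUBDOMAINS and FEDERATIONS are replaced with nothing, as deploy.sh does when
  ## neither is configured; left in place they would corrupt the Corefile
  sed -e "s/CLUSTER_DNS_IP/$CLUSTER_DNS_IP/g" \
      -e "s/CLUSTER_DOMAIN/$CLUSTER_DOMAIN/g" \
      -e "s?REVERSE_CIDRS?$REVERSE_CIDRS?g" \
      -e "s@STUBDOMAINS@@g" \
      -e "s@FEDERATIONS@@g" \
      -e "s@UPSTREAMNAMESERVER@$UPSTREAM@g" \
      "$TEMPLATE" > "$YAML"
}

create_coredns_yaml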
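Since the template contains several placeholders, it can be worth checking that none of them survive in the generated file before applying it. The sketch below is one way to do that; the function name `find_unreplaced_placeholders` is hypothetical, and the sample file is made up so the block runs on its own.

```bash
#!/bin/bash
# Hypothetical check: print (with line numbers) any template placeholder that
# is still present in a rendered file. Returns 0 if at least one is found.
find_unreplaced_placeholders() {
    grep -n -E 'CLUSTER_DNS_IP|CLUSTER_DOMAIN|REVERSE_CIDRS|STUBDOMAINS|FEDERATIONS|UPSTREAMNAMESERVER' "$1"
}

# Made-up sample standing in for a partly rendered coredns.yaml
sample=$(mktemp)
printf '%s\n' 'kubernetes cluster.local 10.0.6.0/24 {' 'proxy . UPSTREAMNAMESERVER' > "$sample"

if find_unreplaced_placeholders "$sample"; then
    echo "placeholders remain; fix the substitutions before applying" >&2
fi
rm -f "$sample"
```

Against the real output the call would be `find_unreplaced_placeholders coredns.yaml`, which should print nothing when every placeholder has been substituted.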

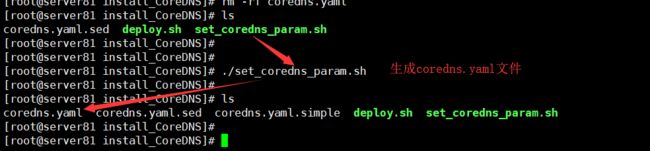

Run the configuration script to generate the coredns.yaml deployment file

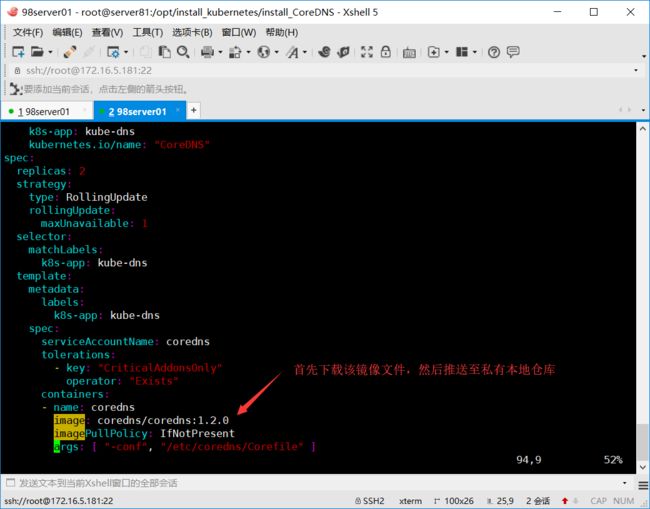

Pull the image and push it to the local private registry

image: coredns/coredns:1.2.0

The steps for pushing an image to the registry are not covered here.

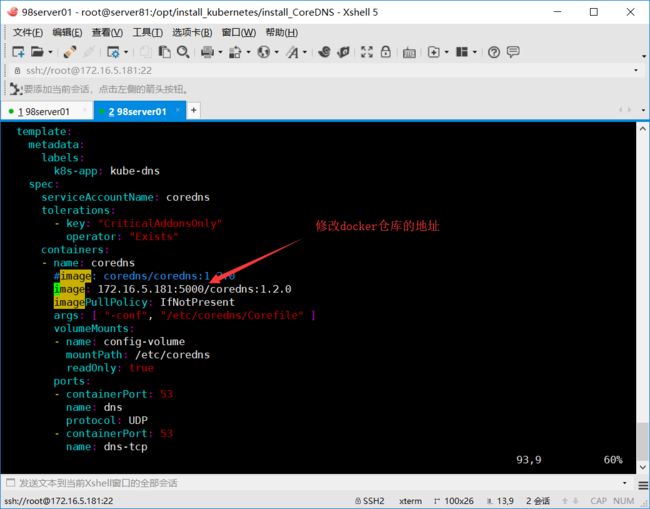
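For readers who want a reminder, mirroring an image usually comes down to `docker pull`, `docker tag` and `docker push`. The sketch below only prints those commands rather than running them. The helper name `print_mirror_commands` is made up, and the target path `172.16.5.181:5000/coredns/coredns:1.2.0` is an assumption; the repository path actually used in the screenshots may differ.

```bash
#!/bin/bash
REGISTRY="172.16.5.181:5000"      # private registry used earlier in this post
IMAGE="coredns/coredns:1.2.0"     # image named above

# Hypothetical helper: print the commands instead of executing them, so the
# sketch has no side effects.
print_mirror_commands() {
    local registry=$1 image=$2
    printf 'docker pull %s\n' "$image"
    printf 'docker tag %s %s/%s\n' "$image" "$registry" "$image"
    printf 'docker push %s/%s\n' "$registry" "$image"
}

print_mirror_commands "$REGISTRY" "$IMAGE"
```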

Update the image address in coredns.yaml

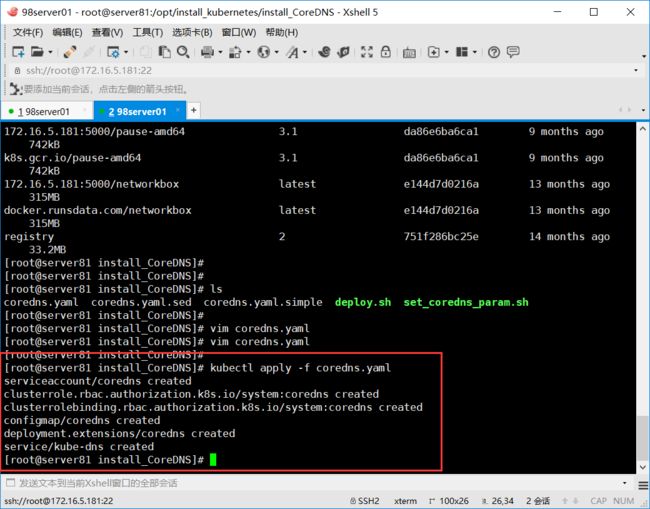
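The edit can also be scripted. As a sketch, the filter below prefixes the `coredns/coredns` image reference with a registry address and leaves the tag untouched; the helper name `rewrite_image` is hypothetical, and the registry path is the same assumption as in the mirroring step.

```bash
#!/bin/bash
# Hypothetical filter: read YAML on stdin and point the CoreDNS image at a
# private registry. Lines that already carry a registry prefix do not match,
# so running it twice changes nothing further.
rewrite_image() {
    local registry=$1
    sed -e "s#image: coredns/coredns:#image: ${registry}/coredns/coredns:#"
}

printf '        image: coredns/coredns:1.2.2\n' | rewrite_image "172.16.5.181:5000"
```

Applied to the generated file, this would look like `rewrite_image 172.16.5.181:5000 < coredns.yaml > coredns.local.yaml`, where the output file name is again only an example.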

Run the deployment

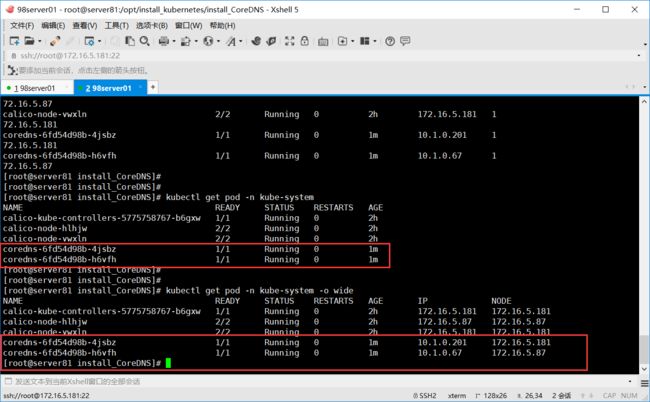

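Check that the service is running

The service is running normally, so the next step is to enter a container and ping the domain names to confirm that resolution works.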

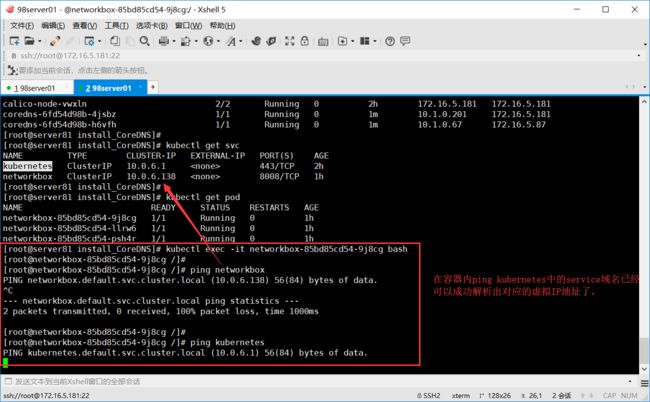

Enter the container again and ping the domain names to confirm that resolution succeeds
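Before pinging, one quick thing to look at inside the pod is whether its /etc/resolv.conf names the cluster DNS IP, since the kubelet writes that address there for pods using the default DNS policy. The sketch below checks a resolv.conf-style file; the helper name `has_nameserver` is made up, and the sample file contents are illustrative rather than copied from the author's cluster.

```bash
#!/bin/bash
# Hypothetical helper: succeed if the given resolv.conf-style file contains a
# "nameserver <ip>" line for the given IP.
has_nameserver() {
    awk -v ip="$2" '$1 == "nameserver" && $2 == ip { found = 1 } END { exit !found }' "$1"
}

# Illustrative sample of what a pod's resolv.conf might contain
sample=$(mktemp)
printf '%s\n' 'nameserver 10.0.6.200' \
              'search default.svc.cluster.local svc.cluster.local cluster.local' \
              'options ndots:5' > "$sample"

if has_nameserver "$sample" 10.0.6.200; then
    echo "cluster DNS is configured"
fi
rm -f "$sample"
```

Inside the networkbox container the call would be `has_nameserver /etc/resolv.conf 10.0.6.200`, followed by the same `ping networkbox` and `ping kubernetes` commands used in step 3.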

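An open question

After the deployment steps above, DNS resolution inside the Kubernetes virtual cluster network works. The physical node machines outside that network also need DNS resolution, though, so how should that be managed?

In a follow-up chapter I will cover deploying dnsmasq, which makes it easier to manage DNS for the Kubernetes cluster and for multiple physical servers in one place.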
