Logstash-input-jdbc 同步mysql 数据至ElasticSearch

- 笔者环境: Logstash 6.6.2 ,Centos6.9, Mysql 8.0, ElasticSearch 6.6.2 ,三个节点

一、安装 logstash-input-jdbc

- 1、下载Logstash 上传并解压,笔者已经安装,见如下链接 Logstash Centos上部署与入门测试

注:logstash-input-jdbc插件是logstash 的一个个插件,因此需要先安装Logstash。

- 2、笔者没有安装gem,故先安装gem

yum install gem

在安装gem里还遇到了一点小问题,详见下文;

- 3、安装logstash-input-jdbc (特别幸运,没有修改镜像几秒钟便安装成功)

[root@node bin]# ./logstash-plugin install logstash-input-jdbc

Validating logstash-input-jdbc

Installing logstash-input-jdbc

Installation successful

二、实现 Mysql 数据的增量同步至ElasticSearch

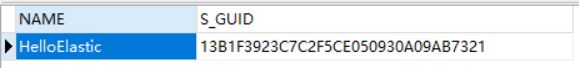

mysql 表如下,其中S_GUID 为表主键,该表只有一条数据

写配置文件

- 1、my-jdbc.conf

[root@node logstash-6.6.2]# more my-jdbc.conf

input {

stdin {

}

jdbc {

# mysql jdbc connection string to our backup databse

jdbc_connection_string => "jdbc:mysql://10.xx.xx.x/test?useSSL=false"

jdbc_user => "root"

jdbc_password => "root-123"

jdbc_driver_library => "/root/mysql-connector-java-8.0.11.jar"

jdbc_driver_class => "com.mysql.cj.jdbc.Driver"

jdbc_paging_enabled => "true"

jdbc_page_size => "50000"

statement_filepath => "/usr/logstash-6.6.2/my-jdbc.sql"

schedule => "* * * * *"

}

}

filter {

json {

source => "message"

remove_field => ["message"]

}

}

output {

elasticsearch {

hosts => ["es01:9200"]

index => "mysql02"

document_id => "%{s_guid}"

document_type => "mysql_test"

}

stdout {

codec => json_lines

}

}

注:

1、index 只支持小写;

2、document_id 也要小写;

3、es 6.x 一个索引下只支持一个类型。

- 2、待执行的sql文件

[root@node logstash-6.6.2]# more my-jdbc.sql

select * from TEST_ES

- 3、启动命令

[root@node logstash-6.6.2]# ./bin/logstash -f my-jdbc.conf

输出日志:

[root@node logstash-6.6.2]# ./bin/logstash -f my-jdbc.conf

Sending Logstash logs to /usr/logstash-6.6.2/logs which is now configured via log4j2.properties

[2019-03-22T18:31:15,463][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2019-03-22T18:31:15,500][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.6.2"}

[2019-03-22T18:31:28,390][WARN ][logstash.outputs.elasticsearch] You are using a deprecated config setting "document_type" set in elasticsearch. Deprecated settings will continue to work, but are scheduled for removal from logstash in the future. Document types are being deprecated in Elasticsearch 6.0, and removed entirely in 7.0. You should avoid this feature If you have any questions about this, please visit the #logstash channel on freenode irc. {:name=>"document_type", :plugin=>"mysql02", id=>"2b2108c2afc5e536fc03529a453a8c0bd625c8113a8ca6b8a167e81fa9642788", document_id=>"%{s_guid}", hosts=>[//bigdata:9200], document_type=>"mysql_test", enable_metric=>true, codec=>"plain_0c81a8f7-3d02-4df6-a200-800738eb8cdf", enable_metric=>true, charset=>"UTF-8">, workers=>1, manage_template=>true, template_name=>"logstash", template_overwrite=>false, doc_as_upsert=>false, script_type=>"inline", script_lang=>"painless", script_var_name=>"event", scripted_upsert=>false, retry_initial_interval=>2, retry_max_interval=>64, retry_on_conflict=>1, ilm_enabled=>false, ilm_rollover_alias=>"logstash", ilm_pattern=>"{now/d}-000001", ilm_policy=>"logstash-policy", action=>"index", ssl_certificate_verification=>true, sniffing=>false, sniffing_delay=>5, timeout=>60, pool_max=>1000, pool_max_per_route=>100, resurrect_delay=>5, validate_after_inactivity=>10000, http_compression=>false>}

[2019-03-22T18:31:28,530][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>32, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[2019-03-22T18:31:29,364][INFO ][logstash.outputs.elasticsearch] Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://bigdata:9200/]}}

[2019-03-22T18:31:29,604][WARN ][logstash.outputs.elasticsearch] Restored connection to ES instance {:url=>"http://bigdata:9200/"}

[2019-03-22T18:31:29,721][INFO ][logstash.outputs.elasticsearch] ES Output version determined {:es_version=>6}

[2019-03-22T18:31:29,725][WARN ][logstash.outputs.elasticsearch] Detected a 6.x and above cluster: the `type` event field won't be used to determine the document _type {:es_version=>6}

[2019-03-22T18:31:29,758][INFO ][logstash.outputs.elasticsearch] New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["//bigdata:9200"]}

[2019-03-22T18:31:29,768][INFO ][logstash.outputs.elasticsearch] Using mapping template from {:path=>nil}

[2019-03-22T18:31:29,825][INFO ][logstash.outputs.elasticsearch] Attempting to install template {:manage_template=>{"template"=>"logstash-*", "version"=>60001, "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default_"=>{"dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date"}, "@version"=>{"type"=>"keyword"}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}}

[2019-03-22T18:31:31,191][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#"}

The stdin plugin is now waiting for input:

[2019-03-22T18:31:31,262][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2019-03-22T18:31:32,151][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'. The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary.

[2019-03-22T18:32:03,266][INFO ][logstash.inputs.jdbc ] (0.159731s) SELECT version()

[2019-03-22T18:32:03,313][INFO ][logstash.inputs.jdbc ] (0.000779s) SELECT version()

[2019-03-22T18:32:03,459][INFO ][logstash.inputs.jdbc ] (0.000869s) SELECT count(*) AS `count` FROM (select * from TEST_ES

) AS `t1` LIMIT 1

[2019-03-22T18:32:03,504][INFO ][logstash.inputs.jdbc ] (0.000881s) SELECT * FROM (select * from TEST_ES

) AS `t1` LIMIT 50000 OFFSET 0

{"name":"HelloElastic","s_guid":"13B1F3923C7C2F5CE050930A09AB7321","@timestamp":"2019-03-22T10:32:03.530Z","@version":"1"}

[2019-03-22T18:33:00,308][INFO ][logstash.inputs.jdbc ] (0.000389s) SELECT version()

[2019-03-22T18:33:00,313][INFO ][logstash.inputs.jdbc ] (0.000302s) SELECT version()

[2019-03-22T18:33:00,324][INFO ][logstash.inputs.jdbc ] (0.000551s) SELECT count(*) AS `count` FROM (select * from TEST_ES

) AS `t1` LIMIT 1

往表添加一条数据后,日志如下

[2019-03-22T18:38:00,247][INFO ][logstash.inputs.jdbc ] (0.000397s) SELECT * FROM (select * from TEST_ES

) AS `t1` LIMIT 50000 OFFSET 0

{"name":"HelloJava","s_guid":"123141","@timestamp":"2019-03-22T10:38:00.248Z","@version":"1"}

{"name":"HelloElastic","s_guid":"13B1F3923C7C2F5CE050930A09AB7321","@timestamp":"2019-03-22T10:38:00.248Z","@version":"1"}

添加新数据前如下

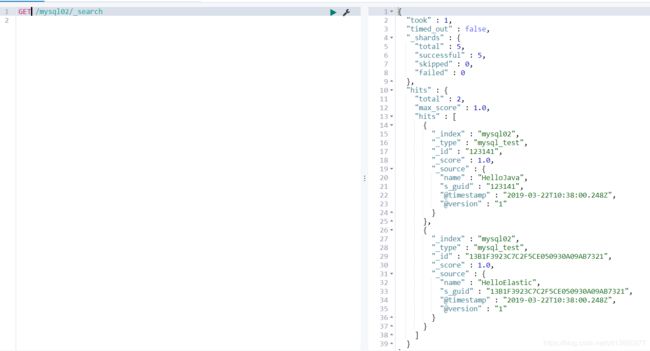

添加后如下:

可见数据已添加至ES;

删除mysql一条数据

这时ES中的数据并不会发生删除该条数据的操作,这个需要配置额外.conf配置文件

配置规则详见官网: logstash-input-jdbc官方参考文档

小结:

-

修改已存在的数据,不改主键,修改后的数据会被更新至ES(覆盖原先的数据)

-

执行过程:input 设置了分页,其首先是计算了所查询表的数据量,然后再按分页的形式一批批的加载进ES。从Kibana可知其加载过程是加载一批则写一批到ES中。

-

为了方便测试,目前执行过程在配置文件设置成每分钟执行一次,在logstash执行的过程中,如果对所监听的表进行数据添加,可自行加载到ES中。

-

当删除数据时,需要额外设置,目前没用上该功能后续再瞧瞧了。

三、数据存储所占磁盘空间对比

-

EX_GOOD_01_GRDJ 个人登记信息 ,数据量:3549969,41个字段

-

16:15 开始抽取,每批5万数据,数据以分页每次5万的方式提交到ES中,用时接近30分钟。即每秒插入约2000条数据。若同时启动两台logstash(默认配置),用时大概15分钟。

-

es 采用默认配置,三个数据节点,每台机子内存64G。

对比数据存储于mysql 与 ES、HBase 对应的空间大小

保存一个副本,总共在ES 中占空间 6.7 G

在mysql 中占空间

-rw-r-----. 1 mysql mysql 2.5G Feb 20 05:24 EX_GOOD_01_GRDJ.ibd

直接查询如下:

select concat(round(sum(DATA_LENGTH/1024/1024/1024),2),'GB')as data

from information_schema.TABLES where table_schema='test'

AND TABLE_NAME='EX_GOOD_01_GRDJ';

结果为:1.89GB

在Hive中所占空间为:2G。

在Hbase 所占空间(这个还是没有包含副本信息所占的空间):

19.5 G /apps/hbase/data/data/default/EX_GOOD_01_GRDJ

四、同步数据相关的优化

1、部署多个logstash 同时执行同步任务

2、修改ES jvm 大小,官方建议修改成所占机器的50%(前提若该机器只为ElasticSearch服务)

3、修改ElasticSearch 数据默认的刷新时间。

五、同步数据遇到的问题

1、安装时 gem 出现如下异常

[root@node yum.repos.d]# yum install gem

Loaded plugins: fastestmirror, refresh-packagekit, security

Setting up Install Process

Loading mirror speeds from cached hostfile

No package gem available.

Error: Nothing to do

解决

[root@node yum.repos.d]# yum search gem

rubygems.noarch : The Ruby standard for packaging ruby libraries

故直接运行如下命令进行安装

yum install rubygems.noarch

参考文档:yum安装出现No package vim available解决办法

2、修改镜像出现错误,则直接安装竟然成功了

[root@node yum.repos.d]# gem sources --add https://gems.ruby-china.com/ --remove https://rubygems.org/

Error fetching https://gems.ruby-china.com/:

hostname was not match with the server certificate (https://gems.ruby-china.com/specs.4.8.gz)

source https://rubygems.org/ not present in cache

[root@node yum.repos.d]# gem -v

1.3.7

[root@node yum.repos.d]# gem update --system

Updating RubyGems

Updating rubygems-update

ERROR: Error installing rubygems-update:

rubygems-update requires Ruby version >= 2.3.0.

ERROR: While executing gem ... (NoMethodError)

undefined method `version' for nil:NilClass

3、数据无论如何都不能将整张表的加载进入ElasticSearch,每次只加载一条数据,但又没有异常日志输出

实际情况:mysql 表中的主键 s_guid 为大写的。

原因:.conf 配置文件中document_id => "%{s_guid}" ,该id配置成大写

解决将其配置成小写便可。

参考文档:

mysql 与elasticsearch实时同步常用插件及优缺点对比

ElasticSearch5+logstash的logstash-input-jdbc实现mysql数据同步

利用logstash的logstash-input-jdbc插件实现mysql增量导入ES的介绍

Elasticsearch 用logstash6.1.1导入数据库报错

Java连接mysql中遇到的一些问题及解决方法