TensorFlow: CIFAR-10 Classification with a Simple Convolutional Neural Network

1. Environment

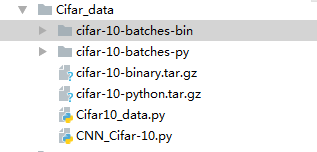

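My machine: Windows 7, Python 3.6, Intel i5. On drive E I created a folder Cifar_data containing cifar-10-batches-bin (the dataset obtained by extracting cifar-10-binary.tar.gz) and Cifar10_data.py, which reads the data and applies data-augmentation preprocessing.

CNN_Cifar-10.py builds the overall structure of the convolutional neural network and runs training and testing.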

2. Dataset

CIFAR-10 website link

The site offers three versions of the dataset:

CIFAR-10 python version

CIFAR-10 Matlab version

CIFAR-10 binary version (suitable for C programs)

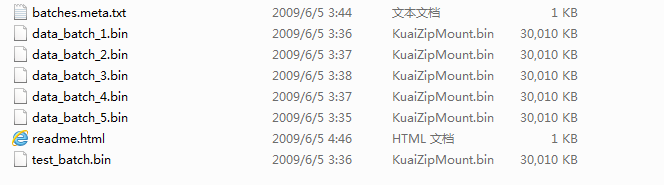

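This post uses the third (binary) version. After extraction it contains the following:

The dataset contains 60,000 32x32 colour images: 50,000 training images and 10,000 test images. test_batch.bin stores the 10,000 test images, and each of the other five .bin files stores 10,000 training images. CIFAR-10 has 10 classes that do not overlap, and batches.meta.txt holds the names of the 10 labels as strings.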
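Because every record has the same length, the size of each .bin file follows from the numbers above: 10,000 records × (1 label byte + 32×32×3 image bytes) = 10,000 × 3073 = 30,730,000 bytes. A minimal sketch that checks an extracted folder against this, assuming the file names data_batch_1.bin to data_batch_5.bin plus test_batch.bin; the helper check_cifar10_dir is hypothetical and only compares sizes, not contents.

```python
import os

RECORD_BYTES = 1 + 32 * 32 * 3   # 1 label byte + 3072 image bytes = 3073
RECORDS_PER_FILE = 10000
EXPECTED_FILE_SIZE = RECORD_BYTES * RECORDS_PER_FILE  # 30,730,000 bytes

# Binary files expected inside cifar-10-batches-bin (assumed names)
BIN_FILES = ["data_batch_%d.bin" % i for i in range(1, 6)] + ["test_batch.bin"]


def check_cifar10_dir(data_dir):
    """Hypothetical helper: return a list of problems; empty means all sizes match."""
    problems = []
    for name in BIN_FILES:
        path = os.path.join(data_dir, name)
        if not os.path.isfile(path):
            problems.append("missing: %s" % name)
        elif os.path.getsize(path) != EXPECTED_FILE_SIZE:
            problems.append("unexpected size: %s (%d bytes)" % (name, os.path.getsize(path)))
    return problems


if __name__ == "__main__":
    print(check_cifar10_dir("cifar-10-batches-bin") or "all six .bin files have the expected size")
```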

3. Code

Cifar10_data.py

```python
"""Reads the CIFAR-10 data and applies data-augmentation preprocessing."""
import os

import tensorflow as tf

num_classes = 10

# Total number of examples used for training and evaluation
num_examples_pre_epoch_for_train = 50000
num_examples_pre_epoch_for_eval = 10000


# An empty class used to return the data that was read
class CIFAR10Record(object):
    pass


# Read one CIFAR-10 record from the file queue
def read_cifar10(file_queue):
    result = CIFAR10Record()

    label_bytes = 1  # would be 2 for the CIFAR-100 dataset
    result.height = 32
    result.width = 32
    result.depth = 3  # three RGB channels

    image_bytes = result.height * result.width * result.depth  # = 3072

    # Each example is one label followed by the image data: record_bytes = 3073
    record_bytes = label_bytes + image_bytes

    # Create a reader and call its read() method to read from the file queue.
    # FixedLengthRecordReader reads records of a fixed number of bytes,
    # which suits .bin files like these.
    # Constructor (simplified): __init__(self, record_bytes, header_bytes=None, footer_bytes=None, name=None)
    reader = tf.FixedLengthRecordReader(record_bytes=record_bytes)
    result.key, value = reader.read(file_queue)

    # value is a string of record_bytes bytes holding one label and one image;
    # decode_raw() converts it into a uint8 array (label byte + pixel values)
    record_bytes = tf.decode_raw(value, tf.uint8)

    # Take the first element (the label) and cast it to int32;
    # strided_slice() extracts the elements in the half-open range [begin, end)
    result.label = tf.cast(tf.strided_slice(record_bytes, [0], [label_bytes]), tf.int32)

    # The rest is the image; reshape it from [depth*height*width] to [depth, height, width]
    depth_major = tf.reshape(tf.strided_slice(record_bytes, [label_bytes],
                                              [label_bytes + image_bytes]),
                             [result.depth, result.height, result.width])

    # Convert [depth, height, width] to [height, width, depth]
    result.uint8image = tf.transpose(depth_major, [1, 2, 0])

    return result
```
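The byte layout that read_cifar10() decodes can be reproduced without TensorFlow. A minimal sketch using only the standard library (parse_record and iter_records are hypothetical names): it takes the first byte as the label, treats the remaining 3072 bytes as three 32×32 colour planes (the [depth, height, width] layout), and indexes them as [height][width][channel], which is the reordering tf.transpose(depth_major, [1, 2, 0]) performs.

```python
LABEL_BYTES = 1                         # CIFAR-10 uses a single label byte
HEIGHT, WIDTH, DEPTH = 32, 32, 3
IMAGE_BYTES = HEIGHT * WIDTH * DEPTH    # 3072
RECORD_BYTES = LABEL_BYTES + IMAGE_BYTES  # 3073


def parse_record(record):
    """Return (label, image) where image[h][w] is an (R, G, B) tuple."""
    if len(record) != RECORD_BYTES:
        raise ValueError("expected %d bytes, got %d" % (RECORD_BYTES, len(record)))
    label = record[0]
    pixels = record[LABEL_BYTES:]
    plane = HEIGHT * WIDTH  # bytes per colour plane
    # Stored channel-major: all R values, then all G values, then all B values
    image = [[tuple(pixels[c * plane + h * WIDTH + w] for c in range(DEPTH))
              for w in range(WIDTH)]
             for h in range(HEIGHT)]
    return label, image


def iter_records(path):
    """Yield (label, image) for every fixed-length record in a .bin file."""
    with open(path, "rb") as f:
        while True:
            chunk = f.read(RECORD_BYTES)
            if len(chunk) < RECORD_BYTES:
                break
            yield parse_record(chunk)
```

For example, `next(iter_records("cifar-10-batches-bin/test_batch.bin"))` would return the label and pixels of the first test image, assuming that path.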

"""

定义input()函数,该函数传入的data_dir参数就是存放原始Cifar_10数据的目录,

函数通过join()函数拼接文件完整的路径,

"""

def inputs(data_dir,batch_size,distorted):

# 使用os的join()函数拼接路径

filenames=[os.path.join(data_dir,"data_batch_%d.bin"%i)for i in range(1,6)]

#创建一个文件队列,并调用read_cifar()函数读取队列中的文件

file_queue=tf.train.string_input_producer(filenames)

read_input=read_cifar10(file_queue)

# 使用cast()函数将图片数据转换为float32格式

reshaped_image=tf.cast(read_input.uint8image,tf.float32)

num_examples_per_epoch=num_examples_pre_epoch_for_train

# 对图像数据进行数据增强处理

if distorted !=None:

# 将[32,32,3]大小的图片随机裁剪成[24,24,3]大小

cropped_image=tf.random_crop(reshaped_image,[24,24,3])

# 随机左右翻转图片

flipped_image=tf.image.random_flip_left_right(cropped_image)

# 调整图片亮度

adjusted_brightness=tf.image.random_brightness(flipped_image,max_delta=0.8)

#调整对比度

adjusted_contrast=tf.image.random_contrast(adjusted_brightness,lower=0.2,upper=1.8)

#标准化图片,注意不是归一化

#该函数是对每一个像素减去平均值并除以像素方差

float_image=tf.image.per_image_standardization(adjusted_contrast)

#设置图片数据及label的形状

float_image.set_shape([24,24,3])

read_input.label.set_shape([1])

min_queue_examples=int(num_examples_pre_epoch_for_eval*0.4)

print('filling queue with %d CIFAR images before starting to train.''this will take a few minutes. %d min_queue_examples')

# 使用shuffle_batch()函数随机产生一个batch的image和label

images_train,labels_train=tf.train.shuffle_batch([float_image,read_input.label],

batch_size=batch_size,num_threads=16,

capacity=min_queue_examples+3*batch_size,

min_after_dequeue=min_queue_examples)

return images_train,tf.reshape(labels_train,[batch_size])

#不对图像数据进行数据增强处理

else:

resized_image=tf.image.resize_image_with_crop_or_pad(reshaped_image,24,24)

#没有图像的其他处理过程,直接标准化

float_image=tf.image.per_image_standardization(resized_image)

#设置图片数据及label的形状

float_image.set_shape([24,24,3])

read_input.label.set_shape([1])

min_queue_examples=int(num_examples_per_epoch*0.4)

# 使用batch()函数创建样例的batch,该过程使用最多的是shuffle_batch()函数,但这里用batch()函数代替

images_test,labels_test=tf.train.batch([float_image,read_input.label],

batch_size=batch_size,num_threads=16,

capacity=min_queue_examples+3*batch_size)

return images_test,tf.reshape(labels_test,[batch_size])
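Per the TensorFlow documentation, tf.image.per_image_standardization computes (x - mean) / adjusted_stddev over all values of one image, where adjusted_stddev = max(stddev, 1/sqrt(N)) and N is the number of values; the lower bound protects against division by zero for uniform images. A pure-Python sketch of that formula on a flat list of pixel values (the function name standardize is hypothetical):

```python
import math


def standardize(pixels):
    """Mirror per_image_standardization on a flat list of values from one image."""
    n = len(pixels)
    mean = sum(pixels) / n
    # Population standard deviation (divides by N, not N - 1)
    stddev = math.sqrt(sum((p - mean) ** 2 for p in pixels) / n)
    # Lower bound keeps the division safe for uniform images
    adjusted_stddev = max(stddev, 1.0 / math.sqrt(n))
    return [(p - mean) / adjusted_stddev for p in pixels]


if __name__ == "__main__":
    print(standardize([1.0, 2.0, 3.0, 4.0]))
```

This is why the comment distinguishes standardization from normalization: the output has mean 0 and, unless the image is uniform, variance 1, rather than lying in [0, 1].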

CNN_Cifar-10.py

```python
import tensorflow as tf
import numpy as np
import time
import math
import Cifar_data.Cifar10_data as Cifar10_data

max_steps = 4000
batch_size = 100
num_examples_for_eval = 1000
data_dir = "cifar-10-batches-bin"


# Create a weight variable; if wl is not None, add its L2 loss scaled by wl
# to the "losses" collection
def variable_with_weight_loss(shape, stddev, wl):
    var = tf.Variable(tf.truncated_normal(shape, stddev=stddev))
    if wl is not None:
        weights_loss = tf.multiply(tf.nn.l2_loss(var), wl, name="weights_loss")
        tf.add_to_collection("losses", weights_loss)
    return var
```
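tf.nn.l2_loss(t) returns sum(t ** 2) / 2, so each call to variable_with_weight_loss() with a non-None wl contributes wl * sum(w ** 2) / 2, and tf.add_n() later sums those terms. A plain-Python sketch of that arithmetic on toy weights (l2_loss and weight_decay_term are hypothetical names):

```python
def l2_loss(values):
    """Same quantity as tf.nn.l2_loss: half the sum of squares."""
    return sum(v * v for v in values) / 2.0


def weight_decay_term(weights_and_wl):
    """Sum wl * l2_loss(w) over (weights, wl) pairs, skipping wl=None like the original."""
    total = 0.0
    for weights, wl in weights_and_wl:
        if wl is not None:
            total += wl * l2_loss(weights)
    return total


if __name__ == "__main__":
    # Toy weights; wl=0.0 contributes nothing, as for the conv layers in the network
    print(weight_decay_term([([1.0, 2.0, 3.0], 0.004), ([10.0], 0.0)]))
```

In the network, wl=0.0 for both convolutional layers and the last fully connected layer, so only weight1 and weight2 (wl=0.004) add a nonzero regularization term.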

```python
# CNN_Cifar-10.py (continued)
# Use inputs() from Cifar10_data.py to produce the training and test batches.
# For the training images distorted=True, i.e. data augmentation is applied;
# for the test images distorted=None, i.e. no augmentation.
images_train, labels_train = Cifar10_data.inputs(data_dir=data_dir, batch_size=batch_size, distorted=True)
images_test, labels_test = Cifar10_data.inputs(data_dir=data_dir, batch_size=batch_size, distorted=None)
```
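Note that distorted=None only switches off augmentation: inputs() builds the same file list (data_batch_1.bin to data_batch_5.bin) in both branches, so as listed images_test is drawn from the training files, while the real test images are in test_batch.bin. A minimal sketch of split-aware file selection, assuming the directory layout described in the dataset section; the function cifar10_filenames and its eval_data flag are hypothetical, and its result could be passed to tf.train.string_input_producer() in place of the fixed list.

```python
import os


def cifar10_filenames(data_dir, eval_data):
    """Hypothetical helper: return the .bin files for the test split or the training split."""
    if eval_data:
        names = ["test_batch.bin"]
    else:
        names = ["data_batch_%d.bin" % i for i in range(1, 6)]
    paths = [os.path.join(data_dir, name) for name in names]
    # Fail early with a clear message before any TensorFlow graph is built
    for path in paths:
        if not os.path.isfile(path):
            raise FileNotFoundError(path)
    return paths
```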

```python
# CNN_Cifar-10.py (continued)
# Placeholders x and y_ feed the input data and labels during training or evaluation
x = tf.placeholder(tf.float32, [batch_size, 24, 24, 3])
y_ = tf.placeholder(tf.int32, [batch_size])

# First convolutional layer
kernel1 = variable_with_weight_loss(shape=[5, 5, 3, 64], stddev=5e-2, wl=0.0)
conv1 = tf.nn.conv2d(x, kernel1, [1, 1, 1, 1], padding="SAME")
bias1 = tf.Variable(tf.constant(0.0, shape=[64]))
relu1 = tf.nn.relu(tf.nn.bias_add(conv1, bias1))
pool1 = tf.nn.max_pool(relu1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding="SAME")

# Second convolutional layer
# The third dimension (input channels) must match the output channels of the first layer
kernel2 = variable_with_weight_loss(shape=[5, 5, 64, 64], stddev=5e-2, wl=0.0)
conv2 = tf.nn.conv2d(pool1, kernel2, [1, 1, 1, 1], padding="SAME")
bias2 = tf.Variable(tf.constant(0.1, shape=[64]))  # bias initialized to 0.1 instead of layer 1's 0.0
relu2 = tf.nn.relu(tf.nn.bias_add(conv2, bias2))
pool2 = tf.nn.max_pool(relu2, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding="SAME")

# The two convolutional layers are followed by three fully connected layers.
# reshape() flattens the pool2 output into one vector per example,
# and get_shape() gives the flattened length
reshape = tf.reshape(pool2, [batch_size, -1])
dim = reshape.get_shape()[1].value

# First fully connected layer
weight1 = variable_with_weight_loss(shape=[dim, 384], stddev=0.04, wl=0.004)
fc_bias1 = tf.Variable(tf.constant(0.1, shape=[384]))
fc_1 = tf.nn.relu(tf.matmul(reshape, weight1) + fc_bias1)

# Second fully connected layer
weight2 = variable_with_weight_loss(shape=[384, 192], stddev=0.04, wl=0.004)
fc_bias2 = tf.Variable(tf.constant(0.1, shape=[192]))
local4 = tf.nn.relu(tf.matmul(fc_1, weight2) + fc_bias2)

# Third fully connected layer (outputs the logits for the 10 classes)
weight3 = variable_with_weight_loss(shape=[192, 10], stddev=1 / 192.0, wl=0.0)
fc_bias3 = tf.Variable(tf.constant(0.0, shape=[10]))
result = tf.add(tf.matmul(local4, weight3), fc_bias3)
```
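With padding="SAME", the output size of a convolution or pooling layer along each axis is ceil(input / stride), independent of the kernel size. The stride-1 convolutions keep 24×24 and each stride-2 max pool halves it: 24 → 12 → 6, so dim = 6 × 6 × 64 = 2304. A short sketch (hypothetical helper names) that recomputes dim and the number of parameters (weights plus biases) of each layer from the shapes used in the network:

```python
import math


def same_out(size, stride):
    """Output length along one axis for padding="SAME"."""
    return math.ceil(size / stride)


def cnn_shapes(input_size=24, channels=64):
    size = input_size
    size = same_out(size, 1)  # conv1, stride 1
    size = same_out(size, 2)  # pool1, stride 2
    size = same_out(size, 1)  # conv2, stride 1
    size = same_out(size, 2)  # pool2, stride 2
    dim = size * size * channels
    params = {
        "conv1": 5 * 5 * 3 * 64 + 64,
        "conv2": 5 * 5 * 64 * 64 + 64,
        "fc1": dim * 384 + 384,
        "fc2": 384 * 192 + 192,
        "fc3": 192 * 10 + 10,
    }
    return dim, params


if __name__ == "__main__":
    dim, params = cnn_shapes()
    print(dim, params, sum(params.values()))
```

Most of the roughly 1.07 million parameters sit in the first fully connected layer.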

```python
# Loss = mean cross-entropy loss + L2 regularization loss of the weights
cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=result,
                                                               labels=tf.cast(y_, tf.int64))
weight_with_l2_loss = tf.add_n(tf.get_collection("losses"))
loss = tf.reduce_mean(cross_entropy) + weight_with_l2_loss

train_op = tf.train.AdamOptimizer(1e-3).minimize(loss)

# For each example: is the true label the top-1 prediction?
top_k_op = tf.nn.in_top_k(result, y_, 1)
```
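For a single example, sparse_softmax_cross_entropy_with_logits computes -log(softmax(logits)[label]), and in_top_k(predictions, targets, 1) is true when the target's logit is the largest; per the TensorFlow documentation, classes tied at the top-k boundary all count as inside the top k. A pure-Python sketch of both for one example, using the log-sum-exp trick for numerical stability (function names are hypothetical):

```python
import math


def sparse_softmax_cross_entropy(logits, label):
    """-log(softmax(logits)[label]) computed via a stable log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def in_top_k(logits, target, k=1):
    """True if fewer than k classes have a strictly larger logit than the target."""
    larger = sum(1 for z in logits if z > logits[target])
    return larger < k


if __name__ == "__main__":
    print(sparse_softmax_cross_entropy([2.0, 1.0, 0.1], 0))
    print(in_top_k([1.0, 3.0, 2.0], 1), in_top_k([1.0, 3.0, 2.0], 2))
```

tf.reduce_mean(cross_entropy) then averages the per-example values over the batch of 100.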

```python
with tf.Session() as sess:
    tf.global_variables_initializer().run()
    # Start the queue-runner threads that fill the input queues
    tf.train.start_queue_runners(sess=sess)

    for step in range(max_steps):
        start_time = time.time()
        image_batch, label_batch = sess.run([images_train, labels_train])
        _, loss_value = sess.run([train_op, loss], feed_dict={x: image_batch, y_: label_batch})
        duration = time.time() - start_time

        if step % 100 == 0:
            examples_per_sec = batch_size / duration
            sec_per_batch = float(duration)
            # Print the time consumed by this training step
            print("step %d,loss=%.2f(%.1f examples/sec; %.3fsec/batch)" %
                  (step, loss_value, examples_per_sec, sec_per_batch))
```

Output:

D:\python3.6.5\python.exe E:/python数据/Cifar_data/CNN_Cifar-10.py

filling queue with %d CIFAR images before starting to train.this will take a few minutes. %d min_queue_examples

2019-08-17 14:57:59.593591: W C:\tf_jenkins\home\workspace\rel-win\M\windows\PY\36\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX instructions, but these are available on your machine and could speed up CPU computations.

2019-08-17 14:57:59.594591: W C:\tf_jenkins\home\workspace\rel-win\M\windows\PY\36\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.

step 0,loss=4.67(31.5 examples/sec; 3.175sec/batch)

step 100,loss=1.89(148.6 examples/sec; 0.673sec/batch)

step 200,loss=1.67(138.5 examples/sec; 0.722sec/batch)

step 300,loss=1.60(135.5 examples/sec; 0.738sec/batch)

step 400,loss=1.66(150.4 examples/sec; 0.665sec/batch)

step 500,loss=1.58(151.5 examples/sec; 0.660sec/batch)

step 600,loss=1.64(145.3 examples/sec; 0.688sec/batch)

step 700,loss=1.42(150.8 examples/sec; 0.663sec/batch)

step 800,loss=1.11(137.0 examples/sec; 0.730sec/batch)

step 900,loss=1.33(139.7 examples/sec; 0.716sec/batch)

step 1000,loss=1.42(152.0 examples/sec; 0.658sec/batch)

step 1100,loss=1.49(136.0 examples/sec; 0.735sec/batch)

step 1200,loss=1.26(153.1 examples/sec; 0.653sec/batch)

step 1300,loss=1.40(147.1 examples/sec; 0.680sec/batch)

step 1400,loss=1.26(155.0 examples/sec; 0.645sec/batch)

step 1500,loss=1.44(154.8 examples/sec; 0.646sec/batch)

step 1600,loss=1.36(148.1 examples/sec; 0.675sec/batch)

step 1700,loss=1.33(150.6 examples/sec; 0.664sec/batch)

step 1800,loss=1.09(158.5 examples/sec; 0.631sec/batch)

step 1900,loss=1.35(140.2 examples/sec; 0.713sec/batch)

step 2000,loss=1.14(146.0 examples/sec; 0.685sec/batch)

step 2100,loss=1.09(142.6 examples/sec; 0.701sec/batch)

step 2200,loss=1.12(153.1 examples/sec; 0.653sec/batch)

step 2300,loss=1.04(141.6 examples/sec; 0.706sec/batch)

step 2400,loss=1.04(152.2 examples/sec; 0.657sec/batch)

step 2500,loss=1.03(143.5 examples/sec; 0.697sec/batch)

step 2600,loss=1.16(151.0 examples/sec; 0.662sec/batch)

step 2700,loss=0.94(140.8 examples/sec; 0.710sec/batch)

step 2800,loss=1.05(142.0 examples/sec; 0.704sec/batch)

step 2900,loss=1.13(139.1 examples/sec; 0.719sec/batch)

step 3000,loss=0.89(136.6 examples/sec; 0.732sec/batch)

step 3100,loss=0.90(150.4 examples/sec; 0.665sec/batch)

step 3200,loss=1.11(149.0 examples/sec; 0.671sec/batch)

step 3300,loss=1.16(142.8 examples/sec; 0.700sec/batch)

step 3400,loss=1.12(141.4 examples/sec; 0.707sec/batch)

step 3500,loss=0.96(152.0 examples/sec; 0.658sec/batch)

step 3600,loss=1.03(132.3 examples/sec; 0.756sec/batch)

step 3700,loss=0.91(139.7 examples/sec; 0.716sec/batch)

step 3800,loss=0.95(147.3 examples/sec; 0.679sec/batch)

step 3900,loss=0.86(154.6 examples/sec; 0.647sec/batch)

Process finished with exit code 0

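Every 100 steps, the output shows the current loss, the number of examples trained per second, and the time taken to train one batch. At the last logged step (step 3900) the loss is 0.86, about 154 examples are trained per second, and one batch takes 0.647 s.

After training, the model's accuracy can be evaluated on the test set. The test set has 10,000 examples; this script evaluates num_examples_for_eval = 1000 of them. To cover all examples to be evaluated, the code first computes how many batches are needed, num_batch, which is used as the loop count. The following code continues inside the Session block.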

```python
    # (continues inside the `with tf.Session() as sess:` block)
    # math.ceil() rounds up, so a final partial batch still counts as a whole batch
    num_batch = int(math.ceil(num_examples_for_eval / batch_size))
    true_count = 0
    total_sample_count = num_batch * batch_size

    # Count all correctly predicted examples in a for loop
    for j in range(num_batch):
        image_batch, label_batch = sess.run([images_test, labels_test])
        predictions = sess.run([top_k_op], feed_dict={x: image_batch, y_: label_batch})
        true_count += np.sum(predictions)

    # Print the accuracy
    print("accuracy=%.3f%%" % ((true_count / total_sample_count) * 100))
```

Result:

accuracy=73.300%
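With num_examples_for_eval = 1000 and batch_size = 100, num_batch = ceil(1000 / 100) = 10 and total_sample_count = 1000, so accuracy=73.300% corresponds to 733 correctly classified examples. The sketch below reproduces that bookkeeping and shows one caveat: when num_examples_for_eval is not a multiple of batch_size, the last batch is still evaluated in full, so total_sample_count exceeds num_examples_for_eval (the helper name eval_counts is hypothetical).

```python
import math


def eval_counts(num_examples_for_eval, batch_size):
    """Return (num_batch, total_sample_count) as computed in the evaluation loop."""
    num_batch = int(math.ceil(num_examples_for_eval / batch_size))
    return num_batch, num_batch * batch_size


if __name__ == "__main__":
    num_batch, total = eval_counts(1000, 100)
    print(num_batch, total)                          # 10 1000
    print("accuracy=%.3f%%" % (733 / total * 100))   # accuracy=73.300%
    print(eval_counts(1050, 100))                    # (11, 1100): 50 extra examples evaluated
```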