Example output of netstat -su:

$ netstat -su

Udp:

2829651752 packets received

27732564 packets to unknown port received.

1629462811 packet receive errors

179722143 packets sent

This shows the total number of UDP packets received and sent, plus two extra metrics. The second line counts UDP packets that were sent to a port with no listening socket, and the third line counts packets that were dropped by the kernel.
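If you want to graph or alert on these counters yourself, they are easy to pull out of the text. Below is a minimal sketch, assuming the output has the shape shown above (a `Udp:` heading followed by `<number> <description>` lines); the function name `parse_udp_counters` is made up for this example.

```python
import re


def parse_udp_counters(netstat_output):
    """Return {description: count} for the lines under the 'Udp:' heading."""
    counters = {}
    in_udp = False
    for raw in netstat_output.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.endswith(":"):
            # Section headings look like "Udp:", "Tcp:", "UdpLite:".
            in_udp = line == "Udp:"
            continue
        if in_udp:
            match = re.match(r"(\d+)\s+(.*)", line)
            if match:
                # Drop the trailing full stop some descriptions carry.
                counters[match.group(2).rstrip(".")] = int(match.group(1))
    return counters


if __name__ == "__main__":
    sample = """Udp:
    2829651752 packets received
    27732564 packets to unknown port received.
    1629462811 packet receive errors
    179722143 packets sent
"""
    print(parse_udp_counters(sample)["packet receive errors"])  # 1629462811
```

Sampling the "packet receive errors" value at intervals and looking at the difference tells you whether drops are still happening, which the absolute total alone does not.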

Each socket has two fixed-size buffers sitting between the kernel and the application: one for receiving data and one for sending it. When the application fails to read from the receive buffer fast enough, the buffer fills up and the kernel discards incoming packets, incrementing the receive error counter.
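This is easy to reproduce on a test box. The sketch below assumes Linux and the loopback interface: it asks for a deliberately tiny receive buffer, sends a burst of datagrams while nothing is reading, and then counts how many are still there to be read. The function name and the sizes are arbitrary choices for the demonstration, and the exact number that survives depends on the kernel.

```python
import socket


def overflow_demo(datagrams=2000, payload_size=1024, rcvbuf=4096):
    """Send datagrams to an unread loopback socket, then count the survivors."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # Ask for a small receive buffer; the kernel may adjust the value.
        receiver.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, rcvbuf)
        receiver.bind(("127.0.0.1", 0))  # port 0: let the kernel pick a free port
        address = receiver.getsockname()

        # The "application" is not reading while the sender floods it.
        for _ in range(datagrams):
            sender.sendto(b"x" * payload_size, address)

        # Now drain whatever the kernel managed to keep.
        receiver.settimeout(0.2)
        received = 0
        try:
            while True:
                receiver.recvfrom(payload_size)
                received += 1
        except socket.timeout:
            pass
        return datagrams, received
    finally:
        sender.close()
        receiver.close()


if __name__ == "__main__":
    sent, received = overflow_demo()
    print(f"sent {sent}, read back {received}, lost {sent - received}")
```

The sender never sees an error here; the only evidence of the loss is the receive error counter going up.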

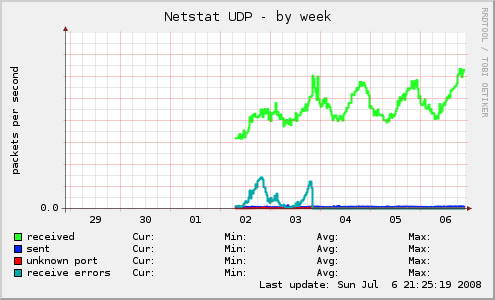

As no technical blog post is complete without a pretty graph, below is a graph generated using Munin, showing the UDP traffic flowing on one particular system:

In the above graph, you can see the dominant line being the received packets and the turquoise line lower down is showing the packet receive errors.

On Linux, the buffer sizes are controlled by a group of sysctl parameters, with the rmem* parameters covering receive buffers and the wmem* parameters covering send buffers:

- net.core.rmem_default

- net.core.rmem_max

- net.core.wmem_default

- net.core.wmem_max

$ sysctl net.core | grep [rw]mem

net.core.wmem_max = 131071

net.core.rmem_max = 131071

net.core.wmem_default = 122880

net.core.rmem_default = 122880

Using sysctl, you can update the values of these parameters with the -w option:

$ sudo sysctl -w net.core.rmem_max=1048576 net.core.rmem_default=1048576

net.core.rmem_max = 1048576

net.core.rmem_default = 1048576

Newly created sockets now get the larger receive buffer by default, which gives your application a little more headroom, provided it has no other bottlenecks limiting its throughput. It's also possible to raise only the maximum and have the application set its own socket buffer size; see socket(7) for more info.
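For the second approach, the application asks for a bigger buffer itself with the SO_RCVBUF socket option. Here is a minimal sketch in Python; the helper name `request_receive_buffer` is made up for this example. According to socket(7), Linux doubles the requested value to leave room for bookkeeping overhead, caps the request at net.core.rmem_max, and reports the doubled figure back from getsockopt, so it is worth reading the value back rather than assuming you got what you asked for.

```python
import socket


def request_receive_buffer(sock, size):
    """Ask for a receive buffer of `size` bytes and return what the kernel reports."""
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, size)
    return sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)


if __name__ == "__main__":
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        before = sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
        after = request_receive_buffer(sock, 1048576)
        print(f"default: {before} bytes, after request: {after} bytes")
```

If the figure reported after the request is much smaller than you expected, net.core.rmem_max is the likely limit.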

In our case, you can clearly see on the graph that the problem has now been solved for a few days. We had to apply both of the changes mentioned:

- Increasing the buffer size, which was done through the application config (along with raising the net.core.rmem_max parameter, leaving rmem_default alone)

- Tweaking the application to increase its throughput, using more controlled buffering internally rather than relying on the kernel socket buffering
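To give an idea of what "more controlled buffering internally" can look like, here is one common pattern, sketched in Python. It is not the code from the application in question, and the names (`start_reader`, the queue size) are invented for the example: a dedicated thread does nothing but pull datagrams off the socket and push them onto a bounded in-process queue, so slow processing elsewhere no longer stops the socket from being drained.

```python
import queue
import socket
import threading


def start_reader(sock, buffer, stop, max_datagram=65535):
    """Drain `sock` into `buffer` on a background thread and return the thread."""

    def run():
        sock.settimeout(0.1)  # wake up regularly to check the stop flag
        while not stop.is_set():
            try:
                data, _addr = sock.recvfrom(max_datagram)
            except socket.timeout:
                continue
            except OSError:
                break  # the socket was closed underneath us
            try:
                buffer.put_nowait(data)
            except queue.Full:
                # The application, not the kernel, now decides what to drop.
                pass

    thread = threading.Thread(target=run, daemon=True)
    thread.start()
    return thread


if __name__ == "__main__":
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(("127.0.0.1", 0))  # port 0: let the kernel pick a free port
    pending = queue.Queue(maxsize=10000)
    stop = threading.Event()
    reader = start_reader(sock, pending, stop)

    # Feed it one datagram so the example has something to process.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as client:
        client.sendto(b"hello", sock.getsockname())
    print(pending.get(timeout=2))

    stop.set()
    reader.join()
    sock.close()
```

The queue can still fill up, but at that point the application can count, log or prioritise what it throws away instead of silently losing packets in the kernel.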