Deploying nginx and apache load balancing and high availability with SaltStack (batch deployment)

I. Introduction to SaltStack

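SaltStack is a platform for centralized management of server infrastructure, providing configuration management, remote execution, monitoring and more. It is implemented in Python and built on the lightweight ZeroMQ message queue plus third-party Python modules (PyZMQ, PyCrypto, Jinja2, python-msgpack, PyYAML and others).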

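With SaltStack deployed, you can run commands in batch across thousands of servers, centralize configuration per business line, distribute files, collect server data, and manage operating-system basics and software packages. It is a powerful tool for operations staff to work more efficiently and to standardize configuration and operations.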

1 Basic principles

SaltStack uses a client/server model: the server side is the salt master, the client side is the minion, and master and minions communicate over the ZeroMQ message queue.

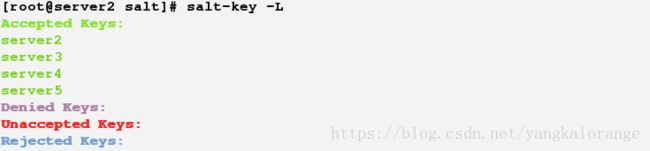

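When a minion comes online, it first contacts the master and sends it its public key. The master then sees the minion's key in the output of salt-key -L; once that key is accepted, master and minion trust each other.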

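From then on the master can send any command for the minion to execute. Salt ships many execution modules, such as the cmd module; they are installed together with the minion and usually live in your Python library path (locate salt | grep /usr/ lists everything Salt installs). These modules are Python files containing many functions, such as cmd.run. When we run salt '*' cmd.run 'uptime', the master publishes the job to the matching minions, each minion executes the module function, and the result is returned. The master listens on ports 4505 and 4506: 4505 is the ZeroMQ PUB socket used to publish messages, and 4506 is the REP socket used to receive messages (the minions' returns).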
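The '*' in salt '*' cmd.run 'uptime' is a target. By default Salt treats a target as a shell-style glob compared against each minion ID. A minimal sketch of that idea with Python's standard fnmatch module, assuming the four minion IDs of this lab; match_minions is a hypothetical helper, not part of Salt's API.

```python
from __future__ import annotations

from fnmatch import fnmatch

# Minion IDs of this lab, used here only as sample data.
MINIONS = ["server2", "server3", "server4", "server5"]


def match_minions(target: str, minions: list[str]) -> list[str]:
    """Return the minion IDs a glob target selects (sketch of default glob targeting)."""
    return [m for m in minions if fnmatch(m, target)]


if __name__ == "__main__":
    print(match_minions("*", MINIONS))            # all four minions
    print(match_minions("server[2-3]", MINIONS))  # server2 and server3 only
```

Other target types (grains, lists, regular expressions) are selected with options on the salt command; only the glob case is modelled here.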

The detailed steps are as follows:

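- The Salt master and minions exchange messages over ZeroMQ using its publish/subscribe pattern; supported transports include tcp and ipc.
- The salt command publishes cmd.run ls from salt.client.LocalClient.cmd_cli to the master and receives a job ID (jid); the jid is later used to fetch the command's results.
- On receiving the command, the master sends it on to the minions.
- A minion picks the command up from the message bus and hands it to minion._handle_aes, which starts a local thread that calls cmdmod to run ls. When ls finishes, the thread calls minion._return_pub to send the result back to the master over the message bus.
- The master receives the minion's result and calls master._handle_aes, which writes the result to a file.
- salt.client.LocalClient.cmd_cli polls for the job's results and prints them to the terminal.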
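The job flow above can be modelled in a single process to see how the pieces fit together. This is a rough sketch, not Salt's code: queues stand in for the ZeroMQ publish channel (4505) and return channel (4506), and Master, minion_loop and FUNCTIONS are hypothetical names. The jid mimics the timestamp layout of Salt job IDs (%Y%m%d%H%M%S%f).

```python
from __future__ import annotations

import datetime
import queue
import threading
import time
from typing import Callable

# Hypothetical stand-ins for execution-module functions.
FUNCTIONS: dict[str, Callable[[str], object]] = {
    "test.ping": lambda minion_id: True,
    "grains.id": lambda minion_id: minion_id,
}


def gen_jid() -> str:
    """Job ID as a timestamp, e.g. 20180801120000123456 (20 digits)."""
    return datetime.datetime.now().strftime("%Y%m%d%H%M%S%f")


class Master:
    def __init__(self, minion_ids: list[str]) -> None:
        # One inbox per minion plays the role of the publish channel (4505).
        self.inboxes = {m: queue.Queue() for m in minion_ids}
        # One shared queue plays the role of the return channel (4506).
        self.returns = queue.Queue()
        # Job cache: jid -> {minion_id: result}.
        self.job_cache: dict[str, dict[str, object]] = {}

    def publish(self, fun: str) -> str:
        jid = gen_jid()
        self.job_cache[jid] = {}
        for inbox in self.inboxes.values():
            inbox.put((jid, fun))
        return jid

    def collect(self, jid: str, expected: int, timeout: float = 2.0) -> dict[str, object]:
        """Poll the return channel until all minions answered or time runs out."""
        deadline = time.monotonic() + timeout
        while len(self.job_cache[jid]) < expected and time.monotonic() < deadline:
            try:
                r_jid, minion_id, result = self.returns.get(timeout=0.05)
            except queue.Empty:
                continue
            self.job_cache.setdefault(r_jid, {})[minion_id] = result
        return self.job_cache[jid]


def minion_loop(minion_id: str, master: Master) -> None:
    """Take one job from the inbox, run it in a worker thread, send the return."""
    jid, fun = master.inboxes[minion_id].get()

    def worker() -> None:
        master.returns.put((jid, minion_id, FUNCTIONS[fun](minion_id)))

    threading.Thread(target=worker).start()


if __name__ == "__main__":
    ids = ["server2", "server3", "server4", "server5"]
    master = Master(ids)
    for mid in ids:
        threading.Thread(target=minion_loop, args=(mid, master), daemon=True).start()
    jid = master.publish("test.ping")
    print(jid, master.collect(jid, expected=len(ids)))
```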

II. Experiment steps

1) Lab environment

| Host | IP | Salt role | Services |
| --- | --- | --- | --- |
| server2 | 172.25.1.2 | master, minion | haproxy, keepalived |
| server3 | 172.25.1.3 | minion | httpd |
| server4 | 172.25.1.4 | minion | nginx |
| server5 | 172.25.1.5 | minion | haproxy, keepalived |

Configure the following yum repository on all four nodes:

[salt]
name=salt
baseurl=http://172.25.1.250/rhel6
gpgcheck=0

2) Install the software

server2 (master):

yum install -y salt-master

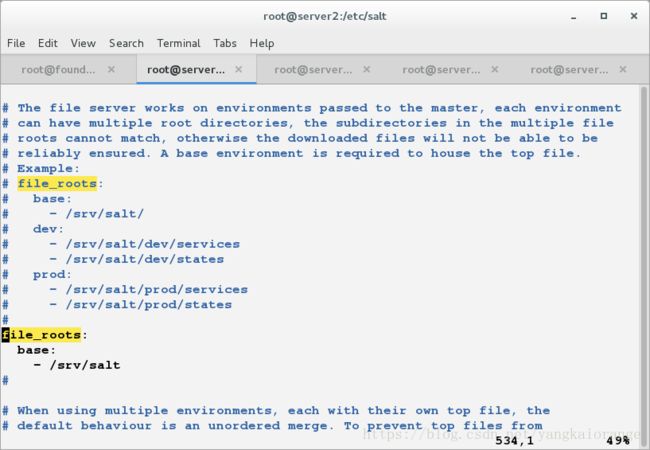

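Edit /etc/salt/master, then create the state and pillar directories (/srv/salt and /srv/pillar are Salt's default file_roots and pillar_roots locations):

mkdir /srv/salt
mkdir /srv/pillar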

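Note: Pillar is a component added in Salt 0.9.8. Like grains, it is a dictionary that stores data as key/value pairs. In Salt's design, pillar data travels over its own encrypted session, so pillar can be used to pass sensitive data such as SSH keys and certificates.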

/etc/init.d/salt-master start

server2, server3, server4, server5 (minions):

yum install -y salt-minion

Edit /etc/salt/minion and point it at the master:

master: 172.25.1.2

Then start the minion:

/etc/init.d/salt-minion start

3) Master and minion authentication

(1) When a minion starts for the first time, it generates minion.pem (private key) and minion.pub (public key) under /etc/salt/pki/minion/ (the path is set in /etc/salt/minion), then sends minion.pub to the master.

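(2) After receiving the minion's public key, the master accepts it with the salt-key command (for example salt-key -a <minion id>, or salt-key -A to accept all pending keys). The key is then stored under /etc/salt/pki/master/minions in a file named after the minion ID, and from then on the master can send commands to that minion.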
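On the master, key states map to directories under /etc/salt/pki/master/: pending keys sit in minions_pre, accepted ones in minions and rejected ones in minions_rejected. The sketch below approximates what salt-key -L lists and what salt-key -a does, working in a temporary directory rather than the real PKI tree; list_keys and accept_key are hypothetical helpers, and the real tool also handles fingerprints, permissions and other key states.

```python
from __future__ import annotations

import os
import shutil
import tempfile

KEY_STATES = ("minions_pre", "minions", "minions_rejected")


def list_keys(pki_dir: str) -> dict[str, list[str]]:
    """Roughly what `salt-key -L` reports: minion IDs grouped by key state."""
    out = {}
    for state in KEY_STATES:
        path = os.path.join(pki_dir, state)
        out[state] = sorted(os.listdir(path)) if os.path.isdir(path) else []
    return out


def accept_key(pki_dir: str, minion_id: str) -> None:
    """Roughly what `salt-key -a <id>` does: move a pending key to accepted."""
    dst_dir = os.path.join(pki_dir, "minions")
    os.makedirs(dst_dir, exist_ok=True)
    shutil.move(os.path.join(pki_dir, "minions_pre", minion_id),
                os.path.join(dst_dir, minion_id))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as pki:
        os.makedirs(os.path.join(pki, "minions_pre"))
        for mid in ("server3", "server4"):
            # Each file would hold the minion's public key; dummy text here.
            with open(os.path.join(pki, "minions_pre", mid), "w") as fh:
                fh.write("-----BEGIN PUBLIC KEY-----\n")
        accept_key(pki, "server3")
        print(list_keys(pki))
```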

4) Master and minion connection

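After it starts, the SaltStack master listens on ports 4505 and 4506 by default. 4505 (publish_port) is SaltStack's message publishing system, and 4506 (ret_port) is the port through which the clients and the server communicate. If you inspect port 4505 with lsof, you will see every minion holding a connection to it in the ESTABLISHED state.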
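A quick way to rule out firewall problems is to check from a minion that both master ports accept TCP connections at all. A small sketch using only the socket module; port_open is a hypothetical helper, and 172.25.1.2 is this lab's master.

```python
import socket


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False


if __name__ == "__main__":
    master = "172.25.1.2"  # server2 in this lab
    for port in (4505, 4506):  # publish port, return port
        print(port, "open" if port_open(master, port) else "unreachable")
```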

5) apache

1 Create the file repository and the state files

[root@server2 apache]# pwd
/srv/salt/apache
[root@server2 apache]# ls
files  lib.sls  web.sls
[root@server2 apache]# cd files/
[root@server2 files]# ls
httpd.conf  index.php
[root@server2 files]#

2 vim web.sls

apache-install:
  pkg.installed:
    - pkgs:
      - httpd
      - php
  file.managed:
    - name: /var/www/html/index.php
    - source: salt://apache/files/index.php
    - mode: 644
    - user: root
    - group: root

apache-service:
  file.managed:
    - name: /etc/httpd/conf/httpd.conf
    - source: salt://apache/files/httpd.conf
    - template: jinja
    - context:
        port: {{ pillar['port'] }}
        bind: {{ pillar['bind'] }}
  service.running:
    - name: httpd
    - enable: True
    - reload: True
    - watch:
      - file: apache-service
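The watch requisite is what links the two states in apache-service: service.running acts on httpd only when the watched file.managed state reports changes, and because reload: True is set it reloads instead of restarting. A simplified model of that decision, assuming changes are detected by comparing content hashes; manage_file and service_action are hypothetical names, and the sample httpd.conf line is illustrative only.

```python
import hashlib
import os
import tempfile


def manage_file(path: str, content: str) -> bool:
    """Write content to path; return True only if the file actually changed."""
    data = content.encode()
    if os.path.exists(path):
        with open(path, "rb") as fh:
            if hashlib.sha256(fh.read()).digest() == hashlib.sha256(data).digest():
                return False  # identical content: no changes reported
    with open(path, "wb") as fh:
        fh.write(data)
    return True


def service_action(running: bool, watched_changed: bool, reload: bool) -> str:
    """What a running-service state would do in this run."""
    if not running:
        return "start"
    if watched_changed:
        return "reload" if reload else "restart"
    return "none"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        conf = os.path.join(d, "httpd.conf")
        changed = manage_file(conf, "Listen 172.25.1.3:80\n")
        print(service_action(True, changed, reload=True))  # reload
        changed = manage_file(conf, "Listen 172.25.1.3:80\n")
        print(service_action(True, changed, reload=True))  # none
```

This is why a second highstate with unchanged files leaves httpd alone.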

3 vim lib.sls

{% set port = 80 %}

4 Pillar data

[root@server2 web]# pwd
/srv/pillar/web
[root@server2 web]# ls
install.sls
[root@server2 web]# vim install.sls

{% if grains['fqdn'] == 'server3' %}
webserver: httpd
bind: 172.25.1.3
port: 80
{% endif %}

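On server3, edit /etc/salt/minion to give it the grain roles: apache (the top.sls at the end of this article matches it with 'roles:apache'), then restart the minion:

/etc/init.d/salt-minion restart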

5 It is best to push each service to its minion and verify it as soon as it is written, to make sure it is correct!

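Write the configuration file and the default web page under files yourself. In this article the minion's configuration file was copied to the master and edited there; in fact almost nothing was changed.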

vim index.php


6 Verification
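Besides pushing a single state to its minion (for example salt server3 state.sls apache.web), the result can be checked over HTTP. A minimal sketch with urllib.request that bypasses any configured proxy; http_ok is a hypothetical helper and the URL follows this lab's addresses.

```python
import http.client
import urllib.request


def http_ok(url: str, timeout: float = 2.0) -> bool:
    """Return True if a GET on url answers HTTP 200 (proxies deliberately bypassed)."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, http.client.HTTPException):  # URLError/HTTPError are OSErrors
        return False


if __name__ == "__main__":
    print(http_ok("http://172.25.1.3/index.php"))  # server3: httpd + php
```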

6) nginx

The procedure is the same as for apache, so only the code is shown here.

[root@server2 nginx]# pwd
/srv/salt/nginx
[root@server2 nginx]# ls
files  install.sls  nginx-pre.sls  service.sls
[root@server2 nginx]# cd files/
[root@server2 files]# ls
nginx  nginx-1.14.0.tar.gz  nginx.conf
[root@server2 files]#

vim nginx-pre.sls

pkg-init:
  pkg.installed:
    - pkgs:
      - gcc
      - zlib-devel
      - openssl-devel
      - pcre-devel

vim install.sls

include:
  - nginx.nginx-pre

nginx-source-install:
  file.managed:
    - name: /mnt/nginx-1.14.0.tar.gz
    - source: salt://nginx/files/nginx-1.14.0.tar.gz
  cmd.run:
    - name: cd /mnt && tar zxf nginx-1.14.0.tar.gz && cd nginx-1.14.0 && sed -i.bak 's/#define NGINX_VER "nginx\/" NGINX_VERSION/#define NGINX_VER "nginx"/g' src/core/nginx.h && sed -i.bak 's/CFLAGS="$CFLAGS -g"/#CFLAGS="$CFLAGS -g"/g' auto/cc/gcc && ./configure --prefix=/usr/local/nginx --with-http_ssl_module --with-http_stub_status_module && make && make install && ln -s /usr/local/nginx/sbin/nginx /usr/sbin/nginx
    - creates: /usr/local/nginx
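creates: /usr/local/nginx is what stops this cmd.run from rebuilding nginx on every run: if the path already exists, the command is skipped. A sketch of that guard, with a harmless mkdir in a temporary directory standing in for the real configure/make line; run_unless_created is a hypothetical helper.

```python
import os
import shlex
import subprocess
import tempfile


def run_unless_created(cmd: str, creates: str) -> str:
    """Run cmd through the shell unless the `creates` path already exists."""
    if os.path.exists(creates):
        return "skipped"
    subprocess.run(cmd, shell=True, check=True)
    return "ran"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        prefix = os.path.join(d, "nginx")          # stands in for /usr/local/nginx
        build = f"mkdir -p {shlex.quote(prefix)}"  # placeholder for configure/make/make install
        print(run_unless_created(build, prefix))   # ran
        print(run_unless_created(build, prefix))   # skipped
```

The same guard protects the haproxy and keepalived builds later on.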

vim service.sls

include:
  - nginx.install

/usr/local/nginx/conf/nginx.conf:
  file.managed:
    - source: salt://nginx/files/nginx.conf

nginx-service:
  file.managed:
    - name: /etc/init.d/nginx
    - source: salt://nginx/files/nginx
    - mode: 755
  service.running:
    - name: nginx
    - enable: True
    - reload: True
    - watch:
      - file: /usr/local/nginx/conf/nginx.conf
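include makes nginx.service pull in nginx.install, which in turn pulls in nginx.nginx-pre; the haproxy and keepalived states include nginx.nginx-pre as well. The sketch resolves such a chain into a list where every included SLS comes before its includer and appears only once, using the include lines of this article. It is an assumption-level model (resolve is a hypothetical helper): Salt's real execution order also depends on requisites such as watch and on the order option.

```python
from __future__ import annotations

# include relationships taken from the SLS files in this article
INCLUDES: dict[str, list[str]] = {
    "nginx.service": ["nginx.install"],
    "nginx.install": ["nginx.nginx-pre"],
    "nginx.nginx-pre": [],
    "haproxy.service": ["haproxy.install"],
    "haproxy.install": ["nginx.nginx-pre", "users.haproxy"],
    "users.haproxy": [],
    "keepalived.service": ["keepalived.install"],
    "keepalived.install": ["nginx.nginx-pre"],
}


def resolve(targets: list[str], includes: dict[str, list[str]]) -> list[str]:
    """Depth-first walk: included SLS before the includer, each SLS once."""
    order: list[str] = []
    seen: set[str] = set()

    def visit(sls: str) -> None:
        if sls in seen:
            return
        seen.add(sls)
        for dep in includes.get(sls, []):
            visit(dep)
        order.append(sls)

    for target in targets:
        visit(target)
    return order


if __name__ == "__main__":
    # server2 gets haproxy.service and keepalived.service from top.sls
    print(resolve(["haproxy.service", "keepalived.service"], INCLUDES))
```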

cd files
vim nginx

#!/bin/sh
#
# nginx - this script starts and stops the nginx daemon
#
# chkconfig:   - 85 15
# description: Nginx is an HTTP(S) server, HTTP(S) reverse \
#              proxy and IMAP/POP3 proxy server
# processname: nginx
# config:      /usr/local/nginx/conf/nginx.conf
# pidfile:     /usr/local/nginx/logs/nginx.pid

# Source function library.
. /etc/rc.d/init.d/functions

# Source networking configuration.
. /etc/sysconfig/network

# Check that networking is up.
[ "$NETWORKING" = "no" ] && exit 0

nginx="/usr/local/nginx/sbin/nginx"
prog=$(basename $nginx)
lockfile="/var/lock/subsys/nginx"
pidfile="/usr/local/nginx/logs/${prog}.pid"
NGINX_CONF_FILE="/usr/local/nginx/conf/nginx.conf"

start() {
    [ -x $nginx ] || exit 5
    [ -f $NGINX_CONF_FILE ] || exit 6
    echo -n $"Starting $prog: "
    daemon $nginx -c $NGINX_CONF_FILE
    retval=$?
    echo
    [ $retval -eq 0 ] && touch $lockfile
    return $retval
}

stop() {
    echo -n $"Stopping $prog: "
    killproc -p $pidfile $prog
    retval=$?
    echo
    [ $retval -eq 0 ] && rm -f $lockfile
    return $retval
}

restart() {
    configtest_q || return 6
    stop
    start
}

reload() {
    configtest_q || return 6
    echo -n $"Reloading $prog: "
    killproc -p $pidfile $prog -HUP
    echo
}

configtest() {
    $nginx -t -c $NGINX_CONF_FILE
}

configtest_q() {
    $nginx -t -q -c $NGINX_CONF_FILE
}

rh_status() {
    status $prog
}

rh_status_q() {
    rh_status >/dev/null 2>&1
}

# Upgrade the binary with no downtime.
upgrade() {
    local oldbin_pidfile="${pidfile}.oldbin"

    configtest_q || return 6
    echo -n $"Upgrading $prog: "
    killproc -p $pidfile $prog -USR2
    retval=$?
    sleep 1
    if [[ -f ${oldbin_pidfile} && -f ${pidfile} ]]; then
        killproc -p $oldbin_pidfile $prog -QUIT
        success $"$prog online upgrade"
        echo
        return 0
    else
        failure $"$prog online upgrade"
        echo
        return 1
    fi
}

# Tell nginx to reopen logs
reopen_logs() {
    configtest_q || return 6
    echo -n $"Reopening $prog logs: "
    killproc -p $pidfile $prog -USR1
    retval=$?
    echo
    return $retval
}

case "$1" in
    start)
        rh_status_q && exit 0
        $1
        ;;
    stop)
        rh_status_q || exit 0
        $1
        ;;
    restart|configtest|reopen_logs)
        $1
        ;;
    force-reload|upgrade)
        rh_status_q || exit 7
        upgrade
        ;;
    reload)
        rh_status_q || exit 7
        $1
        ;;
    status|status_q)
        rh_$1
        ;;
    condrestart|try-restart)
        rh_status_q || exit 7
        restart
        ;;
    *)
        echo $"Usage: $0 {start|stop|reload|configtest|status|force-reload|upgrade|restart|reopen_logs}"
        exit 2
esac

vim nginx.conf

#user  nobody;
worker_processes  auto;

#error_log  logs/error.log;
#error_log  logs/error.log  notice;
#error_log  logs/error.log  info;

#pid        logs/nginx.pid;

events {
    worker_connections  1024;
}

http {
    include       mime.types;
    default_type  application/octet-stream;

    #log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
    #                  '$status $body_bytes_sent "$http_referer" '
    #                  '"$http_user_agent" "$http_x_forwarded_for"';

    #access_log  logs/access.log  main;

    sendfile        on;
    #tcp_nopush     on;

    #keepalive_timeout  0;
    keepalive_timeout  65;

    #gzip  on;

    server {
        listen       80;
        server_name  localhost;

        #charset koi8-r;

        #access_log  logs/host.access.log  main;

        location / {
            root   html;
            index  index.html index.htm;
        }

        #error_page  404              /404.html;

        # redirect server error pages to the static page /50x.html
        #
        error_page   500 502 503 504  /50x.html;
        location = /50x.html {
            root   html;
        }

        # proxy the PHP scripts to Apache listening on 127.0.0.1:80
        #
        #location ~ \.php$ {
        #    proxy_pass   http://127.0.0.1;
        #}

        # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
        #
        #location ~ \.php$ {
        #    root           html;
        #    fastcgi_pass   127.0.0.1:9000;
        #    fastcgi_index  index.php;
        #    fastcgi_param  SCRIPT_FILENAME  /scripts$fastcgi_script_name;
        #    include        fastcgi_params;
        #}

        # deny access to .htaccess files, if Apache's document root
        # concurs with nginx's one
        #
        #location ~ /\.ht {
        #    deny  all;
        #}
    }

    # another virtual host using mix of IP-, name-, and port-based configuration
    #
    #server {
    #    listen       8000;
    #    listen       somename:8080;
    #    server_name  somename  alias  another.alias;

    #    location / {
    #        root   html;
    #        index  index.html index.htm;
    #    }
    #}

    # HTTPS server
    #
    #server {
    #    listen       443 ssl;
    #    server_name  localhost;

    #    ssl_certificate      cert.pem;
    #    ssl_certificate_key  cert.key;

    #    ssl_session_cache    shared:SSL:1m;
    #    ssl_session_timeout  5m;

    #    ssl_ciphers  HIGH:!aNULL:!MD5;
    #    ssl_prefer_server_ciphers  on;

    #    location / {
    #        root   html;
    #        index  index.html index.htm;
    #    }
    #}
}

[root@server2 web]# pwd
/srv/pillar/web
[root@server2 web]# vim install.sls

{% if grains['fqdn'] == 'server3' %}
webserver: httpd
bind: 172.25.1.3
port: 80
{% elif grains['fqdn'] == 'server4' %}
webserver: nginx
bind: 172.25.1.4
port: 80
{% endif %}

On the minion (server4):

[root@server4 salt]# cd /etc/salt/
[root@server4 salt]# vim grains

roles: nginx

Note: test every service once you have finished it.

Test results:

7) haproxy

[root@server2 haproxy]# pwd
/srv/salt/haproxy
[root@server2 haproxy]# cd files/
[root@server2 files]# ls
haproxy-1.6.11.tar.gz  haproxy.cfg  haproxy.init
[root@server2 files]# pwd
/srv/salt/haproxy/files

vim install.sls

include:
  - nginx.nginx-pre
  - users.haproxy

haproxy-install:
  file.managed:
    - name: /mnt/haproxy-1.6.11.tar.gz
    - source: salt://haproxy/files/haproxy-1.6.11.tar.gz
  cmd.run:
    - name: cd /mnt && tar zxf haproxy-1.6.11.tar.gz && cd haproxy-1.6.11 && make TARGET=linux2628 USE_PCRE=1 USE_OPENSSL=1 USE_ZLIB=1 PREFIX=/usr/local/haproxy && make TARGET=linux2628 USE_PCRE=1 USE_OPENSSL=1 USE_ZLIB=1 PREFIX=/usr/local/haproxy install
    - creates: /usr/local/haproxy

/etc/haproxy:
  file.directory:
    - mode: 755

/usr/sbin/haproxy:
  file.symlink:
    - target: /usr/local/haproxy/sbin/haproxy

vim service.sls

include:
  - haproxy.install

/etc/haproxy/haproxy.cfg:
  file.managed:
    - source: salt://haproxy/files/haproxy.cfg

haproxy-service:
  file.managed:
    - name: /etc/init.d/haproxy
    - source: salt://haproxy/files/haproxy.init
    - mode: 755
  service.running:
    - name: haproxy
    - enable: True
    - reload: True
    - watch:
      - file: /etc/haproxy/haproxy.cfg

vim haproxy.cfg

defaults
    mode            http
    log             global
    option          httplog
    option          dontlognull
    monitor-uri     /monitoruri
    maxconn         8000
    timeout client  30s
    retries         2
    option          redispatch
    timeout connect 5s
    timeout server  30s
    timeout queue   30s
    stats uri       /admin/stats

# The public 'www' address in the DMZ
frontend public
    bind            *:80 name clear
    default_backend dynamic

# the application servers go here
backend dynamic
    balance         roundrobin
    fullconn        4000 # the servers will be used at full load above this number of connections
    server          dynsrv1 172.25.1.3:80 check inter 1000
    server          dynsrv2 172.25.1.4:80 check inter 1000
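With balance roundrobin and check inter 1000, haproxy probes each server every 1000 ms and hands requests to the healthy ones in turn. A simplified model, assuming equal weights and health results supplied from outside rather than real probes; RoundRobin is a hypothetical class, and haproxy's actual scheduler is weight-aware.

```python
from __future__ import annotations


class RoundRobin:
    """Rotate over backends, skipping any whose last health check failed."""

    def __init__(self, servers: dict[str, str]) -> None:
        self.servers = servers                  # name -> address
        self.names = list(servers)
        self.healthy = {n: True for n in self.names}
        self._next = 0

    def mark(self, name: str, up: bool) -> None:
        """Record the result of a health check."""
        self.healthy[name] = up

    def pick(self) -> str:
        """Return the address of the next healthy server."""
        for _ in range(len(self.names)):
            name = self.names[self._next]
            self._next = (self._next + 1) % len(self.names)
            if self.healthy[name]:
                return self.servers[name]
        raise RuntimeError("no healthy backend (haproxy would answer 503)")


if __name__ == "__main__":
    lb = RoundRobin({"dynsrv1": "172.25.1.3:80", "dynsrv2": "172.25.1.4:80"})
    print([lb.pick() for _ in range(4)])  # alternates between .3 and .4
    lb.mark("dynsrv1", up=False)          # e.g. httpd stopped on server3
    print([lb.pick() for _ in range(2)])  # only .4 is used
```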

vim haproxy.init

Copy this init script yourself from the unpacked source on a minion, under /mnt/haproxy-1.6.11/examples.

Create a users SLS file:

[root@server2 salt]# cd users/
[root@server2 users]# ls
haproxy.sls

vim haproxy.sls

haproxy-group:
  group.present:
    - name: haproxy
    - gid: 200

haproxy:
  user.present:
    - uid: 200
    - gid: 200
    - home: /usr/local/haproxy
    - createhome: False
    - shell: /sbin/nologin

Both server2 and server5 need haproxy installed.

[root@server2 web]# pwd
/srv/pillar/web
[root@server2 web]# vim install.sls

{% if grains['fqdn'] == 'server3' %}
webserver: httpd
bind: 172.25.1.3
port: 80
{% elif grains['fqdn'] == 'server4' %}
webserver: nginx
bind: 172.25.1.4
port: 80
{% elif grains['fqdn'] == 'server2' %}
webserver: haproxy
{% elif grains['fqdn'] == 'server5' %}
webserver: haproxy
{% endif %}
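Because the pillar branches on grains['fqdn'], every minion receives different data, and {{ pillar['port'] }} in apache/web.sls resolves per host. A plain-Python mirror of what the if/elif chain above produces, assuming each host's fqdn grain equals its short name as the comparisons imply; web_pillar is a hypothetical helper, not how Salt renders pillar. On the real master, salt '*' pillar.items shows the rendered data.

```python
from __future__ import annotations


def web_pillar(fqdn: str) -> dict[str, object]:
    """Mirror of /srv/pillar/web/install.sls for one minion."""
    if fqdn == "server3":
        return {"webserver": "httpd", "bind": "172.25.1.3", "port": 80}
    elif fqdn == "server4":
        return {"webserver": "nginx", "bind": "172.25.1.4", "port": 80}
    elif fqdn in ("server2", "server5"):
        return {"webserver": "haproxy"}
    return {}  # no branch matched: this minion gets no web pillar


if __name__ == "__main__":
    for host in ("server2", "server3", "server4", "server5"):
        print(host, web_pillar(host))
```

Note that only server3 and server4 get port and bind, which is fine here because only they apply the apache and nginx states.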

Load-balancing test:
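One way to run this test is to request the front end repeatedly and count which backend answered. A sketch that tallies response bodies, assuming each backend's page returns something that identifies it (such as its host name); fetch_body and tally are hypothetical helpers, and the fetch function is passed in so the counting can be tried without the lab.

```python
from __future__ import annotations

import urllib.request
from collections import Counter
from typing import Callable


def fetch_body(url: str, timeout: float = 2.0) -> str:
    """GET url without any proxy and return the stripped body text."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(url, timeout=timeout) as resp:
        return resp.read().decode(errors="replace").strip()


def tally(fetch: Callable[[str], str], url: str, n: int) -> Counter:
    """Request url n times and count the distinct response bodies."""
    return Counter(fetch(url) for _ in range(n))


if __name__ == "__main__":
    try:
        # haproxy front end on server2; the keepalived VIP works the same way
        print(tally(fetch_body, "http://172.25.1.2/", 10))
    except OSError as exc:
        print("front end unreachable:", exc)
```

With roundrobin and both backends healthy, the counts should come out roughly even.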

8) keepalived

[root@server2 keepalived]# pwd
/srv/salt/keepalived
[root@server2 keepalived]# ls
files  install.sls  service.sls
[root@server2 keepalived]# cd files/
[root@server2 files]# ls
keepalived  keepalived-2.0.6.tar.gz  keepalived.conf
[root@server2 files]# pwd
/srv/salt/keepalived/files

vim install.sls

include:
  - nginx.nginx-pre

kp-install:
  file.managed:
    - name: /mnt/keepalived-2.0.6.tar.gz
    - source: salt://keepalived/files/keepalived-2.0.6.tar.gz
  cmd.run:
    - name: cd /mnt && tar zxf keepalived-2.0.6.tar.gz && cd keepalived-2.0.6 && ./configure --prefix=/usr/local/keepalived --with-init=SYSV && make && make install
    - creates: /usr/local/keepalived

/etc/keepalived:
  file.directory:
    - mode: 755

/sbin/keepalived:
  file.symlink:
    - target: /usr/local/keepalived/sbin/keepalived

/etc/sysconfig/keepalived:
  file.symlink:
    - target: /usr/local/keepalived/etc/sysconfig/keepalived

/etc/init.d/keepalived:
  file.managed:
    - source: salt://keepalived/files/keepalived
    - mode: 755

vim service.sls

include:
  - keepalived.install

kp-service:
  file.managed:
    - name: /etc/keepalived/keepalived.conf
    - source: salt://keepalived/files/keepalived.conf
    - template: jinja
    - context:
        STATE: {{ pillar['state'] }}
        VRID: {{ pillar['vrid'] }}
        PRIORITY: {{ pillar['priority'] }}
  service.running:
    - name: keepalived
    - reload: True
    - watch:
      - file: kp-service

vim keepalived.conf

! Configuration File for keepalived

global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from keepalived@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
    vrrp_skip_check_adv_addr
    # vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}

vrrp_instance VI_1 {
    state {{ STATE }}
    interface eth0
    virtual_router_id {{ VRID }}
    priority {{ PRIORITY }}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.1.100
    }
}

vim keepalived

Copy this init script yourself.

[root@server2 keepalived]# pwd
/srv/pillar/keepalived
[root@server2 keepalived]# ls
install.sls
[root@server2 keepalived]# vim install.sls

{% if grains['fqdn'] == 'server2' %}
state: MASTER
vrid: 39
priority: 100
{% elif grains['fqdn'] == 'server5' %}
state: BACKUP
vrid: 39
priority: 50
{% endif %}

[root@server2 pillar]# pwd
/srv/pillar
[root@server2 pillar]# ls
keepalived  top.sls  web
[root@server2 pillar]# vim top.sls

base:
  '*':
    - web.install
    - keepalived.install

[root@server2 salt]# pwd
/srv/salt
[root@server2 salt]# vim top.sls

base:
  'server2':
    - haproxy.service
    - keepalived.service
  'server5':
    - haproxy.service
    - keepalived.service
  'roles:apache':
    - match: grain
    - apache.web
  'roles:nginx':
    - match: grain
    - nginx.service
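In this top file, plain keys like 'server2' are globbed against the minion ID, while entries with - match: grain read 'roles:apache' as grain roles matching apache. A sketch of how the top file above assigns states, assuming server3 carries roles: apache and server4 roles: nginx; matches and states_for are hypothetical helpers, and Salt's real grain matcher also handles nested keys and custom delimiters.

```python
from __future__ import annotations

from fnmatch import fnmatch

# base environment of /srv/salt/top.sls as (target, matcher, states)
TOP = [
    ("server2", "glob", ["haproxy.service", "keepalived.service"]),
    ("server5", "glob", ["haproxy.service", "keepalived.service"]),
    ("roles:apache", "grain", ["apache.web"]),
    ("roles:nginx", "grain", ["nginx.service"]),
]


def matches(target: str, matcher: str, minion_id: str, grains: dict) -> bool:
    """Glob on the minion ID, or 'key:pattern' against a (possibly list) grain."""
    if matcher == "glob":
        return fnmatch(minion_id, target)
    key, _, pattern = target.partition(":")
    value = grains.get(key)
    values = value if isinstance(value, list) else [value]
    return any(v is not None and fnmatch(str(v), pattern) for v in values)


def states_for(minion_id: str, grains: dict) -> list[str]:
    """Collect the states every matching top-file entry assigns, without duplicates."""
    out: list[str] = []
    for target, matcher, states in TOP:
        if matches(target, matcher, minion_id, grains):
            for sls in states:
                if sls not in out:
                    out.append(sls)
    return out


if __name__ == "__main__":
    print(states_for("server3", {"roles": "apache"}))
    print(states_for("server4", {"roles": "nginx"}))
    print(states_for("server2", {}))
```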

salt '*' state.highstate (highstate: apply the whole top file to every minion in one push)

Test:

If server2 is now taken down, access through the VIP still behaves exactly the same, but the VIP held by the MASTER has floated over to server5.
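The failover follows VRRP's election rule: among nodes still sending advertisements, the one with the highest priority owns the virtual IP 172.25.1.100, and a tie goes to the higher primary IP address. A simplified model using this article's pillar values (server2 priority 100, server5 priority 50); vip_owner is a hypothetical helper that ignores advertisement timers and assumes keepalived's default preemption, under which server2 takes the VIP back once it returns.

```python
from __future__ import annotations

import ipaddress

# keepalived nodes from the pillar: name -> (priority, primary IP)
NODES = {
    "server2": (100, "172.25.1.2"),
    "server5": (50, "172.25.1.5"),
}


def vip_owner(alive: set[str], nodes: dict[str, tuple[int, str]]) -> str | None:
    """Highest priority wins; equal priority goes to the higher IP address."""
    candidates = [n for n in nodes if n in alive]
    if not candidates:
        return None
    return max(candidates,
               key=lambda n: (nodes[n][0], ipaddress.ip_address(nodes[n][1])))


if __name__ == "__main__":
    print(vip_owner({"server2", "server5"}, NODES))  # server2 holds the VIP
    print(vip_owner({"server5"}, NODES))             # server2 down: server5 takes over
    print(vip_owner({"server2", "server5"}, NODES))  # server2 back: it preempts again
```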