Implementing the KNN Algorithm: KD-Tree and sklearn Implementations

Distance metrics

Minkowski distance

- For two sample points $x_i$ and $x_j$ with $n$ features, the Minkowski distance $L_p$ between them is defined as:

$$L_p(x_i, x_j) = \left(\sum_{l=1}^{n} \left|x_i^{(l)} - x_j^{(l)}\right|^{p}\right)^{\frac{1}{p}}$$

- When $p=1$, it is called the Manhattan distance:

$$L_1(x_i, x_j) = \sum_{l=1}^{n} \left|x_i^{(l)} - x_j^{(l)}\right|$$

- When $p=2$, it is called the Euclidean distance:

$$L_2(x_i, x_j) = \left(\sum_{l=1}^{n} \left|x_i^{(l)} - x_j^{(l)}\right|^{2}\right)^{\frac{1}{2}}$$

- When $p=\infty$, it is called the Chebyshev distance:

$$L_\infty(x_i, x_j) = \max_{l} \left|x_i^{(l)} - x_j^{(l)}\right|$$
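The four formulas above differ only in the exponent $p$, so a single function can cover them. The following is a minimal NumPy sketch. The function name `minkowski` and the sample vectors are illustrative choices, and `p=np.inf` is used to select the Chebyshev case.

```python
import numpy as np

def minkowski(x_i, x_j, p):
    """Minkowski distance L_p between two feature vectors (p may be np.inf)."""
    diff = np.abs(np.asarray(x_i, dtype=float) - np.asarray(x_j, dtype=float))
    if np.isinf(p):
        # Chebyshev distance: the largest coordinate difference
        return float(diff.max())
    # General case: (sum |x_i^(l) - x_j^(l)|^p)^(1/p)
    return float((diff ** p).sum() ** (1.0 / p))

a, b = [1, 2, 3], [4, 0, 3]
print(minkowski(a, b, 1))       # Manhattan: 3 + 2 + 0 = 5
print(minkowski(a, b, 2))       # Euclidean: sqrt(9 + 4 + 0)
print(minkowski(a, b, np.inf))  # Chebyshev: max(3, 2, 0) = 3
```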

Weighted average

Suppose there are three numbers $a_1, a_2, a_3$ with weights $p_1, p_2, p_3$. Their weighted average is:

$$\bar{a} = \frac{p_1 a_1 + p_2 a_2 + p_3 a_3}{p_1 + p_2 + p_3}$$
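As a quick numeric check of the formula, here is a small sketch with made-up values and integer weights. Because the formula divides by $p_1 + p_2 + p_3$, the weights do not need to sum to 1. NumPy's `np.average` with its `weights` argument computes the same quantity.

```python
import numpy as np

a = np.array([80.0, 90.0, 70.0])  # a_1, a_2, a_3 (illustrative values)
p = np.array([2.0, 3.0, 5.0])     # weights p_1, p_2, p_3 (illustrative values)

# Direct translation of the formula: (p1*a1 + p2*a2 + p3*a3) / (p1 + p2 + p3)
manual = (p * a).sum() / p.sum()

# NumPy's weighted mean gives the same result
builtin = np.average(a, weights=p)
print(manual, builtin)  # (160 + 270 + 350) / 10 = 78.0
```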

KNN

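K-nearest neighbors (KNN) means "the k nearest neighbors": each sample can be represented by the k neighbors closest to it.

The algorithm is simple and relies mainly on a distance metric:

- Compute the distance between the test sample and every training sample;

- Sort the distances in increasing order;

- Select the K points with the smallest distances;

- Count how often each class occurs among these K points;

- Return the most frequent class among the K points as the predicted class of the test sample.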
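The five steps above can be written as one short brute-force function. This is a minimal sketch, not the post's own code. The function name `knn_predict` is hypothetical, and the six training samples (labels 0 and 1) are borrowed from the movie data used in the code later in this post.

```python
import numpy as np
from collections import Counter

def knn_predict(X_train, y_train, x, k):
    """Brute-force kNN classification following the five steps above."""
    X_train = np.asarray(X_train, dtype=float)
    y_train = np.asarray(y_train)
    # Step 1: Euclidean distance from the test point to every training point
    d = np.sqrt(((X_train - np.asarray(x, dtype=float)) ** 2).sum(axis=1))
    # Steps 2-3: sort by increasing distance, keep the indices of the k nearest
    nearest = np.argsort(d)[:k]
    # Step 4: how often each class occurs among the k neighbours
    votes = Counter(y_train[nearest].tolist())
    # Step 5: the most frequent class is the prediction
    return votes.most_common(1)[0][0]

X = [[3, 104], [2, 100], [1, 81], [101, 10], [99, 5], [98, 2]]
y = [0, 0, 0, 1, 1, 1]
print(knn_predict(X, y, [18, 90], k=3))  # all three neighbours are class 0
print(knn_predict(X, y, [18, 90], k=5))  # three class-0 votes vs two class-1 votes
```

Vectorizing the distance computation with NumPy avoids the explicit Python loop, but the work is still one distance per training sample. The optimization section further down addresses that cost.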

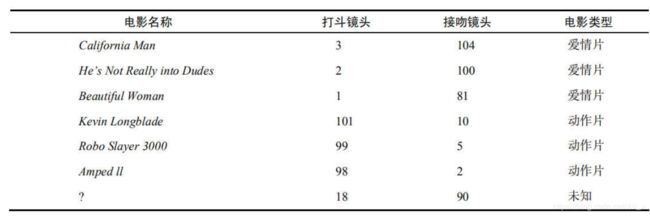

Classification example

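- Compute the Euclidean distance between the movie to be predicted and each known movie.

- Then sort the distances in ascending order to get [18.7, 19.2, 20.5, 115.3], and look up the genres of the corresponding movies: three are romance films and one is an action film, so the movie to be predicted is classified as a romance film.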

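Regression example

- Likewise, compute and sort the distances and take k=4, giving [18.7, 19.2, 20.5, 115.3]. The sweetness index of the movie to be predicted is then the average of these neighbors' sweetness values, weighted by distance; that weighted value is the prediction.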
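The post does not spell out the weights. A common choice, assumed here, is to weight each neighbor by the reciprocal of its distance. Let $d_i$ be the distance to the $i$-th nearest neighbor and $y_i$ its target value. Plugging $p_i = 1/d_i$ into the weighted-average formula above gives:

$$\hat{y} = \frac{\sum_{i=1}^{k} \frac{1}{d_i}\, y_i}{\sum_{i=1}^{k} \frac{1}{d_i}}$$

Closer neighbors receive larger weights and therefore pull the prediction toward their own values.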

The three basic elements of KNN

Distance metric, choice of k, and decision rule

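- Distance: Euclidean distance is generally used, but Manhattan distance, Chebyshev distance, and others are also possible.

- Choice of k

The choice of k has a major impact on the results of a kNN model. A larger k means predicting from the training instances in a larger neighborhood. The model then considers too many neighboring instances, including many that are far away and no longer relevant to the prediction, which can make the prediction wrong (underfitting). A smaller k means predicting from a smaller neighborhood, which makes the model sensitive to noise: if the nearest instances happen to be noise, the prediction will be wrong (overfitting).

- Grid search (with cross-validation) can be used to select k and find the optimal value.

- Decision rule

1. Classification: usually majority voting, i.e. the class of the test instance is decided by the majority class among its k nearest training instances. Weighted voting can also be used.

2. Regression: take the mean of the neighbors' values, or a weighted mean.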
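The trade-off in choosing k can be seen even on the six-movie toy data used in the code below. This sketch is a simplified stand-in for the `GridSearchCV` used later. It scores each candidate k with leave-one-out validation: every sample is held out in turn and predicted from the other five by majority vote. The helper name `loo_accuracy` is hypothetical.

```python
import numpy as np
from collections import Counter

# Six labelled movies from the code later in this post (labels 0 and 1)
X = np.array([[3, 104], [2, 100], [1, 81], [101, 10], [99, 5], [98, 2]], dtype=float)
y = np.array([0, 0, 0, 1, 1, 1])

def loo_accuracy(X, y, k):
    """Leave-one-out accuracy of majority-vote kNN for a given k."""
    correct = 0
    for i in range(len(X)):
        mask = np.arange(len(X)) != i            # hold out sample i
        d = np.linalg.norm(X[mask] - X[i], axis=1)
        nearest = y[mask][np.argsort(d)[:k]]     # labels of the k nearest
        pred = Counter(nearest.tolist()).most_common(1)[0][0]
        correct += int(pred == y[i])
    return correct / len(X)

# A tiny grid search over odd k (odd k avoids two-class voting ties)
scores = {k: loo_accuracy(X, y, k) for k in (1, 3, 5)}
best_k = max(scores, key=scores.get)
print(scores, best_k)
```

With k=5, only two same-class samples remain once one is held out, so three of the five neighbors always come from the other class and every prediction is wrong. This is a concrete case of a k that is too large.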

Step-by-step implementation and sklearn implementation

```python
# python3.7
# -*- coding: utf-8 -*-
# @Author : huinono
# @Software : PyCharm
import warnings

import numpy as np
import pandas as pd
import matplotlib as mpl
from sklearn.datasets import load_iris
from sklearn.preprocessing import StandardScaler, LabelEncoder
from sklearn.model_selection import train_test_split, GridSearchCV
from sklearn.neighbors import KNeighborsClassifier, KNeighborsRegressor
from sklearn.metrics import classification_report, mean_squared_error, r2_score

warnings.filterwarnings('ignore')
# Chinese font for plots (mpl.rcParams and plt.rcParams are the same object)
mpl.rcParams['font.sans-serif'] = 'SimHei'
mpl.rcParams['axes.unicode_minus'] = False


def knn_Vote():
    """Classification by majority vote among the k nearest neighbours."""
    movie = ['Romance', 'Action']
    # each row: feature 1, feature 2, class label
    data = np.array([
        [3, 104, 0], [2, 100, 0], [1, 81, 0],
        [101, 10, 1], [99, 5, 1], [98, 2, 1]
    ])
    x = [18, 90]  # test sample
    k = 5  # k value
    dis = []
    for i in data:
        # Euclidean distance to each training sample, stored with its label
        d = np.sqrt((x[0] - i[0]) ** 2 + (x[1] - i[1]) ** 2)
        dis.append([d, i[2]])
    dis.sort(key=lambda item: item[0])
    print(dis)
    count = {}
    for i in dis[0:k]:
        count[i[1]] = count.get(i[1], 0) + 1  # one vote per neighbour
    max_key = max(count, key=count.get)
    print(count)
    print(movie[max_key])


def knn_VoteRight():
    """Classification by distance-weighted vote (weight = 1 / distance)."""
    movie = ['Romance', 'Action']
    data = np.array([
        [3, 104, 0], [2, 100, 0], [1, 81, 0],
        [101, 10, 1], [99, 5, 1], [98, 2, 1]
    ])
    x = [18, 90]  # test sample
    k = 5  # k value
    dis = []
    for i in data:
        d = np.sqrt((x[0] - i[0]) ** 2 + (x[1] - i[1]) ** 2)
        dis.append([d, i[2]])
    dis.sort(key=lambda item: item[0])
    print(dis)
    count = {}
    for i in dis[0:k]:  # only the k nearest neighbours vote
        count[i[1]] = count.get(i[1], 0) + 1 / i[0]
    max_key = max(count, key=count.get)
    print(count)
    print(movie[max_key])


def knn_Regression():
    """Regression: plain mean of the k nearest targets."""
    data = np.array([
        [3, 104, 98], [2, 100, 93], [1, 81, 95],
        [101, 10, 16], [99, 5, 8], [98, 2, 7]
    ])
    x = [18, 90]  # test sample
    k = 5  # k value
    dis = []
    for i in data:
        d = np.sqrt((x[0] - i[0]) ** 2 + (x[1] - i[1]) ** 2)
        dis.append([d, i[2]])
    dis.sort(key=lambda item: item[0])
    total = 0  # avoid shadowing the built-in sum()
    for i in dis[0:k]:
        total += i[1]
    print(total / k)  # the value predicted by regression


def knn_VoteRegression():
    """Regression: mean of the k nearest targets weighted by 1 / distance."""
    data = np.array([
        [3, 104, 98], [2, 100, 93], [1, 81, 95],
        [101, 10, 16], [99, 5, 8], [98, 2, 7]
    ])
    x = [18, 90]  # test sample
    k = 5  # k value
    dis = []
    for i in data:
        d = np.sqrt((x[0] - i[0]) ** 2 + (x[1] - i[1]) ** 2)
        dis.append([d, i[2]])
    dis.sort(key=lambda item: item[0])
    dis = [[1 / i[0], i[1]] for i in dis][0:k]  # [weight, target] pairs
    a = 1 / sum([i[0] for i in dis])            # 1 / (sum of weights)
    res = sum([i[0] * i[1] for i in dis])       # weighted sum of targets
    print(res * a)


def knn_classification():
    iris_data = load_iris()
    x = iris_data['data']
    y = iris_data['target']
    # label-encode y (iris targets are already 0/1/2, so this changes nothing here)
    y = LabelEncoder().fit_transform(y)
    # split the data with the hold-out method
    x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.3)
    # fit the scaler on the training set only, then apply it to both sets
    scaler = StandardScaler().fit(x_train)
    x_train, x_test = scaler.transform(x_train), scaler.transform(x_test)
    # choose the best k with grid-search cross-validation
    param_grid = {'n_neighbors': [3, 4, 5, 6]}
    model = KNeighborsClassifier()
    knn = GridSearchCV(model, param_grid=param_grid, cv=5)
    knn.fit(x_train, y_train)
    print(knn.best_params_)
    y_ = knn.predict(x_test)
    print(classification_report(y_test, y_))
    # predicted class probabilities
    print(knn.predict_proba(x_test))


def knn_regression():
    data = pd.read_csv(r'data/Advertising.csv')
    x = data.iloc[:, :-1]  # all columns except the last are features
    y = data.iloc[:, -1]   # the last column is the target
    x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.3)
    scaler = StandardScaler().fit(x_train)
    x_train, x_test = scaler.transform(x_train), scaler.transform(x_test)
    param_grid = {'n_neighbors': [3, 4, 5, 6]}
    model = KNeighborsRegressor()
    knn = GridSearchCV(model, param_grid=param_grid, cv=5)
    knn.fit(x_train, y_train)
    print(knn.best_params_)
    y_ = knn.predict(x_test)
    print(mean_squared_error(y_test, y_))
    print(r2_score(y_test, y_))


if __name__ == '__main__':
    knn_Vote()
    knn_VoteRight()
    knn_Regression()
    knn_VoteRegression()
    knn_classification()
    # knn_regression() requires data/Advertising.csv
```

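Algorithm optimization

- Brute force: the simplest implementation of k-nearest neighbors is a linear scan, which computes the distance from the test instance to every training instance. This becomes infeasible on large datasets.

- KD tree: to make k-nearest-neighbor search more efficient, the training data can be stored in a special structure that reduces the number of distance computations; the kd tree method is one such approach. It consists of two processes: constructing the kd tree (storing the training set in the special structure) and searching the kd tree (reducing the amount of computation during search).

- Ball tree (BallTree)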

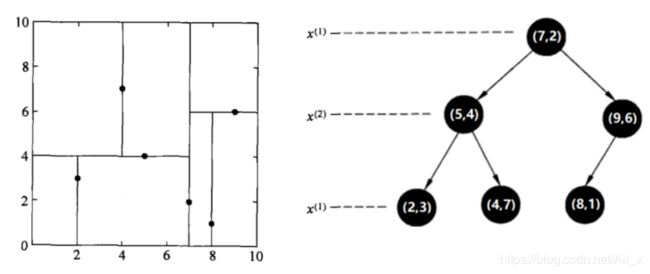
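In sklearn, these three strategies correspond to the `algorithm` parameter of `KNeighborsClassifier` (`'brute'`, `'kd_tree'`, `'ball_tree'`, or `'auto'` to let sklearn choose). The sketch below assumes synthetic random data. The strategies only change how neighbors are found, not which ones are found, so the predictions are expected to agree (barring exact distance ties).

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

rng = np.random.default_rng(0)
X_train = rng.random((500, 3))
y_train = (X_train[:, 0] + X_train[:, 1] > 1).astype(int)  # synthetic labels
X_test = rng.random((50, 3))

preds = {}
for algo in ("brute", "kd_tree", "ball_tree"):
    # Same model and same k; only the neighbour-search strategy changes
    clf = KNeighborsClassifier(n_neighbors=5, algorithm=algo).fit(X_train, y_train)
    preds[algo] = clf.predict(X_test)

print(all(np.array_equal(preds["brute"], p) for p in preds.values()))
```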

KD-Tree

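- For m samples with n-dimensional features, compute the variance of each of the n features, and use the feature with the largest variance, say the k-th dimension (here k is a feature index, not the number of neighbors), as the splitting dimension of the root node.

- Take the median of the k-th feature as the split point: samples whose value is smaller go to the left subtree, and samples whose value is greater than or equal to it go to the right subtree. Apply the same procedure to the left and right subtrees, each time choosing the feature with the largest variance; recursing in this way produces the KD tree.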
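The construction rule can be sketched recursively as follows. This is a minimal illustration, not sklearn's implementation. The `Node` class and the helper names are hypothetical. When the number of points is even, the sketch takes the upper of the two middle points as the median sample. Ties on the split value may land on either side in this simplified version.

```python
import numpy as np

class Node:
    """One KD-tree node: the splitting sample, its split axis and two subtrees."""
    def __init__(self, point, axis, left=None, right=None):
        self.point, self.axis, self.left, self.right = point, axis, left, right

def build_kdtree(points):
    """Split on the max-variance feature at the (upper) median sample."""
    points = np.asarray(points, dtype=float)
    if len(points) == 0:
        return None
    axis = int(np.argmax(points.var(axis=0)))        # feature with largest variance
    points = points[np.argsort(points[:, axis], kind="stable")]
    mid = len(points) // 2                           # median position
    return Node(points[mid], axis,
                left=build_kdtree(points[:mid]),      # smaller values
                right=build_kdtree(points[mid + 1:])) # larger (or equal) values

def show(node, depth=0):
    """Print the tree, one node per line, children indented under parents."""
    if node is None:
        return
    is_leaf = node.left is None and node.right is None
    label = "leaf" if is_leaf else f"split on x{node.axis + 1}"
    print("    " * depth + f"{tuple(node.point.tolist())} {label}")
    show(node.left, depth + 1)
    show(node.right, depth + 1)

root = build_kdtree([(2, 3), (5, 4), (9, 6), (4, 7), (8, 1), (7, 2)])
show(root)
```

On the example dataset below, this gives root (7,2) split on the first feature. Its left child (5,4) is split on the second feature and has children (2,3) and (4,7). Its right child (9,6) is split on the second feature and has a single left child (8,1).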

Example

Constructing the KD tree

Given a two-dimensional dataset: $T=\{(2,3),(5,4),(9,6),(4,7),(8,1),(7,2)\}$

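- Compute the variance of each dimension: $x_1 = [2,5,9,4,8,7]$ has variance 5.81 and $x_2 = [3,4,6,7,1,2]$ has variance 4.47, so the first axis is chosen as the splitting feature of the root node.

- Take the median of the first feature. The sorted values are 2, 4, 5, 7, 8, 9, so the median is 6. No sample has this value, so 7 is taken as the split value and (7,2) becomes the root. This gives the left subset $\{(2,3),(5,4),(4,7)\}$ and the right subset $\{(9,6),(8,1)\}$.

- Next compute the variance of each feature axis in the left subtree: $[2,5,4]$ has variance 1.56 and $[3,4,7]$ has variance 2.89, so the second axis is the splitting feature. The median sample on that axis, (5,4), becomes the split node, with (2,3) in its left subtree and (4,7) in its right subtree.

- Process the remaining nodes the same way until only leaf nodes remain. In the right subset $\{(9,6),(8,1)\}$, the second feature again has the larger variance (6.25 vs 0.25), giving (9,6) as the split node with (8,1) as its left child.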
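The variances quoted above are population variances (divide by the number of samples). NumPy's `np.var` uses this convention by default (`ddof=0`), so the figures can be reproduced directly:

```python
import numpy as np

T = np.array([(2, 3), (5, 4), (9, 6), (4, 7), (8, 1), (7, 2)], dtype=float)

# np.var defaults to ddof=0 (population variance), matching the figures above
print(T.var(axis=0).round(2))     # variances of x1 and x2 over all six points

left = T[T[:, 0] < 7]             # points routed to the left of the root (7, 2)
print(left.var(axis=0).round(2))  # variances of x1 and x2 in the left subtree
```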

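Searching the KD tree

Suppose we search for the nearest neighbor of (2,5).

1. Search for (2,5) down the KD tree. At the root (7,2), 2 < 7 leads to the left subtree; at (5,4), 5 ≥ 4 leads to the right subtree. So the point falls in the rectangular region of the leaf node (4,7).

2. The distance from (2,5) to (4,7) is $\sqrt{8}=2\sqrt{2}\approx 2.83$. Record (4,7) as the current nearest neighbor and draw a circle centered at (2,5) with radius $2\sqrt{2}$.

3. Backtrack to the parent node of (4,7), which is (5,4). Its distance to (2,5) is $\sqrt{10}\approx 3.16 > 2\sqrt{2}$, so the nearest neighbor does not change. Then check whether the circle intersects the rectangular region of the other child of (5,4), i.e. the region containing (2,3).

4. If it does not intersect, go up to the parent of (5,4), which is (7,2), and search its other subtree. If it intersects, as here (the splitting line $x^{(2)}=4$ is only 1 away from (2,5)), compute the distance from (2,3) to (2,5). That distance is 2, smaller than $2\sqrt{2}$, so the nearest neighbor is updated to (2,3) and the radius shrinks to 2. Then go up to (7,2) and search its other subtree.

5. At (7,2), the distance to (2,5) is $\sqrt{34}\approx 5.83 > 2$, so there is no update. Check whether the circle intersects the region of (9,6), the other child of (7,2), and repeat step 4. The splitting line $x^{(1)}=7$ is 5 away from (2,5), more than the radius 2, so the circle does not intersect and that subtree is skipped.

6. Continue until the backtracking path is exhausted. The nearest neighbor of (2,5) is (2,3), at distance 2.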
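The descend-then-backtrack procedure above can be sketched recursively. This is a minimal illustration under the same construction rule (max-variance axis, upper-median split). The dict-based nodes and the function names `build` and `nearest` are hypothetical, not sklearn's API. The pruning test is the "does the circle cross the splitting line" check from steps 3 to 5.

```python
import numpy as np

def build(points):
    """KD tree as constructed above: max-variance axis, (upper) median split."""
    if len(points) == 0:
        return None
    axis = int(np.argmax(points.var(axis=0)))
    points = points[np.argsort(points[:, axis], kind="stable")]
    mid = len(points) // 2
    return {"point": points[mid], "axis": axis,
            "left": build(points[:mid]), "right": build(points[mid + 1:])}

def nearest(node, target, best=None):
    """Descend to the leaf region of target, then backtrack with pruning.

    best is a (point, distance) pair for the closest point found so far.
    """
    if node is None:
        return best
    point, axis = node["point"], node["axis"]
    diff = target[axis] - point[axis]
    # Search the child on the target's side of the split first
    near, far = (node["left"], node["right"]) if diff < 0 else (node["right"], node["left"])
    best = nearest(near, target, best)
    # On the way back up, the node itself may be closer than the current best
    d = float(np.linalg.norm(point - target))
    if best is None or d < best[1]:
        best = (point, d)
    # The other child's region can only hold a closer point if the circle
    # around target (radius = best distance) crosses the splitting line
    if abs(diff) < best[1]:
        best = nearest(far, target, best)
    return best

T = np.array([(2, 3), (5, 4), (9, 6), (4, 7), (8, 1), (7, 2)], dtype=float)
point, dist = nearest(build(T), np.array([2.0, 5.0]))
print(point.tolist(), dist)  # [2.0, 3.0] 2.0
```

On the example, the calls visit (4,7), then (5,4), then (2,3), then (7,2), and the (9,6) subtree is pruned, following the steps above.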

```python
import numpy as np
from sklearn.neighbors import KDTree

if __name__ == '__main__':
    x = np.array([[2, 3], [5, 4], [9, 6], [4, 7], [8, 1], [7, 2]])
    tree = KDTree(x, leaf_size=2)
    dist, ind = tree.query(np.array([[2, 5]]), k=5)
    print(ind)
    print(dist)
```
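A brute-force cross-check makes the `KDTree.query` result easier to read. The sketch below computes every distance with NumPy, sorts them, and compares the result with the tree's answer. The first returned index, 0, is the sample (2,3) at distance 2, which agrees with the hand search above.

```python
import numpy as np
from sklearn.neighbors import KDTree

x = np.array([[2, 3], [5, 4], [9, 6], [4, 7], [8, 1], [7, 2]])
q = np.array([[2, 5]])

dist, ind = KDTree(x, leaf_size=2).query(q, k=5)

# Brute force: distance to every sample, sorted, first 5 kept
d_all = np.linalg.norm(x - q, axis=1)
order = np.argsort(d_all)[:5]

print(ind[0], order)          # the same indices from both methods
print(dist[0], d_all[order])  # the same distances from both methods
```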

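Pros and cons

- Pros:

  - Simple idea; can be used for both classification and regression

  - Lazy learning: no training phase with brute force (a KD tree, however, has to be built first)

  - Relatively insensitive to outliers

- Cons:

  - Heavy computation and slow speed

  - When classes are imbalanced, prediction accuracy on the rare classes is low

  - Structures such as KD trees and ball trees need a large amount of memory to build

  - Compared with a decision tree model, a KNN model is less interpretable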