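Learning Hadoop (Part 1)

Contents:

- Dual-boot installation (Ubuntu)

- Hadoop pseudo-distributed installation

- Downloading, installing, and configuring Eclipse on Ubuntu

- Downloading, installing, and configuring the Maven plugin for Eclipse

- Downloading, installing, and configuring the Hadoop plugin for Eclipse

- HDFS shell commands

- Basic programming with the HDFS Java API

Note: installing and uninstalling MySQL, the JDK, Eclipse, Tomcat, and Workbench on Ubuntu

Reference: https://blog.csdn.net/t1dmzks/article/details/52079791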

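I. Installing Linux (Ubuntu 16.04 LTS) as a dual-boot system

Step 1: Create a bootable USB installer

Reference: http://jingyan.baidu.com/article/59703552e0a6e18fc007409f.html

Step 2: Install the dual-boot system

Reference: http://jingyan.baidu.com/article/dca1fa6fa3b905f1a44052bd.html

Notes:

1. Ubuntu download page: https://www.ubuntu.com/download/desktop

(for example, ubuntu-16.04.4-desktop-amd64.iso)

2. Before installing, set the computer to boot from the USB drive: open the BIOS and change the boot order (how to do this differs between computer models).

3. It is best to stay offline during installation.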

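II. Hadoop pseudo-distributed installation

Reference: https://www.cnblogs.com/87hbteo/p/7606012.html

Create the hadoop user -> set up passwordless SSH login -> download, install, and configure the JDK -> download, install, and configure Hadoop -> pseudo-distributed configuration -> start Hadoop -> run an example

1. Create the hadoop user

sudo useradd -m username -s /bin/bash # create the user with /bin/bash as the login shell

sudo passwd username # set the password, e.g. 123

sudo adduser username sudo # add the user to the sudo group for administrator rights

su - username # switch to the new user (lln in this article)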

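2. Install SSH and set up passwordless SSH login

sudo apt-get install openssh-server # install the SSH server

ssh localhost # log in over SSH; answer yes on the first login

exit # leave the ssh localhost session

cd ~/.ssh/ # if this directory does not exist, run ssh localhost once first

ssh-keygen -t rsa # press Enter at each of the three prompts

cat ./id_rsa.pub >> ./authorized_keys # authorize the new key

ssh localhost # login now works without a password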

3. Download, install, and configure the JDK

Download: http://www.oracle.com/technetwork/java/javase/downloads/index.html

(for example, jdk-8u162-linux-x64.tar.gz)

Extract: sudo tar zxvf jdk-8u162-linux-x64.tar.gz -C /usr/lib/jvm (extracts into /usr/lib/jvm; if that directory does not exist yet, create it first with sudo mkdir -p /usr/lib/jvm)

Configure: vim ~/.bashrc

export JAVA_HOME=/usr/lib/jvm/jdk1.8.0_162

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

Apply: source ~/.bashrc

Check the version: java -version

Note:

Installing vim: sudo apt-get install vim
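After source ~/.bashrc, java -version shows which JDK is on the PATH. As a small extra check, the sketch below prints the version and home directory of the JVM that actually runs it, together with the JAVA_HOME value exported by the shell. The class name JavaEnvCheck and the method describe are made up for this illustration.

```java
// Hypothetical helper: reports which JVM is running and what JAVA_HOME is set to.
final class JavaEnvCheck {

    /** Returns a one-line summary of the running JVM. */
    static String describe() {
        return "java.version=" + System.getProperty("java.version")
                + ", java.home=" + System.getProperty("java.home");
    }

    public static void main(String[] args) {
        System.out.println(describe());
        // JAVA_HOME is null unless the export from ~/.bashrc is active in this shell.
        System.out.println("JAVA_HOME=" + System.getenv("JAVA_HOME"));
    }
}
```

If the printed java.home does not sit under the directory named in JAVA_HOME, a different Java installation is probably earlier on the PATH.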

4. Download, install, and configure Hadoop

Download: http://hadoop.apache.org/releases.html

(for example, hadoop-2.6.5.tar.gz)

Extract: sudo tar -zxvf hadoop-2.6.5.tar.gz -C /usr/local (extracts to /usr/local/hadoop-2.6.5)

Ownership: sudo chown -R username /usr/local/hadoop-2.6.5 # make the user from step 1 the owner

Configure (add to ~/.bashrc):

export HADOOP_HOME=/usr/local/hadoop-2.6.5

export CLASSPATH=$($HADOOP_HOME/bin/hadoop classpath):$CLASSPATH

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

Apply: source ~/.bashrc

Check the version: hadoop version

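5. Pseudo-distributed configuration

Both files are in etc/hadoop under the Hadoop installation directory.

Edit core-site.xml:

    <configuration>
        <property>
            <name>hadoop.tmp.dir</name>
            <value>file:/usr/local/hadoop-2.6.5/tmp</value>
            <description>Abase for other temporary directories.</description>
        </property>
        <property>
            <name>fs.defaultFS</name>
            <value>hdfs://localhost:9000</value>
        </property>
    </configuration>

Edit hdfs-site.xml:

    <configuration>
        <property>
            <name>dfs.replication</name>
            <value>1</value>
        </property>
        <property>
            <name>dfs.namenode.name.dir</name>
            <value>file:/usr/local/hadoop-2.6.5/tmp/dfs/name</value>
        </property>
        <property>
            <name>dfs.datanode.data.dir</name>
            <value>file:/usr/local/hadoop-2.6.5/tmp/dfs/data</value>
        </property>
    </configuration>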
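Hadoop site files such as core-site.xml and hdfs-site.xml are a flat list of properties, each with a name and a value. The sketch below reads such a file into a map using only the XML parser that ships with the JDK, which can help when checking that a port or path was typed correctly. It is an illustration of the file format, not the way Hadoop itself loads configuration; SiteXmlReader and readProperties are hypothetical names.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper: turns the <property> entries of a site file into a map.
final class SiteXmlReader {

    /** Parses the XML text and returns name -> value in document order. */
    static Map<String, String> readProperties(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            NodeList props = doc.getElementsByTagName("property");
            Map<String, String> result = new LinkedHashMap<>();
            for (int i = 0; i < props.getLength(); i++) {
                Element p = (Element) props.item(i);
                // Each property is assumed to contain exactly one name and one value.
                String name = p.getElementsByTagName("name").item(0).getTextContent().trim();
                String value = p.getElementsByTagName("value").item(0).getTextContent().trim();
                result.put(name, value);
            }
            return result;
        } catch (Exception e) {
            throw new IllegalArgumentException("Not a valid site file", e);
        }
    }

    public static void main(String[] args) {
        String xml = "<configuration>"
                + "<property><name>fs.defaultFS</name><value>hdfs://localhost:9000</value></property>"
                + "<property><name>dfs.replication</name><value>1</value></property>"
                + "</configuration>";
        readProperties(xml).forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```

To check a real file, its contents could be read into a string first and passed to readProperties.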

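Format the NameNode (from the Hadoop installation directory): ./bin/hdfs namenode -format

Start Hadoop: ./sbin/start-all.sh

List the Java processes: jps

Web interface: http://localhost:50070

Note:

Error: localhost:9000 failed on connection exception: java.net.ConnectException: Connection refused

Fix: this usually means the NameNode did not start; check the paths in core-site.xml and hdfs-site.xml for mistakes.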

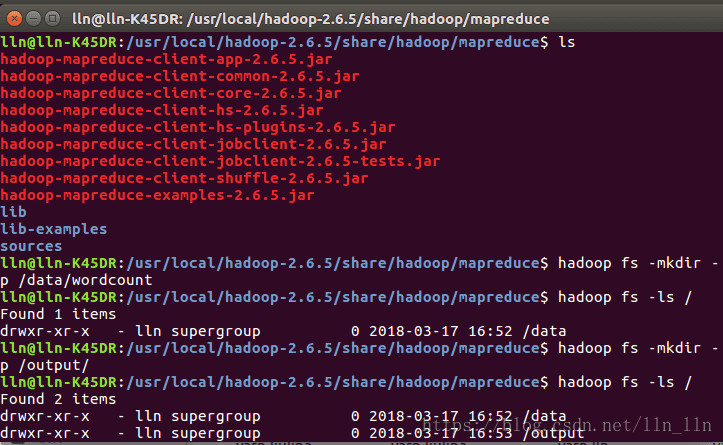

6. Running the WordCount example

Find the WordCount example jar that ships with Hadoop -> prepare the input and output paths and upload the input file -> run the example

Path: cd /usr/local/hadoop-2.6.5/share/hadoop/mapreduce

Create the directories: hadoop fs -mkdir -p /data/wordcount

hadoop fs -mkdir -p /output/

Create the input file locally: vim /usr/inputWord

Upload it to HDFS: hadoop fs -put /usr/inputWord /data/wordcount

Check: hadoop fs -ls /data/wordcount

hadoop fs -text /data/wordcount/inputWord

Run: hadoop jar hadoop-mapreduce-examples-2.6.5.jar wordcount /data/wordcount /output/wordcountresult
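To make clear what the job computes, here is a minimal in-memory sketch of the same counting logic: split the input on whitespace and sum the occurrences of each word. It is not the code of the bundled example and does not use MapReduce at all; WordCountSketch and count are names invented for this illustration.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory analogue of a word count job.
final class WordCountSketch {

    /** Counts whitespace-separated tokens; the TreeMap keeps words sorted. */
    static Map<String, Integer> count(String text) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String token : text.split("\\s+")) {
            if (token.isEmpty()) {
                continue; // leading whitespace produces one empty token
            }
            // "Map" step emits (token, 1); "reduce" step sums per token.
            counts.merge(token, 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        String input = "hello hadoop\nhello hdfs";
        count(input).forEach((word, n) -> System.out.println(word + "\t" + n));
    }
}
```

The real job writes its result as files under the output directory given on the command line, which can be inspected with hadoop fs -ls /output/wordcountresult and hadoop fs -text on the files listed there.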

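III. Downloading, installing, and configuring Eclipse on Ubuntu

Reference: https://www.linuxidc.com/Linux/2016-07/133482.htm

or https://blog.csdn.net/qq_37549757/article/details/56012895

Download, install, and configure the JDK -> download, install, and configure Eclipse

(The JDK steps are skipped here; they are covered in Part II, step 3.)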

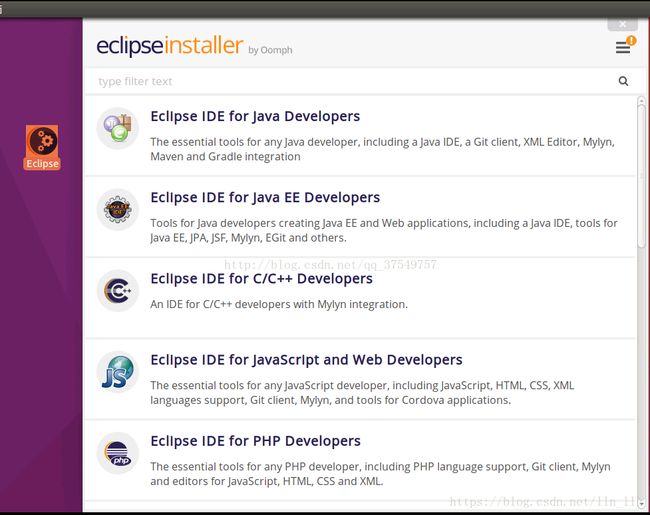

1. Download, install, and configure Eclipse

Download: http://www.eclipse.org/downloads/

(for example, eclipse-inst-linux64.tar.gz)

Extract: sudo tar zxvf eclipse-inst-linux64.tar.gz -C /opt/jre

Install: go to /opt/jre/eclipse-installer, launch eclipse-inst, and install the package you need

(I chose Eclipse IDE for Java EE Developers.)

Note:

Error: A Java Runtime Environment (JRE) or Java Development Kit (JDK) must be available in order to run Eclipse. No Java virtual machine was found after searching the following locations:…

Fix: create a symbolic link to the Java binaries

Go to /opt/jre/eclipse-installer -> sudo mkdir jre -> cd jre ->

sudo ln -s /usr/lib/jvm/jdk1.8.0_162/bin bin

Create a desktop launcher:

cd /usr/share/applications

sudo vim eclipse.desktop

Add:

[Desktop Entry]

Encoding=UTF-8

Name=Eclipse

Comment=Eclipse

Exec=/home/lln/eclipse/jee-oxygen/eclipse/eclipse

Icon=/home/lln/eclipse/jee-oxygen/eclipse/icon.xpm

Terminal=false

StartupNotify=true

Type=Application

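Here the value after "Exec=" is the path of the eclipse executable in the Eclipse installation directory, and the value after "Icon=" is the path of the icon image in the same directory.

Change the owner: sudo chown lln: ./eclipse.desktop

Change the permissions: sudo chmod u+x eclipse.desktop

Finally, in /usr/share/applications, right-click the Eclipse icon and copy it to the desktop.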

IV. Downloading, installing, and configuring the Maven plugin for Eclipse

Reference: http://www.cnblogs.com/travellife/p/4091993.html

or https://www.cnblogs.com/lr393993507/p/5310433.html

Option 1: install Maven locally

Download: http://maven.apache.org/download.cgi

(for example, apache-maven-3.5.3-bin.tar.gz)

Extract: sudo tar zxvf apache-maven-3.5.3-bin.tar.gz -C /usr/local

Configure: sudo gedit /etc/profile

Add:

export M2_HOME=/usr/local/apache-maven-3.5.3

export M2=$M2_HOME/bin

export PATH=$M2:$PATH

Apply: source /etc/profile

Check the version: mvn -version

Change the local Maven repository location:

Open the settings.xml file in /usr/local/apache-maven-3.5.3/conf. Two parts of it matter here: the localRepository tag sets the location of your local repository, and a second tag holds the connection settings for the Maven server.

Configure the Maven plugin in Eclipse:

Window -> Preferences -> Maven -> Installations -> Add -> select the directory where Maven is installed on your machine

User Settings -> click Browse and select your Maven settings.xml file: /usr/local/apache-maven-3.5.3/conf/settings.xml

Option 2: install the Maven plugin from inside Eclipse

Help -> Install New Software -> Add

Official site: https://www.eclipse.org/m2e/

Name: m2e

Location: http://download.eclipse.org/technology/m2e/releases

Note:

Error: Cannot complete the install because one or more required items could not be found.

Fix: this is a version mismatch caused by the update-site URL; look on the official site for the m2e release that matches your Eclipse version. See also: http://www.cnblogs.com/travellife/p/4090406.html

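V. Downloading, installing, and configuring the Hadoop plugin for Eclipse

Reference: https://www.cnblogs.com/liuchangchun/p/4121817.html

Reference for setting up a Hadoop development environment in Eclipse: https://blog.csdn.net/qq_23617681/article/details/51246803

Option 1: download the source and build the plugin yourself

Option 2: download a prebuilt plugin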

VI. HDFS shell commands

Official documentation:

http://hadoop.apache.org/docs/r2.6.5/hadoop-project-dist/hadoop-common/FileSystemShell.html#appendToFile

Hadoop home page -> Documentation -> Release 2.6.5 -> General -> FileSystem Shell (hadoop command)

Compiling and running a Java program from the shell

1. Locate java: which java

2. Compile (.java -> .class): javac Filename.java

3. Build the jar: jar cvf Filename.jar Filename*class

4. Run it with Hadoop: /usr/local/hadoop-2.6.5/bin/hadoop jar Filename.jar Filename
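For trying the four steps above before writing any HDFS code, a placeholder program is enough. The sketch below is such a stand-in: the class is called Filename only so that it matches the commands, and the greeting method is a made-up example with no Hadoop dependency.

```java
// Placeholder program saved as Filename.java, matching the commands above.
final class Filename {

    /** Builds the message to print; uses the first argument if one is given. */
    static String greeting(String[] args) {
        return args.length > 0 ? "Hello, " + args[0] : "Hello, Hadoop";
    }

    public static void main(String[] args) {
        // hadoop jar Filename.jar Filename [name] ends up calling this method.
        System.out.println(greeting(args));
    }
}
```

Because the last argument of hadoop jar names the class whose main method is run, the class name in the source file, the jar contents, and the command line all have to agree.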

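VII. Basic programming with the HDFS Java API

Reference: https://blog.csdn.net/yuan_xw/article/details/50383005

API documentation: http://hadoop.apache.org/docs/r2.6.5/api/index.html

Finding the Hadoop Java API documentation

Official site: http://hadoop.apache.org/

Follow Documentation -> Release 2.6.5 -> Reference -> API Docs to see details of the packages, interfaces, and classes.

1. Start Hadoop:

cd /usr/local/hadoop-2.6.5

./sbin/start-all.sh

jps

Leave safe mode: hdfs dfsadmin -safemode leave

2. Create a Maven project and add the dependencies

pom.xml

    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <groupId>com.hadoop</groupId>
        <artifactId>Hdfs</artifactId>
        <version>0.0.1-SNAPSHOT</version>
        <dependencies>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-common</artifactId>
                <version>2.6.5</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-hdfs</artifactId>
                <version>2.6.5</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-client</artifactId>
                <version>2.6.5</version>
            </dependency>
        </dependencies>
    </project>

3. Create the .java files

hdfsTest.java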

    import java.io.BufferedInputStream;
    import java.io.FileOutputStream;
    import java.io.IOException;
    import java.net.URI;
    import java.net.URISyntaxException;
    import java.util.Scanner;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IOUtils;

    public class hdfsTest {

        private static final String HDFS = "hdfs://localhost:9000";

        private String hdfsPath;
        private Configuration conf = new Configuration();

        public hdfsTest(String hdfs, Configuration conf) {
            this.hdfsPath = hdfs;
            this.conf = conf;
        }

        public hdfsTest() {
            // Default constructor: keeps the default Configuration.
        }

        public static void main(String[] args) throws IOException, URISyntaxException {
            hdfsTest hdfstest = new hdfsTest();
            String folder = HDFS + "/user/upload";
            String folder1 = HDFS + "/data/wordcount";
            String local = "/home/lln/下载/test";
            String local1 = "/home/lln/文档/upload";
            String local2 = "/home/lln/文档/helloworld";

            // Uncomment one call at a time to try the corresponding operation.
            // 1. Upload a file
            //hdfstest.put(local, folder);
            // 2. Download a file
            //hdfstest.get(folder,local);
            // 3. Print a file
            //hdfstest.cat(folder);
            // 4. Show the permissions, size, modification time, and path of a file
            //hdfstest.getFilestatus(folder);
            // 5. Recursively list a directory
            //hdfstest.lsr(folder);
            // 6. Create or delete a file
            //hdfstest.crermfile(folder);
            // 7. Create or delete a directory
            //hdfstest.crermdir(local1);
            // 8. Append content to a file in HDFS
            //hdfstest.append(folder,local);
            // 9. Delete a file in HDFS
            //hdfstest.rm(folder);
            // 10. Move a file from a source path to a destination path
            //hdfstest.mv(folder1,local);
        }

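        // 1. Upload a file; if the target already exists, ask whether to append or overwrite
        private void put(String local, String remote) throws IOException, URISyntaxException {
            FileSystem fs = FileSystem.get(new URI(HDFS), new Configuration());
            Path src = new Path(local);
            Path dst = new Path(remote);
            if (fs.exists(dst)) {
                System.out.println("File already exists!");
                System.out.println("append or overwrite?");
                Scanner sc = new Scanner(System.in);
                String s = sc.next();
                if (s.equals("append")) {
                    // Copy the local bytes onto the end of the existing HDFS file.
                    try (java.io.InputStream in = new BufferedInputStream(new java.io.FileInputStream(local));
                         java.io.OutputStream out = fs.append(dst)) {
                        IOUtils.copyBytes(in, out, 4096, false);
                    }
                    System.out.println("File appended");
                } else {
                    // delSrc = false keeps the local file; overwrite = true replaces the target.
                    fs.copyFromLocalFile(false, true, src, dst);
                    System.out.println("File overwritten");
                }
            } else {
                fs.copyFromLocalFile(src, dst);
                System.out.println("File uploaded to: " + remote);
            }
            fs.close();
        }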

        // 2. Download a file; if the local name is taken, save it under a different name
        private void get(String remote, String local) throws IllegalArgumentException, IOException, URISyntaxException {
            FileSystem fs = FileSystem.get(new URI(HDFS), new Configuration());
            // The name clash has to be checked on the local disk, not in HDFS.
            if (new java.io.File(local).exists()) {
                String renamed = "/home/lln/文档/test1";
                fs.copyToLocalFile(new Path(remote), new Path(renamed));
                System.out.println("File downloaded and renamed to: " + renamed);
            } else {
                fs.copyToLocalFile(new Path(remote), new Path(local));
                System.out.println("File downloaded to: " + local);
            }
            fs.close();
        }

        // 3. Print the contents of the given file
        private void cat(String folder) throws IOException, URISyntaxException {
            FileSystem fs = FileSystem.get(new URI(HDFS), new Configuration());
            Path path = new Path(folder);
            FSDataInputStream fsdis = null;
            System.out.println("cat: " + folder);
            try {
                fsdis = fs.open(path);
                // Copy in 4096-byte chunks; false leaves System.out open afterwards.
                IOUtils.copyBytes(fsdis, System.out, 4096, false);
            } finally {
                IOUtils.closeStream(fsdis);
                fs.close();
            }
        }

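        // 4. Show the permissions, size, modification time, and path of a file in HDFS
        private void getFilestatus(String folder) throws IOException, URISyntaxException {
            FileSystem fs = FileSystem.get(new URI(HDFS), new Configuration());
            Path path = new Path(folder);
            FileStatus fileStatus = fs.getFileStatus(path);
            System.out.println("Path: " + fileStatus.getPath());
            System.out.println("Permissions: " + fileStatus.getPermission());
            // getLen() is the file length in bytes; getBlockSize() would be the HDFS block size.
            System.out.println("Size: " + fileStatus.getLen());
            // HDFS records a modification time (milliseconds since the epoch), not a creation time.
            System.out.println("Modified: " + fileStatus.getModificationTime());
            fs.close();
        }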

        // 5. Recursively list everything under a directory
        private void lsr(String folder) throws IOException, URISyntaxException {
            // Connect to HDFS
            FileSystem fs = FileSystem.get(new URI(HDFS), new Configuration());
            lsr(fs, new Path(folder));
            fs.close();
        }

        // Helper: prints each entry of path and descends into subdirectories.
        private void lsr(FileSystem fs, Path path) throws IOException {
            for (FileStatus f : fs.listStatus(path)) {
                System.out.printf("name: %s | folder: %s | size: %d\n", f.getPath(), f.isDirectory(), f.getLen());
                if (f.isDirectory()) {
                    lsr(fs, f.getPath());
                }
            }
        }

        // 6. Create or delete a file
        private void crermfile(String folder) throws IOException, URISyntaxException {
            FileSystem fs = FileSystem.get(new URI(HDFS), new Configuration());
            Path path = new Path(folder);
            System.out.println("create or delete file?");
            Scanner sc = new Scanner(System.in);
            String s = sc.next();
            if (s.equals("create")) {
                if (fs.exists(path)) {
                    System.out.println("File already exists: " + folder);
                } else {
                    // create() returns an output stream; closing it finishes the empty file.
                    fs.create(path).close();
                    System.out.println("File created: " + folder);
                }
            } else {
                // exists() checks the path now; deleteOnExit() would only mark it
                // for deletion when the FileSystem is closed.
                if (fs.exists(path)) {
                    fs.delete(path, false);
                    System.out.println("File deleted: " + folder);
                } else {
                    System.out.println("No file to delete at this path: " + folder);
                }
            }
            fs.close();
        }

        // 7. Create or delete a directory
        private void crermdir(String folder) throws IOException, URISyntaxException {
            FileSystem fs = FileSystem.get(new URI(HDFS), new Configuration());
            Path path = new Path(folder);
            System.out.println("create or delete dir?");
            Scanner sc = new Scanner(System.in);
            String s = sc.next();
            if (s.equals("create")) {
                if (fs.exists(path)) {
                    System.out.println("Directory already exists: " + folder);
                } else {
                    fs.mkdirs(path);
                    System.out.println("Directory created: " + folder);
                }
            } else {
                if (fs.exists(path)) {
                    boolean proceed = true;
                    // Ask for confirmation only when the directory still has entries.
                    if (fs.listStatus(path).length > 0) {
                        System.out.println("Directory is not empty, continue deleting? yes/no");
                        proceed = sc.next().equals("yes");
                    }
                    if (proceed) {
                        // recursive = true also removes the directory's contents.
                        fs.delete(path, true);
                        System.out.println("Directory deleted: " + folder);
                    } else {
                        System.out.println("Directory not deleted: " + folder);
                    }
                } else {
                    System.out.println("No directory to delete at this path: " + folder);
                }
            }
            fs.close();
        }

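        // 8. Append the contents of a local file to a file in HDFS
        private void append(String folder, String local) throws IOException, URISyntaxException {
            FileSystem fs = FileSystem.get(new URI(HDFS), new Configuration());
            Path path = new Path(folder);
            System.out.println("Append to the start or the end of the file: start or end?");
            Scanner sc = new Scanner(System.in);
            String s = sc.next();
            if (s.equals("start")) {
                // HDFS append only adds data at the end of a file; writing at the
                // start would mean rewriting the whole file, which is not done here.
                System.out.println("HDFS cannot append at the start of a file: " + folder);
            } else {
                // Read the local file (opening it for writing would truncate it)
                // and stream its bytes into the HDFS append stream.
                try (java.io.InputStream in = new BufferedInputStream(new java.io.FileInputStream(local));
                     java.io.OutputStream out = fs.append(path)) {
                    IOUtils.copyBytes(in, out, 4096, false);
                }
                System.out.println("Appended to the end of file: " + folder);
            }
            fs.close();
        }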

        // 9. Delete a file in HDFS
        private void rm(String folder) throws IOException, URISyntaxException {
            FileSystem fs = FileSystem.get(new URI(HDFS), new Configuration());
            Path path = new Path(folder);
            // Check that the file exists, then delete it without recursion.
            if (fs.exists(path)) {
                fs.delete(path, false);
                System.out.println("File deleted: " + folder);
            } else {
                System.out.println("The file to delete does not exist!");
            }
            fs.close();
        }

        // 10. Move a local file (source) into HDFS (destination)
        private void mv(String folder, String local) throws IOException, URISyntaxException {
            FileSystem fs = FileSystem.get(new URI(HDFS), new Configuration());
            Path path = new Path(folder);
            Path path1 = new Path(local);
            // moveFromLocalFile(src, dst) removes the local copy after the upload.
            fs.moveFromLocalFile(path1, path);
            System.out.println("File moved from " + local + " to " + folder);
            fs.close();
        }

    }
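The methods above build full HDFS locations by concatenating the HDFS constant with a path. As a hedged illustration of what such a string contains, the sketch below uses only java.net.URI from the JDK to combine a base address with a path and to read back the scheme, host, port, and path. HdfsUriSketch and resolve are hypothetical names and no Hadoop classes are involved.

```java
import java.net.URI;

// Hypothetical helper: combines a NameNode address with an absolute path.
final class HdfsUriSketch {

    /** Resolves an absolute path such as "/user/upload" against the base address. */
    static URI resolve(String base, String path) {
        return URI.create(base).resolve(path);
    }

    public static void main(String[] args) {
        URI uri = resolve("hdfs://localhost:9000", "/user/upload");
        System.out.println("full:   " + uri);
        System.out.println("scheme: " + uri.getScheme()); // which file system type
        System.out.println("host:   " + uri.getHost());   // where the NameNode runs
        System.out.println("port:   " + uri.getPort());   // matches fs.defaultFS
        System.out.println("path:   " + uri.getPath());   // location inside HDFS
    }
}
```

The host and port printed here should match the fs.defaultFS value in core-site.xml; a mismatch is one way to end up with the connection refused error mentioned earlier.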

MyFSDataInputStream.java

    import java.io.BufferedReader;
    import java.io.IOException;
    import java.io.InputStream;
    import java.io.InputStreamReader;
    import java.net.URI;
    import java.net.URISyntaxException;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;
    import org.apache.hadoop.io.IOUtils;

    public class MyFSDataInputStream extends org.apache.hadoop.fs.FSDataInputStream {

        private static final String HDFS = "hdfs://localhost:9000";
        private Configuration conf = new Configuration();

        public MyFSDataInputStream(InputStream in) {
            super(in);
        }

        public static void main(String[] args) throws IOException, URISyntaxException {
            String folder = HDFS + "/user/lln/test/fs_Readme.pdf";
            FileSystem fs = FileSystem.get(new URI(HDFS), new Configuration());
            Path path = new Path(folder);
            // try-with-resources closes the reader and the HDFS stream underneath it.
            try (BufferedReader br = new BufferedReader(new InputStreamReader(fs.open(path)))) {
                String line = br.readLine();
                // Print the file line by line until the end is reached.
                while (line != null) {
                    System.out.println(line);
                    line = br.readLine();
                }
            } finally {
                fs.close();
            }
        }

    }
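The reading loop in MyFSDataInputStream does not depend on HDFS: it works on any InputStream. The sketch below isolates that pattern so it can be tried without a running cluster, here with an in-memory stream. LineReaderSketch and readLines are made-up names, and UTF-8 is an assumption about the file's encoding.

```java
import java.io.BufferedReader;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: reads all lines from any InputStream, then closes it.
final class LineReaderSketch {

    static List<String> readLines(InputStream in) {
        List<String> lines = new ArrayList<>();
        // Assumption: the bytes are UTF-8 text.
        try (BufferedReader br = new BufferedReader(
                new InputStreamReader(in, StandardCharsets.UTF_8))) {
            String line;
            while ((line = br.readLine()) != null) {
                lines.add(line);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return lines;
    }

    public static void main(String[] args) {
        InputStream in = new ByteArrayInputStream(
                "first line\nsecond line\n".getBytes(StandardCharsets.UTF_8));
        readLines(in).forEach(System.out::println);
    }
}
```

With Hadoop on the classpath, the stream returned by fs.open(path) could be passed to readLines in place of the in-memory stream. Line-based reading suits text files; for binary files such as a PDF, a byte copy is the better fit.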

CatFileToTerminal.java

    import java.io.IOException;
    import java.io.InputStream;
    import java.net.URI;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IOUtils;

    public class CatFileToTerminal {

        private static final String HDFS = "hdfs://localhost:9000";

        public static void main(String[] args) throws IOException {
            String uri = HDFS + "/data/wordcount/test";
            Configuration conf = new Configuration();
            FileSystem fs = FileSystem.get(URI.create(uri), conf);
            InputStream in = null;
            try {
                in = fs.open(new Path(uri));
                // Copy the file to the terminal in 4096-byte chunks; false keeps System.out open.
                IOUtils.copyBytes(in, System.out, 4096, false);
            } finally {
                IOUtils.closeStream(in);
                fs.close();
            }
        }

    }
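CatFileToTerminal hands the actual copying to IOUtils.copyBytes with a buffer size of 4096. The sketch below shows the kind of buffered loop such a call performs, written with plain JDK streams. It is an illustration of the idea, not Hadoop's source code; CopyLoopSketch and copy are invented names.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: copies a stream chunk by chunk and counts the bytes.
final class CopyLoopSketch {

    static long copy(InputStream in, OutputStream out, int bufferSize) {
        byte[] buffer = new byte[bufferSize];
        long total = 0;
        try {
            int n;
            // read() returns the number of bytes placed in the buffer, or -1 at end of stream.
            while ((n = in.read(buffer)) != -1) {
                out.write(buffer, 0, n);
                total += n;
            }
            out.flush();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return total;
    }

    public static void main(String[] args) {
        byte[] data = "hello hdfs\n".getBytes(StandardCharsets.UTF_8);
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        long copied = copy(new ByteArrayInputStream(data), sink, 4096);
        System.out.print(new String(sink.toByteArray(), StandardCharsets.UTF_8));
        System.out.println("bytes copied: " + copied);
    }
}
```

The loop never holds more than one buffer of data in memory, which is why the same approach works for files far larger than the buffer.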