hadoop 简单的MapReduce源码分析(源码&流程&word count日志)

目录

- Job提交

- mapTask的输入:splits

- MapReduce Job执行的架构(1.0简单描述)

- MapTask run

- MapTask的`run`方法和流程

- split怎么读取?

- TextInputFormat & LineRecordReader

- 原始 k,v 进行 map 处理

- map结果怎么输出?

- output(org.apache.hadoop.mapreduce.RecordWriter)构造

- 分区(partitioner & 默认的HashPartitioner)

- context.write(collector.collect)

- collect(kv缓冲区) & partition(分区)处理图

- SpillThread(将缓冲区中的数据spill到磁盘)

- flush & mergeParts()

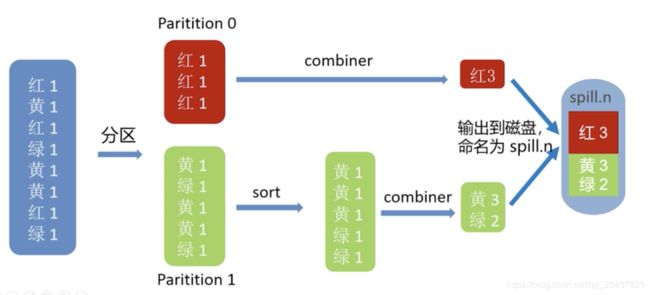

- spill(combiner)到输出文件图

- 回顾MapTask的流程

- 回顾 为什么要排序和分区

- partitions 归并排序

- 在partitions排序的基础上:key再排序

- 为什么有 combiner

- 为什么有 compression

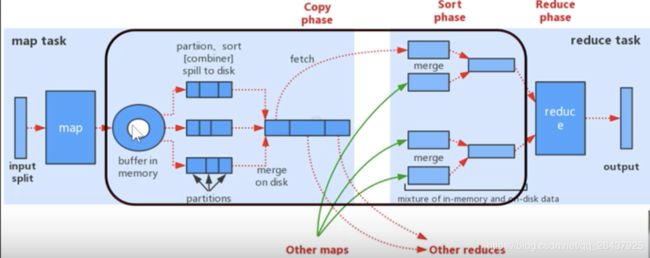

- mapreduce处理流程图

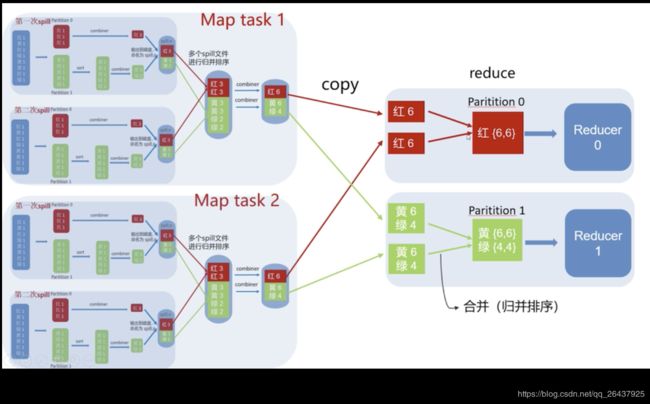

- map 到 shuffle 到 reduce 实例流程图

- ReduceTask

- reduceTask流程

- ReduceTask run()

- reduce的三个阶段

- reduce的输入来源?(shuffle拉取)

- Shuffle run方法

- rIter 迭代器模式的使用?

- runNewReducer

- 如何执行reduce方法?

- shuffle

- mapreduce log 学习

- 输入 & 输出

- 不实用combiner & 使用combiner的log 区别

- 自定义key排序:`job.setSortComparatorClass`

- word count java 程序

上一篇简单的博文写了个最简单的mapreduce程序(https://blog.csdn.net/qq_26437925/article/details/78458471)

,本文则主要是对源码跳转分析Job,MapReduce的过程,对源码有一定的认识和深入

Job提交

/**

* Internal method for submitting jobs to the system.

*

* The job submission process involves:

*

* -

* Checking the input and output specifications of the job.

*

* -

* Computing the {@link InputSplit}s for the job.

*

* -

* Setup the requisite accounting information for the

* {@link DistributedCache} of the job, if necessary.

*

* -

* Copying the job's jar and configuration to the map-reduce system

* directory on the distributed file-system.

*

* -

* Submitting the job to the

JobTracker and optionally

* monitoring it's status.

*

*

* @param job the configuration to submit

* @param cluster the handle to the Cluster

* @throws ClassNotFoundException

* @throws InterruptedException

* @throws IOException

*/

JobStatus submitJobInternal(Job job, Cluster cluster)

// 把任务相关的文件,配置,jars上传

copyAndConfigureFiles(job, submitJobDir);

// 获取配置文件job.xml的路径

Path submitJobFile = JobSubmissionFiles.getJobConfPath(submitJobDir);

// 输入文件的splits,配置信息写入job信息中

// Create the splits for the job

LOG.debug("Creating splits at " + jtFs.makeQualified(submitJobDir));

int maps = writeSplits(job, submitJobDir);

conf.setInt(MRJobConfig.NUM_MAPS, maps);

LOG.info("number of splits:" + maps);

int maxMaps = conf.getInt(MRJobConfig.JOB_MAX_MAP,

MRJobConfig.DEFAULT_JOB_MAX_MAP);

if (maxMaps >= 0 && maxMaps < maps) {

throw new IllegalArgumentException("The number of map tasks " + maps +

" exceeded limit " + maxMaps);

}

// 设置job使用的资源队列

// write "queue admins of the queue to which job is being submitted"

// to job file.

String queue = conf.get(MRJobConfig.QUEUE_NAME,

JobConf.DEFAULT_QUEUE_NAME);

AccessControlList acl = submitClient.getQueueAdmins(queue);

conf.set(toFullPropertyName(queue,

QueueACL.ADMINISTER_JOBS.getAclName()), acl.getAclString());

// removing jobtoken referrals before copying the jobconf to HDFS

// as the tasks don't need this setting, actually they may break

// because of it if present as the referral will point to a

// different job.

TokenCache.cleanUpTokenReferral(conf);

if (conf.getBoolean(

MRJobConfig.JOB_TOKEN_TRACKING_IDS_ENABLED,

MRJobConfig.DEFAULT_JOB_TOKEN_TRACKING_IDS_ENABLED)) {

// Add HDFS tracking ids

ArrayList<String> trackingIds = new ArrayList<String>();

for (Token<? extends TokenIdentifier> t :

job.getCredentials().getAllTokens()) {

trackingIds.add(t.decodeIdentifier().getTrackingId());

}

conf.setStrings(MRJobConfig.JOB_TOKEN_TRACKING_IDS,

trackingIds.toArray(new String[trackingIds.size()]));

}

// Set reservation info if it exists

ReservationId reservationId = job.getReservationId();

if (reservationId != null) {

conf.set(MRJobConfig.RESERVATION_ID, reservationId.toString());

}

// Write job file to submit dir

writeConf(conf, submitJobFile);

// submitClient.submitJob 提交Job

//

// Now, actually submit the job (using the submit name)

//

printTokens(jobId, job.getCredentials());

status = submitClient.submitJob(

jobId, submitJobDir.toString(), job.getCredentials());

if (status != null) {

return status;

} else {

throw new IOException("Could not launch job");

}

} finally {

if (status == null) {

LOG.info("Cleaning up the staging area " + submitJobDir);

if (jtFs != null && submitJobDir != null)

jtFs.delete(submitJobDir, true);

}

}

- 输入文件 writeNewSplits

@SuppressWarnings("unchecked")

private <T extends InputSplit>

int writeNewSplits(JobContext job, Path jobSubmitDir) throws IOException,

InterruptedException, ClassNotFoundException {

Configuration conf = job.getConfiguration();

// 获取输入文件格式,默认:TextInputFormat

InputFormat<?, ?> input =

ReflectionUtils.newInstance(job.getInputFormatClass(), conf);

// 进行split操作

List<InputSplit> splits = input.getSplits(job);

T[] array = (T[]) splits.toArray(new InputSplit[splits.size()]);

// 排序,让最大的split优先处理

// sort the splits into order based on size, so that the biggest

// go first

Arrays.sort(array, new SplitComparator());

// 把split文件信息写入

JobSplitWriter.createSplitFiles(jobSubmitDir, conf,

jobSubmitDir.getFileSystem(conf), array);

return array.length;

}

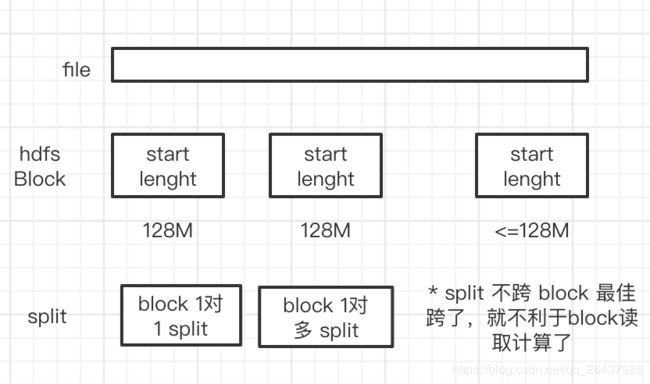

mapTask的输入:splits

可以通过配置BlockSize、split min size、split max size 等参数,达到控制mapper的数量(注意到:即使在程序里对 conf 显式地设置了 mapred.map.tasks 或 mapreduce.job.maps,程序不一定能运行期望数量的 mapper)

- input.getSplits(job); (org.apache.hadoop.mapreduce.lib.input

FileInputFormat)

/**

* Generate the list of files and make them into FileSplits.

* @param job the job context

* @throws IOException

*/

public List<InputSplit> getSplits(JobContext job) throws IOException {

StopWatch sw = new StopWatch().start();

// split的最大最小值,可配置

long minSize = Math.max(getFormatMinSplitSize(), getMinSplitSize(job));

long maxSize = getMaxSplitSize(job);

// 获取job信息,并判断产生split

// generate splits

List<InputSplit> splits = new ArrayList<InputSplit>();

List<FileStatus> files = listStatus(job);

boolean ignoreDirs = !getInputDirRecursive(job)

&& job.getConfiguration().getBoolean(INPUT_DIR_NONRECURSIVE_IGNORE_SUBDIRS, false);

for (FileStatus file: files) {

if (ignoreDirs && file.isDirectory()) {

continue;

}

Path path = file.getPath();

long length = file.getLen();

if (length != 0) {

// 获取输入文件的所有 block 信息

BlockLocation[] blkLocations;

if (file instanceof LocatedFileStatus) {

blkLocations = ((LocatedFileStatus) file).getBlockLocations();

} else {

FileSystem fs = path.getFileSystem(job.getConfiguration());

blkLocations = fs.getFileBlockLocations(file, 0, length);

}

if (isSplitable(job, path)) {

// 计算 splitSize: Math.max(minSize, Math.min(maxSize, blockSize));

long blockSize = file.getBlockSize();

long splitSize = computeSplitSize(blockSize, minSize, maxSize);

long bytesRemaining = length;

while (((double) bytesRemaining)/splitSize > SPLIT_SLOP) {

int blkIndex = getBlockIndex(blkLocations, length-bytesRemaining);

splits.add(makeSplit(path, length-bytesRemaining, splitSize,

blkLocations[blkIndex].getHosts(),

blkLocations[blkIndex].getCachedHosts()));

bytesRemaining -= splitSize;

}

if (bytesRemaining != 0) {

int blkIndex = getBlockIndex(blkLocations, length-bytesRemaining);

splits.add(makeSplit(path, length-bytesRemaining, bytesRemaining,

blkLocations[blkIndex].getHosts(),

blkLocations[blkIndex].getCachedHosts()));

}

} else { // not splitable

if (LOG.isDebugEnabled()) {

// Log only if the file is big enough to be splitted

if (length > Math.min(file.getBlockSize(), minSize)) {

LOG.debug("File is not splittable so no parallelization "

+ "is possible: " + file.getPath());

}

}

splits.add(makeSplit(path, 0, length, blkLocations[0].getHosts(),

blkLocations[0].getCachedHosts()));

}

} else {

//Create empty hosts array for zero length files

splits.add(makeSplit(path, 0, length, new String[0]));

}

}

// Save the number of input files for metrics/loadgen

job.getConfiguration().setLong(NUM_INPUT_FILES, files.size());

sw.stop();

if (LOG.isDebugEnabled()) {

LOG.debug("Total # of splits generated by getSplits: " + splits.size()

+ ", TimeTaken: " + sw.now(TimeUnit.MILLISECONDS));

}

return splits;

}

// 输入文件的splits,配置信息写入job信息中

// Create the splits for the job

LOG.debug("Creating splits at " + jtFs.makeQualified(submitJobDir));

int maps = writeSplits(job, submitJobDir);

conf.setInt(MRJobConfig.NUM_MAPS, maps);

LOG.info("number of splits:" + maps);

int maxMaps = conf.getInt(MRJobConfig.JOB_MAX_MAP,

MRJobConfig.DEFAULT_JOB_MAX_MAP);

if (maxMaps >= 0 && maxMaps < maps) {

throw new IllegalArgumentException("The number of map tasks " + maps +

" exceeded limit " + maxMaps);

}

split 的多少决定了 Map Task 的数目,因为每个 split 会交由一个 Map Task 处理

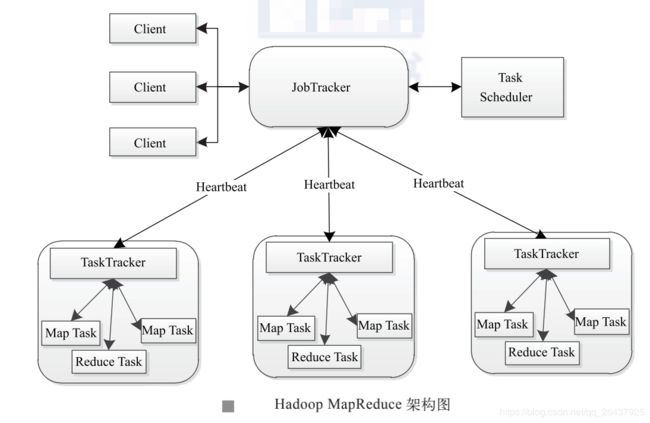

MapReduce Job执行的架构(1.0简单描述)

- client

用户编写的 MapReduce 程序通过 Client 提交到 JobTracker 端 ;同时,用户可通过 Client 提 供的一些接口查看作业运行状态。在 Hadoop 内部用“作业”(Job)表示 MapReduce 程序。一个 MapReduce 程序可对应若干个作业,而每个作业会被分解成若干个 Map/Reduce 任务(Task)。

- JobTracker

JobTracker 主要负责资源监控和作业调度。JobTracker 监控所有 TaskTracker 与作业的 健康状况,一旦发现失败情况后,其会将相应的任务转移到其他节点;同时,JobTracker 会 跟踪任务的执行进度、资源使用量等信息,并将这些信息告诉任务调度器,而调度器会在 资源出现空闲时,选择合适的任务使用这些资源。在 Hadoop 中,任务调度器是一个可插拔 的模块,用户可以根据自己的需要设计相应的调度器。

- TaskTracker

TaskTracker 会周期性地通过 Heartbeat 将本节点上资源的使用情况和任务的运行进度汇报 给 JobTracker,同时接收 JobTracker 发送过来的命令并执行相应的操作(如启动新任务、杀死 任务等)。TaskTracker 使用“slot”等量划分本节点上的资源量。“slot”代表计算资源(CPU、 内存等)。一个 Task 获取到一个 slot 后才有机会运行,而 Hadoop 调度器的作用就是将各个 TaskTracker 上的空闲 slot 分配给 Task 使用。slot 分为 Map slot 和 Reduce slot 两种,分别供 Map Task 和 Reduce Task 使用。TaskTracker 通过 slot 数目(可配置参数)限定 Task 的并发度。

- Task

Task 分为 Map Task 和 Reduce Task 两种,均由 TaskTracker 启动。从上一小节中我们知道, HDFS 以固定大小的 block 为基本单位存储数据,而对于 MapReduce 而言,其处理单位是 split。

MapTask run

MapTask的run方法和流程

@Override

public void run(final JobConf job, final TaskUmbilicalProtocol umbilical)

throws IOException, ClassNotFoundException, InterruptedException {

this.umbilical = umbilical;

if (isMapTask()) {

// If there are no reducers then there won't be any sort. Hence the map

// phase will govern the entire attempt's progress.

if (conf.getNumReduceTasks() == 0) {

mapPhase = getProgress().addPhase("map", 1.0f);

} else {

// If there are reducers then the entire attempt's progress will be

// split between the map phase (67%) and the sort phase (33%).

mapPhase = getProgress().addPhase("map", 0.667f);

sortPhase = getProgress().addPhase("sort", 0.333f);

}

}

TaskReporter reporter = startReporter(umbilical);

boolean useNewApi = job.getUseNewMapper();

initialize(job, getJobID(), reporter, useNewApi);

// check if it is a cleanupJobTask

if (jobCleanup) {

runJobCleanupTask(umbilical, reporter);

return;

}

if (jobSetup) {

runJobSetupTask(umbilical, reporter);

return;

}

if (taskCleanup) {

runTaskCleanupTask(umbilical, reporter);

return;

}

if (useNewApi) {

runNewMapper(job, splitMetaInfo, umbilical, reporter);

} else {

runOldMapper(job, splitMetaInfo, umbilical, reporter);

}

done(umbilical, reporter);

}

- MapTask先判断是否有Reduce任务;没有reduce, 则只有map阶段,Map阶段结束则整个job可以结束

- 有reduce任务,则map阶段会有个排序:当Map任务完成的时候设置当前进度为

66.7%,Sort完成的时候设置进度为33.3%

(补充:排序的好处:方便后续的reduce读取减少IO次数)

@SuppressWarnings("unchecked")

private <INKEY,INVALUE,OUTKEY,OUTVALUE>

void runNewMapper(final JobConf job,

final TaskSplitIndex splitIndex,

final TaskUmbilicalProtocol umbilical,

TaskReporter reporter

) throws IOException, ClassNotFoundException,

InterruptedException {

// make a task context so we can get the classes

org.apache.hadoop.mapreduce.TaskAttemptContext taskContext =

new org.apache.hadoop.mapreduce.task.TaskAttemptContextImpl(job,

getTaskID(),

reporter);

// 构造 mapper, 用客户端自己手写的Mapper函数反射构造

// 每个 mapper 处理 一个 split, job是构造了整个split数组信息的

// make a mapper

org.apache.hadoop.mapreduce.Mapper<INKEY,INVALUE,OUTKEY,OUTVALUE> mapper =

(org.apache.hadoop.mapreduce.Mapper<INKEY,INVALUE,OUTKEY,OUTVALUE>)

ReflectionUtils.newInstance(taskContext.getMapperClass(), job);

// make the input format

org.apache.hadoop.mapreduce.InputFormat<INKEY,INVALUE> inputFormat =

(org.apache.hadoop.mapreduce.InputFormat<INKEY,INVALUE>)

ReflectionUtils.newInstance(taskContext.getInputFormatClass(), job);

// rebuild the input split

org.apache.hadoop.mapreduce.InputSplit split = null;

split = getSplitDetails(new Path(splitIndex.getSplitLocation()),

splitIndex.getStartOffset());

LOG.info("Processing split: " + split);

// 从split获取信息,读取真正的block得到原始的一条一条的记录

org.apache.hadoop.mapreduce.RecordReader<INKEY,INVALUE> input =

new NewTrackingRecordReader<INKEY,INVALUE>

(split, inputFormat, reporter, taskContext);

job.setBoolean(JobContext.SKIP_RECORDS, isSkipping());

org.apache.hadoop.mapreduce.RecordWriter output = null;

// get an output object

if (job.getNumReduceTasks() == 0) {

output =

new NewDirectOutputCollector(taskContext, job, umbilical, reporter);

} else {

output = new NewOutputCollector(taskContext, job, umbilical, reporter);

}

org.apache.hadoop.mapreduce.MapContext<INKEY, INVALUE, OUTKEY, OUTVALUE>

mapContext =

new MapContextImpl<INKEY, INVALUE, OUTKEY, OUTVALUE>(job, getTaskID(),

input, output,

committer,

reporter, split);

org.apache.hadoop.mapreduce.Mapper<INKEY,INVALUE,OUTKEY,OUTVALUE>.Context

mapperContext =

new WrappedMapper<INKEY, INVALUE, OUTKEY, OUTVALUE>().getMapContext(

mapContext);

try {

// 输入(split)初始化

input.initialize(split, mapperContext);

// map操作:while循环不断的读取原始数据进行map处理

mapper.run(mapperContext);

// map阶段完成

mapPhase.complete();

// 排序阶段

setPhase(TaskStatus.Phase.SORT);

statusUpdate(umbilical);

// 输入关闭

input.close();

input = null;

// 输出,刷回磁盘

output.close(mapperContext);

output = null;

} finally {

closeQuietly(input);

closeQuietly(output, mapperContext);

}

}

map执行思路: 读取splits;内存中完成计算和排序;然后输入关闭,输出要刷回磁盘,然后关闭

split怎么读取?

需要使用记录读取器RecordReader

// 从split获取信息,读取真正的block得到原始的一条一条的记录

org.apache.hadoop.mapreduce.RecordReader<INKEY,INVALUE> input =

new NewTrackingRecordReader<INKEY,INVALUE>

(split, inputFormat, reporter, taskContext);

TextInputFormat & LineRecordReader

RecordReader的创建由TextInputFormat来创建this.real = inputFormat.createRecordReader(split, taskContext);

input.initialize(split, mapperContext);初始化就在LineRecordReader的initialize方法

/** An {@link InputFormat} for plain text files. Files are broken into lines.

* Either linefeed or carriage-return are used to signal end of line. Keys are

* the position in the file, and values are the line of text.. */

@InterfaceAudience.Public

@InterfaceStability.Stable

public class TextInputFormat extends FileInputFormat<LongWritable, Text> {

@Override

public RecordReader<LongWritable, Text>

createRecordReader(InputSplit split,

TaskAttemptContext context) {

String delimiter = context.getConfiguration().get(

"textinputformat.record.delimiter");

byte[] recordDelimiterBytes = null;

if (null != delimiter)

recordDelimiterBytes = delimiter.getBytes(Charsets.UTF_8);

return new LineRecordReader(recordDelimiterBytes);

}

@Override

protected boolean isSplitable(JobContext context, Path file) {

final CompressionCodec codec =

new CompressionCodecFactory(context.getConfiguration()).getCodec(file);

if (null == codec) {

return true;

}

return codec instanceof SplittableCompressionCodec;

}

}

- LineRecordReader

public void initialize(InputSplit genericSplit,

TaskAttemptContext context) throws IOException {

FileSplit split = (FileSplit) genericSplit;

Configuration job = context.getConfiguration();

this.maxLineLength = job.getInt(MAX_LINE_LENGTH, Integer.MAX_VALUE);

start = split.getStart();

end = start + split.getLength();

final Path file = split.getPath();

// open the file and seek to the start of the split

final FileSystem fs = file.getFileSystem(job);

fileIn = fs.open(file);

CompressionCodec codec = new CompressionCodecFactory(job).getCodec(file);

if (null!=codec) {

isCompressedInput = true;

decompressor = CodecPool.getDecompressor(codec);

if (codec instanceof SplittableCompressionCodec) {

final SplitCompressionInputStream cIn =

((SplittableCompressionCodec)codec).createInputStream(

fileIn, decompressor, start, end,

SplittableCompressionCodec.READ_MODE.BYBLOCK);

in = new CompressedSplitLineReader(cIn, job,

this.recordDelimiterBytes);

start = cIn.getAdjustedStart();

end = cIn.getAdjustedEnd();

filePosition = cIn;

} else {

if (start != 0) {

// So we have a split that is only part of a file stored using

// a Compression codec that cannot be split.

throw new IOException("Cannot seek in " +

codec.getClass().getSimpleName() + " compressed stream");

}

in = new SplitLineReader(codec.createInputStream(fileIn,

decompressor), job, this.recordDelimiterBytes);

filePosition = fileIn;

}

} else {

fileIn.seek(start);

in = new UncompressedSplitLineReader(

fileIn, job, this.recordDelimiterBytes, split.getLength());

filePosition = fileIn;

}

// If this is not the first split, we always throw away first record

// because we always (except the last split) read one extra line in

// next() method.

if (start != 0) {

start += in.readLine(new Text(), 0, maxBytesToConsume(start));

}

this.pos = start;

}

对 hdfs 文件进行读取是构造一个 fs 对象并打开:

final FileSystem fs = file.getFileSystem(job); fileIn = fs.open(file);

一个map只处理自己负责的split,split的几个属性

start = split.getStart();

end = start + split.getLength();

final Path file = split.getPath();

原始 k,v 进行 map 处理

源码是一句:mapper.run(mapperContext); , 所以mapperContext是什么?

- mapContext 包含了上文的input

org.apache.hadoop.mapreduce.MapContext<INKEY, INVALUE, OUTKEY, OUTVALUE>

mapContext =

new MapContextImpl<INKEY, INVALUE, OUTKEY, OUTVALUE>(job, getTaskID(),

input, output,

committer,

reporter, split);

public class Mapper

/**

* Expert users can override this method for more complete control over the

* execution of the Mapper.

* @param context

* @throws IOException

*/

public void run(Context context) throws IOException, InterruptedException {

setup(context);

try {

while (context.nextKeyValue()) {

map(context.getCurrentKey(), context.getCurrentValue(), context);

}

} finally {

cleanup(context);

}

}

- 使用迭代器模式,不断的判断

nextKeyValue然后处理

LineRecordReader的nextKeyValue方法

public boolean nextKeyValue() throws IOException {

if (key == null) {

key = new LongWritable();

}

key.set(pos);

if (value == null) {

value = new Text();

}

int newSize = 0;

// We always read one extra line, which lies outside the upper

// split limit i.e. (end - 1)

while (getFilePosition() <= end || in.needAdditionalRecordAfterSplit()) {

if (pos == 0) {

newSize = skipUtfByteOrderMark();

} else {

newSize = in.readLine(value, maxLineLength, maxBytesToConsume(pos));

pos += newSize;

}

if ((newSize == 0) || (newSize < maxLineLength)) {

break;

}

// line too long. try again

LOG.info("Skipped line of size " + newSize + " at pos " +

(pos - newSize));

}

if (newSize == 0) {

key = null;

value = null;

return false;

} else {

return true;

}

}

map结果怎么输出?

回到class MapTask, 会构造output, 然后mapperContext引入了该output,最后会写到磁盘并关闭;对输出的写入磁盘操作,程序员是在自定义的map类中写了context.write(k,v);这一句的

// get an output object

if (job.getNumReduceTasks() == 0) {

output =

new NewDirectOutputCollector(taskContext, job, umbilical, reporter);

} else {

output = new NewOutputCollector(taskContext, job, umbilical, reporter);

}

output.close(mapperContext);

output = null;

output(org.apache.hadoop.mapreduce.RecordWriter)构造

由reduceTask,则output = new NewOutputCollector(taskContext, job, umbilical, reporter);

@SuppressWarnings("unchecked")

NewOutputCollector(org.apache.hadoop.mapreduce.JobContext jobContext,

JobConf job,

TaskUmbilicalProtocol umbilical,

TaskReporter reporter

) throws IOException, ClassNotFoundException {

collector = createSortingCollector(job, reporter);

// reduce任务的数量 就是 partitions

partitions = jobContext.getNumReduceTasks();

if (partitions > 1) { // 多个reduce任务

partitioner = (org.apache.hadoop.mapreduce.Partitioner<K,V>)

ReflectionUtils.newInstance(jobContext.getPartitionerClass(), job);

} else {

partitioner = new org.apache.hadoop.mapreduce.Partitioner<K,V>() {

@Override

public int getPartition(K key, V value, int numPartitions) {

return partitions - 1;

}

};

}

}

- 引入collector & partitioner 这两个对象

- 分区数量就是reduceTask的数量

分区(partitioner & 默认的HashPartitioner)

/**

* Get the {@link Partitioner} class for the job.

*

* @return the {@link Partitioner} class for the job.

*/

@SuppressWarnings("unchecked")

public Class<? extends Partitioner<?,?>> getPartitionerClass()

throws ClassNotFoundException {

return (Class<? extends Partitioner<?,?>>)

conf.getClass(PARTITIONER_CLASS_ATTR, HashPartitioner.class);

}

/**

* Partitions the key space.

*

* Partitioner controls the partitioning of the keys of the

* intermediate map-outputs. The key (or a subset of the key) is used to derive

* the partition, typically by a hash function. The total number of partitions

* is the same as the number of reduce tasks for the job. Hence this controls

* which of the m reduce tasks the intermediate key (and hence the

* record) is sent for reduction.

*

* Note: A Partitioner is created only when there are multiple

* reducers.

*

* Note: If you require your Partitioner class to obtain the Job's

* configuration object, implement the {@link Configurable} interface.

*

* @see Reducer

*/

@InterfaceAudience.Public

@InterfaceStability.Stable

public abstract class Partitioner<KEY, VALUE> {

/** Partition keys by their {@link Object#hashCode()}. */

@InterfaceAudience.Public

@InterfaceStability.Stable

public class HashPartitioner<K, V> extends Partitioner<K, V> {

/** Use {@link Object#hashCode()} to partition. */

public int getPartition(K key, V value,

int numReduceTasks) {

return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;

}

}

context.write(collector.collect)

- k, v, p 三个维度写入到

buffer memory(环形buffer缓冲区)

@Override

public void write(K key, V value) throws IOException, InterruptedException {

collector.collect(key, value,

partitioner.getPartition(key, value, partitions));

}

collect()方法会先序列化对象的k,v 然后放到buffer缓冲区中

- 缓冲区

MapOutputBuffer

@SuppressWarnings("unchecked")

private <KEY, VALUE> MapOutputCollector<KEY, VALUE>

createSortingCollector(JobConf job, TaskReporter reporter)

throws IOException, ClassNotFoundException {

MapOutputCollector.Context context =

new MapOutputCollector.Context(this, job, reporter);

Class<?>[] collectorClasses = job.getClasses(

JobContext.MAP_OUTPUT_COLLECTOR_CLASS_ATTR, MapOutputBuffer.class);

int remainingCollectors = collectorClasses.length;

Exception lastException = null;

for (Class clazz : collectorClasses) {

try {

if (!MapOutputCollector.class.isAssignableFrom(clazz)) {

throw new IOException("Invalid output collector class: " + clazz.getName() +

" (does not implement MapOutputCollector)");

}

Class<? extends MapOutputCollector> subclazz =

clazz.asSubclass(MapOutputCollector.class);

LOG.debug("Trying map output collector class: " + subclazz.getName());

MapOutputCollector<KEY, VALUE> collector =

ReflectionUtils.newInstance(subclazz, job);

collector.init(context);

LOG.info("Map output collector class = " + collector.getClass().getName());

return collector;

} catch (Exception e) {

String msg = "Unable to initialize MapOutputCollector " + clazz.getName();

if (--remainingCollectors > 0) {

msg += " (" + remainingCollectors + " more collector(s) to try)";

}

lastException = e;

LOG.warn(msg, e);

}

}

- init方法

@SuppressWarnings("unchecked")

public void init(MapOutputCollector.Context context

) throws IOException, ClassNotFoundException {

job = context.getJobConf();

reporter = context.getReporter();

mapTask = context.getMapTask();

mapOutputFile = mapTask.getMapOutputFile();

sortPhase = mapTask.getSortPhase();

spilledRecordsCounter = reporter.getCounter(TaskCounter.SPILLED_RECORDS);

partitions = job.getNumReduceTasks();

rfs = ((LocalFileSystem)FileSystem.getLocal(job)).getRaw();

//sanity checks

final float spillper =

job.getFloat(JobContext.MAP_SORT_SPILL_PERCENT, (float)0.8);

final int sortmb = job.getInt(MRJobConfig.IO_SORT_MB,

MRJobConfig.DEFAULT_IO_SORT_MB);

indexCacheMemoryLimit = job.getInt(JobContext.INDEX_CACHE_MEMORY_LIMIT,

INDEX_CACHE_MEMORY_LIMIT_DEFAULT);

if (spillper > (float)1.0 || spillper <= (float)0.0) {

throw new IOException("Invalid \"" + JobContext.MAP_SORT_SPILL_PERCENT +

"\": " + spillper);

}

if ((sortmb & 0x7FF) != sortmb) {

throw new IOException(

"Invalid \"" + JobContext.IO_SORT_MB + "\": " + sortmb);

}

sorter = ReflectionUtils.newInstance(job.getClass(

MRJobConfig.MAP_SORT_CLASS, QuickSort.class,

IndexedSorter.class), job);

// buffers and accounting

int maxMemUsage = sortmb << 20;

maxMemUsage -= maxMemUsage % METASIZE;

kvbuffer = new byte[maxMemUsage];

bufvoid = kvbuffer.length;

kvmeta = ByteBuffer.wrap(kvbuffer)

.order(ByteOrder.nativeOrder())

.asIntBuffer();

setEquator(0);

bufstart = bufend = bufindex = equator;

kvstart = kvend = kvindex;

maxRec = kvmeta.capacity() / NMETA;

softLimit = (int)(kvbuffer.length * spillper);

bufferRemaining = softLimit;

LOG.info(JobContext.IO_SORT_MB + ": " + sortmb);

LOG.info("soft limit at " + softLimit);

LOG.info("bufstart = " + bufstart + "; bufvoid = " + bufvoid);

LOG.info("kvstart = " + kvstart + "; length = " + maxRec);

// k/v serialization

comparator = job.getOutputKeyComparator();

keyClass = (Class<K>)job.getMapOutputKeyClass();

valClass = (Class<V>)job.getMapOutputValueClass();

serializationFactory = new SerializationFactory(job);

keySerializer = serializationFactory.getSerializer(keyClass);

keySerializer.open(bb);

valSerializer = serializationFactory.getSerializer(valClass);

valSerializer.open(bb);

// output counters

mapOutputByteCounter = reporter.getCounter(TaskCounter.MAP_OUTPUT_BYTES);

mapOutputRecordCounter =

reporter.getCounter(TaskCounter.MAP_OUTPUT_RECORDS);

fileOutputByteCounter = reporter

.getCounter(TaskCounter.MAP_OUTPUT_MATERIALIZED_BYTES);

// compression

if (job.getCompressMapOutput()) {

Class<? extends CompressionCodec> codecClass =

job.getMapOutputCompressorClass(DefaultCodec.class);

codec = ReflectionUtils.newInstance(codecClass, job);

} else {

codec = null;

}

// combiner

final Counters.Counter combineInputCounter =

reporter.getCounter(TaskCounter.COMBINE_INPUT_RECORDS);

combinerRunner = CombinerRunner.create(job, getTaskID(),

combineInputCounter,

reporter, null);

if (combinerRunner != null) {

final Counters.Counter combineOutputCounter =

reporter.getCounter(TaskCounter.COMBINE_OUTPUT_RECORDS);

combineCollector= new CombineOutputCollector<K,V>(combineOutputCounter, reporter, job);

} else {

combineCollector = null;

}

spillInProgress = false;

minSpillsForCombine = job.getInt(JobContext.MAP_COMBINE_MIN_SPILLS, 3);

spillThread.setDaemon(true);

spillThread.setName("SpillThread");

spillLock.lock();

try {

spillThread.start();

while (!spillThreadRunning) {

spillDone.await();

}

} catch (InterruptedException e) {

throw new IOException("Spill thread failed to initialize", e);

} finally {

spillLock.unlock();

}

if (sortSpillException != null) {

throw new IOException("Spill thread failed to initialize",

sortSpillException);

}

}

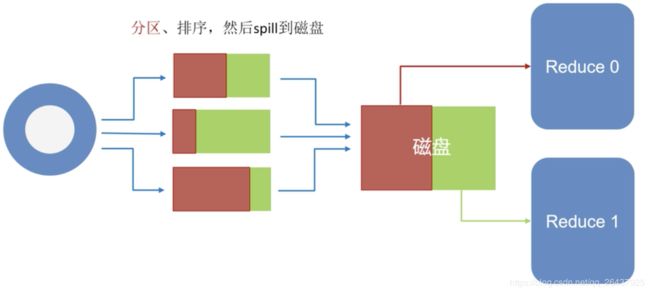

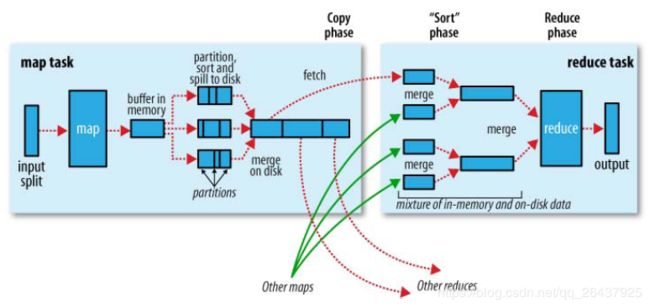

collect(kv缓冲区) & partition(分区)处理图

SpillThread(将缓冲区中的数据spill到磁盘)

private void sortAndSpill() throws IOException, ClassNotFoundException,

InterruptedException {

// 近似提前统计这次溢写是多少个字节长度:包含kv原始数据和分区的头信息

//approximate the length of the output file to be the length of the

//buffer + header lengths for the partitions

final long size = distanceTo(bufstart, bufend, bufvoid) +

partitions * APPROX_HEADER_LENGTH;

FSDataOutputStream out = null;

FSDataOutputStream partitionOut = null;

try {

// 构造SpillRecord对象和spill文件名称,并创建spill文件,返回FSDataOutputStream对象

// create spill file

final SpillRecord spillRec = new SpillRecord(partitions);

final Path filename =

mapOutputFile.getSpillFileForWrite(numSpills, size);

out = rfs.create(filename);

final int mstart = kvend / NMETA;

final int mend = 1 + // kvend is a valid record

(kvstart >= kvend

? kvstart

: kvmeta.capacity() + kvstart) / NMETA;

// 默认快排,进行按照partition和key进行排序操作,参见 MapOutputBuffer 的 compare 实现

sorter.sort(MapOutputBuffer.this, mstart, mend, reporter);

int spindex = mstart;

final IndexRecord rec = new IndexRecord();

final InMemValBytes value = new InMemValBytes();

// 遍历partitions进行处理

for (int i = 0; i < partitions; ++i) {

IFile.Writer<K, V> writer = null;

try {

long segmentStart = out.getPos();

partitionOut = CryptoUtils.wrapIfNecessary(job, out, false);

writer = new Writer<K, V>(job, partitionOut, keyClass, valClass, codec,

spilledRecordsCounter);

if (combinerRunner == null) {

// spill directly

DataInputBuffer key = new DataInputBuffer();

while (spindex < mend &&

kvmeta.get(offsetFor(spindex % maxRec) + PARTITION) == i) {

final int kvoff = offsetFor(spindex % maxRec);

int keystart = kvmeta.get(kvoff + KEYSTART);

int valstart = kvmeta.get(kvoff + VALSTART);

key.reset(kvbuffer, keystart, valstart - keystart);

getVBytesForOffset(kvoff, value);

writer.append(key, value);

++spindex;

}

} else {

int spstart = spindex;

while (spindex < mend &&

kvmeta.get(offsetFor(spindex % maxRec)

+ PARTITION) == i) {

++spindex;

}

// Note: we would like to avoid the combiner if we've fewer

// than some threshold of records for a partition

if (spstart != spindex) {

combineCollector.setWriter(writer);

RawKeyValueIterator kvIter =

new MRResultIterator(spstart, spindex);

combinerRunner.combine(kvIter, combineCollector);

}

}

// close the writer

writer.close();

if (partitionOut != out) {

partitionOut.close();

partitionOut = null;

}

// record offsets

rec.startOffset = segmentStart;

rec.rawLength = writer.getRawLength() + CryptoUtils.cryptoPadding(job);

rec.partLength = writer.getCompressedLength() + CryptoUtils.cryptoPadding(job);

spillRec.putIndex(rec, i);

writer = null;

} finally {

if (null != writer) writer.close();

}

}

// spill索引信息的处理

if (totalIndexCacheMemory >= indexCacheMemoryLimit) {

// create spill index file

Path indexFilename =

mapOutputFile.getSpillIndexFileForWrite(numSpills, partitions

* MAP_OUTPUT_INDEX_RECORD_LENGTH);

spillRec.writeToFile(indexFilename, job);

} else {

indexCacheList.add(spillRec);

totalIndexCacheMemory +=

spillRec.size() * MAP_OUTPUT_INDEX_RECORD_LENGTH;

}

// 完成一个spill文件

LOG.info("Finished spill " + numSpills);

++numSpills;

} finally {

if (out != null) out.close();

if (partitionOut != null) {

partitionOut.close();

}

}

}

- 当缓冲区满足一定条件后就会对缓冲区kvbuffer中的数据进行排序,先按分区编号partition进行升序,然后按照key进行升序,使得同一分区数据聚合,且分区内所有数据按照key有序(这里使用了快速排序)

- 接着会生成spill文件,不过在此之前如果有

combiner,则会进行combiner - 最后是

归并合并所有spill文件和索引文件, 这里还会进行一次combiner(如果有设置的话)

flush & mergeParts()

public void flush() throws IOException, ClassNotFoundException,

InterruptedException {

LOG.info("Starting flush of map output");

if (kvbuffer == null) {

LOG.info("kvbuffer is null. Skipping flush.");

return;

}

spillLock.lock();

try {

while (spillInProgress) {

reporter.progress();

spillDone.await();

}

checkSpillException();

final int kvbend = 4 * kvend;

if ((kvbend + METASIZE) % kvbuffer.length !=

equator - (equator % METASIZE)) {

// spill finished

resetSpill();

}

if (kvindex != kvend) {

kvend = (kvindex + NMETA) % kvmeta.capacity();

bufend = bufmark;

LOG.info("Spilling map output");

LOG.info("bufstart = " + bufstart + "; bufend = " + bufmark +

"; bufvoid = " + bufvoid);

LOG.info("kvstart = " + kvstart + "(" + (kvstart * 4) +

"); kvend = " + kvend + "(" + (kvend * 4) +

"); length = " + (distanceTo(kvend, kvstart,

kvmeta.capacity()) + 1) + "/" + maxRec);

sortAndSpill();

}

} catch (InterruptedException e) {

throw new IOException("Interrupted while waiting for the writer", e);

} finally {

spillLock.unlock();

}

assert !spillLock.isHeldByCurrentThread();

// shut down spill thread and wait for it to exit. Since the preceding

// ensures that it is finished with its work (and sortAndSpill did not

// throw), we elect to use an interrupt instead of setting a flag.

// Spilling simultaneously from this thread while the spill thread

// finishes its work might be both a useful way to extend this and also

// sufficient motivation for the latter approach.

try {

spillThread.interrupt();

spillThread.join();

} catch (InterruptedException e) {

throw new IOException("Spill failed", e);

}

// release sort buffer before the merge

kvbuffer = null;

mergeParts();

Path outputPath = mapOutputFile.getOutputFile();

fileOutputByteCounter.increment(rfs.getFileStatus(outputPath).getLen());

// If necessary, make outputs permissive enough for shuffling.

if (!SHUFFLE_OUTPUT_PERM.equals(

SHUFFLE_OUTPUT_PERM.applyUMask(FsPermission.getUMask(job)))) {

Path indexPath = mapOutputFile.getOutputIndexFile();

rfs.setPermission(outputPath, SHUFFLE_OUTPUT_PERM);

rfs.setPermission(indexPath, SHUFFLE_OUTPUT_PERM);

}

}

spill(combiner)到输出文件图

当最后一次sortAndSpill操作完成后; 开始合并溢写文件,形成最终的一个输出文件

回顾MapTask的流程

input -> map -> output

input输入:来自输入格式化类给我们实际返回的记录读取器对象

TextInputFormat -> LineRecordreader

split: file, offset, seek(offset)

init():

in = fs.open(file).seek(offset)

nextKeyValue()

* 读取原始Block数据中的一条记录,对key,value默认赋值

* 返回boolean

getCurrentKey()

getCurrentValue()

map: 就是 我们自己定义的MapperClass,eg: job.setMapperClass(TempMapper.class);对input进行map操作

output:

MapOutputBuffer

init():

splitter: 0.8

sortedmn: 100M

sorter = QuickSort

comparator = jobConf.getOutputKeyComparator(); // 默认,key自己的Comparator

combiner(相当于map做小reduce压缩一下)

reduce, minSpillsForCombine

SpillThread

sortAndSpill()

combiner

回顾 为什么要排序和分区

内存中进行排序,而不是随机写到磁盘;以减少后续的IO

多组文件:内部有序,外部无序 ,要求整体有序 =》 归并排序

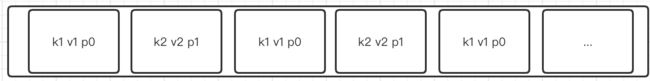

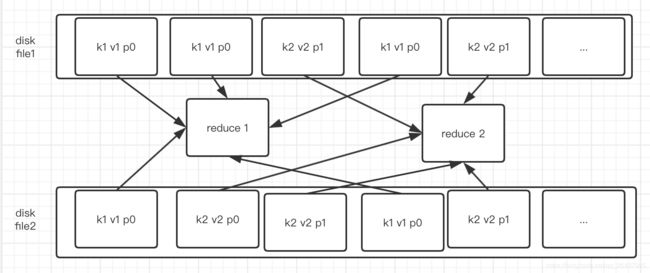

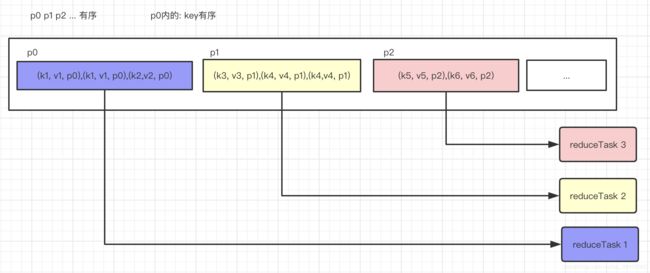

partitions 归并排序

忘记上面的图,假如什么排序都没有,map输出的一个文件会如下:

那么在reduce阶段,因为是一个partition一个reduce任务;那么每一个reduce任务为了完成对一个partition的计算,不得不去读取整个的磁盘文件数据(即一条一条记录的去读取并判断是不是要处理的partition),那么IO次数是partitions次(且每一次IO,需要读取整个的磁盘文件), 如下图:

加入按照partition排序好了,如下

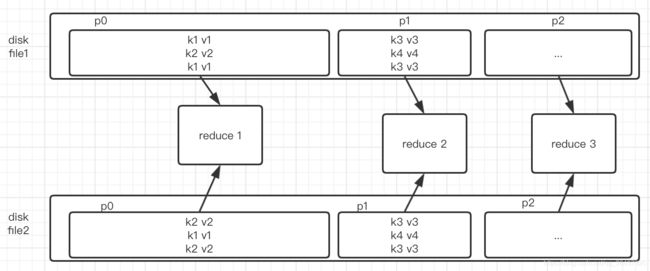

在partitions排序的基础上:key再排序

可以看到reduce处理,对每一组处理需要全部遍历完,才能计算出这组数据的结果;假如有排序,每个组的计算成本显然下降不少,如下:(又可以类似归并排序处理)

即最后如下:

- map输出的是一个有序的(partititon有序,partition内key有序)的文件,供reduceTask来执行

为什么有 combiner

在map阶段进行合并操作,降低后续reduceTask的计算压力;也相当于提前做一次小reduce操作

- 并不是所有的程序都适合combiner

- 需要测试

- 默认关闭的

eg: Sum()求和适合,Average()求平均数不适合;例如,求0、20、10、25 、15的平均数,直接使用Reduce求平均数Average(0,20,10,25,15),得到的结果是14, 如果先使用Combiner分别对不同Mapper结果求平均数,Average(0,20,10)=10,Average(25,15)=20,再使用Reducer求平均数Average(10,20),得到的结果为15,很明显求平均数并不适合使用Combiner。

为什么有 compression

减少磁盘IO和网络IO

mapreduce处理流程图

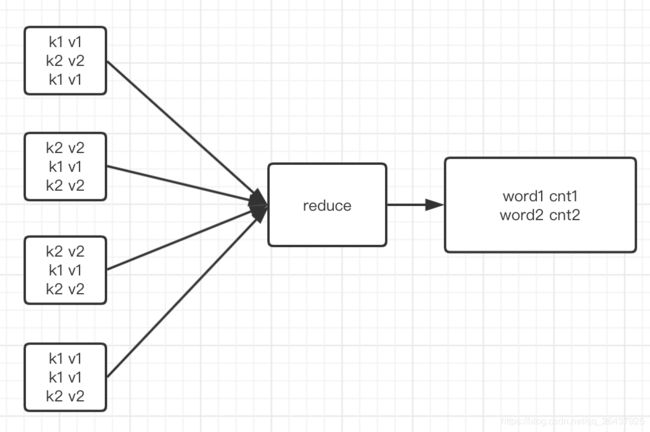

map 到 shuffle 到 reduce 实例流程图

ReduceTask

reduceTask流程

ReduceTask

input -> reduce -> output

map:run: while(context.nextKeyValue())

一条记录调用一次map

reduece:run while(context.nextKey())

一组数据调用一次reduce

shuffle: 拉取数据(相同partion的key会被拉取到一个partion)(一个partition 一个 reduceTask)

sort: 归并排序, grouping comparator

reduce run: 迭代器

reduce拉取属于自己partition的数据,并包装成迭代器

reduce方法被调用的时候,并没有把一组数据真的加载到内存中,而是传递一个迭代器-values

hasNext:判断下一条是否还在当前一组的nextKeyIsSame

next:负责读取nextKeyValue方法,同时也会更新nextKeyIsSame

- Reducer

/**

* Advanced application writers can use the

* {@link #run(org.apache.hadoop.mapreduce.Reducer.Context)} method to

* control how the reduce task works.

*/

public void run(Context context) throws IOException, InterruptedException {

setup(context);

try {

while (context.nextKey()) {

reduce(context.getCurrentKey(), context.getValues(), context);

// If a back up store is used, reset it

Iterator<VALUEIN> iter = context.getValues().iterator();

if(iter instanceof ReduceContext.ValueIterator) {

((ReduceContext.ValueIterator<VALUEIN>)iter).resetBackupStore();

}

}

} finally {

cleanup(context);

}

}

ReduceTask run()

@Override

@SuppressWarnings("unchecked")

public void run(JobConf job, final TaskUmbilicalProtocol umbilical)

throws IOException, InterruptedException, ClassNotFoundException {

job.setBoolean(JobContext.SKIP_RECORDS, isSkipping());

if (isMapOrReduce()) {

copyPhase = getProgress().addPhase("copy");

sortPhase = getProgress().addPhase("sort");

reducePhase = getProgress().addPhase("reduce");

}

// start thread that will handle communication with parent

TaskReporter reporter = startReporter(umbilical);

boolean useNewApi = job.getUseNewReducer();

initialize(job, getJobID(), reporter, useNewApi);

// check if it is a cleanupJobTask

if (jobCleanup) {

runJobCleanupTask(umbilical, reporter);

return;

}

if (jobSetup) {

runJobSetupTask(umbilical, reporter);

return;

}

if (taskCleanup) {

runTaskCleanupTask(umbilical, reporter);

return;

}

// Initialize the codec

codec = initCodec();

RawKeyValueIterator rIter = null;

ShuffleConsumerPlugin shuffleConsumerPlugin = null;

Class combinerClass = conf.getCombinerClass();

CombineOutputCollector combineCollector =

(null != combinerClass) ?

new CombineOutputCollector(reduceCombineOutputCounter, reporter, conf) : null;

Class<? extends ShuffleConsumerPlugin> clazz =

job.getClass(MRConfig.SHUFFLE_CONSUMER_PLUGIN, Shuffle.class, ShuffleConsumerPlugin.class);

shuffleConsumerPlugin = ReflectionUtils.newInstance(clazz, job);

LOG.info("Using ShuffleConsumerPlugin: " + shuffleConsumerPlugin);

ShuffleConsumerPlugin.Context shuffleContext =

new ShuffleConsumerPlugin.Context(getTaskID(), job, FileSystem.getLocal(job), umbilical,

super.lDirAlloc, reporter, codec,

combinerClass, combineCollector,

spilledRecordsCounter, reduceCombineInputCounter,

shuffledMapsCounter,

reduceShuffleBytes, failedShuffleCounter,

mergedMapOutputsCounter,

taskStatus, copyPhase, sortPhase, this,

mapOutputFile, localMapFiles);

shuffleConsumerPlugin.init(shuffleContext);

rIter = shuffleConsumerPlugin.run();

// free up the data structures

mapOutputFilesOnDisk.clear();

sortPhase.complete(); // sort is complete

setPhase(TaskStatus.Phase.REDUCE);

statusUpdate(umbilical);

Class keyClass = job.getMapOutputKeyClass();

Class valueClass = job.getMapOutputValueClass();

RawComparator comparator = job.getOutputValueGroupingComparator();

if (useNewApi) {

runNewReducer(job, umbilical, reporter, rIter, comparator,

keyClass, valueClass);

} else {

runOldReducer(job, umbilical, reporter, rIter, comparator,

keyClass, valueClass);

}

shuffleConsumerPlugin.close();

done(umbilical, reporter);

}

reduce的三个阶段

copyPhase = getProgress().addPhase("copy");

sortPhase = getProgress().addPhase("sort");

reducePhase = getProgress().addPhase("reduce");

- 首先需要从各个mapTask的输出文件下载自身reduceTask要执行的分区部分

- 显然为了减少IO,需要

归并排序进行文件合并,方便reduce任务处理 - reduce操作后写结果回磁盘

reduce的输入来源?(shuffle拉取)

Shuffle run方法

mapreduce.reduce.shuffle.parallelcopies并发线程拉取

@Override

public RawKeyValueIterator run() throws IOException, InterruptedException {

// Scale the maximum events we fetch per RPC call to mitigate OOM issues

// on the ApplicationMaster when a thundering herd of reducers fetch events

// TODO: This should not be necessary after HADOOP-8942

int eventsPerReducer = Math.max(MIN_EVENTS_TO_FETCH,

MAX_RPC_OUTSTANDING_EVENTS / jobConf.getNumReduceTasks());

int maxEventsToFetch = Math.min(MAX_EVENTS_TO_FETCH, eventsPerReducer);

// Start the map-completion events fetcher thread

final EventFetcher<K, V> eventFetcher =

new EventFetcher<K, V>(reduceId, umbilical, scheduler, this,

maxEventsToFetch);

eventFetcher.start();

// Start the map-output fetcher threads

boolean isLocal = localMapFiles != null;

final int numFetchers = isLocal ? 1 :

jobConf.getInt(MRJobConfig.SHUFFLE_PARALLEL_COPIES, 5);

Fetcher<K, V>[] fetchers = new Fetcher[numFetchers];

if (isLocal) {

fetchers[0] = new LocalFetcher<K, V>(jobConf, reduceId, scheduler,

merger, reporter, metrics, this, reduceTask.getShuffleSecret(),

localMapFiles);

fetchers[0].start();

} else {

for (int i=0; i < numFetchers; ++i) {

fetchers[i] = new Fetcher<K, V>(jobConf, reduceId, scheduler, merger,

reporter, metrics, this,

reduceTask.getShuffleSecret());

fetchers[i].start();

}

}

// Wait for shuffle to complete successfully

while (!scheduler.waitUntilDone(PROGRESS_FREQUENCY)) {

reporter.progress();

synchronized (this) {

if (throwable != null) {

throw new ShuffleError("error in shuffle in " + throwingThreadName,

throwable);

}

}

}

// Stop the event-fetcher thread

eventFetcher.shutDown();

// Stop the map-output fetcher threads

for (Fetcher<K, V> fetcher : fetchers) {

fetcher.shutDown();

}

// stop the scheduler

scheduler.close();

copyPhase.complete(); // copy is already complete

taskStatus.setPhase(TaskStatus.Phase.SORT);

reduceTask.statusUpdate(umbilical);

// Finish the on-going merges...

RawKeyValueIterator kvIter = null;

try {

kvIter = merger.close();

} catch (Throwable e) {

throw new ShuffleError("Error while doing final merge ", e);

}

// Sanity check

synchronized (this) {

if (throwable != null) {

throw new ShuffleError("error in shuffle in " + throwingThreadName,

throwable);

}

}

return kvIter;

}

public void run() {

try {

while (!stopped && !Thread.currentThread().isInterrupted()) {

MapHost host = null;

try {

// If merge is on, block

merger.waitForResource();

// Get a host to shuffle from

host = scheduler.getHost();

metrics.threadBusy();

// Shuffle

copyFromHost(host);

} finally {

if (host != null) {

scheduler.freeHost(host);

metrics.threadFree();

}

}

}

} catch (InterruptedException ie) {

return;

} catch (Throwable t) {

exceptionReporter.reportException(t);

}

}

在shuffle 拉取数据完成后,相关拉取线程关闭,然后会返回RawKeyValueIterator,在真正执行reduce操作之前,还会进行一次排序, 这使得一个reduce拉取到的是一个有序的数据集

rIter 迭代器模式的使用?

数据仍在磁盘中,使用迭代器模式,直接文件IO读取,避免大数据量在内存中导致内存不足的问题

runNewReducer

runNewReducer(job, umbilical, reporter, rIter, comparator,

keyClass, valueClass);

reducer对象使用Job设置的reducerClass

@SuppressWarnings("unchecked")

private <INKEY,INVALUE,OUTKEY,OUTVALUE>

void runNewReducer(JobConf job,

final TaskUmbilicalProtocol umbilical,

final TaskReporter reporter,

RawKeyValueIterator rIter,

RawComparator<INKEY> comparator,

Class<INKEY> keyClass,

Class<INVALUE> valueClass

) throws IOException,InterruptedException,

ClassNotFoundException {

// wrap value iterator to report progress.

final RawKeyValueIterator rawIter = rIter;

rIter = new RawKeyValueIterator() {

public void close() throws IOException {

rawIter.close();

}

public DataInputBuffer getKey() throws IOException {

return rawIter.getKey();

}

public Progress getProgress() {

return rawIter.getProgress();

}

public DataInputBuffer getValue() throws IOException {

return rawIter.getValue();

}

public boolean next() throws IOException {

boolean ret = rawIter.next();

reporter.setProgress(rawIter.getProgress().getProgress());

return ret;

}

};

// make a task context so we can get the classes

org.apache.hadoop.mapreduce.TaskAttemptContext taskContext =

new org.apache.hadoop.mapreduce.task.TaskAttemptContextImpl(job,

getTaskID(), reporter);

// make a reducer

org.apache.hadoop.mapreduce.Reducer<INKEY,INVALUE,OUTKEY,OUTVALUE> reducer =

(org.apache.hadoop.mapreduce.Reducer<INKEY,INVALUE,OUTKEY,OUTVALUE>)

ReflectionUtils.newInstance(taskContext.getReducerClass(), job);

org.apache.hadoop.mapreduce.RecordWriter<OUTKEY,OUTVALUE> trackedRW =

new NewTrackingRecordWriter<OUTKEY, OUTVALUE>(this, taskContext);

job.setBoolean("mapred.skip.on", isSkipping());

job.setBoolean(JobContext.SKIP_RECORDS, isSkipping());

org.apache.hadoop.mapreduce.Reducer.Context

reducerContext = createReduceContext(reducer, job, getTaskID(),

rIter, reduceInputKeyCounter,

reduceInputValueCounter,

trackedRW,

committer,

reporter, comparator, keyClass,

valueClass);

try {

reducer.run(reducerContext);

} finally {

trackedRW.close(reducerContext);

}

}

如何执行reduce方法?

也是迭代器模式(不过是两次:nextKey, 然后ValueIterator)

/**

* Advanced application writers can use the

* {@link #run(org.apache.hadoop.mapreduce.Reducer.Context)} method to

* control how the reduce task works.

*/

public void run(Context context) throws IOException, InterruptedException {

setup(context);

try {

while (context.nextKey()) {

reduce(context.getCurrentKey(), context.getValues(), context);

// If a back up store is used, reset it

Iterator<VALUEIN> iter = context.getValues().iterator();

if(iter instanceof ReduceContext.ValueIterator) {

((ReduceContext.ValueIterator<VALUEIN>)iter).resetBackupStore();

}

}

} finally {

cleanup(context);

}

}

reduce的结果是程序员context.write

/**

* This method is called once for each key. Most applications will define

* their reduce class by overriding this method. The default implementation

* is an identity function.

*/

@SuppressWarnings("unchecked")

protected void reduce(KEYIN key, Iterable<VALUEIN> values, Context context

) throws IOException, InterruptedException {

for(VALUEIN value: values) {

context.write((KEYOUT) key, (VALUEOUT) value);

}

}

shuffle

shuffle 包括了map的输出 到 reduce 的数据输入处理

mapreduce log 学习

WordCount 例子

输入 & 输出

hdfs命令

hadoop fs -put ~/words.txt hdfs://localhost:9000/input/wcinput

hadoop fs -ls hdfs://localhost:9000/

hadoop fs -mkdir hdfs://localhost:9000/output

hadoop fs -cat hdfs://localhost:9000/output/wcoutput/part-r-00000

原始input

hello hadoop

hello world hello

hadoop hello

最后的mapreduce输出

hadoop 2

hello 4

world 1

不实用combiner & 使用combiner的log 区别

2020-04-30 00:04:57,430 INFO [org.apache.hadoop.mapreduce.Job] - Counters: 36

File System Counters

FILE: Number of bytes read=524

FILE: Number of bytes written=709206

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=88

HDFS: Number of bytes written=25

HDFS: Number of read operations=15

HDFS: Number of large read operations=0

HDFS: Number of write operations=4

HDFS: Number of bytes read erasure-coded=0

Map-Reduce Framework

Map input records=3

Map output records=7

Map output bytes=72

Map output materialized bytes=92

Input split bytes=100

Combine input records=0

Combine output records=0

Reduce input groups=3

Reduce shuffle bytes=92

Reduce input records=7

Reduce output records=3

Spilled Records=14

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=7

Total committed heap usage (bytes)=586153984

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=44

File Output Format Counters

Bytes Written=25

2020-04-30 00:04:57,430 DEBUG [org.apache.hadoop.security.UserGroupInformation] - PrivilegedAction as:mubi (auth:SIMPLE) from:org.apache.hadoop.mapreduce.Job.updateStatus(Job.java:328)

- Map output records=7 即是 Reduce input records=7

// 第一行

hello 1

hadoop 1

// 第二行

hello 1

world 1

hello 1

// 第三行

hadoop 1

hello 1

- 加入combiner

job.setCombinerClass(WordCountReducer.class);

2020-04-30 00:22:10,512 INFO [org.apache.hadoop.mapreduce.Job] - Counters: 36

File System Counters

FILE: Number of bytes read=426

FILE: Number of bytes written=709865

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=88

HDFS: Number of bytes written=25

HDFS: Number of read operations=17

HDFS: Number of large read operations=0

HDFS: Number of write operations=6

HDFS: Number of bytes read erasure-coded=0

Map-Reduce Framework

Map input records=3

Map output records=7

Map output bytes=72

Map output materialized bytes=43

Input split bytes=100

Combine input records=7

Combine output records=3

Reduce input groups=3

Reduce shuffle bytes=43

Reduce input records=3

Reduce output records=3

Spilled Records=6

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=79

Total committed heap usage (bytes)=801112064

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=44

File Output Format Counters

Bytes Written=25

2020-04-30 00:22:10,512 DEBUG [org.apache.hadoop.security.UserGroupInformation] - PrivilegedAction as:mubi (auth:SIMPLE) from:org.apache.hadoop.mapreduce.Job.updateStatus(Job.java:328)

多了combine, 且reduce的输入是combine 的输出了

Combine input records=7

Combine output records=3

Reduce input groups=3

Reduce shuffle bytes=43

Reduce input records=3

Reduce output records=3

自定义key排序:job.setSortComparatorClass

word count, 让 word 逆序

static class KeyComparator extends WritableComparator{

public KeyComparator() {

super(Text.class, true);

}

@Override

public int compare(WritableComparable w1, WritableComparable w2) {

Text key1 = (Text)w1;

Text key2 = (Text)w2;

String s1 = key1.toString();

String s2 = key2.toString();

return s2.compareTo(s1);

}

}

然后程序设置

// 设置key排序

job.setSortComparatorClass(KeyComparator.class);

最后结果输出

world 1

hello 4

hadoop 2

word count java 程序

import java.io.IOException;

import java.net.URI;

import java.util.Iterator;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**

* @Author mubi

* @Date 2020/4/28 23:39

*/

public class WC {

/**

* map函数

*

* 四个泛型类型分别代表:

* KeyIn Mapper的输入数据的Key,这里是每行文字的起始位置(0,11,...)

* ValueIn Mapper的输入数据的Value,这里是每行文字

* KeyOut Mapper的输出数据的Key,这里是每行文字中的"单词"

* ValueOut Mapper的输出数据的Value,这里是单词的数量"1"

*/

static class WordCountMapper extends

Mapper<LongWritable, Text, Text, IntWritable> {

@Override

public void map(LongWritable key, Text value, Mapper<LongWritable, Text, Text, IntWritable>.Context context)

throws IOException, InterruptedException {

// 将mapTask传给我们的文本内容先转换为String

String line = value.toString();

// 根据空格将这一行切分为单词

String[] words = line.split(" ");

// 将单词输出为<单词,1>

for(String word: words){

// 将单词作为key, 将次数作为value, 以便于后续的数据分发, 根据单词分发, 以便于相同单词会到相同的reduceTask内部.

context.write(new Text(word), new IntWritable(1));

}

}

}

/**

* reduce函数

*

* 四个泛型类型分别代表:

* KeyIn Reducer的输入数据的Key,这里是每行文字中的“单词”

* ValueIn Reducer的输入数据的Value,这里是单词数量列表

* KeyOut Reducer的输出数据的Key,这里是不重复的“单词”

* ValueOut Reducer的输出数据的Value,这里是单词总数量

*/

static class WordCountReducer extends

Reducer<Text, IntWritable, Text, IntWritable> {

@Override

protected void reduce(Text key, Iterable<IntWritable> values, Reducer<Text, IntWritable, Text, IntWritable>.Context context)throws IOException, InterruptedException {

int count=0;

Iterator<IntWritable> it = values.iterator();

while(it.hasNext()){

count += it.next().get();

}

context.write(key, new IntWritable(count));

}

}

public static void main(String[] args) throws Exception {

//输入路径

String dst = "hdfs://localhost:9000/input/wcinput";

// String dst = "hdfs://localhost:9000" + args[1];

//输出路径,必须是不存在的,空文件也不行。

String dstOut = "hdfs://localhost:9000/output/wcoutput";

// String dstOut = "hdfs://localhost:9000" + args[2];

Configuration hadoopConfig = new Configuration();

hadoopConfig.set("fs.hdfs.impl",

org.apache.hadoop.hdfs.DistributedFileSystem.class.getName()

);

hadoopConfig.set("fs.file.impl",

org.apache.hadoop.fs.LocalFileSystem.class.getName()

);

//如果输出目录已经存在,则先删除

FileSystem fileSystem = FileSystem.get(new URI("hdfs://localhost:9000"), hadoopConfig);

Path outputPath = new Path("/output/wcoutput");

if(fileSystem.exists(outputPath)){

fileSystem.delete(outputPath,true);

}

//如果输出目录已经存在,则先删除

FileSystem fileSystem = FileSystem.get(new URI("hdfs://localhost:9000"), hadoopConfig);

Path outputPath = new Path("/output/wcoutput");

if(fileSystem.exists(outputPath)){

fileSystem.delete(outputPath,true);

}

Job job = new Job(hadoopConfig);

//如果需要打成jar运行,需要下面这句

job.setJarByClass(WC.class);

job.setJobName("wordcount");

//job执行作业时输入和输出文件的路径

FileInputFormat.addInputPath(job, new Path(dst));

FileOutputFormat.setOutputPath(job, new Path(dstOut));

//指定自定义的Mapper和Reducer作为两个阶段的任务处理类

job.setMapperClass(WordCountMapper.class);

job.setReducerClass(WordCountReducer.class);

// 设置combiner

job.setCombinerClass(WordCountReducer.class);

//设置最后输出结果的Key和Value的类型

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

//执行job,直到完成

job.waitForCompletion(true);

System.out.println("Job Finished");

System.exit(0);

}

}

- 依赖

<dependencies>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-client -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>3.2.1</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-common -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>3.2.1</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-mapreduce-client-jobclient -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-jobclient</artifactId>

<version>3.2.1</version>

<scope>provided</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-mapreduce-client-common -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-common</artifactId>

<version>3.2.1</version>

</dependency>

</dependencies>

- gradle 格式

// https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-client

compile group: 'org.apache.hadoop', name: 'hadoop-client', version: '3.2.1'

// https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-common

compile group: 'org.apache.hadoop', name: 'hadoop-common', version: '3.2.1'

// https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-mapreduce-client-jobclient

compile group: 'org.apache.hadoop', name: 'hadoop-mapreduce-client-jobclient', version: '3.2.1'