A Deep Dive into the Java Source: ForkJoinTask.doJoin() and ForkJoinPool.execute()

ForkJoinTask:

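Put simply, ForkJoinTask forks a task into subtasks that are small enough, solves those subtasks concurrently, and then joins their results. This approach takes advantage of multi-core CPUs: it raises CPU utilization and shortens total execution time.

We normally split work with ForkJoinTask's fork method and merge results with its join method, so these two methods are the natural place to start.

Splitting into subtasks: fork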

public final ForkJoinTask fork() {

((ForkJoinWorkerThread) Thread.currentThread()).pushTask(this);

return this;

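}

This method is simple: it pushes the task onto the top of the queue maintained by the current thread and returns the task. Three points are worth noting:

fork casts the current thread to ForkJoinWorkerThread. Every thread that runs a ForkJoinTask through a ForkJoinPool is a ForkJoinWorkerThread defined by the framework, so the cast is safe there. If the ForkJoinTask is not being executed by a ForkJoinPool, the cast throws ClassCastException. (This is the JDK 7 behavior; from JDK 8 on, fork called outside a pool hands the task to the common pool instead.)

Once the task is in the queue, the ForkJoinPool schedules it. Normally it is executed by the thread that owns the queue; when other threads in the pool are idle, one of them may steal it.

The task is pushed onto the top of the queue, so subtasks forked by this thread always sit on top. The implication is that older entries in the queue are larger tasks and the newest entries are smaller ones. The owning thread keeps taking the smaller tasks from the top, and only after they finish can they be joined into the larger task. Threads that steal from this queue take the oldest entry, which means they steal the larger tasks.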

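Merging results: join

Suppose we split the current task t into two tasks, t1 and t2. To obtain the result of t we must wait for the results of t1 and t2, and the code usually looks like this: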

t1.fork();

t2.fork();

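result = t1.join() + t2.join();

join blocks until the task it is called on has finished and then returns its result. If the task completed abnormally, join may throw an exception. When joining, if the task sits at the top of the current thread's queue, the thread runs it directly; otherwise smaller tasks still have to run first, and the thread waits until the task has been executed.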
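To make the t1/t2 pattern concrete, here is a minimal sketch. The class name RangeSumTask, the 1,000-element cutoff, and the helper sum are assumptions made for illustration; they do not come from the JDK or from the original article.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

// Hypothetical example task: sums the integers in the half-open range [lo, hi).
class RangeSumTask extends RecursiveTask<Long> {
    private final int lo, hi;

    RangeSumTask(int lo, int hi) {
        this.lo = lo;
        this.hi = hi;
    }

    @Override
    protected Long compute() {
        if (hi - lo <= 1_000) {            // small enough: solve directly (assumed cutoff)
            long sum = 0;
            for (int i = lo; i < hi; i++) sum += i;
            return sum;
        }
        int mid = (lo + hi) >>> 1;
        RangeSumTask t1 = new RangeSumTask(lo, mid);
        RangeSumTask t2 = new RangeSumTask(mid, hi);
        t1.fork();                         // queue t1 for asynchronous execution
        t2.fork();                         // queue t2 for asynchronous execution
        return t1.join() + t2.join();      // wait for both results and merge them
    }

    // Convenience entry point that runs the task in the common pool.
    static long sum(int lo, int hi) {
        return ForkJoinPool.commonPool().invoke(new RangeSumTask(lo, hi));
    }
}
```

Calling RangeSumTask.sum(0, 100_000) adds up 0 through 99,999. Forking both halves mirrors the snippet above; the pitfalls section later in this article explains why computing one half directly is usually cheaper.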

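Like many other classes in java.util.concurrent, ForkJoinTask tracks its own execution state. Here are its state constants (these are the JDK 7 values; the JDK 8 source listed further down encodes the same states in the high bits of status):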

volatile int status;

private static final int NORMAL = -1;

private static final int CANCELLED = -2;

private static final int EXCEPTIONAL = -3;

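private static final int SIGNAL = 1;

status is a volatile field that holds the task's current execution state. It has five possible states: a negative value means the task has completed, and a non-negative value means it has not. The completed states are NORMAL, CANCELLED, and EXCEPTIONAL; the incomplete states are the initial state 0 and SIGNAL. In detail:

NORMAL: the task completed normally.

CANCELLED: the task completed by being cancelled.

EXCEPTIONAL: the task completed by throwing an exception. Note that all three of these are "completed" states; they differ only in how completion was reached.

SIGNAL: another thread is waiting on this task, so when the task finishes it must notify the waiters so that they can join its result.

0: the initial state (the task has not completed, and nobody has asked to be notified).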
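The status field itself is not public, but the states can be observed through ForkJoinTask's public predicates. The sketch below is an illustration only: StatusDemo, Ok, Boom, and describe are hypothetical names, and the mapping from predicates to labels is my own reading of the states described above.

```java
import java.util.concurrent.ForkJoinTask;
import java.util.concurrent.RecursiveTask;

class StatusDemo {
    // Hypothetical task that completes normally.
    static class Ok extends RecursiveTask<Integer> {
        @Override protected Integer compute() { return 42; }
    }

    // Hypothetical task that completes exceptionally.
    static class Boom extends RecursiveTask<Integer> {
        @Override protected Integer compute() { throw new IllegalStateException("boom"); }
    }

    // Maps the public predicates onto the states discussed in the text.
    static String describe(ForkJoinTask<?> t) {
        if (!t.isDone())               return "pending";      // status >= 0
        if (t.isCancelled())           return "cancelled";    // CANCELLED
        if (t.isCompletedAbnormally()) return "exceptional";  // EXCEPTIONAL
        return "normal";                                      // NORMAL
    }

    static String[] demo() {
        Ok fresh = new Ok();                                  // never started
        Ok done = new Ok();
        done.invoke();                                        // runs in the calling thread
        Boom failed = new Boom();
        failed.quietlyInvoke();                               // runs, swallows the exception
        Ok cancelled = new Ok();
        cancelled.cancel(false);                              // cancelled before it ever ran
        return new String[] {
            describe(fresh), describe(done), describe(failed), describe(cancelled)
        };
    }
}
```

The cancelled check comes before the abnormal-completion check because isCompletedAbnormally() is also true for a cancelled task.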

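join calls doJoin, which contains this key fragment:

// The task completed normally: record NORMAL and notify the threads waiting to join it

if (completed)

return setCompletion(NORMAL);

When the task finishes, its state is set to NORMAL and notifyAll wakes any threads waiting on it; those threads then merge its result. If there is a notifyAll, where is the matching wait? In this implementation, ForkJoinPool calls ForkJoinTask's tryAwaitDone method to wait for the task to complete.

doJoin waits for the task to complete and returns the completion state (NORMAL, CANCELLED, or EXCEPTIONAL).

If the thread running the current task is not a ForkJoinWorkerThread, externalAwaitDone is called to wait for completion. Otherwise the thread looks at the task on top of its own queue; if that is not the current task, or the task did not complete when run, the thread's joinTask method is called to register the join and wait for the task to finish.

exec is an abstract method that holds the actual work and is implemented by subclasses; it returns whether the task completed normally. If it throws, the exception is caught and the task is marked as completed exceptionally. If it completes normally, the state is set to NORMAL and the threads that want to join the task are notified. Such a thread is usually waiting on behalf of a parent task: the current task is a subtask, and once it finishes, the parent can try to merge the subtask's result.

If a task throws during execution, the caller can retrieve the exception. ForkJoinTask does not hold the failed task strongly; it keeps a weak reference to it instead. At a suitable time the GC reclaims the failed task, and the weak reference of the reclaimed object is placed on a reference queue.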

private int setExceptionalCompletion(Throwable ex) {

// System.identityHashCode returns the value the default Object.hashCode would return,

// whether or not the task's class overrides hashCode

int h = System.identityHashCode(this);

// Take the lock before touching the exception table

final ReentrantLock lock = exceptionTableLock;

lock.lock();

try {

// Remove weak references whose tasks have been collected: this drains exceptionTableRefQueue

// and deletes the matching entries from exceptionTable

expungeStaleExceptions();

ExceptionNode[] t = exceptionTable;

// Store a weak reference to the failed task in exceptionTable, which is used

// as a hash table; i is the bucket index

int i = h & (t.length - 1);

// Walk the chain at bucket i. If the task is already present, stop; otherwise, on reaching

// the end of the chain, create a new ExceptionNode and make it the head of the chain

for (ExceptionNode e = t[i]; ; e = e.next) {

if (e == null) {

t[i] = new ExceptionNode(this, ex, t[i]);

break;

}

if (e.get() == this)

break;

}

} finally {

lock.unlock();

}

// Mark the task as completed with state EXCEPTIONAL

return setCompletion(EXCEPTIONAL);

}

get waits for the task to complete and returns its result. Here is the source:

public final V get() throws InterruptedException, ExecutionException {

int s = (Thread.currentThread() instanceof ForkJoinWorkerThread) ?

doJoin() : externalInterruptibleAwaitDone(0L);

Throwable ex;

if (s == CANCELLED)

throw new CancellationException();

if (s == EXCEPTIONAL && (ex = getThrowableException()) != null)

throw new ExecutionException(ex);

return getRawResult();

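}

If the current thread is a ForkJoinWorkerThread, get calls doJoin, which was described above. Otherwise it calls externalInterruptibleAwaitDone.

After the wait returns, a completion state of CANCELLED produces a CancellationException, and a state of EXCEPTIONAL causes the exception thrown during execution to be wrapped in an ExecutionException and rethrown.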
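The difference in how get() and join() report a failure can be seen with a small experiment. This is a sketch under assumptions: GetVsJoinDemo and Failing are hypothetical names, and the common pool is used only for convenience.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

class GetVsJoinDemo {
    // Hypothetical task that always fails with an unchecked exception.
    static class Failing extends RecursiveTask<Integer> {
        @Override protected Integer compute() {
            throw new IllegalArgumentException("bad input");
        }
    }

    // join() rethrows an unchecked exception of the task's own exception type.
    static String thrownByJoin() {
        Failing task = new Failing();
        ForkJoinPool.commonPool().execute(task);
        try {
            task.join();
            return "nothing";
        } catch (RuntimeException e) {
            return e.getClass().getSimpleName();
        }
    }

    // get() wraps the failure in a checked ExecutionException.
    static String thrownByGet() {
        Failing task = new Failing();
        ForkJoinPool.commonPool().execute(task);
        try {
            task.get();
            return "nothing";
        } catch (ExecutionException e) {
            return "ExecutionException caused by " + e.getCause().getClass().getSimpleName();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();   // preserve the interrupt for callers
            return "interrupted";
        }
    }
}
```

Only the exception types are inspected here. Whether the caller sees the original exception object or a copy created by reflection depends on which thread ran the task.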

The getThrowableException method deserves a closer look. It returns the exception thrown while the current task was executing:

private Throwable getThrowableException() {

// Return null unless the task completed with state EXCEPTIONAL

if (status != EXCEPTIONAL)

return null;

int h = System.identityHashCode(this);

ExceptionNode e;

final ReentrantLock lock = exceptionTableLock;

lock.lock();

try {

expungeStaleExceptions();

ExceptionNode[] t = exceptionTable;

e = t[h & (t.length - 1)];

// Look up the exception recorded for this task in exceptionTable

while (e != null && e.get() != this)

e = e.next;

} finally {

lock.unlock();

}

Throwable ex;

if (e == null || (ex = e.ex) == null)

return null;

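// If the exception was thrown by another thread, build a new exception of the same type, using

// either a constructor that takes a single Throwable or the no-arg constructor, and return it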

if (e.thrower != Thread.currentThread().getId()) {

Class ec = ex.getClass();

try {

Constructor noArgCtor = null;

Constructor[] cs = ec.getConstructors();

for (int i = 0; i < cs.length; ++i) {

Constructor c = cs[i];

Class[] ps = c.getParameterTypes();

if (ps.length == 0)

noArgCtor = c;

else if (ps.length == 1 && ps[0] == Throwable.class)

return (Throwable)(c.newInstance(ex));

}

if (noArgCtor != null) {

Throwable wx = (Throwable)(noArgCtor.newInstance());

wx.initCause(ex);

return wx;

}

} catch (Exception ignore) {

}

}

return ex;

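}

This method fetches the exception recorded for the current task from exceptionTable. If the exception was thrown by a different thread, it looks for a constructor of the exception class that takes a single Throwable, or failing that a no-arg constructor, and uses reflection to create and return a new instance of that exception type with the original exception as its cause.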

getPool returns the pool that owns the thread executing the task, and inForkJoinPool reports whether the current thread is a Fork/Join worker thread.

public static ForkJoinPool getPool() {

Thread t = Thread.currentThread();

return (t instanceof ForkJoinWorkerThread) ?

((ForkJoinWorkerThread) t).pool : null;

}

public static boolean inForkJoinPool() {

return Thread.currentThread() instanceof ForkJoinWorkerThread;

}

tryUnfork tries to pop this task off the task queue so that the pool no longer schedules it.

public boolean tryUnfork() {

return ((ForkJoinWorkerThread) Thread.currentThread())

.unpushTask(this);

}

getQueuedTaskCount returns the number of tasks the current thread has forked but not yet executed.

public static int getQueuedTaskCount() {

// Number of tasks in this worker's queue

return ((ForkJoinWorkerThread) Thread.currentThread())

.getQueueSize();

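}

Summary:

1. invokeAll(tasks) runs other ForkJoinTasks and waits for them to complete. (It is synchronous.)

2. fork schedules a task for execution. (It is asynchronous.)

3. join waits for a task to finish. (It is synchronous; that is how it differs from fork.)

4. join returns the ForkJoinTask's result. Because ForkJoinTask, and therefore RecursiveTask, implements the Future interface, get() can be used to obtain the result as well.

get() and join() differ in two main ways:

join() cannot be interrupted. get() can: if the thread calling get() is interrupted, get() throws InterruptedException.

If the task throws an unchecked exception, get() throws an ExecutionException that wraps it, whereas join() rethrows the unchecked exception itself.

5. A ForkJoinTask does not have to be started explicitly through ForkJoinPool.execute/invoke/submit(); it can also be started with its own fork/invoke methods.

Started that way, the task still runs in a ForkJoinPool. It simply uses a static ForkJoinPool instance created inside the ForkJoinPool class.

6. ForkJoinTask has two commonly used subclasses, RecursiveAction and RecursiveTask. The difference is that RecursiveAction returns no result and RecursiveTask does.

7. When to use ForkJoinTask's complete method

This method is mainly used to finish a task explicitly. It is meant for supplying the result of an asynchronous task, or for offering an alternative way to finish tasks that might not complete normally.

8. What the completeExceptionally method is for

It is used to raise an exception in an asynchronous task, or to force completion of tasks that would otherwise never finish.

It is the method a task uses when it wants to end itself, whereas cancel is designed to be called by other tasks.

When a task throws an unchecked exception, the exception also affects its parent task (the task that submitted it to the ForkJoinPool), the parent's parent, and so on.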
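Items 7 and 8 can be illustrated with a task that is finished from the outside rather than by running compute(). This is a minimal sketch; ManualCompletionDemo and Pending are hypothetical names introduced for the example.

```java
import java.util.concurrent.RecursiveTask;

class ManualCompletionDemo {
    // compute() is never executed in this demo; the task is completed explicitly.
    static class Pending extends RecursiveTask<Integer> {
        @Override protected Integer compute() { return -1; }
    }

    static int completeWithValue() {
        Pending task = new Pending();
        task.complete(7);            // finish normally with an explicit result
        return task.join();          // returns the supplied value without running compute()
    }

    static boolean completeWithFailure() {
        Pending task = new Pending();
        task.completeExceptionally(new IllegalStateException("gave up"));
        try {
            task.join();             // rethrows the supplied exception
            return false;
        } catch (IllegalStateException expected) {
            return task.isCompletedAbnormally();
        }
    }
}
```

Both methods leave the task in a completed state, so any later join() or get() on it returns immediately.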

ForkJoinPool:

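Why use ForkJoinPool

In a ThreadPoolExecutor each task is handled independently by a single thread. If one very expensive task comes along (sorting a large array, say), a single thread in the pool may end up processing it while the other threads sit idle. The CPU load becomes unbalanced: idle processors cannot help the busy one.

ForkJoinPool addresses exactly this problem. After a large task is split into many small ones, fork distributes the small tasks so that other threads can process them at the same time, and join gathers the results those threads produce. It is essentially a parallel version of divide and conquer.

How ForkJoinPool works

The ForkJoinPool class is the core of the Fork/Join framework and, like ThreadPoolExecutor, is an implementation of the ExecutorService interface.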

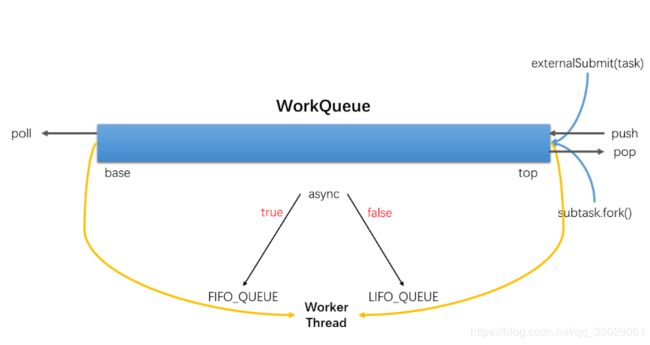

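Although ForkJoinPool splits a large task into many subtasks, it does not create a separate thread for each subtask. Instead, every thread in the pool has its own double-ended queue (deque) for storing tasks, and this deque is essential to the work-stealing algorithm.

public class ForkJoinWorkerThread extends Thread {

final ForkJoinPool pool; // the pool this worker thread belongs to

final ForkJoinPool.WorkQueue workQueue; // this thread's work queue (the deque at the heart of work stealing)

...

}

The two central ideas behind ForkJoinPool are divide and conquer and the work-stealing algorithm.

Work-stealing algorithm

Work stealing is the core idea of the Fork/Join framework:

Each thread has its own WorkQueue, which is a double-ended queue.

The queue supports three operations: push, pop, and poll.

push and pop may only be called by the thread that owns the queue; poll may be called by other threads.

When a subtask is forked, it is pushed onto the forking thread's own queue.

By default, a worker thread takes tasks from its own deque and runs them.

When its own queue is empty, a thread picks another thread's queue at random and steals a task from the far end of that queue by calling poll.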
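The push/pop/poll rules above can be modelled with an ordinary ArrayDeque. This is a toy, single-threaded model meant only to show which end each operation touches; ToyWorkQueue is a hypothetical class and is not how the real WorkQueue is implemented (the real one is an array manipulated with CAS operations, as the source further down shows).

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Toy model of one worker's queue; not thread-safe, for illustration only.
class ToyWorkQueue {
    private final Deque<String> tasks = new ArrayDeque<>();

    // Owner only: a forked task goes on the top of the queue.
    void push(String task) { tasks.addLast(task); }

    // Owner only: take the most recently pushed task (LIFO), or null if empty.
    String pop() { return tasks.pollLast(); }

    // Thieves: take the oldest task from the opposite end (FIFO), or null if empty.
    String poll() { return tasks.pollFirst(); }
}
```

If the owner pushes "big", "medium", and "small" in that order, pop() yields "small" while a thief's poll() yields "big", which matches the earlier observation that stolen tasks tend to be the larger ones.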

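Creating a ForkJoinPool

Using the Executors utility class

Java 8 added two factory methods to Executors:

// parallelism sets the parallelism level

public static ExecutorService newWorkStealingPool(int parallelism);

// The default parallelism level is the number of processors available to the JVM:

// Runtime.getRuntime().availableProcessors()

public static ExecutorService newWorkStealingPool();

Using the commonPool that ForkJoinPool initializes internally

public static ForkJoinPool commonPool();

// The class's static initializer calls makeCommonPool to create the commonPool

Using a constructor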

public ForkJoinPool() {

this(Math.min(MAX_CAP, Runtime.getRuntime().availableProcessors()),

defaultForkJoinWorkerThreadFactory, null, false);

}

public ForkJoinPool(int parallelism) {

this(parallelism, defaultForkJoinWorkerThreadFactory, null, false);

}

public ForkJoinPool(int parallelism,

ForkJoinWorkerThreadFactory factory,

UncaughtExceptionHandler handler,

boolean asyncMode) {

this(checkParallelism(parallelism),

checkFactory(factory),

handler,

asyncMode ? FIFO_QUEUE : LIFO_QUEUE, // queue mode

"ForkJoinPool-" + nextPoolId() + "-worker-");

checkPermission();

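}

The first two constructors both end up calling the third. The parameters of the third constructor mean the following:

parallelism: the parallelism level; by default the number of processors available to the JVM, Runtime.getRuntime().availableProcessors().

factory: the factory that creates the threads used by the ForkJoinPool.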

public static interface ForkJoinWorkerThreadFactory {

public ForkJoinWorkerThread newThread(ForkJoinPool pool);

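}

Every thread managed by a ForkJoinPool is a ForkJoinWorkerThread, which extends Thread (and holds a double-ended queue).

handler: handles exceptions that escape from worker threads; null by default.

asyncMode: controls the WorkQueue mode. When true the queue works in FIFO mode; the default, false, selects LIFO mode.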
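The three ways of obtaining a pool described above can be put side by side. This is a hedged sketch: PoolCreationDemo is a hypothetical class, and the parallelism values 2 and 4 are arbitrary choices for the example.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ForkJoinPool;

class PoolCreationDemo {
    // Four-argument constructor: parallelism, factory, handler, asyncMode.
    static ForkJoinPool customPool() {
        return new ForkJoinPool(
                4,                                                // parallelism (arbitrary here)
                ForkJoinPool.defaultForkJoinWorkerThreadFactory,  // default worker factory
                null,                                             // no uncaught-exception handler
                true);                                            // asyncMode: FIFO queues
    }

    static boolean demo() {
        ExecutorService stealing = Executors.newWorkStealingPool(2); // via Executors
        ForkJoinPool common = ForkJoinPool.commonPool();             // shared static pool
        ForkJoinPool custom = customPool();                          // via constructor
        try {
            return custom.getParallelism() == 4
                    && custom.getAsyncMode()
                    && common == ForkJoinPool.commonPool();          // always the same instance
        } finally {
            stealing.shutdown();
            custom.shutdown();   // pools you create should be shut down; the common pool is shared
        }
    }
}
```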

ForkJoinTask

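In most cases we submit ForkJoinTask objects directly to a ForkJoinPool.

ForkJoinTask has three core methods:

fork(): while a task is running it splits the large task into smaller subtasks; calling a subtask's fork() hands it to the pool for asynchronous scheduling.

join(): calling a subtask's join() waits for the result it returns. The method resembles Thread.join(), except that it is not affected by thread interruption.

If the subtask threw a runtime exception, join() rethrows it. quietlyJoin() neither throws nor returns a result; you call getException() or getRawResult() yourself to handle the exception and the result.

invoke(): runs the task synchronously in the current thread. It is likewise unaffected by interruption.

If the subtask threw a runtime exception, invoke() rethrows it. quietlyInvoke() neither throws nor returns a result; you call getException() or getRawResult() yourself to handle the exception and the result.

Neither join() nor invoke() responds to interruption. When called from a thread outside the pool, both wait via externalAwaitDone().

When get() is called from inside a ForkJoinTask, that is, on a worker thread, it goes through doJoin() exactly as join() does.

When get() is called from outside a ForkJoinTask, it does respond to interruption, because it is implemented with externalInterruptibleAwaitDone().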

public final V get() throws InterruptedException, ExecutionException {

int s = (Thread.currentThread() instanceof ForkJoinWorkerThread) ?

doJoin() : externalInterruptibleAwaitDone();

...

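}

ForkJoinTask maintains four status constants internally: NORMAL, CANCELLED, EXCEPTIONAL, and SIGNAL.

Summary:

1. A ForkJoinTask can be run through ForkJoinPool.execute (asynchronous, returns nothing), invoke (synchronous, returns the result), or submit (asynchronous, returns the task so the result can be fetched later).

2. ForkJoinPool.commonPool() returns a static ForkJoinPool instance declared inside ForkJoinPool.

According to the documentation, this pool is appropriate for most applications. Its default parallelism is the number of CPU cores minus one (Runtime.getRuntime().availableProcessors() - 1).

A ForkJoinTask that is started on its own uses this static instance.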
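The three submission methods from item 1 look like this in use. The sketch assumes a trivial task, Square, and a demo class, SubmitDemo; both are hypothetical names for illustration.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.ForkJoinTask;
import java.util.concurrent.RecursiveTask;

class SubmitDemo {
    // Hypothetical task that squares its input.
    static class Square extends RecursiveTask<Integer> {
        private final int n;
        Square(int n) { this.n = n; }
        @Override protected Integer compute() { return n * n; }
    }

    static int[] demo() {
        ForkJoinPool pool = new ForkJoinPool(2);
        try {
            // invoke: blocks the caller and returns the result directly.
            int viaInvoke = pool.invoke(new Square(3));

            // submit: returns immediately with the task, which doubles as a Future.
            ForkJoinTask<Integer> handle = pool.submit(new Square(4));
            int viaSubmit = handle.join();

            // execute: returns nothing, so keep your own reference to the task.
            Square queued = new Square(5);
            pool.execute(queued);
            int viaExecute = queued.join();

            return new int[] { viaInvoke, viaSubmit, viaExecute };
        } finally {
            pool.shutdown();
        }
    }
}
```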

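Fork/Join pitfalls and caveats

There are a few pitfalls to watch for when using the Fork/Join framework.

Avoid unnecessary fork()

After splitting into two subtasks, do not call fork() on both of them.

Forking both subtasks and then calling join() twice may look more natural, but it is more efficient to call compute() directly on one of them. Calling a subtask's compute() runs it on the current worker thread (thread reuse), which is much faster than pushing the subtask onto the work queue and having a thread take it back off.

When a large task is split into more than two subtasks, prefer one of the three invokeAll variants, since they avoid unnecessary fork() calls.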
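As an illustration of the invokeAll advice, the sketch below splits an array into several chunks and hands them to invokeAll(Collection). ChunkedSumTask and the chunk size of 1,000 are assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

// Hypothetical task: sums data[lo, hi) by splitting it into fixed-size chunks.
class ChunkedSumTask extends RecursiveTask<Long> {
    private static final int CHUNK = 1_000;   // assumed chunk size
    private final long[] data;
    private final int lo, hi;

    ChunkedSumTask(long[] data, int lo, int hi) {
        this.data = data;
        this.lo = lo;
        this.hi = hi;
    }

    @Override
    protected Long compute() {
        if (hi - lo <= CHUNK) {                // one chunk or less: sum it directly
            long sum = 0;
            for (int i = lo; i < hi; i++) sum += data[i];
            return sum;
        }
        List<ChunkedSumTask> parts = new ArrayList<>();
        for (int start = lo; start < hi; start += CHUNK)
            parts.add(new ChunkedSumTask(data, start, Math.min(start + CHUNK, hi)));
        invokeAll(parts);                      // returns once every part is done
        long total = 0;
        for (ChunkedSumTask part : parts)
            total += part.join();              // parts are complete, so join just returns
        return total;
    }

    static long sum(long[] data) {
        return ForkJoinPool.commonPool().invoke(new ChunkedSumTask(data, 0, data.length));
    }
}
```

invokeAll takes care of forking and joining, so the task body never calls fork() itself.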

Mind the order of fork(), compute(), and join()

For the two tasks to run in parallel, the order of these three calls matters a great deal.

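right.fork(); // schedule the right task asynchronously

long leftAns = left.compute(); // compute the left task (the right task can run at the same time)

long rightAns = right.join(); // wait for the right result

return leftAns + rightAns;

If we instead write:

left.fork(); // schedule the left task

long leftAns = left.join(); // wait for the left result straight away

long rightAns = right.compute(); // only then compute the right task

return leftAns + rightAns;

or

long rightAns = right.compute(); // finish the right task first

left.fork(); // then schedule the left task

long leftAns = left.join(); // wait for the left result

return leftAns + rightAns;

then neither of these two versions actually runs in parallel.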
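Placed in a complete task, the recommended ordering looks like the sketch below. ArraySumTask and the THRESHOLD value are assumptions for illustration; a suitable threshold should come from measurement.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

// Hypothetical task: sums data[lo, hi) with the fork / compute / join ordering.
class ArraySumTask extends RecursiveTask<Long> {
    private static final int THRESHOLD = 10_000;  // assumed cutoff; tune by measurement
    private final long[] data;
    private final int lo, hi;

    ArraySumTask(long[] data, int lo, int hi) {
        this.data = data;
        this.lo = lo;
        this.hi = hi;
    }

    @Override
    protected Long compute() {
        if (hi - lo <= THRESHOLD) {               // below the threshold: run sequentially
            long sum = 0;
            for (int i = lo; i < hi; i++) sum += data[i];
            return sum;
        }
        int mid = (lo + hi) >>> 1;
        ArraySumTask left = new ArraySumTask(data, lo, mid);
        ArraySumTask right = new ArraySumTask(data, mid, hi);
        right.fork();                             // 1. schedule the right half asynchronously
        long leftAns = left.compute();            // 2. work on the left half in this thread
        long rightAns = right.join();             // 3. only now wait for the right half
        return leftAns + rightAns;
    }

    static long sum(long[] data) {
        return ForkJoinPool.commonPool().invoke(new ArraySumTask(data, 0, data.length));
    }
}
```

Swapping steps 2 and 3 would make the current thread wait on the right half before starting the left one, which is the mistake described above.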

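Choose an appropriate subtask granularity

Choosing the granularity at which to split (the threshold below which work runs sequentially) matters, because Fork/Join is not automatically faster than sequential execution. If tasks are too large, parallel throughput does not improve; if they are too small, scheduling overhead can outweigh the gain from parallelism. The cost of creating subtasks, forking them, scheduling threads, and merging results, along with the memory all of that consumes, has to be taken into account as well.

The rough rule of thumb in the official documentation is that a task should perform between 100 and 10000 basic computational steps. The best way to settle on a granularity is to experiment and determine the threshold from actual measurements.

Like any other Java code, Fork/Join code needs to be warmed up, that is, run several times, before the JIT (just-in-time) compiler optimizes it, so run the program a few times before measuring performance.

Avoid heavyweight splitting and merging

Many Fork/Join use cases operate on arrays or Lists, with each subtask working on one partition; parallel sort and parallel search are the classic examples. When splitting subtasks and merging results, avoid time- and space-consuming operations such as System.arraycopy wherever possible, to keep per-task overhead to a minimum.

Exception handling

Java's checked exceptions have long been criticized, so invoke(), join(), and the methods derived from them do not declare a checked ExecutionException the way get() does.

That is why ForkJoinTask internally rethrows checked exceptions as if they were runtime exceptions: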

static void rethrow(Throwable ex) {

if (ex != null)

ForkJoinTask.uncheckedThrow(ex);

}

@SuppressWarnings("unchecked")

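static <T extends Throwable> void uncheckedThrow(Throwable t) throws T {

throw (T)t; // rely on vacuous cast

}

As the article "10 Things You Didn't Know About Java" points out, the JVM does not care whether an exception is checked or unchecked. Checked exceptions exist purely for the Java compiler, which uses them to warn the programmer that an exception is not being handled.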
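The generic trick behind uncheckedThrow can be tried in isolation. The sketch below re-creates it in a hypothetical class, SneakyThrowDemo, to show that a checked exception really does escape a method that declares none.

```java
import java.io.IOException;

class SneakyThrowDemo {
    // T is erased at runtime, so the cast never fails; the compiler, however,
    // treats the thrown exception as whatever T was chosen at the call site.
    @SuppressWarnings("unchecked")
    static <T extends Throwable> void uncheckedThrow(Throwable t) throws T {
        throw (T) t;
    }

    // Declares no checked exception, yet rethrows any Throwable unchanged.
    static void rethrow(Throwable ex) {
        if (ex != null)
            SneakyThrowDemo.<RuntimeException>uncheckedThrow(ex);
    }

    // Returns true if the checked IOException reached the caller as itself.
    static boolean checkedExceptionEscapes() {
        try {
            rethrow(new IOException("disk failure"));
            return false;
        } catch (Throwable t) {   // catching IOException directly would not compile here
            return t instanceof IOException;
        }
    }
}
```

Choosing RuntimeException as the type argument is what lets rethrow omit a throws clause; nothing is wrapped or converted at runtime.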

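Even so, invoke() and join() can still throw runtime exceptions, so ForkJoinTask also provides two methods that extract neither the result nor the exception: quietlyInvoke() and quietlyJoin(). They let you deal with results and exceptions after all tasks have finished.

quietlyInvoke() and quietlyJoin() work well together with isCompletedAbnormally() and isCompletedNormally().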
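A sketch of that pattern: start several tasks, wait for each with quietlyJoin(), and only afterwards sort successes from failures. QuietJoinDemo, Doubler, and the report format are hypothetical and chosen purely for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

class QuietJoinDemo {
    // Hypothetical task: doubles its input, but fails for negative numbers.
    static class Doubler extends RecursiveTask<Integer> {
        private final int n;
        Doubler(int n) { this.n = n; }
        @Override protected Integer compute() {
            if (n < 0) throw new IllegalArgumentException("negative: " + n);
            return 2 * n;
        }
    }

    static List<String> run(int... inputs) {
        ForkJoinPool pool = new ForkJoinPool(2);
        try {
            List<Doubler> tasks = new ArrayList<>();
            for (int n : inputs) {
                Doubler t = new Doubler(n);
                pool.execute(t);                   // start every task first
                tasks.add(t);
            }
            List<String> report = new ArrayList<>();
            for (Doubler t : tasks) {
                t.quietlyJoin();                   // waits, but never throws
                if (t.isCompletedNormally())
                    report.add("ok:" + t.getRawResult());
                else
                    report.add("failed:" + t.getException().getClass().getSimpleName());
            }
            return report;
        } finally {
            pool.shutdown();
        }
    }
}
```

One failing task does not prevent the results of the others from being collected.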

Java source

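| Modifier and Type | Method and Description |
|---|---|
| `static <T> ForkJoinTask<T>` | `adapt(Callable<? extends T> callable)` Returns a new `ForkJoinTask` that performs the `call` method of the given `Callable` as its action and returns its result upon `join()`, translating any checked exceptions into `RuntimeException`. |
| `static ForkJoinTask<?>` | `adapt(Runnable runnable)` Returns a new `ForkJoinTask` that performs the `run` method of the given `Runnable` as its action and returns a null result upon `join()`. |
| `static <T> ForkJoinTask<T>` | `adapt(Runnable runnable, T result)` Returns a new `ForkJoinTask` that performs the `run` method of the given `Runnable` as its action and returns the given result upon `join()`. |
| `boolean` | `cancel(boolean mayInterruptIfRunning)` Attempts to cancel execution of this task. |
| `boolean` | `compareAndSetForkJoinTaskTag(short e, short tag)` Atomically and conditionally sets the tag value for this task. |
| `void` | `complete(V value)` Completes this task and, if not already aborted or cancelled, returns the given value as the result of subsequent invocations of `join` and related operations. |
| `void` | `completeExceptionally(Throwable ex)` Completes this task abnormally and, if not already aborted or cancelled, causes it to throw the given exception upon `join` and related operations. |
| `protected abstract boolean` | `exec()` Immediately performs the base action of this task and returns true if, upon return from this method, this task is guaranteed to have completed normally. |
| `ForkJoinTask<V>` | `fork()` Arranges to asynchronously execute this task in the pool the current task is running in, if applicable, or using `ForkJoinPool.commonPool()` if not `inForkJoinPool()`. |
| `V` | `get()` Waits if necessary for the computation to complete, and then retrieves its result. |
| `V` | `get(long timeout, TimeUnit unit)` Waits if necessary for at most the given time for the computation to complete, and then retrieves its result, if available. |
| `Throwable` | `getException()` Returns the exception thrown by the base computation, or a `CancellationException` if cancelled, or `null` if none or if the method has not yet completed. |
| `short` | `getForkJoinTaskTag()` Returns the tag for this task. |
| `static ForkJoinPool` | `getPool()` Returns the pool hosting the current task execution, or null if this task is executing outside of any ForkJoinPool. |
| `static int` | `getQueuedTaskCount()` Returns an estimate of the number of tasks that have been forked by the current worker thread but not yet executed. |
| `abstract V` | `getRawResult()` Returns the result that would be returned by `join()`, even if this task completed abnormally, or `null` if this task is not known to have been completed. |
| `static int` | `getSurplusQueuedTaskCount()` Returns an estimate of how many more locally queued tasks are held by the current worker thread than there are other worker threads that might steal them, or zero if this thread is not operating in a ForkJoinPool. |
| `static void` | `helpQuiesce()` Possibly executes tasks until the pool hosting the current task is quiescent. |
| `static boolean` | `inForkJoinPool()` Returns `true` if the current thread is a `ForkJoinWorkerThread` executing as a ForkJoinPool computation. |
| `V` | `invoke()` Commences performing this task, awaits its completion if necessary, and returns its result, or throws an (unchecked) `RuntimeException` or `Error` if the underlying computation did so. |
| `static <T extends ForkJoinTask<?>> Collection<T>` | `invokeAll(Collection<T> tasks)` Forks all tasks in the specified collection, returning when `isDone` holds for each task or an (unchecked) exception is encountered, in which case the exception is rethrown. |
| `static void` | `invokeAll(ForkJoinTask<?>... tasks)` Forks the given tasks, returning when `isDone` holds for each task or an (unchecked) exception is encountered, in which case the exception is rethrown. |
| `static void` | `invokeAll(ForkJoinTask<?> t1, ForkJoinTask<?> t2)` Forks the given tasks, returning when `isDone` holds for each task or an (unchecked) exception is encountered, in which case the exception is rethrown. |
| `boolean` | `isCancelled()` Returns `true` if this task was cancelled before it completed normally. |
| `boolean` | `isCompletedAbnormally()` Returns `true` if this task threw an exception or was cancelled. |
| `boolean` | `isCompletedNormally()` Returns `true` if this task completed without throwing an exception and was not cancelled. |
| `boolean` | `isDone()` Returns `true` if this task completed. |
| `V` | `join()` Returns the result of the computation when it is done. |
| `protected static ForkJoinTask<?>` | `peekNextLocalTask()` Returns, but does not unschedule or execute, a task queued by the current thread but not yet executed, if one is immediately available. |
| `protected static ForkJoinTask<?>` | `pollNextLocalTask()` Unschedules and returns, without executing, the next task queued by the current thread but not yet executed, if the current thread is operating in a ForkJoinPool. |
| `protected static ForkJoinTask<?>` | `pollTask()` If the current thread is operating in a ForkJoinPool, unschedules and returns, without executing, the next task queued by the current thread but not yet executed, if one is available, or if not available, a task that was forked by some other thread, if available. |
| `void` | `quietlyComplete()` Completes this task normally without setting a value. |
| `void` | `quietlyInvoke()` Commences performing this task and awaits its completion if necessary, without returning its result or throwing its exception. |
| `void` | `quietlyJoin()` Joins this task, without returning its result or throwing its exception. |
| `void` | `reinitialize()` Resets the internal bookkeeping state of this task, allowing a subsequent `fork`. |
| `short` | `setForkJoinTaskTag(short tag)` Atomically sets the tag value for this task. |
| `protected abstract void` | `setRawResult(V value)` Forces the given value to be returned as a result. |
| `boolean` | `tryUnfork()` Tries to unschedule this task for execution. |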

package java.util.concurrent;

import java.io.Serializable;

import java.util.Collection;

import java.util.List;

import java.util.RandomAccess;

import java.lang.ref.WeakReference;

import java.lang.ref.ReferenceQueue;

import java.util.concurrent.Callable;

import java.util.concurrent.CancellationException;

import java.util.concurrent.ExecutionException;

import java.util.concurrent.Future;

import java.util.concurrent.RejectedExecutionException;

import java.util.concurrent.RunnableFuture;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.TimeoutException;

import java.util.concurrent.locks.ReentrantLock;

import java.lang.reflect.Constructor;

public abstract class ForkJoinTask<V> implements Future<V>, Serializable {

volatile int status; // accessed directly by pool and workers

static final int DONE_MASK = 0xf0000000; // mask out non-completion bits

static final int NORMAL = 0xf0000000; // must be negative

static final int CANCELLED = 0xc0000000; // must be < NORMAL

static final int EXCEPTIONAL = 0x80000000; // must be < CANCELLED

static final int SIGNAL = 0x00010000; // must be >= 1 << 16

static final int SMASK = 0x0000ffff; // short bits for tags

private int setCompletion(int completion) {

for (int s;;) {

if ((s = status) < 0)

return s;

if (U.compareAndSwapInt(this, STATUS, s, s | completion)) {

if ((s >>> 16) != 0)

synchronized (this) { notifyAll(); }

return completion;

}

}

}

final int doExec() {

int s; boolean completed;

if ((s = status) >= 0) {

try {

completed = exec();

} catch (Throwable rex) {

return setExceptionalCompletion(rex);

}

if (completed)

s = setCompletion(NORMAL);

}

return s;

}

final void internalWait(long timeout) {

int s;

if ((s = status) >= 0 && // force completer to issue notify

U.compareAndSwapInt(this, STATUS, s, s | SIGNAL)) {

synchronized (this) {

if (status >= 0)

try { wait(timeout); } catch (InterruptedException ie) { }

else

notifyAll();

}

}

}

private int externalAwaitDone() {

int s = ((this instanceof CountedCompleter) ? // try helping

ForkJoinPool.common.externalHelpComplete(

(CountedCompleter)this, 0) :

ForkJoinPool.common.tryExternalUnpush(this) ? doExec() : 0);

if (s >= 0 && (s = status) >= 0) {

boolean interrupted = false;

do {

if (U.compareAndSwapInt(this, STATUS, s, s | SIGNAL)) {

synchronized (this) {

if (status >= 0) {

try {

wait(0L);

} catch (InterruptedException ie) {

interrupted = true;

}

}

else

notifyAll();

}

}

} while ((s = status) >= 0);

if (interrupted)

Thread.currentThread().interrupt();

}

return s;

}

private int externalInterruptibleAwaitDone() throws InterruptedException {

int s;

if (Thread.interrupted())

throw new InterruptedException();

if ((s = status) >= 0 &&

(s = ((this instanceof CountedCompleter) ?

ForkJoinPool.common.externalHelpComplete(

(CountedCompleter)this, 0) :

ForkJoinPool.common.tryExternalUnpush(this) ? doExec() :

0)) >= 0) {

while ((s = status) >= 0) {

if (U.compareAndSwapInt(this, STATUS, s, s | SIGNAL)) {

synchronized (this) {

if (status >= 0)

wait(0L);

else

notifyAll();

}

}

}

}

return s;

}

private int doJoin() {

int s; Thread t; ForkJoinWorkerThread wt; ForkJoinPool.WorkQueue w;

return (s = status) < 0 ? s :

((t = Thread.currentThread()) instanceof ForkJoinWorkerThread) ?

(w = (wt = (ForkJoinWorkerThread)t).workQueue).

tryUnpush(this) && (s = doExec()) < 0 ? s :

wt.pool.awaitJoin(w, this, 0L) :

externalAwaitDone();

}

private int doInvoke() {

int s; Thread t; ForkJoinWorkerThread wt;

return (s = doExec()) < 0 ? s :

((t = Thread.currentThread()) instanceof ForkJoinWorkerThread) ?

(wt = (ForkJoinWorkerThread)t).pool.

awaitJoin(wt.workQueue, this, 0L) :

externalAwaitDone();

}

// Exception table support

private static final ExceptionNode[] exceptionTable;

private static final ReentrantLock exceptionTableLock;

private static final ReferenceQueue

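| Modifier and Type | Method and Description |
|---|---|
| `boolean` | `awaitQuiescence(long timeout, TimeUnit unit)` If called by a ForkJoinTask operating in this pool, equivalent in effect to `ForkJoinTask.helpQuiesce()`. |
| `boolean` | `awaitTermination(long timeout, TimeUnit unit)` Blocks until all tasks have completed execution after a shutdown request, or the timeout occurs, or the current thread is interrupted, whichever happens first. |
| `static ForkJoinPool` | `commonPool()` Returns the common pool instance. |
| `protected int` | `drainTasksTo(Collection<? super ForkJoinTask<?>> c)` Removes all available unexecuted submitted and forked tasks from scheduling queues and adds them to the given collection, without altering their execution status. |
| `void` | `execute(ForkJoinTask<?> task)` Arranges for (asynchronous) execution of the given task. |
| `void` | `execute(Runnable task)` Executes the given command at some time in the future. |
| `int` | `getActiveThreadCount()` Returns an estimate of the number of threads that are currently stealing or executing tasks. |
| `boolean` | `getAsyncMode()` Returns `true` if this pool uses local first-in-first-out scheduling mode for forked tasks that are never joined. |
| `static int` | `getCommonPoolParallelism()` Returns the targeted parallelism level of the common pool. |
| `ForkJoinPool.ForkJoinWorkerThreadFactory` | `getFactory()` Returns the factory used for constructing new workers. |
| `int` | `getParallelism()` Returns the targeted parallelism level of this pool. |
| `int` | `getPoolSize()` Returns the number of worker threads that have started but not yet terminated. |
| `int` | `getQueuedSubmissionCount()` Returns an estimate of the number of tasks submitted to this pool that have not yet begun executing. |
| `long` | `getQueuedTaskCount()` Returns an estimate of the total number of tasks currently held in queues by worker threads (but not including tasks submitted to the pool that have not begun executing). |
| `int` | `getRunningThreadCount()` Returns an estimate of the number of worker threads that are not blocked waiting to join tasks or for other managed synchronization. |
| `long` | `getStealCount()` Returns an estimate of the total number of tasks stolen from one thread's work queue by another. |
| `Thread.UncaughtExceptionHandler` | `getUncaughtExceptionHandler()` Returns the handler for internal worker threads that terminate due to unrecoverable errors encountered while executing tasks. |
| `boolean` | `hasQueuedSubmissions()` Returns `true` if there are any tasks submitted to this pool that have not yet begun executing. |
| `<T> T` | `invoke(ForkJoinTask<T> task)` Performs the given task, returning its result upon completion. |
| `<T> List<Future<T>>` | `invokeAll(Collection<? extends Callable<T>> tasks)` Executes the given tasks, returning a list of Futures holding their status and results when all complete. |
| `boolean` | `isQuiescent()` Returns `true` if all worker threads are currently idle. |
| `boolean` | `isShutdown()` Returns `true` if this pool has been shut down. |
| `boolean` | `isTerminated()` Returns `true` if all tasks have completed following shut down. |
| `boolean` | `isTerminating()` Returns `true` if the process of termination has commenced but not yet completed. |
| `static void` | `managedBlock(ForkJoinPool.ManagedBlocker blocker)` Blocks in accord with the given blocker. |
| `protected <T> RunnableFuture<T>` | `newTaskFor(Callable<T> callable)` Returns a `RunnableFuture` for the given callable task. |
| `protected <T> RunnableFuture<T>` | `newTaskFor(Runnable runnable, T value)` Returns a `RunnableFuture` for the given runnable and default value. |
| `protected ForkJoinTask<?>` | `pollSubmission()` Removes and returns the next unexecuted submission if one is available. |
| `void` | `shutdown()` Possibly initiates an orderly shutdown in which previously submitted tasks are executed, but no new tasks will be accepted. |
| `List<Runnable>` | `shutdownNow()` Possibly attempts to cancel and/or stop all tasks, and reject all subsequently submitted tasks. |
| `<T> ForkJoinTask<T>` | `submit(Callable<T> task)` Submits a value-returning task for execution and returns a Future representing the pending results of the task. |
| `<T> ForkJoinTask<T>` | `submit(ForkJoinTask<T> task)` Submits a ForkJoinTask for execution. |
| `ForkJoinTask<?>` | `submit(Runnable task)` Submits a Runnable task for execution and returns a Future representing that task. |
| `<T> ForkJoinTask<T>` | `submit(Runnable task, T result)` Submits a Runnable task for execution and returns a Future representing that task. |
| `String` | `toString()` Returns a string identifying this pool, as well as its state, including indications of run state, parallelism level, and worker and task counts. |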

package java.util.concurrent;

import java.lang.Thread.UncaughtExceptionHandler;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.Collection;

import java.util.Collections;

import java.util.List;

import java.util.concurrent.AbstractExecutorService;

import java.util.concurrent.Callable;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Future;

import java.util.concurrent.RejectedExecutionException;

import java.util.concurrent.RunnableFuture;

import java.util.concurrent.ThreadLocalRandom;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.atomic.AtomicLong;

import java.security.AccessControlContext;

import java.security.ProtectionDomain;

import java.security.Permissions;

@sun.misc.Contended

public class ForkJoinPool extends AbstractExecutorService {

private static void checkPermission() {

SecurityManager security = System.getSecurityManager();

if (security != null)

security.checkPermission(modifyThreadPermission);

}

// Nested classes

public static interface ForkJoinWorkerThreadFactory {

public ForkJoinWorkerThread newThread(ForkJoinPool pool);

}

static final class DefaultForkJoinWorkerThreadFactory

implements ForkJoinWorkerThreadFactory {

public final ForkJoinWorkerThread newThread(ForkJoinPool pool) {

return new ForkJoinWorkerThread(pool);

}

}

static final class EmptyTask extends ForkJoinTask<Void> {

private static final long serialVersionUID = -7721805057305804111L;

EmptyTask() { status = ForkJoinTask.NORMAL; } // force done

public final Void getRawResult() { return null; }

public final void setRawResult(Void x) {}

public final boolean exec() { return true; }

}

// Constants shared across ForkJoinPool and WorkQueue

// Bounds

static final int SMASK = 0xffff; // short bits == max index

static final int MAX_CAP = 0x7fff; // max #workers - 1

static final int EVENMASK = 0xfffe; // even short bits

static final int SQMASK = 0x007e; // max 64 (even) slots

// Masks and units for WorkQueue.scanState and ctl sp subfield

static final int SCANNING = 1; // false when running tasks

static final int INACTIVE = 1 << 31; // must be negative

static final int SS_SEQ = 1 << 16; // version count

// Mode bits for ForkJoinPool.config and WorkQueue.config

static final int MODE_MASK = 0xffff << 16; // top half of int

static final int LIFO_QUEUE = 0;

static final int FIFO_QUEUE = 1 << 16;

static final int SHARED_QUEUE = 1 << 31; // must be negative

@sun.misc.Contended

static final class WorkQueue {

static final int INITIAL_QUEUE_CAPACITY = 1 << 13;

static final int MAXIMUM_QUEUE_CAPACITY = 1 << 26; // 64M

// Instance fields

volatile int scanState; // versioned, <0: inactive; odd:scanning

int stackPred; // pool stack (ctl) predecessor

int nsteals; // number of steals

int hint; // randomization and stealer index hint

int config; // pool index and mode

volatile int qlock; // 1: locked, < 0: terminate; else 0

volatile int base; // index of next slot for poll

int top; // index of next slot for push

ForkJoinTask[] array; // the elements (initially unallocated)

final ForkJoinPool pool; // the containing pool (may be null)

final ForkJoinWorkerThread owner; // owning thread or null if shared

volatile Thread parker; // == owner during call to park; else null

volatile ForkJoinTask currentJoin; // task being joined in awaitJoin

volatile ForkJoinTask currentSteal; // mainly used by helpStealer

WorkQueue(ForkJoinPool pool, ForkJoinWorkerThread owner) {

this.pool = pool;

this.owner = owner;

// Place indices in the center of array (that is not yet allocated)

base = top = INITIAL_QUEUE_CAPACITY >>> 1;

}

final int getPoolIndex() {

return (config & 0xffff) >>> 1; // ignore odd/even tag bit

}

final int queueSize() {

int n = base - top; // non-owner callers must read base first

return (n >= 0) ? 0 : -n; // ignore transient negative

}

final boolean isEmpty() {

ForkJoinTask[] a; int n, m, s;

return ((n = base - (s = top)) >= 0 ||

(n == -1 && // possibly one task

((a = array) == null || (m = a.length - 1) < 0 ||

U.getObject

(a, (long)((m & (s - 1)) << ASHIFT) + ABASE) == null)));

}

final void push(ForkJoinTask task) {

ForkJoinTask[] a; ForkJoinPool p;

int b = base, s = top, n;

if ((a = array) != null) { // ignore if queue removed

int m = a.length - 1; // fenced write for task visibility

U.putOrderedObject(a, ((m & s) << ASHIFT) + ABASE, task);

U.putOrderedInt(this, QTOP, s + 1);

if ((n = s - b) <= 1) {

if ((p = pool) != null)

p.signalWork(p.workQueues, this);

}

else if (n >= m)

growArray();

}

}

final ForkJoinTask[] growArray() {

ForkJoinTask[] oldA = array;

int size = oldA != null ? oldA.length << 1 : INITIAL_QUEUE_CAPACITY;

if (size > MAXIMUM_QUEUE_CAPACITY)

throw new RejectedExecutionException("Queue capacity exceeded");

int oldMask, t, b;

ForkJoinTask[] a = array = new ForkJoinTask[size];

if (oldA != null && (oldMask = oldA.length - 1) >= 0 &&

(t = top) - (b = base) > 0) {

int mask = size - 1;

do { // emulate poll from old array, push to new array

ForkJoinTask x;

int oldj = ((b & oldMask) << ASHIFT) + ABASE;

int j = ((b & mask) << ASHIFT) + ABASE;

x = (ForkJoinTask)U.getObjectVolatile(oldA, oldj);

if (x != null &&

U.compareAndSwapObject(oldA, oldj, x, null))

U.putObjectVolatile(a, j, x);

} while (++b != t);

}

return a;

}

/**

* Takes next task, if one exists, in LIFO order. Call only

* by owner in unshared queues.

*/

final ForkJoinTask pop() {

ForkJoinTask[] a; ForkJoinTask t; int m;

if ((a = array) != null && (m = a.length - 1) >= 0) {

for (int s; (s = top - 1) - base >= 0;) {

long j = ((m & s) << ASHIFT) + ABASE;

if ((t = (ForkJoinTask)U.getObject(a, j)) == null)

break;

if (U.compareAndSwapObject(a, j, t, null)) {

U.putOrderedInt(this, QTOP, s);

return t;

}

}

}

return null;

}

final ForkJoinTask pollAt(int b) {

ForkJoinTask t; ForkJoinTask[] a;

if ((a = array) != null) {

int j = (((a.length - 1) & b) << ASHIFT) + ABASE;

if ((t = (ForkJoinTask)U.getObjectVolatile(a, j)) != null &&

base == b && U.compareAndSwapObject(a, j, t, null)) {

base = b + 1;

return t;

}

}

return null;

}

final ForkJoinTask poll() {

ForkJoinTask[] a; int b; ForkJoinTask t;

while ((b = base) - top < 0 && (a = array) != null) {

int j = (((a.length - 1) & b) << ASHIFT) + ABASE;

t = (ForkJoinTask)U.getObjectVolatile(a, j);

if (base == b) {

if (t != null) {

if (U.compareAndSwapObject(a, j, t, null)) {

base = b + 1;

return t;

}

}

else if (b + 1 == top) // now empty

break;

}

}

return null;

}

final ForkJoinTask nextLocalTask() {

return (config & FIFO_QUEUE) == 0 ? pop() : poll();

}

final ForkJoinTask peek() {

ForkJoinTask[] a = array; int m;

if (a == null || (m = a.length - 1) < 0)

return null;

int i = (config & FIFO_QUEUE) == 0 ? top - 1 : base;

int j = ((i & m) << ASHIFT) + ABASE;

return (ForkJoinTask)U.getObjectVolatile(a, j);

}

final boolean tryUnpush(ForkJoinTask t) {

ForkJoinTask[] a; int s;

if ((a = array) != null && (s = top) != base &&

U.compareAndSwapObject

(a, (((a.length - 1) & --s) << ASHIFT) + ABASE, t, null)) {

U.putOrderedInt(this, QTOP, s);

return true;

}

return false;

}

final void cancelAll() {

ForkJoinTask t;

if ((t = currentJoin) != null) {

currentJoin = null;

ForkJoinTask.cancelIgnoringExceptions(t);

}

if ((t = currentSteal) != null) {

currentSteal = null;

ForkJoinTask.cancelIgnoringExceptions(t);

}

while ((t = poll()) != null)

ForkJoinTask.cancelIgnoringExceptions(t);

}

// Specialized execution methods

final void pollAndExecAll() {

for (ForkJoinTask t; (t = poll()) != null;)

t.doExec();

}

final void execLocalTasks() {

int b = base, m, s;

ForkJoinTask[] a = array;

if (b - (s = top - 1) <= 0 && a != null &&

(m = a.length - 1) >= 0) {

if ((config & FIFO_QUEUE) == 0) {

for (ForkJoinTask t;;) {

if ((t = (ForkJoinTask)U.getAndSetObject

(a, ((m & s) << ASHIFT) + ABASE, null)) == null)

break;

U.putOrderedInt(this, QTOP, s);

t.doExec();

if (base - (s = top - 1) > 0)

break;

}

}

else

pollAndExecAll();

}

}

final void runTask(ForkJoinTask task) {

if (task != null) {

scanState &= ~SCANNING; // mark as busy

(currentSteal = task).doExec();

U.putOrderedObject(this, QCURRENTSTEAL, null); // release for GC

execLocalTasks();

ForkJoinWorkerThread thread = owner;

if (++nsteals < 0) // collect on overflow

transferStealCount(pool);

scanState |= SCANNING;

if (thread != null)

thread.afterTopLevelExec();

}

}

final void transferStealCount(ForkJoinPool p) {

AtomicLong sc;

if (p != null && (sc = p.stealCounter) != null) {

int s = nsteals;

nsteals = 0; // if negative, correct for overflow

sc.getAndAdd((long)(s < 0 ? Integer.MAX_VALUE : s));

}

}

final boolean tryRemoveAndExec(ForkJoinTask task) {

ForkJoinTask[] a; int m, s, b, n;

if ((a = array) != null && (m = a.length - 1) >= 0 &&

task != null) {

while ((n = (s = top) - (b = base)) > 0) {

for (ForkJoinTask t;;) { // traverse from s to b

long j = ((--s & m) << ASHIFT) + ABASE;

if ((t = (ForkJoinTask)U.getObject(a, j)) == null)

return s + 1 == top; // shorter than expected

else if (t == task) {

boolean removed = false;

if (s + 1 == top) { // pop

if (U.compareAndSwapObject(a, j, task, null)) {

U.putOrderedInt(this, QTOP, s);

removed = true;

}

}

else if (base == b) // replace with proxy

removed = U.compareAndSwapObject(

a, j, task, new EmptyTask());

if (removed)

task.doExec();

break;

}

else if (t.status < 0 && s + 1 == top) {

if (U.compareAndSwapObject(a, j, t, null))

U.putOrderedInt(this, QTOP, s);

break; // was cancelled

}

if (--n == 0)

return false;

}

if (task.status < 0)

return false;

}

}

return true;

}

final CountedCompleter popCC(CountedCompleter task, int mode) {

int s; ForkJoinTask[] a; Object o;

if (base - (s = top) < 0 && (a = array) != null) {

long j = (((a.length - 1) & (s - 1)) << ASHIFT) + ABASE;

if ((o = U.getObjectVolatile(a, j)) != null &&

(o instanceof CountedCompleter)) {

CountedCompleter t = (CountedCompleter)o;

for (CountedCompleter r = t;;) {

if (r == task) {

if (mode < 0) { // must lock

if (U.compareAndSwapInt(this, QLOCK, 0, 1)) {

if (top == s && array == a &&

U.compareAndSwapObject(a, j, t, null)) {

U.putOrderedInt(this, QTOP, s - 1);

U.putOrderedInt(this, QLOCK, 0);

return t;

}

U.compareAndSwapInt(this, QLOCK, 1, 0);

}

}

else if (U.compareAndSwapObject(a, j, t, null)) {

U.putOrderedInt(this, QTOP, s - 1);

return t;

}

break;

}

else if ((r = r.completer) == null) // try parent

break;

}

}

}

return null;

}

final int pollAndExecCC(CountedCompleter task) {

int b, h; ForkJoinTask[] a; Object o;

if ((b = base) - top >= 0 || (a = array) == null)

h = b | Integer.MIN_VALUE; // to sense movement on re-poll

else {

long j = (((a.length - 1) & b) << ASHIFT) + ABASE;

if ((o = U.getObjectVolatile(a, j)) == null)

h = 2; // retryable

else if (!(o instanceof CountedCompleter))

h = -1; // unmatchable

else {

CountedCompleter t = (CountedCompleter)o;

for (CountedCompleter r = t;;) {

if (r == task) {

if (base == b &&

U.compareAndSwapObject(a, j, t, null)) {

base = b + 1;

t.doExec();

h = 1; // success

}

else

h = 2; // lost CAS

break;

}

else if ((r = r.completer) == null) {

h = -1; // unmatched

break;

}

}

}

}

return h;

}

/**

* Returns true if owned and not known to be blocked.

*/

final boolean isApparentlyUnblocked() {

Thread wt; Thread.State s;

return (scanState >= 0 &&

(wt = owner) != null &&

(s = wt.getState()) != Thread.State.BLOCKED &&

s != Thread.State.WAITING &&

s != Thread.State.TIMED_WAITING);

}

// Unsafe mechanics. Note that some are (and must be) the same as in FJP

private static final sun.misc.Unsafe U;

private static final int ABASE;

private static final int ASHIFT;

private static final long QTOP;

private static final long QLOCK;

private static final long QCURRENTSTEAL;

static {

try {

U = sun.misc.Unsafe.getUnsafe();

Class<?> wk = WorkQueue.class;

Class<?> ak = ForkJoinTask[].class;

QTOP = U.objectFieldOffset

(wk.getDeclaredField("top"));

QLOCK = U.objectFieldOffset

(wk.getDeclaredField("qlock"));

QCURRENTSTEAL = U.objectFieldOffset

(wk.getDeclaredField("currentSteal"));

ABASE = U.arrayBaseOffset(ak);

int scale = U.arrayIndexScale(ak);

if ((scale & (scale - 1)) != 0)

throw new Error("data type scale not a power of two");

ASHIFT = 31 - Integer.numberOfLeadingZeros(scale);

} catch (Exception e) {

throw new Error(e);

}

}

}

// static fields (initialized in static initializer below)

public static final ForkJoinWorkerThreadFactory

defaultForkJoinWorkerThreadFactory;

private static final RuntimePermission modifyThreadPermission;

static final ForkJoinPool common;

static final int commonParallelism;

private static int commonMaxSpares;

private static int poolNumberSequence;

private static final synchronized int nextPoolId() {

return ++poolNumberSequence;

}

// static configuration constants

private static final long IDLE_TIMEOUT = 2000L * 1000L * 1000L; // 2sec

private static final long TIMEOUT_SLOP = 20L * 1000L * 1000L; // 20ms

private static final int DEFAULT_COMMON_MAX_SPARES = 256;

private static final int SPINS = 0;

private static final int SEED_INCREMENT = 0x9e3779b9;

// Lower and upper word masks

private static final long SP_MASK = 0xffffffffL;

private static final long UC_MASK = ~SP_MASK;

// Active counts

private static final int AC_SHIFT = 48;

private static final long AC_UNIT = 0x0001L << AC_SHIFT;

private static final long AC_MASK = 0xffffL << AC_SHIFT;

// Total counts

private static final int TC_SHIFT = 32;

private static final long TC_UNIT = 0x0001L << TC_SHIFT;

private static final long TC_MASK = 0xffffL << TC_SHIFT;

private static final long ADD_WORKER = 0x0001L << (TC_SHIFT + 15); // sign

// runState bits: SHUTDOWN must be negative, others arbitrary powers of two

private static final int RSLOCK = 1;

private static final int RSIGNAL = 1 << 1;

private static final int STARTED = 1 << 2;

private static final int STOP = 1 << 29;

private static final int TERMINATED = 1 << 30;

private static final int SHUTDOWN = 1 << 31;

// Instance fields

volatile long ctl; // main pool control

volatile int runState; // lockable status

final int config; // parallelism, mode

int indexSeed; // to generate worker index

volatile WorkQueue[] workQueues; // main registry

final ForkJoinWorkerThreadFactory factory;

final UncaughtExceptionHandler ueh; // per-worker UEH

final String workerNamePrefix; // to create worker name string

volatile AtomicLong stealCounter; // also used as sync monitor

// Acquires the runState lock (RSLOCK bit); returns the current, locked runState.

private int lockRunState() {

int rs;

return ((((rs = runState) & RSLOCK) != 0 ||

!U.compareAndSwapInt(this, RUNSTATE, rs, rs |= RSLOCK)) ?

awaitRunStateLock() : rs);

}

private int awaitRunStateLock() {

Object lock;

boolean wasInterrupted = false;

for (int spins = SPINS, r = 0, rs, ns;;) {

if (((rs = runState) & RSLOCK) == 0) {

if (U.compareAndSwapInt(this, RUNSTATE, rs, ns = rs | RSLOCK)) {

if (wasInterrupted) {

try {

Thread.currentThread().interrupt();

} catch (SecurityException ignore) {

}

}

return ns;

}

}

else if (r == 0)

r = ThreadLocalRandom.nextSecondarySeed();

else if (spins > 0) {

r ^= r << 6; r ^= r >>> 21; r ^= r << 7; // xorshift

if (r >= 0)

--spins;

}

else if ((rs & STARTED) == 0 || (lock = stealCounter) == null)

Thread.yield(); // initialization race

else if (U.compareAndSwapInt(this, RUNSTATE, rs, rs | RSIGNAL)) {

synchronized (lock) {

if ((runState & RSIGNAL) != 0) {

try {

lock.wait();

} catch (InterruptedException ie) {

if (!(Thread.currentThread() instanceof

ForkJoinWorkerThread))

wasInterrupted = true;

}

}

else

lock.notifyAll();

}

}

}

}

// Releases the lock and sets runState to newRunState; wakes waiters if RSIGNAL was set.

private void unlockRunState(int oldRunState, int newRunState) {

if (!U.compareAndSwapInt(this, RUNSTATE, oldRunState, newRunState)) {

Object lock = stealCounter;

runState = newRunState; // clears RSIGNAL bit

if (lock != null)

synchronized (lock) { lock.notifyAll(); }

}

}
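
The lockRunState/unlockRunState pair above packs a lock bit and status bits into one int and updates them with CAS. The following is a minimal sketch of that idea only, assuming a spin-and-yield loop in place of the wait/notify fallback in awaitRunStateLock. The class RunStateLockSketch and its members are hypothetical names for illustration and are not part of the JDK.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical illustration of a lock bit stored inside a status word. */
final class RunStateLockSketch {
    static final int LOCK = 1;          // plays the role of RSLOCK
    static final int STARTED = 1 << 2;  // an example status bit

    private final AtomicInteger state = new AtomicInteger();
    private int counter;                // guarded by the LOCK bit

    /** Spins until the LOCK bit is acquired; returns the locked state. */
    int lock() {
        for (;;) {
            int s = state.get();
            if ((s & LOCK) == 0 && state.compareAndSet(s, s | LOCK))
                return s | LOCK;
            Thread.yield();             // simplification: no wait/notify fallback
        }
    }

    /** Clears the LOCK bit and publishes the other bits in a single write. */
    void unlock(int newState) {
        state.set(newState & ~LOCK);
    }

    int state() {
        return state.get();
    }

    /** Increments the counter from several threads under the bit lock. */
    static int contendedCount(int threads, int perThread) {
        RunStateLockSketch l = new RunStateLockSketch();
        Thread[] ts = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            ts[i] = new Thread(() -> {
                for (int k = 0; k < perThread; k++) {
                    int s = l.lock();
                    l.counter++;
                    l.unlock(s | STARTED);  // status change and unlock together
                }
            });
            ts[i].start();
        }
        try {
            for (Thread t : ts)
                t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return l.counter;
    }

    public static void main(String[] args) {
        System.out.println(contendedCount(4, 10_000));
    }
}
```

As in unlockRunState(rs, (rs & ~RSLOCK) | ns), the unlock step here changes the status bits and releases the lock with one store, so no reader sees a half-updated state.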

// Creating, registering and deregistering workers

// Tries to construct and start one worker; calls deregisterWorker on any failure.

private boolean createWorker() {

ForkJoinWorkerThreadFactory fac = factory;

Throwable ex = null;

ForkJoinWorkerThread wt = null;

try {

if (fac != null && (wt = fac.newThread(this)) != null) {

wt.start();

return true;

}

} catch (Throwable rex) {

ex = rex;

}

deregisterWorker(wt, ex);

return false;

}

// Tries to add one worker, incrementing the ctl counts before creating the thread.

private void tryAddWorker(long c) {

boolean add = false;

do {

long nc = ((AC_MASK & (c + AC_UNIT)) |

(TC_MASK & (c + TC_UNIT)));

if (ctl == c) {

int rs, stop; // check if terminating

if ((stop = (rs = lockRunState()) & STOP) == 0)

add = U.compareAndSwapLong(this, CTL, c, nc);

unlockRunState(rs, rs & ~RSLOCK);

if (stop != 0)

break;

if (add) {

createWorker();

break;

}

}

} while (((c = ctl) & ADD_WORKER) != 0L && (int)c == 0);

}

final WorkQueue registerWorker(ForkJoinWorkerThread wt) {

UncaughtExceptionHandler handler;

wt.setDaemon(true); // configure thread

if ((handler = ueh) != null)

wt.setUncaughtExceptionHandler(handler);

WorkQueue w = new WorkQueue(this, wt);

int i = 0; // assign a pool index

int mode = config & MODE_MASK;

int rs = lockRunState();

try {

WorkQueue[] ws; int n; // skip if no array

if ((ws = workQueues) != null && (n = ws.length) > 0) {

int s = indexSeed += SEED_INCREMENT; // unlikely to collide

int m = n - 1;

i = ((s << 1) | 1) & m; // odd-numbered indices

if (ws[i] != null) { // collision

int probes = 0; // step by approx half n

int step = (n <= 4) ? 2 : ((n >>> 1) & EVENMASK) + 2;

while (ws[i = (i + step) & m] != null) {

if (++probes >= n) {

workQueues = ws = Arrays.copyOf(ws, n <<= 1);

m = n - 1;

probes = 0;

}

}

}

w.hint = s; // use as random seed

w.config = i | mode;

w.scanState = i; // publication fence

ws[i] = w;

}

} finally {

unlockRunState(rs, rs & ~RSLOCK);

}

wt.setName(workerNamePrefix.concat(Integer.toString(i >>> 1)));

return w;

}
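
registerWorker above places worker queues at odd indices of workQueues, while the external submission paths further down index with `r & m & SQMASK`, which yields even indices. The sketch below reproduces only that index arithmetic. QueueIndexSketch is a hypothetical class; the value of SQMASK is an assumption taken from the JDK 8 source, since the constant is defined outside this excerpt.

```java
/** Hypothetical illustration of how workQueues slots are chosen. */
final class QueueIndexSketch {
    static final int SEED_INCREMENT = 0x9e3779b9; // as in the listing
    static final int SQMASK = 0x007e;             // assumed JDK 8 value, not shown in this excerpt

    /** First slot tried for a worker: always odd (n must be a power of two >= 2). */
    static int workerIndex(int seed, int n) {
        return ((seed << 1) | 1) & (n - 1);
    }

    /** Slot used for an external submitter's probe value: always even. */
    static int submissionIndex(int probe, int n) {
        return probe & (n - 1) & SQMASK;
    }

    public static void main(String[] args) {
        int n = 8, seed = 0;
        for (int i = 0; i < 4; i++) {
            seed += SEED_INCREMENT;
            System.out.println("worker slot " + workerIndex(seed, n)
                + ", submission slot " + submissionIndex(seed, n));
        }
    }
}
```

Keeping the two kinds of queue on slots of different parity is what lets methods such as getQueuedTaskCount and getQueuedSubmissionCount iterate with a step of 2 from index 1 or index 0.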

final void deregisterWorker(ForkJoinWorkerThread wt, Throwable ex) {

WorkQueue w = null;

if (wt != null && (w = wt.workQueue) != null) {

WorkQueue[] ws; // remove index from array

int idx = w.config & SMASK;

int rs = lockRunState();

if ((ws = workQueues) != null && ws.length > idx && ws[idx] == w)

ws[idx] = null;

unlockRunState(rs, rs & ~RSLOCK);

}

long c; // decrement counts

do {} while (!U.compareAndSwapLong

(this, CTL, c = ctl, ((AC_MASK & (c - AC_UNIT)) |

(TC_MASK & (c - TC_UNIT)) |

(SP_MASK & c))));

if (w != null) {

w.qlock = -1; // ensure set

w.transferStealCount(this);

w.cancelAll(); // cancel remaining tasks

}

for (;;) { // possibly replace

WorkQueue[] ws; int m, sp;

if (tryTerminate(false, false) || w == null || w.array == null ||

(runState & STOP) != 0 || (ws = workQueues) == null ||

(m = ws.length - 1) < 0) // already terminating

break;

if ((sp = (int)(c = ctl)) != 0) { // wake up replacement

if (tryRelease(c, ws[sp & m], AC_UNIT))

break;

}

else if (ex != null && (c & ADD_WORKER) != 0L) {

tryAddWorker(c); // create replacement

break;

}

else // don't need replacement

break;

}

if (ex == null) // help clean on way out

ForkJoinTask.helpExpungeStaleExceptions();

else // rethrow

ForkJoinTask.rethrow(ex);

}

// Signalling

// Tries to create or wake up a worker if too few are active.

final void signalWork(WorkQueue[] ws, WorkQueue q) {

long c; int sp, i; WorkQueue v; Thread p;

while ((c = ctl) < 0L) { // too few active

if ((sp = (int)c) == 0) { // no idle workers

if ((c & ADD_WORKER) != 0L) // too few workers

tryAddWorker(c);

break;

}

if (ws == null) // unstarted/terminated

break;

if (ws.length <= (i = sp & SMASK)) // terminated

break;

if ((v = ws[i]) == null) // terminating

break;

int vs = (sp + SS_SEQ) & ~INACTIVE; // next scanState

int d = sp - v.scanState; // screen CAS

long nc = (UC_MASK & (c + AC_UNIT)) | (SP_MASK & v.stackPred);

if (d == 0 && U.compareAndSwapLong(this, CTL, c, nc)) {

v.scanState = vs; // activate v

if ((p = v.parker) != null)

U.unpark(p);

break;

}

if (q != null && q.base == q.top) // no more work

break;

}

}

private boolean tryRelease(long c, WorkQueue v, long inc) {

int sp = (int)c, vs = (sp + SS_SEQ) & ~INACTIVE; Thread p;

if (v != null && v.scanState == sp) { // v is at top of stack

long nc = (UC_MASK & (c + inc)) | (SP_MASK & v.stackPred);

if (U.compareAndSwapLong(this, CTL, c, nc)) {

v.scanState = vs;

if ((p = v.parker) != null)

U.unpark(p);

return true;

}

}

return false;

}

// Scanning for tasks

// Top-level loop of a worker thread: scan for a task, run it, or wait for work.

final void runWorker(WorkQueue w) {

w.growArray(); // allocate queue

int seed = w.hint; // initially holds randomization hint

int r = (seed == 0) ? 1 : seed; // avoid 0 for xorShift

for (ForkJoinTask t;;) {

if ((t = scan(w, r)) != null)

w.runTask(t);

else if (!awaitWork(w, r))

break;

r ^= r << 13; r ^= r >>> 17; r ^= r << 5; // xorshift

}

}
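
runWorker advances its random value r with three shift-and-xor steps (13, 17, 5), a 32-bit xorshift generator, and avoids a zero seed because zero is a fixed point of that update. A minimal sketch of the same step, with the hypothetical class name XorShiftSketch:

```java
/** Hypothetical illustration of the xorshift step used in runWorker. */
final class XorShiftSketch {
    /** One xorshift step with the same shift constants as runWorker. */
    static int next(int r) {
        r ^= r << 13;
        r ^= r >>> 17;
        r ^= r << 5;
        return r;
    }

    /** Mirrors "(seed == 0) ? 1 : seed": zero would stay zero forever. */
    static int seed(int hint) {
        return (hint == 0) ? 1 : hint;
    }

    public static void main(String[] args) {
        int r = seed(0);
        for (int i = 0; i < 5; i++) {
            r = next(r);
            System.out.println(r & 7); // low bits pick a starting slot in an 8-slot array
        }
    }
}
```

scan then uses `r & m` as the queue index to start from, so each worker begins its search at a different, cheaply randomized position.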

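// Scans the queues for a task to steal, starting from a random index; returns null if none is found.

private ForkJoinTask<?> scan(WorkQueue w, int r) {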

WorkQueue[] ws; int m;

if ((ws = workQueues) != null && (m = ws.length - 1) > 0 && w != null) {

int ss = w.scanState; // initially non-negative

for (int origin = r & m, k = origin, oldSum = 0, checkSum = 0;;) {

WorkQueue q; ForkJoinTask[] a; ForkJoinTask t;

int b, n; long c;

if ((q = ws[k]) != null) {

if ((n = (b = q.base) - q.top) < 0 &&

(a = q.array) != null) { // non-empty

long i = (((a.length - 1) & b) << ASHIFT) + ABASE;

if ((t = ((ForkJoinTask)

U.getObjectVolatile(a, i))) != null &&

q.base == b) {

if (ss >= 0) {

if (U.compareAndSwapObject(a, i, t, null)) {

q.base = b + 1;

if (n < -1) // signal others

signalWork(ws, q);

return t;

}

}

else if (oldSum == 0 && // try to activate

w.scanState < 0)

tryRelease(c = ctl, ws[m & (int)c], AC_UNIT);

}

if (ss < 0) // refresh

ss = w.scanState;

r ^= r << 1; r ^= r >>> 3; r ^= r << 10;

origin = k = r & m; // move and rescan

oldSum = checkSum = 0;

continue;

}

checkSum += b;

}

if ((k = (k + 1) & m) == origin) { // continue until stable

if ((ss >= 0 || (ss == (ss = w.scanState))) &&

oldSum == (oldSum = checkSum)) {

if (ss < 0 || w.qlock < 0) // already inactive

break;

int ns = ss | INACTIVE; // try to inactivate

long nc = ((SP_MASK & ns) |

(UC_MASK & ((c = ctl) - AC_UNIT)));

w.stackPred = (int)c; // hold prev stack top

U.putInt(w, QSCANSTATE, ns);

if (U.compareAndSwapLong(this, CTL, c, nc))

ss = ns;

else

w.scanState = ss; // back out

}

checkSum = 0;

}

}

}

return null;

}

// Possibly blocks worker w until work arrives; returns false if the worker should terminate.

private boolean awaitWork(WorkQueue w, int r) {

if (w == null || w.qlock < 0) // w is terminating

return false;

for (int pred = w.stackPred, spins = SPINS, ss;;) {

if ((ss = w.scanState) >= 0)

break;

else if (spins > 0) {

r ^= r << 6; r ^= r >>> 21; r ^= r << 7;

if (r >= 0 && --spins == 0) { // randomize spins

WorkQueue v; WorkQueue[] ws; int s, j; AtomicLong sc;

if (pred != 0 && (ws = workQueues) != null &&

(j = pred & SMASK) < ws.length &&

(v = ws[j]) != null && // see if pred parking

(v.parker == null || v.scanState >= 0))

spins = SPINS; // continue spinning

}

}

else if (w.qlock < 0) // recheck after spins

return false;

else if (!Thread.interrupted()) {

long c, prevctl, parkTime, deadline;

int ac = (int)((c = ctl) >> AC_SHIFT) + (config & SMASK);

if ((ac <= 0 && tryTerminate(false, false)) ||

(runState & STOP) != 0) // pool terminating

return false;

if (ac <= 0 && ss == (int)c) { // is last waiter

prevctl = (UC_MASK & (c + AC_UNIT)) | (SP_MASK & pred);

int t = (short)(c >>> TC_SHIFT); // shrink excess spares

if (t > 2 && U.compareAndSwapLong(this, CTL, c, prevctl))

return false; // else use timed wait

parkTime = IDLE_TIMEOUT * ((t >= 0) ? 1 : 1 - t);

deadline = System.nanoTime() + parkTime - TIMEOUT_SLOP;

}

else

prevctl = parkTime = deadline = 0L;

Thread wt = Thread.currentThread();

U.putObject(wt, PARKBLOCKER, this); // emulate LockSupport

w.parker = wt;

if (w.scanState < 0 && ctl == c) // recheck before park

U.park(false, parkTime);

U.putOrderedObject(w, QPARKER, null);

U.putObject(wt, PARKBLOCKER, null);

if (w.scanState >= 0)

break;

if (parkTime != 0L && ctl == c &&

deadline - System.nanoTime() <= 0L &&

U.compareAndSwapLong(this, CTL, c, prevctl))

return false; // shrink pool

}

}

return true;

}

// Joining tasks

final int helpComplete(WorkQueue w, CountedCompleter task,

int maxTasks) {

WorkQueue[] ws; int s = 0, m;

if ((ws = workQueues) != null && (m = ws.length - 1) >= 0 &&

task != null && w != null) {

int mode = w.config; // for popCC

int r = w.hint ^ w.top; // arbitrary seed for origin

int origin = r & m; // first queue to scan

int h = 1; // 1:ran, >1:contended, <0:hash

for (int k = origin, oldSum = 0, checkSum = 0;;) {

CountedCompleter p; WorkQueue q;

if ((s = task.status) < 0)

break;

if (h == 1 && (p = w.popCC(task, mode)) != null) {

p.doExec(); // run local task

if (maxTasks != 0 && --maxTasks == 0)

break;

origin = k; // reset

oldSum = checkSum = 0;

}

else { // poll other queues

if ((q = ws[k]) == null)

h = 0;

else if ((h = q.pollAndExecCC(task)) < 0)

checkSum += h;

if (h > 0) {

if (h == 1 && maxTasks != 0 && --maxTasks == 0)

break;

r ^= r << 13; r ^= r >>> 17; r ^= r << 5; // xorshift

origin = k = r & m; // move and restart

oldSum = checkSum = 0;

}

else if ((k = (k + 1) & m) == origin) {

if (oldSum == (oldSum = checkSum))

break;

checkSum = 0;

}

}

}

}

return s;

}

private void helpStealer(WorkQueue w, ForkJoinTask task) {

WorkQueue[] ws = workQueues;

int oldSum = 0, checkSum, m;

if (ws != null && (m = ws.length - 1) >= 0 && w != null &&

task != null) {

do { // restart point

checkSum = 0; // for stability check

ForkJoinTask subtask;

WorkQueue j = w, v; // v is subtask stealer

descent: for (subtask = task; subtask.status >= 0; ) {

for (int h = j.hint | 1, k = 0, i; ; k += 2) {

if (k > m) // can't find stealer

break descent;

if ((v = ws[i = (h + k) & m]) != null) {

if (v.currentSteal == subtask) {

j.hint = i;

break;

}

checkSum += v.base;

}

}

for (;;) { // help v or descend

ForkJoinTask[] a; int b;

checkSum += (b = v.base);

ForkJoinTask next = v.currentJoin;

if (subtask.status < 0 || j.currentJoin != subtask ||

v.currentSteal != subtask) // stale

break descent;

if (b - v.top >= 0 || (a = v.array) == null) {

if ((subtask = next) == null)

break descent;

j = v;

break;

}

int i = (((a.length - 1) & b) << ASHIFT) + ABASE;

ForkJoinTask t = ((ForkJoinTask)

U.getObjectVolatile(a, i));

if (v.base == b) {

if (t == null) // stale

break descent;

if (U.compareAndSwapObject(a, i, t, null)) {

v.base = b + 1;

ForkJoinTask ps = w.currentSteal;

int top = w.top;

do {

U.putOrderedObject(w, QCURRENTSTEAL, t);

t.doExec(); // clear local tasks too

} while (task.status >= 0 &&

w.top != top &&

(t = w.pop()) != null);

U.putOrderedObject(w, QCURRENTSTEAL, ps);

if (w.base != w.top)

return; // can't further help

}

}

}

}

} while (task.status >= 0 && oldSum != (oldSum = checkSum));

}

}

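// Before a worker blocks: tries to release or create a compensating worker; returns false if the caller should retry.

private boolean tryCompensate(WorkQueue w) {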

boolean canBlock;

WorkQueue[] ws; long c; int m, pc, sp;

if (w == null || w.qlock < 0 || // caller terminating

(ws = workQueues) == null || (m = ws.length - 1) <= 0 ||

(pc = config & SMASK) == 0) // parallelism disabled

canBlock = false;

else if ((sp = (int)(c = ctl)) != 0) // release idle worker

canBlock = tryRelease(c, ws[sp & m], 0L);

else {

int ac = (int)(c >> AC_SHIFT) + pc;

int tc = (short)(c >> TC_SHIFT) + pc;

int nbusy = 0; // validate saturation

for (int i = 0; i <= m; ++i) { // two passes of odd indices

WorkQueue v;

if ((v = ws[((i << 1) | 1) & m]) != null) {

if ((v.scanState & SCANNING) != 0)

break;

++nbusy;

}

}

if (nbusy != (tc << 1) || ctl != c)

canBlock = false; // unstable or stale

else if (tc >= pc && ac > 1 && w.isEmpty()) {

long nc = ((AC_MASK & (c - AC_UNIT)) |

(~AC_MASK & c)); // uncompensated

canBlock = U.compareAndSwapLong(this, CTL, c, nc);

}

else if (tc >= MAX_CAP ||

(this == common && tc >= pc + commonMaxSpares))

throw new RejectedExecutionException(

"Thread limit exceeded replacing blocked worker");

else { // similar to tryAddWorker

boolean add = false; int rs; // CAS within lock

long nc = ((AC_MASK & c) |

(TC_MASK & (c + TC_UNIT)));

if (((rs = lockRunState()) & STOP) == 0)

add = U.compareAndSwapLong(this, CTL, c, nc);

unlockRunState(rs, rs & ~RSLOCK);

canBlock = add && createWorker(); // throws on exception

}

}

return canBlock;

}

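// Helps and/or blocks until the given task is done or the deadline passes; returns the task status.

final int awaitJoin(WorkQueue w, ForkJoinTask<?> task, long deadline) {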

int s = 0;

if (task != null && w != null) {

ForkJoinTask prevJoin = w.currentJoin;

U.putOrderedObject(w, QCURRENTJOIN, task);

CountedCompleter cc = (task instanceof CountedCompleter) ?

(CountedCompleter)task : null;

for (;;) {

if ((s = task.status) < 0)

break;

if (cc != null)

helpComplete(w, cc, 0);

else if (w.base == w.top || w.tryRemoveAndExec(task))

helpStealer(w, task);

if ((s = task.status) < 0)

break;

long ms, ns;

if (deadline == 0L)

ms = 0L;

else if ((ns = deadline - System.nanoTime()) <= 0L)

break;

else if ((ms = TimeUnit.NANOSECONDS.toMillis(ns)) <= 0L)

ms = 1L;

if (tryCompensate(w)) {

task.internalWait(ms);

U.getAndAddLong(this, CTL, AC_UNIT);

}

}

U.putOrderedObject(w, QCURRENTJOIN, prevJoin);

}

return s;

}

// Specialized scanning

private WorkQueue findNonEmptyStealQueue() {

WorkQueue[] ws; int m; // one-shot version of scan loop

int r = ThreadLocalRandom.nextSecondarySeed();

if ((ws = workQueues) != null && (m = ws.length - 1) >= 0) {

for (int origin = r & m, k = origin, oldSum = 0, checkSum = 0;;) {

WorkQueue q; int b;

if ((q = ws[k]) != null) {

if ((b = q.base) - q.top < 0)

return q;

checkSum += b;

}

if ((k = (k + 1) & m) == origin) {

if (oldSum == (oldSum = checkSum))

break;

checkSum = 0;

}

}

}

return null;

}

final void helpQuiescePool(WorkQueue w) {

ForkJoinTask ps = w.currentSteal; // save context

for (boolean active = true;;) {

long c; WorkQueue q; ForkJoinTask t; int b;

w.execLocalTasks(); // run locals before each scan

if ((q = findNonEmptyStealQueue()) != null) {

if (!active) { // re-establish active count

active = true;

U.getAndAddLong(this, CTL, AC_UNIT);

}

if ((b = q.base) - q.top < 0 && (t = q.pollAt(b)) != null) {

U.putOrderedObject(w, QCURRENTSTEAL, t);

t.doExec();

if (++w.nsteals < 0)

w.transferStealCount(this);

}

}

else if (active) { // decrement active count without queuing

long nc = (AC_MASK & ((c = ctl) - AC_UNIT)) | (~AC_MASK & c);

if ((int)(nc >> AC_SHIFT) + (config & SMASK) <= 0)

break; // bypass decrement-then-increment

if (U.compareAndSwapLong(this, CTL, c, nc))

active = false;

}

else if ((int)((c = ctl) >> AC_SHIFT) + (config & SMASK) <= 0 &&

U.compareAndSwapLong(this, CTL, c, c + AC_UNIT))

break;

}

U.putOrderedObject(w, QCURRENTSTEAL, ps);

}

final ForkJoinTask nextTaskFor(WorkQueue w) {

for (ForkJoinTask t;;) {

WorkQueue q; int b;

if ((t = w.nextLocalTask()) != null)

return t;

if ((q = findNonEmptyStealQueue()) == null)

return null;

if ((b = q.base) - q.top < 0 && (t = q.pollAt(b)) != null)

return t;

}

}

static int getSurplusQueuedTaskCount() {

Thread t; ForkJoinWorkerThread wt; ForkJoinPool pool; WorkQueue q;

if (((t = Thread.currentThread()) instanceof ForkJoinWorkerThread)) {

int p = (pool = (wt = (ForkJoinWorkerThread)t).pool).

config & SMASK;

int n = (q = wt.workQueue).top - q.base;

int a = (int)(pool.ctl >> AC_SHIFT) + p;

return n - (a > (p >>>= 1) ? 0 :

a > (p >>>= 1) ? 1 :

a > (p >>>= 1) ? 2 :

a > (p >>>= 1) ? 4 :

8);

}

return 0;

}

// Termination

// Possibly initiates and/or completes termination; returns true if terminating or terminated.

private boolean tryTerminate(boolean now, boolean enable) {

int rs;

if (this == common) // cannot shut down

return false;

if ((rs = runState) >= 0) {

if (!enable)

return false;

rs = lockRunState(); // enter SHUTDOWN phase

unlockRunState(rs, (rs & ~RSLOCK) | SHUTDOWN);

}

if ((rs & STOP) == 0) {

if (!now) { // check quiescence

for (long oldSum = 0L;;) { // repeat until stable

WorkQueue[] ws; WorkQueue w; int m, b; long c;

long checkSum = ctl;

if ((int)(checkSum >> AC_SHIFT) + (config & SMASK) > 0)

return false; // still active workers

if ((ws = workQueues) == null || (m = ws.length - 1) <= 0)

break; // check queues

for (int i = 0; i <= m; ++i) {

if ((w = ws[i]) != null) {

if ((b = w.base) != w.top || w.scanState >= 0 ||

w.currentSteal != null) {

tryRelease(c = ctl, ws[m & (int)c], AC_UNIT);

return false; // arrange for recheck

}

checkSum += b;

if ((i & 1) == 0)

w.qlock = -1; // try to disable external

}

}

if (oldSum == (oldSum = checkSum))

break;

}

}

if ((runState & STOP) == 0) {

rs = lockRunState(); // enter STOP phase

unlockRunState(rs, (rs & ~RSLOCK) | STOP);

}

}

int pass = 0; // 3 passes to help terminate

for (long oldSum = 0L;;) { // or until done or stable

WorkQueue[] ws; WorkQueue w; ForkJoinWorkerThread wt; int m;

long checkSum = ctl;

if ((short)(checkSum >>> TC_SHIFT) + (config & SMASK) <= 0 ||

(ws = workQueues) == null || (m = ws.length - 1) <= 0) {

if ((runState & TERMINATED) == 0) {

rs = lockRunState(); // done

unlockRunState(rs, (rs & ~RSLOCK) | TERMINATED);

synchronized (this) { notifyAll(); } // for awaitTermination

}

break;

}

for (int i = 0; i <= m; ++i) {

if ((w = ws[i]) != null) {

checkSum += w.base;

w.qlock = -1; // try to disable

if (pass > 0) {

w.cancelAll(); // clear queue

if (pass > 1 && (wt = w.owner) != null) {

if (!wt.isInterrupted()) {

try { // unblock join

wt.interrupt();

} catch (Throwable ignore) {

}

}

if (w.scanState < 0)

U.unpark(wt); // wake up

}

}

}

}

if (checkSum != oldSum) { // unstable

oldSum = checkSum;

pass = 0;

}

else if (pass > 3 && pass > m) // can't further help

break;

else if (++pass > 1) { // try to dequeue

long c; int j = 0, sp; // bound attempts

while (j++ <= m && (sp = (int)(c = ctl)) != 0)

tryRelease(c, ws[sp & m], AC_UNIT);

}

}

return true;

}

// External operations

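// Full version of externalPush: also handles first-time initialization, queue creation and contention.

private void externalSubmit(ForkJoinTask<?> task) {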

int r; // initialize caller's probe

if ((r = ThreadLocalRandom.getProbe()) == 0) {

ThreadLocalRandom.localInit();

r = ThreadLocalRandom.getProbe();

}

for (;;) {

WorkQueue[] ws; WorkQueue q; int rs, m, k;

boolean move = false;

if ((rs = runState) < 0) {

tryTerminate(false, false); // help terminate

throw new RejectedExecutionException();

}

else if ((rs & STARTED) == 0 || // initialize

((ws = workQueues) == null || (m = ws.length - 1) < 0)) {

int ns = 0;

rs = lockRunState();

try {

if ((rs & STARTED) == 0) {

U.compareAndSwapObject(this, STEALCOUNTER, null,

new AtomicLong());

// create workQueues array with size a power of two

int p = config & SMASK; // ensure at least 2 slots

int n = (p > 1) ? p - 1 : 1;

n |= n >>> 1; n |= n >>> 2; n |= n >>> 4;

n |= n >>> 8; n |= n >>> 16; n = (n + 1) << 1;

workQueues = new WorkQueue[n];

ns = STARTED;

}

} finally {

unlockRunState(rs, (rs & ~RSLOCK) | ns);

}

}

else if ((q = ws[k = r & m & SQMASK]) != null) {

if (q.qlock == 0 && U.compareAndSwapInt(q, QLOCK, 0, 1)) {

ForkJoinTask[] a = q.array;

int s = q.top;

boolean submitted = false; // initial submission or resizing

try { // locked version of push

if ((a != null && a.length > s + 1 - q.base) ||

(a = q.growArray()) != null) {

int j = (((a.length - 1) & s) << ASHIFT) + ABASE;

U.putOrderedObject(a, j, task);

U.putOrderedInt(q, QTOP, s + 1);

submitted = true;

}

} finally {

U.compareAndSwapInt(q, QLOCK, 1, 0);

}

if (submitted) {

signalWork(ws, q);

return;

}

}

move = true; // move on failure

}

else if (((rs = runState) & RSLOCK) == 0) { // create new queue

q = new WorkQueue(this, null);

q.hint = r;

q.config = k | SHARED_QUEUE;

q.scanState = INACTIVE;

rs = lockRunState(); // publish index

if (rs > 0 && (ws = workQueues) != null &&

k < ws.length && ws[k] == null)

ws[k] = q; // else terminated

unlockRunState(rs, rs & ~RSLOCK);

}

else

move = true; // move if busy

if (move)

r = ThreadLocalRandom.advanceProbe(r);

}

}

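// Fast path for external submission to the caller's submission queue; falls back to externalSubmit.

final void externalPush(ForkJoinTask<?> task) {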

WorkQueue[] ws; WorkQueue q; int m;

int r = ThreadLocalRandom.getProbe();

int rs = runState;

if ((ws = workQueues) != null && (m = (ws.length - 1)) >= 0 &&

(q = ws[m & r & SQMASK]) != null && r != 0 && rs > 0 &&

U.compareAndSwapInt(q, QLOCK, 0, 1)) {

ForkJoinTask[] a; int am, n, s;

if ((a = q.array) != null &&

(am = a.length - 1) > (n = (s = q.top) - q.base)) {

int j = ((am & s) << ASHIFT) + ABASE;

U.putOrderedObject(a, j, task);

U.putOrderedInt(q, QTOP, s + 1);

U.putIntVolatile(q, QLOCK, 0);

if (n <= 1)

signalWork(ws, q);

return;

}

U.compareAndSwapInt(q, QLOCK, 1, 0);

}

externalSubmit(task);

}

static WorkQueue commonSubmitterQueue() {

ForkJoinPool p = common;

int r = ThreadLocalRandom.getProbe();

WorkQueue[] ws; int m;

return (p != null && (ws = p.workQueues) != null &&

(m = ws.length - 1) >= 0) ?

ws[m & r & SQMASK] : null;

}

final boolean tryExternalUnpush(ForkJoinTask task) {

WorkQueue[] ws; WorkQueue w; ForkJoinTask[] a; int m, s;

int r = ThreadLocalRandom.getProbe();

if ((ws = workQueues) != null && (m = ws.length - 1) >= 0 &&

(w = ws[m & r & SQMASK]) != null &&

(a = w.array) != null && (s = w.top) != w.base) {

long j = (((a.length - 1) & (s - 1)) << ASHIFT) + ABASE;

if (U.compareAndSwapInt(w, QLOCK, 0, 1)) {

if (w.top == s && w.array == a &&

U.getObject(a, j) == task &&

U.compareAndSwapObject(a, j, task, null)) {

U.putOrderedInt(w, QTOP, s - 1);

U.putOrderedInt(w, QLOCK, 0);

return true;

}

U.compareAndSwapInt(w, QLOCK, 1, 0);

}

}

return false;

}

final int externalHelpComplete(CountedCompleter task, int maxTasks) {

WorkQueue[] ws; int n;

int r = ThreadLocalRandom.getProbe();

return ((ws = workQueues) == null || (n = ws.length) == 0) ? 0 :

helpComplete(ws[(n - 1) & r & SQMASK], task, maxTasks);

}

// Exported methods

// Constructors

public ForkJoinPool() {

this(Math.min(MAX_CAP, Runtime.getRuntime().availableProcessors()),

defaultForkJoinWorkerThreadFactory, null, false);

}

public ForkJoinPool(int parallelism) {

this(parallelism, defaultForkJoinWorkerThreadFactory, null, false);

}

public ForkJoinPool(int parallelism,

ForkJoinWorkerThreadFactory factory,

UncaughtExceptionHandler handler,

boolean asyncMode) {

this(checkParallelism(parallelism),

checkFactory(factory),

handler,

asyncMode ? FIFO_QUEUE : LIFO_QUEUE,

"ForkJoinPool-" + nextPoolId() + "-worker-");

checkPermission();

}

private static int checkParallelism(int parallelism) {

if (parallelism <= 0 || parallelism > MAX_CAP)

throw new IllegalArgumentException();

return parallelism;

}

private static ForkJoinWorkerThreadFactory checkFactory

(ForkJoinWorkerThreadFactory factory) {

if (factory == null)

throw new NullPointerException();

return factory;

}

private ForkJoinPool(int parallelism,

ForkJoinWorkerThreadFactory factory,

UncaughtExceptionHandler handler,

int mode,

String workerNamePrefix) {

this.workerNamePrefix = workerNamePrefix;

this.factory = factory;

this.ueh = handler;

this.config = (parallelism & SMASK) | mode;

long np = (long)(-parallelism); // offset ctl counts

this.ctl = ((np << AC_SHIFT) & AC_MASK) | ((np << TC_SHIFT) & TC_MASK);

}
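
The private constructor above stores the negated parallelism in the two upper 16-bit fields of ctl, so the counts are kept as offsets: the active count (AC) and total count (TC) fields both start at -parallelism and reach 0 when the pool is at its target size. The sketch below copies the constants from the listing and replays that arithmetic. CtlSketch and its method names are hypothetical, and addWorker only mirrors the nc computation in tryAddWorker.

```java
/** Hypothetical illustration of the AC/TC fields packed into ctl. */
final class CtlSketch {
    static final int  AC_SHIFT = 48;
    static final long AC_UNIT  = 0x0001L << AC_SHIFT;
    static final long AC_MASK  = 0xffffL << AC_SHIFT;
    static final int  TC_SHIFT = 32;
    static final long TC_UNIT  = 0x0001L << TC_SHIFT;
    static final long TC_MASK  = 0xffffL << TC_SHIFT;
    static final long ADD_WORKER = 0x0001L << (TC_SHIFT + 15); // sign bit of TC

    /** Same expression as in the private ForkJoinPool constructor. */
    static long initialCtl(int parallelism) {
        long np = (long)(-parallelism);
        return ((np << AC_SHIFT) & AC_MASK) | ((np << TC_SHIFT) & TC_MASK);
    }

    /** Same expression as in getActiveThreadCount, without clamping. */
    static int activeCount(long ctl, int parallelism) {
        return parallelism + (int)(ctl >> AC_SHIFT);
    }

    /** Same expression as in getPoolSize. */
    static int totalCount(long ctl, int parallelism) {
        return parallelism + (short)(ctl >>> TC_SHIFT);
    }

    /** Mirrors the nc computation in tryAddWorker: one more active, one more total. */
    static long addWorker(long c) {
        return (AC_MASK & (c + AC_UNIT)) | (TC_MASK & (c + TC_UNIT));
    }

    public static void main(String[] args) {
        int p = 4;
        long c = initialCtl(p);
        for (int i = 0; i <= p; i++) {
            System.out.println(Long.toHexString(c)
                + " active=" + activeCount(c, p)
                + " total=" + totalCount(c, p)
                + " needsWorker=" + ((c & ADD_WORKER) != 0L));
            c = addWorker(c);
        }
    }
}
```

Because the counts are offsets, `ctl < 0` means "too few active workers" and a set ADD_WORKER bit means "fewer threads than the parallelism level", which is exactly what signalWork tests.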

public static ForkJoinPool commonPool() {

// assert common != null : "static init error";

return common;

}

// Execution methods

public <T> T invoke(ForkJoinTask<T> task) {

if (task == null)

throw new NullPointerException();

externalPush(task);

return task.join();

}

public void execute(ForkJoinTask<?> task) {

if (task == null)

throw new NullPointerException();

externalPush(task);

}

// AbstractExecutorService methods

public void execute(Runnable task) {

if (task == null)

throw new NullPointerException();

ForkJoinTask<?> job;

if (task instanceof ForkJoinTask<?>) // avoid re-wrap

job = (ForkJoinTask<?>) task;

else

job = new ForkJoinTask.RunnableExecuteAction(task);

externalPush(job);

}

public <T> ForkJoinTask<T> submit(ForkJoinTask<T> task) {

if (task == null)

throw new NullPointerException();

externalPush(task);

return task;

}

public <T> ForkJoinTask<T> submit(Callable<T> task) {

ForkJoinTask<T> job = new ForkJoinTask.AdaptedCallable<T>(task);

externalPush(job);

return job;

}

public <T> ForkJoinTask<T> submit(Runnable task, T result) {

ForkJoinTask<T> job = new ForkJoinTask.AdaptedRunnable<T>(task, result);

externalPush(job);

return job;

}

public ForkJoinTask<?> submit(Runnable task) {

if (task == null)

throw new NullPointerException();

ForkJoinTask<?> job;

if (task instanceof ForkJoinTask<?>) // avoid re-wrap

job = (ForkJoinTask<?>) task;

else

job = new ForkJoinTask.AdaptedRunnableAction(task);

externalPush(job);

return job;

}
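
The invoke, execute and submit methods above all hand the task to externalPush and differ only in what the caller gets back: invoke joins and returns the result, execute returns nothing, and submit returns the task as a future. A minimal usage sketch follows, assuming a simple array-sum task; SumTask, SubmitSketch and the threshold of 1,000 elements are illustrative choices and do not come from the article.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.ForkJoinTask;
import java.util.concurrent.RecursiveTask;

/** Hypothetical example task: sums data[lo, hi) by recursive splitting. */
final class SumTask extends RecursiveTask<Long> {
    private static final int THRESHOLD = 1_000; // arbitrary cut-off for this sketch
    private final long[] data;
    private final int lo, hi;

    SumTask(long[] data, int lo, int hi) {
        this.data = data;
        this.lo = lo;
        this.hi = hi;
    }

    @Override
    protected Long compute() {
        if (hi - lo <= THRESHOLD) {             // small enough: sum directly
            long sum = 0;
            for (int i = lo; i < hi; i++)
                sum += data[i];
            return sum;
        }
        int mid = (lo + hi) >>> 1;
        SumTask left = new SumTask(data, lo, mid);
        SumTask right = new SumTask(data, mid, hi);
        left.fork();                            // queue the left half for this or another worker
        long r = right.compute();               // run the right half in the current thread
        return left.join() + r;
    }
}

final class SubmitSketch {
    /** Sums 1..n three times, once through each entry point. */
    static long[] sumAllWays(int n) {
        long[] data = new long[n];
        for (int i = 0; i < n; i++)
            data[i] = i + 1;
        ForkJoinPool pool = new ForkJoinPool(2);
        try {
            long viaInvoke = pool.invoke(new SumTask(data, 0, n)); // blocks for the result

            SumTask t = new SumTask(data, 0, n);
            pool.execute(t);                                       // fire and forget
            long viaExecute = t.join();                            // join on the task itself

            ForkJoinTask<Long> f = pool.submit(new SumTask(data, 0, n));
            long viaSubmit = f.join();                             // the returned task is a Future

            return new long[] { viaInvoke, viaExecute, viaSubmit };
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        for (long v : sumAllWays(10_000))
            System.out.println(v);
    }
}
```

Calling these methods from a thread outside the pool goes through the external submission queues; inside compute(), fork() is the usual way to schedule subtasks.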

public <T> List<Future<T>> invokeAll(Collection<? extends Callable<T>> tasks) {

// In previous versions of this class, this method constructed

// a task to run ForkJoinTask.invokeAll, but now external

// invocation of multiple tasks is at least as efficient.

ArrayList<Future<T>> futures = new ArrayList<>(tasks.size());

boolean done = false;

try {

for (Callable<? extends T> t : tasks) {

ForkJoinTask<T> f = new ForkJoinTask.AdaptedCallable<T>(t);

futures.add(f);

externalPush(f);

}

for (int i = 0, size = futures.size(); i < size; i++)

((ForkJoinTask<?>)futures.get(i)).quietlyJoin();

done = true;

return futures;

} finally {

if (!done)

for (int i = 0, size = futures.size(); i < size; i++)

futures.get(i).cancel(false);

}

}
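
invokeAll above wraps every Callable in an AdaptedCallable, pushes them all, and then quietly joins each one, so the returned futures are already complete and appear in the same order as the input. A small usage sketch, with the hypothetical class InvokeAllSketch and a pool size of 2 chosen only for illustration:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.Future;

/** Hypothetical usage example for ForkJoinPool.invokeAll. */
final class InvokeAllSketch {
    /** Computes 1*1, 2*2, ..., n*n as independent Callables. */
    static List<Integer> squares(int n) {
        ForkJoinPool pool = new ForkJoinPool(2);
        try {
            List<Callable<Integer>> tasks = new ArrayList<>();
            for (int i = 1; i <= n; i++) {
                final int v = i;
                tasks.add(() -> v * v);
            }
            List<Integer> out = new ArrayList<>();
            for (Future<Integer> f : pool.invokeAll(tasks))
                out.add(f.get());       // already done; get() does not wait here
            return out;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(squares(4));
    }
}
```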

public ForkJoinWorkerThreadFactory getFactory() {

return factory;

}

public UncaughtExceptionHandler getUncaughtExceptionHandler() {

return ueh;

}

public int getParallelism() {

int par;

return ((par = config & SMASK) > 0) ? par : 1;

}

public static int getCommonPoolParallelism() {

return commonParallelism;

}

public int getPoolSize() {

return (config & SMASK) + (short)(ctl >>> TC_SHIFT);

}

public boolean getAsyncMode() {

return (config & FIFO_QUEUE) != 0;

}

public int getRunningThreadCount() {

int rc = 0;

WorkQueue[] ws; WorkQueue w;

if ((ws = workQueues) != null) {

for (int i = 1; i < ws.length; i += 2) {

if ((w = ws[i]) != null && w.isApparentlyUnblocked())

++rc;

}

}

return rc;

}

public int getActiveThreadCount() {

int r = (config & SMASK) + (int)(ctl >> AC_SHIFT);

return (r <= 0) ? 0 : r; // suppress momentarily negative values

}

public boolean isQuiescent() {

return (config & SMASK) + (int)(ctl >> AC_SHIFT) <= 0;

}

public long getStealCount() {

AtomicLong sc = stealCounter;

long count = (sc == null) ? 0L : sc.get();

WorkQueue[] ws; WorkQueue w;

if ((ws = workQueues) != null) {

for (int i = 1; i < ws.length; i += 2) {

if ((w = ws[i]) != null)

count += w.nsteals;

}

}

return count;

}

public long getQueuedTaskCount() {

long count = 0;

WorkQueue[] ws; WorkQueue w;

if ((ws = workQueues) != null) {

for (int i = 1; i < ws.length; i += 2) {

if ((w = ws[i]) != null)

count += w.queueSize();

}

}

return count;

}

public int getQueuedSubmissionCount() {

int count = 0;

WorkQueue[] ws; WorkQueue w;

if ((ws = workQueues) != null) {

for (int i = 0; i < ws.length; i += 2) {

if ((w = ws[i]) != null)

count += w.queueSize();

}

}

return count;

}

public boolean hasQueuedSubmissions() {

WorkQueue[] ws; WorkQueue w;

if ((ws = workQueues) != null) {

for (int i = 0; i < ws.length; i += 2) {

if ((w = ws[i]) != null && !w.isEmpty())

return true;

}

}

return false;

}

protected ForkJoinTask<?> pollSubmission() {

WorkQueue[] ws; WorkQueue w; ForkJoinTask<?> t;

if ((ws = workQueues) != null) {

for (int i = 0; i < ws.length; i += 2) {

if ((w = ws[i]) != null && (t = w.poll()) != null)

return t;

}

}

return null;

}

protected int drainTasksTo(Collection<? super ForkJoinTask<?>> c) {

int count = 0;

WorkQueue[] ws; WorkQueue w; ForkJoinTask<?> t;

if ((ws = workQueues) != null) {

for (int i = 0; i < ws.length; ++i) {

if ((w = ws[i]) != null) {

while ((t = w.poll()) != null) {

c.add(t);

++count;

}

}

}

}

return count;

}

public String toString() {

// Use a single pass through workQueues to collect counts

long qt = 0L, qs = 0L; int rc = 0;

AtomicLong sc = stealCounter;

long st = (sc == null) ? 0L : sc.get();

long c = ctl;

WorkQueue[] ws; WorkQueue w;

if ((ws = workQueues) != null) {

for (int i = 0; i < ws.length; ++i) {

if ((w = ws[i]) != null) {

int size = w.queueSize();

if ((i & 1) == 0)

qs += size;

else {

qt += size;

st += w.nsteals;

if (w.isApparentlyUnblocked())

++rc;

}

}

}

}

int pc = (config & SMASK);

int tc = pc + (short)(c >>> TC_SHIFT);

int ac = pc + (int)(c >> AC_SHIFT);

if (ac < 0) // ignore transient negative

ac = 0;

int rs = runState;

String level = ((rs & TERMINATED) != 0 ? "Terminated" :

(rs & STOP) != 0 ? "Terminating" :

(rs & SHUTDOWN) != 0 ? "Shutting down" :

"Running");

return super.toString() +

"[" + level +

", parallelism = " + pc +

", size = " + tc +

", active = " + ac +

", running = " + rc +

", steals = " + st +

", tasks = " + qt +

", submissions = " + qs +

"]";

}

public void shutdown() {

checkPermission();

tryTerminate(false, true);

}

public List<Runnable> shutdownNow() {

checkPermission();

tryTerminate(true, true);

return Collections.emptyList(); // cancelled tasks are not handed back to the caller

}

public boolean isTerminated() {

return (runState & TERMINATED) != 0;

}

public boolean isTerminating() {

int rs = runState;

return (rs & STOP) != 0 && (rs & TERMINATED) == 0;

}

public boolean isShutdown() {

return (runState & SHUTDOWN) != 0;

}

public boolean awaitTermination(long timeout, TimeUnit unit)

throws InterruptedException {

if (Thread.interrupted())

throw new InterruptedException();

if (this == common) { // the common pool never terminates; wait for quiescence instead

awaitQuiescence(timeout, unit);

return false;

}

long nanos = unit.toNanos(timeout);

if (isTerminated())

return true;

if (nanos <= 0L)

return false;

long deadline = System.nanoTime() + nanos;

synchronized (this) {

for (;;) {

if (isTerminated())

return true;

if (nanos <= 0L)

return false;

long millis = TimeUnit.NANOSECONDS.toMillis(nanos);

wait(millis > 0L ? millis : 1L);

nanos = deadline - System.nanoTime();

}

}

}
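A typical way to combine `shutdown()` and `awaitTermination()` is sketched below. The class `ShutdownDemo` and the method `runThenShutdown` are hypothetical names used only for this example. It relies on the documented behaviour that `shutdown()` still lets previously submitted tasks run while rejecting new ones.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class ShutdownDemo {
    // Returns the number of tasks that ran, or -1 if the pool did not terminate in time.
    static int runThenShutdown(int tasks) {
        ForkJoinPool pool = new ForkJoinPool(2);
        AtomicInteger ran = new AtomicInteger();
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> ran.incrementAndGet()); // external submission
        }
        pool.shutdown(); // orderly shutdown: queued tasks still run, new ones are rejected
        try {
            boolean terminated = pool.awaitTermination(10, TimeUnit.SECONDS);
            System.out.println("isShutdown = " + pool.isShutdown()
                    + ", isTerminated = " + pool.isTerminated());
            return terminated ? ran.get() : -1;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt for the caller
            return -1;
        }
    }
}
```

Note the special case in the source above: when called on the common pool, `awaitTermination` only waits for quiescence and then returns `false`, so this pattern is meant for pools that the application created itself.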

public boolean awaitQuiescence(long timeout, TimeUnit unit) {

long nanos = unit.toNanos(timeout);

ForkJoinWorkerThread wt;

Thread thread = Thread.currentThread();

if ((thread instanceof ForkJoinWorkerThread) &&

(wt = (ForkJoinWorkerThread)thread).pool == this) {

helpQuiescePool(wt.workQueue);

return true;

}

long startTime = System.nanoTime();

WorkQueue[] ws;

int r = 0, m;

boolean found = true;

while (!isQuiescent() && (ws = workQueues) != null &&

(m = ws.length - 1) >= 0) {

if (!found) {

if ((System.nanoTime() - startTime) > nanos)

return false;

Thread.yield(); // cannot block

}

found = false;

for (int j = (m + 1) << 2; j >= 0; --j) {

ForkJoinTask<?> t; WorkQueue q; int b, k;

if ((k = r++ & m) <= m && k >= 0 && (q = ws[k]) != null &&

(b = q.base) - q.top < 0) {

found = true;

if ((t = q.pollAt(b)) != null)

t.doExec();

break;

}

}

}

return true;

}
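Unlike `awaitTermination`, `awaitQuiescence` does not require the pool to be shut down, and as the loop above shows, a caller that is not a worker of this pool polls tasks from the queues and runs them itself instead of blocking. A minimal usage sketch follows; `QuiescenceDemo` and `drain` are hypothetical names introduced for illustration.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class QuiescenceDemo {
    // Submits some trivial tasks, then waits (and helps) until the pool is quiescent.
    static boolean drain(int tasks) {
        ForkJoinPool pool = new ForkJoinPool(2);
        AtomicInteger ran = new AtomicInteger();
        try {
            for (int i = 0; i < tasks; i++) {
                pool.execute(() -> ran.incrementAndGet());
            }
            // The calling thread may execute some of the queued tasks itself here.
            boolean quiet = pool.awaitQuiescence(10, TimeUnit.SECONDS);
            System.out.println("quiescent = " + quiet + ", tasks run so far = " + ran.get());
            return quiet;
        } finally {
            pool.shutdown();
        }
    }
}
```

The method returns `true` when the pool was observed quiescent before the timeout elapsed and `false` otherwise. The pool stays usable afterwards, which is why this sketch shuts it down explicitly in the `finally` block.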

static void quiesceCommonPool() {

common.awaitQuiescence(Long.MAX_VALUE, TimeUnit.NANOSECONDS);

}

public static interface ManagedBlocker {

boolean block() throws InterruptedException;

boolean isReleasable();

}
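To make the `ManagedBlocker` contract concrete: `isReleasable()` should return true when blocking is unnecessary, and `block()` may block the thread and should return true once no further blocking is needed. The sketch below follows the queue-taking pattern described in the JDK documentation of this interface. The wrapper names `ManagedBlockerDemo` and `takeManaged` are hypothetical and exist only for this example.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ForkJoinPool;

class ManagedBlockerDemo {
    // Takes one element from a blocking queue in a way the pool can compensate for.
    static final class QueueTaker<E> implements ForkJoinPool.ManagedBlocker {
        private final BlockingQueue<E> queue;
        private volatile E item;

        QueueTaker(BlockingQueue<E> queue) { this.queue = queue; }

        @Override
        public boolean block() throws InterruptedException {
            if (item == null) {
                item = queue.take(); // may block until an element arrives
            }
            return true;             // no further blocking needed
        }

        @Override
        public boolean isReleasable() {
            // Try a non-blocking poll first so that blocking can be skipped entirely.
            return item != null || (item = queue.poll()) != null;
        }

        E getItem() { return item; }
    }

    // Returns the element taken, or null if the calling thread was interrupted.
    static <E> E takeManaged(BlockingQueue<E> queue) {
        QueueTaker<E> taker = new QueueTaker<>(queue);
        try {
            ForkJoinPool.managedBlock(taker);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
        return taker.getItem();
    }
}
```

`ForkJoinPool.managedBlock` can be called from any thread. When the caller is a `ForkJoinWorkerThread`, the pool may activate a spare worker while the caller blocks, so that parallelism is not lost; from other threads it simply loops over `isReleasable()` and `block()`.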

public static void managedBlock(ManagedBlocker blocker)

throws InterruptedException {

ForkJoinPool p;

ForkJoinWorkerThread wt;

Thread t = Thread.currentThread();

if ((t instanceof ForkJoinWorkerThread) &&

(p = (wt = (ForkJoinWorkerThread)t).pool) != null) {

WorkQueue w = wt.workQueue;

while (!blocker.isReleasable()) {

if (p.tryCompensate(w)) {

try {