Lucene full-text search: adding, deleting, updating and searching a database table (a must-read for beginners)

My QQ: 2038373094

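The heart of Lucene full-text search is the index.

Lucene runs the data in the database table through an analyzer (tokenizer) and builds an index from the result. Searches run against that index, not against the table, so adding, deleting, updating and querying come down to the corresponding operations on the index. The program is responsible for keeping the index in sync with the table.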
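To make "index" concrete, here is a toy inverted index in plain Java: each term maps to the ids of the rows that contain it, so a search becomes a lookup instead of a scan over the table. It is only a sketch of the idea, assuming whitespace tokenization plus lowercasing; the class name `ToyInvertedIndex` is made up, and Lucene's real index format, analyzers and scoring are far more involved.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;
import java.util.SortedSet;
import java.util.TreeSet;

// Toy inverted index: term -> ids of the rows containing that term.
// Illustration only; this is not how Lucene stores its index.
class ToyInvertedIndex {
    private final Map<String, SortedSet<Integer>> postings = new HashMap<>();

    // "Analyze" a row (lowercase + split on whitespace) and record its id under every term.
    void add(int rowId, String text) {
        for (String term : text.toLowerCase(Locale.ROOT).split("\\s+")) {
            if (!term.isEmpty()) {
                postings.computeIfAbsent(term, t -> new TreeSet<>()).add(rowId);
            }
        }
    }

    // A search is a map lookup, not a scan over all rows.
    SortedSet<Integer> search(String term) {
        return postings.getOrDefault(term.toLowerCase(Locale.ROOT), new TreeSet<>());
    }

    public static void main(String[] args) {
        ToyInvertedIndex index = new ToyInvertedIndex();
        // Pretend these are rows of a database table, keyed by primary key.
        index.add(1, "Lucene is a search library");
        index.add(2, "MySQL is a database");
        index.add(3, "search the database with Lucene");
        System.out.println(index.search("lucene"));   // [1, 3]
        System.out.println(index.search("database")); // [2, 3]
    }
}
```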

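1. The core of the search module: the IndexSearcher class

2. The core of the add module: the IndexWriter class

3. The core of the delete module: IndexWriter again (deleteDocuments)

4. The core of the update module: IndexWriter again (updateDocument)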

Search module: the IndexSearcher class

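To search, you first need to know where the index files are; otherwise there is nothing to query.

Old Lucene versions (3.x) let you write `new IndexSearcher(Directory)`, but since Lucene 4.0 an IndexSearcher is always built on an IndexReader: `new IndexSearcher(reader)`. Opening a reader is relatively expensive, so the usual practice is to open one reader, share it across searches, and reopen it only when the index has changed. (In 3.x an IndexReader could also delete documents; since 4.0 readers are read-only and deletions go through IndexWriter.)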

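How do you search a specific field?

First choose a suitable query type (the Lucene counterpart of picking the right SQL statement).

The most common choice is TermQuery, an exact-match query:

```java
Query query = new TermQuery(new Term("fieldName", "word"));
```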

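Pitfall: TermQuery is meant for fields indexed with Field.Index.NOT_ANALYZED. On a Field.Index.ANALYZED field it usually returns nothing, because the analyzer has already split the value into tokens (StandardAnalyzer lowercases English words and makes every Chinese character its own token), while TermQuery looks up its text exactly as given, without analyzing it.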
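The pitfall can be reproduced without Lucene. The sketch below uses a hypothetical `analyze` method that only approximates StandardAnalyzer (lowercased Latin words, one token per Chinese character); it compares what ends up in the index for an analyzed and a not-analyzed field, then looks up the whole value the way TermQuery does, with no analysis.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class TermQueryPitfall {
    // Rough stand-in for StandardAnalyzer (an assumption, not Lucene's tokenizer):
    // each Han character becomes a token; runs of Latin letters/digits become lowercased words.
    static List<String> analyze(String text) {
        List<String> tokens = new ArrayList<>();
        StringBuilder word = new StringBuilder();
        for (int i = 0; i < text.length(); i++) {
            char c = text.charAt(i);
            if (Character.UnicodeScript.of(c) == Character.UnicodeScript.HAN) {
                flush(word, tokens);
                tokens.add(String.valueOf(c));         // one token per ideograph
            } else if (Character.isLetterOrDigit(c)) {
                word.append(Character.toLowerCase(c)); // build up a Latin word
            } else {
                flush(word, tokens);                   // space/punctuation ends a word
            }
        }
        flush(word, tokens);
        return tokens;
    }

    private static void flush(StringBuilder word, List<String> tokens) {
        if (word.length() > 0) {
            tokens.add(word.toString());
            word.setLength(0);
        }
    }

    public static void main(String[] args) {
        String areas = "人际关系";
        // ANALYZED field: the index holds the analyzer's tokens
        Set<String> analyzedTerms = new HashSet<>(analyze(areas));
        // NOT_ANALYZED field: the index holds the whole value as one term
        Set<String> notAnalyzedTerms = Collections.singleton(areas);
        // TermQuery looks its text up as-is, without running the analyzer
        System.out.println(analyzedTerms.contains("人际关系"));    // false
        System.out.println(notAnalyzedTerms.contains("人际关系")); // true
        System.out.println(analyzedTerms.contains("人"));          // true
    }
}
```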

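For analyzed fields, QueryParser is the better choice: it runs the keyword through the analyzer you pass in, which should be the same one used at index time.

```java
QueryParser parser = new QueryParser(Version.LUCENE_41, "areas", new StandardAnalyzer(Version.LUCENE_41));
org.apache.lucene.search.Query query = parser.parse(keyword); // throws ParseException on invalid syntax
```

Once the query is built, the search itself can run.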
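One consequence worth knowing: with the classic QueryParser's defaults (OR operator, no automatic phrase queries), a Chinese keyword that the analyzer splits into single characters becomes an OR of those characters, so documents sharing just one or two characters with the keyword also match. The toy model below assumes a simplified analyzer that makes every non-space character a token and ORs the query terms together; `QueryParserSketch` is a made-up name and this is an illustration, not Lucene code.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Set;
import java.util.SortedSet;
import java.util.TreeSet;

class QueryParserSketch {
    // Simplified analyzer (assumption): one token per non-whitespace character, lowercased.
    // Reasonable for Chinese text only; it is not StandardAnalyzer.
    static List<String> analyze(String text) {
        List<String> tokens = new ArrayList<>();
        for (char c : text.toLowerCase(Locale.ROOT).toCharArray()) {
            if (!Character.isWhitespace(c)) {
                tokens.add(String.valueOf(c));
            }
        }
        return tokens;
    }

    // The keyword goes through the same analyzer; a document matches if it has ANY query term (OR).
    static SortedSet<Integer> search(Map<Integer, String> docs, String keyword) {
        Set<String> queryTerms = new HashSet<>(analyze(keyword));
        SortedSet<Integer> hits = new TreeSet<>();
        for (Map.Entry<Integer, String> e : docs.entrySet()) {
            for (String term : analyze(e.getValue())) {
                if (queryTerms.contains(term)) {
                    hits.add(e.getKey());
                    break;
                }
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        Map<Integer, String> areas = new HashMap<>();
        areas.put(1, "人际关系");
        areas.put(2, "婚姻关系");
        areas.put(3, "职场压力");
        // Doc 2 matches too: it shares 关 and 系 with the keyword.
        System.out.println(search(areas, "人际关系")); // [1, 2]
    }
}
```

If that is too loose, the parser's default operator can be switched to AND with `setDefaultOperator`, or a Chinese-aware analyzer such as SmartChineseAnalyzer (from the lucene-analyzers-smartcn module) can be used at both index and query time.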

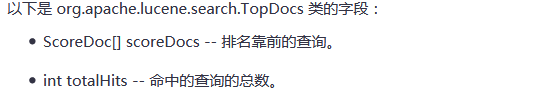

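Search results come in several forms; the usual one is TopDocs:

```java
TopDocs tds = searcher.search(query, 10); // the 10 highest-scoring documents
```

`tds.totalHits` is the total number of matches, and `tds.scoreDocs` holds the returned hits, each with a document number (`doc`) and a `score`.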
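For intuition about what `searcher.search(query, 10)` hands back, here is a stdlib-only sketch of top-N selection: it counts every match (like `totalHits`) but keeps only the N best (document, score) pairs in a bounded min-heap, which is roughly how Lucene's collector works as well. The scores are invented inputs, and the `Hit` and `Result` classes are illustrative stand-ins for `ScoreDoc` and `TopDocs`, not Lucene types.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;

class TopNSketch {
    // Stand-in for ScoreDoc: a document number and its score
    static final class Hit {
        final int doc;
        final float score;
        Hit(int doc, float score) { this.doc = doc; this.score = score; }
        @Override public String toString() { return "(" + doc + "|" + score + ")"; }
    }

    // Stand-in for TopDocs: total number of matches plus the best hits, best first
    static final class Result {
        final int totalHits;
        final List<Hit> top;
        Result(int totalHits, List<Hit> top) { this.totalHits = totalHits; this.top = top; }
    }

    // scores[i] is the score of doc i, or a negative value if doc i did not match.
    static Result topN(float[] scores, int n) {
        // Min-heap: the root is the weakest of the current best n hits.
        PriorityQueue<Hit> heap = new PriorityQueue<Hit>((a, b) -> Float.compare(a.score, b.score));
        int total = 0;
        for (int doc = 0; doc < scores.length; doc++) {
            if (scores[doc] < 0) continue;    // not a match
            total++;                          // every match counts toward totalHits
            heap.offer(new Hit(doc, scores[doc]));
            if (heap.size() > n) heap.poll(); // drop the lowest score
        }
        List<Hit> top = new ArrayList<>(heap);
        top.sort((a, b) -> Float.compare(b.score, a.score)); // highest score first
        return new Result(total, top);
    }

    public static void main(String[] args) {
        Result r = topN(new float[] {0.5f, -1f, 2.0f, 1.2f, 0.1f}, 2);
        System.out.println(r.totalHits); // 4
        System.out.println(r.top);       // [(2|2.0), (3|1.2)]
    }
}
```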

A small example:

```java
public void searchFile() {
    try {
        IndexSearcher searcher = new IndexSearcher(this.getIndexReader());
        // Exact search: documents whose "content" field contains the term "my"
        Query query = new TermQuery(new Term("content", "my"));
        TopDocs tds = searcher.search(query, 10);
        for (ScoreDoc sd : tds.scoreDocs) {
            Document doc = searcher.doc(sd.doc); // sd.doc is the document number
            System.out.print("(" + sd.doc + "|" + sd.score + ")"
                    + doc.get("name") + "[" + doc.get("email") + "]-->");
        }
    } catch (Exception e) {
        e.printStackTrace();
    }
}
```

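Add module

Adding data takes three steps: create a Document, add fields to it, and hand it to an IndexWriter opened on the index directory. Field.Store controls whether the original value is stored (only stored fields can be read back with `doc.get()`), and Field.Index controls whether the value is analyzed (split into tokens) or indexed as a single term. The writer then has to commit (and be closed) before newly opened readers can see the document.

```java
Document doc = new Document();
doc.add(new Field("fieldName", "value", Field.Store.YES, Field.Index.ANALYZED));
IndexWriter writer = new IndexWriter(FSDirectory.open(new File(path)),
        new IndexWriterConfig(Version.LUCENE_41, new StandardAnalyzer(Version.LUCENE_41)));
writer.addDocument(doc);
writer.commit(); // make the new document visible to newly opened readers
writer.close();
```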

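Below is a complete example that indexes a database table (loaded through Hibernate) and then searches, deletes and updates the index.

Remember one rule: anything built on a Term (TermQuery, `IndexWriter.deleteDocuments(Term)`, `IndexWriter.updateDocument(Term, Document)`) is an exact match and only works reliably on fields that were not analyzed.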
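Before the full listing, a quick illustration of why the choice of Term matters for deletes and updates. `IndexWriter.updateDocument(Term, Document)` deletes every document containing the term and then adds the new one, so an update keyed on a field that several documents share replaces all of them with a single document. The sketch below models that with plain maps; the `UpdateByTermSketch` class, its methods and the `tid` key are hypothetical stand-ins, not Lucene API.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model of delete/update by term. Documents are plain maps; matching is an exact
// string comparison, like a Term on a NOT_ANALYZED field.
class UpdateByTermSketch {
    final List<Map<String, String>> docs = new ArrayList<>();

    void add(Map<String, String> doc) { docs.add(doc); }

    // Removes EVERY document whose field equals value; returns how many were removed.
    int deleteDocuments(String field, String value) {
        int before = docs.size();
        docs.removeIf(d -> value.equals(d.get(field)));
        return before - docs.size();
    }

    // Same shape as updateDocument: delete all matches, then add the new document.
    void updateDocument(String field, String value, Map<String, String> doc) {
        deleteDocuments(field, value);
        add(doc);
    }

    static Map<String, String> teacher(String tid, String username, String time) {
        Map<String, String> d = new HashMap<>();
        d.put("tid", tid);
        d.put("username", username);
        d.put("time", time);
        return d;
    }

    public static void main(String[] args) {
        UpdateByTermSketch index = new UpdateByTermSketch();
        index.add(teacher("1", "A", "5"));
        index.add(teacher("2", "B", "5"));
        index.add(teacher("3", "C", "7"));
        // Keyed on a non-unique field: both time=5 documents are replaced by one.
        index.updateDocument("time", "5", teacher("2", "B2", "5"));
        System.out.println(index.docs.size()); // 2
        // Keyed on a unique id: exactly one document is replaced.
        index.updateDocument("tid", "3", teacher("3", "C2", "7"));
        System.out.println(index.docs.size()); // 2
    }
}
```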

```java
package cn.com.lucene;

import java.io.BufferedReader;
import java.io.File;
import java.io.FileReader;
import java.io.FileWriter;
import java.io.IOException;
import java.io.PrintWriter;
import java.util.List;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.Term;
import org.apache.lucene.queryparser.classic.ParseException;
import org.apache.lucene.queryparser.classic.QueryParser;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.store.FSDirectory;
import org.apache.lucene.util.Version;
import org.hibernate.Query;
import org.hibernate.Session;
import org.hibernate.SessionFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.annotation.Scope;
import org.springframework.stereotype.Repository;
import org.springframework.transaction.annotation.Transactional;

import cn.com.bean.Teacher;

@Repository(value = "teacherIndex")
@Scope("prototype")
public class TeacherIndex {

    /* author: 命运的信徒
     * aim: build an index for the teacher table
     * and search it
     */

    // Lucene 4.x API, matching the Version.LUCENE_41 used in the snippets above
    private static final Version LUCENE_VERSION = Version.LUCENE_41;

    String indexpath = "D:/index/index"; // index directory
    String idpath = "D:/index/id/1.txt"; // holds the largest tid indexed so far

    private static DirectoryReader reader = null; // shared across searches

    @Autowired
    private SessionFactory sf;

    private String content; // name of the field to search
    private String key;     // search keyword

    public String getContent() {
        return content;
    }

    public void setContent(String content) {
        this.content = content;
    }

    public String getKey() {
        return key;
    }

    public void setKey(String key) {
        this.key = key;
    }

    // Index the new rows of the teacher table, then run a search
    @Transactional
    public String CreateIndex() {
        // 1. Read the last indexed tid from idpath; a missing or empty file counts as 0
        String storeId = getStoreId(idpath);
        // 2. Load the rows whose tid is greater than that value
        Session session = sf.getCurrentSession();
        List<Teacher> list = getResult(storeId, session);
        // 3. Index those rows and write the largest tid back to idpath
        System.out.println("Number of new rows: " + list.size());
        if (!list.isEmpty()) {
            indexBuilding(indexpath, idpath, list);
        }
        // 4. Search
        searchFile(content, key);
        return null;
    }

    /**
     * Return the shared reader, reopening it only if the index changed since it was opened.
     */
    private DirectoryReader getIndexReader() throws IOException {
        if (reader == null) {
            reader = DirectoryReader.open(FSDirectory.open(new File(indexpath)));
        } else {
            DirectoryReader changed = DirectoryReader.openIfChanged(reader); // null if unchanged
            if (changed != null) {
                reader.close(); // close the stale reader
                reader = changed;
            }
        }
        return reader;
    }

    /**
     * Search the field named {@code field} for {@code keyword} and print the matches.
     */
    public void searchFile(String field, String keyword) {
        try {
            IndexSearcher searcher = new IndexSearcher(getIndexReader());
            // The parser analyzes the keyword with the same analyzer used at index time
            QueryParser parser = new QueryParser(LUCENE_VERSION, field,
                    new StandardAnalyzer(LUCENE_VERSION));
            org.apache.lucene.search.Query query = parser.parse(keyword);
            TopDocs tds = searcher.search(query, 10);
            System.out.println("Total hits: " + tds.totalHits);
            for (ScoreDoc sd : tds.scoreDocs) {
                Document doc = searcher.doc(sd.doc); // sd.doc is the document number
                Teacher tt = parse(doc);
                System.out.println("Matching counselor: " + tt.getUsername());
            }
        } catch (ParseException e) {
            e.printStackTrace(); // invalid query syntax: do not search with a null query
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Read the stored id; create the file and fall back to "0" if it is missing or empty
    public static String getStoreId(String path) {
        String storeId = "0";
        try {
            File file = new File(path);
            if (!file.exists()) {
                File parent = file.getParentFile();
                if (parent != null) {
                    parent.mkdirs(); // e.g. D:/index/id
                }
                file.createNewFile();
            }
            try (BufferedReader br = new BufferedReader(new FileReader(file))) {
                String line = br.readLine();
                // Compare contents, not references (storeId == "" was always false)
                if (line != null && !line.trim().isEmpty()) {
                    storeId = line.trim();
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
        return storeId;
    }

    // Load the rows added since the last run (HQL, ordered by tid)
    @SuppressWarnings("unchecked")
    public List<Teacher> getResult(String storeId, Session session) {
        Query query = session.createQuery("from Teacher where tid > ? order by tid asc");
        query.setInteger(0, Integer.parseInt(storeId));
        return query.list();
    }

    // Index the new rows, then record the largest tid in storeIdPath
    public static boolean indexBuilding(String path, String storeIdPath, List<Teacher> list) {
        IndexWriter writer = null;
        try {
            Analyzer luceneAnalyzer = new StandardAnalyzer(LUCENE_VERSION);
            writer = new IndexWriter(FSDirectory.open(new File(path)),
                    new IndexWriterConfig(LUCENE_VERSION, luceneAnalyzer));
            int id = 0;
            for (Teacher t : list) {
                writer.addDocument(addIndex(t));
                id = t.getTid(); // the list is sorted by tid, so this ends at the largest
            }
            System.out.println("Largest indexed id: " + id);
            writer.commit();
            writer.close();
            writer = null;
            // Record the new id only after the documents are committed
            writeStoreId(storeIdPath, Integer.toString(id));
            return true;
        } catch (Exception e) {
            e.printStackTrace();
            System.out.println("Error: " + e.getClass() + "\n message: " + e.getMessage());
            if (writer != null) {
                try {
                    writer.rollback(); // drop uncommitted documents and release the write lock
                } catch (IOException ignored) {
                    // nothing more can be done here
                }
            }
            return false;
        }
    }

    // Convert a Teacher into a Lucene Document.
    // ANALYZED fields are tokenized for full-text search; NOT_ANALYZED fields are indexed as
    // a single term, so TermQuery, deleteDocuments and updateDocument can match them exactly.
    // (This Field constructor is deprecated in 4.x; StringField / TextField are the newer replacements.)
    @SuppressWarnings("deprecation")
    public static Document addIndex(Teacher t) {
        Document doc = new Document();
        // Unique key, so updates and deletes can target exactly one document
        doc.add(new Field("tid", String.valueOf(t.getTid()), Field.Store.YES, Field.Index.NOT_ANALYZED));
        doc.add(new Field("areas", t.getAreas(), Field.Store.YES, Field.Index.ANALYZED));
        doc.add(new Field("imageUrl", t.getImageUrl(), Field.Store.YES, Field.Index.NOT_ANALYZED));
        doc.add(new Field("indentity", t.getIndentity(), Field.Store.YES, Field.Index.NOT_ANALYZED));
        doc.add(new Field("instroduce", t.getInstroduce(), Field.Store.YES, Field.Index.ANALYZED));
        doc.add(new Field("number", t.getNumber(), Field.Store.YES, Field.Index.NOT_ANALYZED));
        doc.add(new Field("quality", t.getQuality(), Field.Store.YES, Field.Index.NOT_ANALYZED));
        doc.add(new Field("tell", t.getTell(), Field.Store.YES, Field.Index.NOT_ANALYZED));
        doc.add(new Field("time", t.getTime(), Field.Store.YES, Field.Index.NOT_ANALYZED));
        doc.add(new Field("username", t.getUsername(), Field.Store.YES, Field.Index.ANALYZED));
        return doc;
    }

    // Convert a stored Document back into a Teacher
    public static Teacher parse(Document doc) {
        Teacher tea = new Teacher();
        String tid = doc.get("tid");
        if (tid != null) { // documents indexed before the tid field existed do not have it
            tea.setTid(Integer.parseInt(tid));
        }
        tea.setImageUrl(doc.get("imageUrl"));
        tea.setUsername(doc.get("username"));
        tea.setTell(doc.get("tell"));
        tea.setIndentity(doc.get("indentity"));
        tea.setAreas(doc.get("areas"));
        tea.setQuality(doc.get("quality"));
        tea.setNumber(doc.get("number"));
        tea.setInstroduce(doc.get("instroduce"));
        tea.setTime(doc.get("time"));
        return tea;
    }

    // Write the id into the id file (1.txt), replacing its previous content
    public static boolean writeStoreId(String path, String storeId) {
        System.out.println("Id to store: " + storeId);
        try (PrintWriter out = new PrintWriter(new FileWriter(path))) {
            out.write(storeId);
            return true;
        } catch (IOException e) {
            e.printStackTrace();
            return false;
        }
    }

    // Delete: exact match on Term(key, value), so the field must be NOT_ANALYZED
    public void delete(String key, String value) {
        try (IndexWriter writer = new IndexWriter(FSDirectory.open(new File(indexpath)),
                new IndexWriterConfig(LUCENE_VERSION, new StandardAnalyzer(LUCENE_VERSION)))) {
            writer.deleteDocuments(new Term(key, value));
            writer.commit();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Update: deletes every document matching Term(key, value), then adds the new one.
    // Key it on a unique NOT_ANALYZED field such as tid, or several documents collapse into one.
    public void update(String key, String value, Teacher tt) {
        try (IndexWriter writer = new IndexWriter(FSDirectory.open(new File(indexpath)),
                new IndexWriterConfig(LUCENE_VERSION, new StandardAnalyzer(LUCENE_VERSION)))) {
            writer.updateDocument(new Term(key, value), addIndex(tt));
            writer.commit();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public static void main(String[] args) {
        TeacherIndex in = new TeacherIndex();
        // in.delete("tell", "110");
        Teacher tea = new Teacher();
        tea.setTid(2);
        tea.setUsername("田江南");
        tea.setImageUrl("xxxx");
        tea.setTell("110");
        tea.setIndentity("00000");
        tea.setAreas("人际关系");
        tea.setQuality("iiiiiiii");
        tea.setNumber("123456");
        tea.setTime("5");
        tea.setInstroduce("我是中国人");
        // Update by the unique tid rather than "time", which several teachers may share
        in.update("tid", "2", tea);
    }
}
```
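The incremental scheme in `CreateIndex()` (remember the largest `tid` indexed, and next time fetch only rows with a larger `tid`) can be tried out without a database or Lucene. This stdlib sketch assumes row ids are plain integers standing in for `Teacher.tid`; `HighWaterMark` and `indexNewRows` are made-up names, and the actual indexing step is left as a comment.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// A file remembers the largest id indexed so far (a "high-water mark");
// each run indexes only the rows above it.
class HighWaterMark {
    // A missing or empty file means nothing has been indexed yet.
    static int readLastId(Path file) {
        try {
            if (!Files.exists(file)) return 0;
            String s = new String(Files.readAllBytes(file), StandardCharsets.UTF_8).trim();
            return s.isEmpty() ? 0 : Integer.parseInt(s);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    static void writeLastId(Path file, int id) {
        try {
            Files.write(file, Integer.toString(id).getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Returns the ids indexed in this run, ascending; tableIds stands in for the table's tids.
    static List<Integer> indexNewRows(Path idFile, List<Integer> tableIds) {
        int last = readLastId(idFile);
        List<Integer> fresh = tableIds.stream()
                .filter(id -> id > last) // like "where tid > ?"
                .sorted()                // like "order by tid asc"
                .collect(Collectors.toList());
        if (!fresh.isEmpty()) {
            // (the real code calls writer.addDocument(...) per row and commits here)
            writeLastId(idFile, fresh.get(fresh.size() - 1)); // record only after indexing
        }
        return fresh;
    }

    public static void main(String[] args) throws IOException {
        Path idFile = Paths.get(System.getProperty("java.io.tmpdir"), "lastId-demo.txt");
        Files.deleteIfExists(idFile); // start from a clean slate
        System.out.println(indexNewRows(idFile, Arrays.asList(1, 2, 3)));       // [1, 2, 3]
        System.out.println(indexNewRows(idFile, Arrays.asList(1, 2, 3, 4, 5))); // [4, 5]
        System.out.println(indexNewRows(idFile, Arrays.asList(1, 2, 3, 4, 5))); // []
        Files.deleteIfExists(idFile);
    }
}
```

One limitation follows from the design: a high-water mark only catches rows with new, larger ids. Rows that are later updated or deleted in the table are not picked up, so those changes still have to be pushed to the index explicitly, for example with the `update` and `delete` methods.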