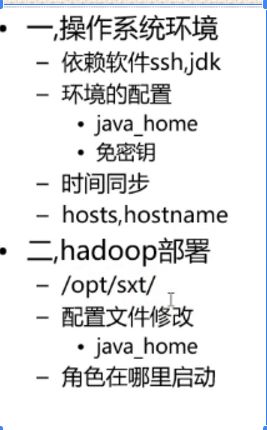

大数据伪分布式平台搭建过程

大数据相关jar包可在https://www.siyang.site/portfolio/出下载

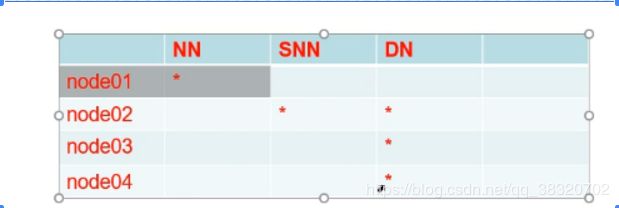

平台结构为上图

-

配置ssh

每个节点操作

ssh localhost

-

配置ssh免秘钥登录

node1配置

ssh-keygen -t dsa -P '' -f /root/.ssh/id_dsa会在.ssh目录中生成2个文件:id_dsa及id_dsa.pub

去.ssh目录将id_dsa.pub复制一份为authorized_keys

cp id_dsa.pub authorized_keys

将node1的id_dsa.pub复制到node2、node3、node4的/root/.ssh下

scp /root/.ssh/id_dsa.pub root@node2:/root/.ssh/node1.pub

scp /root/.ssh/id_dsa.pub root@node3:/root/.ssh/node1.pub

scp /root/.ssh/id_dsa.pub root@node4:/root/.ssh/node1.pubnode2、node3、node4的/root/.ssh/node1.pub下改名为authorized_keys

cp node1.pub authorized_keys-

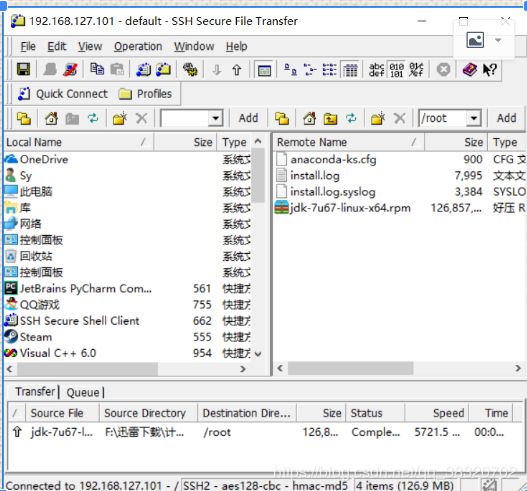

装JDK

每个节点操作

利用sshfile传输jdk至root目录

安装jdk

rpm -i jdk-7u67-linux-x64.rpm安装目录为/usr/java

配置环境变量

/etc/profile文件最下添加

export JAVA_HOME=/usr/java/jdk1.7.0_67

export PATH=$PATH:$JAVA_HOME/bin保存后刷新配置文件

source /etc/profile

-

装hadoop

利用sshfile传输hadoop至root目录

安装hadoop

tar xf hadoop-2.6.5.tar.gz将hadoop移动位置

mkdir /opt/home(每个节点都要创建)

mv hadoop-2.6.5 /opt/home/

-

配置hadoop环境变量

export HADOOP_HOME=/opt/home/hadoop-2.6.5

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin*此处可用scp将/etc/profile 覆盖给node2-4的/etc/profile

scp /etc/profile root@node*:/etc/profile

保存后刷新配置文件

source /etc/profile

-

修改hadoop配置文件

*仅修改node1,node2-4可用scp传过去(需要node2-4创建/opt/home/文件夹)

scp -r hadoop-2.6.5/ root@node1:/opt/home/

修改hadoop-2.6.5/etc/hadoop/hadoop-env.sh

将export JAVA_HOME=${JAVA_HOME}

改为绝对路径export JAVA_HOME=/usr/java/jdk1.7.0_67修改hadoop-2.6.5/etc/hadoop/mapred-env.sh

将# export JAVA_HOME=/home/y/libexec/jdk1.6.0/

export JAVA_HOME=/usr/java/jdk1.7.0_67修改hadoop-2.6.5/etc/hadoop/yarn-env.sh

将# export JAVA_HOME=/home/y/libexec/jdk1.6.0/

改为绝对路径export JAVA_HOME=/usr/java/jdk1.7.0_67修改hadoop-2.6.5/etc/hadoop/core-site.xml

fs.defaultFS

hdfs://node1:9000

hadoop.tmp.dir #hdfs文件目录位置

/var/home/hadoop/full

修改hadoop-2.6.5/etc/hadoop/hdfs-site.xml

dfs.replication

2

dfs.namenode.secondary.http-address

node2:50090

修改hadoop-2.6.5/etc/hadoop/slaves

node2

node3

node4

-

格式化namenode(装完最开始执行一次)

hdfs namenode -format

-

开启hadoop

start-dfs.sh