Running a pseudo-distributed Hadoop test cluster with Docker

- Overview

- Main images

- Component versions

- Building the images

- hadoop-base

- namenode

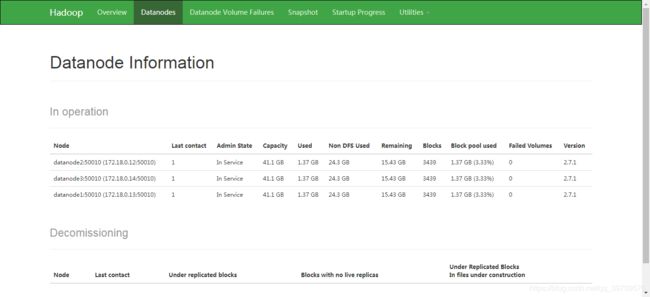

- datanode

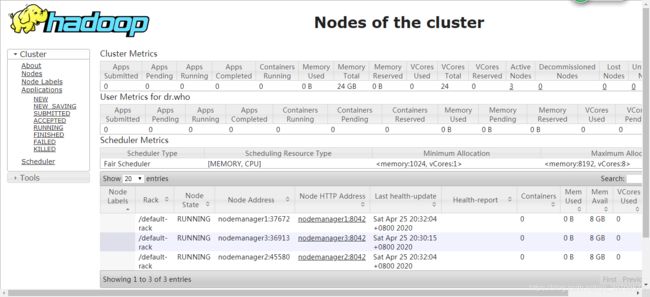

- resourcemanager

- nodemanager

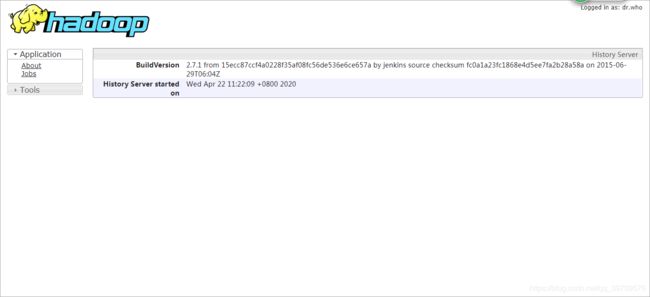

- historyserver

- zookeeper

- Running a pseudo-distributed cluster

- env files loaded by docker-compose.yaml

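Overview

I built this pseudo-distributed Hadoop cluster with Docker while learning big data, because I did not have enough servers. It is meant mainly for learning and testing.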

Main images

- hadoop-base (the base image for all the images below except zookeeper)

- namenode

- datanode

- nodemanager

- resourcemanager

- historyserver

- zookeeper

Component versions

- hadoop 2.7.1

- jdk 7

- zookeeper 3.4.11

- Installation packages: hadoop-images.

Extraction code: jcic

Building the images

hadoop-base

- Dockerfile

FROM centos:6.9

MAINTAINER [email protected]

ADD jdk-7u67-linux-x64.tar.gz /usr/lib

ADD hadoop-2.7.1.tar.gz /usr/lib

ENV JAVA_HOME=/usr/lib/jdk1.7.0_67 \

HADOOP_HOME=/usr/lib/hadoop-2.7.1 \

HADOOP_CONF_DIR=/usr/lib/hadoop-2.7.1/etc/hadoop \

HADOOP_CLASSPATH=$HADOOP_CLASSPATH

ENV PATH=$JAVA_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/bin:$PATH

COPY entrypoint.sh /usr/local/bin/

RUN chmod a+x /usr/local/bin/entrypoint.sh \

&& yum -y install lrzsz zip unzip

EXPOSE 9000 50070 8088

ENTRYPOINT ["/usr/local/bin/entrypoint.sh"]

- entrypoint.sh (container startup script)

#!/bin/bash

service sshd start

if [ ! -d "$HADOOP_HOME/logs" ]; then

mkdir $HADOOP_HOME/logs

fi

cp $HADOOP_HOME/etc/hadoop/mapred-site.xml.template $HADOOP_HOME/etc/hadoop/mapred-site.xml

#CORE

export CORE_fs_defaultFS=${CORE_fs_defaultFS:-hdfs://`hostname -f`:9000}

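function addProperty() {

local path=$1

local name=$2

local value=$3

local entry="<property><name>$name</name><value>${value}</value></property>"

# From here up to the first crossdomain.xml path the original text is missing (its XML markup was stripped); the lines in between are a reconstruction under assumptions, not the author's code.

local escapedEntry=$(echo "$entry" | sed 's/\//\\\//g')

sed -i "/<\/configuration>/ s/.*/${escapedEntry}\n&/" "$path"

}

# Assumed behaviour: every variable named <PREFIX>_<key> becomes a property in <module>-site.xml, with "___" read as "-" and "_" as "."

function addConfigure() {

local module=$1

local envPrefix=$2

local var name

for var in $(env | grep "^${envPrefix}_" | cut -d= -f1); do

name=$(echo "${var#${envPrefix}_}" | sed 's/___/-/g; s/_/./g')

addProperty "$HADOOP_CONF_DIR/$module-site.xml" "$name" "${!var}"

done

}

function crossdomain() {

# Assumed content: a permissive cross-domain policy file for the HDFS web UIs

echo '<?xml version="1.0"?><cross-domain-policy><allow-access-from domain="*"/></cross-domain-policy>' > /usr/lib/hadoop-2.7.1/share/hadoop/hdfs/webapps/hdfs/crossdomain.xml

echo '<?xml version="1.0"?><cross-domain-policy><allow-access-from domain="*"/></cross-domain-policy>' > /usr/lib/hadoop-2.7.1/share/hadoop/hdfs/webapps/datanode/crossdomain.xml

}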

addConfigure core CORE

addConfigure hdfs HDFS

addConfigure yarn YARN

addConfigure mapred MAPRED

crossdomain

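# Run the image's CMD if there is one; with no arguments exec does nothing and the tail below keeps the container alive

exec "$@"

tail -f /dev/null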
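The env files shown later use keys such as `YARN_yarn_log___aggregation___enable`. Here is a minimal sketch of how such a key could end up as a property in a `*-site.xml` file. It assumes the naming rule that the keys themselves suggest: the prefix picks the file, `___` stands for `-`, and a single `_` stands for `.`. The helper names `to_property_name` and `with_property` are made up for this example, and it uses `awk` for the insertion, which is not necessarily what the container script does.

```shell
#!/bin/bash
# Hypothetical helper: turn an env key such as YARN_yarn_log___aggregation___enable
# into a Hadoop property name, given its prefix (YARN).
to_property_name() {
  local key=${1#"$2"_}                      # drop the "YARN_" style prefix
  echo "$key" | sed 's/___/-/g; s/_/./g'    # "___" -> "-", then "_" -> "."
}

# Hypothetical helper: print the config file with one more <property> line
# placed just before the closing </configuration> tag.
with_property() {
  local path=$1 name=$2 value=$3
  awk -v entry="<property><name>$name</name><value>$value</value></property>" \
    '/<\/configuration>/ { print entry } { print }' "$path"
}

# Demo on a throwaway file instead of a real core-site.xml / yarn-site.xml.
conf=$(mktemp)
printf '<configuration>\n</configuration>\n' > "$conf"

name=$(to_property_name YARN_yarn_log___aggregation___enable YARN)
with_property "$conf" "$name" true > "$conf.new" && mv "$conf.new" "$conf"

name=$(to_property_name CORE_fs_defaultFS CORE)
with_property "$conf" "$name" hdfs://namenode:9000 > "$conf.new" && mv "$conf.new" "$conf"

cat "$conf"
```

The demo file ends up with two properties, `yarn.log-aggregation-enable` and `fs.defaultFS`, between the `<configuration>` tags. Values containing backslashes would need extra care, because `awk -v` interprets escape sequences.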

namenode

- Dockerfile

FROM registry.team.com/hadoop-base:2.7.1

MAINTAINER [email protected]

ENV HDFS_dfs_namenode_name_dir=/var/hadoop/dfs/name

VOLUME /var/hadoop/dfs/name

COPY start-namenode.sh /usr/local/bin

RUN chmod a+x /usr/local/bin/start-namenode.sh

EXPOSE 50070

CMD ["/usr/local/bin/start-namenode.sh"]

- start-namenode.sh

#!/bin/bash

# Format the namenode only on first start, when the name directory has no "current" subdirectory yet

if [ ! -d "$HDFS_dfs_namenode_name_dir/current" ]; then

$HADOOP_HOME/bin/hdfs namenode -format

fi

$HADOOP_HOME/sbin/hadoop-daemon.sh start namenode

tail -f /dev/null

datanode

- Dockerfile

FROM registry.team.com/hadoop-base:2.7.1

MAINTAINER [email protected]

ENV HDFS_dfs_datanode_data_dir=/var/hadoop/dfs/data

VOLUME /var/hadoop/dfs/data

COPY start-datanode.sh /usr/local/bin/

RUN chmod a+x /usr/local/bin/start-datanode.sh

EXPOSE 50075

CMD ["/usr/local/bin/start-datanode.sh"]

- start-datanode.sh

#!/bin/bash

$HADOOP_HOME/sbin/hadoop-daemon.sh start datanode

tail -f /dev/null

resourcemanager

- Dockerfile

FROM registry.team.com/hadoop-base:2.7.1

MAINTAINER [email protected]

ENV HADOOP_CLASSPATH=$HADOOP_CLASSPATH

COPY start-resourcemanager.sh /usr/local/bin

RUN chmod a+x /usr/local/bin/start-resourcemanager.sh

EXPOSE 8088

CMD ["/usr/local/bin/start-resourcemanager.sh"]

- start-resourcemanager.sh

#!/bin/bash

$HADOOP_HOME/sbin/yarn-daemon.sh start resourcemanager

tail -f /dev/null

nodemanager

- Dockerfile

FROM registry.team.com/hadoop-base:2.7.1

MAINTAINER [email protected]

ENV HADOOP_CLASSPATH=$HADOOP_CLASSPATH

COPY start-nodemanager.sh /usr/local/bin

RUN chmod a+x /usr/local/bin/start-nodemanager.sh

CMD ["/usr/local/bin/start-nodemanager.sh"]

- start-nodemanager.sh

#!/bin/bash

$HADOOP_HOME/sbin/yarn-daemon.sh start nodemanager

tail -f /dev/null

historyserver

- Dockerfile

FROM registry.team.com/hadoop-base:2.7.1

MAINTAINER [email protected]

COPY start-historyserver.sh /usr/local/bin

RUN chmod a+x /usr/local/bin/start-historyserver.sh

EXPOSE 19888

CMD ["/usr/local/bin/start-historyserver.sh"]

- start-historyserver.sh

#!/bin/bash

$HADOOP_HOME/sbin/mr-jobhistory-daemon.sh start historyserver

tail -f /dev/null
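The five Hadoop images all start `FROM registry.team.com/hadoop-base:2.7.1`, so the base image has to exist before any of them can be built. The sketch below prints the build commands in a workable order. It assumes that each image's files live in a directory named after the image, and it uses the `latest` tag for the derived images because the compose file later in this post refers to them that way. The directory layout is an assumption, not something stated above.

```shell
#!/bin/bash
# Registry prefix and the hadoop-base tag are taken from the FROM lines above.
REGISTRY=registry.team.com

# Print one "docker build" command per image, base image first.
# Assumption: each image's files sit in a directory named after the image.
build_commands() {
  local image
  echo "docker build -t $REGISTRY/hadoop-base:2.7.1 hadoop-base"
  for image in namenode datanode resourcemanager nodemanager historyserver; do
    echo "docker build -t $REGISTRY/$image:latest $image"
  done
}

build_commands
```

Piping the output into `sh` (`build_commands | sh`) would run the builds. The zookeeper image is left out here because it builds from `centos:6.9` and does not depend on hadoop-base.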

zookeeper

- Dockerfile

FROM centos:6.9

MAINTAINER [email protected]

ADD zookeeper-3.4.11.tar.gz /usr/lib

ADD jdk-7u67-linux-x64.tar.gz /usr/lib

ENV ZOOKEEPER_HOME=/usr/lib/zookeeper-3.4.11 \

JAVA_HOME=/usr/lib/jdk1.7.0_67

ENV PATH=$JAVA_HOME/bin:$ZOOKEEPER_HOME/bin:$PATH

COPY run.sh /usr/local/bin

RUN chmod a+x /usr/local/bin/run.sh \

&& mkdir -p /var/lib/zookeeper

VOLUME ["/var/lib/zookeeper"]

EXPOSE 2181

ENTRYPOINT ["/usr/local/bin/run.sh"]

- run.sh

#!/usr/bin/env bash

start-zookeeper() {

# Set ZOO_CFG before the check so the appends below also work when the file already exists

ZOO_CFG=$ZOOKEEPER_HOME/conf/zoo.cfg

if [ ! -e "$ZOO_CFG" ]; then

touch "$ZOO_CFG"

chmod a+x "$ZOO_CFG"

echo "zoo.cfg created"

else

echo "zoo.cfg not created: the file already exists"

fi

echo "clientPort=2181" >> ${ZOO_CFG}

echo "dataDir=/var/lib/zookeeper" >> ${ZOO_CFG}

echo "dataLogDir=/var/lib/zookeeper/logs" >> ${ZOO_CFG}

echo "tickTime=2000" >> ${ZOO_CFG}

echo "initLimit=5" >> ${ZOO_CFG}

echo "syncLimit=2" >> ${ZOO_CFG}

echo "autopurge.snapRetainCount=3" >> ${ZOO_CFG}

echo "autopurge.purgeInterval=0" >> ${ZOO_CFG}

echo "maxClientCnxns=60" >> ${ZOO_CFG}

echo "${SERVER_ID}" > /var/lib/zookeeper/myid

env | grep ADDITIONAL_ZOOKEEPER | cut -d= -f2,3 >> ${ZOO_CFG}

}

start-zookeeper

$ZOOKEEPER_HOME/bin/zkServer.sh start-foreground
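The `env | grep ADDITIONAL_ZOOKEEPER | cut -d= -f2,3` line in run.sh is easy to misread. `cut` splits each line on `=`, so for `ADDITIONAL_ZOOKEEPER_1=server.1=zookeeper1:2888:3888` field 1 is the variable name and fields 2 and 3 are `server.1` and `zookeeper1:2888:3888`. Asking for fields 2 and 3 joins them again with `=`, which gives the `server.N=host:port:port` line that zoo.cfg expects. A small sketch that can be tried outside the container follows. The `server_lines` wrapper and the `sort` are additions for the demo; the variable values match the ones the compose file sets.

```shell
#!/bin/bash
# Same pipeline as in run.sh, plus a sort so the demo output is stable
# (the sort is an addition here; run.sh does not sort).
server_lines() {
  env | grep ADDITIONAL_ZOOKEEPER | cut -d= -f2,3 | sort
}

export ADDITIONAL_ZOOKEEPER_1=server.1=zookeeper1:2888:3888
export ADDITIONAL_ZOOKEEPER_2=server.2=zookeeper2:2888:3888
export ADDITIONAL_ZOOKEEPER_3=server.3=zookeeper3:2888:3888

server_lines
```

This prints the three `server.N=zookeeperN:2888:3888` lines, one per ensemble member.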

Running a pseudo-distributed cluster

- docker-compose.yaml

version: '2'

networks:
  net:

services:
  zookeeper1:
    image: registry.team.com/zookeeper:latest
    container_name: zookeeper1
    networks:
      - net
    environment:
      - SERVER_ID=1
      - ADDITIONAL_ZOOKEEPER_1=server.1=zookeeper1:2888:3888
      - ADDITIONAL_ZOOKEEPER_2=server.2=zookeeper2:2888:3888
      - ADDITIONAL_ZOOKEEPER_3=server.3=zookeeper3:2888:3888
    volumes:
      - /home/vdp/fc/zookeeper/zookeeper1:/var/lib/zookeeper
    hostname: zookeeper1

  zookeeper2:
    image: registry.team.com/zookeeper:latest
    container_name: zookeeper2
    networks:
      - net
    environment:
      - SERVER_ID=2
      - ADDITIONAL_ZOOKEEPER_1=server.1=zookeeper1:2888:3888
      - ADDITIONAL_ZOOKEEPER_2=server.2=zookeeper2:2888:3888
      - ADDITIONAL_ZOOKEEPER_3=server.3=zookeeper3:2888:3888
    volumes:
      - /home/vdp/fc/zookeeper/zookeeper2:/var/lib/zookeeper
    hostname: zookeeper2

  zookeeper3:
    image: registry.team.com/zookeeper:latest
    container_name: zookeeper3
    networks:
      - net
    environment:
      - SERVER_ID=3
      - ADDITIONAL_ZOOKEEPER_1=server.1=zookeeper1:2888:3888
      - ADDITIONAL_ZOOKEEPER_2=server.2=zookeeper2:2888:3888
      - ADDITIONAL_ZOOKEEPER_3=server.3=zookeeper3:2888:3888
    volumes:
      - /home/vdp/fc/zookeeper/zookeeper3:/var/lib/zookeeper
    hostname: zookeeper3

  namenode:
    image: registry.team.com/namenode:latest
    container_name: namenode
    networks:
      - net
    ports:
      - 50070:50070
      - 14000:14000
    volumes:
      - /home/vdp/fc/namenode:/var/hadoop/dfs/name
      - /home/vdp/fc-logs/namenode:/usr/lib/hadoop-2.7.1/logs
    env_file:
      - ./hadoop.env
    hostname: namenode

  datanode1:
    image: registry.team.com/datanode:latest
    container_name: datanode1
    networks:
      - net
    depends_on:
      - namenode
    ports:
      - 50071:50071
    volumes:
      - /home/vdp/fc/datanode1:/var/hadoop/dfs/data
      - /home/vdp/fc-logs/datanode1:/usr/lib/hadoop-2.7.1/logs
    env_file:
      - ./datanode1.env
    hostname: datanode1

  datanode2:
    image: registry.team.com/datanode:latest
    container_name: datanode2
    networks:
      - net
    depends_on:
      - namenode
    ports:
      - 50072:50072
    volumes:
      - /home/vdp/fc/datanode2:/var/hadoop/dfs/data
      - /home/vdp/fc-logs/datanode2:/usr/lib/hadoop-2.7.1/logs
    env_file:
      - ./datanode2.env
    hostname: datanode2

  datanode3:
    image: registry.team.com/datanode:latest
    container_name: datanode3
    networks:
      - net
    depends_on:
      - namenode
    ports:
      - 50073:50073
    volumes:
      - /home/vdp/fc/datanode3:/var/hadoop/dfs/data
      - /home/vdp/fc-logs/datanode3:/usr/lib/hadoop-2.7.1/logs
    env_file:
      - ./datanode3.env
    hostname: datanode3

  resourcemanager:
    image: registry.team.com/resourcemanager:latest
    container_name: resourcemanager
    networks:
      - net
    ports:
      - 9088:8088
    volumes:
      - /home/vdp/fc-logs/resourcemanager:/usr/lib/hadoop-2.7.1/logs
    env_file:
      - ./hadoop.env
    hostname: resourcemanager
    depends_on:
      - nodemanager1
      - nodemanager2
      - nodemanager3

  nodemanager1:
    image: registry.team.com/nodemanager:latest
    container_name: nodemanager1
    networks:
      - net
    volumes:
      - /home/vdp/fc-logs/nodemanager1:/usr/lib/hadoop-2.7.1/logs
    env_file:
      - ./hadoop.env
    hostname: nodemanager1

  nodemanager2:
    image: registry.team.com/nodemanager:latest
    container_name: nodemanager2
    networks:
      - net
    volumes:
      - /home/vdp/fc-logs/nodemanager2:/usr/lib/hadoop-2.7.1/logs
    env_file:
      - ./hadoop.env
    hostname: nodemanager2

  nodemanager3:
    image: registry.team.com/nodemanager:latest
    container_name: nodemanager3
    networks:
      - net
    volumes:
      - /home/vdp/fc-logs/nodemanager3:/usr/lib/hadoop-2.7.1/logs
    env_file:
      - ./hadoop.env
    hostname: nodemanager3

  historyserver:
    image: registry.team.com/historyserver:latest
    container_name: historyserver
    networks:
      - net
    ports:
      - 29888:19888
    volumes:
      - /home/vdp/fc-logs/historyserver:/usr/lib/hadoop-2.7.1/logs
    env_file:
      - ./hadoop.env
    hostname: historyserver
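Every service above bind-mounts host directories under `/home/vdp/fc` and `/home/vdp/fc-logs`. Docker normally creates missing host paths for this short volume syntax, but they then belong to root, so it can be convenient to create them up front. Here is a minimal sketch under that assumption. The `make_dirs` function and its root argument are made up for this example; the directory names are copied from the compose file.

```shell
#!/bin/bash
# Create every host directory that the compose file bind-mounts, under a given root.
make_dirs() {
  local root=$1 i name
  mkdir -p "$root/fc/namenode"
  for i in 1 2 3; do
    mkdir -p "$root/fc/zookeeper/zookeeper$i" "$root/fc/datanode$i"
  done
  for name in namenode datanode1 datanode2 datanode3 resourcemanager \
              nodemanager1 nodemanager2 nodemanager3 historyserver; do
    mkdir -p "$root/fc-logs/$name"
  done
}

# Demo against a throwaway directory; on the real host the root would be /home/vdp.
demo_root=$(mktemp -d)
make_dirs "$demo_root"
find "$demo_root" -mindepth 2 -type d | sort
```

On the real host this would be `make_dirs /home/vdp`, followed by `docker-compose up -d` in the directory that holds docker-compose.yaml and the env files, and `docker-compose ps` to check that the containers are up.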

env files loaded by docker-compose.yaml

- hadoop.env

CORE_fs_defaultFS=hdfs://namenode:9000

CORE_hadoop_proxyuser_root_hosts=*

CORE_hadoop_proxyuser_root_groups=*

HDFS_dfs_webhdfs_enabled=true

HDFS_dfs_permissions_enabled=false

HDFS_dfs_permissions=false

HDFS_dfs_client_use_datanode_hostname=true

HDFS_dfs_datanode_use_datanode_hostname=true

HDFS_dfs_replication=3

HDFS_dfs_http_address=0.0.0.0:50070

YARN_yarn_log___aggregation___enable=true

YARN_yarn_resourcemanager_recovery_enabled=true

YARN_yarn_nm_liveness___monitor_expiry___interval___ms=60000

YARN_yarn_resourcemanager_store_class=org.apache.hadoop.yarn.server.resourcemanager.recovery.FileSystemRMStateStore

YARN_yarn_nodemanager_remote___app___log___dir=/app-logs

YARN_yarn_nodemanager_aux___services=mapreduce_shuffle

YARN_yarn_resourcemanager_hostname=resourcemanager

YARN_yarn_resourcemanager_webapp_address=resourcemanager:8088

YARN_yarn_resourcemanager_scheduler_class=org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler

YARN_yarn_nodemanager_aux___services_mapreduce_shuffle_class=org.apache.hadoop.mapred.ShuffleHandler

YARN_yarn_resourcemanager_system___metrics___publisher_enabled=true

YARN_yarn_resourcemanager_address=resourcemanager:8032

YARN_yarn_resourcemanager_scheduler_address=resourcemanager:8030

YARN_yarn_resourcemanager_resource___tracker_address=resourcemanager:8031

YARN_yarn_resourcemanager_bind___host=0.0.0.0

MAPRED_mapreduce_framework_name=yarn

MAPRED_mapreduce_jobhistory_address=historyserver:10020

MAPRED_mapreduce_jobhistory_webapp_address=historyserver:19888

- datanode1.env

CORE_fs_defaultFS=hdfs://namenode:9000

HDFS_dfs_webhdfs_enabled=true

HDFS_dfs_permissions_enabled=false

HDFS_dfs_permissions=false

HDFS_dfs_client_use_datanode_hostname=true

HDFS_dfs_datanode_use_datanode_hostname=true

HDFS_dfs_replication=3

HDFS_dfs_datanode_http_address=0.0.0.0:50071

YARN_yarn_log___aggregation___enable=true

YARN_yarn_resourcemanager_recovery_enabled=true

YARN_yarn_nm_liveness___monitor_expiry___interval___ms=60000

YARN_yarn_resourcemanager_store_class=org.apache.hadoop.yarn.server.resourcemanager.recovery.FileSystemRMStateStore

YARN_yarn_nodemanager_remote___app___log___dir=/app-logs

YARN_yarn_nodemanager_aux___services=mapreduce_shuffle

YARN_yarn_resourcemanager_hostname=resourcemanager

YARN_yarn_resourcemanager_webapp_address=resourcemanager:8088

YARN_yarn_resourcemanager_scheduler_class=org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler

YARN_yarn_nodemanager_aux___services_mapreduce_shuffle_class=org.apache.hadoop.mapred.ShuffleHandler

YARN_yarn_resourcemanager_system___metrics___publisher_enabled=true

YARN_yarn_resourcemanager_address=resourcemanager:8032

YARN_yarn_resourcemanager_scheduler_address=resourcemanager:8030

YARN_yarn_resourcemanager_resource___tracker_address=resourcemanager:8031

YARN_yarn_resourcemanager_bind___host=0.0.0.0

MAPRED_mapreduce_framework_name=yarn

MAPRED_mapreduce_jobhistory_address=historyserver:10020

MAPRED_mapreduce_jobhistory_webapp_address=historyserver:19888

- datanode2.env

CORE_fs_defaultFS=hdfs://namenode:9000

HDFS_dfs_webhdfs_enabled=true

HDFS_dfs_permissions_enabled=false

HDFS_dfs_permissions=false

HDFS_dfs_client_use_datanode_hostname=true

HDFS_dfs_datanode_use_datanode_hostname=true

HDFS_dfs_replication=3

HDFS_dfs_datanode_http_address=0.0.0.0:50072

YARN_yarn_log___aggregation___enable=true

YARN_yarn_resourcemanager_recovery_enabled=true

YARN_yarn_nm_liveness___monitor_expiry___interval___ms=60000

YARN_yarn_resourcemanager_store_class=org.apache.hadoop.yarn.server.resourcemanager.recovery.FileSystemRMStateStore

YARN_yarn_nodemanager_remote___app___log___dir=/app-logs

YARN_yarn_nodemanager_aux___services=mapreduce_shuffle

YARN_yarn_resourcemanager_hostname=resourcemanager

YARN_yarn_resourcemanager_webapp_address=resourcemanager:8088

YARN_yarn_resourcemanager_scheduler_class=org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler

YARN_yarn_nodemanager_aux___services_mapreduce_shuffle_class=org.apache.hadoop.mapred.ShuffleHandler

YARN_yarn_resourcemanager_system___metrics___publisher_enabled=true

YARN_yarn_resourcemanager_address=resourcemanager:8032

YARN_yarn_resourcemanager_scheduler_address=resourcemanager:8030

YARN_yarn_resourcemanager_resource___tracker_address=resourcemanager:8031

YARN_yarn_resourcemanager_bind___host=0.0.0.0

MAPRED_mapreduce_framework_name=yarn

MAPRED_mapreduce_jobhistory_address=historyserver:10020

MAPRED_mapreduce_jobhistory_webapp_address=historyserver:19888

- datanode3.env

CORE_fs_defaultFS=hdfs://namenode:9000

HDFS_dfs_webhdfs_enabled=true

HDFS_dfs_permissions_enabled=false

HDFS_dfs_permissions=false

HDFS_dfs_client_use_datanode_hostname=true

HDFS_dfs_datanode_use_datanode_hostname=true

HDFS_dfs_replication=3

HDFS_dfs_datanode_http_address=0.0.0.0:50073

YARN_yarn_log___aggregation___enable=true

YARN_yarn_resourcemanager_recovery_enabled=true

YARN_yarn_nm_liveness___monitor_expiry___interval___ms=60000

YARN_yarn_resourcemanager_store_class=org.apache.hadoop.yarn.server.resourcemanager.recovery.FileSystemRMStateStore

YARN_yarn_nodemanager_remote___app___log___dir=/app-logs

YARN_yarn_nodemanager_aux___services=mapreduce_shuffle

YARN_yarn_resourcemanager_hostname=resourcemanager

YARN_yarn_resourcemanager_webapp_address=resourcemanager:8088

YARN_yarn_resourcemanager_scheduler_class=org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler

YARN_yarn_nodemanager_aux___services_mapreduce_shuffle_class=org.apache.hadoop.mapred.ShuffleHandler

YARN_yarn_resourcemanager_system___metrics___publisher_enabled=true

YARN_yarn_resourcemanager_address=resourcemanager:8032

YARN_yarn_resourcemanager_scheduler_address=resourcemanager:8030

YARN_yarn_resourcemanager_resource___tracker_address=resourcemanager:8031

YARN_yarn_resourcemanager_bind___host=0.0.0.0

MAPRED_mapreduce_framework_name=yarn

MAPRED_mapreduce_jobhistory_address=historyserver:10020

MAPRED_mapreduce_jobhistory_webapp_address=historyserver:19888
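The three datanode files differ from hadoop.env in only two ways: they leave out the `CORE_hadoop_proxyuser_*` lines, and in place of `HDFS_dfs_http_address` they carry `HDFS_dfs_datanode_http_address=0.0.0.0:5007N`. They could therefore be generated instead of maintained by hand. The sketch below shows one way to do that; `make_datanode_env` is a name made up for this example, and the demo runs on a shortened hadoop.env in a temporary directory.

```shell
#!/bin/bash
# Derive a datanode env file from hadoop.env.
# $1: path to hadoop.env, $2: datanode number (1, 2 or 3)
make_datanode_env() {
  local base=$1 n=$2
  # Drop the proxyuser lines, then swap the namenode HTTP address line
  # for the per-datanode HTTP address on port 5007<n>.
  grep -v '^CORE_hadoop_proxyuser_' "$base" \
    | sed "s/^HDFS_dfs_http_address=.*/HDFS_dfs_datanode_http_address=0.0.0.0:5007$n/"
}

# Demo with a shortened hadoop.env in a throwaway directory.
dir=$(mktemp -d)
cat > "$dir/hadoop.env" <<'EOF'
CORE_fs_defaultFS=hdfs://namenode:9000
CORE_hadoop_proxyuser_root_hosts=*
HDFS_dfs_replication=3
HDFS_dfs_http_address=0.0.0.0:50070
MAPRED_mapreduce_framework_name=yarn
EOF

for n in 1 2 3; do
  make_datanode_env "$dir/hadoop.env" "$n" > "$dir/datanode$n.env"
done
cat "$dir/datanode2.env"
```

For the shortened input, datanode2.env keeps `CORE_fs_defaultFS`, `HDFS_dfs_replication` and `MAPRED_mapreduce_framework_name` unchanged and gains `HDFS_dfs_datanode_http_address=0.0.0.0:50072`.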