[Kylin] Installing and Deploying Kylin

Contents

Prerequisites

Dependencies

Cluster plan

Installing the required HBase 1.1.1

Installing and deploying Kylin

-

Prerequisites

1. Hadoop is installed and running

2. ZooKeeper is installed and running

3. Spark is installed
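Before continuing, it can help to confirm that the prerequisite tools are actually reachable from the shell. The following is a minimal sketch, not part of the original procedure: `missing_cmds` is a hypothetical helper name, and the command names in the usage comment assume that the Hadoop, ZooKeeper, and Spark `bin` directories are already on `PATH`.

```shell
#!/bin/sh
# Print every command from the argument list that cannot be found on PATH.
# Prints nothing when all of them are available.
missing_cmds() {
  for c in "$@"; do
    command -v "$c" >/dev/null 2>&1 || printf '%s\n' "$c"
  done
}

# Example call on a cluster node (command names are assumptions):
#   missing_cmds hadoop hdfs yarn zkServer.sh spark-submit
```

An empty result means every listed command was found; any name that is printed still needs to be installed or added to `PATH`.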

-

Dependencies

| Software | Version |
| --- | --- |
| Apache hbase-1.1.1-bin.tar.gz | 1.1.1 |
| spark-2.2.0-bin-2.6.0-cdh5.14.0.tgz | 2.2.0-bin-cdh5.14.0 |
| apache-kylin-2.6.3-bin-hbase1x.tar.gz | 2.6.3 |

-

Cluster plan

For now Kylin only needs to be configured on a single node.

| Host name | IP | Daemons |
| --- | --- | --- |
| node1 | 192.168.88.120 | NameNode DataNode RunJar(Hive metastore) RunJar(Hive hiveserver2) QuorumPeerMain HMaster HRegionServer kylin NodeManager |
| node2 | 192.168.88.121 | SecondaryNameNode JobHistoryServer DataNode HRegionServer QuorumPeerMain ResourceManager HistoryServer NodeManager |
| node3 | 192.168.88.122 | HRegionServer NodeManager DataNode QuorumPeerMain |

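Notes:

1. kylin-2.6.3-bin-hbase1x depends on HBase 1.1.1.

2. The HBase setting hbase.zookeeper.quorum must contain host names only, for example node01,node02,node03; entries that carry a port, such as node01:2181, are not allowed.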
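The second note can be checked mechanically. Below is a small sketch, assuming the quorum value is already available as a string; `quorum_ok` is a hypothetical helper name, not something shipped with HBase or Kylin.

```shell
#!/bin/sh
# Return 0 when the quorum string is a plain comma-separated host list,
# and 1 when it is empty, contains a port (":"), or contains spaces.
quorum_ok() {
  case "$1" in
    ''|*:*|*' '*) return 1 ;;
    *) return 0 ;;
  esac
}

# Example:
#   quorum_ok "node01,node02,node03" && echo "quorum format looks fine"
#   quorum_ok "node01:2181"          || echo "remove the port from the quorum"
```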

-

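Installing the required HBase 1.1.1

Upload and extract the archive

tar -zxvf /export/soft/hbase-1.1.1-bin.tar.gz -C /export/servers/

Edit hbase-env.sh and add the JAVA_HOME environment variable

cd /export/servers/hbase-1.1.1/conf/

vim ./hbase-env.sh

# JAVA_HOME must already be set in the environment

export JAVA_HOME=${JAVA_HOME}

# Use the external ZooKeeper ensemble instead of the one bundled with HBase

export HBASE_MANAGES_ZK=false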

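Edit hbase-site.xml and set the following properties inside <configuration>

<property>
  <name>hbase.rootdir</name>
  <value>hdfs://node01:8020/hbase_1.1.1</value>
</property>
<property>
  <name>hbase.cluster.distributed</name>
  <value>true</value>
</property>
<property>
  <name>hbase.master.port</name>
  <value>16000</value>
</property>
<property>
  <name>hbase.zookeeper.property.clientPort</name>
  <value>2181</value>
</property>
<property>
  <name>hbase.master.info.port</name>
  <value>60010</value>
</property>
<property>
  <name>hbase.zookeeper.quorum</name>
  <value>node01,node02,node03</value>
</property>
<property>
  <name>hbase.zookeeper.property.dataDir</name>
  <value>/export/servers/zookeeper-3.4.5-cdh5.14.0/zkdata</value>
</property>
<property>
  <name>hbase.thrift.support.proxyuser</name>
  <value>true</value>
</property>
<property>
  <name>hbase.regionserver.thrift.http</name>
  <value>true</value>
</property>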
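After editing the file, it is easy to double-check a single setting from the command line. The sketch below reads one property from a Hadoop-style XML file with awk. `get_prop` is a hypothetical helper, and it assumes the usual layout where each `<name>` is followed by its `<value>` (on the same line or the next one); it is not a general XML parser.

```shell
#!/bin/sh
# Print the <value> of the property whose <name> equals $2 in file $1.
get_prop() {
  awk -v want="$2" '
    /<name>/  { n = $0; gsub(/.*<name>[ \t]*|[ \t]*<\/name>.*/, "", n) }
    /<value>/ { v = $0; gsub(/.*<value>[ \t]*|[ \t]*<\/value>.*/, "", v)
                if (n == want) print v }
  ' "$1"
}

# Example on the HBase node:
#   get_prop /export/servers/hbase-1.1.1/conf/hbase-site.xml hbase.zookeeper.quorum
```

With the configuration shown above, the example call should print the quorum host list, which makes it easy to confirm that no port slipped into the value.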

Copy Hadoop's core-site.xml and hdfs-site.xml into the HBase conf directory

cd /export/servers/hbase-1.1.1/conf/

cp /export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop/hdfs-site.xml ./

cp /export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop/core-site.xml ./

Edit the regionservers configuration file

cd /export/servers/hbase-1.1.1/conf/

vim regionservers

node01

Configure the HBase environment variables

cd /etc/profile.d/

vim ./hbase.sh

export HBASE_HOME=/export/servers/hbase-1.1.1

export PATH=$PATH:$HBASE_HOME/bin

Reload the environment variables

source /etc/profile

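Delete the old HBase data in ZooKeeper

# Open the ZooKeeper CLI

/export/servers/zookeeper-3.4.5-cdh5.14.0/bin/zkCli.sh

# Inside zkCli, delete the old znode

rmr /hbase

Start HBase

cd /export/servers/hbase-1.1.1/bin/

start-hbase.sh

Verify

# Open the HBase shell

hbase shell

# List the existing tables

list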

-

Installing and deploying Kylin

Upload and extract the archive

tar -zxvf /export/soft/apache-kylin-2.6.3-bin-hbase1x.tar.gz -C /export/servers/

Copy the core Hadoop, Hive, HBase, and Spark configuration files into Kylin's conf directory

cd /export/servers/apache-kylin-2.6.3-bin-hbase1x/conf/

cp /export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop/hdfs-site.xml ./

cp /export/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop/core-site.xml ./

cp /export/servers/hive-1.1.0-cdh5.14.0/conf/hive-site.xml ./

cp /export/servers/spark-2.2.0-bin-2.6.0-cdh5.14.0/conf/spark-defaults.conf.template ./

mv ./spark-defaults.conf.template ./spark-defaults.conf

Add the Hadoop, Hive, HBase, and Spark paths to bin/kylin.sh

cd /export/servers/apache-kylin-2.6.3-bin-hbase1x/bin/

vim ./kylin.sh

export HADOOP_HOME=/export/servers/hadoop-2.6.0-cdh5.14.0

export HIVE_HOME=/export/servers/hive-1.1.0-cdh5.14.0

export HBASE_HOME=/export/servers/hbase-1.1.1

export SPARK_HOME=/export/servers/spark-2.2.0-bin-2.6.0-cdh5.14.0
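A typo in any of these four paths usually only shows up later, when Kylin fails to start. The following sketch reports which of the named variables are unset or do not point to an existing directory. `check_homes` is a hypothetical helper written for this article, not part of Kylin.

```shell
#!/bin/sh
# For each variable NAME given as an argument, print NAME if the variable
# is unset, empty, or does not refer to an existing directory.
check_homes() {
  for name in "$@"; do
    eval "dir=\${$name:-}"
    if [ -z "$dir" ] || [ ! -d "$dir" ]; then
      printf '%s\n' "$name"
    fi
  done
}

# Example, after exporting the variables listed above:
#   check_homes HADOOP_HOME HIVE_HOME HBASE_HOME SPARK_HOME
```

No output means all four directories exist. Kylin also ships its own `bin/check-env.sh`, which can be run as an additional check.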

Configure the Spark environment variables

cd /etc/profile.d/

vim ./spark.sh

export SPARK_HOME=/export/servers/spark-2.2.0-bin-2.6.0-cdh5.14.0

export PATH=$SPARK_HOME/bin:$PATH

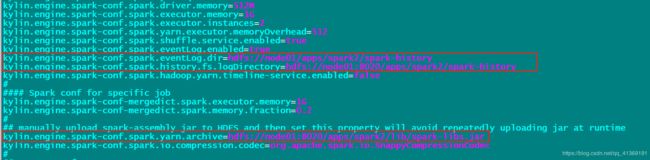

Configure conf/kylin.properties

cd /export/servers/apache-kylin-2.6.3-bin-hbase1x/conf

vim kylin.properties

kylin.engine.spark-conf.spark.eventLog.dir=hdfs://node01:8020/apps/spark2/spark-history

kylin.engine.spark-conf.spark.history.fs.logDirectory=hdfs://node01:8020/apps/spark2/spark-history

kylin.engine.spark-conf.spark.yarn.archive=hdfs://node01:8020/apps/spark2/lib/spark-libs.jar
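If the same settings have to be applied repeatedly (for example when rebuilding the node), editing the file by hand gets error-prone. The sketch below sets a key in a properties file idempotently: it replaces an existing uncommented `key=value` line or appends one if the key is absent. `set_prop` is a hypothetical helper, and it assumes values contain no backslashes.

```shell
#!/bin/sh
# set_prop FILE KEY VALUE
# Replace the line "KEY=..." in FILE with "KEY=VALUE", or append it if missing.
set_prop() {
  file=$1; key=$2; value=$3
  tmp=$(mktemp) || return 1
  awk -F= -v k="$key" -v v="$value" '
    $1 == k { if (!done) print k "=" v; done = 1; next }
    { print }
    END { if (!done) print k "=" v }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the file's permissions
  rc=$?
  rm -f "$tmp"
  return $rc
}

# Example with one of the settings above:
#   set_prop kylin.properties \
#     kylin.engine.spark-conf.spark.eventLog.dir \
#     hdfs://node01:8020/apps/spark2/spark-history
```

Running the same call twice leaves a single line for the key, so the helper is safe to re-run.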

Create Kylin's data directory on HDFS

hdfs dfs -mkdir -p /apps/kylin

Start the cluster

1. Start ZooKeeper

2. Start HDFS

3. Start the YARN cluster

4. Start the HBase cluster

5. Start the Hive metastore

nohup hive --service metastore &

6. Start hiveserver2

nohup hive --service hiveserver2 &

7. Start the MapReduce JobHistory server

mr-jobhistory-daemon.sh start historyserver

8. Start the Spark history server (optional; run from $SPARK_HOME)

sbin/start-history-server.sh

9. Start Kylin

cd /export/servers/apache-kylin-2.6.3-bin-hbase1x/bin/

./kylin.sh start
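Once everything has been started, the daemon list in the cluster plan can be compared against what `jps` reports on each node. The sketch below reads `jps` output from standard input and prints the expected daemons that are not running. `missing_daemons` is a hypothetical helper, and the class names in the usage comment are taken from the node1 row of the plan (Hive and Kylin processes typically appear as `RunJar`, so they are not told apart here).

```shell
#!/bin/sh
# Read `jps` output ("<pid> <MainClass>" per line) from stdin and print each
# expected class name (given as arguments) that does not appear in it.
missing_daemons() {
  running=$(awk '{ print $2 }')
  for d in "$@"; do
    printf '%s\n' "$running" | grep -qxF "$d" || printf '%s\n' "$d"
  done
}

# Example on node1:
#   jps | missing_daemons NameNode DataNode QuorumPeerMain HMaster HRegionServer NodeManager
```

An empty result suggests that all listed daemons are up; any printed name points to the service to restart before Kylin is used.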

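Log in to Kylin

Open the web UI in a browser, substituting the IP of your own Kylin node:

http://192.168.100.201:7070/kylin/models

| Item | Value |
| --- | --- |
| URL | http://IP:7070/kylin |
| Default user name | ADMIN |
| Default password | KYLIN |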