Hadoop Cluster Setup

Materials: zookeeper-3.4.7.tar.gz

Link: http://pan.baidu.com/s/1nvNzJNb Password: 8rcw

hadoop-2.7.1_64bit.tar.gz

Link: http://pan.baidu.com/s/1i4LVGXZ Password: z813

jdk-7u55-linux-i586.tar.gz

Link: http://pan.baidu.com/s/1hsnIjVe Password: zvr8

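1. Prepare six virtual machines (CentOS 6.5). 1 GB of RAM each is recommended.

2. Get networking working on the VMs: http://blog.csdn.net/u010916338/article/details/78486568

3. Permanently disable the firewall on every VM

http://blog.csdn.net/u010916338/article/details/77511988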
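For reference, the firewall on CentOS 6 is the iptables service, so disabling it permanently usually takes two commands: stop it now, and keep it from starting at boot. The sketch below assumes CentOS 6.5 as above and a root shell. disable_firewall and the DRY_RUN switch are my own hypothetical helpers and are not part of any tool.

```shell
# Print instead of execute when DRY_RUN=1, so the commands can be reviewed first.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# disable_firewall: hypothetical helper for CentOS 6 (iptables service, not firewalld).
disable_firewall() {
  run service iptables stop     # stop the firewall immediately
  run chkconfig iptables off    # keep it disabled after reboots
}
```

Run disable_firewall on each of the six VMs.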

3. Permanently change each VM's hostname to big04, big05 … big09

http://blog.csdn.net/u010916338/article/details/78306483
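On CentOS 6 the permanent hostname is stored on the HOSTNAME= line of /etc/sysconfig/network. Below is a sketch of that edit. set_hostname is a hypothetical helper, and its optional second argument only exists so the function can be tried on a scratch file. The file change takes effect at the next boot. To apply the name to the current session as well, run hostname big04 (big05, and so on).

```shell
# set_hostname NAME [FILE]: hypothetical helper; FILE defaults to the CentOS 6 location.
set_hostname() {
  local name=$1 file=${2:-/etc/sysconfig/network}
  if grep -q '^HOSTNAME=' "$file"; then
    # Replace the existing HOSTNAME= line
    sed -i "s/^HOSTNAME=.*/HOSTNAME=$name/" "$file"
  else
    # No HOSTNAME= line yet: append one
    echo "HOSTNAME=$name" >> "$file"
  fi
}
```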

4. Edit the hosts file on every machine

http://blog.csdn.net/u010916338/article/details/78317744

Example:

127.0.0.1 localhost

192.168.1.14 big04

192.168.1.15 big05

192.168.1.16 big06

192.168.1.17 big07

192.168.1.18 big08

192.168.1.19 big09

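After configuring one machine, copy the file over with scp /etc/hosts 192.168.1.15:/etc/hosts

and repeat this for each of the other five machines, changing the target IP each time.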
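The copy step can be run as a loop from the first machine. The sketch below uses the example IPs above. push_hosts and the DRY_RUN switch are hypothetical helpers. Run it as root. Until passwordless login (step 5) is in place, scp asks for each machine's password.

```shell
# Print instead of execute when DRY_RUN=1, so the commands can be reviewed first.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# push_hosts: copy this machine's /etc/hosts to the other five nodes (hypothetical helper).
push_hosts() {
  local ip
  for ip in 192.168.1.15 192.168.1.16 192.168.1.17 192.168.1.18 192.168.1.19; do
    run scp /etc/hosts "$ip:/etc/hosts"
  done
}
```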

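5. Configure passwordless SSH login. This means 36 setups in total: on each machine, set up passwordless login to all 6 machines, itself included.

http://blog.csdn.net/u010916338/article/details/77510826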
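The linked post does this by hand. As a script, each machine generates one RSA key for root and copies it to all six hosts with ssh-copy-id, which asks for each host's password once. Running the script on all six machines gives the 36 setups. The key path matters because dfs.ha.fencing.ssh.private-key-files in step 11 points at /root/.ssh/id_rsa. This is a sketch that assumes you run it as root. setup_ssh_keys and DRY_RUN are hypothetical.

```shell
# Print instead of execute when DRY_RUN=1, so the commands can be reviewed first.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# setup_ssh_keys: run as root on each of the six nodes (hypothetical helper).
# Creates root's RSA key once, then authorizes it on every node, itself included.
setup_ssh_keys() {
  local key="$HOME/.ssh/id_rsa" host
  if [ ! -f "$key" ]; then
    run mkdir -p -m 700 "$HOME/.ssh"
    run ssh-keygen -t rsa -N '' -f "$key"   # empty passphrase, no prompts
  fi
  for host in big04 big05 big06 big07 big08 big09; do
    run ssh-copy-id "root@$host"            # asks for that host's password once
  done
}
```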

6. Node layout:

big04: ZooKeeper, NameNode (active), ResourceManager (active)

big05: ZooKeeper, NameNode (standby)

big06: ZooKeeper, ResourceManager (standby)

big07: DataNode, NodeManager, JournalNode

big08: DataNode, NodeManager, JournalNode

big09: DataNode, NodeManager, JournalNode

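7. Install the JDK and configure its environment on every machine (details omitted)

8. Install and configure ZooKeeper on the first three machines (big04, big05, big06)

http://blog.csdn.net/u010916338/article/category/7275500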
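The linked post covers the ZooKeeper install itself. As a reminder of what a three-node ensemble needs, here is a sketch that writes a minimal conf/zoo.cfg listing big04 to big06, plus the myid file (1 on big04, 2 on big05, 3 on big06). The following are common defaults I am assuming, not values from this post: the install path, the data directory, the tickTime/initLimit/syncLimit values, and the 2888/3888 peer ports. clientPort 2181 matches ha.zookeeper.quorum in step 10. write_zk_conf is a hypothetical helper.

```shell
# write_zk_conf ZK_HOME DATA_DIR MYID  (hypothetical helper)
# Writes a minimal three-node zoo.cfg plus the myid file for this node.
write_zk_conf() {
  local zk_home=$1 data_dir=$2 myid=$3
  mkdir -p "$zk_home/conf" "$data_dir"
  cat > "$zk_home/conf/zoo.cfg" <<EOF
tickTime=2000
initLimit=10
syncLimit=5
dataDir=$data_dir
clientPort=2181
server.1=big04:2888:3888
server.2=big05:2888:3888
server.3=big06:2888:3888
EOF
  # myid must match this host's server.N number: 1 on big04, 2 on big05, 3 on big06
  echo "$myid" > "$data_dir/myid"
}
```

For example, on big04: write_zk_conf /usr/local/soft/zookeeper-3.4.7 /usr/local/soft/zookeeper-3.4.7/data 1 (both paths assumed).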

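9. Install and configure Hadoop on big04

Configure hadoop-env.sh:

set the JDK installation directory (JAVA_HOME)

set the directory that holds the Hadoop configuration files (HADOOP_CONF_DIR)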
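In hadoop-env.sh these two settings are export lines. The sketch below assumes the JDK and Hadoop paths used in step 15 and Hadoop's default etc/hadoop config directory. Adjust both to your own layout.

```shell
# Lines for etc/hadoop/hadoop-env.sh (paths are assumptions matching step 15).
# Set JAVA_HOME explicitly here: daemons started over ssh may not inherit it.
export JAVA_HOME=/home/software/jdk1.8
export HADOOP_CONF_DIR=/usr/local/soft/hadoop-2.7.1/etc/hadoop
```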

10. Configure core-site.xml on big04

Properties to set (property name = value):

fs.defaultFS = hdfs://ns

hadoop.tmp.dir = /usr/local/soft/hadoop-2.7.1/tmp

ha.zookeeper.quorum = big04:2181,big05:2181,big06:2181

hadoop.proxyuser.root.hosts = *

hadoop.proxyuser.root.groups = *

hadoop.proxyuser.zhaoshb.hosts = *

hadoop.proxyuser.zhaoshb.groups = *
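All of Hadoop's *-site.xml files share the same layout: a configuration element holding property elements, each with a name and a value. So the name = value pairs above map directly onto XML. The helper below is a hypothetical sketch (write_site_xml and write_core_site are my own names) that writes such a file from name/value arguments. It does not escape XML special characters, which is fine for the values in this post.

```shell
# write_site_xml FILE NAME VALUE [NAME VALUE ...]  (hypothetical helper)
# Emits Hadoop's configuration/property/name/value layout; values are not XML-escaped.
write_site_xml() {
  local out=$1; shift
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while [ "$#" -ge 2 ]; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    echo '</configuration>'
  } > "$out"
}

# write_core_site CONF_DIR: core-site.xml with the values listed above (overwrites the file).
write_core_site() {
  write_site_xml "$1/core-site.xml" \
    fs.defaultFS hdfs://ns \
    hadoop.tmp.dir /usr/local/soft/hadoop-2.7.1/tmp \
    ha.zookeeper.quorum big04:2181,big05:2181,big06:2181 \
    hadoop.proxyuser.root.hosts '*' \
    hadoop.proxyuser.root.groups '*' \
    hadoop.proxyuser.zhaoshb.hosts '*' \
    hadoop.proxyuser.zhaoshb.groups '*'
}
```

For example, on big04: write_core_site /usr/local/soft/hadoop-2.7.1/etc/hadoop. The same write_site_xml call pattern can produce hdfs-site.xml, mapred-site.xml and yarn-site.xml.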

11. Configure hdfs-site.xml on big04

Properties to set (property name = value):

dfs.nameservices = ns

dfs.ha.namenodes.ns = nn1,nn2

dfs.namenode.rpc-address.ns.nn1 = big04:9000

dfs.namenode.http-address.ns.nn1 = big04:50070

dfs.namenode.rpc-address.ns.nn2 = big05:9000

dfs.namenode.http-address.ns.nn2 = big05:50070

dfs.namenode.shared.edits.dir = qjournal://big07:8485;big08:8485;big09:8485/ns

dfs.journalnode.edits.dir = /usr/local/soft/hadoop-2.7.1/journal

dfs.ha.automatic-failover.enabled = true

dfs.client.failover.proxy.provider.ns = org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider

dfs.ha.fencing.methods = sshfence

dfs.ha.fencing.ssh.private-key-files = /root/.ssh/id_rsa

dfs.namenode.name.dir = file:///usr/local/soft/hadoop-2.7.1/tmp/namenode

dfs.datanode.data.dir = file:///usr/local/soft/hadoop-2.7.1/tmp/datanode

dfs.replication = 3

dfs.permissions = false (dfs.permissions is the older name of dfs.permissions.enabled; Hadoop 2.7 still accepts it)
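The value of dfs.namenode.shared.edits.dir follows a fixed pattern: qjournal://, then host:port pairs for the JournalNodes separated by semicolons, then a journal ID (here the nameservice name ns). The tiny hypothetical helper below builds the URI from a host list. It assumes every JournalNode listens on port 8485 as above.

```shell
# qjournal_uri JOURNAL_ID HOST...  (hypothetical helper; port 8485 assumed for every host)
qjournal_uri() {
  local id=$1 uri="" host
  shift
  for host in "$@"; do
    # Join host:port pairs with semicolons
    uri="${uri:+$uri;}$host:8485"
  done
  echo "qjournal://$uri/$id"
}
```

qjournal_uri ns big07 big08 big09 reproduces the value used above.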

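12. Configure mapred-site.xml on big04 (Hadoop 2.7.1 ships only mapred-site.xml.template, so copy it to mapred-site.xml first)

mapreduce.framework.name = yarn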

13. Configure yarn-site.xml on big04

Properties to set (property name = value):

yarn.resourcemanager.ha.enabled = true

yarn.resourcemanager.ha.rm-ids = rm1,rm2

yarn.resourcemanager.hostname.rm1 = big04

yarn.resourcemanager.hostname.rm2 = big06

yarn.resourcemanager.recovery.enabled = true

yarn.resourcemanager.store.class = org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore

yarn.resourcemanager.zk-address = big04:2181,big05:2181,big06:2181

(separate multiple ZooKeeper servers with commas)

yarn.resourcemanager.cluster-id = yarn-ha

yarn.resourcemanager.hostname = big06

yarn.nodemanager.aux-services = mapreduce_shuffle

yarn.nodemanager.pmem-check-enabled = false

yarn.nodemanager.vmem-check-enabled = false

14. Configure the slaves file on big04

Example:

big07

big08

big09
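The slaves file is plain text with one worker hostname per line. The start scripts (start-dfs.sh, start-yarn.sh, hadoop-daemons.sh) read it to find the worker hosts. Below is a minimal sketch that writes it. write_slaves is a hypothetical helper name, and its argument is your etc/hadoop directory.

```shell
# write_slaves CONF_DIR: one worker hostname per line (hypothetical helper; overwrites the file)
write_slaves() {
  printf '%s\n' big07 big08 big09 > "$1/slaves"
}
```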

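15. Configure the Hadoop environment variables on big04, then copy them to the other five machines (set JAVA_HOME to wherever your JDK is actually installed)

http://blog.csdn.net/u010916338/article/details/78472908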

JAVA_HOME=/home/software/jdk1.8

HADOOP_HOME=/usr/local/soft/hadoop-2.7.1

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

export JAVA_HOME PATH CLASSPATH HADOOP_HOME
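The linked post adds these lines to a profile file. Assuming that file is /etc/profile (my assumption), the sketch below appends the block only if HADOOP_HOME is not already defined there, so rerunning it is harmless. The quoted heredoc keeps $JAVA_HOME and the other variables literal, so they expand at login rather than at write time. append_hadoop_env is a hypothetical helper.

```shell
# append_hadoop_env [PROFILE]: hypothetical helper; PROFILE defaults to /etc/profile.
append_hadoop_env() {
  local profile=${1:-/etc/profile}
  # Skip if the block was already added
  grep -q '^HADOOP_HOME=' "$profile" 2>/dev/null && return 0
  cat >> "$profile" <<'EOF'
JAVA_HOME=/home/software/jdk1.8
HADOOP_HOME=/usr/local/soft/hadoop-2.7.1
CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH
export JAVA_HOME PATH CLASSPATH HADOOP_HOME
EOF
}
```

Afterwards, run source /etc/profile on big04, then copy the file to the other five machines with scp and source it there too.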

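16. Following the configuration files, create the directories that hold the corresponding data

Under the hadoop-2.7.1 directory, create:

① the journal directory

② the tmp directory

③ inside tmp, the namenode and datanode directories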
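These directories are exactly the paths referenced in step 11 by dfs.journalnode.edits.dir, dfs.namenode.name.dir and dfs.datanode.data.dir. The tmp directory itself is hadoop.tmp.dir from step 10. A one-function sketch follows, where make_hadoop_dirs is a hypothetical name.

```shell
# make_hadoop_dirs HADOOP_HOME: hypothetical helper; -p also creates tmp/ and is idempotent.
make_hadoop_dirs() {
  mkdir -p "$1/journal" "$1/tmp/namenode" "$1/tmp/datanode"
}
```

On big04: make_hadoop_dirs /usr/local/soft/hadoop-2.7.1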

17. Use scp to copy the Hadoop installation directory to the other five machines

For example, to copy it to big05, run this from /usr/local/soft:

scp -r hadoop-2.7.1 big05:/usr/local/soft
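Copying to all five targets is just a loop over the hostnames. This sketch assumes the /usr/local/soft layout used throughout the post. push_hadoop and DRY_RUN are hypothetical helpers, and with DRY_RUN=1 the commands are printed instead of run.

```shell
# Print instead of execute when DRY_RUN=1, so the commands can be reviewed first.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# push_hadoop: copy big04's configured Hadoop directory to the other nodes (hypothetical helper).
push_hadoop() {
  local host
  for host in big05 big06 big07 big08 big09; do
    run scp -r /usr/local/soft/hadoop-2.7.1 "$host:/usr/local/soft"
  done
}
```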

18. Start the ZooKeeper cluster

On each of big04, big05 and big06, run from the bin directory of the ZooKeeper installation: sh zkServer.sh start
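Instead of logging in to each ZooKeeper node, you can drive the start from big04 over ssh, then run zkServer.sh status on each node. The node that reports "Mode: leader" is the one step 19 refers to. Give the quorum a few seconds to form before checking status. This sketch assumes passwordless ssh from step 5 and an install path I made up (ZK_HOME, adjust it). It sources /etc/profile because a non-interactive ssh session may not load it, and zkServer.sh needs java. start_zk_cluster and DRY_RUN are hypothetical.

```shell
# Print instead of execute when DRY_RUN=1, so the commands can be reviewed first.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# Assumed install path; override ZK_HOME if ZooKeeper lives elsewhere.
ZK_HOME=${ZK_HOME:-/usr/local/soft/zookeeper-3.4.7}

# start_zk_cluster: run from big04 (hypothetical helper).
start_zk_cluster() {
  local host
  for host in big04 big05 big06; do
    # Load /etc/profile so zkServer.sh can find java in a non-interactive session
    run ssh "$host" ". /etc/profile; $ZK_HOME/bin/zkServer.sh start"
  done
  for host in big04 big05 big06; do
    # Reports "Mode: leader" or "Mode: follower" once the quorum has formed
    run ssh "$host" ". /etc/profile; $ZK_HOME/bin/zkServer.sh status"
  done
}
```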

19. Format the HA state in ZooKeeper

On the ZooKeeper leader node, run:

hdfs zkfc -formatZK

This command creates the HA znode for the nameservice (the ns node) in the ZooKeeper cluster.
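To confirm that hdfs zkfc -formatZK worked, list the HA parent znode with the ZooKeeper command-line client. With the default parent znode /hadoop-ha, the listing should include ns. ZK_HOME is an assumed install path, and check_ha_znode and DRY_RUN are hypothetical helpers.

```shell
# Print instead of execute when DRY_RUN=1, so the commands can be reviewed first.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# Assumed install path; override ZK_HOME if ZooKeeper lives elsewhere.
ZK_HOME=${ZK_HOME:-/usr/local/soft/zookeeper-3.4.7}

# check_ha_znode: list the children of /hadoop-ha on the ensemble (hypothetical helper).
check_ha_znode() {
  run "$ZK_HOME/bin/zkCli.sh" -server big04:2181 ls /hadoop-ha
}
```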

20,启动journalnode集群

在big07,big08,big09节点上执行:

切换到hadoop安装目录的sbin目录下,执行:

sh hadoop-daemons.sh start journalnode

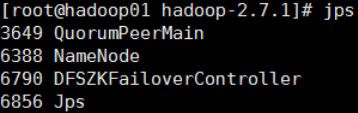

然后执行jps命令查看:
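You can also run the jps check on the three JournalNode hosts from one machine. This sketch assumes passwordless ssh and that jps is on the PATH once /etc/profile is loaded. check_journalnodes is a hypothetical helper. With DRY_RUN=1 it only prints which hosts it would check.

```shell
# check_journalnodes: report whether jps shows a JournalNode on each journal host
# (hypothetical helper; with DRY_RUN=1 it only prints which hosts it would check).
check_journalnodes() {
  local host
  for host in big07 big08 big09; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "would run jps on $host"
      continue
    fi
    if ssh "$host" '. /etc/profile; jps' | grep -qw JournalNode; then
      echo "$host: JournalNode running"
    else
      echo "$host: JournalNode NOT running"
    fi
  done
}
```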

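21. Format the NameNode on big04

On big04, run:

hadoop namenode -format

(In Hadoop 2.x, hdfs namenode -format is the non-deprecated form of the same command.)

22. Start the NameNode on big04

On big04, run:

hadoop-daemon.sh start namenode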

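23. Make the NameNode on big05 the standby NameNode

On big05, run:

hdfs namenode -bootstrapStandby

This copies the freshly formatted metadata from the running NameNode on big04, so the NameNode on big04 must already be up.

24. Start the NameNode on big05

On big05, run:

hadoop-daemon.sh start namenode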

25. Start the DataNodes on big07, big08 and big09

On each of big07, big08 and big09, run: hadoop-daemon.sh start datanode

26. Start ZKFC (jps shows it as the DFSZKFailoverController process)

On big04 and big05, run:

hadoop-daemon.sh start zkfc

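27. Start the primary ResourceManager on big04

On big04, run: start-yarn.sh

start-yarn.sh also starts a NodeManager on every host in the slaves file. Once it succeeds, big07, big08 and big09 should each have a NodeManager process.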

28. Start the standby ResourceManager on big06

On big06, run: yarn-daemon.sh start resourcemanager
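With both NameNodes, both ResourceManagers and the ZKFCs running, hdfs haadmin -getServiceState and yarn rmadmin -getServiceState report whether each service is active or standby. The IDs nn1/nn2 and rm1/rm2 come from hdfs-site.xml and yarn-site.xml. You should see one active and one standby in each pair. show_ha_state and DRY_RUN are hypothetical wrappers.

```shell
# Print instead of execute when DRY_RUN=1, so the commands can be reviewed first.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# show_ha_state: print active/standby for each NameNode and ResourceManager (hypothetical helper).
show_ha_state() {
  local id
  for id in nn1 nn2; do
    run hdfs haadmin -getServiceState "$id"
  done
  for id in rm1 rm2; do
    run yarn rmadmin -getServiceState "$id"
  done
}
```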

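29. Open http://192.168.1.14:50070 to view the NameNode information. In my case it shows standby, because this NameNode had gone down once and was then restarted.

30. Open http://192.168.1.14:8088 to view the YARN ResourceManager web UI.

31. Open http://192.168.1.17:8042 to view the NodeManager.

32. Open http://192.168.1.14:9870 for the NameNode HDFS web UI. Note that 9870 is the default NameNode web port in Hadoop 3.x. In this Hadoop 2.7.1 setup dfs.namenode.http-address is big04:50070, so the HDFS UI here is the one from step 29.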

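33. Once everything is configured, you can shut down the VMs. Later starts of the Hadoop cluster do not need to follow the steps above one by one: http://blog.csdn.net/u010916338/article/details/78487384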
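A commonly used order for later starts is: ZooKeeper first, then start-dfs.sh and start-yarn.sh on big04, then the standby ResourceManager on big06. In an HA setup like this one, start-dfs.sh also starts the JournalNodes and the ZKFCs. start-yarn.sh only starts the local ResourceManager plus the NodeManagers, which is why big06 is handled separately. This is a sketch that assumes passwordless ssh, the PATH from step 15, and an assumed ZooKeeper path (ZK_HOME). It is not necessarily the procedure in the linked post. restart_cluster and DRY_RUN are hypothetical.

```shell
# Print instead of execute when DRY_RUN=1, so the commands can be reviewed first.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# Assumed install path; override ZK_HOME if ZooKeeper lives elsewhere.
ZK_HOME=${ZK_HOME:-/usr/local/soft/zookeeper-3.4.7}

# restart_cluster: run on big04 after the first-time setup (hypothetical helper).
restart_cluster() {
  local host
  for host in big04 big05 big06; do
    run ssh "$host" ". /etc/profile; $ZK_HOME/bin/zkServer.sh start"
  done
  run start-dfs.sh    # NameNodes, DataNodes, JournalNodes and ZKFCs
  run start-yarn.sh   # local ResourceManager plus NodeManagers from slaves
  run ssh big06 ". /etc/profile; yarn-daemon.sh start resourcemanager"
}
```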