Accessing HBase 0.96.2 via the Java API on a Hadoop 2.2 HA Cluster

1. Make sure the Hadoop and HBase services have started successfully

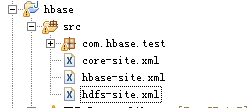
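Besides checking the processes on each node, a quick way to confirm from the client machine that the services are reachable is to open a TCP connection to them. The sketch below is a hypothetical helper (`PortProbe`); it assumes ZooKeeper listens on its default client port 2181 and reuses the host name `S1SF001` and the HMaster address `10.58.51.78:60000` from the commented-out settings in the step 4 code, so adjust the endpoints to your cluster. A successful connection only shows that something is listening on the port, not that HBase is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Hypothetical helper: TCP reachability check for cluster endpoints. */
class PortProbe {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMs. */
    static boolean isReachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Covers unknown hosts, refused connections and timeouts
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed endpoints: ZooKeeper default client port and the HMaster address from the article
        String[][] endpoints = {
            {"S1SF001", "2181"},
            {"10.58.51.78", "60000"},
        };
        for (String[] ep : endpoints) {
            boolean ok = isReachable(ep[0], Integer.parseInt(ep[1]), 2000);
            System.out.println(ep[0] + ":" + ep[1] + (ok ? " reachable" : " NOT reachable"));
        }
    }
}
```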

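2. Put the configuration files hbase-site.xml, core-site.xml and hdfs-site.xml into the src directory of the Java project, so that they end up on the client's classpath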
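To check that the three files are actually found on the classpath, and to see what they contain (for example `hbase.zookeeper.quorum` in hbase-site.xml, or the HA `dfs.nameservices` entry in hdfs-site.xml), the sketch below reads the Hadoop `<configuration><property><name/><value/></property></configuration>` layout with the JDK's DOM parser. `SiteXmlCheck` is a hypothetical, simplified helper, not how Hadoop's `Configuration` parses these files; it ignores elements such as `<final>` and does no variable substitution.

```java
import java.io.InputStream;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Hypothetical helper: reads name/value pairs from a Hadoop-style *-site.xml. */
class SiteXmlCheck {

    /** Parses every <property> element into an ordered name -> value map. */
    static Map<String, String> parse(InputStream in) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(in);
        Map<String, String> props = new LinkedHashMap<>();
        NodeList nodes = doc.getElementsByTagName("property");
        for (int i = 0; i < nodes.getLength(); i++) {
            Element property = (Element) nodes.item(i);
            String name = childText(property, "name");
            if (name != null) {
                props.put(name, childText(property, "value"));
            }
        }
        return props;
    }

    /** Trimmed text of the first descendant with the given tag, or null if absent. */
    private static String childText(Element parent, String tag) {
        NodeList list = parent.getElementsByTagName(tag);
        return list.getLength() == 0 ? null : list.item(0).getTextContent().trim();
    }

    /** Loads a file from the classpath (e.g. copied from src); returns null if it is not there. */
    static Map<String, String> loadFromClasspath(String resource) throws Exception {
        try (InputStream in = SiteXmlCheck.class.getClassLoader().getResourceAsStream(resource)) {
            return in == null ? null : parse(in);
        }
    }

    public static void main(String[] args) throws Exception {
        for (String file : new String[] {"hbase-site.xml", "core-site.xml", "hdfs-site.xml"}) {
            Map<String, String> props = loadFromClasspath(file);
            System.out.println(file + (props == null ? ": NOT on classpath" : ": " + props));
        }
    }
}
```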

3. Add the required dependency jars to the project
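If the client later fails with `ClassNotFoundException` or `NoClassDefFoundError`, a jar is usually missing from the build path. The hypothetical helper below tries to load a few classes without initializing them; the class names come from the imports in step 4 plus `org.apache.zookeeper.ZooKeeper`, and the jar named in each comment is an assumption about the HBase 0.96 / Hadoop 2.2 module layout.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: reports which classes cannot be loaded from the current classpath. */
class DependencyCheck {

    /** Returns the class names from the argument list that fail to load. */
    static List<String> missingClasses(String... classNames) {
        List<String> missing = new ArrayList<>();
        for (String name : classNames) {
            try {
                // initialize = false: only checks that the class and its linkage are available
                Class.forName(name, false, DependencyCheck.class.getClassLoader());
            } catch (ClassNotFoundException | LinkageError e) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        List<String> missing = missingClasses(
            "org.apache.hadoop.hbase.client.HTable",       // assumed: hbase-client
            "org.apache.hadoop.hbase.HBaseConfiguration",  // assumed: hbase-common
            "org.apache.hadoop.conf.Configuration",        // assumed: hadoop-common
            "org.apache.zookeeper.ZooKeeper");             // assumed: zookeeper
        System.out.println(missing.isEmpty() ? "All client classes found" : "Missing: " + missing);
    }
}
```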

4. Test access to the HBase cluster with a Java client

package com.hbase.test;

import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.KeyValue;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.HBaseAdmin;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.filter.CompareFilter;
import org.apache.hadoop.hbase.filter.CompareFilter.CompareOp;
import org.apache.hadoop.hbase.filter.Filter;
import org.apache.hadoop.hbase.filter.FilterList;
import org.apache.hadoop.hbase.filter.RegexStringComparator;
import org.apache.hadoop.hbase.filter.RowFilter;
import org.apache.hadoop.hbase.filter.SingleColumnValueFilter;

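public class TestHBase {

    public static Configuration configuration;

    /**
     * Initializes the client configuration.
     */
    static {
        configuration = HBaseConfiguration.create();
        // Option 1: set the connection properties in code
        // configuration.set("hbase.master", "10.58.51.78:60000");
        // configuration.set("hbase.zookeeper.quorum", "S1SF001,S1SF002,S1SF003,S1SF004,S1SF005");
        // Option 2: load them from hbase-site.xml.
        // HBaseConfiguration.create() already picks up hbase-site.xml from the classpath;
        // addResource(Path) additionally reads a file at this path on the local file system.
        String filePath = "hbase-site.xml";
        Path path = new Path(filePath);
        configuration.addResource(path);
    }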

    /**
     * Creates a table with the given name and a single column family "cf1".
     * If the table already exists, it is disabled and deleted first.
     * @param tableName table name
     * @throws Exception
     */
    public static void createTable(String tableName) throws Exception {
        HBaseAdmin admin = new HBaseAdmin(configuration);
        if (admin.tableExists(tableName)) {
            System.out.println("Table already exists, deleting it first...");
            admin.disableTable(tableName);
            admin.deleteTable(tableName);
        }
        HTableDescriptor tableDescriptor = new HTableDescriptor(tableName);
        tableDescriptor.addFamily(new HColumnDescriptor("cf1"));
        admin.createTable(tableDescriptor);
        admin.close();
    }

    /**
     * Populates the table with test data: rowkeys "10" to "21", each with
     * the columns cf1:column1, cf1:column2 and cf1:column3.
     * @param tableName table name
     * @throws Exception
     */
    public static void initData(String tableName) throws Exception {
        HTable table = new HTable(configuration, tableName);
        for (int i = 10; i < 22; i++) {
            String ii = String.valueOf(i);
            Put put = new Put(ii.getBytes());
            put.add("cf1".getBytes(), "column1".getBytes(), "the first column".getBytes());
            put.add("cf1".getBytes(), "column2".getBytes(), "the second column".getBytes());
            put.add("cf1".getBytes(), "column3".getBytes(), "the third column".getBytes());
            table.put(put);
        }
        table.close();
    }

    /**
     * Deletes a single row.
     * @param tableName table name
     * @param rowKey rowkey of the row to delete
     * @throws Exception
     */
    public static void deleteRow(String tableName, String rowKey) throws Exception {
        HTable table = new HTable(configuration, tableName);
        Delete delete = new Delete(rowKey.getBytes());
        table.delete(delete);
        // Release the table's resources, as the other methods do
        table.close();
    }

    /**
     * Deletes all rows whose rowkeys are in the given list, in one batch call.
     * @param tableName table name
     * @param rowKeys rowkeys of the rows to delete
     * @throws Exception
     */
    public static void deleteRowKeys(String tableName, List<String> rowKeys) throws Exception {
        HTable table = new HTable(configuration, tableName);
        List<Delete> deletes = new ArrayList<Delete>();
        for (String rowKey : rowKeys) {
            deletes.add(new Delete(rowKey.getBytes()));
        }
        table.delete(deletes);
        table.close();
    }

    /**
     * Prints every column (family, qualifier, value) of the row with the given rowkey.
     * @param tableName table name
     * @param rowKey rowkey
     * @throws Exception
     */
    public static void get(String tableName, String rowKey) throws Exception {
        HTable table = new HTable(configuration, tableName);
        Get get = new Get(rowKey.getBytes());
        Result result = table.get(get);
        for (KeyValue kv : result.raw()) {
            System.out.println("cf=" + new String(kv.getFamily())
                    + ", columnName=" + new String(kv.getQualifier())
                    + ", value=" + new String(kv.getValue()));
        }
        table.close();
    }

    /**
     * Range scan (batch query) over the rows between startRow and stopRow.
     * @param tableName table name
     * @param startRow first rowkey of the range
     * @param stopRow end rowkey of the range
     * @throws Exception
     * select column1,column2,column3 from test_table where id between ... and
     */
    public static void scan(String tableName, String startRow, String stopRow) throws Exception {
        HTable table = new HTable(configuration, tableName);
        Scan scan = new Scan();
        scan.addColumn("cf1".getBytes(), "column1".getBytes());
        scan.addColumn("cf1".getBytes(), "column2".getBytes());
        scan.addColumn("cf1".getBytes(), "column3".getBytes());
//rowkey>=a && rowkey