Installing a Kubernetes v1.15.3 High-Availability Cluster on CentOS 7.6 (1810) Minimal


1. Installation plan

Pod IP range: 10.244.0.0/16

ClusterIP (Service) range: 10.99.0.0/16

CoreDNS address: 10.99.110.110

Common installation path: /data/apps/

| Hostname | IP address | Role | Components | Cluster VIP |
| :-------- | :-------- | :--: | :-------- | :--: |
| k8s-master01 | 192.168.0.112 | Master | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kube-proxy, docker, flannel, keepalived, haproxy | 192.168.0.119 |
| k8s-master02 | 192.168.0.175 | Master | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kube-proxy, docker, flannel, keepalived, haproxy | 192.168.0.119 |
| k8s-worker01 | 192.168.0.66 | Node | kubelet, kube-proxy, docker, flannel, node_exporter | / |
| k8s-worker02 | 192.168.0.172 | Node | kubelet, kube-proxy, docker, flannel, node_exporter | / |
| k8s-harbor01 | 192.168.0.116 | Registry | | |

2. Time synchronization

Install the time synchronization service:

yum install chrony -y

Edit /etc/chrony.conf and add the following line:

server time.aliyun.com iburst

Install the required tools:

yum install wget vim gcc git lrzsz net-tools tcpdump telnet rsync -y

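Start chronyd and enable it at boot (the node is rebooted after the kernel upgrade in step 5):

systemctl start chronyd

systemctl enable chronyd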
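Instead of editing /etc/chrony.conf by hand, the server line can be added idempotently, which helps when the step is repeated on every node or after a reinstall. A minimal sketch, assuming bash and GNU grep; the helper name add_chrony_server is hypothetical, and the file path is a parameter so it can be tried on a copy first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "server <host> iburst" to a chrony config
# unless exactly that line is already present (safe to run repeatedly).
add_chrony_server() {
  local conf="$1" server="$2"
  local line="server ${server} iburst"
  # -x: match the whole line, -F: treat the pattern as a fixed string
  if ! grep -qxF -- "$line" "$conf"; then
    printf '%s\n' "$line" >> "$conf"
  fi
}
```

For example, `add_chrony_server /etc/chrony.conf time.aliyun.com`, followed by `systemctl restart chronyd` and `chronyc sources -v` to confirm the server is being used.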

3. Tune kernel parameters

cat > /etc/sysctl.d/k8s.conf << EOF

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_intvl = 30

net.ipv4.tcp_keepalive_probes = 10

net.ipv6.conf.all.disable_ipv6 = 1

net.ipv6.conf.default.disable_ipv6 = 1

net.ipv6.conf.lo.disable_ipv6 = 1

net.ipv4.neigh.default.gc_stale_time = 120

net.ipv4.conf.all.rp_filter = 0

net.ipv4.conf.default.rp_filter = 0

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_announce = 2

net.ipv4.ip_forward = 1

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.tcp_synack_retries = 2

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-arptables = 1

net.netfilter.nf_conntrack_max = 2310720

fs.inotify.max_user_watches=89100

fs.may_detach_mounts = 1

fs.file-max = 52706963

fs.nr_open = 52706963

EOF

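Run the following commands to apply the settings. Load br_netfilter first: the net.bridge.* keys only exist once that module is loaded.

modprobe br_netfilter

sysctl --system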
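sysctl --system prints an error for a key it cannot set but carries on, so a missing module can leave a setting silently unapplied. The sketch below compares the file written above with the values the kernel actually reports under /proc/sys. It assumes bash; check_sysctl is a hypothetical helper, and its second argument exists only so it can be tried against a fake directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare a sysctl.d-style file with the live kernel values.
# Prints one line per mismatching or missing key and returns 1 if there were any.
check_sysctl() {
  local conf="$1" proc="${2:-/proc/sys}" key want have rc=0
  while IFS='=' read -r key want; do
    read -r key <<< "$key"      # trim surrounding whitespace
    read -r want <<< "$want"
    case "$key" in ''|\#*|\;*) continue ;; esac   # skip blank and comment lines
    # net.ipv4.ip_forward -> <proc>/net/ipv4/ip_forward
    if ! have=$(cat "$proc/${key//.//}" 2>/dev/null); then
      echo "$key: not present in this kernel"
      rc=1
      continue
    fi
    if [ "$have" != "$want" ]; then
      echo "$key: want $want, have $have"
      rc=1
    fi
  done < "$conf"
  return "$rc"
}
```

Run it as `check_sysctl /etc/sysctl.d/k8s.conf`. After the kernel upgrade in step 5, some keys (for example fs.may_detach_mounts, which comes from the CentOS 3.10 kernel) may be reported as not present.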

4. Load the IPVS kernel modules

cat << EOF > /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

ipvs_modules_dir="/usr/lib/modules/\`uname -r\`/kernel/net/netfilter/ipvs"

for i in \`ls \$ipvs_modules_dir | sed -r 's#(.*).ko.xz#\1#'\`; do

/sbin/modinfo -F filename \$i &> /dev/null

if [ \$? -eq 0 ]; then

/sbin/modprobe \$i

fi

done

EOF

chmod +x /etc/sysconfig/modules/ipvs.modules

bash /etc/sysconfig/modules/ipvs.modules
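The loop above only strips the .ko.xz suffix, so on a kernel whose modules are uncompressed (.ko) or gzip-compressed it would likely hand names such as ip_vs.ko to modinfo, which looks modules up by name, and load nothing. A hedged variant, assuming bash; ipvs_module_names and load_ipvs_modules are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the module names in an IPVS module directory,
# accepting .ko, .ko.xz and .ko.gz files.
ipvs_module_names() {
  local dir="$1" f name
  for f in "$dir"/*.ko "$dir"/*.ko.xz "$dir"/*.ko.gz; do
    [ -e "$f" ] || continue      # an unmatched glob stays literal; skip it
    name=$(basename "$f")
    name=${name%%.ko*}           # strip .ko, .ko.xz or .ko.gz
    echo "$name"
  done
}

# Load every IPVS module of the running kernel (run as root).
load_ipvs_modules() {
  local dir="/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs" m
  for m in $(ipvs_module_names "$dir"); do
    /sbin/modprobe "$m"
  done
}
```

After `load_ipvs_modules`, `lsmod | grep ip_vs` should list the loaded modules.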

Another way to load IPVS

- Load the IPVS-related kernel modules

- These must be loaded again after every reboot

modprobe ip_vs

modprobe ip_vs_rr

modprobe ip_vs_wrr

modprobe ip_vs_sh

modprobe nf_conntrack_ipv4
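Modules loaded with modprobe are gone after a reboot. On systemd-based CentOS 7, systemd-modules-load reads /etc/modules-load.d/*.conf at boot, so listing the modules there makes them persistent. A sketch; the helper persist_ipvs_modules and the file name ipvs.conf are arbitrary choices, and br_netfilter is added beyond the list above (an assumption of this sketch) because the net.bridge.* sysctl keys from step 3 depend on it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a modules-load.d file so systemd-modules-load
# loads the IPVS modules (plus br_netfilter) on every boot.
persist_ipvs_modules() {
  local dir="${1:-/etc/modules-load.d}"
  mkdir -p "$dir"
  printf '%s\n' ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4 br_netfilter \
    > "$dir/ipvs.conf"
}
```

Apply it immediately with `systemctl restart systemd-modules-load`. On kernels 4.19 and later, nf_conntrack_ipv4 was merged into nf_conntrack, so the list would need nf_conntrack instead.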

5. Upgrade the system kernel

Import the ELRepo GPG key:

[root@K8S-PROD-MASTER-A1 ~]# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

Install the ELRepo yum repository:

[root@K8S-PROD-MASTER-A1 ~]# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

Retrieving http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

Retrieving http://elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

Preparing... #################################[100%]

Updating / installing...

1:elrepo-release-7.0-3.el7.elrepo #################################[100%]

List the available kernel packages:

yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

Install the 4.4.176 kernel (ELRepo's kernel-lt long-term-support package):

yum --enablerepo=elrepo-kernel install kernel-lt kernel-lt-devel -y

Make the new kernel the default boot entry (entry 0), then reboot:

grub2-set-default 0

reboot

Verify that the kernel upgrade succeeded:

[root@K8S-PROD-LB-A1 ~]# uname -rp

4.4.176-1.el7.elrepo.x86_64 x86_64
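To script the check above (for example before continuing on each node), the running kernel version can be compared with the minimum this guide targets. A sketch relying on GNU sort -V; version_ge is a hypothetical helper, and 4.4 is simply the kernel series installed here, not an upstream Kubernetes requirement.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if version $1 >= version $2,
# using GNU sort's version ordering (sort -V).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}
```

Usage: `version_ge "$(uname -r | cut -d- -f1)" 4.4 || echo "still on the old kernel, check grub2-set-default"`; for 4.4.176-1.el7.elrepo.x86_64 the cut yields 4.4.176.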

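6. Open firewall ports (in practice I simply disabled the firewall first instead of configuring rules: across 7 or 8 reinstalls, the set of opened ports was never complete)

Commands: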

#Master Node Inbound

firewall-cmd --permanent --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="6443" accept"

firewall-cmd --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="6443" accept"

firewall-cmd --permanent --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="8443" accept"

firewall-cmd --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="8443" accept"

firewall-cmd --permanent --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="10086" accept"

firewall-cmd --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="10086" accept"

firewall-cmd --permanent --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="udp" port="8285" accept"

firewall-cmd --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="udp" port="8285" accept"

firewall-cmd --permanent --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="udp" port="8472" accept"

firewall-cmd --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="udp" port="8472" accept"

firewall-cmd --permanent --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="2379-2380" accept"

firewall-cmd --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="2379-2380" accept"

#Worker Node Inbound

firewall-cmd --permanent --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="10250" accept"

firewall-cmd --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="10250" accept"

firewall-cmd --permanent --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="10255" accept"

firewall-cmd --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="10255" accept"

firewall-cmd --permanent --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="30000-40000" accept"

firewall-cmd --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="30000-40000" accept"

firewall-cmd --permanent --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="udp" port="8285" accept"

firewall-cmd --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="udp" port="8285" accept"

firewall-cmd --permanent --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="udp" port="8472" accept"

firewall-cmd --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="udp" port="8472" accept"

firewall-cmd --permanent --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="179" accept"

firewall-cmd --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="179" accept"

firewall-cmd --permanent --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="2379-2380" accept"

firewall-cmd --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="2379-2380" accept"

#Ingress

firewall-cmd --permanent --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="80" accept"

firewall-cmd --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="80" accept"

firewall-cmd --permanent --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="443" accept"

firewall-cmd --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="443" accept"

firewall-cmd --permanent --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="8080" accept"

firewall-cmd --zone=public --add-rich-rule="rule family="ipv4" source address="192.168.0.0/24" port protocol="tcp" port="8080" accept"
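Every rule above is entered twice, once with --permanent and once for the runtime configuration. Adding only the permanent rules and then running firewall-cmd --reload gives the same result with half the commands. A sketch, assuming bash; open_ports and the DRY_RUN switch are hypothetical, and the outer single quotes keep each rich rule as one argument with its inner double quotes intact.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add permanent firewalld rich rules that accept the given
# ports from SRC_NET. Set DRY_RUN=1 to print the commands instead of running them.
SRC_NET="${SRC_NET:-192.168.0.0/24}"

open_ports() {
  local proto="$1" port rule
  shift
  for port in "$@"; do
    rule="rule family=\"ipv4\" source address=\"${SRC_NET}\" port protocol=\"${proto}\" port=\"${port}\" accept"
    if [ "${DRY_RUN:-0}" = 1 ]; then
      printf "firewall-cmd --permanent --zone=public --add-rich-rule='%s'\n" "$rule"
    else
      firewall-cmd --permanent --zone=public --add-rich-rule="$rule"
    fi
  done
}
```

For the master inbound rules above: `open_ports tcp 6443 8443 10086 2379-2380`, `open_ports udp 8285 8472`, then `firewall-cmd --reload` to load the permanent rules into the runtime configuration.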

7. Disable SELinux and swap, and set up hostname mappings:

sed -i 's/enforcing/disabled/' /etc/selinux/config

setenforce 0

- Disable swap:

swapoff -a   # temporary, until the next reboot

vim /etc/fstab   # permanent: comment out the swap line
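The permanent step can be done without an editor by commenting out the swap entries. A sketch assuming GNU sed; disable_swap_in_fstab is a hypothetical helper that keeps a .bak copy, and its regex assumes the usual fstab layout in which a swap entry has a whitespace-delimited swap field.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab-format
# file so swap stays off after a reboot. Writes a .bak backup next to the file.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  # Uncommented lines containing " swap " (surrounded by whitespace) get a leading "#".
  sed -r -i.bak 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}
```

After `swapoff -a` and `disable_swap_in_fstab`, `free -m` should report 0 swap, and it stays that way across reboots.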

- Add the hostname-to-IP mappings (remember to set each node's hostname as well)

cat /etc/hosts

192.168.0.112 k8s-master01

192.168.0.175 k8s-master02

192.168.0.66 k8s-worker01

192.168.0.172 k8s-worker02

192.168.0.116 k8s-harbor
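Appending the entries through a helper avoids duplicate lines when this step is repeated on a reinstall. A sketch assuming bash and awk; add_host is hypothetical and only checks whether the hostname is already mapped, not whether the mapped IP is the same.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "<ip> <name>" to a hosts-format file unless
# <name> already appears on a non-comment line.
add_host() {
  local file="$1" ip="$2" name="$3"
  if ! awk -v n="$name" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

For example, `add_host /etc/hosts 192.168.0.112 k8s-master01` and likewise for the other four hosts; the node's own name can be set with `hostnamectl set-hostname k8s-master01`.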

8. Install Docker

yum install -y yum-utils device-mapper-persistent-data lvm2

#wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/dockerce.repo

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum list docker-ce --showduplicates | sort -r

yum install docker-ce-18.06.3.ce-3.el7 containerd.io -y

docker --version

- Configure Docker parameters

/etc/docker/daemon.json

# mkdir /etc/docker

# vi /etc/docker/daemon.json

{

"log-level": "warn",

"selinux-enabled": false,

"insecure-registries": ["192.168.0.116:10500"],

"registry-mirrors": ["https://3laho3y3.mirror.aliyuncs.com"],

"default-shm-size": "128M",

"data-root": "/data/docker",

"max-concurrent-downloads": 10,

"max-concurrent-uploads": 5,

"oom-score-adjust": -1000,

"debug": false,

"live-restore": true,

"exec-opts": ["native.cgroupdriver=cgroupfs"]

}

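192.168.0.116:10500 is the private Docker registry (Harbor) set up for this cluster.

Parameter reference

- log-level: log level [error|warn|info|debug].

- insecure-registries: private registries Docker may use without TLS verification; separate multiple addresses with "," (it is a JSON array).

- registry-mirrors: registry mirrors used by default when pulling images.

- max-concurrent-downloads: maximum number of concurrent downloads for each pull.

- max-concurrent-uploads: maximum number of concurrent uploads for each push.

- live-restore: keep containers running while the Docker daemon is stopped; [true|false].

- native.cgroupdriver: the cgroup driver Docker uses (cgroupfs or systemd), not a storage driver; the kubelet must use the same cgroup driver.

- data-root: Docker's storage directory; defaults to /var/lib/docker.

For the full list of options, see the official documentation: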

https://docs.docker.com/engine/reference/commandline/dockerd/#/linux-configuration-file
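The daemon.json above can also be written without vi, which is handy when repeating the setup on every node. A sketch assuming bash; write_daemon_json is hypothetical, the values are copied unchanged from this guide, and the target directory is a parameter so the file can be reviewed before it goes to /etc/docker.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the daemon.json from this guide into $1
# (default /etc/docker) without opening an editor.
write_daemon_json() {
  local dir="${1:-/etc/docker}"
  mkdir -p "$dir"
  # Quoted 'EOF': nothing inside the here-document is expanded by the shell.
  cat > "$dir/daemon.json" <<'EOF'
{
  "log-level": "warn",
  "selinux-enabled": false,
  "insecure-registries": ["192.168.0.116:10500"],
  "registry-mirrors": ["https://3laho3y3.mirror.aliyuncs.com"],
  "default-shm-size": "128M",
  "data-root": "/data/docker",
  "max-concurrent-downloads": 10,
  "max-concurrent-uploads": 5,
  "oom-score-adjust": -1000,
  "debug": false,
  "live-restore": true,
  "exec-opts": ["native.cgroupdriver=cgroupfs"]
}
EOF
}
```

After `write_daemon_json /etc/docker`, run `systemctl daemon-reload && systemctl restart docker`; `docker info | grep -i 'cgroup driver'` should then report cgroupfs.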

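/etc/sysconfig/docker (in my tests this file is unnecessary once /etc/docker/daemon.json is configured: the docker.service unit below passes only $other_args to dockerd, so the OPTIONS defined here are never used. What matters is getting /etc/docker/daemon.json right.)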

[root@k8s-harbor harbor]# cat /etc/sysconfig/docker

OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false --registry-mirror=https://fzhifedh.mirror.aliyuncs.com --insecure-registry=192.168.0.116:10500'

if [ -z "${DOCKER_CERT_PATH}" ]; then

DOCKER_CERT_PATH=/etc/docker

fi

/usr/lib/systemd/system/docker.service (the Docker systemd unit file)

[root@k8s-master01 dashboard4friend]# cat /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=-/etc/sysconfig/docker

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

ExecStart=/usr/bin/dockerd $other_args

ExecReload=/bin/kill -s HUP $MAINPID

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Uncomment TasksMax if your systemd version supports it.

# Only systemd 226 and above support this version.

#TasksMax=infinity

TimeoutStartSec=0

# set delegate yes so that systemd does not reset the cgroups of docker containers

Delegate=yes

# kill only the docker process, not all processes in the cgroup

KillMode=process

# restart the docker process if it exits prematurely

Restart=on-failure

MountFlags=slave

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

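9. Configure haproxy and keepalived on the master nodes

- Install

yum install haproxy keepalived -y

- Configure haproxy (kubeadm itself does not require haproxy; here it load-balances the two kube-apiservers)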

[root@K8S-PROD-MASTER-A1 ~]# cd /etc/haproxy/

[root@K8S-PROD-MASTER-A1 haproxy]# cp haproxy.cfg{,.bak}

[root@K8S-PROD-MASTER-A1 haproxy]# > haproxy.cfg

[root@k8s-master01 ~]# cat /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

stats socket /var/lib/haproxy/stats

defaults

mode tcp

log global

retries 3

option tcplog

option httpclose

option dontlognull

option abortonclose

option redispatch

maxconn 32000

timeout connect 5000ms

timeout client 2h

timeout server 2h

listen stats

mode http

bind :10086

stats enable

stats uri /admin?stats

stats auth admin:admin

stats admin if TRUE

frontend k8s_apiserver

bind *:18333

mode tcp

default_backend https_sri

backend https_sri

balance roundrobin

server apiserver1_192_168_0_112 192.168.0.112:6443 check inter 2000 fall 2 rise 2 weight 100

server apiserver2_192_168_0_175 192.168.0.175:6443 check inter 2000 fall 2 rise 2 weight 100

Run the following on Master-A2; the configuration is identical to A1:

[root@K8S-PROD-MASTER-A2 ~]# cd /etc/haproxy/

[root@K8S-PROD-MASTER-A2 haproxy]# cp haproxy.cfg{,.bak}

[root@K8S-PROD-MASTER-A2 haproxy]# > haproxy.cfg

[root@K8S-PROD-MASTER-A2 haproxy]# vi haproxy.cfg

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

stats socket /var/lib/haproxy/stats

defaults

mode tcp

log global

retries 3

option tcplog

option httpclose

option dontlognull

option abortonclose

option redispatch

maxconn 32000

timeout connect 5000ms

timeout client 2h

timeout server 2h

listen stats

mode http

bind :10086

stats enable

stats uri /admin?stats

stats auth admin:admin

stats admin if TRUE

frontend k8s_apiserver

bind *:18333

mode tcp

default_backend https_sri

backend https_sri

balance roundrobin

server apiserver1_192_168_0_112 192.168.0.112:6443 check inter 2000 fall 2 rise 2 weight 100

server apiserver2_192_168_0_175 192.168.0.175:6443 check inter 2000 fall 2 rise 2 weight 100
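Since both masters carry the same backend, its server lines can be generated from the list of master IPs, which keeps the two files identical when a master is added. A sketch assuming bash; haproxy_backend is hypothetical, and the server options are copied from the configuration above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the https_sri backend for the given master IPs,
# naming each server apiserver<N>_<ip with dots replaced by underscores>.
haproxy_backend() {
  local i=0 ip
  printf 'backend https_sri\n    balance roundrobin\n'
  for ip in "$@"; do
    i=$((i + 1))
    printf '    server apiserver%d_%s %s:6443 check inter 2000 fall 2 rise 2 weight 100\n' \
      "$i" "${ip//./_}" "$ip"
  done
}
```

`haproxy_backend 192.168.0.112 192.168.0.175` reproduces the backend section above; validate the complete file with `haproxy -c -f /etc/haproxy/haproxy.cfg` before restarting haproxy.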

- Configure keepalived

[root@K8S-PROD-MASTER-A1 haproxy]# cd /etc/keepalived/

[root@K8S-PROD-MASTER-A1 keepalived]# cp keepalived.conf{,.bak}

[root@K8S-PROD-MASTER-A1 keepalived]# > keepalived.conf

Master-A1 node

## Create the keepalived configuration for the first node

[root@K8S-PROD-MASTER-A1 keepalived]# vi keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_MASTER_APISERVER

}

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh"

interval 3

}

vrrp_instance VI_1 {

state MASTER

interface eth1

virtual_router_id 60

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

unicast_peer {

192.168.0.112

192.168.0.175

}

virtual_ipaddress {

192.168.0.119/24 label eth1:0

}

track_script {

check_haproxy

}

}

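Note:

On the other master nodes, change state MASTER to state BACKUP and lower the priority from 100 (for example 90 and 80 with three masters). In this two-master cluster only Master-A2 needs the change: state BACKUP, priority 90.

Create the keepalived configuration for the second node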

[root@K8S-PROD-MASTER-A2 haproxy]# cd /etc/keepalived/

[root@K8S-PROD-MASTER-A2 keepalived]# cp keepalived.conf{,.bak}

[root@K8S-PROD-MASTER-A2 keepalived]# > keepalived.conf

[root@K8S-PROD-MASTER-A2 keepalived]# vi keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_MASTER_APISERVER

}

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh"

interval 3

}

vrrp_instance VI_1 {

state BACKUP

interface eth1

virtual_router_id 60

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

unicast_peer {

192.168.0.112

192.168.0.175

}

virtual_ipaddress {

192.168.0.119/24 label eth1:0

}

track_script {

check_haproxy

}

}
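The two keepalived files differ only in state and priority. A sketch that renders the vrrp_instance block from those two values; render_vrrp is hypothetical, and everything else in the output is copied from the configurations above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the vrrp_instance block for one node.
# $1 = MASTER or BACKUP, $2 = priority (for example 100 or 90).
render_vrrp() {
  local state="$1" priority="$2"
  case "$state" in
    MASTER|BACKUP) ;;
    *) echo "state must be MASTER or BACKUP" >&2; return 2 ;;
  esac
  cat <<EOF
vrrp_instance VI_1 {
    state ${state}
    interface eth1
    virtual_router_id 60
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    unicast_peer {
        192.168.0.112
        192.168.0.175
    }
    virtual_ipaddress {
        192.168.0.119/24 label eth1:0
    }
    track_script {
        check_haproxy
    }
}
EOF
}
```

`render_vrrp MASTER 100` gives the Master-A1 block and `render_vrrp BACKUP 90` the Master-A2 block; the global_defs and vrrp_script sections stay as shown above.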

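All master nodes (Master1 and Master2) need the health-check script check_haproxy.sh, which stops keepalived automatically when haproxy goes down, so that the VIP fails over to the other master.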

[root@K8S-PROD-MASTER-A1 keepalived]# vi /etc/keepalived/check_haproxy.sh

#!/bin/bash

flag=$(systemctl status haproxy &> /dev/null;echo $?)

if [[ $flag != 0 ]];then

echo "haproxy is down,close the keepalived"

systemctl stop keepalived

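fi

Make the script executable, otherwise keepalived cannot run it:

chmod +x /etc/keepalived/check_haproxy.sh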

Modify the keepalived systemd service

[root@K8S-PROD-MASTER-A1 keepalived]# vi /usr/lib/systemd/system/keepalived.service

[Unit]

Description=LVS and VRRP High Availability Monitor

After=syslog.target network-online.target

# Added line (keep comments on their own line: systemd does not support trailing comments)
Requires=haproxy.service

[Service]

Type=forking

PIDFile=/var/run/keepalived.pid

KillMode=process

EnvironmentFile=-/etc/sysconfig/keepalived

ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS

ExecReload=/bin/kill -HUP $MAINPID

[Install]

WantedBy=multi-user.target
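Files under /usr/lib/systemd/system belong to the package and may be replaced when keepalived is updated, taking the Requires= line with them. A systemd drop-in keeps the change separate. A sketch; the helper add_keepalived_dropin and the file name 10-require-haproxy.conf are hypothetical, and the After= line is an addition beyond the original edit so that keepalived is started after haproxy.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add the haproxy dependency as a drop-in under $1
# (default /etc/systemd/system) instead of editing the packaged unit file.
add_keepalived_dropin() {
  local unit_dir="${1:-/etc/systemd/system}"
  local d="$unit_dir/keepalived.service.d"
  mkdir -p "$d"
  cat > "$d/10-require-haproxy.conf" <<'EOF'
[Unit]
# Starting keepalived also starts haproxy; stopping haproxy stops keepalived.
Requires=haproxy.service
After=haproxy.service
EOF
}
```

Run `systemctl daemon-reload` afterwards; `systemctl cat keepalived` shows the packaged unit followed by the drop-in.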

#### Allow the VRRP protocol through firewalld

firewall-cmd --direct --permanent --add-rule ipv4 filter INPUT 0 --in-interface eth1 --destination 224.0.0.18 --protocol vrrp -j ACCEPT

firewall-cmd --reload

Note: replace eth1 in --in-interface eth1 with your actual NIC name.

Start haproxy and keepalived

systemctl enable haproxy

systemctl start haproxy

systemctl status haproxy

systemctl enable keepalived

systemctl start keepalived

systemctl status keepalived

Open http://192.168.0.119:10086/admin?stats (user admin, password admin) to check haproxy's status.
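The same statistics are available as CSV by appending ;csv to the stats URI, which allows a quick check from the command line. A sketch assuming awk; backend_status is hypothetical, and it finds the status column by its name in the CSV header rather than by position.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read haproxy stats CSV on stdin and print
# "<server> <status>" for each server of backend $1.
backend_status() {
  awk -F, -v be="$1" '
    NR == 1 { sub(/^# /, ""); for (i = 1; i <= NF; i++) col[$i] = i; next }
    $1 == be && $2 != "BACKEND" && $2 != "FRONTEND" { print $2, $(col["status"]) }
  '
}
```

Usage: `curl -s -u admin:admin 'http://192.168.0.119:10086/admin?stats;csv' | backend_status https_sri`. Before kubeadm init both API servers will show DOWN, which is expected.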

10. Install kubeadm

- Add the Aliyun YUM repository

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

- Install kubeadm, kubelet and kubectl

yum install -y kubelet-1.15.3 kubeadm-1.15.3 kubectl-1.15.3

systemctl enable kubelet
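Because a later yum update can upgrade these packages independently, it can be worth confirming that all three report the same version before running kubeadm init. A sketch; check_k8s_versions is hypothetical, and it parses the output of `kubeadm version -o short`, `kubelet --version` and `kubectl version --client --short` as printed by the 1.15 tools.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that kubeadm, kubelet and kubectl all report
# version $1 (e.g. v1.15.3). Mismatches go to stderr; returns 1 on any mismatch.
check_k8s_versions() {
  local want="$1" got rc=0
  got=$(kubeadm version -o short)                              # "v1.15.3"
  [ "$got" = "$want" ] || { echo "kubeadm: $got (want $want)" >&2; rc=1; }
  got=$(kubelet --version | awk '{print $2}')                  # "Kubernetes v1.15.3"
  [ "$got" = "$want" ] || { echo "kubelet: $got (want $want)" >&2; rc=1; }
  got=$(kubectl version --client --short | awk '{print $3}')   # "Client Version: v1.15.3"
  [ "$got" = "$want" ] || { echo "kubectl: $got (want $want)" >&2; rc=1; }
  return "$rc"
}
```

Usage: `check_k8s_versions v1.15.3 && echo "versions OK"`.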

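11. Set up the Aliyun image registry (accelerator)

docker login --username=QDSH registry.cn-hangzhou.aliyuncs.com

Enter the password when prompted.

After a successful login, Docker stores the credentials in ~/.docker/config.json; view the record with:

cat ~/.docker/config.json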

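12. Deploy the Kubernetes masters

The command-line method below only deploys a single master; the HA masters in this guide are initialized from a config file.

First master node

Command-line initialization (single master only)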

kubeadm init \

--apiserver-advertise-address=192.168.0.119 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.15.3 \

--service-cidr=10.1.0.0/16 \

--pod-network-cidr=10.244.0.0/16

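- apiserver-advertise-address: the IP address the API server on this node advertises; if it is not set, kubeadm uses the address of the default network interface. It is set to the haproxy+keepalived VIP here; with multiple masters, the VIP is given as controlPlaneEndpoint instead, as in the config-file method below.

- service-cidr (string, default "10.96.0.0/12"): the IP range for Service virtual IPs.

- pod-network-cidr (string): the IP range of the Pod network; once it is set, the control plane automatically assigns a CIDR (Classless Inter-Domain Routing) block to every node.

- Other flags: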

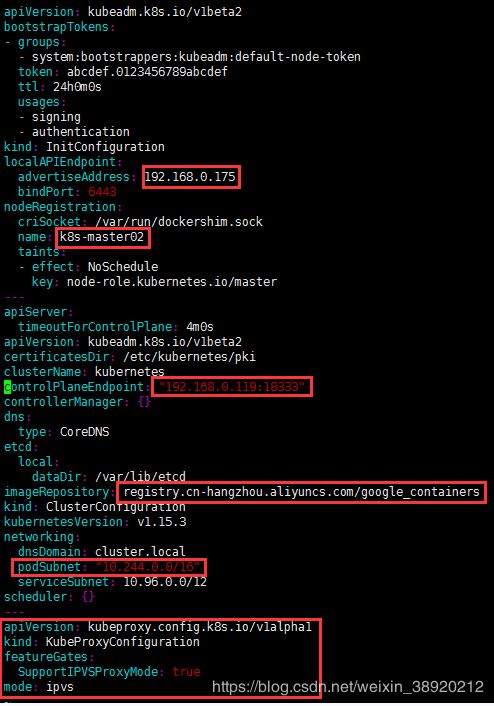

Config-file initialization (for multiple masters; because of limited resources I used only two masters; tested and working)

kubeadm config print init-defaults > kubeadm-config.yaml

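Then edit parts of the generated config file.

The configuration below is based on: https://blog.csdn.net/educast/article/details/90023091

- controlPlaneEndpoint: the VIP plus haproxy's listening port 18333 (not 8443: the dashboard uses 8443 by default, and the clash would cause extra errors).

- imageRepository: Google's registry k8s.gcr.io is not reachable from mainland China, so an Aliyun mirror is used instead (registry.aliyuncs.com/google_containers).

- podSubnet: must match the network plugin deployed later; flannel is planned here, so it is 10.244.0.0/16.

- mode: ipvs: the section appended at the end switches kube-proxy to IPVS mode.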

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.0.175
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master02
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "192.168.0.119:18333"
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.15.3
networking:
  dnsDomain: cluster.local
  podSubnet: "10.244.0.0/16"
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs

Run kubeadm init

mv kubeadm-config.yaml kubeadm-init-config.yaml

kubeadm init --config=kubeadm-init-config.yaml --upload-certs | tee kubeadm-init.log

kubectl -n kube-system get cm kubeadm-config -oyaml
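kubeadm-init.log (captured with tee above) contains the join commands for both control-plane and worker nodes, each split over several backslash-continued lines. A sketch that prints each one on a single line, ready to copy; extract_join_cmds is hypothetical and assumes each command starts with kubeadm join, as in the 1.15 output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every "kubeadm join ..." command from a kubeadm
# init log on one line, merging the backslash-continued lines.
extract_join_cmds() {
  awk '
    /^[[:space:]]*kubeadm join/ { cmd = ""; collecting = 1 }
    collecting {
      line = $0
      sub(/^[[:space:]]+/, "", line)            # drop indentation
      cont = (line ~ /\\[[:space:]]*$/)         # does the line end with "\"?
      sub(/[[:space:]]*\\[[:space:]]*$/, "", line)
      cmd = (cmd == "" ? line : cmd " " line)
      if (!cont) { print cmd; collecting = 0 }
    }
  ' "${1:-kubeadm-init.log}"
}
```

The bootstrap token in these commands expires after the ttl set above (24h); after that, `kubeadm token create --print-join-command` prints a fresh worker join command.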

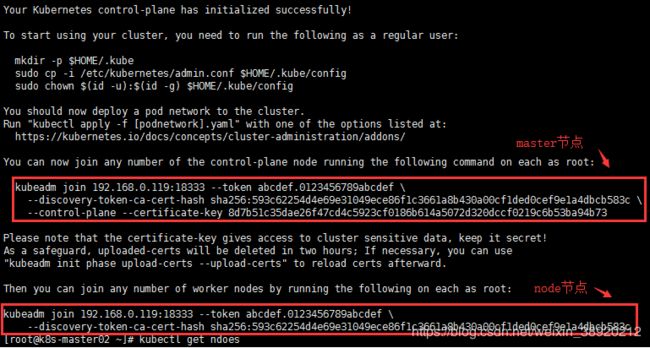

After the config-based init above finishes on the first master node, its output ends with instructions like the following.

As root:

export KUBECONFIG=/etc/kubernetes/admin.conf

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

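Otherwise kubectl fails with the error below (seen here on a worker node). There are two possible causes:

Cause 1: the node does not have the /etc/kubernetes/admin.conf generated by kubeadm init on the master; copy it over.

Cause 2: the KUBECONFIG environment variable is not set.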

[root@k8s-worker01 ~]# kubectl get nodes

The connection to the server localhost:8080 was refused - did you specify the right host or port?

As a non-root user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

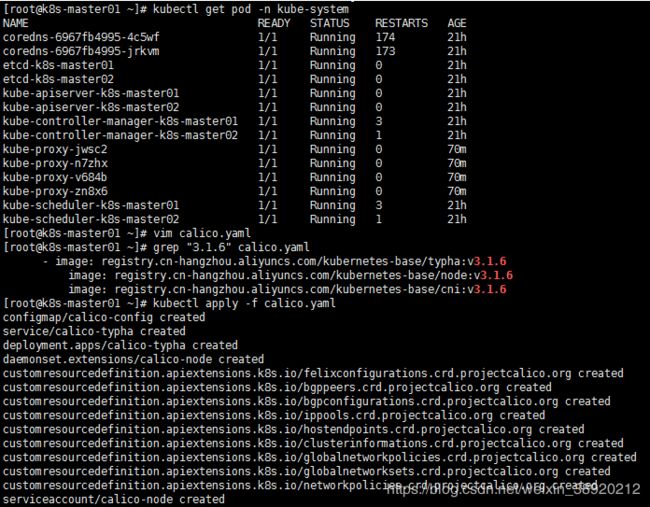

- Install a Pod network plugin (CNI)

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/a70459be0084506e4ec919aa1c114638878db11b/Documentation/kube-flannel.yml

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Because flannel kept going into CrashLoopBackOff, I switched the network plugin from flannel to Calico.

# Step 1: on the master node, delete flannel

kubectl delete -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# Step 2: on each node, clean up the interfaces and files left behind by flannel

ifconfig cni0 down

ip link delete cni0

ifconfig flannel.1 down

ip link delete flannel.1

rm -rf /var/lib/cni/

rm -f /etc/cni/net.d/*

Note: after the steps above, restart kubelet (systemctl restart kubelet).

Apply the Calico manifests (tested and working):

wget https://docs.projectcalico.org/v3.1/getting-started/kubernetes/installation/hosted/rbac-kdd.yaml

wget https://docs.projectcalico.org/v3.1/getting-started/kubernetes/installation/hosted/kubernetes-datastore/calico-networking/1.7/calico.yaml

In calico.yaml, set CALICO_IPV4POOL_CIDR to the Pod subnet 10.244.0.0/16:

- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"

Replace the image addresses in calico.yaml with the following:

registry.cn-hangzhou.aliyuncs.com/kubernetes-base/node:v3.1.6

registry.cn-hangzhou.aliyuncs.com/kubernetes-base/cni:v3.1.6

registry.cn-hangzhou.aliyuncs.com/kubernetes-base/typha:v3.1.6
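The two edits to calico.yaml can be scripted with sed. A sketch assuming GNU sed; patch_calico is hypothetical, it assumes each image sits on its own image: line ending in /node:, /cni: or /typha: plus a tag, and that the CIDR value is on the line directly after - name: CALICO_IPV4POOL_CIDR. Upstream manifests change between versions, so check the result with grep afterwards.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point the calico node/cni/typha images at the mirror
# used in this guide and set CALICO_IPV4POOL_CIDR. Keeps a .bak copy (GNU sed).
MIRROR="${MIRROR:-registry.cn-hangzhou.aliyuncs.com/kubernetes-base}"
CALICO_TAG="${CALICO_TAG:-v3.1.6}"
POD_CIDR="${POD_CIDR:-10.244.0.0/16}"

patch_calico() {
  local f="${1:-calico.yaml}"
  sed -r -i.bak \
    -e "s#(image:[[:space:]]*)[^[:space:]]*/(node|cni|typha):[^[:space:]]+#\1${MIRROR}/\2:${CALICO_TAG}#" \
    -e "/- name: CALICO_IPV4POOL_CIDR/{n;s#value:.*#value: \"${POD_CIDR}\"#;}" \
    "$f"
}
```

After `patch_calico calico.yaml`, `grep -n -e 'image:' -e -A1 CALICO_IPV4POOL_CIDR calico.yaml` is a quick way to review the changed lines.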

Run the following commands:

[root@k8s-master01 ~]# ll

total 20

-rw-r--r-- 1 root root 13685 Jun 12 18:03 calico.yaml

-rw-r--r-- 1 root root 1651 Jun 12 17:33 rbac-kdd.yaml

[root@k8s-master01 ~]# kubectl apply -f rbac-kdd.yaml

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

[root@k8s-master01 ~]# kubectl apply -f calico.yaml

configmap/calico-config created

service/calico-typha created

deployment.apps/calico-typha created

daemonset.extensions/calico-node created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

serviceaccount/calico-node created

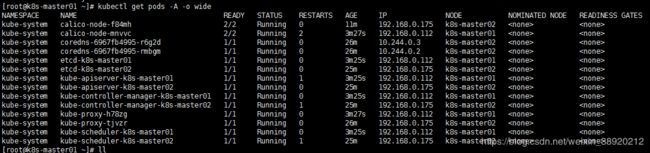

On the freshly initialized master node, run the following commands and check that they return normally and that everything is healthy:

kubectl get node -A -o wide

kubectl get pods -A -o wide

kubectl describe pod coredns-bccdc95cf-pkn9x -n kube-system
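Scanning the pod list by eye gets tedious as the cluster grows. A sketch that filters the output of kubectl get pods -A --no-headers down to the pods whose STATUS is neither Running nor Completed; unhealthy_pods is hypothetical and relies on STATUS being the fourth column of that output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "kubectl get pods -A --no-headers" output on stdin
# (NAMESPACE NAME READY STATUS ...) and print "<namespace>/<pod> <status>"
# for every pod that is neither Running nor Completed.
unhealthy_pods() {
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2 " " $4 }'
}
```

Usage: `kubectl get pods -A --no-headers | unhealthy_pods`, then `kubectl describe pod` on anything it lists. To block until every node is Ready, `kubectl wait --for=condition=Ready node --all --timeout=300s` can be used.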

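Set a taint on master01 (a side note; removing taints may also come in handy)

Syntax:

kubectl taint node [node] key=value:[effect]

where [effect] is one of [ NoSchedule | PreferNoSchedule | NoExecute ]:

NoSchedule: Pods without a matching toleration are never scheduled onto the node

PreferNoSchedule: the scheduler tries to avoid placing Pods on the node

NoExecute: Pods are not scheduled onto the node, and Pods already running on it are evicted

Add the taint: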

kubectl taint nodes k8s-master01 node-role.kubernetes.io/master=:NoSchedule

View taints:

kubectl describe nodes --all

kubectl describe node k8s-master01

Remove taints

kubectl taint node node1 key1:NoSchedule-   # the value does not need to be given here

kubectl taint node node1 key1:NoExecute-

# kubectl taint node node1 key1-   removes all effects of the given key

kubectl taint node node1 key2:NoSchedule-

Fix (remove the master taint from all nodes so that the masters can run Pods):

kubectl taint nodes --all node-role.kubernetes.io/master-

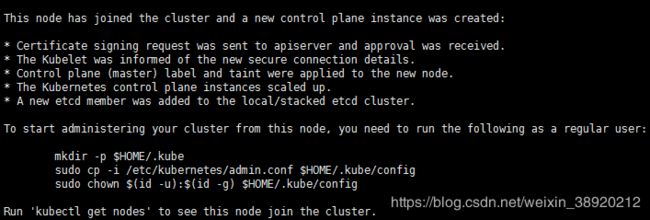

Join the second master node to the cluster

Copy the certificates from the first master node (create the target directories first):

mkdir -p /etc/kubernetes/pki/etcd/

scp -P58528 [email protected]:/etc/kubernetes/pki/ca.* /etc/kubernetes/pki/

scp -P58528 [email protected]:/etc/kubernetes/pki/sa.* /etc/kubernetes/pki/

scp -P58528 [email protected]:/etc/kubernetes/pki/front-proxy-ca.* /etc/kubernetes/pki/

scp -P58528 [email protected]:/etc/kubernetes/pki/etcd/ca.* /etc/kubernetes/pki/etcd/

scp -P58528 [email protected]:/etc/kubernetes/admin.conf /etc/kubernetes/

Join the cluster:

kubeadm join 192.168.0.119:18333 --token 3l7bd4.xkcwpcjkdn3jyvbd \

--discovery-token-ca-cert-hash sha256:934b534e9849ce2e87de4df3dca6ff96a35ec3b137332127bb2d0ac08f4ef107 \

--control-plane

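Join the other (worker) nodes

On each worker node, run the worker join command printed by kubeadm init on the master:

Joined successfully.

Worker nodes do not need the certificates generated on the master in order to join. To run kubectl on a worker, however, they need the /etc/kubernetes/admin.conf created by the master's init.

After copying /etc/kubernetes/admin.conf from the master, set the environment variable with the following commands.

As root: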

export KUBECONFIG=/etc/kubernetes/admin.conf

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

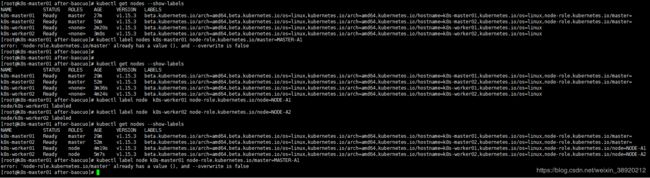

12. Set node labels for the cluster:

Run these on any master node to label the cluster nodes.

For the masters, first remove the existing label:

kubectl label nodes k8s-master01 node-role.kubernetes.io/master- --overwrite

kubectl label nodes k8s-master01 node-role.kubernetes.io/master=MASTER-A1

kubectl label nodes k8s-master02 node-role.kubernetes.io/master- --overwrite

kubectl label nodes k8s-master02 node-role.kubernetes.io/master=MASTER-A2

Label 192.168.0.66 and 192.168.0.172 as node (worker nodes can be labeled directly):

kubectl label node k8s-worker01 node-role.kubernetes.io/node=NODE-A1

kubectl label node k8s-worker02 node-role.kubernetes.io/node=NODE-A2

Label the Ingress edge node (harbor):

kubectl label nodes k8s-harbor node-role.kubernetes.io/LB=LB-A1

Taint the Ingress edge node so that no Pods are scheduled on it:

kubectl taint node k8s-harbor node-role.kubernetes.io/LB=LB-A1:NoSchedule

Keep the masters from taking regular workloads:

kubectl taint nodes k8s-master01 node-role.kubernetes.io/master=MASTER-A1:NoSchedule --overwrite

kubectl taint nodes k8s-master02 node-role.kubernetes.io/master=MASTER-A2:NoSchedule --overwrite

How to remove a label: append - to the key (with --overwrite):

kubectl label nodes k8s-master01 node-role.kubernetes.io/master- --overwrite

kubectl label nodes k8s-master02 node-role.kubernetes.io/master- --overwrite

kubectl label nodes k8s-worker01 node-role.kubernetes.io/node- --overwrite

kubectl label nodes k8s-worker02 node-role.kubernetes.io/node- --overwrite

kubectl label nodes k8s-harbor node-role.kubernetes.io/LB- --overwrite

kubectl get nodes --show-labels
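With more nodes, the label commands above are easier to keep as a node/label list. A sketch assuming bash; apply_labels and the DRY_RUN switch are hypothetical, it reads "node label" pairs from standard input (skipping blank and # lines), and it always passes --overwrite so reruns do not fail on existing labels.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply "node key=value" pairs read from stdin with
# kubectl label. Set DRY_RUN=1 to print the commands instead of running them.
apply_labels() {
  local node label
  while read -r node label; do
    [ -z "$node" ] && continue
    case "$node" in \#*) continue ;; esac
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo kubectl label node "$node" "$label" --overwrite
    else
      kubectl label node "$node" "$label" --overwrite
    fi
  done
}
```

For example, `printf 'k8s-worker01 node-role.kubernetes.io/node=NODE-A1\n' | DRY_RUN=1 apply_labels` prints the command; dropping DRY_RUN=1 runs it.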

13. Deploy the dashboard (continued in a separate article)

"Kubernetes dashboard installation, bonus chapter (countless reinstalls)"

14. Testing

Create Pods

kubectl run nginx --image=nginx --replicas=2

Scale out

kubectl scale deployment nginx --replicas=3

kubectl edit deployment nginx

Expose nginx for external access:

kubectl expose deployment nginx --port=80 --type=NodePort
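kubectl expose assigns the NodePort automatically (from 30000-32767 by default), so it has to be looked up before nginx can be reached from outside. A sketch; nginx_url is hypothetical and reads the port through kubectl's jsonpath output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the external URL of the nginx NodePort Service.
# $1 = IP of any cluster node, e.g. 192.168.0.66; needs a working kubectl.
nginx_url() {
  local node_ip="$1" port
  port=$(kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  [ -n "$port" ] || return 1
  echo "http://${node_ip}:${port}"
}
```

Usage: `curl -I "$(nginx_url 192.168.0.66)"` should return the nginx response headers.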

Restart the kube-proxy Pod on every node:

kubectl get pod -n kube-system | grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}'

Enable kubectl command completion:

root@master1:/# vim /etc/profile   # add the line below, then source the file

source <(kubectl completion bash)

root@master1:/# source /etc/profile

By default, Pods cannot be scheduled on master nodes.

To allow it, run the following; the key node-role.kubernetes.io/master appears under the taints section of kubectl edit node master1:

root@master1:/var/lib/kubelet# kubectl taint node master1 node-role.kubernetes.io/master-

node "master1" untainted

That's it: the Kubernetes v1.15.3 installation is complete.

