Offline Deployment of Ceph on CentOS 7.2

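- System network configuration

- Create the ceph-deploy user

- Configure the yum repository

- Install the NTP time synchronization service

- Configure the firewall (using firewalld)

- Clone the other two virtual machines and finish their configuration

- Install ceph-deploy on node tj01

- Install and set up the Ceph cluster

- Install and start the rgw gateway

- Create an object gateway user (required for the S3 interface)

- Test the S3 interface with Java

- Configure the mgr dashboard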

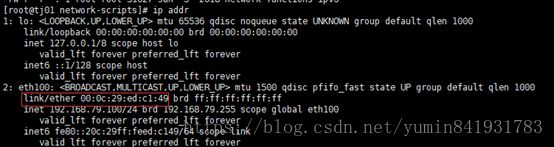

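System network configuration

1. Install CentOS 7.2 on node tj01 (steps omitted) and set the VM's network connection to NAT.

2. Switch the network to a static IP; see https://www.cnblogs.com/leokale-zz/p/7832576.html

systemctl start NetworkManager.service

systemctl disable NetworkManager.service

Run ip addr and write down the MAC address of the network interface other than lo.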

cd /etc/sysconfig/network-scripts/

mv ifcfg-eno16777736 ifcfg-eth100

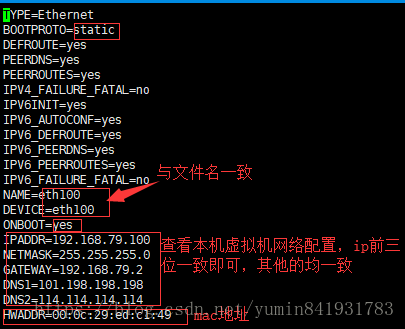

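vi ifcfg-eth100

Edit the ifcfg-eth100 file as shown in the screenshot.

Delete the udev rule files:

rm -f /etc/udev/rules.d/*fix.rules

Stop the NetworkManager service:

systemctl stop NetworkManager.service

Restart the network service (if this fails, reboot first and then check the interface status with service network status):

systemctl restart network.service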
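The contents of ifcfg-eth100 are only given as a screenshot, so here is a minimal sketch of what a static-IP version of that file might contain. The helper name render_ifcfg, the MAC address, the gateway 192.168.79.2 and the DNS entry are assumptions for illustration; only the device name eth100 and the 192.168.79.100 address come from this guide.

```shell
#!/bin/sh
# Sketch only: print a static-IP ifcfg file to stdout.
# render_ifcfg is a hypothetical helper; the MAC, gateway and DNS values
# passed to it below are placeholders, not values from the original setup.
render_ifcfg() {
    device="$1"; mac="$2"; ip="$3"; gateway="$4"
    cat <<EOF
TYPE=Ethernet
BOOTPROTO=static
NAME=${device}
DEVICE=${device}
HWADDR=${mac}
ONBOOT=yes
IPADDR=${ip}
NETMASK=255.255.255.0
GATEWAY=${gateway}
DNS1=${gateway}
EOF
}

# Preview the file for tj01 (use the MAC address noted from ip addr).
render_ifcfg eth100 00:0c:29:00:00:01 192.168.79.100 192.168.79.2
```

Once the output looks right, it could be redirected as root into /etc/sysconfig/network-scripts/ifcfg-eth100 before restarting the network service.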

Create the ceph-deploy user

On every Ceph node in the cluster, create a dedicated user for ceph-deploy. Here the user is named iflyceph; set a password for it.

sudo useradd -d /home/iflyceph -m iflyceph

sudo passwd iflyceph

Make sure the new user has sudo privileges on every Ceph node (all three nodes):

su root

touch /etc/sudoers.d/iflyceph

vi /etc/sudoers.d/iflyceph

Add the following line:

iflyceph ALL = (root) NOPASSWD:ALL

Then run:

sudo chmod 0440 /etc/sudoers.d/iflyceph
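The same sudoers entry can be written without opening an editor, which is convenient when the step has to be repeated on three nodes. This is a minimal sketch; write_sudoers_dropin and its optional second argument are hypothetical, while the rule text and the 0440 mode are the ones used in this guide.

```shell
#!/bin/sh
# Sketch only: write a sudoers drop-in file non-interactively.
# write_sudoers_dropin is a hypothetical helper; the optional second
# argument lets the result be inspected in a scratch file first.
write_sudoers_dropin() {
    user="$1"
    target="${2:-/etc/sudoers.d/$user}"
    printf '%s ALL = (root) NOPASSWD:ALL\n' "$user" > "$target" &&
        chmod 0440 "$target"
}

# Try it against a scratch file; as root, omit the second argument
# to write /etc/sudoers.d/iflyceph itself.
scratch="$(mktemp)"
write_sudoers_dropin iflyceph "$scratch"
cat "$scratch"
```

Running visudo -cf on the resulting file is a cheap way to catch a syntax error before it locks sudo out.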

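Configure the yum repository

1. Download the packages for offline installation. This guide installs Luminous 12.2.6, so only the 12.2.6 packages need to be downloaded: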

yum install wget

su iflyceph

cd ~

mkdir cephyumsource

cd cephyumsource

#Fetch all of the required packages here

wget http://eu.ceph.com/rpm-luminous/el7/x86_64/{package file name for the chosen version}

...

...

#下载完后,安装`createrepo`

yum install createrepo

#生成符合要求的yum仓库,文件夹里面会多出一个repodata仓库数据文件夹,此时创建库成功

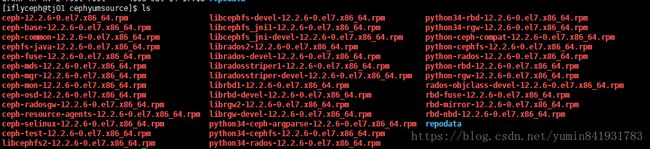

createrepo /home/iflyceph/cephyumsource下载好的包如下图所示

su root

cd /etc/yum.repos.d/

mkdir backup

mv ./* ./backup

#Use the Aliyun mirrors

wget -O ./CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

wget -O ./epel-7.repo http://mirrors.aliyun.com/repo/epel-7.repo

vim CentOS-Base.repo

Append the following to the end of the file and save:

[LocalCeph]

name=CentOS-$releasever - LocalCeph

baseurl=file:///home/iflyceph/cephyumsource/

gpgcheck=0

enabled=1

gpgkey=

Run the following commands:

yum clean all

rm -rf /var/cache/yum

yum makecache

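#Install ceph from the local repository

yum install ceph

#Check the installed version

ceph -v

At this point Ceph 12.2.6 is installed on a single node. To reduce the workload, the remaining preparation is done on this one node first, and the node is then cloned to create the other two.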
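If yum does not pick up the LocalCeph repository, a quick look at the directory usually shows why: createrepo has to have produced repodata/repomd.xml next to the RPMs. A minimal sketch of that check follows; check_local_repo is a hypothetical helper name, and the path is the one used in this guide.

```shell
#!/bin/sh
# Sketch only: confirm a directory looks like a usable local yum repository,
# i.e. createrepo has written repodata/repomd.xml and RPM files are present.
# check_local_repo is a hypothetical helper name.
check_local_repo() {
    repo_dir="$1"
    if [ ! -f "$repo_dir/repodata/repomd.xml" ]; then
        echo "missing $repo_dir/repodata/repomd.xml; run createrepo first" >&2
        return 1
    fi
    rpm_count="$(find "$repo_dir" -maxdepth 1 -name '*.rpm' | wc -l | tr -d ' ')"
    echo "$repo_dir: $rpm_count rpm files, metadata present"
}

check_local_repo /home/iflyceph/cephyumsource || echo "repository not ready"
```

Because the cloned nodes inherit this directory, the same check can be repeated on tj02 and tj03 after cloning.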

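Install the NTP time synchronization service

#Install ntp

yum install ntp ntpdate ntp-doc

#Start ntp

systemctl start ntpd

#Verify

ps -ef|grep ntpd

#Enable at boot

systemctl enable ntpd

Configure the firewall (using firewalld)

Reference: https://blog.csdn.net/xlgen157387/article/details/52672988

Reference: https://www.cnblogs.com/moxiaoan/p/5683743.html

#Install firewalld

yum install firewalld

#Add rules that allow the ports used by the following services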

sudo firewall-cmd --zone=public --add-service=ceph-mon --permanent

sudo firewall-cmd --zone=public --add-service=ceph --permanent

sudo firewall-cmd --zone=public --add-service=http --permanent

sudo firewall-cmd --zone=public --add-service=https --permanent

sudo firewall-cmd --zone=public --add-service=ssh --permanent

#Allow the ceph-mgr TCP port

sudo firewall-cmd --zone=public --add-port=6800/tcp --permanent

#Start the firewall

systemctl start firewalld

#List the firewall rules

firewall-cmd --list-all

#Enable at boot

systemctl enable firewalld

#Check the service status

systemctl status firewalld

#After changing rules, reload so they take effect immediately

firewall-cmd --reload
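The rules above do not mention port 7480, which is the gateway port this guide browses to later (the http service only covers port 80). Whether it needs opening depends on where clients connect from, so the following is only a sketch: it prints the firewall-cmd calls for extra TCP ports so they can be reviewed before anything is changed. extra_port_rules is a hypothetical helper.

```shell
#!/bin/sh
# Sketch only: print the firewall-cmd calls that would open extra TCP ports.
# extra_port_rules is a hypothetical helper; it only echoes commands and
# changes nothing by itself.
extra_port_rules() {
    for port in "$@"; do
        echo "firewall-cmd --zone=public --add-port=${port}/tcp --permanent"
    done
    echo "firewall-cmd --reload"
}

# 7480 is the gateway port used later in this guide.
extra_port_rules 7480
```

After reviewing the printed commands, they can be piped into sudo sh to apply them.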

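#Switch SELinux to permissive mode (setenforce 0 lasts only until the next reboot)

setenforce 0

Clone the other two virtual machines and finish their configuration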

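At this point the basic configuration is largely complete.

1. Clone the virtual machine in VMware to create tj02 and tj03.

2. Configure each VM's network as described in the system network configuration section above.

3. As root, set the hostname on each of the three nodes.

#Run on node 1

hostnamectl set-hostname tj01

#Run on node 2

hostnamectl set-hostname tj02

#Run on node 3

hostnamectl set-hostname tj03

#Run on all three nodes

vi /etc/hosts

#Add the lines below, adjusted to the actual IPs

192.168.79.100 tj01

192.168.79.101 tj02

192.168.79.102 tj03

Log in again after the change for it to take effect.

4. Switch to user iflyceph and set up passwordless SSH login (all three nodes).

#Run on tj01, tj02 and tj03

su iflyceph

cd ~

#Generate the SSH login key pair

ssh-keygen

#Press Enter at every prompt to accept the defaults; afterwards /home/iflyceph/.ssh contains two files, id_rsa and id_rsa.pub

#Run on each of tj01, tj02 and tj03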

ssh-copy-id iflyceph@tj01

ssh-copy-id iflyceph@tj02

ssh-copy-id iflyceph@tj03

#Verify passwordless login

ssh tj01 uptime

ssh tj02 uptime

ssh tj03 uptime

Install ceph-deploy on node tj01
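ceph-deploy reaches every node over SSH as this user, so it can be worth scripting the passwordless-login check before going further. The sketch below relies on ssh's BatchMode=yes option, which makes ssh fail instead of prompting for a password; check_ssh and the SSH_BIN override are hypothetical names added so the function can be dry-run.

```shell
#!/bin/sh
# Sketch only: report which hosts accept key-based login without a prompt.
# check_ssh and SSH_BIN are hypothetical; SSH_BIN exists so the function
# can be exercised with a stand-in command instead of real ssh.
check_ssh() {
    failed=0
    for host in "$@"; do
        if ${SSH_BIN:-ssh} -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
            failed=1
        fi
    done
    return "$failed"
}

# Dry run with a stand-in; drop SSH_BIN=true to contact the real nodes.
SSH_BIN=true check_ssh tj01 tj02 tj03
```

A non-zero exit status means at least one node would still ask for a password, which is usually a missed ssh-copy-id.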

sudo yum install ceph-deploy

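sudo ceph-deploy --version

Install and set up the Ceph cluster

su iflyceph

cd ~

#Create a directory to hold the admin node's Ceph configuration

mkdir ceph-cluster

cd ceph-cluster

#Run the command below; it generates ceph.conf and ceph.mon.keyring in the current directory

ceph-deploy new tj01

The following error may appear: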

[iflyceph@tj01 ceph-cluster]$ ceph-deploy new tj01

Traceback (most recent call last):

File "/usr/bin/ceph-deploy", line 18, in <module>

from ceph_deploy.cli import main

File "/usr/lib/python2.7/site-packages/ceph_deploy/cli.py", line 1, in <module>

import pkg_resources

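ImportError: No module named pkg_resources

This is a Python problem: pkg_resources is part of setuptools, which is missing. Fix it as follows (installing the python-setuptools package with yum is an alternative):

su root

wget https://bootstrap.pypa.io/ez_setup.py -O - | python

Once that is fixed, ceph-deploy new tj01 runs without errors. Next:

vi ceph.conf

#Add the following settings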

osd pool default size = 3

public network = 192.168.79.0/24

osd pool default pg num = 128

osd pool default pgp num = 128

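mon_max_pg_per_osd = 1000

#Initialize ceph-mon; a set of keyrings is generated in the current directory

ceph-deploy mon create-initial

#Push the configuration in the current directory (acting as admin) to nodes tj01, tj02 and tj03

ceph-deploy admin tj01 tj02 tj03

#Create mgr daemons for system monitoring and the dashboard (mgr should be highly available; the official documentation recommends running one mgr on each mon node)

ceph-deploy mgr create tj01

ceph-deploy mgr create tj02

ceph-deploy mgr create tj03

#Add OSDs. For performance, give each OSD its own disk; here a disk /dev/vdb has been added (do not mount it on a directory)

ceph-deploy osd create --data /dev/vdb tj01

ceph-deploy osd create --data /dev/vdb tj02

ceph-deploy osd create --data /dev/vdb tj03

#Check the cluster status

sudo ceph health

sudo ceph -s

#Expand the cluster by adding more mons; mon needs to be highly available

ceph-deploy mon add tj02

ceph-deploy mon add tj03

#Check the resulting quorum status:

sudo ceph quorum_status --format json-pretty

The Ceph cluster is now set up. Run ps -ef|grep ceph to see the Ceph processes.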
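Right after OSDs or mons are added, the cluster may take a little while to settle, so a single ceph health call can be misleading. A minimal polling sketch follows, assuming that a healthy cluster prints a line starting with HEALTH_OK; wait_for_health, the CEPH_CMD override and the retry and delay defaults are all hypothetical.

```shell
#!/bin/sh
# Sketch only: poll "ceph health" until it reports HEALTH_OK or we give up.
# wait_for_health, CEPH_CMD and the default of 30 tries, 10 s apart, are
# hypothetical choices, not values from this guide.
wait_for_health() {
    tries="${1:-30}"
    delay="${2:-10}"
    while [ "$tries" -gt 0 ]; do
        status="$(${CEPH_CMD:-sudo ceph} health 2>/dev/null)"
        case "$status" in
            HEALTH_OK*) echo "cluster healthy"; return 0 ;;
        esac
        echo "waiting: ${status:-no response}"
        tries=$((tries - 1))
        sleep "$delay"
    done
    return 1
}

# Example call on an admin node (left commented out so that sourcing this
# file does not start polling):
# wait_for_health 30 10
```

The function returns non-zero if the cluster never reaches HEALTH_OK, at which point sudo ceph -s gives the detailed reason.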

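Install and start the rgw gateway

yum install ceph-radosgw

#Gateway admin nodes

ceph-deploy admin tj01 tj02 tj03

#Create a gateway instance

ceph-deploy rgw create tj01

#Check the process

ps -ef|grep gw

If the gateway fails to start, check its startup log under /var/log/ceph/. One problem you may run into:

#[Problem]

this can be due to a pool or placement group misconfiguration, e.g. pg_num < pgp_num or mon_max_pg_per_osd exceeded

#[Solution]

#Add one line to ceph.conf

mon_max_pg_per_osd = 1000

#Then run (add --overwrite-conf if ceph-deploy complains that the config file on a node differs)

ceph-deploy admin tj01 tj02 tj03

#Restart all Ceph processes (on tj01, tj02 and tj03 in turn)

sudo systemctl restart ceph.target

Verify access: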

http://client-node:7480

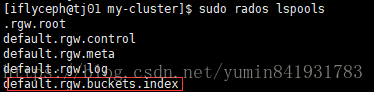

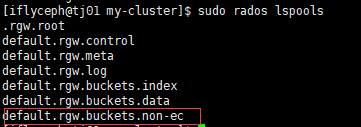

Use rados -h to see how the rados command is used, and run sudo rados lspools to list the cluster's storage pools.

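Create an object gateway user (required for the S3 interface)

To use the REST interface, first create an initial Ceph Object Gateway user for the S3 interface, then create a subuser for the Swift interface, and finally verify that the created users can access the gateway.

#Format:

radosgw-admin user create --uid={username} --display-name="{display-name}" [--email={email}]

#Here we run (the square brackets in the format only mark --email as optional, so they are not typed)

sudo radosgw-admin user create --uid=tjwy --display-name="tjwy" --email=tjwy@iflytek.com

Test the S3 interface with Java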
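The Java test needs the access_key and secret_key of the gateway user, which radosgw-admin prints as JSON. The sketch below pulls one field out of that JSON with sed; rgw_key is a hypothetical helper, the sample JSON is made up for the demonstration, and the un-escaping step assumes that a "/" in a key is printed as "\/" in the JSON output.

```shell
#!/bin/sh
# Sketch only: extract one string field (e.g. access_key) from the JSON that
# radosgw-admin prints. rgw_key is a hypothetical helper; it takes the first
# match and turns an escaped "\/" back into "/".
rgw_key() {
    sed -n "s/.*\"$1\": *\"\([^\"]*\)\".*/\1/p" | head -n 1 | sed 's#\\/#/#g'
}

# Made-up sample in the shape of the tool's output, for demonstration only.
sample='{ "keys": [ { "user": "tjwy",
  "access_key": "EXAMPLEACCESSKEY",
  "secret_key": "example\/secret" } ] }'

printf '%s\n' "$sample" | rgw_key access_key
printf '%s\n' "$sample" | rgw_key secret_key
```

On the cluster, the input would come from sudo radosgw-admin user info --uid=tjwy piped into rgw_key access_key or rgw_key secret_key.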

public class TestCeph {

private static final Logger LOGGER = LoggerFactory.getLogger(TestCeph.class);

private static AmazonS3 amazonS3 = null;

@Before

public void initClient() {

final String hosts = "192.168.79.100:7480";

final String accessKey = "6DLM20O4E23QNEXDY6ZM";

final String secretKey = "v80dbNDduag5CPCXJ73J7IfaD0Nziqs0jmG6D17x";

AWSCredentialsProvider credentialsProvider = new AWSCredentialsProvider() {

public AWSCredentials getCredentials() {

return new BasicAWSCredentials(accessKey, secretKey);

}

public void refresh() {

}

};

ClientConfiguration clientConfiguration = new ClientConfiguration();

clientConfiguration.setProtocol(Protocol.HTTP);

EndpointConfiguration endpointConfiguration = new EndpointConfiguration(hosts, null);

amazonS3 = AmazonS3ClientBuilder.standard().withCredentials(credentialsProvider)

.withEndpointConfiguration(endpointConfiguration)

.withClientConfiguration(clientConfiguration).build();

LOGGER.info("ceph client init success!");

}

@Test

public void testBucket() {

Bucket bucket1 = amazonS3.createBucket("my-new-bucket3");

Bucket bucket2 = amazonS3.createBucket("my-new-bucket2");

List buckets = amazonS3.listBuckets();

for (Bucket bucket : buckets) {

LOGGER.info(bucket.getName() + "\t" + com.amazonaws.util.StringUtils.fromDate(bucket.getCreationDate()));

}

}

/**

* @Description: ACL控制,上传,下载,删除,生成签名URL

* @Auther: minyu

* @Date: 2018/7/12 11:31

*/

@Test

public void testObject() throws IOException {

String infile = "D://Download/export.csv";

// String outfile = "D://hello.txt";

//新建对象

String bucketName = "my-new-bucket2";

String fileName = "export.csv";

ByteArrayInputStream inputStream = new ByteArrayInputStream(FileUtils.inputStream2ByteArray(infile));

amazonS3.putObject(bucketName, fileName, inputStream, new ObjectMetadata());

//修改对象ACL

amazonS3.setObjectAcl(bucketName, fileName, CannedAccessControlList.PublicReadWrite);

// amazonS3.setObjectAcl(bucketName,fileName, CannedAccessControlList.Private);

//下载对象

// amazonS3.getObject(new GetObjectRequest(bucketName,fileName), new File(outfile));

// S3Object s3Object = amazonS3.getObject(bucketName, fileName);

// new FileInputStream(s3Object.getObjectContent());

//删除对象

// amazonS3.deleteObject(bucketName,fileName);

//生成对象的下载 URLS (带签名和不带签名)

GeneratePresignedUrlRequest request = new GeneratePresignedUrlRequest(bucketName, fileName);

URL url = amazonS3.generatePresignedUrl(request);

LOGGER.info(url.toString());

// amazonS3.getObject()

}

/**

* @Description: 异步分片上传测试

* @Auther: minyu

* @Date: 2018/7/12 11:30

*/

@Test

public void multipartUploadUsingHighLevelAPI() {

String filePath = "E://学习ppt/test.pptx";

String bucketName = "my-new-bucket2";

String keyName = "spark3";

TransferManager tm = TransferManagerBuilder.standard()

.withMultipartUploadThreshold(5L).withS3Client(amazonS3).build();

LOGGER.info("start uploading...");

long start = System.currentTimeMillis();

// TransferManager processes all transfers asynchronously,

// so this call will return immediately.

Upload upload = tm.upload(

bucketName, keyName, new File(filePath));

LOGGER.info("asynchronously return ...go on other opration");

try {

// Or you can block and wait for the upload to finish

upload.waitForCompletion();

LOGGER.info("Upload complete.");

LOGGER.info("文件描述:" + upload.getDescription());

LOGGER.info("耗时:" + (System.currentTimeMillis() - start) + "ms");

GeneratePresignedUrlRequest request = new GeneratePresignedUrlRequest(bucketName, keyName);

URL url = amazonS3.generatePresignedUrl(request);

LOGGER.info("下载地址:" + url);

} catch (AmazonClientException e) {

LOGGER.info("Unable to upload file, upload was aborted.");

e.printStackTrace();

} catch (InterruptedException e) {

LOGGER.info("Unable to upload file, upload was aborted.");

e.printStackTrace();

} catch (Exception e) {

e.printStackTrace();

}

}

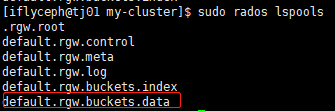

} 运行程序新建两个bucket后,查看存储池变化

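After running the program to write an object, check how the storage pools change.

After running the asynchronous multipart upload test, check how the storage pools change.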

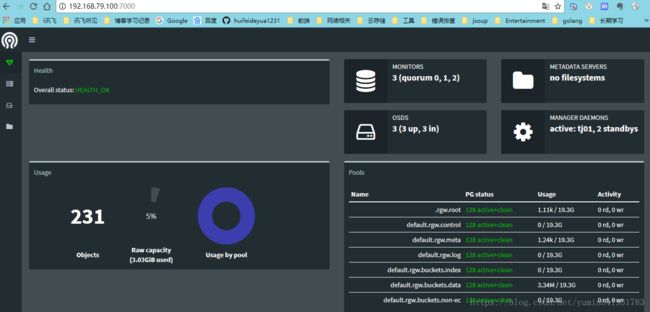

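Configure the mgr dashboard

#Run on the primary node

ceph config-key put mgr/dashboard/server_addr 192.168.79.100

#List all mgr plugin modules

ceph mgr module ls

#Enable the dashboard module

ceph mgr module enable dashboard

#Show the http/https service addresses exposed by mgr

ceph mgr services

Open http://192.168.79.100:7000 to verify that the dashboard is reachable.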