论文Convolutional Naural Networks for Sentence Classification--TensorFlow实现篇

其实该论文作者已经将文章代码提供了出来,该代码用的是Theano实现的,但是因为最近看了TensorFlow,所以想着用用练练手,所以本文主要参考Denny Britz的一篇博文 来实现CNN和本篇论文,其代码也上传到了github上。说到Denny Britz,大神就是大神,之前也读过他一篇介绍CNN在NLP领域应用场景和方法的文章,写的很透彻也被很多国内网友翻译和转载,他的博客上有很多好的文章,有事Ian一定要好好读一遍,瞻仰和膜拜一下==

因为刚接触TensorFlow,之前只是安装好跑过一两个例子,对其大致了解了一下,知道了Graph、Tensor、Session等一些基本概念。这里就分析一下大神的代码,自己按着撸一遍,边学习TensorFlow边实现论文仿真。好了,废话不多说,开始看代码。

1,实验数据

实验所用的数据集是Movie Review data from Rotten Tomatoes,即MR电影评论数据,其中包含10662条评论,一半正面评论,一般负面。共包含18758个单词(vocab_size),最长的评论有56个单词(Padding,sequence_len),保存在data目录下的rt-polarity.neg和rt-poliarity.pos文件中。这里我们使用10%作为验证集,剩下的作为训练集。数据预处理部分写在data_helpers.py文件中,其实代码很简单,这里仅对load_data_and_labels函数进行介绍:

def load_data_and_labels(positive_data_file, negative_data_file):

#读取正面、负面评论保存在列表中

positive_examples = list(open(positive_data_file, "r").readlines())

positive_examples = [s.strip() for s in positive_examples]

negative_examples = list(open(negative_data_file, "r").readlines())

negative_examples = [s.strip() for s in negative_examples]

#调用clean_str()函数对评论进行处理,安单词进行分割,保存在x_text列表中

x_text = positive_examples + negative_examples

x_text = [clean_str(sent) for sent in x_text]

#为每个评论添加标签,并保存在y中

positive_labels = [[0, 1] for _ in positive_examples]

negative_labels = [[1, 0] for _ in negative_examples]

y = np.concatenate([positive_labels, negative_labels], 0)

return [x_text, y]读取完之后还要对样本进行处理以获得vocabulary并将每个样本转化为单词索引列表。这一部分的代码在train.py中实现。代码入下:

#载入实验数据

x_text, y = data_helpers.load_data_and_labels(FLAGS.positive_data_file, FLAGS.negative_data_file)

#获得句子的最大长度,用于padding。这里其值为56

max_document_length = max([len(x.split(" ")) for x in x_text])

#调用tf内部函类VocabularyProcessor,其会读取x_text,按照词语出现顺序构建vocabulary,并给每个单词以索引,然后返回的x是形如[[1,2,3,4...],...,[55,66,777...]]的嵌套列表。内列表代表每个句子,其值是评论中每个词在vocabulary中的索引。所以x是10662*56维

vocab_processor = learn.preprocessing.VocabularyProcessor(max_document_length)

x = np.array(list(vocab_processor.fit_transform(x_text)))

# Randomly shuffle data将数据进行随机打乱

np.random.seed(10)

shuffle_indices = np.random.permutation(np.arange(len(y)))

x_shuffled = x[shuffle_indices]

y_shuffled = y[shuffle_indices]

# Split train/test set构建训练集和验证集

dev_sample_index = -1 * int(FLAGS.dev_sample_percentage * float(len(y)))

x_train, x_dev = x_shuffled[:dev_sample_index], x_shuffled[dev_sample_index:]

y_train, y_dev = y_shuffled[:dev_sample_index], y_shuffled[dev_sample_index:]

print("Vocabulary Size: {:d}".format(len(vocab_processor.vocabulary_)))

print("Train/Dev split: {:d}/{:d}".format(len(y_train), len(y_dev)))2,构建CNN神经网络代码

这部分代码在text_cnn.py文件中。TensorFlow中构建神经网络时思路还是比较清晰的,按照我们上篇博客的介绍,逐层构建即可。这里使用TextCNN类来实现这部分代码。

首先实现init()函数。这里要传入的参数意义如下:

sequence_length:句子的长度(56)

num_classes:输出类别,这里是2,正面和负面

vocab_size:字典大小,这里是18758

embedding_size:词向量的维度,这里是128

filter_size:卷积核的纵向宽度,这里是[3,4,5]三个

num_filters:卷积核个数,这里是128

l2_reg_lambda:正则化强度。默认为0

具体定义代码入下,已经加了比较详细的注释代码。

class TextCNN(object):

"""

A CNN for text classification.

Uses an embedding layer, followed by a convolutional, max-pooling and softmax layer.

"""

def __init__(

self, sequence_length, num_classes, vocab_size,

embedding_size, filter_sizes, num_filters, l2_reg_lambda=0.0):

# 输入、输出、dropout比率的占位符定义

self.input_x = tf.placeholder(tf.int32, [None, sequence_length], name="input_x")

self.input_y = tf.placeholder(tf.float32, [None, num_classes], name="input_y")

self.dropout_keep_prob = tf.placeholder(tf.float32, name="dropout_keep_prob")

# Keeping track of l2 regularization loss (optional)

l2_loss = tf.constant(0.0)

# 输入层,根据输入x中每个单词在voca中的索引经过lookup得到其词向量。这里词向量使用随机初始化W,而并未使用已经训练好的word2vec。

with tf.device('/cpu:0'), tf.name_scope("embedding"):

self.W = tf.Variable(

tf.random_uniform([vocab_size, embedding_size], -1.0, 1.0),

name="W")

self.embedded_chars = tf.nn.embedding_lookup(self.W, self.input_x)

#因为卷积操作conv2d()需要输入的是四维数据,分别代表着批处理大小、宽度、高度、通道数。而embedded_chars只有前三维,所以需要添加一维,设为1。变为:[None, sequence_length, embedding_size, 1]

self.embedded_chars_expanded = tf.expand_dims(self.embedded_chars, -1)

#为每一个filter_size创建卷积层+池化层。并将最后的结果合并成一个大的特征向量

pooled_outputs = []

for i, filter_size in enumerate(filter_sizes):

with tf.name_scope("conv-maxpool-%s" % filter_size):

#构建卷积核尺寸,输入和输出channel分别为1和num_filters

filter_shape = [filter_size, embedding_size, 1, num_filters]

W = tf.Variable(tf.truncated_normal(filter_shape, stddev=0.1), name="W")

b = tf.Variable(tf.constant(0.1, shape=[num_filters]), name="b")

conv = tf.nn.conv2d(

self.embedded_chars_expanded,

W,

strides=[1, 1, 1, 1],

padding="VALID",

name="conv")

#非线性操作,激活函数:relu(W*x + b)

h = tf.nn.relu(tf.nn.bias_add(conv, b), name="relu")

# 池化,选取卷积结果的最大值pooled的尺寸为[None, 1,1,128](卷积核个数)

pooled = tf.nn.max_pool(

h,

ksize=[1, sequence_length - filter_size + 1, 1, 1],

strides=[1, 1, 1, 1],

padding='VALID',

name="pool")

#pooled_outputs最终为一个长度为3的列表。每一个元素都是[None,1,1,128]的Tensor张量

pooled_outputs.append(pooled)

# Combine all the pooled features

num_filters_total = num_filters * len(filter_sizes)

#对pooled_outputs在第四个维度上进行合并,变成一个[None,1,1,384]Tensor张量

self.h_pool = tf.concat(pooled_outputs, 3)

#展开成两维Tensor[None,384]

self.h_pool_flat = tf.reshape(self.h_pool, [-1, num_filters_total])

# Add dropout

with tf.name_scope("dropout"):

self.h_drop = tf.nn.dropout(self.h_pool_flat, self.dropout_keep_prob)

#全连接层计算输出向量(w*h+b)和预测(scores向量中的最大值即为预测结果)

with tf.name_scope("output"):

W = tf.get_variable(

"W",

shape=[num_filters_total, num_classes],

initializer=tf.contrib.layers.xavier_initializer())

b = tf.Variable(tf.constant(0.1, shape=[num_classes]), name="b")

l2_loss += tf.nn.l2_loss(W)

l2_loss += tf.nn.l2_loss(b)

self.scores = tf.nn.xw_plus_b(self.h_drop, W, b, name="scores")

self.predictions = tf.argmax(self.scores, 1, name="predictions")

# CalculateMean cross-entropy loss计算scores和input_y的交叉熵损失函数

with tf.name_scope("loss"):

losses = tf.nn.softmax_cross_entropy_with_logits(logits=self.scores, labels=self.input_y)

self.loss = tf.reduce_mean(losses) + l2_reg_lambda * l2_loss

# Accuracy计算准确度,预测和真实标签相同即为正确

with tf.name_scope("accuracy"):

correct_predictions = tf.equal(self.predictions, tf.argmax(self.input_y, 1))

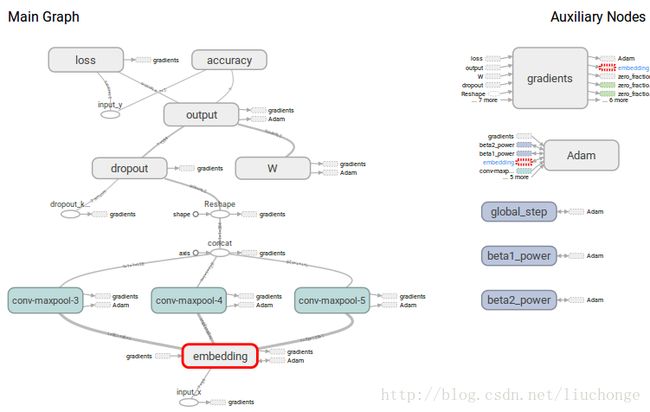

self.accuracy = tf.reduce_mean(tf.cast(correct_predictions, "float"), name="accuracy")最终网络结构在TensorBoard中可视化结果如下图所示:

对上述代码中使用的常用函数做个总结:

1,tf.nn.conv2d(input, filter, strides, padding, use_cudnn_on_gpu=None, data_format=None,

- name=None):执行卷积操作

- input是一个四维Tensor:[batch, in_height, in_width, in_channels]

- filter是一个四维Tensor:[filter_height, filter_width, in_channels,

out_channels] - strides卷积步长四维Tensor:必须满足[1, stride, stride, 1]的格式,每一位代表在输入上每维移动的步长。

- padding:“SAME”或者“VALID”,意味着宽卷积和窄卷积。

2,tf.random_uniform(shape, minval=0, maxval=None, dtype=tf.float32, seed=None, name=None)输出服从均匀分布[minval, maxval)的随机初始化函数。shape为要输出结果尺寸

3,tf.truncated_normal(shape, mean=0.0, stddev=1.0, dtype=tf.float32, seed=None, name=None)输出服从截断正态随机分布的随机初始化函数。生成的值会遵循一个指定了平均值和标准差的正态分布,只保留两个标准差以内的值,超出的值会被弃掉重新生成。

训练部分代码

既然我们的CNN神经网络已经搭建完毕,接下来就是传入数据开始一步步的训练了。训练部分代码在train.py文件中,代码如下所示:

with tf.Graph().as_default():

session_conf = tf.ConfigProto(

#允许TensorFlow回退到特定设备,并记录程序运行的设备信息

allow_soft_placement=FLAGS.allow_soft_placement,

log_device_placement=FLAGS.log_device_placement)

sess = tf.Session(config=session_conf)

#指定session

with sess.as_default():

#初始化CNN网络结构

cnn = TextCNN(

sequence_length=x_train.shape[1],

num_classes=y_train.shape[1],

vocab_size=len(vocab_processor.vocabulary_),

embedding_size=FLAGS.embedding_dim,

filter_sizes=list(map(int, FLAGS.filter_sizes.split(","))),

num_filters=FLAGS.num_filters,

l2_reg_lambda=FLAGS.l2_reg_lambda)

# trainable=False表明该参数虽然是Variable但并不属于网络运行参数,无需计算梯度并更新

global_step = tf.Variable(0, name="global_step", trainable=False)

optimizer = tf.train.AdamOptimizer(1e-3)

grads_and_vars = optimizer.compute_gradients(cnn.loss)

#计算梯度并根据AdamOptimizer优化函数跟新网络参数

train_op = optimizer.apply_gradients(grads_and_vars, global_step=global_step)

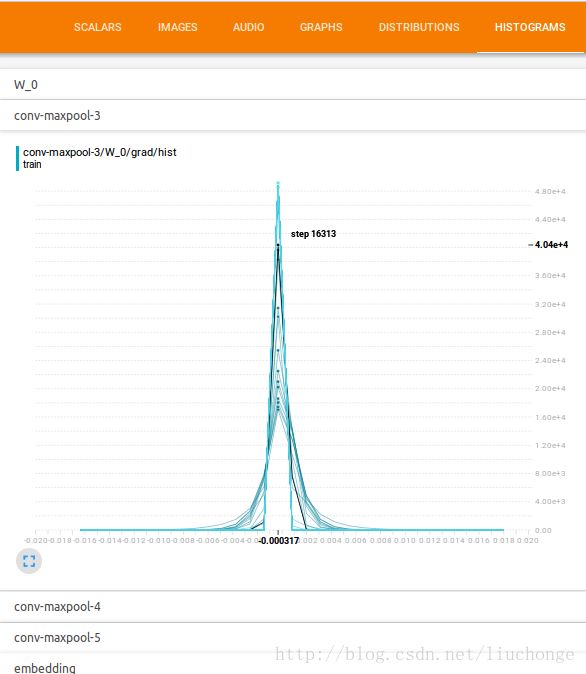

# 将一些想要在TensorBoard中观察的网络参数记录下来,保存到Summary中

grad_summaries = []

for g, v in grads_and_vars:

if g is not None:

grad_hist_summary = tf.summary.histogram("{}/grad/hist".format(v.name), g)

sparsity_summary = tf.summary.scalar("{}/grad/sparsity".format(v.name), tf.nn.zero_fraction(g))

grad_summaries.append(grad_hist_summary)

grad_summaries.append(sparsity_summary)

grad_summaries_merged = tf.summary.merge(grad_summaries)

# Output directory for models and summaries

timestamp = str(int(time.time()))

out_dir = os.path.abspath(os.path.join(os.path.curdir, "runs", timestamp))

print("Writing to {}\n".format(out_dir))

# Summaries for loss and accuracy

loss_summary = tf.summary.scalar("loss", cnn.loss)

acc_summary = tf.summary.scalar("accuracy", cnn.accuracy)

# Train Summaries

train_summary_op = tf.summary.merge([loss_summary, acc_summary, grad_summaries_merged])

train_summary_dir = os.path.join(out_dir, "summaries", "train")

train_summary_writer = tf.summary.FileWriter(train_summary_dir, sess.graph)

# Dev summaries

dev_summary_op = tf.summary.merge([loss_summary, acc_summary])

dev_summary_dir = os.path.join(out_dir, "summaries", "dev")

dev_summary_writer = tf.summary.FileWriter(dev_summary_dir, sess.graph)

# Checkpoint directory. Tensorflow assumes this directory already exists so we need to create it

checkpoint_dir = os.path.abspath(os.path.join(out_dir, "checkpoints"))

checkpoint_prefix = os.path.join(checkpoint_dir, "model")

if not os.path.exists(checkpoint_dir):

os.makedirs(checkpoint_dir)

saver = tf.train.Saver(tf.global_variables(), max_to_keep=FLAGS.num_checkpoints)

# Write vocabulary保存vocabulary

vocab_processor.save(os.path.join(out_dir, "vocab"))

# Initialize all variables初始化所有的网络参数

sess.run(tf.global_variables_initializer())

def train_step(x_batch, y_batch):

"""

A single training step

"""

#定义要传入的数据

feed_dict = {

cnn.input_x: x_batch,

cnn.input_y: y_batch,

cnn.dropout_keep_prob: FLAGS.dropout_keep_prob

}

#sess.run()运行一次网络优化,并将相应信息输出。

_, step, summaries, loss, accuracy = sess.run(

[train_op, global_step, train_summary_op, cnn.loss, cnn.accuracy],

feed_dict)

time_str = datetime.datetime.now().isoformat()

print("{}: step {}, loss {:g}, acc {:g}".format(time_str, step, loss, accuracy))

train_summary_writer.add_summary(summaries, step)

#验证集效果检测

def dev_step(x_batch, y_batch, writer=None):

"""

Evaluates model on a dev set

"""

feed_dict = {

cnn.input_x: x_batch,

cnn.input_y: y_batch,

cnn.dropout_keep_prob: 1.0

}

step, summaries, loss, accuracy = sess.run(

[global_step, dev_summary_op, cnn.loss, cnn.accuracy],

feed_dict)

time_str = datetime.datetime.now().isoformat()

print("{}: step {}, loss {:g}, acc {:g}".format(time_str, step, loss, accuracy))

if writer:

writer.add_summary(summaries, step)

# Generate batches

batches = data_helpers.batch_iter(

list(zip(x_train, y_train)), FLAGS.batch_size, FLAGS.num_epochs)

# Training loop. For each batch...

for batch in batches:

x_batch, y_batch = zip(*batch)

train_step(x_batch, y_batch)

current_step = tf.train.global_step(sess, global_step)

if current_step % FLAGS.evaluate_every == 0:

print("\nEvaluation:")

dev_step(x_dev, y_dev, writer=dev_summary_writer)

print("")

if current_step % FLAGS.checkpoint_every == 0:

path = saver.save(sess, checkpoint_prefix, global_step=current_step)

print("Saved model checkpoint to {}\n".format(path))然后我们就可以运行程序了,我在自己电脑上运行了大概三个小时==电脑太渣也是没有办法啊。然后再TensorBoard上可以查看其输出结果,但是现在还有一些图表示并看不太懂其含义,所以接下来需要再研究一下这些结果图都分别表明了什么。