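Hadoop Installation and MapReduce Programming Lab Guide

Environment: Red Hat 6.5, Hadoop 2.6.5, JDK 1.7.0

This document supplements the course lab manual; refer to the manual for the detailed commands.

1. Hadoop Installation Experiment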

- Preparation

- Configure the hostnames

To change the hostname:

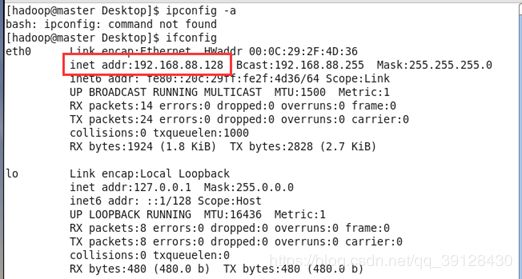

Check the IP address of your virtual machine:

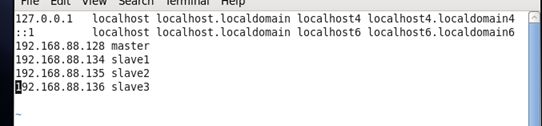

On every server, edit /etc/hosts and add an entry that maps each hostname to its IP address.
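As a sketch of what this hostname configuration amounts to, the script below appends one line per node to a hosts file. The IP addresses are placeholders, not values from this guide; the host names master and slave1 to slave3 are the ones used later in the document. By default it writes to a scratch file so it can be tried safely.

```shell
#!/bin/sh
# Assumed addresses: replace them with the IPs reported by your own VMs.
# HOSTS_FILE defaults to a scratch file; set HOSTS_FILE=/etc/hosts (as root) to apply for real.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host() {
    # Append "IP name" only if the name is not already present in the file.
    ip="$1"; name="$2"
    if ! grep -qw "$name" "$HOSTS_FILE" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
    fi
}

add_host 192.168.1.100 master
add_host 192.168.1.101 slave1
add_host 192.168.1.102 slave2
add_host 192.168.1.103 slave3

cat "$HOSTS_FILE"
```

Because add_host skips names that are already mapped, running the script twice does not create duplicate entries.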

- Install and configure the JDK

yum on Red Hat requires a registered subscription, so the JDK is downloaded manually.

JDK package used: jdk-7u67-linux-x64.tar.gz

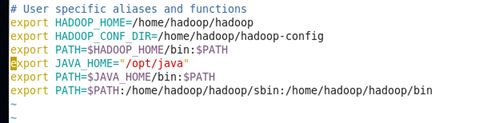

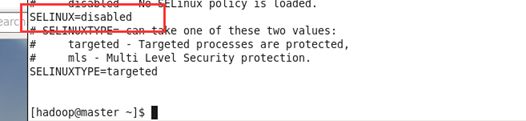

Configuration: add the following to ~/.bashrc:

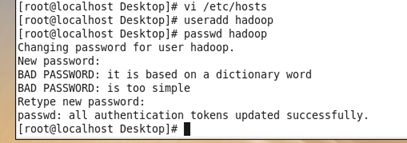
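The lines added to ~/.bashrc typically set JAVA_HOME and extend PATH. A minimal sketch, assuming the JDK archive was unpacked under /usr/local (that location is an assumption, not taken from this guide), and writing to a scratch profile file rather than the real ~/.bashrc:

```shell
#!/bin/sh
# Assumed install location: adjust to wherever jdk-7u67-linux-x64.tar.gz was unpacked.
# PROFILE defaults to a scratch file; set PROFILE="$HOME/.bashrc" to edit the real one.
PROFILE="${PROFILE:-$(mktemp)}"

cat >> "$PROFILE" <<'EOF'
export JAVA_HOME=/usr/local/jdk1.7.0_67
export PATH=$JAVA_HOME/bin:$PATH
EOF

# Load the settings into the current shell and show the result.
. "$PROFILE"
echo "JAVA_HOME=$JAVA_HOME"
```

After editing the real ~/.bashrc, running `source ~/.bashrc` (or logging in again) makes the variables visible, and `java -version` can be used to confirm that the expected JDK is picked up.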

- Create the user that runs Hadoop

Add a user named hadoop to run the Hadoop programs, and set its login password to hadoop.

————————————

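Granting an ordinary Linux user superuser rights through sudo, optionally without a password

vim /etc/sudoers

Find this line: root ALL=(ALL) ALL

Below it, add: xxx ALL=(ALL) ALL

Alternatively, any one of the following lines can be added to sudoers:

root ALL=(ALL) ALL

user ALL=(ALL) NOPASSWD: ALL

user ALL=(root) ALL    # lets user run commands with root's privileges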

————————————

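- Configure passwordless SSH authentication

For passwordless SSH login, the hosts must be set up for public/private key authentication. Log in as root and, on every host, edit /etc/ssh/sshd_config and remove the leading "#" from the lines RSAAuthentication yes and PubkeyAuthentication yes.

Log in again as the newly created hadoop user and run ssh-keygen -t rsa on every host to generate a local public/private key pair.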

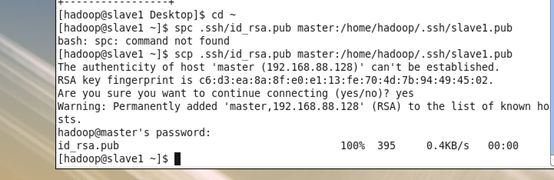

The same procedure must be carried out on the 3 slave hosts. Then use scp to copy the public keys of the 3 slave hosts to the master host:

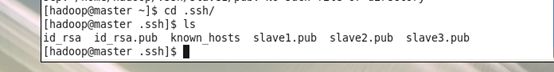

Here id_rsa.pub, whose content is shown below, is the public key of user hadoop on the master host, while slave1.pub, slave2.pub and slave3.pub are the public keys of the slave hosts.

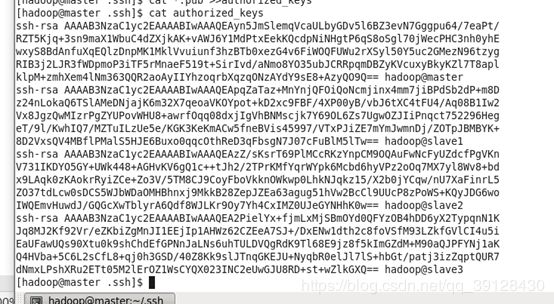

Next, add the public keys of all 4 hosts to the authorization file (authorized_keys) on the master host [1]; afterwards it looks like this:

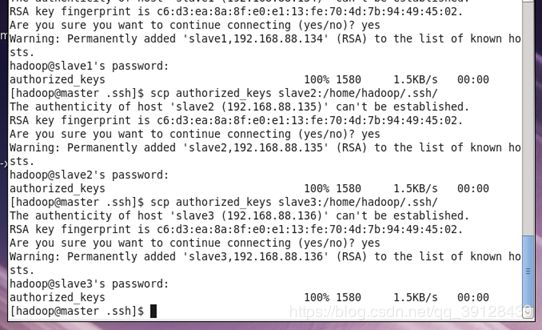
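Merging the four public keys into the authorization file can be sketched as a simple concatenation. The script below works in a scratch directory with stand-in key files so that it is safe to try; the placeholder key content is hypothetical, and on the real master the directory would be /home/hadoop/.ssh with the keys produced by ssh-keygen.

```shell
#!/bin/sh
# SSH_DIR defaults to a scratch directory; on the real master it would be /home/hadoop/.ssh.
SSH_DIR="${SSH_DIR:-$(mktemp -d)}"

for f in id_rsa.pub slave1.pub slave2.pub slave3.pub; do
    # Placeholder content; real files hold the public keys generated by ssh-keygen.
    [ -f "$SSH_DIR/$f" ] || echo "ssh-rsa PLACEHOLDER hadoop@${f%.pub}" > "$SSH_DIR/$f"
done

# Concatenate the four public keys into the authorization file.
cat "$SSH_DIR/id_rsa.pub" "$SSH_DIR/slave1.pub" \
    "$SSH_DIR/slave2.pub" "$SSH_DIR/slave3.pub" >> "$SSH_DIR/authorized_keys"

# One line per key is expected in the result.
wc -l < "$SSH_DIR/authorized_keys"
```

Using `>>` rather than `>` keeps any keys that were already authorized.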

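Then copy the authorized_keys file from the master host to each of the 3 slave hosts with scp, for example:

scp authorized_keys slave1:/home/hadoop/.ssh/

In addition, the permissions of the related directories and files must be corrected; run the following on every server: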

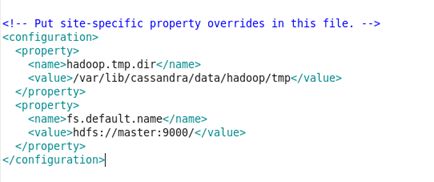
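The exact modes used in this guide are in the screenshot above. As a hedged sketch, the modes below (700 for the .ssh directory, 600 for authorized_keys) are ones that OpenSSH's default StrictModes check accepts; the script uses a scratch directory instead of the real ~/.ssh.

```shell
#!/bin/sh
# SSH_DIR defaults to a scratch directory; set SSH_DIR="$HOME/.ssh" to fix the real one.
SSH_DIR="${SSH_DIR:-$(mktemp -d)}"
touch "$SSH_DIR/authorized_keys"

# Only the owner may enter the directory or read/write the key list.
chmod 700 "$SSH_DIR"
chmod 600 "$SSH_DIR/authorized_keys"

ls -ld "$SSH_DIR" "$SSH_DIR/authorized_keys"
```

If sshd still asks for a password afterwards, group or world write permission on the home directory itself is a common remaining cause.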

Disable the firewall:

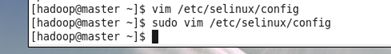

Set the SELINUX value to disabled (in /etc/selinux/config).
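The SELinux change can be sketched as a one-line substitution. The script below operates on a scratch copy with sample content (the sample lines are an assumption about what a typical file contains); pointing SELINUX_CONFIG at /etc/selinux/config and running as root would change the real file.

```shell
#!/bin/sh
# SELINUX_CONFIG defaults to a scratch file seeded with sample content.
SELINUX_CONFIG="${SELINUX_CONFIG:-$(mktemp)}"
[ -s "$SELINUX_CONFIG" ] || printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$SELINUX_CONFIG"

# Rewrite only the SELINUX= line; SELINUXTYPE= is left untouched.
tmp="$(mktemp)"
sed 's/^SELINUX=.*/SELINUX=disabled/' "$SELINUX_CONFIG" > "$tmp" && cat "$tmp" > "$SELINUX_CONFIG"
rm -f "$tmp"

grep '^SELINUX=' "$SELINUX_CONFIG"
```

A change to this file takes effect at the next boot.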

- Install the Hadoop cluster

- Install on master

Upload hadoop-2.6.5.tar.gz to the master node and unpack it:

[hadoop@master ~]$ tar zxvf hadoop-2.6.5.tar.gz

Create a symbolic link:

[hadoop@master ~]$ ln -s hadoop-2.6.5 hadoop

To simplify configuration, create a hadoop-config directory and set the corresponding environment variable:

[hadoop@master ~]$ mkdir hadoop-config

[hadoop@master ~]$ cp hadoop/etc/hadoop/* ./hadoop-config/

[hadoop@master ~]$ export HADOOP_CONF_DIR=/home/hadoop/hadoop-config/

Set the environment variables:

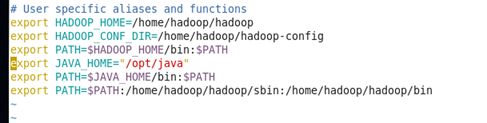

Edit the configuration files under etc/hadoop.

core-site.xml

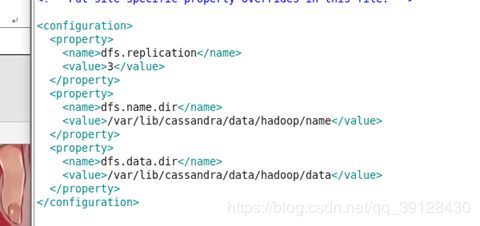

hdfs-site.xml

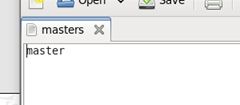
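The actual settings used in this guide are in the two screenshots above. As a rough sketch of what such files usually contain, the script below writes a minimal core-site.xml and hdfs-site.xml into a scratch directory. The property names are standard Hadoop 2.x settings; the values are assumptions pieced together from elsewhere in this document (the hdfs://master:9000 address in the job log and the tmp, name and data directories created on the slaves), and the replication factor of 3 is simply the Hadoop default.

```shell
#!/bin/sh
# CONF_DIR defaults to a scratch directory; set it to the real configuration
# directory (for example /home/hadoop/hadoop-config) to use the files.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

# core-site.xml: where the NameNode listens and where temporary data goes (assumed values).
cat > "$CONF_DIR/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/var/lib/cassandra/data/hadoop/tmp</value>
  </property>
</configuration>
EOF

# hdfs-site.xml: NameNode metadata dir, DataNode block dir, replication (assumed values).
cat > "$CONF_DIR/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/var/lib/cassandra/data/hadoop/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/var/lib/cassandra/data/hadoop/data</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
</configuration>
EOF

ls "$CONF_DIR"
```

Compare the generated files against the screenshots before using them; where they differ, the screenshots are authoritative.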

masters

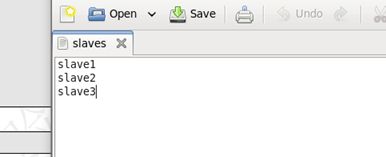

slaves

- Configure the slave hosts

Copy the configuration and installation files from the master host:

[hadoop@master ~]$ scp -r .bashrc hadoop-config/ hadoop-2.6.5.tar.gz slave1:/home/hadoop/

[hadoop@slave1 ~]# mkdir /var/lib/cassandra/data/hadoop

[hadoop@slave1 ~]# mkdir /var/lib/cassandra/data/hadoop/tmp

[hadoop@slave1 ~]# mkdir /var/lib/cassandra/data/hadoop/data

[hadoop@slave1 ~]# mkdir /var/lib/cassandra/data/hadoop/name

Unpack the installation archive and create the symbolic link:

tar zxvf hadoop-2.6.5.tar.gz && ln -s hadoop-2.6.5 hadoop

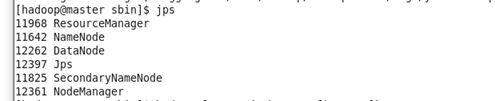

- Start the services

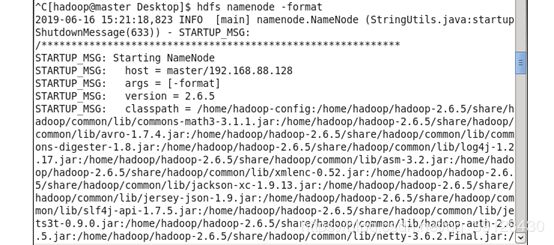

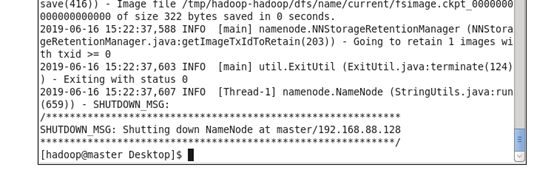

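Before starting the services for the first time, format the NameNode:

[hadoop@master ~]$ hadoop namenode -format

If this fails with an error, the cause is insufficient permission to create files in the target directory. Running

sudo chmod -R a+w /var/lib/

resolves it.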

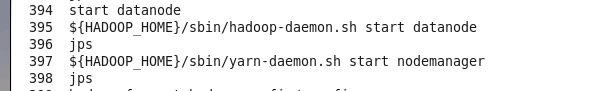

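If jps shows that the DataNode has not started, run:

${HADOOP_HOME}/sbin/hadoop-daemon.sh start datanode

Daemons that failed to start on other nodes can be started the same way.

To stop Hadoop: stop-all.sh

Upload files:

[hadoop@master ~]$ hadoop fs -put hadoop-config/ config

List them:

hadoop fs -ls /usr/hadoop/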

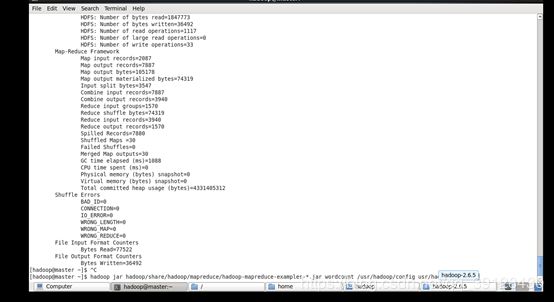

Run the example program:

[hadoop@master hadoop]$ hadoop jar hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-*.jar wordcount /usr/hadoop/config usr/hadoop/results

Output:

…

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000027_0 decomp: 41 len: 45 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 41 bytes from map-output for attempt_local2077883811_0001_m_000027_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 41, inMemoryMapOutputs.size() -> 11, commitMemory -> 25025, usedMemory ->25066

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000003_0 decomp: 3942 len: 3946 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 3942 bytes from map-output for attempt_local2077883811_0001_m_000003_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 3942, inMemoryMapOutputs.size() -> 12, commitMemory -> 25066, usedMemory ->29008

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000016_0 decomp: 1723 len: 1727 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 1723 bytes from map-output for attempt_local2077883811_0001_m_000016_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 1723, inMemoryMapOutputs.size() -> 13, commitMemory -> 29008, usedMemory ->30731

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000002_0 decomp: 4795 len: 4799 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 4795 bytes from map-output for attempt_local2077883811_0001_m_000002_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 4795, inMemoryMapOutputs.size() -> 14, commitMemory -> 30731, usedMemory ->35526

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000029_0 decomp: 15 len: 19 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 15 bytes from map-output for attempt_local2077883811_0001_m_000029_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 15, inMemoryMapOutputs.size() -> 15, commitMemory -> 35526, usedMemory ->35541

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000017_0 decomp: 1777 len: 1781 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 1777 bytes from map-output for attempt_local2077883811_0001_m_000017_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 1777, inMemoryMapOutputs.size() -> 16, commitMemory -> 35541, usedMemory ->37318

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000011_0 decomp: 2140 len: 2144 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 2140 bytes from map-output for attempt_local2077883811_0001_m_000011_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 2140, inMemoryMapOutputs.size() -> 17, commitMemory -> 37318, usedMemory ->39458

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000001_0 decomp: 4637 len: 4641 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 4637 bytes from map-output for attempt_local2077883811_0001_m_000001_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 4637, inMemoryMapOutputs.size() -> 18, commitMemory -> 39458, usedMemory ->44095

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000025_0 decomp: 938 len: 942 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 938 bytes from map-output for attempt_local2077883811_0001_m_000025_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 938, inMemoryMapOutputs.size() -> 19, commitMemory -> 44095, usedMemory ->45033

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000024_0 decomp: 1019 len: 1023 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 1019 bytes from map-output for attempt_local2077883811_0001_m_000024_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 1019, inMemoryMapOutputs.size() -> 20, commitMemory -> 45033, usedMemory ->46052

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000012_0 decomp: 2144 len: 2148 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 2144 bytes from map-output for attempt_local2077883811_0001_m_000012_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 2144, inMemoryMapOutputs.size() -> 21, commitMemory -> 46052, usedMemory ->48196

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000000_0 decomp: 12150 len: 12154 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 12150 bytes from map-output for attempt_local2077883811_0001_m_000000_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 12150, inMemoryMapOutputs.size() -> 22, commitMemory -> 48196, usedMemory ->60346

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000026_0 decomp: 386 len: 390 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 386 bytes from map-output for attempt_local2077883811_0001_m_000026_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 386, inMemoryMapOutputs.size() -> 23, commitMemory -> 60346, usedMemory ->60732

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000013_0 decomp: 2240 len: 2244 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 2240 bytes from map-output for attempt_local2077883811_0001_m_000013_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 2240, inMemoryMapOutputs.size() -> 24, commitMemory -> 60732, usedMemory ->62972

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000008_0 decomp: 2387 len: 2391 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 2387 bytes from map-output for attempt_local2077883811_0001_m_000008_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 2387, inMemoryMapOutputs.size() -> 25, commitMemory -> 62972, usedMemory ->65359

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000021_0 decomp: 1323 len: 1327 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 1323 bytes from map-output for attempt_local2077883811_0001_m_000021_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 1323, inMemoryMapOutputs.size() -> 26, commitMemory -> 65359, usedMemory ->66682

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000009_0 decomp: 2992 len: 2996 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 2992 bytes from map-output for attempt_local2077883811_0001_m_000009_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 2992, inMemoryMapOutputs.size() -> 27, commitMemory -> 66682, usedMemory ->69674

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000023_0 decomp: 1212 len: 1216 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 1212 bytes from map-output for attempt_local2077883811_0001_m_000023_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 1212, inMemoryMapOutputs.size() -> 28, commitMemory -> 69674, usedMemory ->70886

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000022_0 decomp: 1202 len: 1206 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 1202 bytes from map-output for attempt_local2077883811_0001_m_000022_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 1202, inMemoryMapOutputs.size() -> 29, commitMemory -> 70886, usedMemory ->72088

19/06/16 21:15:08 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local2077883811_0001_m_000010_0 decomp: 2111 len: 2115 to MEMORY

19/06/16 21:15:08 INFO reduce.InMemoryMapOutput: Read 2111 bytes from map-output for attempt_local2077883811_0001_m_000010_0

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 2111, inMemoryMapOutputs.size() -> 30, commitMemory -> 72088, usedMemory ->74199

19/06/16 21:15:08 INFO reduce.EventFetcher: EventFetcher is interrupted.. Returning

19/06/16 21:15:08 INFO mapred.LocalJobRunner: 30 / 30 copied.

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: finalMerge called with 30 in-memory map-outputs and 0 on-disk map-outputs

19/06/16 21:15:08 INFO mapred.Merger: Merging 30 sorted segments

19/06/16 21:15:08 INFO mapred.Merger: Down to the last merge-pass, with 30 segments left of total size: 73995 bytes

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: Merged 30 segments, 74199 bytes to disk to satisfy reduce memory limit

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: Merging 1 files, 74145 bytes from disk

19/06/16 21:15:08 INFO reduce.MergeManagerImpl: Merging 0 segments, 0 bytes from memory into reduce

19/06/16 21:15:08 INFO mapred.Merger: Merging 1 sorted segments

19/06/16 21:15:08 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 74136 bytes

19/06/16 21:15:08 INFO mapred.LocalJobRunner: 30 / 30 copied.

19/06/16 21:15:08 INFO Configuration.deprecation: mapred.skip.on is deprecated. Instead, use mapreduce.job.skiprecords

19/06/16 21:15:08 INFO mapred.Task: Task:attempt_local2077883811_0001_r_000000_0 is done. And is in the process of committing

19/06/16 21:15:08 INFO mapred.LocalJobRunner: 30 / 30 copied.

19/06/16 21:15:08 INFO mapred.Task: Task attempt_local2077883811_0001_r_000000_0 is allowed to commit now

19/06/16 21:15:08 INFO output.FileOutputCommitter: Saved output of task 'attempt_local2077883811_0001_r_000000_0' to hdfs://master:9000/user/hadoop/usr/hadoop/results/_temporary/0/task_local2077883811_0001_r_000000

19/06/16 21:15:08 INFO mapred.LocalJobRunner: reduce > reduce

19/06/16 21:15:08 INFO mapred.Task: Task 'attempt_local2077883811_0001_r_000000_0' done.

19/06/16 21:15:08 INFO mapred.LocalJobRunner: Finishing task: attempt_local2077883811_0001_r_000000_0

19/06/16 21:15:08 INFO mapred.LocalJobRunner: reduce task executor complete.

19/06/16 21:15:09 INFO mapreduce.Job: map 100% reduce 100%

19/06/16 21:15:09 INFO mapreduce.Job: Job job_local2077883811_0001 completed successfully

19/06/16 21:15:09 INFO mapreduce.Job: Counters: 38

File System Counters

FILE: Number of bytes read=10542805

FILE: Number of bytes written=19367044

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=1847773

HDFS: Number of bytes written=36492

HDFS: Number of read operations=1117

HDFS: Number of large read operations=0

HDFS: Number of write operations=33

Map-Reduce Framework

Map input records=2087

Map output records=7887

Map output bytes=105178

Map output materialized bytes=74319

Input split bytes=3547

Combine input records=7887

Combine output records=3940

Reduce input groups=1570

Reduce shuffle bytes=74319

Reduce input records=3940

Reduce output records=1570

Spilled Records=7880

Shuffled Maps =30

Failed Shuffles=0

Merged Map outputs=30

GC time elapsed (ms)=1088

CPU time spent (ms)=0

Physical memory (bytes) snapshot=0

Virtual memory (bytes) snapshot=0

Total committed heap usage (bytes)=4331405312

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=77522

File Output Format Counters

Bytes Written=36492

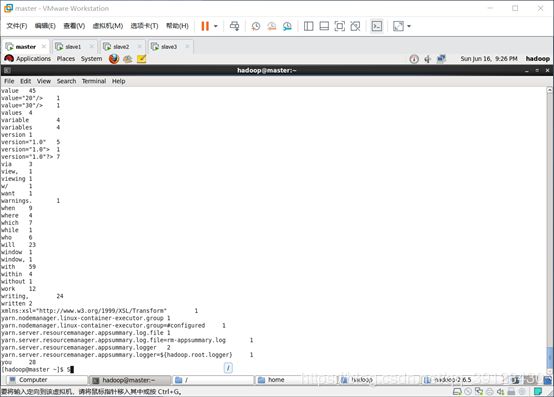
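To make concrete what the wordcount job computes, the following is a local shell stand-in, not the Hadoop implementation: it splits the input on whitespace, counts each token, and prints word and count separated by a tab, sorted by word in byte order. Punctuation stays attached to the words, which is why the job output lists entries such as "jobs" and "jobs," separately.

```shell
#!/bin/sh
# Local stand-in for the wordcount example: whitespace tokenization, then count per token.
wordcount() {
    tr -s '[:space:]' '\n' | sed '/^$/d' | LC_ALL=C sort | uniq -c |
        awk '{ print $2 "\t" $1 }'
}

# Small sample input; on a real run the input would be the uploaded configuration files.
printf 'jobs run\njobs, run jobs\n' | wordcount
```

Running the same function over a local copy of the input files (for example `cat hadoop-config/* | wordcount`) gives a quick way to sanity-check the counts reported by the cluster job.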

View the contents of part-r-00000 directly in HDFS:

hadoop fs -cat /user/hadoop/usr/hadoop/results/part-r-00000

The output is as follows:

…

java 3

javadoc 1

job 6

jobs 10

jobs, 1

jobs. 2

jsvc 2

jvm 3

jvm.class=org.apache.hadoop.metrics.ganglia.GangliaContext 1

jvm.class=org.apache.hadoop.metrics.ganglia.GangliaContext31 1

jvm.period=10 1

jvm.servers=localhost:8649 1

key 10

key. 1

key="capacity" 1

key="user-limit" 1

keys 1

keystore 9

keytab 2

killing 1

kms 2

kms-audit 1

language 24

last 1

law 24

leaf 2

level 6

levels 2

library 1

license 12

licenses 12

like 3

limit 1

limitations 24

line 1

links 1

list 44

location 2

log 14

log4j.additivity.kms-audit=false 1

log4j.additivity.org.apache.hadoop.hdfs.server.namenode.FSNamesystem.audit=false 1

log4j.additivity.org.apache.hadoop.mapred.AuditLogger=false 1

log4j.additivity.org.apache.hadoop.mapred.JobInProgress$JobSummary=false 1

log4j.additivity.org.apache.hadoop.yarn.server.resourcemanager.RMAppManager$ApplicationSummary=false 1

log4j.appender.DRFA.DatePattern=.yyyy-MM-dd 1

log4j.appender.DRFA.File=${hadoop.log.dir}/${hadoop.log.file} 1

log4j.appender.DRFA.layout.ConversionPattern=%d{ISO8601} 1

log4j.appender.DRFA.layout=org.apache.log4j.PatternLayout 1

log4j.appender.DRFA=org.apache.log4j.DailyRollingFileAppender 1

log4j.appender.DRFAS.DatePattern=.yyyy-MM-dd 1

log4j.appender.DRFAS.File=${hadoop.log.dir}/${hadoop.security.log.file} 1

log4j.appender.DRFAS.layout.ConversionPattern=%d{ISO8601} 1

log4j.appender.DRFAS.layout=org.apache.log4j.PatternLayout 1

log4j.appender.DRFAS=org.apache.log4j.DailyRollingFileAppender 1

log4j.appender.EventCounter=org.apache.hadoop.log.metrics.EventCounter 1

log4j.appender.JSA.File=${hadoop.log.dir}/${hadoop.mapreduce.jobsummary.log.file} 1

log4j.appender.JSA.MaxBackupIndex=${hadoop.mapreduce.jobsummary.log.maxbackupindex} 1

log4j.appender.JSA.MaxFileSize=${hadoop.mapreduce.jobsummary.log.maxfilesize} 1

log4j.appender.JSA.layout.ConversionPattern=%d{yy/MM/dd 1

log4j.appender.JSA.layout=org.apache.log4j.PatternLayout 1

log4j.appender.JSA=org.apache.log4j.RollingFileAppender 1

log4j.appender.MRAUDIT.File=${hadoop.log.dir}/mapred-audit.log 1

log4j.appender.MRAUDIT.MaxBackupIndex=${mapred.audit.log.maxbackupindex} 1

log4j.appender.MRAUDIT.MaxFileSize=${mapred.audit.log.maxfilesize} 1

log4j.appender.MRAUDIT.layout.ConversionPattern=%d{ISO8601} 1

log4j.appender.MRAUDIT.layout=org.apache.log4j.PatternLayout 1

log4j.appender.MRAUDIT=org.apache.log4j.RollingFileAppender 1

log4j.appender.NullAppender=org.apache.log4j.varia.NullAppender 1

log4j.appender.RFA.File=${hadoop.log.dir}/${hadoop.log.file} 1

log4j.appender.RFA.MaxBackupIndex=${hadoop.log.maxbackupindex} 1

log4j.appender.RFA.MaxFileSize=${hadoop.log.maxfilesize} 1

log4j.appender.RFA.layout.ConversionPattern=%d{ISO8601} 1

log4j.appender.RFA.layout=org.apache.log4j.PatternLayout 1

log4j.appender.RFA=org.apache.log4j.RollingFileAppender 1

log4j.appender.RFAAUDIT.File=${hadoop.log.dir}/hdfs-audit.log 1

log4j.appender.RFAAUDIT.MaxBackupIndex=${hdfs.audit.log.maxbackupindex} 1

log4j.appender.RFAAUDIT.MaxFileSize=${hdfs.audit.log.maxfilesize} 1

log4j.appender.RFAAUDIT.layout.ConversionPattern=%d{ISO8601} 1

log4j.appender.RFAAUDIT.layout=org.apache.log4j.PatternLayout 1

log4j.appender.RFAAUDIT=org.apache.log4j.RollingFileAppender 1

log4j.appender.RFAS.File=${hadoop.log.dir}/${hadoop.security.log.file} 1

log4j.appender.RFAS.MaxBackupIndex=${hadoop.security.log.maxbackupindex} 1

log4j.appender.RFAS.MaxFileSize=${hadoop.security.log.maxfilesize} 1

log4j.appender.RFAS.layout.ConversionPattern=%d{ISO8601} 1

log4j.appender.RFAS.layout=org.apache.log4j.PatternLayout 1

log4j.appender.RFAS=org.apache.log4j.RollingFileAppender 1

log4j.appender.RMSUMMARY.File=${hadoop.log.dir}/${yarn.server.resourcemanager.appsummary.log.file} 1

log4j.appender.RMSUMMARY.MaxBackupIndex=20 1

log4j.appender.RMSUMMARY.MaxFileSize=256MB 1

log4j.appender.RMSUMMARY.layout.ConversionPattern=%d{ISO8601} 1

log4j.appender.RMSUMMARY.layout=org.apache.log4j.PatternLayout 1

log4j.appender.RMSUMMARY=org.apache.log4j.RollingFileAppender 1

log4j.appender.TLA.isCleanup=${hadoop.tasklog.iscleanup} 1

log4j.appender.TLA.layout.ConversionPattern=%d{ISO8601} 1

log4j.appender.TLA.layout=org.apache.log4j.PatternLayout 1

log4j.appender.TLA.taskId=${hadoop.tasklog.taskid} 1

log4j.appender.TLA.totalLogFileSize=${hadoop.tasklog.totalLogFileSize} 1

log4j.appender.TLA=org.apache.hadoop.mapred.TaskLogAppender 1

log4j.appender.console.layout.ConversionPattern=%d{yy/MM/dd 1

log4j.appender.console.layout=org.apache.log4j.PatternLayout 1

log4j.appender.console.target=System.err 1

log4j.appender.console=org.apache.log4j.ConsoleAppender 1

log4j.appender.httpfs.Append=true 1

log4j.appender.httpfs.DatePattern='.'yyyy-MM-dd 1

log4j.appender.httpfs.File=${httpfs.log.dir}/httpfs.log 1

log4j.appender.httpfs.layout.ConversionPattern=%d{ISO8601} 1

log4j.appender.httpfs.layout=org.apache.log4j.PatternLayout 1

log4j.appender.httpfs=org.apache.log4j.DailyRollingFileAppender 1

log4j.appender.httpfsaudit.Append=true 1

log4j.appender.httpfsaudit.DatePattern='.'yyyy-MM-dd 1

log4j.appender.httpfsaudit.File=${httpfs.log.dir}/httpfs-audit.log 1

log4j.appender.httpfsaudit.layout.ConversionPattern=%d{ISO8601} 1

log4j.appender.httpfsaudit.layout=org.apache.log4j.PatternLayout 1

log4j.appender.httpfsaudit=org.apache.log4j.DailyRollingFileAppender 1

log4j.appender.kms-audit.Append=true 1

log4j.appender.kms-audit.DatePattern='.'yyyy-MM-dd 1

log4j.appender.kms-audit.File=${kms.log.dir}/kms-audit.log 1

log4j.appender.kms-audit.layout.ConversionPattern=%d{ISO8601} 1

log4j.appender.kms-audit.layout=org.apache.log4j.PatternLayout 1

log4j.appender.kms-audit=org.apache.log4j.DailyRollingFileAppender 1

log4j.appender.kms.Append=true 1

log4j.appender.kms.DatePattern='.'yyyy-MM-dd 1

log4j.appender.kms.File=${kms.log.dir}/kms.log 1

log4j.appender.kms.layout.ConversionPattern=%d{ISO8601} 1

log4j.appender.kms.layout=org.apache.log4j.PatternLayout 1

log4j.appender.kms=org.apache.log4j.DailyRollingFileAppender 1

log4j.category.SecurityLogger=${hadoop.security.logger} 1

log4j.logger.com.amazonaws.http.AmazonHttpClient=ERROR 1

log4j.logger.com.amazonaws=ERROR 1

log4j.logger.com.sun.jersey.server.wadl.generators.WadlGeneratorJAXBGrammarGenerator=OFF 1

log4j.logger.httpfsaudit=INFO, 1

log4j.logger.kms-audit=INFO, 1

log4j.logger.org.apache.hadoop.conf.Configuration.deprecation=WARN 1

log4j.logger.org.apache.hadoop.conf=ERROR 1

log4j.logger.org.apache.hadoop.fs.http.server=INFO, 1

log4j.logger.org.apache.hadoop.fs.s3a.S3AFileSystem=WARN 1

log4j.logger.org.apache.hadoop.hdfs.server.namenode.FSNamesystem.audit=${hdfs.audit.logger} 1

log4j.logger.org.apache.hadoop.lib=INFO, 1

log4j.logger.org.apache.hadoop.mapred.AuditLogger=${mapred.audit.logger} 1

log4j.logger.org.apache.hadoop.mapred.JobInProgress$JobSummary=${hadoop.mapreduce.jobsummary.logger} 1

log4j.logger.org.apache.hadoop.yarn.server.resourcemanager.RMAppManager$ApplicationSummary=${yarn.server.resourcemanager.appsummary.logger} 1

log4j.logger.org.apache.hadoop=INFO 1

log4j.logger.org.jets3t.service.impl.rest.httpclient.RestS3Service=ERROR 1

log4j.rootLogger=${hadoop.root.logger}, 1

log4j.rootLogger=ALL, 1

log4j.threshold=ALL 1

logger 2

logger. 1

logging 4

logs 2

logs. 1

loops 1

manage 1

manager 3

map 2

mapping 1

mapping]* 1

mappings 1

mappings. 1

mapred 1

mapred.audit.log.maxbackupindex=20 1

mapred.audit.log.maxfilesize=256MB 1

mapred.audit.logger=INFO,NullAppender 1

mapred.class=org.apache.hadoop.metrics.ganglia.GangliaContext 1

mapred.class=org.apache.hadoop.metrics.ganglia.GangliaContext31 1

mapred.class=org.apache.hadoop.metrics.spi.NullContext 1

mapred.period=10 1

mapred.servers=localhost:8649 1

mapreduce.cluster.acls.enabled 3

mapreduce.cluster.administrators 2

maps 1

master 1

masters 1

match="configuration"> 1

material 3

max 3

maximum 4

may 52

means 18

message 1

messages 2

metadata 1

method="html"/> 1

metrics 3

midnight 1

might 1

milliseconds. 4

min.user.id=1000#Prevent 1

missed 1

modified. 1

modify 1

modifying 1

more 12

mradmin 1

ms) 1

multi-dimensional 1

multiple 4

must 1

name 3

name. 1

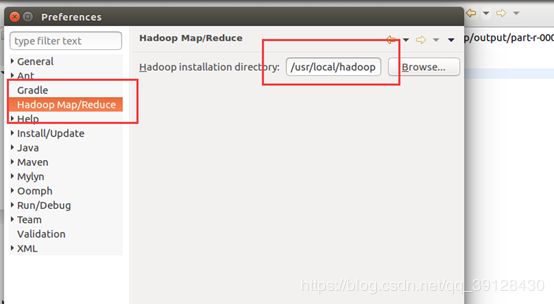

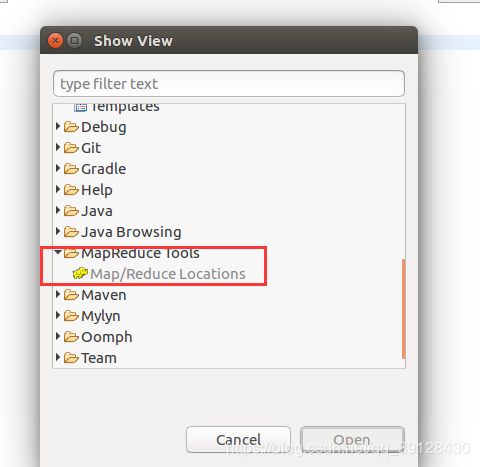

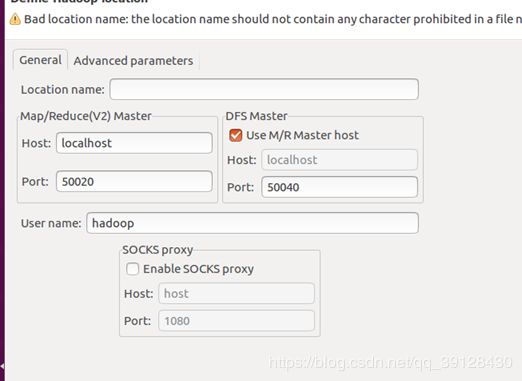

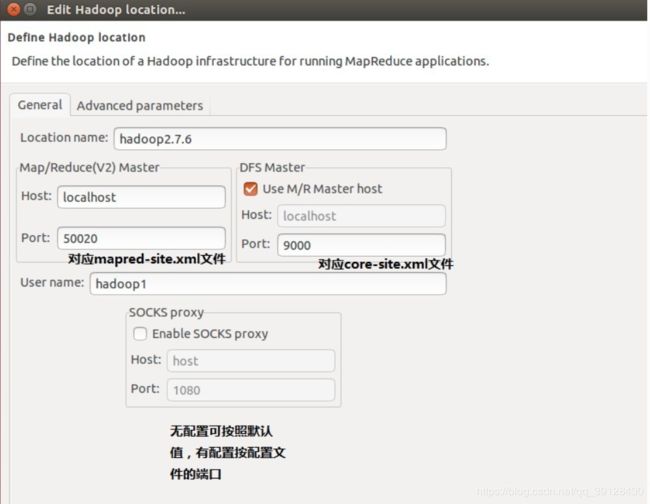

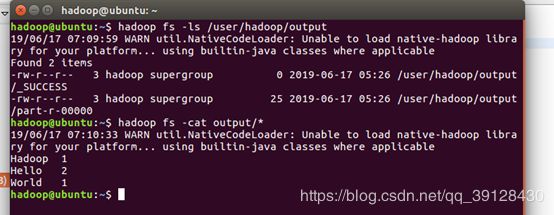

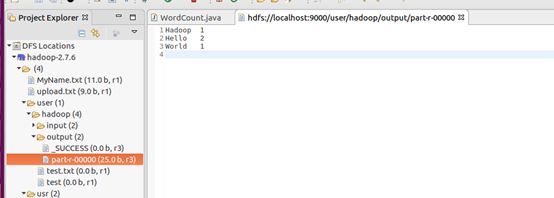

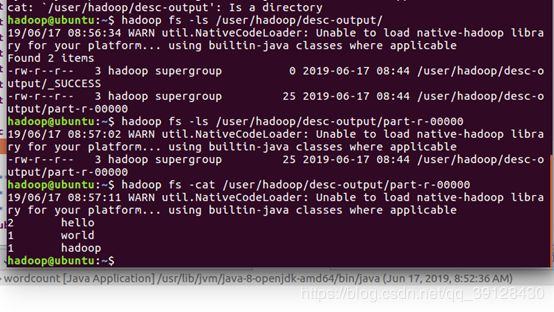

name="{name}"> namenode 1 namenode-metrics.out 1 namenode. 2 namenode. 1 namenode: 1 names. 19 nesting 1 new 2 no 4 nodes 2 nodes. 2 non-privileged 2 normal 1 not 51 null 5 number 6 numerical 3 obtain 24 of 138 off 4 on 30 one 15 one: 2 only 9 operation. 3 operations 8 operations. 9 opportunities 1 option 6 optional. 2 options 11 options. 2 or 71 ordinary 1 org.apache.hadoop.metrics2 1 other 1 other. 6 others 2 overridden 4 override 5 overrides 4 owner 1 ownership. 12 package-info.java 1 parameters 4 parent 1 part 2 password 3 path 2 pending 1 per 1 percent 1 percentage 1 period 1 period, 1 permissions 24 picked 3 pid 3 place 1 please 1 policy 3 port 5 ports 2 ports. 2 potential 2 preferred 3 prefix. 1 present, 1 principal 4 principal. 1 printed 1 priorities. 1 priority 1 privileged 2 privileges 1 privileges. 1 properties 7 property 11 protocol 6 protocol, 2 protocol. 2 provide 3 q1. 1 q2 2 q2. 1 quashed 1 query 1 queue 12 queue). 1 queue, 1 queue. 10 queues 9 queues, 1 queues. 3 rack 1 rack-local 1 recovery. 1 reduce 2 refresh 2 regarding 12 reload 2 remote 2 representing 3 required 29 resolve 2 resources 2 response. 2 restore 1 retrieve 1 return 1 returned 2 rolling 1 rollover-key 1 root 2 rootlogger 1 rpc.class=org.apache.hadoop.metrics.ganglia.GangliaContext 1 rpc.class=org.apache.hadoop.metrics.ganglia.GangliaContext31 1 rpc.class=org.apache.hadoop.metrics.spi.NullContext 1 rpc.period=10 1 rpc.servers=localhost:8649 1 run 10 running 3 running, 1 running. 1 runs 3 runtime 2 same 1 sample 1 sampling 2 scale 3 schedule 1 scheduler. 1 schedulers, 1 scheduling 2 secondary 1 seconds 1 seconds). 2 secret 3 secure 7 security 1 segment 1 select="description"/> 1 select="name"/> 1 select="property"> 1 select="value"/> 1 send 1 sending 1 separate 2 separated 20 separated. 1 server 3 service 2 service-level 2 set 60 set." 
1 sets 2 setting 7 setup 1 severity 1 should 6 sign 1 signature 2 similar 3 single 1 sinks 1 site-specific 4 sizes 1 slave1 1 slave2 1 slave3 1 so 3 softlink 1 software 24 some 2 sometimes 1 source 2 space 1 space), 2 spaces 1 special 19 specific 33 specification. 1 specified 13 specified, 3 specified. 6 specifiying 1 specify 3 specifying 2 split 1 stand-by 1 start 3 started 2 starting 3 state 4 states 1 status 2 stopped, 1 store 1 stored 2 stored. 7 string 3 string, 1 submission 1 submit 2 submitting 1 such 1 summary 5 super-users 1 support 2 supported 1 supports 1 supportsparse 1 suppress 1 symlink 2 syntax 1 syntax: 1 system 3 tag 1 tags 3 target 1 tasks 2 tasktracker. 1 template 1 temporary 2 than 1 that 19 the 370 them 1 then 8 there 2 therefore 3 this 78 threads 1 time 4 timeline 2 timestamp. 1 to 163 top 1 traffic. 1 transfer 4 true. 3 turn 1 two. 3 type 1 type, 1 type="text/xsl" 6 typically 1 u:%user:%user 1 ugi.class=org.apache.hadoop.metrics.ganglia.GangliaContext 1 ugi.class=org.apache.hadoop.metrics.ganglia.GangliaContext31 1 ugi.class=org.apache.hadoop.metrics.spi.NullContext 1 ugi.period=10 1 ugi.servers=localhost:8649 1 uncommented 1 under 84 unset 1 up 2 updating 1 usage 2 use 30 use, 2 use. 4 used 36 used. 3 user 48 user. 2 user1,user2 2 user? 1 users 27 users,wheel". 18 uses 2 using 14 value 45 value="20"/> 1 value="30"/> 1 values 4 variable 4 variables 4 version 1 version="1.0" 5 version="1.0"> 1 version="1.0"?> 7 via 3 view, 1 viewing 1 w/ 1 want 1 warnings. 
1 when 9 where 4 which 7 while 1 who 6 will 23 window 1 window, 1 with 59 within 4 without 1 work 12 writing, 24 written 2 xmlns:xsl="http://www.w3.org/1999/XSL/Transform" 1 yarn.nodemanager.linux-container-executor.group 1 yarn.nodemanager.linux-container-executor.group=#configured 1 yarn.server.resourcemanager.appsummary.log.file 1 yarn.server.resourcemanager.appsummary.log.file=rm-appsummary.log 1 yarn.server.resourcemanager.appsummary.logger 2 yarn.server.resourcemanager.appsummary.logger=${hadoop.root.logger} 1 you 28

Download the results to the local file system:

hadoop fs -get /user/hadoop/usr/hadoop/results count-results

ls

Inspect the downloaded files; if they are there, Experiment 1 is complete.

2. MapReduce Programming Experiment

Objectives

- Understand how MapReduce works

- Master the basic MapReduce programming approach

- Learn MapReduce programming in Eclipse

- Learn to design, implement and run MapReduce programs

Content

Note: The course lab manual is dated. It targets Hadoop 0.20, whereas Hadoop has since reached the 2.x and 3.x releases, which changed many classes, methods and APIs. This experiment therefore uses the lab manual as a reference but follows the steps below.

The sample program WordCount counts how often each word occurs in a set of text files. According to the lab manual, the complete source ships with the Hadoop distribution in the src/examples directory; the layout changed in Hadoop 2.x and that directory is no longer there.

WordCount code:

/**

* Licensed to the Apache Software Foundation (ASF) under one

* or more contributor license agreements. See the NOTICE file

* distributed with this work for additional information

* regarding copyright ownership. The ASF licenses this file

* to you under the Apache License, Version 2.0 (the

* "License"); you may not use this file except in compliance

* with the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

import java.io.IOException;

import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.GenericOptionsParser;

public class WordCount {

public static class TokenizerMapper

extends Mapper

I used hadoop-eclipse-plugin-2.7.3.jar here. Copy the compiled hadoop-eclipse-plugin jar into the plugins directory of the Eclipse installation and restart Eclipse. Choose Window > Preferences from the menu bar; if the plugin was installed successfully, the entry shown in the figure below appears, and the Hadoop installation directory is selected in the area circled in red.

Choose Window > Show View > Other > Map/Reduce Locations.

In the Map/Reduce Locations window at the bottom, right-click an empty area and choose New Hadoop location, or click the blue elephant icon with a plus sign on the right. Then fill in the connection details.

The settings are shown in the figure below:

Eclipse makes it convenient to develop and debug Hadoop parallel programs. IBM MapReduce Tools for Eclipse is recommended; this Eclipse plugin simplifies developing and deploying Hadoop parallel programs. With it you can create a Hadoop MapReduce application in Eclipse, use wizards for developing classes based on the MapReduce framework, package the application as a JAR file, deploy it to a Hadoop server (local or remote), and inspect the state of the Hadoop server, the Hadoop distributed file system (DFS) and the currently running jobs through a dedicated perspective.

The MapReduce Tools can be downloaded from the IBM alphaWorks website, or from the download list of this article. Unpack the downloaded archive into your Eclipse installation directory and restart Eclipse. Then choose Window > Preferences from the Eclipse main menu, select Hadoop Home Directory on the left, and set your Hadoop home directory.

With the configuration complete, run the example in Eclipse.

Choose File > Project, select Map/Reduce Project, and enter a project name such as WordCount.

Enter the WordCount code.

Right-click WordCount.java and choose Run As > Run Configurations to set the program arguments: hdfs://localhost:9000/user/hadoop/input hdfs://localhost:9000/user/hadoop/output, which are the input and output paths respectively.

When the run finishes, check the result:

Method 1: view it from a terminal with a command.

Method 2: view it directly in Eclipse: under DFS Locations, double-click part-r-00000 to open it.

Next, the WordCount program is improved. Goals: exclude stop words (read from a text file) from the count, and output the words in descending order of frequency.

WordCount code with descending output:

package desc;

/**

* WordCount

* Counts how often each word occurs in the input files.

* "Stop words" (read from a text file) are excluded from the count.

* The result is output in descending order of word frequency.

*

* Take particular care when importing a class: make sure it comes from the package you intend.

*

* */

import java.io.BufferedReader;

import java.io.FileReader;

import java.io.IOException;

import java.util.*;

import java.util.Map.Entry;

import org.apache.hadoop.filecache.DistributedCache;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.conf.*;

import org.apache.hadoop.io.*;

import org.apache.hadoop.mapreduce.*;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.SequenceFileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.map.InverseMapper;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.mapreduce.lib.output.SequenceFileOutputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

public class WordCount {

/**

* Map: converts the input text data into

Run result:

This completes Experiment 1 and Experiment 2.

Acknowledgements:

[1] https://blog.csdn.net/u010223431/article/details/51191978

[2] https://blog.csdn.net/xingyyn78/article/details/81085100

[3] https://blog.csdn.net/abcjennifer/article/details/22393197

[4] https://www.cnblogs.com/StevenSun1991/p/6931500.html

[5] https://www.ibm.com/developerworks/cn/opensource/os-cn-hadoop2/index.html