A Small Audio/Video Call Demo for Android Based on WebRTC

Version information

Android Studio: 3.5.2

org.webrtc:google-webrtc:1.0.28513

Introduction to WebRTC

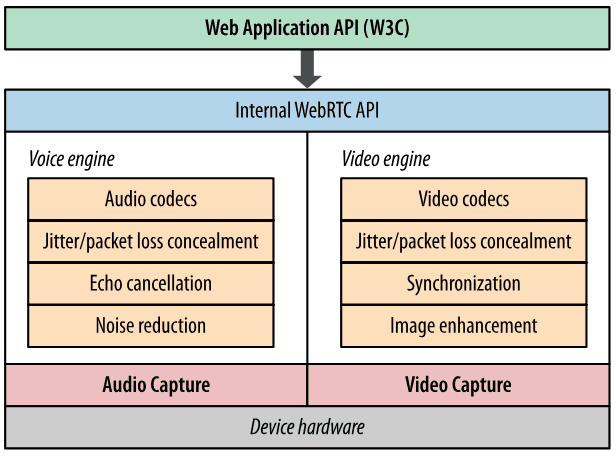

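What is WebRTC

WebRTC, short for Web Real-Time Communication, is an API that lets web browsers hold real-time voice or video conversations. It was open-sourced on June 1, 2011 and, with the backing of Google, Mozilla, and Opera, was taken up by the World Wide Web Consortium (W3C) as a recommended standard.

Use cases

Peer-to-peer audio calls, video calls, and data sharing.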

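Advantages

- Convenient. For users: before WebRTC, real-time communication required installing plugins or client software, and for many users downloading plugins and installing and updating software was complicated and error-prone. WebRTC is built into the browser, so users can communicate in real time without any plugin or extra software. For developers: before Google open-sourced WebRTC, browser-to-browser communication technology was held by large companies and was very hard to build. Developers can now implement web audio/video communication with simple HTML tags and JavaScript APIs.

- Free. WebRTC is fairly mature and bundles high-quality audio/video engines and advanced codecs, yet Google charges nothing for the technology.

- Strong NAT traversal ("hole punching"). WebRTC includes the key NAT and firewall traversal techniques STUN, ICE, TURN, and RTP-over-TCP, and it supports proxies.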

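Disadvantages

- No server-side solution. WebRTC does not cover the design or deployment of the servers.

- Transport quality is hard to guarantee. WebRTC's transport design is based on P2P, which makes quality hard to guarantee and leaves few ways to optimize: only end-to-end tuning is possible, and that copes poorly with a complex internet environment. Across regions, across carriers, on low bandwidth, or under heavy packet loss, quality is largely left to chance, and these are exactly the typical conditions for internet applications in China.

- WebRTC suits one-to-one calls best. Group calls can be built on top of it, but nothing is optimized for them, especially for very large groups.

- Device adaptation. Problems such as echo and failed recording keep turning up, above all on Android. There are many Android vendors and each customizes the standard Android framework, which leads to many usability problems (for example, failing to access the microphone) and quality problems (such as echo and howling).

- Limited support for native development. As the name suggests, WebRTC mainly targets web applications. It can be used for native development, but it touches many domains (audio/video capture, processing, encoding and decoding, real-time transport), the framework design is complex, and the APIs are fine-grained, so even compiling the project is not easy.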

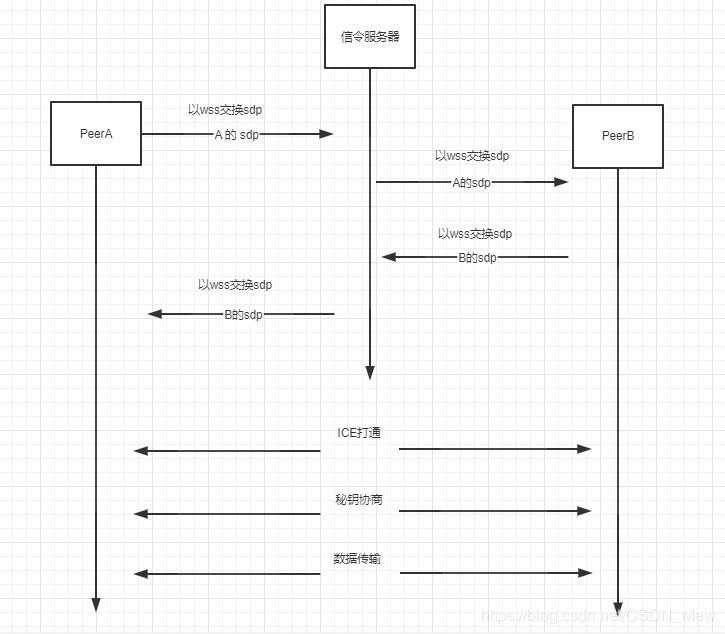

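How it works

Basic architecture

SDP exchange

- A enters an empty room and waits for other devices to join.

- B enters the room. A receives the "_new_peer" notification over wss, creates a PeerConnection for B, and calls addStream on it to attach A's local stream.

- After entering the room, B receives the "_peers" notification over wss, creates a PeerConnection for A, and calls addStream on it to attach B's local stream. B then calls createOffer on the PeerConnection to create an offer that contains the SDP. Once the offer is created, SdpObserver.onCreateSuccess is called back; there B calls peerConnection.setLocalDescription() to apply the SDP to its own PeerConnection. When that succeeds, SdpObserver.onSetSuccess is called back, and there B sends the offer to the server.

- The server forwards B's offer to A.

- A receives the "_offer" notification over wss, which carries B's offer. A calls setRemoteDescription on the PeerConnection to store B's SDP. When that succeeds, SdpObserver.onSetSuccess is called back; there A calls createAnswer on the PeerConnection to create an answer that contains the SDP. Once the answer is created, SdpObserver.onCreateSuccess is called back; there A calls peerConnection.setLocalDescription() to apply the SDP to its own PeerConnection. When that succeeds, SdpObserver.onSetSuccess is called back, and there A sends the answer to the server.

- The server forwards A's answer to B.

- B receives the "_answer" notification over wss, which carries A's answer. B calls setRemoteDescription on the PeerConnection to store A's SDP. When that succeeds, SdpObserver.onSetSuccess is called back.

- The SDP exchange is complete.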
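The order of calls in the list above can be traced with a small in-memory simulation. This is only a sketch in plain Java with no WebRTC dependency: `SimPeer`, `SdpExchangeDemo`, and the string SDPs are hypothetical stand-ins for `PeerConnection`, `SdpObserver`, and the signaling server, and the outgoing event name `__answer` is assumed by analogy with the `__offer` event used later in this post.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for one side of the call; not the real PeerConnection API. */
final class SimPeer {
    final String name;
    final List<String> log; // shared trace of the steps, in order
    String localSdp;
    String remoteSdp;

    SimPeer(String name, List<String> log) {
        this.name = name;
        this.log = log;
    }

    /** createOffer, then setLocalDescription, then send the offer. */
    String createAndSendOffer() {
        localSdp = "offer-from-" + name;
        log.add(name + ": setLocalDescription(offer)");
        log.add(name + ": send __offer");
        return localSdp;
    }

    /** setRemoteDescription(offer), createAnswer, setLocalDescription, then send. */
    String receiveOfferAndAnswer(String offer) {
        remoteSdp = offer;
        log.add(name + ": setRemoteDescription(offer)");
        localSdp = "answer-from-" + name;
        log.add(name + ": setLocalDescription(answer)");
        log.add(name + ": send __answer");
        return localSdp;
    }

    /** setRemoteDescription(answer); the SDP exchange is complete after this. */
    void receiveAnswer(String answer) {
        remoteSdp = answer;
        log.add(name + ": setRemoteDescription(answer)");
    }
}

final class SdpExchangeDemo {
    /** B (the newcomer) offers; A (already in the room) answers. */
    static List<String> run() {
        List<String> log = new ArrayList<>();
        SimPeer a = new SimPeer("A", log);
        SimPeer b = new SimPeer("B", log);
        String offer = b.createAndSendOffer();          // server relays it to A as _offer
        String answer = a.receiveOfferAndAnswer(offer); // server relays it to B as _answer
        b.receiveAnswer(answer);
        return log;
    }

    public static void main(String[] args) {
        for (String line : run()) {
            System.out.println(line);
        }
    }
}
```

After the run, each peer holds its own SDP as the local description and the other side's SDP as the remote description, which is the end state the list describes.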

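IceCandidate exchange

- After A contacts the ICE server, PeerConnection.Observer.onIceCandidate is called back, and A sends the IceCandidate to the server.

- After entering the room, B likewise sends its IceCandidate to the server.

- A receives the "_ice_candidate" notification over wss, which carries B's IceCandidate, and calls PeerConnection.addIceCandidate to add it.

- B receives the "_ice_candidate" notification over wss, which carries A's IceCandidate, and calls PeerConnection.addIceCandidate to add it.

- Once the IceCandidate exchange is complete, the peer-to-peer connection is established and A and B can exchange data.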
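Both exchanges are driven by named notifications arriving over the socket ("_new_peer", "_peers", "_offer", "_answer", "_ice_candidate"). One way to route them, shown here as a minimal sketch, is a map from event name to handler. `SignalDispatcher` is a hypothetical helper and the payload is simplified to a plain string; the demo's actual message parsing is not shown in this post.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Consumer;

/** Routes a signaling event name to its handler; a hypothetical helper, not part of WebRTC. */
final class SignalDispatcher {
    private final Map<String, Consumer<String>> handlers = new HashMap<>();

    /** Registers the handler for one event name such as "_offer". */
    void on(String eventName, Consumer<String> handler) {
        handlers.put(eventName, handler);
    }

    /** Invokes the matching handler with the payload; returns false for unknown events. */
    boolean dispatch(String eventName, String payload) {
        Consumer<String> handler = handlers.get(eventName);
        if (handler == null) {
            return false;
        }
        handler.accept(payload);
        return true;
    }

    public static void main(String[] args) {
        SignalDispatcher dispatcher = new SignalDispatcher();
        dispatcher.on("_offer", sdp -> System.out.println("setRemoteDescription: " + sdp));
        dispatcher.on("_ice_candidate", c -> System.out.println("addIceCandidate: " + c));
        dispatcher.dispatch("_offer", "remote offer sdp");
        dispatcher.dispatch("_ice_candidate", "remote candidate");
    }
}
```

Returning false for an unknown event lets the caller log unexpected messages instead of silently dropping them.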

Code implementation

Create the WebRTC connection factory

/**
 * Creates the WebRTC connection factory.
 *
 * @return the configured PeerConnectionFactory
 */
private PeerConnectionFactory createPeerConnectionFactory() {
    VideoEncoderFactory encoderFactory;
    VideoDecoderFactory decoderFactory;
    // Initialize the library; all other options keep their defaults
    PeerConnectionFactory.initialize(PeerConnectionFactory.InitializationOptions.builder(context).createInitializationOptions());
    // Use the default video codec factories. The two booleans are
    // enableIntelVp8Encoder and enableH264HighProfile.
    encoderFactory = new DefaultVideoEncoderFactory(rootGLContext.getEglBaseContext(), true, true);
    decoderFactory = new DefaultVideoDecoderFactory(rootGLContext.getEglBaseContext());
    PeerConnectionFactory.Options options = new PeerConnectionFactory.Options();
    return PeerConnectionFactory.builder().setOptions(options)
            .setAudioDeviceModule(JavaAudioDeviceModule.builder(context).createAudioDeviceModule()) // audio device
            .setVideoDecoderFactory(decoderFactory) // video decoder factory
            .setVideoEncoderFactory(encoderFactory) // video encoder factory
            .createPeerConnectionFactory();
}

Create the local stream

localStream = factory.createLocalMediaStream("ARDAMS");

Add an audio track

Create the audio source

// Keys of the audio-source constraints

// googEchoCancellation: echo cancellation
private static final String AUDIO_ECHO_CANCELLATION_CONSTRAINT = "googEchoCancellation";
// googNoiseSuppression: noise suppression
private static final String AUDIO_NOISE_SUPPRESSION_CONSTRAINT = "googNoiseSuppression";
// googAutoGainControl: automatic gain control
private static final String AUDIO_AUTO_GAIN_CONTROL_CONSTRAINT = "googAutoGainControl";
// googHighpassFilter: high-pass filter
private static final String AUDIO_HIGH_PASS_FILTER_CONSTRAINT = "googHighpassFilter";

/**
 * Builds the constraints for the audio source.
 *
 * @return the audio constraints
 */
private MediaConstraints createAudioConstraints() {
    MediaConstraints audioConstraints = new MediaConstraints();
    audioConstraints.mandatory.add(
            new MediaConstraints.KeyValuePair(AUDIO_ECHO_CANCELLATION_CONSTRAINT, "true"));
    audioConstraints.mandatory.add(
            new MediaConstraints.KeyValuePair(AUDIO_NOISE_SUPPRESSION_CONSTRAINT, "true"));
    audioConstraints.mandatory.add(
            new MediaConstraints.KeyValuePair(AUDIO_AUTO_GAIN_CONTROL_CONSTRAINT, "false"));
    audioConstraints.mandatory.add(
            new MediaConstraints.KeyValuePair(AUDIO_HIGH_PASS_FILTER_CONSTRAINT, "true"));
    return audioConstraints;
}

// Audio source
AudioSource audioSource = factory.createAudioSource(createAudioConstraints());

Create and add the audio track

// Audio track
AudioTrack audioTrack = factory.createAudioTrack("ARDAMSa0", audioSource);
// Add the audio track to the local stream
localStream.addTrack(audioTrack);

Add a video track

Create the video capturer

/**
 * Creates the video capturer.
 *
 * @return the capturer, or null if no camera could be opened
 */
private VideoCapturer createVideoCapturer() {
    VideoCapturer videoCapturer;
    if (Camera2Enumerator.isSupported(context)) {
        // Camera2 is supported
        Camera2Enumerator camera2Enumerator = new Camera2Enumerator(context);
        videoCapturer = createVideoCapturer(camera2Enumerator);
    } else {
        // Camera2 is not supported; fall back to Camera1
        Camera1Enumerator camera1Enumerator = new Camera1Enumerator(true);
        videoCapturer = createVideoCapturer(camera1Enumerator);
    }
    return videoCapturer;
}

/**
 * Picks the front or the back camera.
 * The front camera is preferred; the back camera is used only if there is no front camera.
 *
 * @param enumerator the camera enumerator to query
 * @return the capturer, or null if no camera could be opened
 */
private VideoCapturer createVideoCapturer(CameraEnumerator enumerator) {
    String[] deviceNames = enumerator.getDeviceNames();
    // First pass: front-facing cameras
    for (String deviceName : deviceNames) {
        if (enumerator.isFrontFacing(deviceName)) {
            VideoCapturer videoCapturer = enumerator.createCapturer(deviceName, null);
            if (videoCapturer != null) {
                return videoCapturer;
            }
        }
    }
    // Second pass: back-facing cameras
    for (String deviceName : deviceNames) {
        if (enumerator.isBackFacing(deviceName)) {
            VideoCapturer videoCapturer = enumerator.createCapturer(deviceName, null);
            if (videoCapturer != null) {
                return videoCapturer;
            }
        }
    }
    return null;
}
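The front-then-back fallback can be checked in isolation from the camera APIs. The sketch below reproduces only the selection order over a list of device names; `CameraPicker` and the two predicates are hypothetical and stand in for `CameraEnumerator.isFrontFacing` and `isBackFacing`. It leaves out the extra check in the real code that `createCapturer` returned a non-null capturer.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;

/** Hypothetical helper mirroring the front-then-back fallback; not part of the WebRTC API. */
final class CameraPicker {
    /**
     * Returns the first front-facing name, else the first back-facing name,
     * else null when neither kind is present.
     */
    static String pick(List<String> names, Predicate<String> isFront, Predicate<String> isBack) {
        for (String name : names) {
            if (isFront.test(name)) {
                return name;
            }
        }
        for (String name : names) {
            if (isBack.test(name)) {
                return name;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        List<String> names = Arrays.asList("0", "1");
        // Assumption for this demo only: "1" is front facing and "0" is back facing
        System.out.println(pick(names, "1"::equals, "0"::equals));
    }
}
```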

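Create the video source

// Note: createVideoCapturer() returns null when no camera can be opened
VideoCapturer videoCapturer = createVideoCapturer();
// Video source
VideoSource videoSource = factory.createVideoSource(videoCapturer.isScreencast());
SurfaceTextureHelper surfaceTextureHelper = SurfaceTextureHelper.create(
        "CaptureThread", rootGLContext.getEglBaseContext()
);
// Initialize the capturer. The GL context is passed in so frames can be rendered directly;
// no manual encoding or decoding is needed
videoCapturer.initialize(surfaceTextureHelper, context, videoSource.getCapturerObserver());
// Start capturing: width, height, frames per second
videoCapturer.startCapture(300, 240, 10);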

Create and add the video track

// Video track
VideoTrack videoTrack = factory.createVideoTrack("ARDAMSv0", videoSource);
// Add the video track to the local stream
localStream.addTrack(videoTrack);

Display the local stream

/**
 * Callback invoked once the local stream has been created; shows the picture.
 *
 * @param localStream the local media stream
 * @param userId ID of the local user
 */
public void onSetLocalStream(final MediaStream localStream, final String userId) {
    runOnUiThread(new Runnable() {
        @Override
        public void run() {
            addView(localStream, userId);
        }
    });
}

/**
 * Adds a view to the FrameLayout.
 *
 * @param stream the stream to render
 * @param userId ID of the user the stream belongs to
 */
private void addView(MediaStream stream, String userId) {
    // Use the SurfaceViewRenderer provided by WebRTC instead of a plain SurfaceView;
    // it renders the frames itself, so the developer does not have to
    SurfaceViewRenderer renderer = new SurfaceViewRenderer(this);
    // Initialize the renderer
    renderer.init(rootEglBase.getEglBaseContext(), null);
    // Scaling mode: SCALE_ASPECT_FIT fits the whole frame inside the view (may letterbox);
    // SCALE_ASPECT_FILL fills the view and crops whatever overflows
    renderer.setScalingType(RendererCommon.ScalingType.SCALE_ASPECT_FIT);
    // Mirror the image
    renderer.setMirror(true);
    // Feed the stream's first video track to the SurfaceViewRenderer
    if (stream.videoTracks.size() > 0) {
        stream.videoTracks.get(0).addSink(renderer);
    }
    // Conference room: 1 + N people
    videoViews.put(userId, renderer);
    persons.add(userId);
    // Add the SurfaceViewRenderer to the FrameLayout (width=0, height=0 at this point)
    mFrameLayout.addView(renderer);
    // Recompute the size and position of every renderer for the new participant count
    int size = videoViews.size();
    for (int i = 0; i < size; i++) {
        String peerId = persons.get(i);
        SurfaceViewRenderer renderer1 = videoViews.get(peerId);
        if (renderer1 != null) {
            FrameLayout.LayoutParams layoutParams = new FrameLayout.LayoutParams(
                    ViewGroup.LayoutParams.MATCH_PARENT, ViewGroup.LayoutParams.MATCH_PARENT);
            layoutParams.height = Utils.getWidth(this, size);
            layoutParams.width = Utils.getWidth(this, size);
            layoutParams.leftMargin = Utils.getX(this, size, i);
            layoutParams.topMargin = Utils.getY(this, size, i);
            renderer1.setLayoutParams(layoutParams);
        }
    }
}
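The `Utils.getWidth`, `Utils.getX`, and `Utils.getY` helpers are not shown in this post. As a rough illustration of what such helpers might compute, the sketch below lays participants out in a square grid. Everything in it is an assumption: the class name `GridLayoutMath`, the column rule, and the use of a plain pixel width instead of a `Context`. It is not the demo's actual `Utils` implementation.

```java
/** Hypothetical square-grid math; the demo's real Utils class is not shown in this post. */
final class GridLayoutMath {
    /** Columns: 1 for a single view, 2 for up to four views, otherwise 3 (an assumed rule). */
    static int columns(int count) {
        if (count <= 1) {
            return 1;
        }
        return count <= 4 ? 2 : 3;
    }

    /** Side length of one square cell, in pixels. */
    static int cellSize(int screenWidthPx, int count) {
        return screenWidthPx / columns(count);
    }

    /** Left margin of the cell at the given index, filling rows left to right. */
    static int x(int screenWidthPx, int count, int index) {
        return (index % columns(count)) * cellSize(screenWidthPx, count);
    }

    /** Top margin of the cell at the given index, filling rows top to bottom. */
    static int y(int screenWidthPx, int count, int index) {
        return (index / columns(count)) * cellSize(screenWidthPx, count);
    }

    public static void main(String[] args) {
        int width = 1080;
        int count = 4;
        for (int i = 0; i < count; i++) {
            System.out.println(i + ": x=" + x(width, count, i) + " y=" + y(width, count, i)
                    + " size=" + cellSize(width, count));
        }
    }
}
```

With a 1080 px wide screen and four participants, this rule gives 540 px cells in a 2 x 2 grid; a fifth participant switches the grid to three columns of 360 px.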

Establish the peer-to-peer connection

Create the PeerConnection

peerConnection = factory.createPeerConnection(servers, callback);

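Signaling parameters

/**
 * Constraints used when sending an offer or answer to the other people in the room.
 *
 * They control which media is received:
 * audio is always received;
 * video is received only when isVideoOpen is true.
 *
 * @return the media constraints
 */
private MediaConstraints offerOrAnswerConstraint() {
    // Media constraints
    MediaConstraints mediaConstraints = new MediaConstraints();
    ArrayList<MediaConstraints.KeyValuePair> keyValuePairs = new ArrayList<>();
    // Audio must always be transmitted
    keyValuePairs.add(new MediaConstraints.KeyValuePair("OfferToReceiveAudio", "true"));
    // Video depends on isVideoOpen
    keyValuePairs.add(new MediaConstraints.KeyValuePair("OfferToReceiveVideo", String.valueOf(isVideoOpen)));
    mediaConstraints.mandatory.addAll(keyValuePairs);
    return mediaConstraints;
}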

Create the offer

peerConnection.createOffer(mPeer, offerOrAnswerConstraint());

Offer created: set the local SDP

@Override
public void onCreateSuccess(SessionDescription sessionDescription) {
    Log.i(TAG, "onCreateSuccess: ");
    Log.d(TAG, "onCreateSuccess" + Peer.this.toString());
    // Set the local SDP; onSetSuccess is called back if this succeeds
    peerConnection.setLocalDescription(this, sessionDescription);
}

Local SDP set: send the offer

@Override
public void onSetSuccess() {
    Log.d(TAG, "onSetSuccess" + Peer.this.toString());
    javaWebSocket.sendOffer(socketId, peerConnection.getLocalDescription());
}

/**
 * Sends the SDP to the remote side.
 *
 * @param socketId socket ID of the remote user
 * @param sdp the local session description
 */
public void sendOffer(String socketId, SessionDescription sdp) {
    // Inner object: the SDP itself
    HashMap<String, Object> childMap1 = new HashMap<>();
    childMap1.put("type", "offer");
    childMap1.put("sdp", sdp.description);
    // Payload: target socket plus the SDP object
    HashMap<String, Object> childMap2 = new HashMap<>();
    childMap2.put("socketId", socketId);
    childMap2.put("sdp", childMap1);
    // Envelope: event name plus payload
    HashMap<String, Object> map = new HashMap<>();
    map.put("eventName", "__offer");
    map.put("data", childMap2);
    JSONObject object = new JSONObject(map);
    String jsonString = object.toString();
    Log.d(TAG, "send-->" + jsonString);
    mWebSocketClient.send(jsonString);
}
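To make the wire format concrete, the sketch below builds the same three-level message as a string using only the Java standard library, so it runs without Android's `org.json`. `OfferMessage` and its methods are hypothetical names for illustration. The key order is fixed here for readability, whereas a `HashMap`-backed `JSONObject` does not guarantee any order, which JSON consumers should not depend on anyway.

```java
/** Hypothetical stdlib-only builder showing the shape of the "__offer" message. */
final class OfferMessage {
    /** Escapes the characters that must not appear raw inside a JSON string. */
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        // Remaining control characters use the \\uXXXX form
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    /** Builds {"eventName":"__offer","data":{"socketId":...,"sdp":{"type":"offer","sdp":...}}}. */
    static String build(String socketId, String sdp) {
        return "{\"eventName\":\"__offer\",\"data\":{"
                + "\"socketId\":\"" + escape(socketId) + "\","
                + "\"sdp\":{\"type\":\"offer\",\"sdp\":\"" + escape(sdp) + "\"}}}";
    }

    public static void main(String[] args) {
        // SDP lines end in CRLF, so escaping matters for any real session description
        System.out.println(build("abc", "v=0\r\n"));
    }
}
```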

Offer received: set the remote SDP

peerConnection.setRemoteDescription(mPeer, sdp);

Remote SDP set: create the answer

@Override
public void onSetSuccess() {
    Log.d(TAG, "onSetSuccess" + Peer.this.toString());
    peerConnection.createAnswer(Peer.this, offerOrAnswerConstraint());
}

Answer created: set the local SDP

@Override
public void onCreateSuccess(SessionDescription sessionDescription) {
    Log.i(TAG, "onCreateSuccess: ");
    Log.d(TAG, "onCreateSuccess" + Peer.this.toString());
    // Set the local SDP; onSetSuccess is called back if this succeeds
    peerConnection.setLocalDescription(this, sessionDescription);
}

Local SDP set: send the answer

@Override
public void onSetSuccess() {
    Log.d(TAG, "onSetSuccess" + Peer.this.toString());
    javaWebSocket.sendAnswer(socketId, peerConnection.getLocalDescription().description);
}

Answer received: set the remote SDP

peerConnection.setRemoteDescription(mPeer, sessionDescription);

Remote SDP set

@Override
public void onSetSuccess() {
    Log.d(TAG, "onSetSuccess" + Peer.this.toString());
}

Exchange IceCandidates

Send an IceCandidate

@Override
public void onIceCandidate(IceCandidate iceCandidate) {
    // Pass the candidate on through the socket
    Log.d(TAG, "onIceCandidate" + Peer.this.toString());
    javaWebSocket.sendIceCandidate(socketId, iceCandidate);
}

/**
 * Sends this device's ICE candidate to the server.
 *
 * @param userId socket ID of the remote user
 * @param iceCandidate the candidate to send
 */
public void sendIceCandidate(String userId, IceCandidate iceCandidate) {
    HashMap<String, Object> childMap = new HashMap<>();
    childMap.put("id", iceCandidate.sdpMid);
    childMap.put("label", iceCandidate.sdpMLineIndex);
    childMap.put("candidate", iceCandidate.sdp);
    childMap.put("socketId", userId);
    HashMap<String, Object> map = new HashMap<>();
    map.put("eventName", "__ice_candidate");
    map.put("data", childMap);
    JSONObject object = new JSONObject(map);
    String jsonString = object.toString();
    Log.d(TAG, "send-->" + jsonString);
    mWebSocketClient.send(jsonString);
}

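Receive an IceCandidate

/**
 * Called when the other side's ICE candidate arrives.
 *
 * @param socketId socket ID of the remote user
 * @param iceCandidate the remote candidate
 */
public void onRemoteIceCandidate(String socketId, IceCandidate iceCandidate) {
    // Look up the connection object by socketId (lookup not shown here), then add the candidate
    peerConnection.addIceCandidate(iceCandidate);
}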
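The comment in this handler mentions looking up the connection by socketId, but the lookup itself is not shown. In a room with several participants each remote user needs its own connection, so some kind of registry keyed by socketId is required. The following is a minimal sketch of one; `PeerRegistry` is a hypothetical class, it is generic so that it runs without the WebRTC library, and the choice of `ConcurrentHashMap` assumes that signaling callbacks may arrive on a thread other than the UI thread.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical registry of per-user connections, keyed by socketId. */
final class PeerRegistry<P> {
    private final Map<String, P> peers = new ConcurrentHashMap<>();

    /** Stores the connection created for a remote user. */
    void add(String socketId, P peer) {
        peers.put(socketId, peer);
    }

    /** Returns the connection for the user, or null if the user is unknown. */
    P find(String socketId) {
        return peers.get(socketId);
    }

    /** Removes and returns the connection when the user leaves the room. */
    P remove(String socketId) {
        return peers.remove(socketId);
    }

    int size() {
        return peers.size();
    }

    public static void main(String[] args) {
        // Strings stand in for Peer objects in this demo
        PeerRegistry<String> registry = new PeerRegistry<>();
        registry.add("socket-b", "peer for B");
        System.out.println(registry.find("socket-b"));
    }
}
```

A handler would then call `find(socketId)` and, if the result is not null, add the candidate to that peer's connection.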

Display the remote stream

// Called after the P2P connection is established. mediaStream carries the video and
// audio tracks. Runs on a worker thread.
@Override
public void onAddStream(MediaStream mediaStream) {
    context.onAddRemoteStream(mediaStream, socketId);
    Log.d(TAG, "onAddStream" + Peer.this.toString());
}

/**
 * Adds another user's stream.
 *
 * @param stream the remote media stream
 * @param userId ID of the remote user
 */
public void onAddRemoteStream(MediaStream stream, String userId) {
    runOnUiThread(new Runnable() {
        @Override
        public void run() {
            addView(stream, userId);
        }
    });
}

/**
 * Adds a view to the FrameLayout.
 *
 * @param stream the stream to render
 * @param userId ID of the user the stream belongs to
 */
private void addView(MediaStream stream, String userId) {
    // Use the SurfaceViewRenderer provided by WebRTC instead of a plain SurfaceView;
    // it renders the frames itself, so the developer does not have to
    SurfaceViewRenderer renderer = new SurfaceViewRenderer(this);
    // Initialize the renderer
    renderer.init(rootEglBase.getEglBaseContext(), null);
    // Scaling mode: SCALE_ASPECT_FIT fits the whole frame inside the view (may letterbox);
    // SCALE_ASPECT_FILL fills the view and crops whatever overflows
    renderer.setScalingType(RendererCommon.ScalingType.SCALE_ASPECT_FIT);
    // Mirror the image
    renderer.setMirror(true);
    // Feed the stream's first video track to the SurfaceViewRenderer
    if (stream.videoTracks.size() > 0) {
        stream.videoTracks.get(0).addSink(renderer);
    }
    // Conference room: 1 + N people
    videoViews.put(userId, renderer);
    persons.add(userId);
    // Add the SurfaceViewRenderer to the FrameLayout (width=0, height=0 at this point)
    mFrameLayout.addView(renderer);
    // Recompute the size and position of every renderer for the new participant count
    int size = videoViews.size();
    for (int i = 0; i < size; i++) {
        String peerId = persons.get(i);
        SurfaceViewRenderer renderer1 = videoViews.get(peerId);
        if (renderer1 != null) {
            FrameLayout.LayoutParams layoutParams = new FrameLayout.LayoutParams(
                    ViewGroup.LayoutParams.MATCH_PARENT, ViewGroup.LayoutParams.MATCH_PARENT);
            layoutParams.height = Utils.getWidth(this, size);
            layoutParams.width = Utils.getWidth(this, size);
            layoutParams.leftMargin = Utils.getX(this, size, i);
            layoutParams.topMargin = Utils.getY(this, size, i);
            renderer1.setLayoutParams(layoutParams);
        }
    }
}

Reference blogs

Thanks to the authors of these excellent posts, which helped me a great deal.

https://rtcdeveloper.com/t/topic/13777

https://www.jianshu.com/p/2a760b56e3a9