Kerberos + Ranger: authentication and permission management for Kafka

1. Install Ranger

- Install the JDK (omitted)

- Build Ranger (omitted)

- Install MySQL (omitted)

- Create a database named ranger:

    CREATE DATABASE ranger;

- Create a user named ranger and grant it all privileges on the ranger database:

    CREATE USER 'ranger'@'%' IDENTIFIED BY 'ranger';
    GRANT ALL PRIVILEGES ON ranger.* TO 'ranger'@'%';

- Flush the privileges so the grant takes effect:

    FLUSH PRIVILEGES;

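- Install Ranger Admin: extract ranger-2.0.0-admin.tar.gz (the extracted directory is referred to below as $RANGER_ADMIN_HOME).

- Install the bundled Solr, which stores the Ranger audit logs: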

- Edit the Solr installation config file:

    vim $RANGER_ADMIN_HOME/contrib/solr_for_audit_setup/install.properties

  and set the following entries:

    JAVA_HOME=/opt/jdk1.8.0_201
    SOLR_USER=solr
    SOLR_GROUP=solr
    # true = download and install Solr automatically
    SOLR_INSTALL=true
    # change to the download URL of the Solr package
    SOLR_DOWNLOAD_URL=http://mirror.bit.edu.cn/apache/lucene/solr/8.5.1/solr-8.5.1.tgz
    # installation directory
    SOLR_INSTALL_FOLDER=/opt/solr
    SOLR_RANGER_HOME=/opt/solr/ranger_audit_server
    SOLR_RANGER_PORT=6083
    SOLR_DEPLOYMENT=standalone
    SOLR_RANGER_DATA_FOLDER=/opt/solr/ranger_audit_server/data

- Run the setup script to install Solr:

    $RANGER_ADMIN_HOME/contrib/solr_for_audit_setup/setup.sh

- Switch to the solr user and start Solr (to stop it, run stop_solr.sh from the same directory):

    /opt/solr/ranger_audit_server/scripts/start_solr.sh

- Open http://hostname:6083 in a browser. If the page loads, Solr is installed correctly.

- Install ranger-admin:

- Edit the ranger-admin config file, $RANGER_ADMIN_HOME/install.properties. Change the following entries and keep everything else at its default:

    # DB
    DB_FLAVOR=MYSQL
    SQL_CONNECTOR_JAR=${your path}/mysql-connector-java-8.0.16.jar
    db_root_user=root
    db_root_password=root
    db_host=${your mysql server host}:3306
    #
    # DB UserId used for the Ranger schema
    #
    db_name=ranger
    db_user=ranger
    db_password=ranger
    audit_store=solr
    audit_solr_urls=http://${your solr server host}:6083/solr/ranger_audits

- Run the initialization script:

    $RANGER_ADMIN_HOME/setup.sh

- Start ranger-admin:

    ranger-admin start

- Open http://hostname:6080/. If the page loads, Ranger is installed correctly.

- Log in to Ranger with username admin and password admin.
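Several steps above edit install.properties files by hand: the Solr one here, and the Kafka plugin one later. As a non-authoritative sketch, those edits can also be scripted. `set_prop` is a hypothetical helper written for this example and is not shipped with Ranger. It assumes plain `key=value` lines with no multi-line values. The hostnames `mysqlhost` and `solrhost` are placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Ranger): set key=value in a properties file.
# A line that starts with "key=" is replaced; otherwise the pair is appended.
# Comment lines and other keys are left untouched.
set_prop() {
  local file=$1 key=$2 value=$3 tmp
  [ -f "$file" ] || : > "$file"
  tmp=$(mktemp) || return 1
  # Key and value go through the environment so awk does not interpret
  # backslash escapes; index() compares literally, so dots in keys are safe.
  K="$key" V="$value" awk '
    BEGIN { k = ENVIRON["K"]; v = ENVIRON["V"]; found = 0 }
    index($0, k "=") == 1 { print k "=" v; found = 1; next }
    { print }
    END { if (!found) print k "=" v }
  ' "$file" > "$tmp" && mv "$tmp" "$file"
}

# Example: apply some of the ranger-admin settings above to a scratch file
# (mysqlhost and solrhost are placeholder hostnames).
props=$(mktemp)
printf '%s\n' '# DB' 'DB_FLAVOR=MYSQL' 'db_host=localhost' 'audit_store=db' > "$props"

set_prop "$props" db_host mysqlhost:3306
set_prop "$props" audit_store solr
set_prop "$props" audit_solr_urls http://solrhost:6083/solr/ranger_audits

cat "$props"
```

The same call works for dotted keys in the Kafka plugin's install.properties, for example `set_prop install.properties XAAUDIT.SOLR.ENABLE true`.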

2. Install Kafka (omitted)

3. Install the Ranger Kafka plugin

- Build the ranger-kafka plugin (omitted).

- Extract the Kafka plugin package ranger-2.0.0-kafka-plugin.tar.

- Configure install.properties. Modify or add the following entries and keep everything else at its default:

    vim ${ranger-2.0.0-kafka-plugin_HOME}/install.properties

    # Kafka installation directory
    COMPONENT_INSTALL_DIR_NAME=$KAFKA_HOME
    # Ranger Admin URL
    POLICY_MGR_URL=http://${your ranger server hostname}:6080/
    SQL_CONNECTOR_JAR=${your path}/mysql-connector-java-8.0.16.jar
    XAAUDIT.SOLR.ENABLE=true
    XAAUDIT.SOLR.URL=http://${your solr server hostname}:6083/solr/ranger_audits
    JAVA_HOME=/opt/jdk1.8.0_201
    REPOSITORY_NAME=kafkadev
    XAAUDIT.SUMMARY.ENABLE=true
    XAAUDIT.SOLR.SOLR_URL=http://${your solr server hostname}:6083/solr/ranger_audits
    CUSTOM_USER=${username}
    CUSTOM_GROUP=${username}

- Copy the configured ranger-2.0.0-kafka-plugin directory to the other Kafka broker nodes.

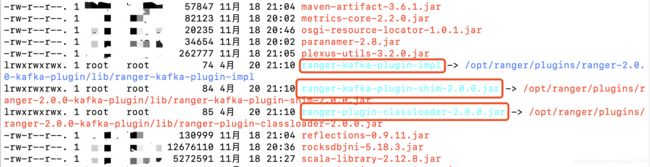

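- On every Kafka broker node, run the script shipped with the ranger-kafka plugin:

    ${ranger-2.0.0-kafka-plugin_HOME}/enable-kafka-plugin.sh

  The script copies (as symlinks) the jars under the plugin's lib directory into Kafka's libs directory. It also creates the corresponding ranger-prefixed config files in Kafka's config directory.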

- Check that the config files (files whose names start with ranger) have been created.
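Copying the plugin, running its enable script on each broker, and checking for the ranger-prefixed files can be scripted. Below is a minimal sketch. It assumes passwordless SSH between nodes. The plugin location /opt/ranger-2.0.0-kafka-plugin and the list of other brokers are placeholders. Both function names are hypothetical. The rollout function only prints the commands (a dry run) rather than executing them.

```shell
#!/usr/bin/env bash
# Placeholder values (assumptions): adjust to your own layout.
PLUGIN_DIR=/opt/ranger-2.0.0-kafka-plugin
OTHER_BROKERS="broker2 broker3"

# Dry run: print the commands that copy the configured plugin directory to each
# other broker and run enable-kafka-plugin.sh from inside it. The script must
# still be run locally on the first broker. Pipe the output to "sh" to execute.
print_plugin_rollout() {
  local host parent
  parent=$(dirname "$PLUGIN_DIR")
  for host in $OTHER_BROKERS; do
    echo "scp -r $PLUGIN_DIR $host:$parent/"
    echo "ssh $host \"cd $PLUGIN_DIR && ./enable-kafka-plugin.sh\""
  done
}

# Return 0 and list the matches if the given Kafka config directory contains
# at least one file whose name starts with "ranger"; return 1 otherwise.
check_ranger_configs() {
  local dir=$1 status=1 f
  for f in "$dir"/ranger*; do
    [ -e "$f" ] || continue
    echo "found: ${f##*/}"
    status=0
  done
  return "$status"
}

print_plugin_rollout
```

After the enable script has run, calling `check_ranger_configs "$KAFKA_HOME/config"` on each broker performs the final check above.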

4. Install Kerberos

- Kerberos concepts and background (omitted)

- Install the Kerberos server (on one machine only):

    sudo yum install -y krb5-server

- Install the Kerberos client on every node that uses Kerberos authentication, including every node that runs a Kafka broker:

    yum install -y krb5-workstation

- Edit the KDC config file:

    vim /var/kerberos/krb5kdc/kdc.conf

    [kdcdefaults]
     kdc_ports = 88
     kdc_tcp_ports = 88

    [realms]
     # change to your own realm
     BUGBOY.COM = {
      #master_key_type = aes256-cts
      acl_file = /var/kerberos/krb5kdc/kadm5.acl
      dict_file = /usr/share/dict/words
      admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab
      supported_enctypes = aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal
     }

- Edit /var/kerberos/krb5kdc/kadm5.acl:

    */admin@BUGBOY.COM *

- Edit /etc/krb5.conf and copy it to /etc on every other client:

    # Configuration snippets may be placed in this directory as well
    includedir /etc/krb5.conf.d/

    [logging]
     default = FILE:/var/log/krb5libs.log
     kdc = FILE:/var/log/krb5kdc.log
     admin_server = FILE:/var/log/kadmind.log

    [libdefaults]
     dns_lookup_realm = false
     ticket_lifetime = 24h
     renew_lifetime = 7d
     forwardable = true
     rdns = false
     pkinit_anchors = /etc/pki/tls/certs/ca-bundle.crt
     # change to your own realm
     default_realm = BUGBOY.COM
     default_ccache_name = KEYRING:persistent:%{uid}

    [realms]
     # change to your own realm
     BUGBOY.COM = {
      # kdchost is the hostname of the KDC server
      kdc = kdchost.bugboy.com
      # kdchost is the hostname of the kadmin server
      admin_server = kdchost.bugboy.com
     }

    # change to your own domain and realm
    [domain_realm]
     .bugboy.com = BUGBOY.COM
     bugboy.com = BUGBOY.COM

- Add the KDC host to /etc/hosts on the KDC server and on every client:

    # IP address of the KDC server
    192.168.254.66 kdchost kdchost.bugboy.com

- Enable the krb5kdc and kadmin services at boot:

    sudo systemctl enable krb5kdc.service    # or: sudo chkconfig --level 35 krb5kdc on
    sudo systemctl enable kadmin.service     # or: sudo chkconfig --level 35 kadmin on

- Create the Kerberos database:

    kdb5_util create -s -r BUGBOY.COM

- Start the krb5kdc and kadmin services:

    sudo systemctl start krb5kdc.service    # or: sudo service krb5kdc start
    sudo systemctl start kadmin.service     # or: sudo service kadmin start

- Open the kadmin shell:

    sudo kadmin.local

- Create the administrator principal:

    addprinc -randkey root/admin

- Create the Kafka principals, where broker1, broker2, and broker3 are the hostnames of the Kafka brokers:

    addprinc -randkey kafka/broker1@BUGBOY.COM
    addprinc -randkey kafka/broker2@BUGBOY.COM
    addprinc -randkey kafka/broker3@BUGBOY.COM

- Export the keys to kafka.keytab. The file is written to the directory from which kadmin.local was started:

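    xst -k kafka.keytab kafka/broker1@BUGBOY.COM
    xst -k kafka.keytab kafka/broker2@BUGBOY.COM
    xst -k kafka.keytab kafka/broker3@BUGBOY.COM

- Test authentication (replace the path with the actual location of kafka.keytab):

    sudo kinit -kt /path/to/kafka.keytab kafka/broker1@BUGBOY.COM

- Create a keytabs directory under the Kafka home directory, copy kafka.keytab into it, and sync it to the other broker nodes.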

- Create a jassconf directory under the Kafka home directory. In it, create kafka_server_jaas.conf with the following content, replacing ${KAFKA_HOME} with the absolute path of the Kafka installation:

    KafkaServer {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        storeKey=true
        keyTab="${KAFKA_HOME}/keytabs/kafka.keytab"
        principal="kafka/broker1@BUGBOY.COM";
    };

- Copy jassconf into the Kafka installation directory on every broker. On each broker, change principal="kafka/broker1@BUGBOY.COM" in kafka_server_jaas.conf to that node's own principal.

- Edit kafka-run-class.sh in Kafka's bin directory. In the "# JVM performance options" section, append -Djava.security.krb5.conf=/etc/krb5.conf $KAFKA_JAAS to KAFKA_JVM_PERFORMANCE_OPTS. Then copy the script to the same directory on the other brokers. The result looks like this:

    # JVM performance options
    if [ -z "$KAFKA_JVM_PERFORMANCE_OPTS" ]; then
      KAFKA_JVM_PERFORMANCE_OPTS="-server -XX:+UseG1GC -XX:MaxGCPauseMillis=20 -XX:InitiatingHeapOccupancyPercent=35 -XX:+ExplicitGCInvokesConcurrent -Djava.awt.headless=true -Djava.security.krb5.conf=/etc/krb5.conf $KAFKA_JAAS"
    fi

- Edit kafka-server-start.sh in Kafka's bin directory and add the export line shown below. Then copy the script to the same directory on the other brokers:

    EXTRA_ARGS=${EXTRA_ARGS-'-name kafkaServer -loggc'}

    COMMAND=$1
    case $COMMAND in
      -daemon)
        EXTRA_ARGS="-daemon "$EXTRA_ARGS
        shift
        ;;
      *)
        ;;
    esac

    # Added: KAFKA_JAAS for the Kafka server; $KAFKA_HOME must resolve to a valid path.
    export KAFKA_JAAS="-Djava.security.auth.login.config=$KAFKA_HOME/jassconf/kafka_server_jaas.conf"

    exec $base_dir/kafka-run-class.sh $EXTRA_ARGS kafka.Kafka "$@"

- On every broker, append the following to server.properties in Kafka's config directory. The address after SASL_PLAINTEXT must be the current broker's hostname:

    authorizer.class.name=org.apache.ranger.authorization.kafka.authorizer.RangerKafkaAuthorizer
    advertised.listeners=SASL_PLAINTEXT://broker1:9092
    security.inter.broker.protocol=SASL_PLAINTEXT
    sasl.mechanism.inter.broker.protocol=GSSAPI
    sasl.enabled.mechanisms=GSSAPI
    sasl.kerberos.service.name=kafka
    listeners=SASL_PLAINTEXT://broker1:9092

- Start Kafka.

- In Ranger, check that the Kafka plugin appears and that audit logs are being recorded.
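The per-broker pieces above differ only in the broker hostname: the principal, the keytab export, the JAAS file and the server.properties lines. They can therefore be generated. This is a sketch under stated assumptions. The realm BUGBOY.COM and the hosts broker1 to broker3 come from the steps above. KAFKA_HOME=/opt/kafka and the `gen_*` function names are hypothetical. The kadmin commands are only printed, not run.

```shell
#!/usr/bin/env bash
# Values from the steps above, plus one assumption: KAFKA_HOME=/opt/kafka.
REALM=BUGBOY.COM
BROKERS="broker1 broker2 broker3"
KAFKA_HOME=/opt/kafka

# Print the kadmin.local commands that create one kafka/<host> principal per
# broker and then export all of them into a single kafka.keytab.
gen_kadmin_cmds() {
  local host
  for host in $BROKERS; do
    echo "addprinc -randkey kafka/$host@$REALM"
  done
  for host in $BROKERS; do
    echo "xst -k kafka.keytab kafka/$host@$REALM"
  done
}

# Write kafka_server_jaas.conf for one broker. The shell expands $KAFKA_HOME
# here, so the file ends up with an absolute keytab path as required above.
gen_server_jaas() {
  local host=$1 out=$2
  cat > "$out" <<EOF
KafkaServer {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    storeKey=true
    keyTab="$KAFKA_HOME/keytabs/kafka.keytab"
    principal="kafka/$host@$REALM";
};
EOF
}

# Print the server.properties lines for one broker; the listeners use its host.
gen_server_props() {
  local host=$1
  cat <<EOF
authorizer.class.name=org.apache.ranger.authorization.kafka.authorizer.RangerKafkaAuthorizer
advertised.listeners=SASL_PLAINTEXT://$host:9092
security.inter.broker.protocol=SASL_PLAINTEXT
sasl.mechanism.inter.broker.protocol=GSSAPI
sasl.enabled.mechanisms=GSSAPI
sasl.kerberos.service.name=kafka
listeners=SASL_PLAINTEXT://$host:9092
EOF
}

gen_kadmin_cmds
workdir=$(mktemp -d)
gen_server_jaas broker2 "$workdir/kafka_server_jaas.conf"
gen_server_props broker2 > "$workdir/server.properties.sasl"
cat "$workdir/kafka_server_jaas.conf"
```

Each printed kadmin line could be run on the KDC host as `sudo kadmin.local -q "<line>"`. The generated JAAS file and properties lines are then copied to the matching broker.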

5. Authentication and user switching for Kafka clients (creating topics, producing and consuming data)

- Use kadmin to create two Kerberos principals, client1 and client2:

    addprinc -randkey client1/${your client host}@BUGBOY.COM
    addprinc -randkey client2/${your client host}@BUGBOY.COM

- Export their keytabs as client1.keytab and client2.keytab, and put both files in ${KAFKA_HOME}/keytabs.

- For client1, create kafka_client1_jaas.conf in ${KAFKA_HOME}/jassconf with the following content, replacing ${KAFKA_HOME} with the absolute path:

    KafkaClient {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        storeKey=true
        keyTab="${KAFKA_HOME}/keytabs/client1.keytab"
        principal="client1/${your client host}@BUGBOY.COM";
    };

- For client2, create kafka_client2_jaas.conf in ${KAFKA_HOME}/jassconf with the following content, again using the absolute path:

    KafkaClient {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        storeKey=true
        keyTab="${KAFKA_HOME}/keytabs/client2.keytab"
        principal="client2/${your client host}@BUGBOY.COM";
    };

- Create a userConf directory under KAFKA_HOME, and in it a client.conf file with the following content:

    security.protocol=SASL_PLAINTEXT
    sasl.mechanism=GSSAPI
    sasl.kerberos.service.name=kafka

- Create a topic named test-topic as the kafka principal. Note: replace KAFKA_HOME with your own directory.

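- In the shell, run the following. kafka-topics.sh is a client, so the JAAS file it loads must contain a KafkaClient section. If kafka_server_jaas.conf only has the KafkaServer section shown earlier, add a KafkaClient section with the same keyTab and principal:

    export KAFKA_JAAS="-Djava.security.auth.login.config=${KAFKA_HOME}/jassconf/kafka_server_jaas.conf"

- Create the topic:

    $KAFKA_HOME/bin/kafka-topics.sh --create --bootstrap-server broker1:9092 --topic test-topic --partitions 3 --replication-factor 2 --command-config $KAFKA_HOME/userConf/client.conf

- Check the Ranger audit log.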

- In Ranger, create two users, client1 and client2, and grant them permission to produce data to test-topic.

- Produce data as the client1 principal using Kafka's console producer script:

- In the shell, run:

    export KAFKA_JAAS="-Djava.security.auth.login.config=${KAFKA_HOME}/jassconf/kafka_client1_jaas.conf"

- Produce data to test-topic:

    $KAFKA_HOME/bin/kafka-console-producer.sh --broker-list broker1:9092 --topic test-topic --producer.config $KAFKA_HOME/userConf/client.conf

- Produce data as the client2 principal using Kafka's console producer script:

- In the shell, run:

    export KAFKA_JAAS="-Djava.security.auth.login.config=${KAFKA_HOME}/jassconf/kafka_client2_jaas.conf"

- Produce data to test-topic:

    $KAFKA_HOME/bin/kafka-console-producer.sh --broker-list broker1:9092 --topic test-topic --producer.config $KAFKA_HOME/userConf/client.conf

- Check the Ranger audit log.

- In Ranger, change client2's permission to deny.

- Produce data as the client2 principal again using Kafka's console producer script:

- In the shell, run:

    export KAFKA_JAAS="-Djava.security.auth.login.config=${KAFKA_HOME}/jassconf/kafka_client2_jaas.conf"

- Produce data to test-topic:

    $KAFKA_HOME/bin/kafka-console-producer.sh --broker-list broker1:9092 --topic test-topic --producer.config $KAFKA_HOME/userConf/client.conf
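Switching identities in this section comes down to one step before each Kafka CLI script: point KAFKA_JAAS at a different client JAAS file. The modified kafka-run-class.sh appends $KAFKA_JAAS to the JVM options, but only when KAFKA_JVM_PERFORMANCE_OPTS is unset. The sketch below generates the per-client JAAS file and client.conf and then exports KAFKA_JAAS. Assumptions: `gen_client_jaas`, `gen_client_conf` and `use_kafka_client` are hypothetical helpers, and the principal host `clienthost` is a placeholder. The jassconf and userConf directory names follow the steps above.

```shell
#!/usr/bin/env bash
REALM=BUGBOY.COM

# Hypothetical helper: write $KAFKA_HOME/jassconf/kafka_<name>_jaas.conf with a
# KafkaClient section, the JAAS login context that Kafka clients look up.
gen_client_jaas() {
  local name=$1 principal=$2
  mkdir -p "$KAFKA_HOME/jassconf"
  cat > "$KAFKA_HOME/jassconf/kafka_${name}_jaas.conf" <<EOF
KafkaClient {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    storeKey=true
    keyTab="$KAFKA_HOME/keytabs/$name.keytab"
    principal="$principal";
};
EOF
}

# Hypothetical helper: write the shared $KAFKA_HOME/userConf/client.conf.
gen_client_conf() {
  mkdir -p "$KAFKA_HOME/userConf"
  printf '%s\n' 'security.protocol=SASL_PLAINTEXT' 'sasl.mechanism=GSSAPI' \
    'sasl.kerberos.service.name=kafka' > "$KAFKA_HOME/userConf/client.conf"
}

# Hypothetical helper: switch identity for Kafka scripts started later from
# this shell (picked up through the modified kafka-run-class.sh).
use_kafka_client() {
  export KAFKA_JAAS="-Djava.security.auth.login.config=$KAFKA_HOME/jassconf/kafka_${1}_jaas.conf"
}

# Demo in a scratch directory; "clienthost" is a placeholder principal host.
KAFKA_HOME=$(mktemp -d)
gen_client_conf
gen_client_jaas client1 "client1/clienthost@$REALM"
gen_client_jaas client2 "client2/clienthost@$REALM"
use_kafka_client client2
echo "$KAFKA_JAAS"
```

Call `use_kafka_client client1` before the first producer run, and `use_kafka_client client2` before the later ones. This reproduces the identity switch in the steps above. The Ranger audit log then shows how each client's requests were handled.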