My First Kaggle Competition

Process:

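Reading textbooks this afternoon was miserable and incredibly boring, so I decided to skip the formulas and syntax for now and jump straight into a Kaggle competition.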

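I signed up and picked my first competition: one whose data looked clean and purely numeric (because I don't know how to work with images).

The leaf classification competition: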

https://www.kaggle.com/c/leaf-classification/

After grinding away for a whole afternoon and evening, I finally finished it:

# -*- coding: utf-8 -*-
"""
Created on Sun Sep 25 13:51:57 2016

@author: ATE
"""

#import------------------------------------
import numpy as np
import pandas as pd
from pandas import Series
from sklearn.ensemble import RandomForestRegressor

#read-------------------------------------
trainPath = 'train.csv'
prodictPath = 'test.csv'
samplePath = 'sample_submission.csv'
savePath = 'result.csv'

trainSet = pd.read_csv(trainPath)
prodictSet = pd.read_csv(prodictPath)
submissionSet = pd.read_csv(samplePath)

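#data_transform---------------------------
# Separate the label column from the features
targetSet = trainSet['species']
train = trainSet.drop('species', axis=1)
# code -> species name, numbered in order of first appearance
targetTypeDict = Series(targetSet.unique()).to_dict()
# species name -> code (dict.items() replaces the Python 2-only iteritems())
targetTypeMapDict = dict((v, k) for k, v in targetTypeDict.items())
targetSet = targetSet.map(targetTypeMapDict)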

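#randomForest------------------------------------
# Note: this regresses on the integer species codes, so the model treats the codes as ordered numbers
rf = RandomForestRegressor()
rf.fit(train, targetSet)
res = rf.predict(prodictSet)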

#data_reform--------------------------------
# Round each predicted value to the nearest species code
res = np.around(res, decimals=0).astype(int)
resSeries = Series(res)
resSeries = resSeries.map(targetTypeDict)
# Start from an all-zero copy of the sample submission
submissionSetS = submissionSet * 0
indexList = range(len(resSeries))
for index in indexList:
    # Mark the predicted species with a 1 (a hard one-hot prediction)
    typeName = resSeries[index]
    submissionSetS.loc[index, typeName] = 1
submissionSetS['id'] = submissionSet['id']
submissionSetS.to_csv(savePath, index=False)

Result:

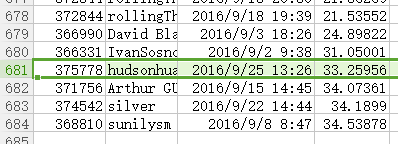

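Surprisingly, I didn't even finish last:

Fourth from the bottom. Well, well, that still beats three people!

And the classification error rate was "only" 33%, which is pretty strong for someone who isn't a biology major, right?

Hahahaha. Anyway, I got familiar with the whole workflow for the first time, and it was exciting and fun.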

Then I went and looked at someone else's code:

import pandas as pd
import numpy as np
from sklearn.linear_model import LogisticRegression
# GridSearchCV lives in sklearn.model_selection; the old sklearn.grid_search module was removed from scikit-learn
from sklearn.model_selection import GridSearchCV
from sklearn.preprocessing import LabelEncoder
from sklearn.preprocessing import StandardScaler
from sklearn import svm
from sklearn.ensemble import RandomForestClassifier

np.random.seed(42)

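train = pd.read_csv('../input/train.csv')
# Features: everything except the id and the label
x_train = train.drop(['id', 'species'], axis=1).values
# Encode species names as integer class codes
le = LabelEncoder().fit(train['species'])
y_train = le.transform(train['species'])
# Standardize the features
scaler = StandardScaler().fit(x_train)
x_train = scaler.transform(x_train)

test = pd.read_csv('../input/test.csv')
test_ids = test.pop('id')
x_test = test.values
# Note: this refits the scaler on the test set; reusing the scaler fitted on the training data is the more common practice
scaler = StandardScaler().fit(x_test)
x_test = scaler.transform(x_test)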

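# Grid search over logistic regression settings (left commented out, as in the original).
# Current scikit-learn names the scorer 'neg_log_loss' and expects refit to be a boolean.
#params = {'C':[1, 10, 50, 100, 500, 1000, 2000], 'tol': [0.001, 0.0001, 0.005], 'solver': ["newton-cg"]}
#log_reg = LogisticRegression()
#clf = GridSearchCV(log_reg, params, scoring='neg_log_loss', refit=True, n_jobs=1, cv=5)
#clf.fit(x_train, y_train)
#y_test = clf.predict_proba(x_test)

# The original also passed multi_class="multinomial"; recent scikit-learn versions already use the
# multinomial formulation for multiclass problems with this solver and deprecate that argument.
log_reg = LogisticRegression(C=2000, tol=0.0001, solver='newton-cg')
log_reg.fit(x_train, y_train)
# One row per test leaf, one probability column per species
y_test = log_reg.predict_proba(x_test)

# Random forest alternative (left commented out, as in the original)
#params = {'n_estimators':[1, 10, 50, 100, 500]}
#random_forest = RandomForestClassifier()
#clf = GridSearchCV(random_forest, params, scoring='neg_log_loss', refit=True, n_jobs=1, cv=5)
#clf.fit(x_train, y_train)
#y_test = clf.predict_proba(x_test)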

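submission = pd.DataFrame(y_test, index=test_ids, columns=le.classes_)
submission.to_csv('submission2.csv')

What? Done in about twenty lines? What? A single logistic regression does the job? An error of only 0.02? And I had used the fancy, high-end random forest! What? All that data wrangling I fumbled back and forth with, and sklearn has a tool called LabelEncoder that handles it in two lines?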

Mind-blowing! Hahaha, looks like there's still a lot for me to learn!
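To make the LabelEncoder comparison concrete, here is a minimal sketch that puts the hand-rolled dictionary mapping from my script next to LabelEncoder. The four species values are a toy stand-in chosen for illustration, not rows from the competition data. One difference worth noticing: the manual version numbers classes in order of first appearance, while LabelEncoder numbers them in sorted order.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Toy stand-in for the 'species' column (illustrative values only)
species = pd.Series(["Acer_Opalus", "Quercus_Rubra", "Acer_Opalus", "Acer_Platanoids"])

# Manual approach: number the classes in order of first appearance
code_to_name = pd.Series(species.unique()).to_dict()
name_to_code = {name: code for code, name in code_to_name.items()}
manual_codes = species.map(name_to_code)

# LabelEncoder: fit learns the sorted class list, transform returns the codes
le = LabelEncoder().fit(species)
encoded = le.transform(species)

# inverse_transform maps the codes back to the species names
decoded = le.inverse_transform(encoded)

print(list(manual_codes))
print(list(le.classes_))
print(list(encoded))
```

The fitted encoder also keeps the class names in `le.classes_`, which is what the other person's script uses as the column names of the submission.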

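Takeaways:

I really can learn a lot this way.

The biggest difference, I think, is that on Kaggle you write code following your own train of thought, writing things as they come to mind. If I can't get one piece of syntax to work, I work around it some other way, so I can almost always get something written. I finally lost that feeling of being endlessly annoyed by Python's quirky syntax. Maybe I've become a bit more familiar with it, or maybe what I'm doing just feels more meaningful.

And seeing at the end how thoroughly the experts outclass me, and picking up a trick or two from them, feels great too.

If I hadn't tried doing it myself first, I probably would have felt nothing when reading the experts' code, and very few lines would have struck a chord.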

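Also, at first I assumed the people at the top had used all sorts of tricks, for example using the spelling of the names to infer which classes are close to each other (Acer_Opalus and Acer_Platanoids might be somewhat related, say), or turning the images into features with image-processing methods. It turned out to be nothing of the sort... They just used a plain logistic regression and got the error down to 0.02, and with a neural network it quickly dropped to 0... Maybe this problem was just too easy...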
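Looking back at the random forest attempt earlier in this post, one likely contributor to the gap is that it regressed on arbitrary species codes and rounded the result, whereas the other script asks a classifier for per-class probabilities. The sketch below shows that second pattern with a random forest. It runs on synthetic data from `make_classification`, the species names and ids are hypothetical, and it is not the code behind any score quoted here.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.preprocessing import LabelEncoder

# Synthetic stand-in for the leaf features and labels (assumption: 3 classes, 20 features)
X, y_codes = make_classification(n_samples=300, n_features=20, n_informative=10,
                                 n_classes=3, random_state=0)
names = np.array(["species_a", "species_b", "species_c"])  # hypothetical species names
y_names = names[y_codes]

# Encode the names as class codes; le.classes_ remembers the name for each code
le = LabelEncoder().fit(y_names)
y = le.transform(y_names)

# Simple split: first 240 rows for training, the rest play the role of the test set
X_train, X_test = X[:240], X[240:]
y_train = y[:240]

# A classifier, not a regressor: the codes are treated as unordered categories
clf = RandomForestClassifier(n_estimators=100, random_state=0)
clf.fit(X_train, y_train)

# One row per test sample, one probability column per class
proba = clf.predict_proba(X_test)

# Submission-shaped table: an id column followed by one column per species
submission = pd.DataFrame(proba, columns=le.classes_)
submission.insert(0, "id", np.arange(len(X_test)))
print(submission.head())
```

Each row of `proba` sums to 1, so the table expresses how confident the model is in each species instead of committing to a single hard 0/1 guess.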