Face Alignment: Adaptive Wing Loss

Paper: Adaptive Wing Loss for Robust Face Alignment via Heatmap Regression

Github: https://github.com/protossw512/AdaptiveWingLoss

ICCV2019

Contributions:

- Improves Wing loss by proposing Adaptive Wing loss, which balances the errors of foreground and background pixels.

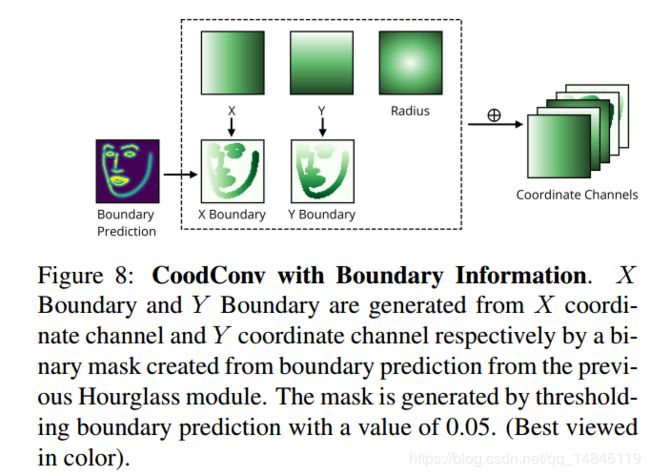

- Incorporates CoordConv, which encodes coordinate information, into the network.

- Proposes training the facial boundary together with the landmarks.

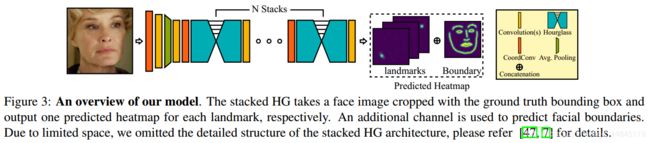

Overall network architecture:

The model stacks N = 4 hourglass modules. The input image is 256×256 and the output heatmaps are 64×64.

The input is the face region returned by a face detector, enlarged by 10% in both width and height.
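The cropping and resolution bookkeeping above can be sketched as follows. This is a minimal illustration, not the authors' preprocessing. Two things are assumptions:

- The 10% enlargement is split evenly between both sides of the box.
- Heatmap pixels map back to the 256×256 input by a plain factor of 256 / 64 = 4, ignoring any half-pixel offset.

`expand_box` and `heatmap_to_input` are hypothetical helper names.

```python
def expand_box(x1, y1, x2, y2, ratio=0.10):
    """Enlarge a face box so its width and height each grow by `ratio`, keeping the center.

    Assumption: the extra 10% is split evenly between opposite sides (5% per side).
    """
    w, h = x2 - x1, y2 - y1
    dx, dy = w * ratio / 2.0, h * ratio / 2.0
    return x1 - dx, y1 - dy, x2 + dx, y2 + dy


def heatmap_to_input(px, py, input_size=256, heatmap_size=64):
    """Map a location on the 64x64 heatmap to the 256x256 input (scale factor 4).

    Assumption: a plain rescale with no half-pixel correction.
    """
    scale = input_size / heatmap_size
    return px * scale, py * scale


print(expand_box(100, 100, 200, 200))  # (95.0, 95.0, 205.0, 205.0)
print(heatmap_to_input(16, 32))        # (64.0, 128.0)
```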

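The predicted maps contain c landmark channels and 1 boundary channel. Each landmark channel predicts a single facial keypoint. The boundary channel predicts the lines that outline the facial contours. Predicting landmarks and boundary together helps the network learn better.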

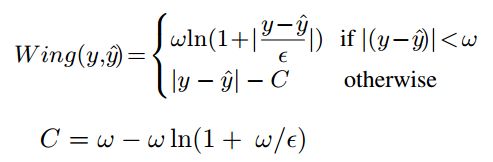
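Each landmark channel is trained against a ground-truth heatmap with a Gaussian blob at the keypoint (the article later calls this the Gaussian-processed keypoint region). At test time, a point is read back from its channel. The sketch below assumes σ = 1.0, which the article does not state, and decodes by a plain argmax. `gaussian_heatmap` and `argmax_2d` are hypothetical helper names.

```python
import math


def gaussian_heatmap(cx, cy, size=64, sigma=1.0):
    """Ground-truth heatmap for one landmark: a 2D Gaussian centered at (cx, cy).

    sigma=1.0 is an assumed value, not taken from the article.
    """
    return [[math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2.0 * sigma ** 2))
             for x in range(size)]
            for y in range(size)]


def argmax_2d(heatmap):
    """Decode a heatmap back to integer (x, y) by locating its peak value."""
    best = max((v, x, y) for y, row in enumerate(heatmap) for x, v in enumerate(row))
    return best[1], best[2]


# One channel per landmark; the boundary would be one extra channel.
landmarks = [(10, 20), (40, 33)]
channels = [gaussian_heatmap(x, y) for x, y in landmarks]
print([argmax_2d(h) for h in channels])  # [(10, 20), (40, 33)]
```

Argmax decoding is limited to integer heatmap pixels, so sub-pixel refinement is often added in practice. That step is outside the scope of this sketch.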

Original Wing loss:

From the paper Wing Loss for Robust Facial Landmark Localisation with Convolutional Neural Networks.

Reference implementation: https://github.com/TropComplique/wing-loss

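y: ground truth (landmark coordinates in the original Wing loss and in the TensorFlow code below; heatmap values in Adaptive Wing loss)

ŷ: the corresponding prediction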
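For reference, Wing loss is usually written as the piecewise function below of the error x = ŷ - y. The constant C makes the two branches meet at |x| = w. It is the same constant as `c` in the TensorFlow code of this section.

$$
\mathrm{wing}(x) =
\begin{cases}
w \ln\left(1 + \dfrac{|x|}{\epsilon}\right), & |x| < w \\
|x| - C, & \text{otherwise}
\end{cases}
\qquad
C = w - w \ln\left(1 + \dfrac{w}{\epsilon}\right)
$$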

TensorFlow implementation:

```python
import math

import tensorflow as tf


def wing_loss(landmarks, labels, w=10.0, epsilon=2.0):
    """
    Arguments:
        landmarks, labels: float tensors with shape [batch_size, num_landmarks, 2].
        w, epsilon: float numbers.
    Returns:
        a float tensor with shape [].
    """
    with tf.name_scope('wing_loss'):
        x = landmarks - labels
        # Constant that makes the log branch and the linear branch meet at |x| = w.
        c = w * (1.0 - math.log(1.0 + w / epsilon))
        absolute_x = tf.abs(x)
        losses = tf.where(
            tf.greater(w, absolute_x),
            w * tf.math.log(1.0 + absolute_x / epsilon),  # |x| < w: log branch
            absolute_x - c,                                # |x| >= w: linear branch
        )
        # Sum over landmarks and (x, y), then average over the batch.
        loss = tf.reduce_mean(tf.reduce_sum(losses, axis=[1, 2]), axis=0)
        return loss
```

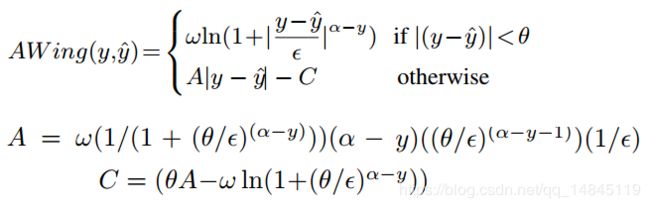
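The constant c exists so the two branches of Wing loss join without a jump. The plain-Python scalar version below uses the same defaults (w = 10, ε = 2) as the TensorFlow code and checks two things:

- The branches meet at |x| = w.
- A small error produces a larger loss than plain L1 would.

`wing` is a hypothetical helper name, and the sketch needs no TensorFlow.

```python
import math


def wing(x, w=10.0, epsilon=2.0):
    """Scalar Wing loss with the same formula and defaults as wing_loss above."""
    c = w * (1.0 - math.log(1.0 + w / epsilon))
    ax = abs(x)
    return w * math.log(1.0 + ax / epsilon) if ax < w else ax - c


# The log branch (just below w) and the linear branch (at w) agree.
print(abs(wing(10.0 - 1e-9) - wing(10.0)) < 1e-6)  # True
# A small error of 0.5 costs more than its L1 value of 0.5.
print(wing(0.5) > 0.5)  # True
```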

Improved version, Adaptive Wing loss:

ω (omega): positive, set to 14

θ (theta): positive, set to 0.5

ε (epsilon): positive, set to 1

α (alpha): greater than 2, set to 2.1
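Written out with these parameters, the Adaptive Wing loss is given below. A and C are the same expressions that the PyTorch code computes. They make the two branches meet at |y - ŷ| = θ with matching value and slope. The exponent α - y depends on the ground-truth pixel value y, which is what makes the loss adaptive.

$$
\mathrm{AWing}(y, \hat{y}) =
\begin{cases}
\omega \ln\left(1 + \left|\dfrac{y - \hat{y}}{\epsilon}\right|^{\alpha - y}\right), & |y - \hat{y}| < \theta \\
A\,|y - \hat{y}| - C, & \text{otherwise}
\end{cases}
$$

$$
A = \omega \, \frac{1}{1 + (\theta/\epsilon)^{\alpha - y}} \, (\alpha - y) \, \frac{(\theta/\epsilon)^{\alpha - y - 1}}{\epsilon},
\qquad
C = \theta A - \omega \ln\left(1 + (\theta/\epsilon)^{\alpha - y}\right)
$$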

PyTorch implementation:

```python
import torch
import torch.nn as nn


class AWing(nn.Module):
    """Adaptive Wing loss, returned element-wise (no reduction)."""

    def __init__(self, alpha=2.1, omega=14, epsilon=1, theta=0.5):
        super().__init__()
        self.alpha = float(alpha)
        self.omega = float(omega)
        self.epsilon = float(epsilon)
        self.theta = float(theta)

    def forward(self, y_pred, y):
        lossMat = torch.zeros_like(y_pred)
        # The exponent (alpha - y) adapts to the ground-truth pixel value y.
        A = (self.omega * (1 / (1 + (self.theta / self.epsilon) ** (self.alpha - y)))
             * (self.alpha - y) * ((self.theta / self.epsilon) ** (self.alpha - y - 1)) / self.epsilon)
        C = self.theta * A - self.omega * torch.log(1 + (self.theta / self.epsilon) ** (self.alpha - y))
        delta = torch.abs(y - y_pred)
        case1_ind = delta < self.theta   # small errors: non-linear (log) branch
        case2_ind = delta >= self.theta  # large errors: linear branch
        lossMat[case1_ind] = self.omega * torch.log(
            1 + torch.abs((y[case1_ind] - y_pred[case1_ind]) / self.epsilon) ** (self.alpha - y[case1_ind]))
        lossMat[case2_ind] = A[case2_ind] * delta[case2_ind] - C[case2_ind]
        return lossMat
```

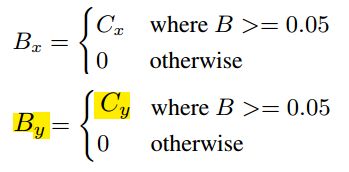
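A scalar re-implementation in plain Python makes the adaptive behavior easy to check. It uses the same formulas and defaults as the `AWing` module. `awing` is a hypothetical helper name, and the sketch needs no PyTorch.

- For a background pixel (y = 0) the exponent is α = 2.1 > 2, so small errors are penalized softly.
- For a foreground pixel (y = 1) the exponent drops to 1.1, so the same small error costs much more.

The code also checks that the two branches meet at θ.

```python
import math


def awing(y, y_hat, alpha=2.1, omega=14.0, epsilon=1.0, theta=0.5):
    """Scalar Adaptive Wing loss with the same formulas and defaults as AWing."""
    delta = abs(y - y_hat)
    p = alpha - y                      # adaptive exponent, set by the ground-truth value
    r = theta / epsilon
    A = omega * (1.0 / (1.0 + r ** p)) * p * (r ** (p - 1.0)) / epsilon
    C = theta * A - omega * math.log(1.0 + r ** p)
    if delta < theta:
        return omega * math.log(1.0 + (delta / epsilon) ** p)
    return A * delta - C


# The branches meet at |y - y_hat| = theta for both background and foreground pixels.
for y in (0.0, 1.0):
    print(abs(awing(y, y + 0.5 - 1e-9) - awing(y, y + 0.5)) < 1e-6)  # True
# The same 0.1 error costs far more on a foreground pixel than on a background one.
print(awing(1.0, 0.9) > 5 * awing(0.0, 0.1))  # True
```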

Weighted loss map:

Here, W = 10.

This weighting makes the foreground (the Gaussian-smoothed regions around the landmarks) contribute a larger loss than the background.
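Element-wise, the weighting corresponds to `Loss * (self.W * M + 1.)` in the code of this section. Here ⊗ is element-wise multiplication and M is the binary foreground mask. With W = 10, pixels where M = 1 are weighted 11 times as much as the rest. The code then averages over all pixels.

$$
\mathrm{Loss}_{\mathrm{weighted}} = \mathrm{AWing}(y, \hat{y}) \otimes (W \cdot M + 1)
$$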

PyTorch implementation:

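```python
# Uses the AWing module (and imports) defined above.
class Loss_weighted(nn.Module):
    """AWing loss re-weighted by a foreground mask M: pixels with M = 1 get weight W + 1."""

    def __init__(self, W=10, alpha=2.1, omega=14, epsilon=1, theta=0.5):
        super().__init__()
        self.W = float(W)
        self.Awing = AWing(alpha, omega, epsilon, theta)

    def forward(self, y_pred, y, M):
        M = M.float()
        Loss = self.Awing(y_pred, y)             # element-wise AWing loss
        weighted = Loss * (self.W * M + 1.)      # foreground x (W + 1), background x 1
        return weighted.mean()
```

The mask M is generated as follows: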

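```python
import numpy as np
from scipy import ndimage


def generate_weight_map(weight_map, heatmap):
    # heatmap and weight_map are 2D (H, W) arrays for a single channel.
    k_size = 3
    # 3x3 grey dilation (a max filter) spreads each Gaussian peak slightly outward.
    dilate = ndimage.grey_dilation(heatmap, size=(k_size, k_size))
    # Pixels whose dilated value exceeds 0.2 become foreground (M = 1).
    weight_map[np.where(dilate > 0.2)] = 1
    return weight_map
```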
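The dilate-then-threshold step and the weighted mean can be traced on a toy 5×5 heatmap without NumPy or SciPy. This plain-Python sketch mirrors `generate_weight_map` and `Loss_weighted.forward`, with two assumptions:

- Borders use only in-bounds neighbors. For a 3×3 max filter this gives the same result as SciPy's default `reflect` mode.
- The mask is returned fresh rather than written into an existing `weight_map`.

All function names here are hypothetical.

```python
def grey_dilate_3x3(img):
    """3x3 max filter on a 2D list; out-of-bounds neighbors are skipped."""
    h, w = len(img), len(img[0])
    return [[max(img[a][b]
                 for a in range(max(0, i - 1), min(h, i + 2))
                 for b in range(max(0, j - 1), min(w, j + 2)))
             for j in range(w)]
            for i in range(h)]


def weight_mask(heatmap, threshold=0.2):
    """Binary mask M: 1 where the dilated heatmap exceeds `threshold`, else 0."""
    return [[1 if v > threshold else 0 for v in row] for row in grey_dilate_3x3(heatmap)]


def weighted_mean(loss, mask, W=10.0):
    """Mean of loss * (W * M + 1), as in Loss_weighted.forward."""
    vals = [l * (W * m + 1.0) for lrow, mrow in zip(loss, mask) for l, m in zip(lrow, mrow)]
    return sum(vals) / len(vals)


heat = [[0.0] * 5 for _ in range(5)]
heat[2][2] = 1.0                      # a single sharp peak
M = weight_mask(heat)
for row in M:
    print(row)                        # a 3x3 block of ones in the middle
loss = [[1.0] * 5 for _ in range(5)]  # uniform per-pixel loss
print(weighted_mean(loss, M))         # (9 * 11 + 16 * 1) / 25 = 4.6
```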

Coordinate aggregation:
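The coordinate encoding listed among the contributions builds on CoordConv. CoordConv appends channels holding each pixel's normalized x and y coordinates to the feature maps before a convolution, so the filters can tell where they are in the image. The sketch below shows only this generic x/y idea from the original CoordConv work. The paper's own version may differ, for example by also using the predicted boundary. `coord_channels` and `add_coords` are hypothetical names, and plain nested lists stand in for tensors.

```python
def coord_channels(height, width):
    """Two channels holding each pixel's x and y coordinate, scaled to [-1, 1]."""
    xs = [[2.0 * x / (width - 1) - 1.0 for x in range(width)] for _ in range(height)]
    ys = [[2.0 * y / (height - 1) - 1.0 for _ in range(width)] for y in range(height)]
    return xs, ys


def add_coords(feature_maps):
    """Append the two coordinate channels to a list of C feature maps (each H x W)."""
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    xs, ys = coord_channels(h, w)
    return feature_maps + [xs, ys]


features = [[[0.0] * 5 for _ in range(3)]]   # C = 1 channel of size 3 x 5
out = add_coords(features)
print(len(out))                              # 3 channels: the original + x + y
print(out[1][0])                             # [-1.0, -0.5, 0.0, 0.5, 1.0]
```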

Other notes:

The method is not limited to face alignment. It can also be applied to human pose (body keypoint) estimation with good results.

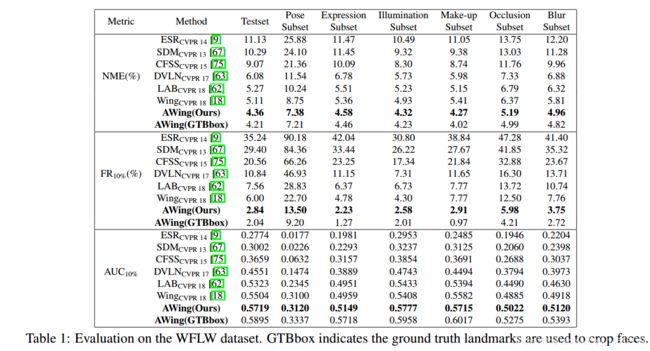

Experimental results:

Summary:

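- Heatmap-based landmark prediction is generally much more accurate than direct coordinate regression. The drawback is a larger number of parameters in the final model.

- Adaptive Wing loss can be applied to many keypoint-regression tasks, such as human pose estimation.

- The weighted loss map makes foreground pixels back-propagate a larger loss and background pixels a smaller one, which improves training.

- CoordConv acts somewhat like an attention mechanism and helps the network learn better.

- Boundary and landmark prediction reinforce each other.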