Fine-Grained Access Control in HDP: Integrating Ranger with Kerberos

Contents

I. Ranger Permissions

1. What is Ranger?

2. Overview

II. Configuring Individual Components

2.1 HDFS Access Control

2.2 HBase Access Control

2.3 Hive Access Control

2.4 YARN Access Control

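I. Ranger Permissions

1. What is Ranger?

Ranger manages permissions inside each component: read, write, and execute on HDFS; read, write, and update on Hive and HBase; queue usage and job submission rights on YARN. At the time of writing, Ranger supports components such as HDFS, Hive, HBase, Kafka, and YARN, and gives fine-grained control over which users and groups may access which resources.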

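2. Overview

This article covers integrating Ranger with Kerberos for fine-grained user access control, along with a look at the Ranger Audit feature.

- HDFS access control (integrated with Kerberos)

- HBase access control

- Hive access control

- YARN job submission and resource access control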

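II. Configuring Individual Components

Kerberos is already installed on the cluster. The users kangll and ranger_hive have been created, along with their Kerberos principals.

Kerberos clients support two login modes: principal + password, and principal + keytab. The former suits interactive use, for example hadoop fs -ls; the latter suits services, for example the YARN ResourceManager and NodeManager.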
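The two login modes differ only in how kinit is invoked. The sketch below builds the command line for each mode without running it. The function name, the example service principal, and the keytab path are hypothetical and only illustrate the shape of the call.

```python
from typing import List, Optional


def build_kinit_argv(principal: str, keytab: Optional[str] = None) -> List[str]:
    """Return the kinit command line for one of the two login modes."""
    if keytab is None:
        # principal + password: kinit prompts for the password interactively
        return ["kinit", principal]
    # principal + keytab: -k takes the key from a keytab, -t names the keytab file
    return ["kinit", "-k", "-t", keytab, principal]


# Interactive user login (password prompt follows when actually executed)
print(" ".join(build_kinit_argv("kangll")))
# Service login; principal and path below are placeholders, not values from this cluster
print(" ".join(build_kinit_argv("yarn/host.example.com@EXAMPLE.COM",
                                "/path/to/yarn.service.keytab")))
```

The resulting list can be passed to subprocess.run on a host where the Kerberos client tools are installed.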

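2.1 HDFS Access Control

1. Create the operating-system user with useradd kangll (a script can create the user on every node in the cluster).

2. On the KDC host, set up Kerberos authentication for the kangll user, copy the generated keytab file to the Hadoop cluster machines and adjust its permissions, then run kinit to obtain a ticket.

3. Check the permissions on the /kangna directory; at this point only the hdfs user can read and write it.

4. Add the Kerberos configuration to the service.

Note: (1) The hadoop.security.auth_to_local setting is generated in core-site.xml when Kerberos is installed. For what it means, see https://www.jianshu.com/p/2ad4be7ecf39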

RULE:[1:$1@$0]([email protected])s/.*/hdfs/

RULE:[2:$1@$0]([email protected])s/.*/hdfs/

(2) The principal can be found in the Kerberos.csv file downloaded when Kerberos was installed, or by running listprincs inside kadmin.local.
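To make the auth_to_local rules in note (1) more concrete, here is a simplified sketch of how a single RULE:[n:format](regex)s/pattern/replacement/ entry maps a principal to a local short name. The principal in the rules above is obscured in this copy of the article, so the example uses a hypothetical principal, hdfs-mycluster@EXAMPLE.COM. The function is an illustration of the matching logic only; it does not cover the DEFAULT rule or the optional flags that Hadoop also accepts.

```python
import re
from typing import Optional


def apply_rule(principal: str, num_components: int, fmt: str,
               accept_regex: str, sed_pattern: str, sed_repl: str) -> Optional[str]:
    """Apply one simplified auth_to_local rule; return None if it does not match."""
    name, _, realm = principal.partition("@")
    components = name.split("/")
    # [n:...] only applies to principals with exactly n components before the realm
    if len(components) != num_components:
        return None
    # $0 is the realm, $1 the first component, $2 the second, and so on
    parts = [realm] + components
    formatted = re.sub(r"\$(\d)", lambda m: parts[int(m.group(1))], fmt)
    # The formatted string must match the parenthesised regex in full
    if not re.fullmatch(accept_regex, formatted):
        return None
    # s/pattern/replacement/ replaces the first match only
    return re.sub(sed_pattern, sed_repl, formatted, count=1)


# Hypothetical one-component principal mapped to the local user "hdfs"
print(apply_rule("hdfs-mycluster@EXAMPLE.COM", 1, "$1@$0",
                 r"hdfs-mycluster@EXAMPLE\.COM", ".*", "hdfs"))
```

A two-component principal such as nn/host1@EXAMPLE.COM is skipped by a [1:...] rule and would need a [2:...] rule instead, which is why the configuration above lists both forms.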

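5. Create the corresponding policy

At this point only the hdfs user can read and write the /kangll directory; no other user can operate on it.

Grant the kangll user read access to the HDFS directory /kangll.

6. Verify the permissions

Try reading from and writing to /kangll. Because only read access has been granted, writing a file is refused.

Next, enable write access.

Verifying again, the write now succeeds.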
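The article creates this policy through the Ranger web UI. For readers who prefer to script it, the sketch below builds a JSON body of the kind Ranger's public v2 policy endpoint (POST /service/public/v2/api/policy on the Ranger Admin host) is understood to accept. The service name cl_hadoop and the policy name are hypothetical, and the payload shape should be checked against the Ranger version in use before relying on it.

```python
import json


def hdfs_path_policy(service: str, path: str, user: str,
                     allow_write: bool = False) -> dict:
    """Build a Ranger HDFS policy body granting read (and optionally write) on a path."""
    access_types = ["read"] + (["write"] if allow_write else [])
    return {
        "service": service,                      # Ranger service name (assumed)
        "name": "hdfs-" + user + "-policy",      # hypothetical policy name
        "isEnabled": True,
        "resources": {"path": {"values": [path], "isRecursive": True}},
        "policyItems": [{
            "users": [user],
            "accesses": [{"type": t, "isAllowed": True} for t in access_types],
        }],
    }


# Read-only policy matching the step above; nothing is sent to Ranger here
policy = hdfs_path_policy("cl_hadoop", "/kangll", "kangll")
print(json.dumps(policy, indent=2))
```

Calling the function with allow_write=True gives the body for the later step where write access is enabled.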

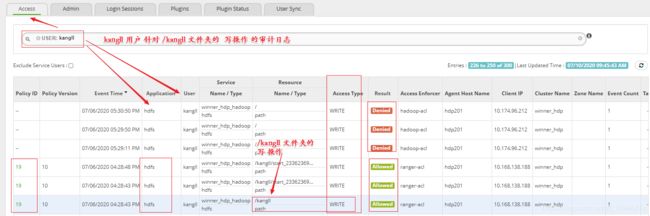

7. Ranger Audit

Note: the Ranger audit module records the kangll user's operations. Here the write by kangll to the /kangll directory on HDFS is shown as allowed.

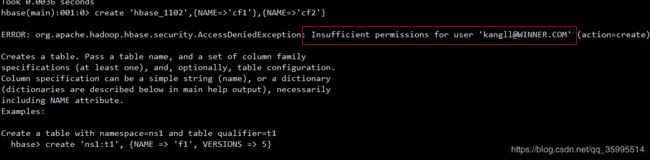

2.2 HBase Access Control

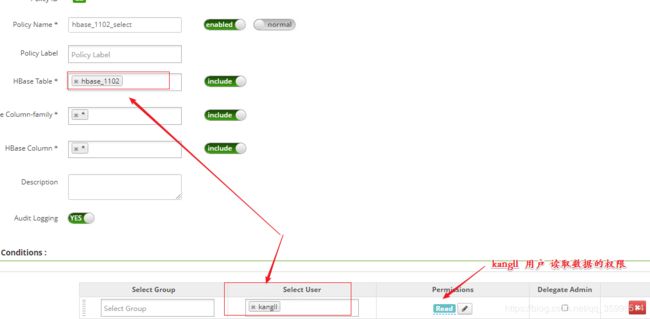

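1. Policy configuration

2. Before the create-table permission is added

Read permission enabled

Write permission not yet enabled

After write permission is enabled for the kangll user

Create the kangll user and control its permissions on HBase tables. As with Hive tables, permissions can be assigned down to specific tables and columns; the per-table permissions that can be granted to a user include create, read, write, and admin.

5. Ranger Audit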

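2.3 Hive Access Control

1. First confirm in Ambari that the ranger-hive-plugin is enabled

Before any policy is configured, the kangll user cannot get into HiveServer2 (on hdp202) either. Only principal authentication is needed at this point: create the kangll user, then authenticate with kinit kangll.

2. Open the Ranger web UI and click Add New Service (the URL is port 6080 on the Ranger Admin host, i.e. ip:6080)

Note: after Ranger is installed a default service already exists; it just has no Kerberos configuration, so editing that service is enough.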

3. Edit Service

- jdbc.url*: jdbc:hive2://hdp202:2181,hdp201:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2

- jdbc.driverClassName: org.apache.hive.jdbc.HiveDriver

Ideally Test Connection passes. Sometimes it fails, which is acceptable as long as later operations work. jdbc.url can also be given as the HiveServer2 address, IP:10000.
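The ZooKeeper service-discovery URL used in jdbc.url follows a regular pattern, so it can be assembled from the quorum hosts. The helper below is a small sketch; its name and parameters are illustrative, while the defaults reproduce the value shown in the Edit Service step.

```python
from typing import Sequence


def hive_zk_jdbc_url(zk_hosts: Sequence[str], zk_port: int = 2181,
                     database: str = "", namespace: str = "hiveserver2") -> str:
    """Build a HiveServer2 JDBC URL that discovers the server through ZooKeeper."""
    quorum = ",".join("{}:{}".format(host, zk_port) for host in zk_hosts)
    return ("jdbc:hive2://{}/{}".format(quorum, database)
            + ";serviceDiscoveryMode=zooKeeper"
            + ";zooKeeperNamespace={}".format(namespace))


# Hosts taken from this article's cluster
print(hive_zk_jdbc_url(["hdp202", "hdp201"]))
```

Passing database="default" yields the form that appears in the Beeline "Connecting to" lines later in this section, minus the principal and user parameters.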

4. Create a table as the hive user and insert data

Obtain a ticket

[root@hdp202 keytabs]# kinit -kt hive.service.keytab hive/[email protected]

[hive@hdp202 keytabs]$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/hdp/3.1.4.0-315/hive/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hdp/3.1.4.0-315/hadoop/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Connecting to jdbc:hive2://hdp202:2181,hdp201:2181/default;password=hive;principal=hive/[email protected];serviceDiscoveryMode=zooKeeper;user=hive;zooKeeperNamespace=hiveserver2

20/07/07 21:20:47 [main]: INFO jdbc.HiveConnection: Connected to hdp202:10000

Connected to: Apache Hive (version 3.1.0.3.1.4.0-315)

Driver: Hive JDBC (version 3.1.0.3.1.4.0-315)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 3.1.0.3.1.4.0-315 by Apache Hive

0: jdbc:hive2://hdp202:2181,hdp201:2181/defau> show databases;

+---------------------+

| database_name |

+---------------------+

| default |

| information_schema |

| ranger_hive |

| sys |

+---------------------+

4 rows selected (0.154 seconds)

0: jdbc:hive2://hdp202:2181,hdp201:2181/defau> use ranger_hive;

0: jdbc:hive2://hdp202:2181,hdp201:2181/defau> create table employee(name String,age int,address String) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS TEXTFILE;

0: jdbc:hive2://hdp202:2181,hdp201:2181/defau> load data local inpath '/hadoop/data/ranger_hive.txt' into table employee;

0: jdbc:hive2://hdp202:2181,hdp201:2181/defau> select * from employee;

+----------------+---------------+-------------------+

| employee.name | employee.age | employee.address |

+----------------+---------------+-------------------+

| kangna | 12 | shanxi |

| zhangsan | 34 | Shanghai |

| lisi | 23 | beijing |

| wangwu | 21 | guangzhou |

+----------------+---------------+-------------------+

4 rows selected (2.285 seconds)

5. View and modify the policy configuration

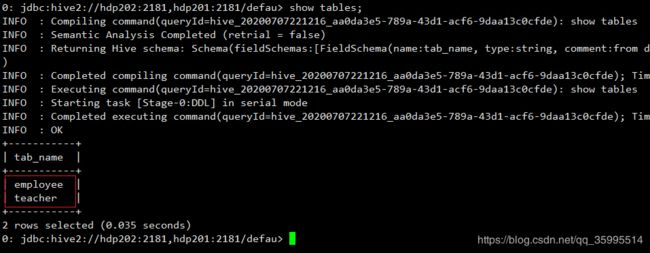

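Once the kangll user has been granted permissions on the database, go back and connect to HiveServer2 as kangll again: show databases now lists the Hive database without an error.

Switch to the kangll user, obtain a ticket with kinit, and connect to HiveServer2.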

[kangll@hdp202 keytabs]$ kinit kangll

Password for [email protected]:

[kangll@hdp202 keytabs]$ klist

Ticket cache: FILE:/tmp/krb5cc_1017

Default principal: [email protected]

Valid starting Expires Service principal

07/07/2020 21:57:04 07/08/2020 21:57:04 krbtgt/[email protected]

[kangll@hdp202 keytabs]$ beeline

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/hdp/3.1.4.0-315/hive/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hdp/3.1.4.0-315/hadoop/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Connecting to jdbc:hive2://hdp202:2181,hdp201:2181/default;principal=hive/[email protected];serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2

20/07/07 21:57:27 [main]: INFO jdbc.HiveConnection: Connected to hdp202:10000

Connected to: Apache Hive (version 3.1.0.3.1.4.0-315)

Driver: Hive JDBC (version 3.1.0.3.1.4.0-315)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 3.1.0.3.1.4.0-315 by Apache Hive

0: jdbc:hive2://hdp202:2181,hdp201:2181/defau> show databases;

+----------------+

| database_name |

+----------------+

| ranger_hive |

+----------------+

1 row selected (0.157 seconds)

0: jdbc:hive2://hdp202:2181,hdp201:2181/defau> use ranger_hive;

0: jdbc:hive2://hdp202:2181,hdp201:2181/defau> show tables;

+-----------+

| tab_name |

+-----------+

| employee |

+-----------+

1 row selected (0.044 seconds)

0: jdbc:hive2://hdp202:2181,hdp201:2181/defau> select * from employee;

+----------------+---------------+-------------------+

| employee.name | employee.age | employee.address |

+----------------+---------------+-------------------+

| kangna | 12 | shanxi |

| zhangsan | 34 | Shanghai |

| lisi | 23 | beijing |

| wangwu | 21 | guangzhou |

+----------------+---------------+-------------------+

4 rows selected (0.271 seconds)

Without the create-table permission enabled

With the create-table permission enabled

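6. Ranger Audit module

Note: a policy was created for the kangll user. Before that policy existed, kangll's operations on cluster components were still recorded; they were simply denied. Here kangll ran a query in Hive, and the audit record shows the access was allowed.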

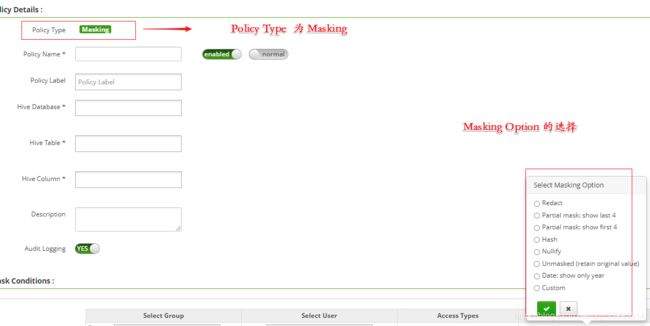

7. Additional Hive notes

Besides the Access policy type, Hive also offers Masking and Row Level Filter policies; they are not demonstrated in detail here.

Policy Type: Masking

Policy Type: Row Level Filter

2.4 YARN Access Control

1. Submit a test job with the wordcount example bundled with MapReduce

(1) Create wordcount.txt and upload it to HDFS

[root@hdp201 tmp]# vim wordcount.txt

[root@hdp201 tmp]# cat wordcount.txt

world is a new world

I will do my world do this job

bye bye

[root@hdp201 tmp]# hdfs dfs -put wordcount.txt /data/input

(2) Location of the wordcount jar in HDP

[kangll@hdp201 mapreduce]$ pwd

/usr/hdp/3.1.4.0-315/hadoop/hadoop/share/hadoop/mapreduce

(3) Run the jar directly, without configuring any policy for the kangll user

[kangll@hdp201 mapreduce]$ hadoop jar hadoop-mapreduce-examples-3.1.1.3.1.4.0-315.jar wordcount /data/input/wordcount.txt /data/output

20/07/06 18:02:36 INFO client.RMProxy: Connecting to ResourceManager at hdp201/10.168.138.188:8050

20/07/06 18:02:36 INFO client.AHSProxy: Connecting to Application History server at hdp202/10.174.96.212:10200

20/07/06 18:02:37 INFO hdfs.DFSClient: Created token for kangll: HDFS_DELEGATION_TOKEN [email protected], renewer=yarn, realUser=, issueDate=1594029757336, maxDate=1594634557336, sequenceNumber=5, masterKeyId=6 on 10.168.138.188:8020

20/07/06 18:02:37 INFO security.TokenCache: Got dt for hdfs://hdp201:8020; Kind: HDFS_DELEGATION_TOKEN, Service: 10.168.138.188:8020, Ident: (token for kangll: HDFS_DELEGATION_TOKEN [email protected], renewer=yarn, realUser=, issueDate=1594029757336, maxDate=1594634557336, sequenceNumber=5, masterKeyId=6)

20/07/06 18:02:37 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /user/kangll/.staging/job_1594028454535_0001

20/07/06 18:02:37 INFO input.FileInputFormat: Total input files to process : 1

20/07/06 18:02:38 INFO mapreduce.JobSubmitter: number of splits:1

20/07/06 18:02:38 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1594028454535_0001

20/07/06 18:02:38 INFO mapreduce.JobSubmitter: Executing with tokens: [Kind: HDFS_DELEGATION_TOKEN, Service: 10.168.138.188:8020, Ident: (token for kangll: HDFS_DELEGATION_TOKEN [email protected], renewer=yarn, realUser=, issueDate=1594029757336, maxDate=1594634557336, sequenceNumber=5, masterKeyId=6)]

20/07/06 18:02:38 INFO conf.Configuration: found resource resource-types.xml at file:/etc/hadoop/3.1.4.0-315/0/resource-types.xml

20/07/06 18:02:38 INFO impl.TimelineClientImpl: Timeline service address: hdp202:8188

20/07/06 18:02:39 INFO mapreduce.JobSubmitter: Cleaning up the staging area /user/kangll/.staging/job_1594028454535_0001

java.io.IOException: org.apache.hadoop.yarn.exceptions.YarnException: org.apache.hadoop.security.AccessControlException: User kangll does not have permission to submit application_1594028454535_0001 to queue default

at org.apache.hadoop.yarn.ipc.RPCUtil.getRemoteException(RPCUtil.java:38)

at org.apache.hadoop.yarn.server.resourcemanager.RMAppManager.createAndPopulateNewRMApp(RMAppManager.java:427)

at org.apache.hadoop.yarn.server.resourcemanager.RMAppManager.submitApplication(RMAppManager.java:320)

at org.apache.hadoop.yarn.server.resourcemanager.ClientRMService.submitApplication(ClientRMService.java:645)

at org.apache.hadoop.yarn.api.impl.pb.service.ApplicationClientProtocolPBServiceImpl.submitApplication(ApplicationClientProtocolPBServiceImpl.java:277)

at org.apache.hadoop.yarn.proto.ApplicationClientProtocol$ApplicationClientProtocolService$2.callBlockingMethod(ApplicationClientProtocol.java:563)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:524)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1025)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:876)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:822)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2682)

Caused by: org.apache.hadoop.security.AccessControlException: User kangll does not have permission to submit application_1594028454535_0001 to queue default

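The job log shows that the kangll user does not have permission to submit applications to the default queue. The next step enables that permission in the policy configuration.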
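When many jobs are being tested, it can help to pull the user and queue out of the denial message automatically so the right Ranger policy can be located. The sketch below matches the AccessControlException wording shown in the log above; the function name and the returned keys are illustrative choices.

```python
import re
from typing import Dict, Optional

# Pattern follows the message text seen in the job log above
DENIED = re.compile(
    r"User (?P<user>\S+) does not have permission to submit "
    r"(?P<app>application_\d+_\d+) to queue (?P<queue>\S+)"
)


def parse_submit_denial(line: str) -> Optional[Dict[str, str]]:
    """Return user, application id, and queue from a YARN submit denial, else None."""
    match = DENIED.search(line)
    return match.groupdict() if match else None


log_line = ("Caused by: org.apache.hadoop.security.AccessControlException: "
            "User kangll does not have permission to submit "
            "application_1594028454535_0001 to queue default")
print(parse_submit_denial(log_line))
```

The extracted queue name is the resource to look for when reviewing the YARN policies in Ranger.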

2. Policy configuration