MapReduce实现数据的二级排序并统计指定字段

引言

在搭建了hadoop集群后,可以把实现聚焦于业务的具体实现,以一个实例为引子,巩固mapreduce的编程实践。

如何配置hadoop集群,且看上一篇博客

文章目录

- 引言

- 对运营商基站数据进行排序、统计。

- MapReduce工作流程

- MapTask工作机制

- ReduceTask工作机制

- 明确目标:

- DataBean和TimeUtil的定义

- TimeUtils

- DataBean

- Mapper和Reducer的定义

- Mapper

- Reducer

- Driver的定义

- 在排序的基础上完成统计

- SumDataBean

- DataAggregateMapper

- DataAggregateReducer

- DataAggregateDriver

- 在集群中进行测试

对运营商基站数据进行排序、统计。

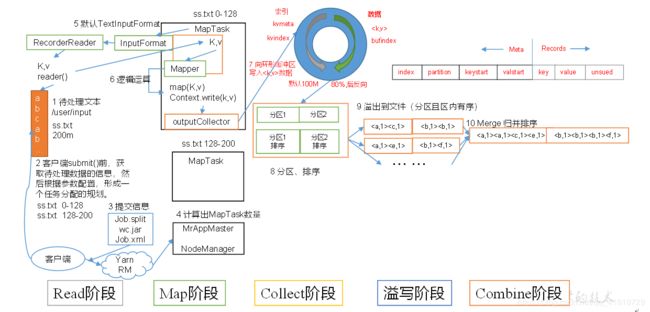

MapReduce工作流程

在hadoop框架中,要实现业务逻辑,首先需要理清楚MapReduce的工作流程,只有清楚一个作业从Client提交到结束的过程,才能真正的学会如何编程实践而非模仿copy.

MapTask工作机制

(1)Read阶段:MapTask通过用户编写的RecordReader,从输入InputSplit中解析出一个个key/value。

(2)Map阶段:该节点主要是将解析出的key/value交给用户编写map()函数处理,并产生一系列新的key/value。

(3)Collect收集阶段:在用户编写map()函数中,当数据处理完成后,一般会调用OutputCollector.collect()输出结果。在该函数内部,它会将生成的key/value分区(调用Partitioner),并写入一个环形内存缓冲区中。

(4)Spill阶段:即“溢写”,当环形缓冲区满后,MapReduce会将数据写到本地磁盘上,生成一个临时文件。需要注意的是,将数据写入本地磁盘之前,先要对数据进行一次本地排序,并在必要时对数据进行合并、压缩等操作。

溢写阶段详情:

- 步骤1:利用快速排序算法对缓存区内的数据进行排序,排序方式是,先按照分区编号Partition进行排序,然后按照key进行排序。这样,经过排序后,数据以分区为单位聚集在一起,且同一分区内所有数据按照key有序。

- 步骤2:按照分区编号由小到大依次将每个分区中的数据写入任务工作目录下的临时文件output/spillN.out(N表示当前溢写次数)中。如果用户设置了Combiner,则写入文件之前,对每个分区中的数据进行一次聚集操作。

- 步骤3:将分区数据的元信息写到内存索引数据结构SpillRecord中,其中每个分区的元信息包括在临时文件中的偏移量、压缩前数据大小和压缩后数据大小。如果当前内存索引大小超过1MB,则将内存索引写到文件output/spillN.out.index中。

(5)Combine阶段:

- 当所有数据处理完成后,MapTask对所有临时文件进行一次合并,以确保最终只会生成一个数据文件。

- 当所有数据处理完后,MapTask会将所有临时文件合并成一个大文件,并保存到文件output/file.out中,同时生成相应的索引文件output/file.out.index。

- 在进行文件合并过程中,MapTask以分区为单位进行合并。对于某个分区,它将采用多轮递归合并的方式。每轮合并io.sort.factor(默认10)个文件,并将产生的文件重新加入待合并列表中,对文件排序后,重复以上过程,直到最终得到一个大文件。

- 让每个MapTask最终只生成一个数据文件,可避免同时打开大量文件和同时读取大量小文件产生的随机读取带来的开销。

ReduceTask工作机制

(1)Copy阶段:ReduceTask从各个MapTask上远程拷贝一片数据,并针对某一片数据,如果其大小超过一定阈值,则写到磁盘上,否则直接放到内存中。

(2)Merge阶段:在远程拷贝数据的同时,ReduceTask启动了两个后台线程对内存和磁盘上的文件进行合并,以防止内存使用过多或磁盘上文件过多。

(3)Sort阶段:按照MapReduce语义,用户编写reduce()函数输入数据是按key进行聚集的一组数据。为了将key相同的数据聚在一起,Hadoop采用了基于排序的策略。由于各个MapTask已经实现对自己的处理结果进行了局部排序,因此,ReduceTask只需对所有数据进行一次归并排序即可。

(4)Reduce阶段:reduce()函数将计算结果写到HDFS上。

明确目标:

首先,对用户的电话号码进行升序排列,并对相同用户的基站到达时间进行降序排列,同时统计用户数及用户使用次数。

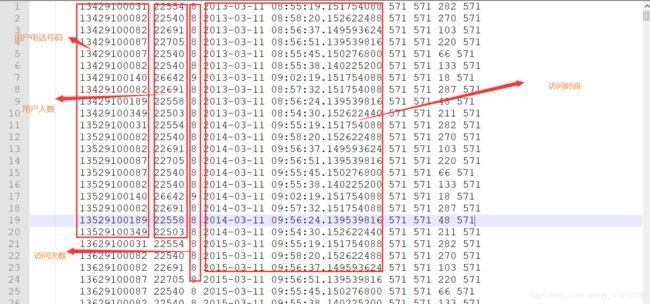

我们先明确在数据中每列所代表的含义,如上图所示,可以知道

- fields[0]:用户电话号码

- fields[1]:用户使用次数

- fields[2]:用户数

- fields[3]:访问到达时间

DataBean和TimeUtil的定义

由对给出的数据进行分许可以得出结论,在map阶段的输入KV值应该是<每行数据的偏移量,每行数据>,输入KV应该是<包含了fileds中我们所需信息的对象,NullWritable>,因为需要对电话号码以及基站到达时间进行升序/降序的排列,所以自定义DataBean,实现WritableComparable接口,而且在DataBean中应该包含以下私有属性:

private String phone;

private String arriveDate;//到达的秒次时间

private String arriveMili;//到达的毫秒次时间

private Long userNum;

private Long useTime;

同时,重写Writable接口的序列化和反序列方法

//序列化方法

public void write(DataOutput dataOutput) throws IOException {

dataOutput.writeUTF(this.phone);

dataOutput.writeUTF(this.arriveDate);

dataOutput.writeUTF(this.arriveMili);

dataOutput.writeLong(this.userNum);

dataOutput.writeLong(this.useTime);

}

//反序列化方法

public void readFields(DataInput dataInput) throws IOException {

phone = dataInput.readUTF();

arriveDate = dataInput.readUTF();

arriveMili = dataInput.readUTF();

userNum = dataInput.readLong();

useTime = dataInput.readLong();

}

因为在map阶段的输出是DataBean作为Key会被MR框架进行排序的操作,所以还需要实现Comparable接口的compareTo方法

/**

* 首先,对用户的电话号码进行升序排列,并对相同用户的基站到达时间进行降序排列

* @param o

* @return

*/

@Override

public int compareTo(DataBean o) {

int compare_phone = this.phone.compareTo(o.phone);

if(compare_phone==0){

ArrayList<String> timeStamp1 = new ArrayList<>();

ArrayList<String> timeStamp2 = new ArrayList<>();

timeStamp1.add(this.arriveDate);

timeStamp1.add(this.arriveMili);

timeStamp2.add(o.arriveDate);

timeStamp2.add(o.arriveMili);

return TimeUtils.timeSort(timeStamp1, timeStamp2);

}else {

return compare_phone;

}

}

因为是二级排序(先升序排列手机号,再降序排列到达时间),所以定义了一个工具类TimeUtils:

TimeUtils

package com.cqupt.baseDataProcess;

import org.apache.commons.lang.time.DateUtils;

import org.junit.Test;

import java.text.ParseException;

import java.text.SimpleDateFormat;

import java.util.ArrayList;

import java.util.Date;

public class TimeUtils {

public static Date stringToDate(String dateStr) {

SimpleDateFormat format = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

Date date = null;

try {

date = format.parse(dateStr);

} catch (ParseException e) {

e.printStackTrace();

}

return date;

}

public static ArrayList<String> getTimeStr(String line) {

String[] fields = line.split(" ");

String time_day = fields[3];

String[] seconds = fields[4].split("\\.");

String time_secondtemp = seconds[0];

String time_milis = seconds[1];

String time_second = time_day + " " + time_secondtemp;

ArrayList<String> timeList = new ArrayList<>();

timeList.add(time_second);

timeList.add(time_milis);

return timeList;

}

/**

*

* @param timeStamp1 列表形式的时间戳1 {yyyy-MM-dd HH:mm:ss,SSS}

* @param timeStamp2 列表形式的时间戳2 {yyyy-MM-dd HH:mm:ss,SSS}

* @return 返回二者二级排序的结果

*/

public static int timeSort(ArrayList<String> timeStamp1, ArrayList<String> timeStamp2) {

String timeStr_1 = timeStamp1.get(0);

String secondStr_1 = timeStamp1.get(1);

String timeStr_2 = timeStamp2.get(0);

String secondStr_2 = timeStamp2.get(1);

SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

long thisValue = 0;

long thatValue = 0;

try {

thisValue = sdf.parse(timeStr_1).getTime();

thatValue = sdf.parse(timeStr_2).getTime();

int compare_sec = Long.compare(thatValue, thisValue);

if(compare_sec==0){

return secondStr_2.compareTo(secondStr_1);

}else {

return compare_sec;

}

} catch (ParseException e) {

e.printStackTrace();

}

return 0;

}

// @Test

// public void test() {

// String line = "13429100189 22558 8 2013-03-11 08:56:24.139539816 571 571 48 571";

// String line2 = "13429100082 22540 8 2013-03-11 08:55:20.152622488 571 571 270 571";

//// String timeStr = getTimeStr(line).get(0);

//// String secondStr = getTimeStr(line).get(1);

//// System.out.println(timeStr);

//// System.out.println(secondStr);

// int i = timeSort(getTimeStr(line), getTimeStr(line2));

// System.out.println(i);

//

// }

}

DataBean的完整代码如下:

DataBean

package com.cqupt.baseDataProcess;

import org.apache.hadoop.io.Writable;

import org.apache.hadoop.io.WritableComparable;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

import java.text.SimpleDateFormat;

import java.util.ArrayList;

/**

* 包含4个关键信息

* 1. 用户的电话字符串

* 2. 基站的到达时间

* 3. 用户数

* 4. 用户使用次数

*

* 首先,对用户的电话号码进行升序排列,并对相同用户的基站到达时间进行降序排列,同时统计用户数及用户使用次数;

*/

public class DataBean implements WritableComparable<DataBean> {

private String phone;

private String arriveDate;//到达的秒次时间

private String arriveMili;//到达的毫秒次时间

private Long userNum;

private Long useTime;

public DataBean() {

super();

}

public DataBean(String phone, String arriveDate, String arriveMili, Long userNum, Long useTime) {

super();

this.phone = phone;

this.arriveDate = arriveDate;

this.arriveMili = arriveMili;

this.userNum = userNum;

this.useTime = useTime;

}

public String getPhone() {

return phone;

}

public void setPhone(String phone) {

this.phone = phone;

}

public String getArriveDate() {

return arriveDate;

}

public void setArriveDate(String arriveDate) {

this.arriveDate = arriveDate;

}

public String getArriveMili() {

return arriveMili;

}

public void setArriveMili(String arriveMili) {

this.arriveMili = arriveMili;

}

public Long getUserNum() {

return userNum;

}

public void setUserNum(Long userNum) {

this.userNum = userNum;

}

public Long getUseTime() {

return useTime;

}

public void setUseTime(Long useTime) {

this.useTime = useTime;

}

@Override

public String toString() {

return "DataBean{" +

"phone='" + phone + '\'' +

", arriveDate=" + arriveDate +

", userNum=" + userNum +

", useTime=" + useTime +

'}';

}

public void write(DataOutput dataOutput) throws IOException {

dataOutput.writeUTF(this.phone);

dataOutput.writeUTF(this.arriveDate);

dataOutput.writeUTF(this.arriveMili);

dataOutput.writeLong(this.userNum);

dataOutput.writeLong(this.useTime);

}

public void readFields(DataInput dataInput) throws IOException {

phone = dataInput.readUTF();

arriveDate = dataInput.readUTF();

arriveMili = dataInput.readUTF();

userNum = dataInput.readLong();

useTime = dataInput.readLong();

}

/**

* 首先,对用户的电话号码进行升序排列,并对相同用户的基站到达时间进行降序排列

* @param o

* @return

*/

@Override

public int compareTo(DataBean o) {

int compare_phone = this.phone.compareTo(o.phone);

if(compare_phone==0){

ArrayList<String> timeStamp1 = new ArrayList<>();

ArrayList<String> timeStamp2 = new ArrayList<>();

timeStamp1.add(this.arriveDate);

timeStamp1.add(this.arriveMili);

timeStamp2.add(o.arriveDate);

timeStamp2.add(o.arriveMili);

return TimeUtils.timeSort(timeStamp1, timeStamp2);

}else {

return compare_phone;

}

}

}

Mapper和Reducer的定义

在上一节定义DataBean时我们明确了Mapper的输入和输出键值对形式,所以顺理成章地我们可以定义Mapper如下

Mapper

package com.cqupt.baseDataProcess;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

import java.util.ArrayList;

/**

* 首先,对用户的电话号码进行升序排列,并对相同用户的基站到达时间进行降序排列,同时统计用户数及用户使用次数

* 先完成统计用户数和使用次数试试

*/

public class DataProcessMapper extends Mapper<LongWritable,Text,DataBean,NullWritable> {

private DataBean dataBean= new DataBean();

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

String[] fields = value.toString().split(" ");

dataBean.setPhone(fields[0]);

ArrayList<String> timeStr = TimeUtils.getTimeStr(value.toString());

dataBean.setArriveDate(timeStr.get(0));

dataBean.setArriveMili(timeStr.get(1));

long useTime = Long.parseLong(fields[1]);

long userNum = Long.parseLong(fields[2]);

dataBean.setUseTime(useTime);

dataBean.setUserNum(userNum);

context.write(dataBean,NullWritable.get());

}

}

我们可以预见Mapper输出的内容应该是一个个排好序的DataBean,所以在Reducer阶段不作任何处理输出即可,我们就可以完成数据的排序工作。

Reducer

package com.cqupt.baseDataProcess;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/**

* 首先,对用户的电话号码进行升序排列,并对相同用户的基站到达时间进行降序排列

*/

public class DataProcessReducer extends Reducer<DataBean,NullWritable,DataBean,NullWritable> {

@Override

protected void reduce(DataBean key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {

context.write(key,NullWritable.get());

}

}

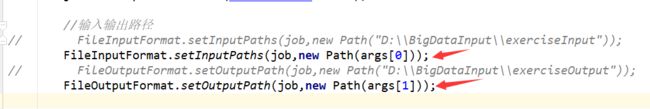

Driver的定义

有了Mapper和Reducer,我们可以定义MR框架中的Client客户端,通过定义的客户端向hadoop-MapReduce发布任务(Job)

package com.cqupt.baseDataProcess;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class DataProcessDriver {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Job job = Job.getInstance(new Configuration());

job.setJarByClass(DataProcessDriver.class);

//mapper/reducer

job.setMapperClass(DataProcessMapper.class);

job.setReducerClass(DataProcessReducer.class);

//in/out type

job.setMapOutputKeyClass(DataBean.class);

job.setMapOutputValueClass(NullWritable.class);

job.setOutputKeyClass(DataBean.class);

job.setOutputValueClass(NullWritable.class);

//输入输出路径

FileInputFormat.setInputPaths(job,new Path("D:\\BigDataInput\\exerciseInput"));

FileOutputFormat.setOutputPath(job,new Path("D:\\BigDataInput\\exerciseOutput"));

//提交

boolean jobStatus = job.waitForCompletion(true);

System.exit(jobStatus?0:1);

}

}

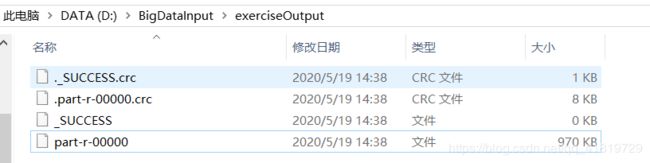

我们可以看到输出内容如下:

至此,排序工作完成。

在排序的基础上完成统计

在上面的工作中,对基站数据的排序已然完成,但是统计用户数及用户使用次数的任务还没做完,笔者在尝试原来的代码的基础上做了诸多尝试,都没有得到满意的结果,最终决定新定义一个Job,在新的Job中对上面工作得到的输出数据进行处理从而得到对用户数和用户使用次数的统计。

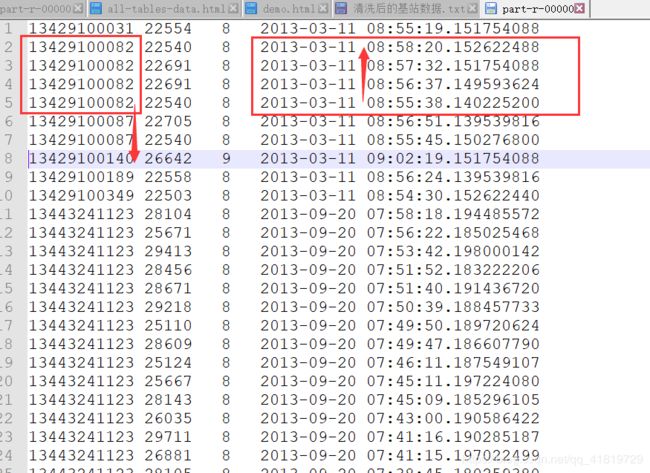

在构思阶段,我们需要先明确Map输入和输出应该是什么,在读取出的数据中,笔者根据要求,如下图定义了map的输入KV:

将电话号码作为Key的好处在于Map任务运行时会自动帮我们排好序并在shuffle后完成自动分组,对于value,我们应该定义一个SumDataBean来封装用户数和用户使用次数两个参量。然后输出

SumDataBean

SumDataBean代码如下:

package com.cqupt.baseDataProcess;

import org.apache.hadoop.io.Writable;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

public class SumDataBean implements Writable {

private Long sumUser;

private Long sumUseTime;

public Long getSumUser() {

return sumUser;

}

public void setSumUser(Long sumUser) {

this.sumUser = sumUser;

}

public Long getSumUseTime() {

return sumUseTime;

}

public void setSumUseTime(Long sumUseTime) {

this.sumUseTime = sumUseTime;

}

@Override

public String toString() {

return "SumDataBean{" +

"sumUser=" + sumUser +

", sumUseTime=" + sumUseTime +

'}';

}

@Override

public void write(DataOutput dataOutput) throws IOException {

dataOutput.writeLong(this.sumUser);

dataOutput.writeLong(this.sumUseTime);

}

@Override

public void readFields(DataInput dataInput) throws IOException {

sumUser = dataInput.readLong();

sumUseTime = dataInput.readLong();

}

}

有了SumDataBean,就很好得出Mapper如下

DataAggregateMapper

package com.cqupt.baseDataProcess;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class DataAggregateMapper extends Mapper<LongWritable,Text,Text,SumDataBean> {

private Text phone=new Text();

private SumDataBean sumDataBean = new SumDataBean();

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

String[] fields = value.toString().split("\t");

phone.set(fields[0]);

sumDataBean.setSumUseTime(Long.parseLong(fields[1]));

sumDataBean.setSumUser(Long.parseLong(fields[2]));

context.write(phone,sumDataBean);

}

}

在Reducer中对map输出并经过shuffle的数据–

DataAggregateReducer

package com.cqupt.baseDataProcess;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class DataAggregateReducer extends Reducer<Text,SumDataBean,Text,SumDataBean> {

private SumDataBean finalBean = new SumDataBean();

@Override

protected void reduce(Text key, Iterable<SumDataBean> values, Context context) throws IOException, InterruptedException {

Long sumUseTime=0L;

Long sumUser=0L;

for (SumDataBean value : values) {

sumUseTime+=value.getSumUseTime();

sumUser+=value.getSumUser();

}

finalBean.setSumUseTime(sumUseTime);

finalBean.setSumUser(sumUser);

context.write(key,finalBean);

}

}

在新定义的mapper和reducer基础之上构建Job并通过客户端将其发布

DataAggregateDriver

package com.cqupt.baseDataProcess;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class DataAggregateDriver {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Job job = Job.getInstance(new Configuration());

job.setJarByClass(DataAggregateDriver.class);

job.setMapperClass(DataAggregateMapper.class);

job.setReducerClass(DataAggregateReducer.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(SumDataBean.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(SumDataBean.class);

//注意这里的输入路径是上一个排序Job的输出路径

FileInputFormat.setInputPaths(job, new Path("D:\\BigDataInput\\exerciseOutput"));

FileOutputFormat.setOutputPath(job, new Path("D:\\BigDataInput\\aggregateOutput"));

boolean b = job.waitForCompletion(true);

System.exit(b ? 0 : 1);

}

}

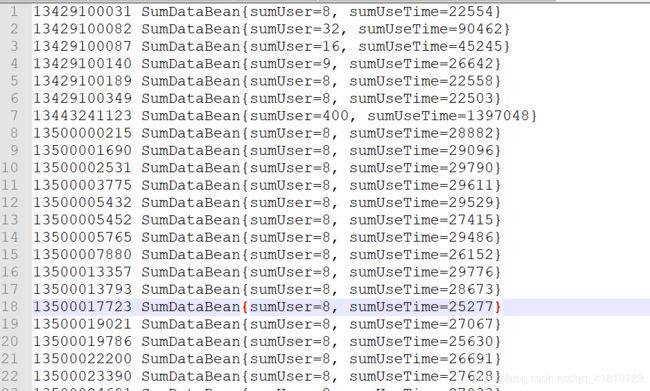

得到输出如下:

至此,所有的需求都完成了。

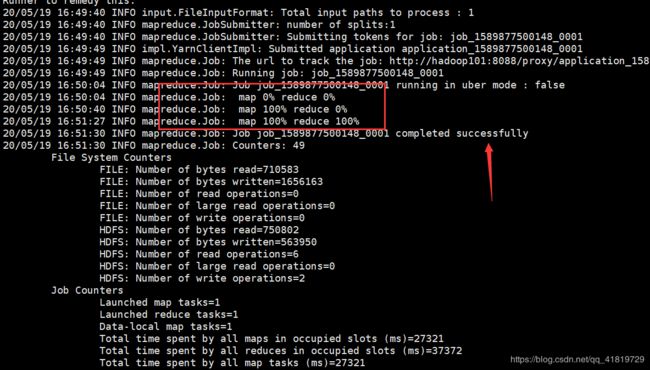

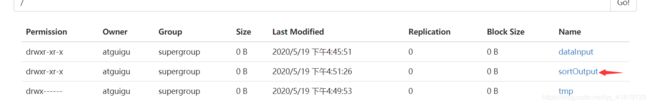

在集群中进行测试

在本地通过测试之后,我们把项目达成jar包将其放在第一节搭建的hadoop集群中进行测试。

我们先把各个Driver中指定输入路径输出路径的参数重新设置一下:

通过maven提供的打包工具来对项目进行打包操作

我们把jar包以及原始数据通过Xftp5发送到linux集群中,然后在终端中启动hadoop100的hadoop-hdfs,

[atguigu@hadoop100 hadoop-2.7.2]$ start-dfs.sh

Starting namenodes on [hadoop100]

hadoop100: starting namenode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-atguigu-namenode-hadoop100.out

hadoop102: starting datanode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-atguigu-datanode-hadoop102.out

hadoop101: starting datanode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-atguigu-datanode-hadoop101.out

hadoop100: starting datanode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-atguigu-datanode-hadoop100.out

Starting secondary namenodes [hadoop102]

hadoop102: starting secondarynamenode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-atguigu-secondarynamenode-hadoop102.out

在hadoop101启动hadoop-Yarn

[atguigu@hadoop101 hadoop-2.7.2]$ start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-atguigu-resourcemanager-hadoop101.out

hadoop100: starting nodemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-atguigu-nodemanager-hadoop100.out

hadoop102: starting nodemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-atguigu-nodemanager-hadoop102.out

hadoop101: starting nodemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-atguigu-nodemanager-hadoop101.out

通过shell命令上传包含data.txt原始数据的文件夹dataInput到hdfs文件系统的根目录

[atguigu@hadoop102 hadoop-2.7.2]$ hadoop fs -put dataInput/ /

然后通过以下命令执行jar包中的DataProcessDriver,其输入为dataInput,输入为sortOutput.完成对数据的排序过程:

[atguigu@hadoop102 hadoop-2.7.2]$ hadoop jar dataprocess.jar com.cqupt.baseDataProcess.DataProcessDriver /dataInput /sortOutput

通过命令将sortOutput下载到本地查看,可以看到排序完成的数据

[atguigu@hadoop102 hadoop-2.7.2]$ hadoop fs -get /sortOutput ./

[atguigu@hadoop102 hadoop-2.7.2]$ more sortOutput/part-r-00000

最后通过以下命令执行jar包中的DataAggregateDriver,使得其输入为sortOutput,输出为finalOutput,完成数据的统计过程:

[atguigu@hadoop102 hadoop-2.7.2]$ hadoop jar dataprocess.jar com.cqupt.baseDataProcess.DataAggregateDriver /sortOutput /finalOutput

同样,通过hadoop fs -cat /finalOutput/part-r-00000查看统计后的数据:

至此,实现了对运营商基站数据排序并统计的所有需求。