RHCS cluster services

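Clusters

In a high-availability (failover) cluster, several machines (five, for example) form one group, but only one of them provides the service at any given time.

The members send heartbeats to each other so that a failed node can be detected.

RHCS (Red Hat Cluster Suite): Red Hat's high-availability suite.

Test environment: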

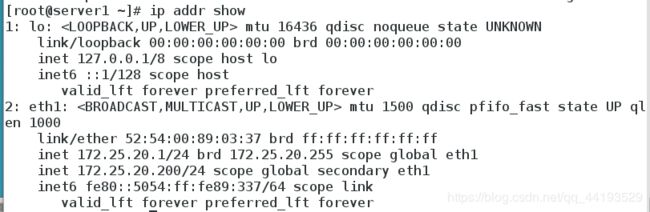

On server1:

Configure the yum repositories (a .repo file under /etc/yum.repos.d/):

[rhel-source]

name=Red Hat Enterprise Linux $releasever - $basearch - Source

baseurl=http://172.25.20.250/rhel6.5/

enabled=1

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[HighAvailability]

name=Red Hat Enterprise Linux $releasever - $basearch - Source

baseurl=http://172.25.20.250/rhel6.5/HighAvailability

enabled=1

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[LoadBalancer]

name=Red Hat Enterprise Linux $releasever - $basearch - Source

baseurl=http://172.25.20.250/rhel6.5/LoadBalancer

enabled=1

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[ResilientStorage]

name=Red Hat Enterprise Linux $releasever - $basearch - Source

baseurl=http://172.25.20.250/rhel6.5/ResilientStorage

enabled=1

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[ScalableFileSystem]

name=Red Hat Enterprise Linux $releasever - $basearch - Source

baseurl=http://172.25.20.250/rhel6.5/ScalableFileSystem

enabled=1

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release
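The five stanzas above differ only in the section name and the last part of `baseurl`, so the file can also be generated. A minimal shell sketch, assuming the same mirror layout (`http://172.25.20.250/rhel6.5/<channel>`); the function name `gen_repo` is illustrative, each stanza gets its own `name=` line, and the `gpgkey=` line is dropped because `gpgcheck=0`.

```shell
# gen_repo BASEURL: print a yum .repo file containing the base repository and
# the four RHEL 6.5 add-on channels, all served from the same mirror.
# (gen_repo is a hypothetical helper, not part of the original setup.)
gen_repo() {
    local base="${1%/}" ch       # strip a trailing slash so URLs join cleanly
    # Base repository at the mirror root ($releasever/$basearch are expanded by yum).
    printf '[rhel-source]\n'
    printf 'name=Red Hat Enterprise Linux $releasever - $basearch - Source\n'
    printf 'baseurl=%s/\nenabled=1\ngpgcheck=0\n\n' "$base"
    # Add-on channels live in sub-directories named after the channel.
    for ch in HighAvailability LoadBalancer ResilientStorage ScalableFileSystem; do
        printf '[%s]\n' "$ch"
        printf 'name=Red Hat Enterprise Linux $releasever - $basearch - %s\n' "$ch"
        printf 'baseurl=%s/%s\nenabled=1\ngpgcheck=0\n\n' "$base" "$ch"
    done
}
```

For example `gen_repo http://172.25.20.250/rhel6.5 > /etc/yum.repos.d/rhel6.5.repo` (hypothetical file name), followed by `yum clean all` and `yum repolist` to check that all five repositories are visible.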

yum install luci ricci -y

id ricci

passwd ricci

/etc/init.d/ricci start

/etc/init.d/luci start

chkconfig ricci on

chkconfig luci on
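The luci/ricci steps above can be wrapped in one function. This is a sketch for RHEL 6 (SysV init scripts and chkconfig); `setup_luci_ricci` and the `DRY_RUN` switch, which only prints the commands, are hypothetical helpers, and `passwd --stdin` is a Red Hat extension of passwd. luci is the web front end and is only needed on the management node; ricci must run on every cluster node.

```shell
# run: execute a command, or only print it when DRY_RUN is set (hypothetical switch).
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# setup_luci_ricci PASSWORD: install luci and ricci, set the ricci user's password,
# start both daemons and enable them at boot (RHEL 6, SysV init scripts).
setup_luci_ricci() {
    local pw="$1" svc
    run yum install -y luci ricci
    # luci authenticates to ricci on each node with the local "ricci" user's password.
    if [ -n "${DRY_RUN:-}" ]; then
        echo "passwd --stdin ricci"                   # never print the password itself
    else
        printf '%s\n' "$pw" | passwd --stdin ricci    # --stdin is RHEL-specific
    fi
    for svc in ricci luci; do
        run /etc/init.d/"$svc" start
        run chkconfig "$svc" on
    done
}
```

`DRY_RUN=1 setup_luci_ricci 'secret'` prints the plan; drop `DRY_RUN` to run it as root.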

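On the physical host (foundation20), install fence_virtd with its libvirt backend and multicast listener:

yum install fence-virtd-libvirt.x86_64 fence-virtd-multicast.x86_64 fence-virtd.x86_64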


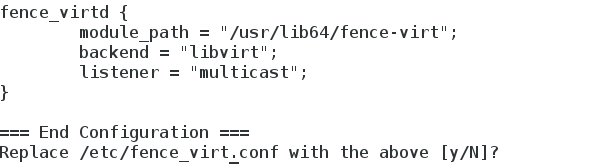

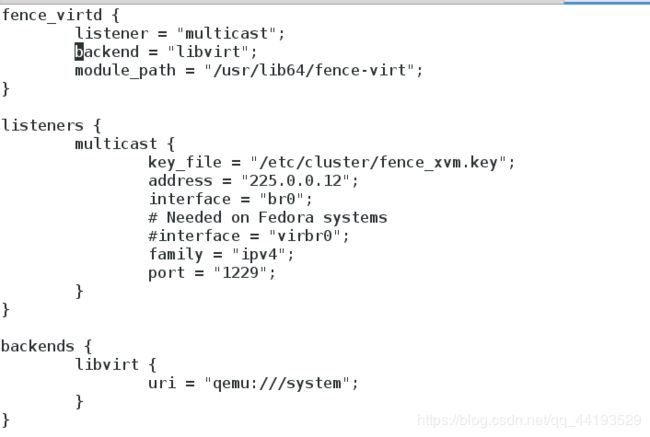

fence_virtd -c

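Accept the defaults at the prompts, but set the interface to br0 (the bridge the virtual machines are attached to). The resulting /etc/fence_virt.conf looks like this: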

fence_virtd {

listener = "multicast";

backend = "libvirt";

module_path = "/usr/lib64/fence-virt";

}

listeners {

multicast {

key_file = "/etc/cluster/fence_xvm.key";

address = "225.0.0.12";

interface = "br0";

# Needed on Fedora systems

#interface = "virbr0";

family = "ipv4";

port = "1229";

}

}

backends {

libvirt {

uri = "qemu:///system";

}

}

dd if=/dev/urandom of=/etc/cluster/fence_xvm.key bs=128 count=1

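This writes a 128-byte random key; create /etc/cluster first if it does not exist.

Copy the key to /etc/cluster/ on server1 and server2 (for example with scp); the host and every node must use the same key.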

systemctl start fence_virtd.service   ## start the fence daemon on the host
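Key generation, distribution and the daemon restart can be scripted from the physical host. A sketch assuming root SSH access from the host to the nodes and that the node names resolve; `make_fence_key` and `DRY_RUN` are hypothetical helpers.

```shell
# run: execute a command, or only print it when DRY_RUN is set (hypothetical switch).
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

# make_fence_key NODE...: on the physical host, create the shared fence_xvm key,
# copy it to each cluster node and restart fence_virtd (RHEL 7 host, systemd).
make_fence_key() {
    local key=/etc/cluster/fence_xvm.key node
    run mkdir -p /etc/cluster
    # 128 random bytes; the host and every node must hold the identical key.
    run dd if=/dev/urandom of="$key" bs=128 count=1
    for node in "$@"; do
        run ssh "root@$node" mkdir -p /etc/cluster
        run scp "$key" "root@$node:$key"
    done
    # Restart so fence_virtd picks up the (new) key file.
    run systemctl restart fence_virtd.service
}
```

After `make_fence_key server1 server2`, running `fence_xvm -o list` on a node should list the virtual machines if multicast fencing works.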

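In the luci web interface (https://server1:8084 by default), configure the fence device.

When the configuration is applied, server1 and server2 are rebooted.

The cluster configuration file that was just created, cluster.conf, is in /etc/cluster.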
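To see what luci wrote without reading the whole XML, a rough text-based summary can help. This sketch assumes luci's usual layout (one element per line, attributes in double quotes) and is not a real XML parser; `cluster_summary` is a hypothetical name. For real validation, the cman package provides `ccs_config_validate`.

```shell
# cluster_summary [FILE]: print the cluster name, the node names and the number
# of fence_xvm devices found in cluster.conf. Text matching only (hypothetical
# helper); it assumes one element per line with double-quoted attributes.
cluster_summary() {
    local conf="${1:-/etc/cluster/cluster.conf}"
    # <cluster config_version="..." name="...">
    printf 'cluster: %s\n' "$(sed -n 's/.*<cluster [^>]*name="\([^"]*\)".*/\1/p' "$conf")"
    # <clusternode name="server1" nodeid="1">
    sed -n 's/.*<clusternode [^>]*name="\([^"]*\)".*/node: \1/p' "$conf"
    # Fence devices using the fence_xvm agent (they talk to fence_virtd on the host).
    grep -c 'agent="fence_xvm"' "$conf" | sed 's/^/fence_xvm devices: /'
}
```

On server1, `cluster_summary` should report the cluster name (westos-kk in this setup), both nodes, and the fence device.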

On server1:

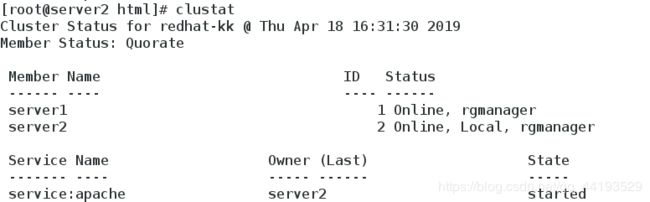

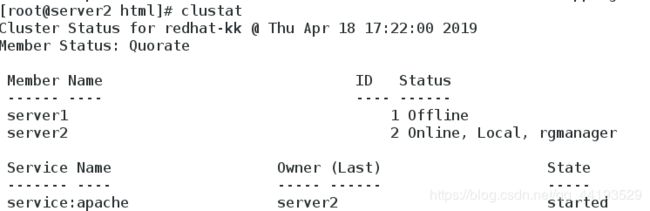

clustat   ## check the cluster status

fence_node server2   ## fence server2; fence_virtd on the host should power-cycle it

## Letting the cluster manage a service on the virtual machines

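Install httpd on server1 and server2, but do not start it; the cluster (rgmanager) starts it on whichever node runs the service.

Configure the cluster service in luci:

Add a Failover Domain.

Tick the option check boxes.

Under Resources, add an IP Address resource and an httpd resource.

For the IP, use a free address in the same subnet as the servers (172.25.20.200 in this setup).

For httpd, add a Script resource that points to its init script, /etc/init.d/httpd.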

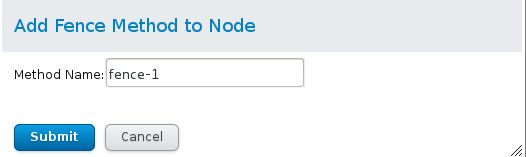

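Add a Service Group.

Name the service apache.

Tick the option check boxes.

Select the failover domain created above.

Click Add Resource.

Add the IP address resource first, then the httpd script.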
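The same failover domain, resources and service can also be created from the command line with `ccs`, which talks to ricci. A sketch, not verified against this exact cluster: the domain name `webfail`, its `ordered restricted` flags and `recovery=relocate` are assumptions standing in for the check boxes ticked in luci, and the options should be checked against ccs(8). `ccs_apache` and `DRY_RUN` are hypothetical helpers.

```shell
# run: execute a command, or only print it when DRY_RUN is set (hypothetical switch).
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

# ccs_apache HOST VIP: command-line sketch of the luci steps above.
# "webfail" and the domain/service options are hypothetical choices.
ccs_apache() {
    local host="$1" vip="$2"
    # Failover domain with server1 preferred over server2.
    run ccs -h "$host" --addfailoverdomain webfail ordered restricted
    run ccs -h "$host" --addfailoverdomainnode webfail server1 1
    run ccs -h "$host" --addfailoverdomainnode webfail server2 2
    # Global resources: the floating IP and the httpd init script.
    run ccs -h "$host" --addresource ip address="$vip" monitor_link=on
    run ccs -h "$host" --addresource script name=httpd file=/etc/init.d/httpd
    # Service "apache": IP first, then the httpd script (same order as in luci).
    run ccs -h "$host" --addservice apache domain=webfail recovery=relocate
    run ccs -h "$host" --addsubservice apache ip ref="$vip"
    run ccs -h "$host" --addsubservice apache script ref=httpd
    # Push cluster.conf to all nodes and activate the new version.
    run ccs -h "$host" --sync --activate
}
```

`DRY_RUN=1 ccs_apache server1 172.25.20.200` prints the commands; without it, ccs may ask for the ricci password on first contact.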

Testing:

1. On server1:

clustat shows that the apache service (httpd) is running; on the physical host, curl 172.25.20.200 returns the page.

Then crash the kernel:

echo c >/proc/sysrq-trigger


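After a short wait, the cluster fences server1 (it is rebooted), and server2 starts httpd and takes over the IP address.

curl 172.25.20.200 now shows the page served by server2.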

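2. On server2:

Stop the httpd service (/etc/init.d/httpd stop).

server1 then starts httpd and takes over the IP address:

curl 172.25.20.200

3. On server1:

Stop the network (/etc/init.d/network stop).

server2 then starts httpd and takes over the IP address: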

curl 172.25.20.200
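During these failover tests it is handy to read the current owner of the service directly. A sketch assuming clustat's usual service table, where each row reads `service:NAME  OWNER  STATE`; `service_owner` is a hypothetical helper. When a service is stopped, clustat may show the last owner in parentheses, and the function returns that field unchanged.

```shell
# service_owner NAME: read `clustat` output on stdin and print the owner column
# of the row for service:NAME. Exits non-zero if the service is not listed.
# (Hypothetical helper; relies on whitespace-separated clustat columns.)
service_owner() {
    awk -v svc="service:$1" '$1 == svc { print $2; found = 1 } END { exit !found }'
}
```

Running `clustat | service_owner apache` before and after `echo c >/proc/sysrq-trigger` should show the owner change from server1 to server2.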

## Managing shared storage with the cluster

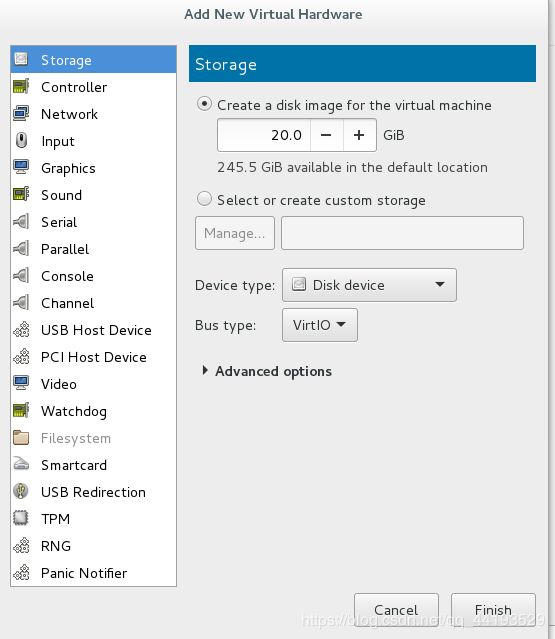

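Create a new virtual machine, server4.

Configure its yum repositories.

Add a 20 GB virtual disk.

yum install scsi-* -y   ## installs scsi-target-utils (tgtd, tgt-admin)

vim /etc/tgt/targets.conf   ## around line 38, add a target entry that exports the new disk

fdisk -l   ## find the device name of the new disk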

/etc/init.d/tgtd start

tgt-admin -s
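The target entry itself was only shown in a screenshot. A sketch that prints one in the targets.conf syntax of scsi-target-utils (a `target` block with `backing-store` and optional `initiator-address` lines); the IQN, the disk `/dev/vdb` and the initiator addresses in the example are hypothetical placeholders, so use the device name found with `fdisk -l` and your nodes' IPs.

```shell
# target_stanza IQN DEVICE [INITIATOR_IP...]: print a tgtd targets.conf entry that
# exports DEVICE as a LUN, optionally restricted to the given initiator addresses.
# (Hypothetical helper; values passed in are placeholders.)
target_stanza() {
    local iqn="$1" dev="$2" ip
    shift 2
    printf '<target %s>\n' "$iqn"
    printf '    backing-store %s\n' "$dev"
    for ip in "$@"; do
        printf '    initiator-address %s\n' "$ip"
    done
    printf '</target>\n'
}
```

For example `target_stanza iqn.2018-04.com.example:server4.disk1 /dev/vdb 172.25.20.1 172.25.20.2 >> /etc/tgt/targets.conf`, then restart tgtd and check with `tgt-admin -s`.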

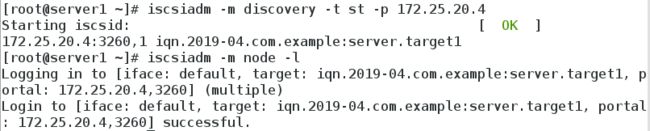

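On server1 (server2 needs the same iSCSI setup):

Install the iSCSI initiator (yum install iscsi-initiator-utils -y).

iscsiadm -m discovery -t st -p 172.25.20.4   ## discover the targets exported by server4

iscsiadm -m node -l   ## log in to the discovered target; the disk appears as a new device (/dev/sdb here)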
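Because both nodes need the shared disk, the initiator steps can be run on every node in one go. A sketch assuming root SSH access to the nodes; `iscsi_attach` and `DRY_RUN` are hypothetical helpers.

```shell
# run: execute a command, or only print it when DRY_RUN is set (hypothetical switch).
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

# iscsi_attach PORTAL NODE...: install the initiator, discover the targets on
# PORTAL and log in, on every listed node, so all nodes see the same disk.
iscsi_attach() {
    local portal="$1" node
    shift
    for node in "$@"; do
        run ssh "root@$node" yum install -y iscsi-initiator-utils
        run ssh "root@$node" iscsiadm -m discovery -t st -p "$portal"
        run ssh "root@$node" iscsiadm -m node -l
    done
}
```

For example `iscsi_attach 172.25.20.4 server1 server2`; afterwards `iscsiadm -m session` on each node should list the session.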

vim /etc/lvm/lvm.conf   ## enable clustered locking: locking_type = 3 (lvmconf --enable-cluster makes the same change)


/etc/init.d/clvmd status
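Before relying on clvmd, it helps to confirm that lvm.conf really has clustered locking enabled. A small sketch assuming the usual `locking_type = N` syntax; `lvm_locking_type` is a hypothetical helper.

```shell
# lvm_locking_type [FILE]: print the first active locking_type value in lvm.conf
# (3 = clustered locking through clvmd). Commented-out lines are skipped.
lvm_locking_type() {
    awk -F= '
        /^[ \t]*#/ { next }                          # skip comment lines
        $1 ~ /^[ \t]*locking_type[ \t]*$/ {
            gsub(/[ \t]/, "", $2); print $2; exit    # value without spaces
        }' "${1:-/etc/lvm/lvm.conf}"
}
```

`lvm_locking_type` should print 3 on both nodes before clvmd is started.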

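fdisk -l

fdisk -cu /dev/sdb   ## partition the shared disk (-c: no DOS compatibility mode, -u: sector units)

Create /dev/sdb1 and set its partition type to 8e (Linux LVM).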

partprobe

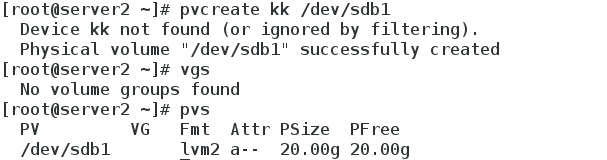

pvcreate /dev/sdb1

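pvs   ## list the physical volumes

After each step, run the matching check (pvs, vgs, lvs) on both server1 and server2 to confirm they stay in sync; clvmd propagates LVM changes between the nodes.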

vgcreate kk /dev/sdb1

vgs

lvcreate -L 4G -n cc kk

lvs

mkfs.ext4 /dev/kk/cc   ## format with ext4, a local (non-cluster) file system; only one node may mount it at a time
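Partitioning and LVM creation can be collected into one function run on a single node. A sketch assuming clvmd is running (with clustered locking, vgcreate is expected to mark new volume groups as clustered) and using non-interactive `parted` in place of the interactive fdisk session above; `make_shared_lv` and `DRY_RUN` are hypothetical helpers. It overwrites the partition table of DISK, so try it with `DRY_RUN=1` first.

```shell
# run: execute a command, or only print it when DRY_RUN is set (hypothetical switch).
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

# make_shared_lv DISK VG LV SIZE: create one LVM partition spanning DISK, then
# PV, VG and LV on it. Run on ONE node only; clvmd shares the LVM metadata.
make_shared_lv() {
    local disk="$1" vg="$2" lv="$3" size="$4"
    # msdos label, one primary partition from 1 MB to the end, flagged as LVM (8e).
    run parted -s "$disk" mklabel msdos mkpart primary 1 100% set 1 lvm on
    run partprobe "$disk"
    run pvcreate "${disk}1"
    run vgcreate "$vg" "${disk}1"
    run lvcreate -L "$size" -n "$lv" "$vg"
}
```

`DRY_RUN=1 make_shared_lv /dev/sdb kk cc 4G` prints the same pvcreate/vgcreate/lvcreate commands used above; on server2, `partprobe /dev/sdb` may be needed before the new partition shows up.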

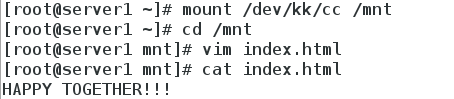

mount /dev/kk/cc /mnt   ## mount the file system on /mnt

cd /mnt

vim index.html

HAPPY TOGETHER!!!

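Check the services with clustat, then stop apache (clusvcadm -d apache).

In luci, add a file system resource named webdata for /dev/kk/cc, mounted on /var/www/html.

Add it to the apache service group, before the httpd script.

Start apache again (clusvcadm -e apache).

Check the mount with df, and check httpd:

/etc/init.d/httpd status

On the physical host: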

curl 172.25.20.200

HAPPY TOGETHER!!!


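### GFS2: a file system that relies on the cluster

It lets several nodes mount and write to the same file system at the same time.

On server1:

mkfs.gfs2 -p lock_dlm -j 3 -t westos-kk:mygfs2 /dev/kk/cc   ## format as GFS2: DLM locking, 3 journals (one per node that mounts it), lock table clustername:fsname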
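The cluster-name part of the `-t` lock table must match the cluster name in cluster.conf, or the file system will not mount. A sketch that builds the command from cluster.conf so the name cannot be mistyped; `gfs2_mkfs_cmd` is a hypothetical helper that reads the name with a simple text match rather than an XML parser, and it only prints the command.

```shell
# gfs2_mkfs_cmd DEVICE FSNAME JOURNALS [CLUSTER_CONF]: print (not run) the
# mkfs.gfs2 command for DEVICE, taking the cluster name for the lock table
# ("clustername:fsname") from cluster.conf. Hypothetical helper, text match only.
gfs2_mkfs_cmd() {
    local dev="$1" fsname="$2" journals="$3" conf="${4:-/etc/cluster/cluster.conf}" cluster
    cluster="$(sed -n 's/.*<cluster [^>]*name="\([^"]*\)".*/\1/p' "$conf")"
    if [ -z "$cluster" ]; then
        echo "gfs2_mkfs_cmd: no cluster name found in $conf" >&2
        return 1
    fi
    # lock_dlm: cluster-wide locking; one journal per node that will mount it.
    echo "mkfs.gfs2 -p lock_dlm -j $journals -t $cluster:$fsname $dev"
}
```

On server1, `gfs2_mkfs_cmd /dev/kk/cc mygfs2 3` should print the same command as above; review it before running it.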

clustat

On each node, add the file system to /etc/fstab:

vim /etc/fstab

/dev/kk/cc /var/www/html gfs2 _netdev 0 0

umount /mnt

mount -a
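An fstab edit that is safe to repeat on every node can be sketched as follows; `add_fstab_entry` is a hypothetical helper that compares entries after collapsing whitespace, so running it twice does not duplicate the line.

```shell
# add_fstab_entry LINE [FSTAB]: append LINE to fstab unless an equivalent entry
# (same fields, spacing ignored) is already present. Hypothetical helper.
add_fstab_entry() {
    local line="$1" fstab="${2:-/etc/fstab}" want
    # Normalise whitespace so "a  b" and "a b" compare equal.
    want="$(printf '%s\n' "$line" | awk '{ $1 = $1; print }')"
    if awk '{ $1 = $1; print }' "$fstab" | grep -qxF -- "$want"; then
        return 0    # already there, nothing to do
    fi
    printf '%s\n' "$line" >> "$fstab"
}
```

Run `add_fstab_entry '/dev/kk/cc /var/www/html gfs2 _netdev 0 0'` on server1 and server2, then `mount -a`. The `_netdev` option marks the file system as network-dependent, so it is mounted only after networking (and thus the iSCSI disk) is up.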

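In luci:

Remove the webdata file system resource from the apache service; the GFS2 volume is now mounted through /etc/fstab instead.

Start apache.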

cd /var/www/html

vim index.html

HAPPY APRIL!!!

On the physical host:

curl 172.25.20.200

HAPPY APRIL!!!

On server1:

clusvcadm -r apache -m server1   ## relocate the apache service to server1

Then run curl on the physical host again:

[root@foundation20 ~]# curl 172.25.20.200

HAPPY APRIL!!!

## A few more GFS2 operations

[root@server1 ~]# gfs2_tool sb /dev/kk/cc all

mh_magic = 0x01161970

mh_type = 1

mh_format = 100

sb_fs_format = 1801

sb_multihost_format = 1900

sb_bsize = 4096

sb_bsize_shift = 12

no_formal_ino = 2

no_addr = 23

no_formal_ino = 1

no_addr = 22

sb_lockproto = lock_dlm

sb_locktable = westos-kk:mygfs2

uuid = 33980b0a-66cc-fcfc-551d-b6542e5e3d18

[root@server1 ~]# gfs2_tool journals /dev/kk/cc

journal2 - 128MB

journal1 - 128MB

journal0 - 128MB

3 journal(s) found.

[root@server1 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup-lv_root 19G 1.1G 17G 7% /

tmpfs 499M 32M 468M 7% /dev/shm

/dev/sda1 485M 33M 427M 8% /boot

/dev/mapper/kk-cc 4.0G 388M 3.7G 10% /var/www/html

[root@server1 ~]# gfs2_jadd -j 3 /dev/kk/cc

Filesystem: /var/www/html

Old Journals 3

New Journals 6

[root@server1 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup-lv_root 19G 1.1G 17G 7% /

tmpfs 499M 32M 468M 7% /dev/shm

/dev/sda1 485M 33M 427M 8% /boot

/dev/mapper/kk-cc 4.0G 776M 3.3G 19% /var/www/html
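Each GFS2 journal is preallocated, so adding journals consumes space immediately. With 128 MB per journal as listed by `gfs2_tool journals`, the arithmetic lines up with `df`: 3 × 128 = 384 MiB against the 388M used right after formatting, and 6 × 128 = 768 MiB against 776M after `gfs2_jadd -j 3`; the small remainder is presumably other file system metadata. Since every node that mounts the file system needs its own journal, the journal count also caps how many nodes can mount it at once. A trivial check (`journal_mib` is a hypothetical helper):

```shell
# journal_mib JOURNALS SIZE_MIB: space preallocated by GFS2 journals, in MiB.
journal_mib() { echo $(( $1 * $2 )); }

journal_mib 3 128   # three journals after mkfs.gfs2 -j 3   → 384
journal_mib 6 128   # six journals after gfs2_jadd -j 3     → 768
```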

[root@server1 ~]# lvs

LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert

lv_root VolGroup -wi-ao---- 18.54g

lv_swap VolGroup -wi-ao---- 992.00m

cc kk -wi-ao---- 4.00g

[root@server1 ~]# lvextend -L +1G /dev/kk/cc

Extending logical volume cc to 5.00 GiB

Logical volume cc successfully resized

[root@server1 ~]# lvs

LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert

lv_root VolGroup -wi-ao---- 18.54g

lv_swap VolGroup -wi-ao---- 992.00m

cc kk -wi-ao---- 5.00g

[root@server1 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup-lv_root 19G 1.1G 17G 7% /

tmpfs 499M 32M 468M 7% /dev/shm

/dev/sda1 485M 33M 427M 8% /boot

/dev/mapper/kk-cc 4.0G 776M 3.3G 19% /var/www/html

[root@server1 ~]# gfs2_grow /dev/kk/cc

FS: Mount Point: /var/www/html

FS: Device: /dev/dm-2

FS: Size: 1048575 (0xfffff)

FS: RG size: 65533 (0xfffd)

DEV: Size: 1310720 (0x140000)

The file system grew by 1024MB.

gfs2_grow complete.

[root@server1 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup-lv_root 19G 1.1G 17G 7% /

tmpfs 499M 32M 468M 7% /dev/shm

/dev/sda1 485M 33M 427M 8% /boot

/dev/mapper/kk-cc 5.0G 776M 4.3G 16% /var/www/html
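In the gfs2_grow output, `FS: Size` and `DEV: Size` count file system blocks of `sb_bsize` = 4096 bytes. Converting them shows why `df` still reported 4.0G right after `lvextend`: the logical volume had already grown to 5 GiB, but the file system only used the new space once `gfs2_grow` ran. A small conversion sketch (`blocks_to_mib` is a hypothetical helper):

```shell
# blocks_to_mib BLOCKS [BLOCK_SIZE]: convert a block count to MiB (integer division).
# BLOCK_SIZE defaults to 4096, the sb_bsize reported by gfs2_tool sb above.
blocks_to_mib() { echo $(( $1 * ${2:-4096} / 1048576 )); }

blocks_to_mib 1048575                    # FS: Size before growing   → 4095 (just under 4 GiB)
blocks_to_mib 1310720                    # DEV: Size after lvextend  → 5120 (5 GiB)
blocks_to_mib $(( 1310720 - 1048575 ))   # added space               → 1024
```

The last value matches "The file system grew by 1024MB."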

[root@server1 ~]# df -H

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup-lv_root 20G 1.2G 18G 7% /

tmpfs 523M 33M 490M 7% /dev/shm

/dev/sda1 508M 35M 448M 8% /boot

/dev/mapper/kk-cc 5.4G 814M 4.6G 16% /var/www/html
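`df -h` prints sizes in powers of 1024 and `df -H` in powers of 1000, which is why the same volume appears as 5.0G and 5.4G. A small conversion sketch (`to_si` is a hypothetical helper):

```shell
# to_si VALUE POWER: convert VALUE in binary units (POWER=2 for MiB, 3 for GiB),
# as printed by df -h, to decimal units (MB, GB) as printed by df -H.
to_si() { awk -v v="$1" -v p="$2" 'BEGIN { printf "%.2f\n", v * (1024 / 1000) ^ p }'; }

to_si 5 3      # → 5.37    (the 5.0G volume, shown by df -H as 5.4G)
to_si 776 2    # → 813.69  (Used 776M, shown by df -H as 814M)
```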