Synchronizing data with Flink and Canal

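MySQL is the most common database in enterprise operations, but it has a weakness: once a table reaches tens of millions of rows, operations on it become very slow.

If we then need to display that data in real time, it is a disaster for MySQL, which at the same time also has to serve several developers and users.

After some research, we therefore decided to synchronize the MySQL data to HBase in real time.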

The architectures we started with:

MySQL --- Logstash --- Kafka --- Spark Streaming --- HBase --- Web

MySQL --- Sqoop --- HBase --- Web

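But whether we used Logstash or Sqoop, we could not avoid an awkward problem: both have to query MySQL while importing the data. Logstash's JDBC input pulls rows by running a SQL statement against MySQL, and Sqoop imports tables by running SELECT queries over JDBC.

Either way, the data has to be queried out of MySQL before it can be synchronized, and that query load is added on top of MySQL's normal workload.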

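So the real question is: is there a way to synchronize MySQL data to HBase in real time without putting query load on MySQL?

There is: use Canal or Maxwell to parse MySQL's binlog.

The architecture then changes to:

MySQL --- Canal --- Kafka --- Flink --- HBase --- Web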

Development

Step 1: Enable the MySQL binlog

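The MySQL binlog records everything that changes the database (inserts, updates, deletes and so on); queries such as SELECT or SHOW are not recorded. It is mainly used for primary/replica replication and for incremental (point-in-time) recovery.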
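With the ROW format configured below, each change is logged as row images rather than as a statement. The toy model below (plain Java; `RowImages` and `RowChange` are hypothetical names, not MySQL's actual event layout) shows the idea: an INSERT carries only the new row, a DELETE only the old row, and an UPDATE both, which is why a consumer of DELETE events has to read the before image.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

// Toy model of ROW-format binlog content: each changed row carries a
// "before" image, an "after" image, or both.
final class RowImages {
    enum Kind { INSERT, UPDATE, DELETE }

    static final class RowChange {
        final Kind kind;
        final Map<String, String> before; // empty for INSERT
        final Map<String, String> after;  // empty for DELETE

        RowChange(Kind kind, Map<String, String> before, Map<String, String> after) {
            this.kind = kind;
            this.before = before;
            this.after = after;
        }
    }

    static RowChange insert(Map<String, String> row) {
        return new RowChange(Kind.INSERT, Collections.<String, String>emptyMap(), row);
    }

    static RowChange update(Map<String, String> oldRow, Map<String, String> newRow) {
        return new RowChange(Kind.UPDATE, oldRow, newRow);
    }

    static RowChange delete(Map<String, String> row) {
        return new RowChange(Kind.DELETE, row, Collections.<String, String>emptyMap());
    }

    // The image that identifies the row: a DELETE has no after image, so use the before image.
    static Map<String, String> identifyingImage(RowChange change) {
        return change.kind == Kind.DELETE ? change.before : change.after;
    }

    public static void main(String[] args) {
        Map<String, String> row = new LinkedHashMap<>();
        row.put("uid", "18");
        row.put("uname", "spark");
        RowChange d = delete(row);
        System.out.println(d.kind + " after=" + d.after + " key image=" + identifyingImage(d));
    }
}
```

Reads never appear in this model at all, matching the point that SELECT and SHOW are not logged.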

MySQL only generates binlog files when the log-bin option is enabled; the files then look like this:

```
-rw-rw---- 1 mysql mysql   669 Aug 10 21:29 mysql-bin.000001
-rw-rw---- 1 mysql mysql   126 Aug 10 22:06 mysql-bin.000002
-rw-rw---- 1 mysql mysql 11799 Aug 15 18:17 mysql-bin.000003
```

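Edit /etc/my.cnf and add the following (the explanations are comments on their own lines, so they do not become part of the option values):

```ini
[mysqld]
# Path and base name of the binlog files
log-bin=/var/lib/mysql/mysql-bin
# Log each modified row (row-based logging)
binlog-format=ROW
# ID of this server (must be unique within a cluster)
server_id=1
```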
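Before restarting MySQL, the three settings can be checked mechanically. This is a minimal sketch with hypothetical names (`BinlogConfigCheck`, `readMysqldSection`, `problems`); it reads only the `[mysqld]` section of the text it is given, ignores `!include` directives, and relies on MySQL treating `-` and `_` in option names as equivalent.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class BinlogConfigCheck {

    // Reads key=value lines of the [mysqld] section. Option names are normalised to '_'
    // because MySQL accepts '-' and '_' interchangeably; a bare option maps to "".
    static Map<String, String> readMysqldSection(String cnf) {
        Map<String, String> options = new HashMap<>();
        boolean inMysqld = false;
        for (String raw : cnf.split("\r?\n")) {
            String line = raw.trim();
            if (line.isEmpty() || line.startsWith("#") || line.startsWith(";")) continue;
            if (line.startsWith("[")) {
                inMysqld = line.equals("[mysqld]");
                continue;
            }
            if (!inMysqld) continue;
            int eq = line.indexOf('=');
            String key = (eq < 0 ? line : line.substring(0, eq)).trim().replace('-', '_');
            String value = eq < 0 ? "" : line.substring(eq + 1).trim();
            options.put(key, value);
        }
        return options;
    }

    // Lists what is missing; an empty list means log-bin, ROW format and a non-zero server_id are set.
    static List<String> problems(Map<String, String> options) {
        List<String> found = new ArrayList<>();
        if (!options.containsKey("log_bin")) found.add("log-bin is not set");
        if (!"ROW".equalsIgnoreCase(options.get("binlog_format"))) found.add("binlog-format is not ROW");
        String id = options.get("server_id");
        if (id == null || !id.matches("[1-9][0-9]*")) found.add("server_id is missing or 0");
        return found;
    }

    public static void main(String[] args) {
        String cnf = "[mysqld]\nlog-bin=/var/lib/mysql/mysql-bin\nbinlog-format=ROW\nserver_id=1\n";
        System.out.println(problems(readMysqldSection(cnf)));
    }
}
```

To check a real server, pass the contents of /etc/my.cnf (read with `java.nio.file.Files`) instead of the inline string.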

配置完毕之后,登录mysql,输入如下命令:

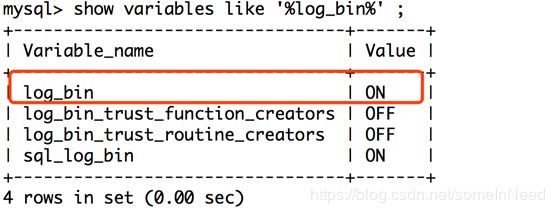

show variables like ‘%log_bin%’

出现如下形式,代表binlog开启;
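The same check can be scripted over JDBC. A sketch, assuming MySQL Connector/J is on the classpath and the JDBC URL, user and password are passed as arguments (for example `jdbc:mysql://hadoop01:3306/`); `BinlogStatus` and its methods are hypothetical names. The decision is kept in a pure method so it can be tested without a database.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.LinkedHashMap;
import java.util.Map;

final class BinlogStatus {

    // Runs the statement above and returns Variable_name -> Value.
    static Map<String, String> logBinVariables(Connection conn) throws SQLException {
        Map<String, String> vars = new LinkedHashMap<>();
        try (Statement st = conn.createStatement();
             ResultSet rs = st.executeQuery("SHOW VARIABLES LIKE '%log_bin%'")) {
            while (rs.next()) {
                vars.put(rs.getString(1), rs.getString(2));
            }
        }
        return vars;
    }

    // The binlog is enabled when the log_bin variable is ON.
    static boolean binlogEnabled(Map<String, String> vars) {
        return "ON".equalsIgnoreCase(vars.get("log_bin"));
    }

    public static void main(String[] args) throws SQLException {
        // args: JDBC URL, user, password
        try (Connection conn = DriverManager.getConnection(args[0], args[1], args[2])) {
            Map<String, String> vars = logBinVariables(conn);
            System.out.println(vars);
            System.out.println(binlogEnabled(vars) ? "binlog is enabled" : "binlog is NOT enabled");
        }
    }
}
```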

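Step 2: Install Canal

About Canal

Canal is an open-source project from Alibaba, written entirely in Java. It parses a database's incremental log and provides subscription to and consumption of the incremental data; it currently mainly supports MySQL (and also MariaDB).

Origin: Alibaba's B2B business was once deployed in two data centers, one in Hangzhou and one in the US, which created a need for cross-data-center synchronization. Early database synchronization captured incremental changes mainly through triggers. From 2010 on, Alibaba companies gradually moved to parsing database logs to obtain incremental changes for synchronization, which gave rise to incremental subscription and consumption services and opened a new chapter.

How it works

The principle is fairly simple: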

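1. Canal implements the MySQL slave interaction protocol, presents itself to the MySQL master as a slave, and sends it a dump request.

2. The MySQL master receives the dump request and starts pushing the binary log to the slave (that is, to Canal).

3. Canal parses the binary log objects (which arrive as a raw byte stream).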
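Steps 1 and 2 can be pictured with a toy model in plain Java (all names hypothetical, not Canal's code): the master keeps an append-only log; a replica sends a dump request with the position it wants to resume from, receives the backlog, and from then on is pushed every new event without asking again. Real binlog positions are a (file name, byte offset) pair rather than an event count, and the real exchange is a network protocol.

```java
import java.util.ArrayList;
import java.util.List;

final class DumpModel {

    interface Replica {
        void onEvent(String event, int position);
    }

    static final class Master {
        private final List<String> log = new ArrayList<>();
        private final List<Replica> replicas = new ArrayList<>();

        // Append to the log and push the new event to every registered replica.
        void write(String event) {
            log.add(event);
            for (Replica r : replicas) r.onEvent(event, log.size());
        }

        // Dump request: send everything after 'position', then keep pushing new events.
        void dump(Replica replica, int position) {
            for (int i = position; i < log.size(); i++) replica.onEvent(log.get(i), i + 1);
            replicas.add(replica);
        }
    }

    // Stands in for Canal: records events and the position reached.
    static final class FakeSlave implements Replica {
        final List<String> received = new ArrayList<>();
        int position;

        public void onEvent(String event, int position) {
            received.add(event);
            this.position = position;
        }
    }

    public static void main(String[] args) {
        Master master = new Master();
        master.write("INSERT users uid=1");
        master.write("UPDATE users uid=1");
        FakeSlave canal = new FakeSlave();
        master.dump(canal, 1);              // resume after the first event
        master.write("DELETE users uid=1"); // pushed without a new request
        System.out.println(canal.received + " position=" + canal.position);
    }
}
```

Storing the last position is what lets a real client resume after a restart instead of re-reading the whole log.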

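Using Canal to parse the binlog and send the data to Kafka

(1) Unpack the installation package canal.deployer-1.0.23.tar.gz (create /export/servers/canal first, because tar -C does not create the target directory):

tar -zxvf canal.deployer-1.0.23.tar.gz -C /export/servers/canal

Edit the configuration file of the example instance, which holds the MySQL connection settings (master address, username and password):

vim /export/servers/canal/conf/example/instance.properties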

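(2) Write the Canal client code

Installing Canal alone is not enough. Architecturally, Canal acts as a MySQL "replica"; by itself it does not forward the parsed binlog to Kafka in real time, so we have to write a Canal client.

Canal already provides example code that only needs small changes for our requirements.

Canal's example code:

https://github.com/alibaba/canal/wiki/ClientExample

That example parses the binlog but contains no logic for sending the data to Kafka, so we add that ourselves.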
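The client relies on one contract: fetch a batch with getWithoutAck, acknowledge it only after it has been handled (here: sent to Kafka), and roll back so that unacknowledged batches are delivered again. That contract can be modelled without a Canal server. `InMemorySource`, `Batch` and `Handler` below are hypothetical stand-ins that mimic those semantics, not Canal's classes; the model assumes batches are acked in order.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class AckLoop {

    static final class Batch {
        final long id;
        final List<String> entries;
        Batch(long id, List<String> entries) { this.id = id; this.entries = entries; }
    }

    // Remembers the last acked position; rollback() rewinds delivery to it.
    static final class InMemorySource {
        private final List<String> log = new ArrayList<>();
        private final Map<Long, Integer> batchEnd = new HashMap<>();
        private int acked;   // entries confirmed by the client
        private int fetched; // entries handed out so far
        private long nextId = 1;

        void append(String entry) { log.add(entry); }

        Batch getWithoutAck(int batchSize) {
            if (fetched >= log.size()) return new Batch(-1, new ArrayList<String>());
            int end = Math.min(fetched + batchSize, log.size());
            long id = nextId++;
            batchEnd.put(id, end);
            Batch batch = new Batch(id, new ArrayList<>(log.subList(fetched, end)));
            fetched = end;
            return batch;
        }

        void ack(long batchId) {
            Integer end = batchEnd.remove(batchId);
            if (end != null) acked = end;
        }

        void rollback() {
            fetched = acked;
            batchEnd.clear();
        }
    }

    interface Handler { void handle(List<String> entries) throws Exception; }

    // Handles batches until the source is empty (the real client sleeps and polls again).
    static void drain(InMemorySource source, int batchSize, Handler handler) {
        while (true) {
            Batch batch = source.getWithoutAck(batchSize);
            if (batch.id == -1) return;
            try {
                handler.handle(batch.entries);
                source.ack(batch.id);   // confirm only after the entries were sent on
            } catch (Exception e) {
                source.rollback();      // unacked entries will be delivered again
            }
        }
    }

    public static void main(String[] args) {
        InMemorySource source = new InMemorySource();
        for (String e : new String[] {"e1", "e2", "e3"}) source.append(e);
        final List<String> sent = new ArrayList<>();
        final boolean[] failOnce = {true};
        drain(source, 2, new Handler() {
            public void handle(List<String> entries) throws Exception {
                if (failOnce[0] && entries.contains("e3")) {
                    failOnce[0] = false;
                    throw new Exception("Kafka unavailable");
                }
                sent.addAll(entries);
            }
        });
        System.out.println(sent);
    }
}
```

The failed batch is retried rather than lost, so delivery is at-least-once: after a crash between sending and acking, a batch can reach Kafka twice, and the HBase writes downstream should tolerate that.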

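Maven dependencies:

```xml
<dependency>
    <groupId>com.alibaba.otter</groupId>
    <artifactId>canal.client</artifactId>
    <version>1.0.25</version>
</dependency>
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka_2.11</artifactId>
    <version>0.9.0.1</version>
</dependency>
```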

Canal code:

```java
import com.alibaba.otter.canal.client.CanalConnector;
import com.alibaba.otter.canal.client.CanalConnectors;
import com.alibaba.otter.canal.protocol.CanalEntry;
import com.alibaba.otter.canal.protocol.Message;
import com.google.protobuf.InvalidProtocolBufferException;

import java.net.InetSocketAddress;
import java.util.ArrayList;
import java.util.List;
import java.util.UUID;

/**
 * Created by angel;
 */
public class Canal_Client {

    public static void main(String[] args) {
        // TODO 1: connect to canal (server address, destination "example", user, password)
        CanalConnector connector = CanalConnectors.newSingleConnector(
                new InetSocketAddress("hadoop01", 11111), "example", "root", "root");
        int batchSize = 100;
        int emptyCount = 0;         // consecutive polls that returned no data
        int totalEmptyCount = 120;  // stop after this many empty polls in a row
        int batchCount = 0;         // non-empty batches handled so far (sent as rowNum)
        try {
            connector.connect();
            connector.subscribe(".*\\..*");  // every table in every database
            connector.rollback();            // re-deliver batches fetched but not acked last time
            while (emptyCount < totalEmptyCount) {
                Message message = connector.getWithoutAck(batchSize);
                long batchId = message.getId();
                int size = message.getEntries().size();
                if (batchId == -1 || size == 0) {
                    emptyCount++;
                    System.out.println("No data yet: " + emptyCount);
                    Thread.sleep(1000);
                } else {
                    emptyCount = 0;
                    batchCount++;
                    // TODO 2: parse the binlog entries
                    analysis(message.getEntries(), batchCount);
                }
                if (batchId != -1) {
                    connector.ack(batchId);  // confirm only after the batch has been handled
                }
            }
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            connector.disconnect();
        }
    }

    // TODO 3: separate inserts, deletes and updates and send them to Kafka
    public static void analysis(List<CanalEntry.Entry> entries, int rowNum) {
        for (CanalEntry.Entry entry : entries) {
            if (entry.getEntryType() == CanalEntry.EntryType.TRANSACTIONBEGIN
                    || entry.getEntryType() == CanalEntry.EntryType.TRANSACTIONEND) {
                continue;
            }
            try {
                CanalEntry.RowChange rowChange = CanalEntry.RowChange.parseFrom(entry.getStoreValue());
                CanalEntry.EventType eventType = rowChange.getEventType();
                CanalEntry.Header header = entry.getHeader();
                for (CanalEntry.RowData rowData : rowChange.getRowDatasList()) {
                    List<CanalEntry.Column> columns;
                    if (eventType == CanalEntry.EventType.DELETE) {
                        System.out.println("======= delete =======");
                        columns = rowData.getBeforeColumnsList();  // a deleted row only has a "before" image
                    } else if (eventType == CanalEntry.EventType.INSERT) {
                        System.out.println("======= insert =======");
                        columns = rowData.getAfterColumnsList();
                    } else {
                        System.out.println("======= update =======");
                        columns = rowData.getAfterColumnsList();
                    }
                    dataDetails(columns, header.getLogfileName(), header.getLogfileOffset(),
                            header.getSchemaName(), header.getTableName(), eventType.name(), rowNum);
                }
            } catch (InvalidProtocolBufferException e) {
                e.printStackTrace();
            }
        }
    }

    // The original listing breaks off inside this method. This body is a reconstruction
    // (an assumption) that produces the '#CS#'-delimited line the Flink job splits:
    // fileName, offset, dbName, tableName, eventType, columns, rowNum.
    public static void dataDetails(List<CanalEntry.Column> columns, String fileName, long offset,
                                   String dbName, String tableName, String eventType, int rowNum) {
        List<List<String>> cols = new ArrayList<>();
        for (CanalEntry.Column column : columns) {
            List<String> col = new ArrayList<>();
            col.add(column.getName());
            col.add(column.getValue());
            col.add(String.valueOf(column.getUpdated()));
            cols.add(col);  // renders as [name, value, updated]
        }
        String data = fileName + "#CS#" + offset + "#CS#" + dbName + "#CS#" + tableName
                + "#CS#" + eventType + "#CS#" + cols + "#CS#" + rowNum;
        // message key: a random UUID (fine for the single-partition topic created below)
        KafkaProducer.sendMsg("canal", UUID.randomUUID().toString(), data);
    }
}
```
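The Flink job below splits each Kafka message on `#CS#` into seven fields (file name, offset, database, table, event type, columns, row number), and its comments show the columns field as `[[uid, 22, true], [uname, spark, true], ...]`. Here is a sketch of a matching encoder and decoder in plain Java, so both sides of the format can be tested without Kafka; `BinlogLine` and its methods are hypothetical names, and the columns layout is taken from those comments.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

// Codec for the '#CS#'-delimited line exchanged between the Canal client and Flink.
final class BinlogLine {
    static final String SEP = "#CS#";

    final String fileName, offset, dbName, tableName, eventType, columns, rowNum;

    BinlogLine(String fileName, String offset, String dbName, String tableName,
               String eventType, String columns, String rowNum) {
        this.fileName = fileName;
        this.offset = offset;
        this.dbName = dbName;
        this.tableName = tableName;
        this.eventType = eventType;
        this.columns = columns;
        this.rowNum = rowNum;
    }

    // Renders columns as [[name, value, updated], ...].
    static String columnsToString(List<String[]> cols) {
        List<List<String>> rendered = new ArrayList<>();
        for (String[] c : cols) rendered.add(Arrays.asList(c));
        return rendered.toString();
    }

    String encode() {
        return fileName + SEP + offset + SEP + dbName + SEP + tableName
                + SEP + eventType + SEP + columns + SEP + rowNum;
    }

    // Like the Flink job, missing trailing fields become "".
    static BinlogLine decode(String line) {
        String[] v = line.split(Pattern.quote(SEP), -1);
        String[] f = new String[7];
        for (int i = 0; i < f.length; i++) f[i] = i < v.length ? v[i] : "";
        return new BinlogLine(f[0], f[1], f[2], f[3], f[4], f[5], f[6]);
    }

    public static void main(String[] args) {
        List<String[]> cols = new ArrayList<>();
        cols.add(new String[] {"uid", "22", "true"});
        cols.add(new String[] {"uname", "spark", "true"});
        String line = new BinlogLine("mysql-bin.000001", "7470", "test", "users", "INSERT",
                columnsToString(cols), "1").encode();
        System.out.println(line);
        System.out.println(decode(line).columns);
    }
}
```

A plain delimiter keeps the format simple, but a column value that itself contains `#CS#`, `,` or `]` would corrupt the message; a structured format such as JSON avoids that at the cost of a parser on both sides.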

Kafka code:

```java
import kafka.javaapi.producer.Producer;
import kafka.producer.KeyedMessage;
import kafka.producer.ProducerConfig;
import kafka.serializer.StringEncoder;

import java.util.Properties;

/**
 * Created by angel;
 */
public class KafkaProducer {

    // One producer shared by all sends; creating a new producer per message leaks connections.
    private static Producer<String, String> producer;

    public static synchronized void sendMsg(String topic, String sendKey, String data) {
        if (producer == null) {
            producer = createProducer();
        }
        producer.send(new KeyedMessage<String, String>(topic, sendKey, data));
    }

    public static Producer<String, String> createProducer() {
        Properties properties = new Properties();
        properties.put("zookeeper.connect", "hadoop01:2181");
        properties.put("metadata.broker.list", "hadoop01:9092");  // brokers to send to
        properties.put("serializer.class", StringEncoder.class.getName());
        return new Producer<String, String>(new ProducerConfig(properties));
    }
}
```
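The message key passed to sendMsg matters once the topic has more than one partition: Kafka only guarantees ordering within a partition, and a keyed message always goes to the partition derived from its key. If the key were random, an UPDATE and a later DELETE of the same row could land in different partitions and be applied to HBase out of order. The sketch below illustrates keying by the row's identity; `KeyPartitioning` and `rowKey` are hypothetical, and the hash is a plain `String.hashCode`, not the hash function Kafka's own partitioner uses.

```java
// Illustration only: hash-of-key partition choice, so all changes to one row share a partition.
final class KeyPartitioning {

    static int partitionFor(String key, int numPartitions) {
        return (key.hashCode() & 0x7fffffff) % numPartitions; // non-negative modulo
    }

    // Key = db_table_primaryKey, the same string the Flink job uses as the HBase rowkey.
    static String rowKey(String db, String table, String primaryKey) {
        return db + "_" + table + "_" + primaryKey;
    }

    public static void main(String[] args) {
        String key = rowKey("test", "users", "18");
        for (String event : new String[] {"INSERT", "UPDATE", "DELETE"}) {
            System.out.println(event + " " + key + " -> partition " + partitionFor(key, 3));
        }
    }
}
```

With the single-partition topic created in the test below, ordering is total and the key does not matter.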

Testing the Canal code

Start Kafka and create the topic:

```shell
/export/servers/kafka/bin/kafka-server-start.sh /export/servers/kafka/config/server.properties >/dev/null 2>&1 &
/export/servers/kafka/bin/kafka-topics.sh --create --zookeeper hadoop01:2181 --replication-factor 1 --partitions 1 --topic canal
```

Start a Kafka console consumer to watch whether Canal parses the binlog:

```shell
/export/servers/kafka/bin/kafka-console-consumer.sh --zookeeper hadoop01:2181 --from-beginning --topic canal
```

Start MySQL: service mysqld start

Start Canal: canal/bin/startup.sh, then run the Canal_Client program

Log in to MySQL with mysql -u <user> -p (it prompts for the password), then insert, update and delete some rows.

Using Flink to turn the data in Kafka into HBase operations (Put/Delete) and store it in HBase in real time

```scala
import java.util
import java.util.Properties

import org.apache.commons.lang3.StringUtils
import org.apache.flink.api.scala._
import org.apache.flink.configuration.Configuration
import org.apache.flink.runtime.state.filesystem.FsStateBackend
import org.apache.flink.streaming.api.{CheckpointingMode, TimeCharacteristic}
import org.apache.flink.streaming.api.functions.sink.RichSinkFunction
import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer09
import org.apache.flink.streaming.util.serialization.SimpleStringSchema
import org.apache.hadoop.hbase.{HBaseConfiguration, HColumnDescriptor, HTableDescriptor, TableName}
import org.apache.hadoop.hbase.client.{Connection, ConnectionFactory, Delete, Put, Table}
import org.apache.hadoop.hbase.util.Bytes

/**
 * Created by angel;
 */
object DataExtraction {

  // 1. Connection settings
  val zkCluster = "hadoop01,hadoop02,hadoop03"
  val kafkaCluster = "hadoop01:9092,hadoop02:9092,hadoop03:9092"
  val kafkaTopicName = "canal"
  val hbasePort = "2181"
  val tableName: TableName = TableName.valueOf("canal")
  val columnFamily = "info"

  def main(args: Array[String]): Unit = {
    // 2. Create the streaming environment
    val env = StreamExecutionEnvironment.getExecutionEnvironment
    env.setStateBackend(new FsStateBackend("hdfs://hadoop01:9000/flink-checkpoint/checkpoint/"))
    env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)
    // emit watermarks every 2 s
    env.getConfig.setAutoWatermarkInterval(2000)
    // exactly-once checkpoints every 6 s
    env.enableCheckpointing(6000, CheckpointingMode.EXACTLY_ONCE)
    System.setProperty("hadoop.home.dir", "/")

    // 3. Create the Kafka source
    val properties = new Properties()
    properties.setProperty("bootstrap.servers", kafkaCluster)
    properties.setProperty("zookeeper.connect", zkCluster)
    properties.setProperty("group.id", kafkaTopicName)
    val kafka09 = new FlinkKafkaConsumer09[String](kafkaTopicName, new SimpleStringSchema(), properties)

    // 4. Add the source
    val text = env.addSource(kafka09).setParallelism(1)

    // 5. Split each '#CS#'-delimited line into a Canal record (missing fields become "")
    val values = text.map { line =>
      val fields = line.split("#CS#")
      def field(i: Int): String = if (fields.length > i) fields(i) else ""
      Canal(field(0), field(1), field(2), field(3), field(4), field(5), field(6))
    }

    // 6. Write to HBase through a sink that reuses one connection per parallel instance
    values.addSink(new HBaseSink)
    env.execute()
  }

  // Strip the outer brackets: "[[a, 1, true], [b, 2, true]]" -> "[a, 1, true], [b, 2, true]"
  def packaging_str_list(str_list: String): String =
    if (str_list.length >= 2) str_list.substring(1, str_list.length - 1) else ""

  // Primary key = value of the first column: "[uid, 13, true], ..." -> "13"
  def getPrimaryKey(columns: String): String = {
    val arrays: Array[String] = StringUtils.substringsBetween(columns, "[", "]")
    arrays(0).split(",")(1).trim
  }

  // Columns to write, skipping index 0 (the primary key is already in the rowkey):
  // UPDATE keeps only columns whose "updated" flag is true, INSERT keeps all of them
  def getTriggerColumns(columns: String, eventType: String): util.ArrayList[UpdateFields] = {
    val arrays: Array[String] = StringUtils.substringsBetween(columns, "[", "]")
    val list = new util.ArrayList[UpdateFields]()
    for (index <- 1 until arrays.length) {
      val split = arrays(index).split(",").map(_.trim)
      val write = eventType match {
        case "UPDATE" => split(2).toBoolean
        case "INSERT" => true
        case _ => false
      }
      if (write) list.add(UpdateFields(split(0), split(1)))
    }
    list
  }

  // Opens one HBase connection in open(), creates the table if needed,
  // turns DELETE into a Delete and INSERT/UPDATE into a Put, and closes everything in close()
  class HBaseSink extends RichSinkFunction[Canal] {
    @transient private var connection: Connection = _
    @transient private var table: Table = _

    override def open(parameters: Configuration): Unit = {
      val config = HBaseConfiguration.create()
      config.set("hbase.zookeeper.quorum", zkCluster)
      config.set("hbase.master", "hadoop01:60000")
      config.set("hbase.zookeeper.property.clientPort", hbasePort)
      config.setInt("hbase.rpc.timeout", 20000)
      config.setInt("hbase.client.operation.timeout", 30000)
      config.setInt("hbase.client.scanner.timeout.period", 200000)
      connection = ConnectionFactory.createConnection(config)
      val admin = connection.getAdmin
      if (!admin.tableExists(tableName)) {
        val hTableDescriptor = new HTableDescriptor(tableName)
        hTableDescriptor.addFamily(new HColumnDescriptor(columnFamily))
        admin.createTable(hTableDescriptor)
      }
      admin.close()
      table = connection.getTable(tableName)
    }

    override def invoke(line: Canal): Unit = {
      // [[uid, 22, true], [uname, spark, true]] -> [uid, 22, true], [uname, spark, true]
      val array_columns = packaging_str_list(line.columns)
      if (!array_columns.contains("[")) return  // no column data, nothing to write
      // All MySQL tables share one HBase table; the db_table prefix keeps their rows apart
      val rowkey = line.dbName + "_" + line.tableName + "_" + getPrimaryKey(array_columns)
      if (line.eventType == "DELETE") {
        table.delete(new Delete(Bytes.toBytes(rowkey)))
      } else {
        val triggerFields = getTriggerColumns(array_columns, line.eventType)
        if (triggerFields.size() > 0) {
          val put = new Put(Bytes.toBytes(rowkey))
          for (index <- 0 until triggerFields.size()) {
            val fields = triggerFields.get(index)
            put.addColumn(Bytes.toBytes(columnFamily), Bytes.toBytes(fields.key), Bytes.toBytes(fields.value))
          }
          table.put(put)
        }
      }
    }

    override def close(): Unit = {
      if (table != null) table.close()
      if (connection != null) connection.close()
    }
  }
}

// [uname, spark, true], [upassword, 11122221, true]
case class UpdateFields(key: String, value: String)

// (fileName, fileOffset, dbName, tableName, eventType, columns, rowNum)
case class Canal(fileName: String,
                 fileOffset: String,
                 dbName: String,
                 tableName: String,
                 eventType: String,
                 columns: String,
                 rowNum: String)
```
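The string handling in the Flink job is the part most likely to break, and it can be checked without a cluster. Below is a JDK-only Java port of the same rules (hypothetical class `ColumnParsing`; a regex stands in for `StringUtils.substringsBetween`): the first column is taken as the primary key, the rowkey is `db_table_primaryKey`, a DELETE removes the row, an INSERT writes all non-key columns, and an UPDATE writes only non-key columns flagged `true`. Values containing `,`, `[` or `]` would still break this format.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class ColumnParsing {
    // One innermost [...] group, e.g. "[uid, 22, true]"
    private static final Pattern GROUP = Pattern.compile("\\[([^\\[\\]]*)\\]");

    // "[[uid, 22, true], [uname, spark, true]]" -> [["uid","22","true"], ["uname","spark","true"]]
    static List<String[]> groups(String columns) {
        List<String[]> out = new ArrayList<>();
        Matcher m = GROUP.matcher(columns);
        while (m.find()) {
            String[] parts = m.group(1).split(",", -1);
            for (int i = 0; i < parts.length; i++) parts[i] = parts[i].trim();
            out.add(parts);
        }
        return out;
    }

    // First column is assumed to be the primary key.
    static String rowKey(String db, String table, List<String[]> cols) {
        return db + "_" + table + "_" + cols.get(0)[1];
    }

    // INSERT -> all non-key columns; UPDATE -> non-key columns whose "updated" flag is true.
    static Map<String, String> triggerColumns(List<String[]> cols, String eventType) {
        Map<String, String> out = new LinkedHashMap<>();
        for (int i = 1; i < cols.size(); i++) {
            String[] c = cols.get(i);
            boolean write = "INSERT".equals(eventType)
                    || ("UPDATE".equals(eventType) && Boolean.parseBoolean(c[2]));
            if (write) out.put(c[0], c[1]);
        }
        return out;
    }

    // Describes what the sink would do: "DELETE <rowkey>", "PUT <rowkey> {...}" or "SKIP".
    static String action(String db, String table, String eventType, String columns) {
        List<String[]> cols = groups(columns);
        if (cols.isEmpty()) return "SKIP";
        String key = rowKey(db, table, cols);
        if ("DELETE".equals(eventType)) return "DELETE " + key;
        Map<String, String> fields = triggerColumns(cols, eventType);
        return fields.isEmpty() ? "SKIP" : "PUT " + key + " " + fields;
    }

    public static void main(String[] args) {
        System.out.println(action("test", "users", "UPDATE",
                "[[uid, 22, true], [uname, spark, true], [upassword, 1111, false]]"));
    }
}
```

Because the rowkey is derived only from the primary key, replaying the same message (for example after a rollback upstream) rewrites the same cells, so duplicates are harmless here.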

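Testing the Flink-to-HBase program:

1. Start HBase

2. Open the HBase shell

3. Start the program

4. Check whether the data arrives in HBase in real time (for example with scan 'canal' in the HBase shell)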

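Packaging and deployment

Add the Maven build configuration:

1. Packaging the Java program

```xml
<build>
    <sourceDirectory>src/main/java</sourceDirectory>
    <testSourceDirectory>src/test/scala</testSourceDirectory>
    <plugins>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-compiler-plugin</artifactId>
            <version>2.5.1</version>
            <configuration>
                <source>1.7</source>
                <target>1.7</target>
            </configuration>
        </plugin>
        <plugin>
            <groupId>net.alchim31.maven</groupId>
            <artifactId>scala-maven-plugin</artifactId>
            <version>3.2.0</version>
            <executions>
                <execution>
                    <goals>
                        <goal>compile</goal>
                        <goal>testCompile</goal>
                    </goals>
                    <configuration>
                        <args>
                            <arg>-dependencyfile</arg>
                            <arg>${project.build.directory}/.scala_dependencies</arg>
                        </args>
                    </configuration>
                </execution>
            </executions>
        </plugin>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-surefire-plugin</artifactId>
            <version>2.18.1</version>
            <configuration>
                <useFile>false</useFile>
                <disableXmlReport>true</disableXmlReport>
                <includes>
                    <include>**/*Test.*</include>
                    <include>**/*Suite.*</include>
                </includes>
            </configuration>
        </plugin>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-shade-plugin</artifactId>
            <version>2.3</version>
            <executions>
                <execution>
                    <phase>package</phase>
                    <goals>
                        <goal>shade</goal>
                    </goals>
                    <configuration>
                        <filters>
                            <filter>
                                <artifact>*:*</artifact>
                                <excludes>
                                    <exclude>META-INF/*.SF</exclude>
                                    <exclude>META-INF/*.DSA</exclude>
                                    <exclude>META-INF/*.RSA</exclude>
                                </excludes>
                            </filter>
                        </filters>
                        <transformers>
                            <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                                <!-- fully qualified name of the Canal client's main class -->
                                <mainClass>canal.CanalClient</mainClass>
                            </transformer>
                        </transformers>
                    </configuration>
                </execution>
            </executions>
        </plugin>
    </plugins>
</build>
```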

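2. Packaging the Scala program

In the configuration above, change the source directory and the main class to:

```xml
<sourceDirectory>src/main/scala</sourceDirectory>
<mainClass>(the Scala driver class)</mainClass>
```

Maven packaging steps: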