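Kaggle: Digit Recognizer (Python implementation)

Using xgboost with some simple parameter tuning, accuracy can reach 97%.

The ranking is fairly low, so please don't mind the details; I'm a beginner just getting started.

For the theory behind xgboost, see Tianqi Chen's paper, XGBoost: A Scalable Tree Boosting System:

https://arxiv.org/pdf/1603.02754.pdf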

1、安装环境

这里使用anaconda(python 3.6)版本。

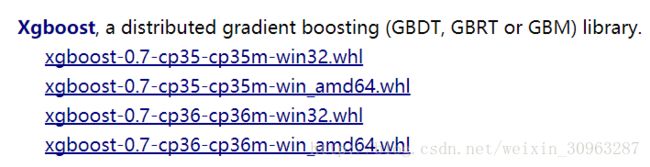

首先从https://www.lfd.uci.edu/~gohlke/pythonlibs/下载xgboost的whl文件

选择相应的版本。

打开cmd并cd到相应的文件夹下使用pip install xgboost‑0.7‑cp36‑cp36m‑win_amd64.whl

import xgboost验证xgboost是否安装成功
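To run the same check from a script instead of an interactive prompt, a minimal sketch could look like this. The helper name `is_installed` is made up for illustration; `importlib.util.find_spec` comes from the standard library.

```python
import importlib
import importlib.util


def is_installed(module_name):
    """Return True if Python can locate the named top-level module."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    if is_installed("xgboost"):
        # Import only after confirming the module can be found
        xgb = importlib.import_module("xgboost")
        print("xgboost version:", xgb.__version__)
    else:
        print("xgboost not found; repeat the pip install step")
```

If the version line is printed, the wheel was installed into the interpreter that ran the script.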

2. Code

    # -*- coding: utf-8 -*-
    import numpy as np
    import pandas as pd
    import xgboost as xgb
    from sklearn.metrics import accuracy_score
    # sklearn.cross_validation was removed in scikit-learn 0.20
    from sklearn.model_selection import train_test_split


    def createDataSet():
        # Load the Kaggle CSV files as NumPy arrays
        trainDataSet = np.array(pd.read_csv('train.csv'))
        testDataSet = np.array(pd.read_csv('test.csv'))
        # Column 0 of train.csv is the label; the remaining columns are pixels
        trainData = trainDataSet[:, 1:]
        trainLabels = trainDataSet[:, 0]
        testData = testDataSet
        return trainData, trainLabels, testData


    def getPredict(datas, labels):
        # Hold out 10% of the training data for validation
        x_train, x_test, y_train, y_test = train_test_split(datas, labels, test_size=0.1)
        param = {
            'booster': 'gbtree',
            'objective': 'multi:softmax',  # multi-class classification
            'num_class': 10,  # number of classes, used together with multi:softmax
            'gamma': 0.1,  # controls post-pruning; larger is more conservative, typically 0.1 or 0.2
            'max_depth': 12,  # tree depth; the larger, the easier it is to overfit
            'lambda': 2,  # L2 regularization on the weights; larger values make overfitting less likely
            'subsample': 0.8,  # random sampling of training samples
            'colsample_bytree': 0.7,  # column sampling when building each tree
            # min_child_weight defaults to 1. It is the minimum sum of h (the
            # second derivative) required in each leaf. For 0-1 classification
            # with imbalanced classes, if h is around 0.01, a value of 1 means
            # a leaf must contain at least 100 samples. This parameter strongly
            # affects the result: the smaller it is, the easier it is to overfit.
            'min_child_weight': 5,
            'silent': False,  # set to True to suppress output (newer xgboost releases use 'verbosity' instead)
            'learning_rate': 0.05,  # learning rate
            'seed': 1000
        }
        xgb_train = xgb.DMatrix(data=x_train, label=y_train)
        xgb_val = xgb.DMatrix(data=x_test, label=y_test)
        xgb_test = xgb.DMatrix(x_test)
        watchlist = [(xgb_train, 'train'), (xgb_val, 'val')]
        model = xgb.train(params=param,
                          dtrain=xgb_train,
                          num_boost_round=5000,  # upper limit on boosting rounds
                          evals=watchlist,
                          early_stopping_rounds=100  # stop if the validation metric has not improved for 100 rounds
                          )
        print('best_ntree_limit:', model.best_ntree_limit)
        # Save the model so that it can be loaded again below
        model.save_model('1.model')
        y_pred = model.predict(xgb_test)
        print(accuracy_score(y_test, y_pred))


    trainData, trainLabels, testData = createDataSet()
    getPredict(trainData, trainLabels)

    xgbPredict = xgb.DMatrix(testData)
    # After training, the saved model can be loaded back
    model = xgb.Booster()
    model.load_model('1.model')
    y_pred = model.predict(xgbPredict)
    print(y_pred)

    # Write the submission file
    with open('submission.csv', 'w', encoding='utf-8') as f:
        f.write('ImageId,Label\n')
        for i in range(len(y_pred)):
            f.write(str(i + 1) + ',' + str(int(y_pred[i])) + '\n')

3. Tuning summary

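xgboost parameter settings

max_depth: maximum depth of a tree. The default is 6; typical values are 3-10.

eta: the shrinkage step size used in each update to prevent overfitting. After each boosting step the algorithm directly obtains the weights of the new features, and eta shrinks those weights to make boosting more conservative. It is usually set in the range 0.01-0.2.

silent: 0 prints runtime messages, 1 runs silently without printing them. The default is 0, and keeping it at 0 is recommended because the output helps with understanding the model and with tuning. In practice, setting it to 1 usually did not silence the output for me anyway.

objective: defines the learning task and the corresponding learning objective. The default is reg:linear. The available objectives include:

“reg:linear”: linear regression.

“reg:logistic”: logistic regression.

“binary:logistic”: logistic regression for binary classification; the output is a probability.

“binary:logitraw”: logistic regression for binary classification; the output is the raw score wTx before the logistic transformation.

“count:poisson”: Poisson regression for count data; the output is the mean of the Poisson distribution.

“multi:softmax”: makes XGBoost use the softmax objective for multi-class classification; the num_class parameter (number of classes) must also be set.

“multi:softprob”: same as softmax, but the output is a vector of ndata * nclass values, which can be reshaped into a matrix with ndata rows and nclass columns. Each row gives the probability of the sample belonging to each class.

“rank:pairwise”: sets XGBoost to do a ranking task by minimizing the pairwise loss.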
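To make the difference between multi:softmax and multi:softprob concrete, here is a small pure-Python sketch. It is not xgboost's internal code: the raw scores are made up, and the function names are illustrative. The softprob case corresponds to returning the whole probability vector, while the softmax case corresponds to returning only the index of the largest probability.

```python
import math


def softmax(scores):
    """Turn raw per-class scores into probabilities that sum to 1."""
    shift = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(scores):
    """Index of the most probable class (the multi:softmax style of output)."""
    probs = softmax(scores)
    return max(range(len(probs)), key=probs.__getitem__)


def reshape_flat(flat, nclass):
    """Split a flat list of ndata * nclass values into ndata rows of nclass values."""
    return [flat[i:i + nclass] for i in range(0, len(flat), nclass)]


raw_scores = [0.2, 2.5, -1.0]      # hypothetical scores for one sample, three classes
probs = softmax(raw_scores)        # the multi:softprob style of output
label = predict_label(raw_scores)  # the multi:softmax style of output
print(probs, label)
```

Taking the arg max of each row of a softprob result gives the same labels as softmax, so softprob is the more flexible choice when the probabilities themselves are needed.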

For the meaning of each parameter, see the official xgboost documentation:

http://xgboost.readthedocs.io/en/latest/parameter.html

For a quick guide to tuning xgboost, see:

http://blog.csdn.net/wzmsltw/article/details/52382489
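The early_stopping_rounds setting used in the training code can be pictured with the sketch below. It is not xgboost's implementation: it takes a made-up list of per-round validation errors and stops once the best value has gone `patience` rounds without improving. The function and variable names are illustrative.

```python
def early_stopping_round(val_errors, patience):
    """Return (best_round, stop_round) for a sequence of validation errors.

    Training stops at the first round where the best error seen so far is
    `patience` rounds old; if that never happens, it runs to the last round.
    """
    best_error = float("inf")
    best_round = -1
    for round_idx, error in enumerate(val_errors):
        if error < best_error:
            # New best value: remember it and keep going
            best_error = error
            best_round = round_idx
        elif round_idx - best_round >= patience:
            # No improvement for `patience` rounds: stop here
            return best_round, round_idx
    return best_round, len(val_errors) - 1


errors = [0.30, 0.20, 0.15, 0.16, 0.17, 0.16, 0.18]  # hypothetical values
best, stopped = early_stopping_round(errors, patience=3)
print(best, stopped)
```

With a large num_boost_round and a small learning rate, this kind of rule lets the validation set decide how many rounds are actually used.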

Keras CNN implementation:

Accuracy reaches 0.99585.

    import numpy as np
    import pandas as pd
    from sklearn.model_selection import train_test_split
    from keras.models import Sequential
    from keras.utils import to_categorical
    from keras.layers import Dense, Dropout, Flatten, Conv2D, MaxPool2D
    from keras.optimizers import RMSprop
    from keras.preprocessing.image import ImageDataGenerator
    from keras.callbacks import ReduceLROnPlateau

    train = pd.read_csv('data/train.csv')
    test = pd.read_csv('data/test.csv')

    # Separate the labels from the pixel columns
    target = train['label']
    train = train.drop(['label'], axis=1)

    # Scale pixel values to [0, 1]
    train = train / 255.0
    test = test / 255.0

    # Reshape each row into a 28 x 28 image with one channel
    train = train.values.reshape(-1, 28, 28, 1)
    test = test.values.reshape(-1, 28, 28, 1)

    # One-hot encode the labels
    target = to_categorical(target, num_classes=10)

    # Hold out 20% of the training data for validation
    x_train, x_val, y_train, y_val = train_test_split(train, target, test_size=0.2, random_state=2018)

    model = Sequential()

    model.add(Conv2D(filters=32, kernel_size=(5, 5), padding='same',
                     activation='relu', input_shape=(28, 28, 1)))
    model.add(Conv2D(filters=32, kernel_size=(5, 5), padding='same',
                     activation='relu'))
    model.add(MaxPool2D(pool_size=(2, 2)))
    model.add(Dropout(0.25))

    model.add(Conv2D(filters=64, kernel_size=(3, 3), padding='same',
                     activation='relu'))
    model.add(Conv2D(filters=64, kernel_size=(3, 3), padding='same',
                     activation='relu'))
    model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2)))
    model.add(Dropout(0.25))

    model.add(Conv2D(filters=128, kernel_size=(3, 3), padding='same',
                     activation='relu'))
    model.add(Conv2D(filters=128, kernel_size=(3, 3), padding='same',
                     activation='relu'))
    model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2)))
    model.add(Dropout(0.25))

    model.add(Flatten())
    model.add(Dense(256, activation="relu"))
    model.add(Dropout(0.5))
    model.add(Dense(10, activation="softmax"))

    optimizer = RMSprop(lr=0.001, rho=0.9, epsilon=1e-08, decay=0.0)
    model.compile(optimizer=optimizer, loss="categorical_crossentropy", metrics=["accuracy"])
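As a rough check on the architecture above, the sketch below traces the feature-map size through the three conv/pool blocks. It assumes that 'same' padding at stride 1 leaves height and width unchanged, and that each 2x2 max pool with stride 2 uses the default 'valid' padding; the helper names are illustrative.

```python
def pool_output_size(size, pool=2, stride=2):
    """Output size along one dimension for a 'valid' max pool."""
    return (size - pool) // stride + 1


def flatten_units(input_size, channels_per_block):
    """Number of values reaching Flatten after the conv/pool blocks."""
    size = input_size
    for _ in channels_per_block:
        # 'same'-padded convolutions keep the size; only pooling shrinks it
        size = pool_output_size(size)
    return size * size * channels_per_block[-1]


units = flatten_units(28, [32, 64, 128])
print(units)  # 28 -> 14 -> 7 -> 3, so 3 * 3 * 128 = 1152
```

Under these assumptions the Dense(256) layer receives 1152 inputs per image.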

    epochs = 30
    batch_size = 86

    # Halve the learning rate when validation accuracy stops improving for 3 epochs.
    # 'val_acc' is the metric name in older Keras; newer releases report 'val_accuracy'.
    learning_rate_reduction = ReduceLROnPlateau(monitor='val_acc',
                                                patience=3,
                                                verbose=1,
                                                factor=0.5,
                                                min_lr=0.00001)

    # Data augmentation: small rotations, zooms and shifts, no flips
    img = ImageDataGenerator(featurewise_center=False,
                             samplewise_center=False,
                             featurewise_std_normalization=False,
                             samplewise_std_normalization=False,
                             zca_whitening=False,
                             rotation_range=10,
                             zoom_range=0.1,
                             width_shift_range=0.1,
                             height_shift_range=0.1,
                             horizontal_flip=False,
                             vertical_flip=False)
    img.fit(x_train)

    model.fit_generator(img.flow(x_train, y_train, batch_size=batch_size),
                        validation_data=(x_val, y_val),
                        steps_per_epoch=x_train.shape[0] // batch_size,
                        epochs=epochs,
                        callbacks=[learning_rate_reduction])

    # Predict class probabilities, then take the most probable class
    results = model.predict(test)
    print(results)
    results = np.argmax(results, axis=1)
    print(results)

    # Build the submission file; ImageId starts at 1
    results = pd.Series(results, name="Label")
    submission = pd.concat([pd.Series(range(1, len(results) + 1), name="ImageId"), results], axis=1)
    submission.to_csv("submission/cnn_mnist.csv", index=False)

References:

http://blog.csdn.net/Eddy_zheng/article/details/50496186

http://blog.csdn.net/lujiandong1/article/details/52777168