Predict Future Sales (Time Series): A Baseline Kaggle Silver-Medal (Top 4%) Solution, Part 4: Single-Model Prediction and Model Ensembling

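With the features constructed, the next step is model prediction. Model ensembling, which is also covered here, has become a standard way to improve scores in data science competitions.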

Single model 1: CatBoost

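CatBoost is a powerful boosting algorithm released in 2017 by a team in Russia (Yandex). According to its authors, it is another strong tree-based model following XGBoost and LightGBM, and it outperforms both.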

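CatBoost is generally considered to have the following advantages:

- It handles categorical features automatically in a special way. It first computes statistics over the categorical features, such as how often each category occurs, then combines them with a hyperparameter (a prior) to generate new numerical features. This is my main motivation for introducing the algorithm here: with CatBoost, categorical features no longer need to be encoded by hand.

- CatBoost also uses combinations of categorical features, which lets it exploit relationships between features and greatly enriches the feature space.

- CatBoost uses symmetric (oblivious) trees as base learners, and it computes leaf values differently from traditional boosting algorithms: traditional boosting computes an average, whereas CatBoost uses a different, optimized scheme. These changes help prevent overfitting.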
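To make the first point more concrete, here is a minimal sketch of a category statistic smoothed by a prior. It is a simplification written for illustration, not CatBoost's actual implementation: the function name `ordered_target_stat` and the `prior_weight` parameter are hypothetical, and the toy data is made up. Each row is encoded using only the targets of earlier rows with the same category, so a row never sees its own label.

```python
from collections import defaultdict

def ordered_target_stat(categories, targets, prior, prior_weight=1.0):
    """Encode each category using only the targets of earlier rows (hypothetical helper)."""
    sums = defaultdict(float)    # running sum of targets per category
    counts = defaultdict(int)    # running count of rows per category
    encoded = []
    for cat, y in zip(categories, targets):
        # Statistic from the rows seen so far, smoothed toward the prior.
        encoded.append((sums[cat] + prior_weight * prior) / (counts[cat] + prior_weight))
        sums[cat] += y
        counts[cat] += 1
    return encoded

shops = ["a", "b", "a", "a", "b"]        # toy categorical feature
sales = [2.0, 0.0, 4.0, 6.0, 1.0]        # toy target
prior = sum(sales) / len(sales)          # global mean used as the prior
encoded = ordered_target_stat(shops, sales, prior)
print(encoded)
```

The first occurrence of each category falls back to the prior, and later occurrences move toward that category's own running mean.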

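from catboost import CatBoostRegressor, Pool

# Column indices of the categorical features in X_train.
cat_features = [0, 1, 7, 8]

catboost_model = CatBoostRegressor(
    iterations=500,
    max_ctr_complexity=4,
    random_seed=0,
    od_type='Iter',   # overfitting detector: stop after od_wait rounds without improvement
    od_wait=25,
    verbose=50,
    depth=4
)

catboost_model.fit(
    X_train, Y_train,
    cat_features=cat_features,
    eval_set=(X_validation, Y_validation)
)

# Predictions reused later as first-level features for the ensemble.
catboost_val_pred = catboost_model.predict(X_validation)
catboost_test_pred = catboost_model.predict(X_test)

cat_features lists the categorical features. This is one of CatBoost's advantages: it encodes categorical features automatically, in a way similar to mean encoding.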

Inspect the feature importance:

import pandas as pd
import matplotlib.pyplot as plt

# Pair each feature name with its CatBoost importance score.
importances = catboost_model.get_feature_importance(
    Pool(X_train, label=Y_train, cat_features=cat_features))
feature_score = pd.DataFrame(list(zip(X_train.columns, importances)), columns=['Feature', 'Score'])
feature_score = feature_score.sort_values(by='Score', ascending=False)

plt.rcParams["figure.figsize"] = (19, 6)
ax = feature_score.plot('Feature', 'Score', kind='bar', color='c')
ax.set_title("Catboost Feature Importance Ranking", fontsize=14)
ax.set_xlabel('')

# Label each bar with its score.
rects = ax.patches
labels = feature_score['Score'].round(2)
for rect, label in zip(rects, labels):
    height = rect.get_height()
    ax.text(rect.get_x() + rect.get_width()/2, height + 0.35, label, ha='center', va='bottom')

plt.show()

Feature importance reflects how much each feature influences the predictions, so it is easy to see which features are unimportant and which are strong. In general, selecting the strong features during feature selection improves the predictions, but care is needed to avoid overfitting.
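As a rough illustration of selecting strong features from such a ranking, the sketch below keeps the features whose score reaches a threshold. The scores, the feature names in the toy table, and the `min_score` cut-off are all made up for the example; in practice the cut-off would have to be checked against the validation error.

```python
import pandas as pd

# Hypothetical scores, standing in for the feature_score table built above.
feature_score = pd.DataFrame({
    "Feature": ["item_cnt", "shop_mean", "year", "month"],
    "Score": [41.2, 18.5, 0.7, 0.3],
})

min_score = 1.0  # assumed cut-off, not a recommended value

# Keep the names of the features whose importance reaches the cut-off.
selected = feature_score.loc[feature_score["Score"] >= min_score, "Feature"].tolist()
print(selected)
```

The resulting list can then be used to index the training, validation, and test frames, in the same way the per-model feature lists are used in the following sections.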

Single model 2: XGBoost

XGBoost is long established and needs no introduction, so straight to the code:

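from xgboost import XGBRegressor

# Use only part of features on XGBoost.
xgb_features = ['item_cnt', 'item_cnt_mean', 'item_cnt_std', 'item_cnt_shifted1',
                'item_cnt_shifted2', 'item_cnt_shifted3', 'shop_mean',
                'shop_item_mean', 'item_trend', 'mean_item_cnt']

xgb_train = X_train[xgb_features]
xgb_val = X_validation[xgb_features]
xgb_test = X_test[xgb_features]

xgb_model = XGBRegressor(max_depth=8,
                         n_estimators=500,
                         min_child_weight=1000,
                         colsample_bytree=0.7,
                         subsample=0.7,
                         eta=0.3,
                         random_state=0,
                         # xgboost >= 1.6 takes these two in the constructor;
                         # older versions take them in fit() instead.
                         eval_metric="rmse",
                         early_stopping_rounds=20)

xgb_model.fit(xgb_train,
              Y_train,
              eval_set=[(xgb_train, Y_train), (xgb_val, Y_validation)],
              verbose=20)

# Predictions reused later as first-level features for the ensemble.
xgb_val_pred = xgb_model.predict(xgb_val)
xgb_test_pred = xgb_model.predict(xgb_test)

Unlike CatBoost, XGBoost as used here does not handle categorical features directly, so without encoding them a feature selection step is required. The categorical features could of course be encoded, for example with label encoding, one-hot encoding, or target encoding, or even with embeddings as used in NLP. With one-hot encoding, however, the large number of categories would make the encoded data very sparse. The XGBoost feature importance can be inspected in the same way as for CatBoost.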
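The sparsity concern with one-hot encoding can be seen on a toy column. The sketch below compares label encoding and one-hot encoding with pandas; the `item_id` values are made up, and the real column has far more distinct values, which makes the effect much stronger.

```python
import pandas as pd

# Toy frame with made-up ids; a real id column has many more categories.
df = pd.DataFrame({"item_id": [5037, 5320, 5233, 5037, 5232, 5268]})

# Label encoding: a single integer column, regardless of cardinality.
label_encoded = pd.factorize(df["item_id"])[0]

# One-hot encoding: one column per distinct category, mostly zeros.
one_hot = pd.get_dummies(df["item_id"], prefix="item")

# Share of zero entries in the one-hot matrix.
zero_share = 1.0 - one_hot.to_numpy().mean()

print(label_encoded.tolist())
print(one_hot.shape)
print(f"share of zeros: {zero_share:.2f}")
```

With k distinct categories, each one-hot row has exactly one non-zero entry out of k, so the share of zeros approaches 1 as k grows.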

Single model 3: Random forest

Random forest (RF) is also an ensemble of tree models; unlike XGBoost, it is a bagging ensemble.
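As a minimal sketch of what bagging means, the code below fits several decision trees independently on bootstrap resamples and averages their predictions. The data is synthetic and the helper `bagged_predict` is hypothetical. A real random forest also samples a subset of features at each split, which is omitted here, while boosting fits its trees sequentially rather than independently.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(200, 1))            # synthetic data, for illustration only
y = np.sin(X[:, 0]) + rng.normal(0, 0.3, 200)

def bagged_predict(X_fit, y_fit, X_new, n_trees=25, max_depth=4):
    """Average trees fit independently on bootstrap resamples (hypothetical helper)."""
    preds = []
    for seed in range(n_trees):
        # Bootstrap: draw len(X_fit) row indices with replacement.
        idx = np.random.default_rng(seed).integers(0, len(X_fit), len(X_fit))
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=seed)
        tree.fit(X_fit[idx], y_fit[idx])
        preds.append(tree.predict(X_new))
    return np.mean(preds, axis=0)

bagged = bagged_predict(X, y, X)
print(bagged[:3])
```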

from sklearn.ensemble import RandomForestRegressor

# Use only part of features on random forest.
rf_features = ['shop_id', 'item_id', 'item_cnt', 'transactions', 'year',
               'item_cnt_mean', 'item_cnt_std', 'item_cnt_shifted1',
               'shop_mean', 'item_mean', 'item_trend', 'mean_item_cnt']

rf_train = X_train[rf_features]
rf_val = X_validation[rf_features]
rf_test = X_test[rf_features]

rf_model = RandomForestRegressor(n_estimators=50, max_depth=7, random_state=0, n_jobs=-1)
rf_model.fit(rf_train, Y_train)

Feature selection is needed here as well, dropping categorical features (shop_id and item_id are kept and treated as plain integers). The offline predictions are computed as follows:

import numpy as np
from sklearn.metrics import mean_squared_error

rf_train_pred = rf_model.predict(rf_train)
rf_val_pred = rf_model.predict(rf_val)
rf_test_pred = rf_model.predict(rf_test)

# RMSE is the square root of the mean squared error.
print('Train rmse:', np.sqrt(mean_squared_error(Y_train, rf_train_pred)))
print('Validation rmse:', np.sqrt(mean_squared_error(Y_validation, rf_val_pred)))

Single model 4: LinearRegression

Linear regression performs well when the relationships involved are linear, so only a subset of the features is used here.

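from sklearn.linear_model import LinearRegression
from sklearn.preprocessing import MinMaxScaler

lr_features = ['item_cnt', 'item_cnt_shifted1', 'item_trend', 'mean_item_cnt', 'shop_mean']

lr_train = X_train[lr_features]
lr_val = X_validation[lr_features]
lr_test = X_test[lr_features]

# Fit the scaler on the training set only, then apply it to every split.
lr_scaler = MinMaxScaler()
lr_scaler.fit(lr_train)
lr_train = lr_scaler.transform(lr_train)
lr_val = lr_scaler.transform(lr_val)
lr_test = lr_scaler.transform(lr_test)

lr_model = LinearRegression(n_jobs=-1)
lr_model.fit(lr_train, Y_train)

# Predictions reused later as first-level features for the ensemble.
lr_val_pred = lr_model.predict(lr_val)
lr_test_pred = lr_model.predict(lr_test)

The offline results can be computed with the same RMSE calls as for the random forest.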
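The scaling step deserves a short illustration. The sketch below reproduces min-max scaling by hand with NumPy on made-up numbers, using statistics from the training rows only, which is what fitting the scaler on the training set amounts to. The helper name `min_max_transform` is hypothetical.

```python
import numpy as np

train = np.array([[0.0, 10.0], [5.0, 20.0], [10.0, 30.0]])   # made-up training rows
val = np.array([[2.5, 40.0]])                                # made-up validation row

# Column statistics come from the training set only.
col_min = train.min(axis=0)
col_max = train.max(axis=0)

def min_max_transform(x):
    """Map each column so that the training range becomes [0, 1] (hypothetical helper)."""
    return (x - col_min) / (col_max - col_min)

train_scaled = min_max_transform(train)
val_scaled = min_max_transform(val)
print(train_scaled)
print(val_scaled)
```

Validation or test values that lie outside the training range end up outside [0, 1], as the second column of the validation row shows here.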

Single model 5: KNN (k-nearest neighbors regression)

from sklearn.neighbors import KNeighborsRegressor

knn_features = ['item_cnt', 'item_cnt_mean', 'item_cnt_std', 'item_cnt_shifted1',
                'item_cnt_shifted2', 'shop_mean', 'shop_item_mean',
                'item_trend', 'mean_item_cnt']

# Subsample train set (using the whole data was taking too long).
X_train_sampled = X_train[:100000]
Y_train_sampled = Y_train[:100000]

knn_train = X_train_sampled[knn_features]
knn_val = X_validation[knn_features]
knn_test = X_test[knn_features]

# KNN is distance-based, so the features are scaled to a common range first.
knn_scaler = MinMaxScaler()
knn_scaler.fit(knn_train)
knn_train = knn_scaler.transform(knn_train)
knn_val = knn_scaler.transform(knn_val)
knn_test = knn_scaler.transform(knn_test)

knn_model = KNeighborsRegressor(n_neighbors=9, leaf_size=13, n_jobs=-1)
knn_model.fit(knn_train, Y_train_sampled)

# Predictions reused later as first-level features for the ensemble.
knn_val_pred = knn_model.predict(knn_val)
knn_test_pred = knn_model.predict(knn_test)

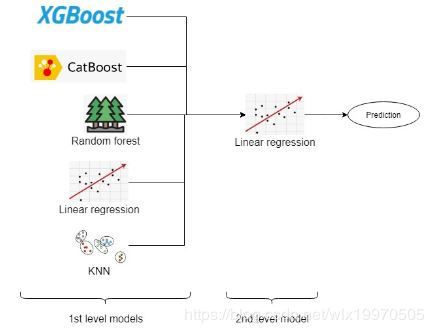
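For intuition, KNN regression can be sketched in a few lines of NumPy: the prediction for a query point is the average target of its k closest training points. The helper `knn_regress` and the toy data are hypothetical, and the sketch assumes Euclidean distance with uniform weights.

```python
import numpy as np

def knn_regress(X_fit, y_fit, x_query, k=3):
    """Predict by averaging the targets of the k closest training points (hypothetical helper)."""
    dists = np.linalg.norm(X_fit - x_query, axis=1)   # Euclidean distance to each training row
    nearest = np.argsort(dists)[:k]                   # indices of the k smallest distances
    return y_fit[nearest].mean()

X_fit = np.array([[0.0], [1.0], [2.0], [10.0]])       # toy training features
y_fit = np.array([1.0, 2.0, 3.0, 50.0])               # toy training targets
pred = knn_regress(X_fit, y_fit, np.array([1.2]), k=3)
print(pred)
```

Because the prediction uses the training labels, this is a supervised method. Since it relies on distances, features on larger scales would dominate without the scaling step used above.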

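Model ensembling

Here we build a simple ensemble of the single models: the five single models serve as 1st-level predictors, and their predictions are fed to the 2nd level as input, that is, as the features the 2nd-level model learns from. The diagram below illustrates this: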

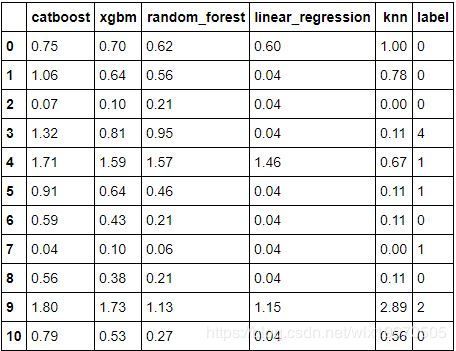
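The two-level scheme can be sketched end to end on synthetic data. This is an illustration under assumptions, not the pipeline used in this post: the data is random, only three base models are used, and the split sizes are arbitrary. The base models are trained on one split, their predictions on a second split train the 2nd-level model, and a third split is held out to check the result.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.neighbors import KNeighborsRegressor

rng = np.random.default_rng(0)
X = rng.normal(size=(600, 4))                         # synthetic features
y = X[:, 0] * 2.0 + np.sin(X[:, 1]) + rng.normal(0, 0.1, 600)

X_tr, y_tr = X[:300], y[:300]         # trains the 1st-level models
X_va, y_va = X[300:450], y[300:450]   # trains the 2nd-level model
X_te, y_te = X[450:], y[450:]         # held out for the final check

base_models = [
    RandomForestRegressor(n_estimators=30, max_depth=5, random_state=0),
    LinearRegression(),
    KNeighborsRegressor(n_neighbors=9),
]
for model in base_models:
    model.fit(X_tr, y_tr)

# 1st-level predictions become the 2nd-level features, one column per model.
meta_va = np.column_stack([m.predict(X_va) for m in base_models])
meta_te = np.column_stack([m.predict(X_te) for m in base_models])

meta_model = LinearRegression().fit(meta_va, y_va)
stacked = meta_model.predict(meta_te)
rmse = np.sqrt(np.mean((stacked - y_te) ** 2))
print(f"stacked test RMSE: {rmse:.3f}")
```

Scoring the 2nd-level model on the same rows it was fit on gives an optimistic estimate, which is why the sketch keeps a separate held-out split.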

Build the first-level features:

# One column per single model, holding its predictions on the validation set.
first_level = pd.DataFrame(catboost_val_pred, columns=['catboost'])
first_level['xgbm'] = xgb_val_pred
first_level['random_forest'] = rf_val_pred
first_level['linear_regression'] = lr_val_pred
first_level['knn'] = knn_val_pred
first_level['label'] = Y_validation.values
first_level.head(10)  # view the first 10 rows

Apply the same steps to the test set:

first_level_test = pd.DataFrame(catboost_test_pred, columns=['catboost'])
first_level_test['xgbm'] = xgb_test_pred
first_level_test['random_forest'] = rf_test_pred
first_level_test['linear_regression'] = lr_test_pred
first_level_test['knn'] = knn_test_pred

Predict at the 2nd level and produce the final result.

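meta_model = LinearRegression(n_jobs=-1)

# Drop the label column so that only the model predictions remain as features.
first_level.drop('label', axis=1, inplace=True)
meta_model.fit(first_level, Y_validation)

# In-sample predictions: the meta-model was fit on this same validation set.
ensemble_pred = meta_model.predict(first_level)
final_predictions = meta_model.predict(first_level_test)

prediction_df = pd.DataFrame(test['ID'], columns=['ID'])
# Clip the predictions to the [0, 20] range before writing the submission.
prediction_df['item_cnt_month'] = final_predictions.clip(0., 20.)
prediction_df.to_csv('submission.csv', index=False)
prediction_df.head(10)

The results can be converted to integers by appending astype(int). The final call displays the first 10 predictions.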
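One detail worth knowing about the integer conversion: astype(int) drops the fractional part rather than rounding. The sketch below shows the difference on made-up predictions. Whether either conversion helps the leaderboard score is not something this example establishes.

```python
import numpy as np

raw = np.array([-0.4, 0.6, 1.5, 19.9, 23.0])   # made-up predictions
clipped = raw.clip(0.0, 20.0)                  # same clipping range as above

truncated = clipped.astype(int)                # drops the fractional part
rounded = np.rint(clipped).astype(int)         # rounds to the nearest integer first

print(clipped)
print(truncated)
print(rounded)
```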