基于卷积与反卷积的GAN简单实现---MNIST+Tensorflow

目前来说简单的GAN模型生成器的框架主要有两种,一种是通过全连接的方式实现,而另一种是通过反卷积的方式实现;全连接的方式简单但是对于一个多层模型来说,其参数量显得过于庞大,而反卷积方法的好处就在于能够在较少参数量的情况下实现不错质量的图像生成。

本文主要参照DCGAN的程序,演示如何使用卷积和反卷积网络构建GAN;设计的生成器由一个100维的随机向量通过反卷积网络生成28*28的MNIST类似的手写字体样本,鉴别器主要是通过卷积网络对图像进行处理最终输出图像真实概率。

程序及注释如下:

from tensorflow.examples.tutorials.mnist import input_data

import tensorflow as tf

import os

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.gridspec as gridspec

import math

#数据集路径

mnist = input_data.read_data_sets("/home/mnist/raw/",one_hot=True)

sess = tf.InteractiveSession()

#参数定义

batchs = 64

df_dim = 64#鉴别器通道

gf_dim = 64#生成器通道

z_dim = 100#随机向量维度

output_height = 28#输出图像高度

output_weight = 28#输出图像宽度

image_dims = [output_height,output_weight,1]

inputs = tf.placeholder(tf.float32,[batchs]+image_dims,name = "real_images")

z = tf.placeholder(tf.float32,[None,z_dim],name = 'z')

x = tf.placeholder(tf.float32,[batchs,784],name = 'x')

x_image = tf.reshape(x ,[batchs,28,28,1])

#相关函数定义

#卷积函数定义

def conv2d(input_,output_dim,k_h=5,k_w=5,d_h=2,d_w=2,steddev=0.02,name="conv2d"):

with tf.variable_scope(name):

w = tf.get_variable('w',[k_h,k_w,input_.get_shape()[-1],output_dim],\

initializer= tf.truncated_normal_initializer(stddev=steddev))

conv = tf.nn.conv2d(input_,w,strides=[1,d_h,d_w,1],padding='SAME')

biases = tf.get_variable('biases',[output_dim],initializer=tf.constant_initializer(0.0))

conv = tf.reshape(tf.nn.bias_add(conv,biases),conv.get_shape())

return conv

#反卷积函数定义

def deconv2d(input_,output_shape,k_h=5,k_w=5,d_h=2,d_w=2,steddev=0.02,name="deconv2d"):

with tf.variable_scope(name):

w = tf.get_variable('w',[k_h,k_w,output_shape[-1],input_.get_shape()[-1]],\

initializer=tf.random_normal_initializer(stddev=steddev))

deconv = tf.nn.conv2d_transpose(input_,w,output_shape=output_shape,strides=[1,d_h,d_w,1])

biases = tf.get_variable('biases',[output_shape[-1]],initializer=tf.constant_initializer(0.0))

deconv = tf.reshape(tf.nn.bias_add(deconv,biases),deconv.get_shape())

return deconv

#线性化函数定义

def linear(input_,output_size,scope=None,steddev=0.02):

shape = input_.get_shape().as_list()

with tf.variable_scope(scope or "Linear"):

matrix = tf.get_variable("matrix",[shape[1],output_size],tf.float32,tf.random_normal_initializer(stddev=steddev))

bias = tf.get_variable("bias",[output_size],initializer=tf.constant_initializer(0.0))

return tf.matmul(input_,matrix) + bias

def conv_out_size_same(size,stride):

return int(math.ceil(float(size) / float(stride)))

#鉴别器

def discriminator(image,reuse = False):

with tf.variable_scope("discriminator") as scope:

if reuse:

scope.reuse_variables()

h0 = tf.nn.leaky_relu(conv2d(image,df_dim,name='d_h0_conv'))

h1 = tf.nn.leaky_relu(tf.contrib.layers.batch_norm(conv2d(h0,df_dim*2,name='d_h1_conv')))

h2 = tf.nn.leaky_relu(tf.contrib.layers.batch_norm(conv2d(h1,df_dim*4,name='d_h2_conv')))

h3 = tf.nn.leaky_relu(tf.contrib.layers.batch_norm(conv2d(h2,df_dim*8,name='d_h3_conv')))

h4 = linear(tf.reshape(h3,[batchs,-1]),1,'d_h4_lin')

return tf.nn.sigmoid(h4),h4

#生成器

def generator(z):

with tf.variable_scope("generator") as scope:

s_h,s_w = output_height,output_weight

s_h2,s_w2 = conv_out_size_same(s_h,2),conv_out_size_same(s_w,2)

s_h4,s_w4 = conv_out_size_same(s_h2,2),conv_out_size_same(s_w2,2)

s_h8,s_w8 = conv_out_size_same(s_h4,2),conv_out_size_same(s_w4,2)

s_h16,s_w16 = conv_out_size_same(s_h8,2),conv_out_size_same(s_w8,2)

z_ = linear(z,gf_dim*8*s_h16*s_w16,'g_h0_lin')

h0 = tf.reshape(z_,[-1,s_h16,s_w16,gf_dim*8])

h0 = tf.nn.relu(tf.contrib.layers.batch_norm(h0))

h1 = deconv2d(h0,[batchs,s_h8,s_w8,gf_dim*4],name='g_h1')

h1 = tf.nn.relu(tf.contrib.layers.batch_norm(h1))

h2 = deconv2d(h1,[batchs,s_h4,s_w4,gf_dim*2],name='g_h2')

h2 = tf.nn.relu(tf.contrib.layers.batch_norm(h2))

h3 = deconv2d(h2,[batchs,s_h2,s_w2,gf_dim*1],name='g_h3')

h3 = tf.nn.relu(tf.contrib.layers.batch_norm(h3))

h4 = deconv2d(h3,[batchs,s_h,s_w,1],name='g_h4')

return tf.nn.sigmoid(h4)

#生成器、鉴别器输出设置

g_sample = generator(z)

d_real,d_log_real = discriminator(x_image,reuse=False)

d_fake,d_log_fake = discriminator(g_sample,reuse=True)

t_vars = tf.trainable_variables()

#参数更新限定

d_vars = [var for var in t_vars if 'd_' in var.name]

g_vars = [var for var in t_vars if 'g_' in var.name]

#交叉熵损失定义

d_loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=d_log_real,labels=tf.ones_like(d_real)) + \

tf.nn.sigmoid_cross_entropy_with_logits(logits=d_log_fake,labels=tf.zeros_like(d_fake)))#交叉熵损失

g_loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=d_log_fake,labels=tf.ones_like(d_fake)))

#定义原始损失

#d_loss = - tf.reduce_mean(tf.log(d_real) + tf.log(1.- d_fake))

#g_loss = - tf.reduce_mean(tf.log(d_fake))

#g_loss = tf.reduce_mean(tf.square(1.-d_fake)-tf.log(d_fake))

d_slover = tf.train.AdamOptimizer(0.0002,beta1=0.5).minimize(d_loss,var_list = d_vars)

g_slover = tf.train.AdamOptimizer().minimize(g_loss,var_list = g_vars)

#生成图像保存

def plot(samples):

fig = plt.figure(figsize=(4, 4))

gs = gridspec.GridSpec(4, 4)

gs.update(wspace=0.05, hspace=0.05)

for i, sample in enumerate(samples):

ax = plt.subplot(gs[i])

plt.axis('off')

ax.set_xticklabels([])

ax.set_yticklabels([])

ax.set_aspect('equal')

plt.imshow(sample.reshape(28, 28), cmap='Greys_r')

return fig

#训练过程

sess.run(tf.global_variables_initializer())

i=0

for epoch in range (10):

for it in range(800):

#输出image

sample_z = np.random.uniform(-1, 1, [batchs, z_dim]).astype(np.float32)

x_md,_ =mnist.train.next_batch(batchs)

_,g_loss_curr = sess.run([g_slover,g_loss],feed_dict={z: sample_z})

#d_loss_curr = sess.run(d_loss,feed_dict={x: x_md, z: sample_z})

_,d_loss_curr = sess.run([d_slover,d_loss],feed_dict={x: x_md, z: sample_z})

_,g_loss_curr = sess.run([g_slover,g_loss],feed_dict={z: sample_z})

_,g_loss_curr = sess.run([g_slover,g_loss],feed_dict={z: sample_z})

_,g_loss_curr = sess.run([g_slover,g_loss],feed_dict={z: sample_z})

if epoch % 1 == 0:

#print('iter:{}'.format(epoch),'g loss : {:.4}'.format(g_loss_curr))

sampl = sess.run(g_sample,feed_dict={z: sample_z})

samples = sampl[:16]

fig = plot(samples)

plt.savefig('out/{}.png'.format(str(i).zfill(5)),bbox_inches = 'tight')

i+=1

plt.close(fig)

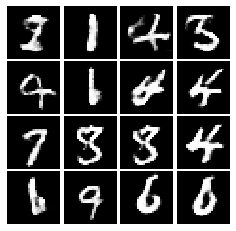

print('iter:{}'.format(epoch),'d loss : {:.4}'.format(d_loss_curr),'g loss : {:.4}'.format(g_loss_curr))结果:运行5个epoch后生成器生成图像:

生成效果不是很好,一方面可能是参数调的不够好,另一方面由于 生成器中使用了反卷积,而反卷积固有地存在“棋盘效应(Checkerboard Artifacts)”,这个棋盘效应约束了DCGAN的生成能力上限(这里仅是提一下,反卷积生成MNIST应该是够用的)。关于棋盘效应,详细可以参考 Deconvolution and Checkerboard Artifacts