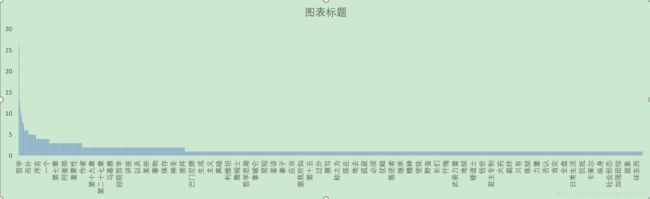

[Word frequency] Python + Excel + jieba

Simple word-frequency counting in Python: a quick count of which Chinese words appear most often in a novel

Reference: https://www.cnblogs.com/jiayongji/p/7119065.html

Fun with word segmentation: basic usage of the Python jieba segmentation module

-------------------------------------------------------------------------------------

>>> import jieba
>>> from collections import Counter
>>> santi_text = open('E:/西方哲学史.txt').read()
>>> santi_words = [x for x in jieba.cut(santi_text) if len(x) >= 2]
Building prefix dict from the default dictionary ...
Loading model from cache C:\Users\oil\AppData\Local\Temp\jieba.cache
Loading model cost 0.882 seconds.
Prefix dict has been built succesfully.
>>> c = Counter(santi_words).most_common(20)
>>> print(c)
[('他们', 51), ('哲学', 48), ('一种', 46), ('一个', 43), ('对于', 34), ('教会', 25), ('我们', 25), ('哲学家', 24), ('如果', 24), ('这种', 24), ('时代', 21), ('就是', 21), ('世纪', 20), ('但是', 20), ('任何', 20), ('社会', 19), ('方面', 17), ('这些', 16), ('罗马', 16), ('没有', 16)]
>>>
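The filter-and-count step from the session above can be wrapped in a small reusable function. This is only a minimal sketch: the name `top_words` and its parameters `n` and `min_len` are hypothetical, and the hand-made token list stands in for the output of `jieba.cut(text)` so that the example runs without jieba installed.

```python
from collections import Counter


def top_words(tokens, n=20, min_len=2):
    """Return the n most common tokens that have at least min_len characters."""
    # Drop single-character tokens (mostly particles and punctuation),
    # the same filter as `len(x) >= 2` in the session.
    kept = (t for t in tokens if len(t) >= min_len)
    return Counter(kept).most_common(n)


# Hand-made tokens standing in for jieba.cut(text) (an assumption for this demo).
sample_tokens = ["哲学", "的", "哲学家", "哲学", "是", "教会", "哲学", "教会"]
print(top_words(sample_tokens, n=2))  # [('哲学', 3), ('教会', 2)]
```

With jieba installed, `top_words(jieba.cut(santi_text))` should give the same kind of list as the session. If the text file is not in the platform's default encoding, passing `encoding=` to `open()` explicitly is probably the safer choice.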

----------------------------------------------------------------------------------

Reference article: Segmenting text with Python jieba, counting word frequencies, and writing the results to Excel and txt files

----------------------------------------------------------------------------------

>>> import jieba
>>> import jieba.analyse
>>> import xlwt
>>>
>>> if __name__=="__main__":
...     wbk = xlwt.Workbook(encoding = 'ascii')
...     sheet = wbk.add_sheet("wordCount")  # Excel sheet name
...     word_lst = []
...     key_list=[]
...     for line in open('E:/西方哲学史.txt'):
...         item = line.strip('\n\r').split('\t')
...         tags = jieba.analyse.extract_tags(item[0])
...         for t in tags:
...             word_lst.append(t)
...     word_dict= {}
...     with open("E:/wordCount.txt",'w') as wf2:
...         for item in word_lst:
...             if item not in word_dict:
...                 word_dict[item] = 1
...             else:
...                 word_dict[item] += 1
...         orderList=list(word_dict.values())
...         orderList.sort(reverse=True)
...         for i in range(len(orderList)):
...             for key in word_dict:
...                 if word_dict[key]==orderList[i]:
...                     wf2.write(key+' '+str(word_dict[key])+'\n')
...                     key_list.append(key)
...                     word_dict[key]=0
...     for i in range(len(key_list)):
...         sheet.write(i, 1, label = orderList[i])
...         sheet.write(i, 0, label = key_list[i])
...     wbk.save('wordCount.xls')
...
Building prefix dict from the default dictionary ...
Loading model from cache C:\Users\oil\AppData\Local\Temp\jieba.cache
Loading model cost 1.548 seconds.
Prefix dict has been built succesfully.
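The counting loop over `word_dict` and the nested loop over `orderList` in the session above can probably be written more compactly with `collections.Counter`. The sketch below is an alternative, not the referenced article's method. The function names `rank_words` and `write_ranked` are hypothetical, and an in-memory `io.StringIO` stands in for the `wordCount.txt` file.

```python
import io
from collections import Counter


def rank_words(word_lst):
    """Return (word, count) pairs sorted by descending count."""
    # most_common() with no argument returns every pair; words with equal
    # counts keep the order in which they were first seen (Python 3.7+).
    return Counter(word_lst).most_common()


def write_ranked(pairs, out):
    """Write one 'word count' line per pair to a text stream."""
    for word, count in pairs:
        out.write(f"{word} {count}\n")


# Hand-made keyword list standing in for word_lst (an assumption for this demo).
ranked = rank_words(["时代", "哲学", "教会", "哲学", "时代", "哲学"])
buf = io.StringIO()
write_ranked(ranked, buf)
print(buf.getvalue())
```

This does the ranking in one sort instead of rescanning the dictionary for each count, which may matter for long texts. `jieba.analyse.extract_tags` returns only the top keywords of each line (20 by default), so the counts here are keyword counts per line, not raw token counts.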

#!/usr/bin/python
# -*- coding:utf-8 -*-
# Ported to Python 3: the Python 2 hack reload(sys) / sys.setdefaultencoding('utf-8')
# does not exist there and is not needed, so it has been dropped.
import jieba
import jieba.analyse
import xlwt  # library for writing Excel .xls files

if __name__=="__main__":
    wbk = xlwt.Workbook(encoding = 'ascii')
    sheet = wbk.add_sheet("wordCount")  # Excel sheet name
    word_lst = []
    key_list=[]
    # 1.txt is the document to segment and count.
    # Assumption: the file is UTF-8; change the encoding if yours differs (e.g. 'gbk').
    for line in open('1.txt', encoding='utf-8'):
        item = line.strip('\n\r').split('\t')  # split on tabs
        # print(item)
        tags = jieba.analyse.extract_tags(item[0])  # extract keywords with jieba
        for t in tags:
            word_lst.append(t)
    word_dict= {}
    with open("wordCount.txt",'w', encoding='utf-8') as wf2:  # open the output file
        for item in word_lst:
            if item not in word_dict:  # count occurrences
                word_dict[item] = 1
            else:
                word_dict[item] += 1
        orderList=list(word_dict.values())
        orderList.sort(reverse=True)
        # print(orderList)
        for i in range(len(orderList)):
            for key in word_dict:
                if word_dict[key]==orderList[i]:
                    wf2.write(key+' '+str(word_dict[key])+'\n')  # write to the txt file
                    key_list.append(key)
                    word_dict[key]=0  # mark as written so it is not matched again
    for i in range(len(key_list)):
        sheet.write(i, 1, label = orderList[i])  # column 1: count
        sheet.write(i, 0, label = key_list[i])   # column 0: word
    wbk.save('wordCount.xls')  # save as wordCount.xls
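The final step of the script, writing words to column 0 and counts to column 1, can be isolated into a helper. The sketch below assumes only that the sheet object has a `write(row, col, value)` method, as an `xlwt` worksheet does. The names `write_pairs_to_sheet`, `XLS_MAX_ROWS` and `FakeSheet` are hypothetical, and `FakeSheet` exists only so the example runs without `xlwt`. The row cap reflects the 65,536-row limit of the legacy `.xls` format.

```python
XLS_MAX_ROWS = 65536  # row limit of the legacy .xls format


def write_pairs_to_sheet(sheet, pairs, max_rows=XLS_MAX_ROWS):
    """Write (word, count) pairs into columns 0 and 1; return rows written."""
    written = 0
    for row, (word, count) in enumerate(pairs):
        if row >= max_rows:
            break  # stop rather than exceed the sheet's row limit
        sheet.write(row, 0, word)
        sheet.write(row, 1, count)
        written += 1
    return written


class FakeSheet:
    """Stand-in that records writes, so the sketch runs without xlwt."""

    def __init__(self):
        self.cells = {}

    def write(self, row, col, value):
        self.cells[(row, col)] = value


sheet = FakeSheet()
rows = write_pairs_to_sheet(sheet, [("哲学", 3), ("时代", 2)])
print(rows, sheet.cells)
```

With `xlwt` installed, the same helper should accept the object returned by `wbk.add_sheet("wordCount")`, followed by `wbk.save('wordCount.xls')` as in the script.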

---------------------------------------------------------------------------------------------