A Caoliu Torrent Crawler in 100 Lines

The project requires Python 3 and the requests library. Running it from within mainland China requires […]

First, set up the request headers:

    {
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',
        'Accept-Encoding': '',
        'Accept-Language': 'zh-CN,zh;q=0.8',
        'Cache-Control': 'max-age=0',
        'Connection': 'keep-alive',
        'Host': 'www.t66y.com',
        'Upgrade-Insecure-Requests': '1',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) '
                      'Chrome/59.0.3071.115 Safari/537.36',
    }
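The full script further down repeats almost the same header dictionary for two hosts. As a minimal sketch, a small helper could build both from shared defaults. The names COMMON_HEADERS and build_headers are hypothetical and do not appear in Caoliu.py; the header values are copied from the article.

```python
# Hypothetical helper (not part of Caoliu.py): build per-host headers
# from one set of shared defaults.
COMMON_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;'
              'q=0.9,image/webp,image/apng,*/*;q=0.8',
    'Accept-Language': 'zh-CN,zh;q=0.8',
    'Cache-Control': 'max-age=0',
    'Connection': 'keep-alive',
    'Upgrade-Insecure-Requests': '1',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) '
                  'AppleWebKit/537.36 (KHTML, like Gecko) '
                  'Chrome/59.0.3071.115 Safari/537.36',
}


def build_headers(host, accept_encoding='', referer=None):
    """Return a fresh header dict for one host."""
    headers = dict(COMMON_HEADERS)  # copy, so the defaults are never mutated
    headers['Host'] = host
    headers['Accept-Encoding'] = accept_encoding
    if referer is not None:
        headers['Referer'] = referer
    return headers


forum_headers = build_headers('www.t66y.com')
download_headers = build_headers('rmdown.com', accept_encoding='gzip, deflate')
```

Each call returns a new dictionary, so changing the headers for one request cannot leak into another.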

The next key step is to pull the ref and reff form values out of the download page with regular expressions:

    p_ref = re.compile("name=\"ref\" value=\"(.+?)\"")
    p_reff = re.compile("NAME=\"reff\" value=\"(.+?)\"")
    ref = p_ref.findall(download_text)[0]
    reff = p_reff.findall(download_text)[0]
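Indexing findall(...)[0] raises IndexError when a field is missing from the page. Below is a minimal sketch of a more defensive variant, assuming the two values appear as name/value attribute pairs as in the patterns above. The function name extract_ref_pair and the sample markup are made up for illustration.

```python
import re

# Case-insensitive, so both name="ref" and NAME="reff" are matched.
REF_PATTERN = re.compile(r'name="ref"\s+value="(.+?)"', re.IGNORECASE)
REFF_PATTERN = re.compile(r'name="reff"\s+value="(.+?)"', re.IGNORECASE)


def extract_ref_pair(html):
    """Return (ref, reff) from the page text, or None if either is missing."""
    ref_match = REF_PATTERN.search(html)
    reff_match = REFF_PATTERN.search(html)
    if ref_match is None or reff_match is None:
        return None
    return ref_match.group(1), reff_match.group(1)


# Made-up sample markup, only to exercise the patterns.
sample = (
    '<INPUT TYPE="hidden" name="ref" value="abc123">'
    '<INPUT TYPE="hidden" NAME="reff" value="xyz789">'
)
print(extract_ref_pair(sample))
print(extract_ref_pair('<html>no form here</html>'))
```

Returning None lets the caller skip a page cleanly instead of relying on a broad exception handler.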

Setting the parameters:

Open Caoliu.py. Its last few lines are:

    if __name__ == "__main__":
        c = Caoliu()
        c.start(downloadtype="yazhouwuma",
                page_start=1,
                page_end=20,
                max_thread_num=50)

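The downloadtype parameter selects the category to download. The mapping is:

| Category | downloadtype |
|---|---|
| Asian uncensored | yazhouwuma |
| Asian censored | yazhouyouma |
| Western originals | oumeiyuanchuang |
| Anime originals | dongmanyuanchuang |
| Domestic (Chinese) originals | guochanyuanchuang |
| Chinese-subtitled originals | zhongziyuanchuang |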

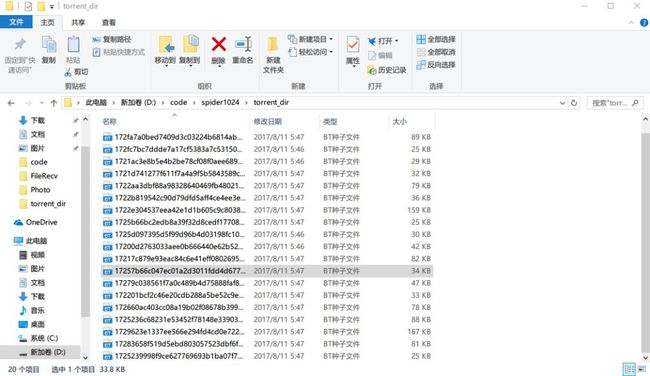

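page_start and page_end are the first and last index pages to crawl (both inclusive), and max_thread_num is the maximum number of threads the program may use. After setting the parameters, run Caoliu.py. It creates a torrent_dir folder in the current working directory and starts downloading torrents.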
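One way to express the page range and thread cap described above is concurrent.futures.ThreadPoolExecutor from the standard library. This is a sketch, not what Caoliu.py does (the script starts raw threading.Thread objects). The names crawl_pages and fetch_index are hypothetical, and the fetch function is a stub that performs no network I/O.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_index(fid, page):
    """Stub standing in for the real index-page request (no network access)."""
    return "fid={} page={}".format(fid, page)


def crawl_pages(fid, page_start=1, page_end=10, max_thread_num=10):
    """Process pages page_start..page_end (inclusive) with a bounded pool."""
    if page_end < page_start:
        raise ValueError("page_end must be >= page_start")
    pages = range(page_start, page_end + 1)
    # Never start more workers than there are pages.
    workers = min(len(pages), max_thread_num)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in page order, even if threads finish out of order.
        return list(pool.map(lambda page: fetch_index(fid, page), pages))


results = crawl_pages(fid=2, page_start=1, page_end=5, max_thread_num=50)
print(results)
```

The pool enforces the thread cap itself, so no manual polling of the live thread count is needed.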

The full code follows:

    import os
    import random
    import re
    import threading
    import time

    import requests


    class Caoliu:
        def __init__(self):
            self.header_data = {
                'Accept': 'text/html,application/xhtml+xml,application/xml;'
                          'q=0.9,image/webp,image/apng,*/*;q=0.8',
                'Accept-Encoding': '',
                'Accept-Language': 'zh-CN,zh;q=0.8',
                'Cache-Control': 'max-age=0',
                'Connection': 'keep-alive',
                'Host': 'www.t66y.com',
                'Upgrade-Insecure-Requests': '1',
                'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64;'
                              ' x64) AppleWebKit/537.36 (KHTML, like Gecko) '
                              'Chrome/59.0.3071.115 Safari/537.36',
            }
            # Create torrent_dir if it does not exist yet
            os.makedirs("torrent_dir", exist_ok=True)

        def download_page(self, url):
            header_data2 = {
                'Accept': 'text/html,application/xhtml+xml,application/xml;'
                          'q=0.9,image/webp,image/apng,*/*;q=0.8',
                'Accept-Encoding': 'gzip, deflate',
                'Accept-Language': 'zh-CN,zh;q=0.8',
                'Cache-Control': 'max-age=0',
                'Connection': 'keep-alive',
                'Host': 'rmdown.com',
                'Referer': 'http://www.viidii.info/?'
                           'http://rmdown______com/link______php?'
                           + url.split("?")[1],
                'Upgrade-Insecure-Requests': '1',
                'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64;'
                              ' x64) AppleWebKit/537.36 (KHTML, like Gecko) '
                              'Chrome/59.0.3071.115 Safari/537.36'
            }
            try:
                download_text = requests.get(url, headers=header_data2).text
                p_ref = re.compile("name=\"ref\" value=\"(.+?)\"")
                p_reff = re.compile("NAME=\"reff\" value=\"(.+?)\"")
                ref = p_ref.findall(download_text)[0]
                reff = p_reff.findall(download_text)[0]
                r = requests.get("http://www.rmdown.com/download.php?ref=" +
                                 ref + "&reff=" + reff + "&submit=download")
                # just get green torrent link
                # A random number is appended to the name to reduce conflicts
                file_name = ref + str(random.randint(1, 100)) + ".torrent"
                with open(os.path.join("torrent_dir", file_name), "wb") as f:
                    f.write(r.content)
            except Exception:
                print("download page " + url + " failed")

        def index_page(self, fid=2, offset=1):
            # NOTE: as written, this pattern matches every double-quoted
            # string on the page, not only thread links
            p = re.compile("\"(.+?)\"")
            try:
                tmp_url = ("http://www.t66y.com/thread0806.php?fid=" + str(fid) +
                           "&search=&page=" + str(offset))
                r = requests.get(tmp_url)
                for i in p.findall(r.text):
                    self.detail_page(i)
            except Exception:
                print("index page " + str(offset) + " get failed")

        def detail_page(self, url):
            p1 = re.compile("(http://rmdown.com/link.php.+?)<")
            p2 = re.compile("(http://www.rmdown.com/link.php.+?)<")
            base_url = "http://www.t66y.com/"
            try:
                r = requests.get(url=base_url + url, headers=self.header_data)
                # Collect links in a set to drop duplicates
                url_set = set()
                for i in p1.findall(r.text):
                    url_set.add(i)
                for i in p2.findall(r.text):
                    url_set.add(i)
                url_list = list(url_set)
                for i in url_list:
                    self.download_page(i)
            except Exception:
                print("detail page " + url + " get failed")

        def start(self, downloadtype, page_start=1, page_end=10, max_thread_num=10):
            type_dict = {
                "yazhouwuma": 2,
                "yazhouyouma": 15,
                "oumeiyuanchuang": 4,
                "dongmanyuanchuang": 5,
                "guochanyuanchuang": 25,
                "zhongziyuanchuang": 26,
            }
            if downloadtype in type_dict:
                fid = type_dict[downloadtype]
            else:
                raise ValueError("type wrong!")
            max_thread_num = min(page_end - page_start + 1, max_thread_num)
            thread_list = []
            for i in range(page_start, page_end + 1):
                thread_list.append(threading.Thread(target=self.index_page,
                                                    args=(fid, i,)))
            # One thread per index page searches it and downloads its torrents.
            # Only index pages run in parallel; torrent downloads stay sequential
            # within each thread, deliberately, to avoid hammering the server.
            for t in range(len(thread_list)):
                thread_list[t].start()
                print("No." + str(t) + " thread start")
                # Wait for a free worker slot. The main thread is not counted;
                # counting it made a single-page run wait forever.
                while threading.active_count() - 1 >= max_thread_num:
                    time.sleep(0.1)


    if __name__ == "__main__":
        c = Caoliu()
        c.start(downloadtype="yazhouwuma",
                page_start=1,
                page_end=5,
                max_thread_num=50)
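The script builds the download URL by string concatenation and appends a random number from 1 to 100 to the file name, which can still collide. Here is a hedged sketch of an alternative using only the standard library: urllib.parse.urlencode escapes the query values, and a uuid4 suffix makes name clashes very unlikely. build_download_url and torrent_path are hypothetical helpers, not part of Caoliu.py, and whether the server accepts percent-encoded values is an assumption that would need checking.

```python
import os
import uuid
from urllib.parse import urlencode


def build_download_url(ref, reff):
    """Build the download.php URL with escaped query values."""
    query = urlencode({"ref": ref, "reff": reff, "submit": "download"})
    return "http://www.rmdown.com/download.php?" + query


def torrent_path(ref, directory="torrent_dir"):
    """Return a portable file path with a random hex suffix."""
    name = "{}_{}.torrent".format(ref, uuid.uuid4().hex[:8])
    return os.path.join(directory, name)


# Made-up values, only to show the shape of the output.
print(build_download_url("abc123", "MTIz="))
print(torrent_path("abc123"))
```

os.path.join also removes the hard-coded backslash separator, so the same path logic works on Linux and macOS as well as Windows.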
