K8s Resources, Part 1 (Pod)

Resource manifest format:

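- apiVersion: the API group and version the resource belongs to.

- kind: the resource type.

- metadata: the object's metadata, including the resource name, its labels, and the namespace it belongs to.

- spec: the desired state of the resource.

- status: the actual state of the resource. Users cannot set it; Kubernetes maintains it.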
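The Python sketch below makes the user-facing top-level fields concrete. It is an illustration, not part of the original walkthrough. It builds the develop Namespace used later as a dict and runs a simplified sanity check on it. The check function and its rules are hypothetical; the real API server validates far more. kubectl also accepts JSON manifests, so the dumped text could be saved to a .json file and applied.

```python
import json

# Mirrors develop-ns.yaml. Only apiVersion, kind, metadata (and usually spec)
# are written by the user; status is filled in by Kubernetes, so it is absent.
manifest = {
    "apiVersion": "v1",
    "kind": "Namespace",
    "metadata": {"name": "develop"},
}

REQUIRED_TOP_LEVEL = ("apiVersion", "kind", "metadata")


def check_manifest(obj):
    """Hypothetical, simplified check of a manifest's top-level fields."""
    problems = [f"missing field: {key}" for key in REQUIRED_TOP_LEVEL if key not in obj]
    metadata = obj.get("metadata", {})
    # Objects need a name, or a generateName prefix (as ReplicaSet-created Pods use).
    if "name" not in metadata and "generateName" not in metadata:
        problems.append("metadata.name or metadata.generateName is required")
    if "status" in obj:
        problems.append("status is maintained by Kubernetes and should not be set")
    return problems


text = json.dumps(manifest, indent=2)
print(text)
print(check_manifest(manifest))  # []
```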

List all resource types supported by the cluster:

[root@k8smaster data]# kubectl api-resources

NAME                              SHORTNAMES  APIGROUP                       NAMESPACED  KIND
bindings                                                                     true        Binding
componentstatuses                 cs                                         false       ComponentStatus
configmaps                        cm                                         true        ConfigMap
endpoints                         ep                                         true        Endpoints
events                            ev                                         true        Event
limitranges                       limits                                     true        LimitRange
namespaces                        ns                                         false       Namespace
nodes                             no                                         false       Node
persistentvolumeclaims            pvc                                        true        PersistentVolumeClaim
persistentvolumes                 pv                                         false       PersistentVolume
pods                              po                                         true        Pod
podtemplates                                                                 true        PodTemplate
replicationcontrollers            rc                                         true        ReplicationController
resourcequotas                    quota                                      true        ResourceQuota
secrets                                                                      true        Secret
serviceaccounts                   sa                                         true        ServiceAccount
services                          svc                                        true        Service
mutatingwebhookconfigurations                 admissionregistration.k8s.io   false       MutatingWebhookConfiguration
validatingwebhookconfigurations               admissionregistration.k8s.io   false       ValidatingWebhookConfiguration
customresourcedefinitions         crd,crds    apiextensions.k8s.io           false       CustomResourceDefinition
apiservices                                   apiregistration.k8s.io         false       APIService
controllerrevisions                           apps                           true        ControllerRevision
daemonsets                        ds          apps                           true        DaemonSet
deployments                       deploy      apps                           true        Deployment
replicasets                       rs          apps                           true        ReplicaSet
statefulsets                      sts         apps                           true        StatefulSet
tokenreviews                                  authentication.k8s.io          false       TokenReview
localsubjectaccessreviews                     authorization.k8s.io           true        LocalSubjectAccessReview
selfsubjectaccessreviews                      authorization.k8s.io           false       SelfSubjectAccessReview
selfsubjectrulesreviews                       authorization.k8s.io           false       SelfSubjectRulesReview
subjectaccessreviews                          authorization.k8s.io           false       SubjectAccessReview
horizontalpodautoscalers          hpa         autoscaling                    true        HorizontalPodAutoscaler
cronjobs                          cj          batch                          true        CronJob
jobs                                          batch                          true        Job
certificatesigningrequests        csr         certificates.k8s.io            false       CertificateSigningRequest
leases                                        coordination.k8s.io            true        Lease
events                            ev          events.k8s.io                  true        Event
daemonsets                        ds          extensions                     true        DaemonSet
deployments                       deploy      extensions                     true        Deployment
ingresses                         ing         extensions                     true        Ingress
networkpolicies                   netpol      extensions                     true        NetworkPolicy
podsecuritypolicies               psp         extensions                     false       PodSecurityPolicy
replicasets                       rs          extensions                     true        ReplicaSet
ingresses                         ing         networking.k8s.io              true        Ingress
networkpolicies                   netpol      networking.k8s.io              true        NetworkPolicy
runtimeclasses                                node.k8s.io                    false       RuntimeClass
poddisruptionbudgets              pdb         policy                         true        PodDisruptionBudget
podsecuritypolicies               psp         policy                         false       PodSecurityPolicy
clusterrolebindings                           rbac.authorization.k8s.io      false       ClusterRoleBinding
clusterroles                                  rbac.authorization.k8s.io      false       ClusterRole
rolebindings                                  rbac.authorization.k8s.io      true        RoleBinding
roles                                         rbac.authorization.k8s.io      true        Role
priorityclasses                   pc          scheduling.k8s.io              false       PriorityClass
csidrivers                                    storage.k8s.io                 false       CSIDriver
csinodes                                      storage.k8s.io                 false       CSINode
storageclasses                    sc          storage.k8s.io                 false       StorageClass
volumeattachments                             storage.k8s.io                 false       VolumeAttachment

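Ways of working with kubectl:

- Imperative: configure resources with commands (create, delete, …).

- Declarative: write the configuration in a manifest file and let Kubernetes apply it (kubectl apply -f xxx.yaml).

Official API reference: https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.16/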

Creating resources:

apply vs. create:

[root@k8smaster ~]# mkdir /data

[root@k8smaster ~]# cd /data/

[root@k8smaster data]# vim develop-ns.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: develop

[root@k8smaster data]# kubectl create -f develop-ns.yaml

namespace/develop created

[root@k8smaster data]# kubectl get ns

NAME STATUS AGE

default Active 36d

develop Active 31s

kube-node-lease Active 36d

kube-public Active 36d

kube-system Active 36d

[root@k8smaster data]# cp develop-ns.yaml sample-ns.yaml

[root@k8smaster data]# vim sample-ns.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: sample

[root@k8smaster data]# kubectl apply -f sample-ns.yaml

namespace/sample created

[root@k8smaster data]# kubectl get ns

NAME STATUS AGE

default Active 36d

develop Active 2m7s

kube-node-lease Active 36d

kube-public Active 36d

kube-system Active 36d

sample Active 4s

[root@k8smaster data]# kubectl create -f develop-ns.yaml

Error from server (AlreadyExists): error when creating "develop-ns.yaml": namespaces "develop" already exists

[root@k8smaster data]# kubectl apply -f sample-ns.yaml

namespace/sample unchanged

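- create: cannot create a resource that already exists; running it again fails with an AlreadyExists error.

- apply: applies the configuration. If the resource does not exist, it is created; if the live resource differs from the manifest, the manifest's settings are applied. It can be run repeatedly, and it can also apply every manifest in a directory (kubectl apply -f <dir>/).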
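The difference between the two verbs can be modelled with a toy in-memory store. This is a hypothetical Python sketch and not how the API server is implemented. Real `kubectl apply` computes a patch, using the kubectl.kubernetes.io/last-applied-configuration annotation that appears later in this article, rather than replacing the object wholesale.

```python
class AlreadyExists(Exception):
    """Raised by the toy server when create targets an existing object."""


class ToyAPIServer:
    """Hypothetical in-memory stand-in for the API server, for illustration only."""

    def __init__(self):
        self.objects = {}  # (kind, name) -> manifest dict

    @staticmethod
    def _key(obj):
        return obj["kind"], obj["metadata"]["name"]

    def create(self, obj):
        key = self._key(obj)
        if key in self.objects:
            raise AlreadyExists(f'{key[0].lower()}s "{key[1]}" already exists')
        self.objects[key] = obj
        return "created"

    def apply(self, obj):
        key = self._key(obj)
        if key not in self.objects:
            self.objects[key] = obj
            return "created"
        if self.objects[key] == obj:
            return "unchanged"
        # Real kubectl apply computes a merge patch; this sketch simply replaces.
        self.objects[key] = obj
        return "configured"


api = ToyAPIServer()
develop = {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": "develop"}}
sample = {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": "sample"}}
print(api.create(develop))  # created
print(api.apply(sample))    # created
print(api.apply(sample))    # unchanged
create_error = None
try:
    api.create(develop)
except AlreadyExists as err:
    create_error = str(err)
    print("Error:", create_error)  # Error: namespaces "develop" already exists
```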

Output:

Print a Pod's configuration as a YAML template:

[root@k8smaster data]# kubectl get pods/nginx-deployment-6f77f65499-8g24d -o yaml --export

Flag --export has been deprecated, This flag is deprecated and will be removed in future.

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  generateName: nginx-deployment-6f77f65499-
  labels:
    app: nginx-deployment
    pod-template-hash: 6f77f65499
  ownerReferences:
  - apiVersion: apps/v1
    blockOwnerDeletion: true
    controller: true
    kind: ReplicaSet
    name: nginx-deployment-6f77f65499
    uid: c22cc3e8-8fbe-420f-b517-5a472ba1ddef
  selfLink: /api/v1/namespaces/default/pods/nginx-deployment-6f77f65499-8g24d
spec:
  containers:
  - image: nginx
    imagePullPolicy: Always
    name: nginx
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: default-token-kk2fq
      readOnly: true
  dnsPolicy: ClusterFirst
  enableServiceLinks: true
  nodeName: k8snode1
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  volumes:
  - name: default-token-kk2fq
    secret:
      defaultMode: 420
      secretName: default-token-kk2fq
status:
  phase: Pending
  qosClass: BestEffort

Running multiple containers in one Pod:

[root@k8smaster data]# vim pod-demo.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-demo
  namespace: default
spec:
  containers:
  - name: bbox
    image: busybox:latest
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh","-c","sleep 86400"]
  - name: myapp
    image: ikubernetes/myapp:v1

[root@k8smaster data]# kubectl apply -f pod-demo.yaml

pod/pod-demo created

[root@k8smaster data]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myapp-deployment-558f94fb55-plk4v 1/1 Running 1 33d

myapp-deployment-558f94fb55-rd8f5 1/1 Running 1 33d

myapp-deployment-558f94fb55-zzmpg 1/1 Running 1 33d

nginx-deployment-6f77f65499-8g24d 1/1 Running 1 33d

pod-demo 2/2 Running 0 83s

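Entering a container:

kubectl exec

- pod-demo: the name of the Pod.

- -c: when a Pod has more than one container, use -c with the container name to choose which container to enter.

- -n: the namespace.

- -it: open an interactive terminal (-i keeps stdin open, -t allocates a TTY).

- -- /bin/sh: the command to run in the container (here a shell); everything after -- is passed to the container.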

[root@k8smaster data]# kubectl exec pod-demo -c bbox -n default -it -- /bin/sh

/ # ifconfig

eth0 Link encap:Ethernet HWaddr DE:5A:59:84:21:8D

inet addr:10.244.1.105 Bcast:0.0.0.0 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1450 Metric:1

RX packets:13 errors:0 dropped:0 overruns:0 frame:0

TX packets:1 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:978 (978.0 B) TX bytes:42 (42.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

The IP shown inside the bbox container is the Pod's IP. All containers in a Pod share one network namespace, and therefore one IP address.

/ # netstat -tnl

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN

/ # ps aux

PID USER TIME COMMAND

1 root 0:00 sleep 86400

6 root 0:00 /bin/sh

13 root 0:00 ps aux

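netstat -tnl shows a listener on port 80. Nothing in bbox listens on port 80 (ps aux lists only sleep and the shell), so this is the myapp container's port 80. This confirms that all containers in a Pod share the same network stack and IP.

/ # wget -O - -q 127.0.0.1   # requesting the local port returns the myapp page.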

Hello MyApp | Version: v1 | Pod Name

/ # exit

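Viewing a specific container's logs:

kubectl logs:

- pod-demo: the name of the Pod.

- -c: when a Pod has more than one container, use -c with the container name to choose whose logs to show.

- -n: the namespace.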

[root@k8smaster data]# kubectl logs pod-demo -n default -c myapp

127.0.0.1 - - [04/Dec/2019:06:36:04 +0000] "GET / HTTP/1.1" 200 65 "-" "Wget" "-"

127.0.0.1 - - [04/Dec/2019:06:36:11 +0000] "GET / HTTP/1.1" 200 65 "-" "Wget" "-"

127.0.0.1 - - [04/Dec/2019:06:36:17 +0000] "GET / HTTP/1.1" 200 65 "-" "Wget" "-"

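Sharing the node's network with the Pod's containers (hostNetwork):

Not recommended in production. Every container binds directly to the node's ports, so port conflicts become likely as the number of containers grows.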

[root@k8smaster data]# vim host-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: mypod
  namespace: default
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
  hostNetwork: true

[root@k8smaster data]# kubectl apply -f host-pod.yaml

pod/mypod created

[root@k8smaster data]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myapp-deployment-558f94fb55-plk4v 1/1 Running 1 33d 10.244.2.94 k8snode2

myapp-deployment-558f94fb55-rd8f5 1/1 Running 1 33d 10.244.2.95 k8snode2

myapp-deployment-558f94fb55-zzmpg 1/1 Running 1 33d 10.244.1.104 k8snode1

mypod 1/1 Running 0 9s 192.168.43.176 k8snode2

nginx-deployment-6f77f65499-8g24d 1/1 Running 1 33d 10.244.1.103 k8snode1

pod-demo 2/2 Running 0 26m 10.244.1.105 k8snode1

[root@k8smaster data]# curl 192.168.43.176:80

Hello MyApp | Version: v1 | Pod Name

hostPort:

[root@k8smaster data]# kubectl delete -f host-pod.yaml

pod "mypod" deleted

[root@k8smaster data]# vim host-pod.yaml   # map the container's port 80 to port 8080 on the node where the container runs.

apiVersion: v1
kind: Pod
metadata:
  name: mypod
  namespace: default
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    ports:
    - protocol: TCP
      containerPort: 80
      name: http
      hostPort: 8080

[root@k8smaster data]# kubectl apply -f host-pod.yaml

pod/mypod created

[root@k8smaster data]# kubectl get pods -o wide   # mypod runs on k8snode2, so access port 8080 on node2 directly.

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myapp-deployment-558f94fb55-plk4v 1/1 Running 1 33d 10.244.2.94 k8snode2

myapp-deployment-558f94fb55-rd8f5 1/1 Running 1 33d 10.244.2.95 k8snode2

myapp-deployment-558f94fb55-zzmpg 1/1 Running 1 33d 10.244.1.104 k8snode1

mypod 1/1 Running 0 23s 10.244.2.96 k8snode2

nginx-deployment-6f77f65499-8g24d 1/1 Running 1 33d 10.244.1.103 k8snode1

pod-demo 2/2 Running 0 37m 10.244.1.105 k8snode1

[root@k8smaster data]# curl 192.168.43.176:8080

Hello MyApp | Version: v1 | Pod Name

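Ways to make a Pod reachable from outside the cluster:

- Containers/hostPort: port mapping; maps a container port to a port on the node running that container.

- hostNetwork: the Pod shares the node's network.

- NodePort (a Service type): opens the same port on every node; any traffic sent to that port is forwarded to the Service. The default port range is 30000-32767.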

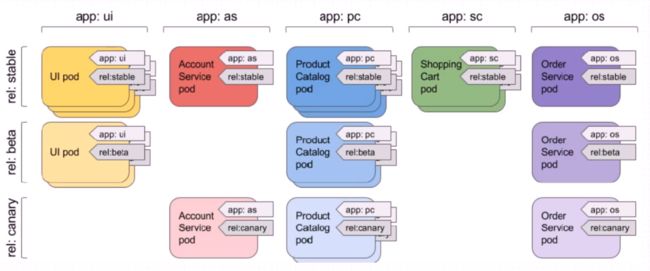

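Labels:

Labels are key-value data. They can be specified when a resource is created, or added to live objects at any time. Label selectors then match against them to pick out resources.

- An object can carry more than one label, and the same label can be attached to many resources.

- In practice, you can attach labels along several different dimensions for flexible grouping, such as version, environment and architecture-tier labels. These cross-identify the version, environment and tier each resource belongs to.

- A label key consists of an optional prefix and a name, in the form "KEY_PREFIX/KEY_NAME".

- The key name can be at most 63 characters. It may contain letters, digits, hyphens, underscores and dots, and must begin and end with a letter or digit.

- The key prefix must be a DNS subdomain of at most 253 characters. A key without a prefix is treated as private to the user. Keys that Kubernetes system components or third-party components add automatically to user resources must carry a prefix. The "kubernetes.io/" and "k8s.io/" prefixes are reserved for Kubernetes core components.

- A label value can be at most 63 characters. It must be either empty, or begin and end with a letter or digit with only letters, digits, hyphens, underscores and dots in between.

- rel: the release track, for example:

  - stable: stable release.

  - beta: beta release.

  - canary: canary release.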
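The key and value rules above can be expressed as regular expressions. The following Python sketch assumes the rules exactly as listed. The regexes are a transcription of those rules, not code copied from Kubernetes, and the function names are hypothetical.

```python
import re

# Name segment / value: letters, digits, '-', '_', '.', alphanumeric at both ends.
_NAME = re.compile(r"[A-Za-z0-9]([-A-Za-z0-9_.]*[A-Za-z0-9])?")
# DNS subdomain: dot-separated lowercase labels, each alphanumeric at both ends
# (the 63-character limit per DNS label is not checked here).
_DNS_LABEL = r"[a-z0-9]([-a-z0-9]*[a-z0-9])?"
_PREFIX = re.compile(rf"{_DNS_LABEL}(\.{_DNS_LABEL})*")


def valid_label_key(key):
    """Check 'NAME' or 'PREFIX/NAME' against the label-key rules."""
    prefix, slash, name = key.rpartition("/")
    if slash and (len(prefix) > 253 or not _PREFIX.fullmatch(prefix)):
        return False
    return len(name) <= 63 and _NAME.fullmatch(name) is not None


def valid_label_value(value):
    """A value is either empty or a valid name segment of at most 63 characters."""
    return value == "" or (len(value) <= 63 and _NAME.fullmatch(value) is not None)


for k in ("app", "rel", "app.kubernetes.io/name", "-app", "Example.COM/x"):
    print(k, valid_label_key(k))
```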

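Label selectors:

A label selector expresses a query condition or selection criterion over labels. The Kubernetes API currently supports two kinds of selectors.

- Equality-based

  - The operators are =, == and !=. The first two are equivalent and mean "equal to"; the last means "not equal to".

- Set-based

  - KEY in (VALUE1,VALUE2…)

  - KEY notin (VALUE1,VALUE2…)

  - KEY: all resources that have a label with this key.

  - !KEY: all resources that do not have a label with this key.

Logic followed when selecting by labels:

- Multiple requirements specified together are combined with logical AND.

- An empty label selector (one with no requirements) selects every resource object.

- A null (unset) label selector selects no resources.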
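The equality-based and set-based forms accepted by `kubectl get -l` can be mimicked in a few lines. The Python sketch below is a simplified, hypothetical evaluator: it does no syntax validation, and the function names are made up. It reproduces the results of the filtering examples later in this article. That includes the fact that `!=` and `notin` also match Pods that lack the key entirely, as mypod does.

```python
import re

_SET_RE = re.compile(r"(\S+)\s+(in|notin)\s*\((.*)\)")


def _split_top_level(selector):
    """Split on commas that are not inside parentheses."""
    parts, depth, cur = [], 0, []
    for ch in selector:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
        if ch == "," and depth == 0:
            parts.append("".join(cur).strip())
            cur = []
        else:
            cur.append(ch)
    parts.append("".join(cur).strip())
    return [p for p in parts if p]


def _matches(req, labels):
    m = _SET_RE.fullmatch(req)
    if m:
        key, op = m.group(1), m.group(2)
        values = {v.strip() for v in m.group(3).split(",")}
        if op == "in":
            return labels.get(key) in values
        return labels.get(key) not in values  # notin also matches a missing key
    if req.startswith("!"):
        return req[1:].strip() not in labels  # !KEY: key must be absent
    if "!=" in req:
        key, val = (s.strip() for s in req.split("!=", 1))
        return labels.get(key) != val  # != also matches a missing key
    if "=" in req:
        key, val = (s.strip() for s in re.split(r"==?", req, maxsplit=1))
        return labels.get(key) == val
    return req in labels  # bare KEY: key must exist


def select(selector, labels):
    """True if a label dict satisfies every requirement (logical AND)."""
    return all(_matches(r, labels) for r in _split_top_level(selector))


pods = {
    "pod-demo": {"app": "myapp", "tier": "frontend"},
    "mypod": {},
    "nginx-deployment-6f77f65499-8g24d": {"app": "nginx-deployment", "pod-template-hash": "6f77f65499"},
}
for sel in ("app=myapp", "app!=myapp", "app notin (nginx-deployment,myapp-deployment)", "!app"):
    print(sel, "->", sorted(name for name, labels in pods.items() if select(sel, labels)))
```

An empty selector string yields no requirements, so `select("", labels)` is true for every object, matching the "empty selector selects everything" rule.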

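Defining label selectors in manifests:

Many Kubernetes resource objects must be associated with Pod objects through a label selector, for example Service, Deployment and ReplicaSet resources. Deployment and ReplicaSet nest a "selector" field inside spec and specify the selector with "matchLabels"; some resources also support "matchExpressions" for more complex selection. A Service's spec.selector, by contrast, is a plain map of key-value pairs.

- matchLabels: specifies the selector directly as key-value pairs.

- matchExpressions: a list of expression-based selectors, each of the form "{key: KEY_NAME, operator: OPERATOR, values: [VALUE1,VALUE2…]}". The selectors in the list are combined with logical AND.

- With the In or NotIn operators, values must be a non-empty list of strings; with Exists or DoesNotExist, values must be empty.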
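A Deployment- or ReplicaSet-style selector combines matchLabels and matchExpressions with AND. Below is a hypothetical Python evaluator for such a selector written as a dict, the same shape the YAML takes. It enforces the values rules for each operator. It is a sketch, not the Kubernetes implementation; `None` stands for a null selector and `{}` for an empty one.

```python
def match_selector(selector, labels):
    """Evaluate a matchLabels/matchExpressions selector against a label dict.

    None models a null selector (selects nothing); {} is an empty selector
    (selects everything). All requirements are ANDed together.
    """
    if selector is None:
        return False
    for key, value in selector.get("matchLabels", {}).items():
        if labels.get(key) != value:
            return False
    for expr in selector.get("matchExpressions", []):
        key, op, values = expr["key"], expr["operator"], expr.get("values", [])
        if op in ("In", "NotIn") and not values:
            raise ValueError(f"{op} requires a non-empty values list")
        if op in ("Exists", "DoesNotExist") and values:
            raise ValueError(f"{op} requires values to be empty")
        if op == "In":
            ok = key in labels and labels[key] in values
        elif op == "NotIn":
            ok = labels.get(key) not in values  # a missing key also satisfies NotIn
        elif op == "Exists":
            ok = key in labels
        elif op == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"unknown operator: {op}")
        if not ok:
            return False
    return True


selector = {
    "matchLabels": {"app": "myapp"},
    "matchExpressions": [
        {"key": "tier", "operator": "In", "values": ["frontend", "backend"]},
        {"key": "rel", "operator": "DoesNotExist"},
    ],
}
print(match_selector(selector, {"app": "myapp", "tier": "frontend"}))                  # True
print(match_selector(selector, {"app": "myapp", "tier": "frontend", "rel": "stable"}))  # False
```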

Managing labels (create, modify, delete):

[root@k8smaster ~]# kubectl get pods --show-labels   # show the Pods' labels.

NAME READY STATUS RESTARTS AGE LABELS

myapp-deployment-558f94fb55-plk4v 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

myapp-deployment-558f94fb55-rd8f5 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

myapp-deployment-558f94fb55-zzmpg 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

mypod 1/1 Running 1 45h

nginx-deployment-6f77f65499-8g24d 1/1 Running 2 35d app=nginx-deployment,pod-template-hash=6f77f65499

pod-demo 2/2 Running 2 46h

[root@k8smaster data]# vim pod-demo.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-demo
  namespace: default
  labels:
    app: pod-demo   # define an app label with the value pod-demo.
    rel: stable     # define a rel label with the value stable.
spec:
  containers:
  - name: bbox
    image: busybox:latest
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh","-c","sleep 86400"]
  - name: myapp
    image: ikubernetes/myapp:v1

[root@k8smaster data]# kubectl apply -f pod-demo.yaml   # add the labels declaratively.

pod/pod-demo configured

[root@k8smaster data]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

myapp-deployment-558f94fb55-plk4v 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

myapp-deployment-558f94fb55-rd8f5 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

myapp-deployment-558f94fb55-zzmpg 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

mypod 1/1 Running 1 46h

nginx-deployment-6f77f65499-8g24d 1/1 Running 2 35d app=nginx-deployment,pod-template-hash=6f77f65499

pod-demo 2/2 Running 2 46h app=pod-demo,rel=stable

[root@k8smaster data]# kubectl label pods pod-demo -n default tier=frontend   # add a label imperatively.

pod/pod-demo labeled

[root@k8smaster data]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

myapp-deployment-558f94fb55-plk4v 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

myapp-deployment-558f94fb55-rd8f5 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

myapp-deployment-558f94fb55-zzmpg 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

mypod 1/1 Running 1 46h

nginx-deployment-6f77f65499-8g24d 1/1 Running 2 35d app=nginx-deployment,pod-template-hash=6f77f65499

pod-demo 2/2 Running 2 47h app=pod-demo,rel=stable,tier=frontend

[root@k8smaster data]# kubectl label pods pod-demo -n default --overwrite app=myapp   # overwrite (modify) an existing label.

pod/pod-demo labeled

[root@k8smaster data]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

myapp-deployment-558f94fb55-plk4v 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

myapp-deployment-558f94fb55-rd8f5 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

myapp-deployment-558f94fb55-zzmpg 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

mypod 1/1 Running 1 46h

nginx-deployment-6f77f65499-8g24d 1/1 Running 2 35d app=nginx-deployment,pod-template-hash=6f77f65499

pod-demo 2/2 Running 2 47h app=myapp,rel=stable,tier=frontend

[root@k8smaster data]# kubectl label pods pod-demo -n default rel-   # delete a label by appending a minus sign to its key.

pod/pod-demo labeled

[root@k8smaster data]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

myapp-deployment-558f94fb55-plk4v 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

myapp-deployment-558f94fb55-rd8f5 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

myapp-deployment-558f94fb55-zzmpg 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

mypod 1/1 Running 1 46h

nginx-deployment-6f77f65499-8g24d 1/1 Running 2 35d app=nginx-deployment,pod-template-hash=6f77f65499

pod-demo 2/2 Running 2 47h app=myapp,tier=frontend

Filtering resources by label:

# Pods whose app label equals myapp.

[root@k8smaster data]# kubectl get pods -n default -l app=myapp

NAME READY STATUS RESTARTS AGE

pod-demo 2/2 Running 2 47h

# Pods whose app label is not myapp (Pods without an app label, such as mypod, also match).

[root@k8smaster data]# kubectl get pods -n default -l app!=myapp --show-labels

NAME READY STATUS RESTARTS AGE LABELS

myapp-deployment-558f94fb55-plk4v 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

myapp-deployment-558f94fb55-rd8f5 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

myapp-deployment-558f94fb55-zzmpg 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

mypod 1/1 Running 1 46h

nginx-deployment-6f77f65499-8g24d 1/1 Running 2 35d app=nginx-deployment,pod-template-hash=6f77f65499

# Pods whose app label is nginx-deployment or myapp-deployment.

[root@k8smaster data]# kubectl get pods -n default -l "app in (nginx-deployment,myapp-deployment)" --show-labels

NAME READY STATUS RESTARTS AGE LABELS

myapp-deployment-558f94fb55-plk4v 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

myapp-deployment-558f94fb55-rd8f5 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

myapp-deployment-558f94fb55-zzmpg 1/1 Running 2 35d app=myapp-deployment,pod-template-hash=558f94fb55

nginx-deployment-6f77f65499-8g24d 1/1 Running 2 35d app=nginx-deployment,pod-template-hash=6f77f65499

# -L: show the app label as an extra column.

[root@k8smaster data]# kubectl get pods -n default -l "app in (nginx-deployment,myapp-deployment)" -L app

NAME READY STATUS RESTARTS AGE APP

myapp-deployment-558f94fb55-plk4v 1/1 Running 2 35d myapp-deployment

myapp-deployment-558f94fb55-rd8f5 1/1 Running 2 35d myapp-deployment

myapp-deployment-558f94fb55-zzmpg 1/1 Running 2 35d myapp-deployment

nginx-deployment-6f77f65499-8g24d 1/1 Running 2 35d nginx-deployment

# Pods whose app label is neither nginx-deployment nor myapp-deployment (Pods without an app label also match).

[root@k8smaster data]# kubectl get pods -n default -l "app notin (nginx-deployment,myapp-deployment)" -L app

NAME READY STATUS RESTARTS AGE APP

mypod 1/1 Running 1 47h

pod-demo 2/2 Running 2 47h myapp

# Pods that have an app label.

[root@k8smaster data]# kubectl get pods -n default -l "app" -L app

NAME READY STATUS RESTARTS AGE APP

myapp-deployment-558f94fb55-plk4v 1/1 Running 2 35d myapp-deployment

myapp-deployment-558f94fb55-rd8f5 1/1 Running 2 35d myapp-deployment

myapp-deployment-558f94fb55-zzmpg 1/1 Running 2 35d myapp-deployment

nginx-deployment-6f77f65499-8g24d 1/1 Running 2 35d nginx-deployment

pod-demo 2/2 Running 2 47h myapp

# Pods that do not have an app label.

[root@k8smaster data]# kubectl get pods -n default -l '!app' -L app

NAME READY STATUS RESTARTS AGE APP

mypod 1/1 Running 1 47h

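Resource annotations:

Annotations are also key-value data, but they cannot be used to label and select Kubernetes objects. They only attach "metadata" to a resource.

Annotation values are not subject to the 63-character limit that applies to labels; the total size of all annotations on one object is capped at 256 KiB. A value can be large or small, structured or unstructured, and may contain characters that are forbidden in labels.

When a new Kubernetes version introduces a new field for a resource while the feature is still Alpha or Beta, the field is often exposed as an annotation first, so that adding or removing it does not disrupt users. Once support is confirmed, the field is added to the resource proper and the related annotation is retired.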
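Because an annotation value is just an arbitrary string, tools can store structured data in it. For example, `kubectl apply` records the applied manifest as JSON in the kubectl.kubernetes.io/last-applied-configuration annotation, visible in the describe output below. The Python sketch serializes the pod-demo manifest as compact JSON with sorted keys. That format is an assumption, chosen because it reproduces the visible prefix of the annotation; it is not a documented kubectl contract.

```python
import json

# The pod-demo manifest from pod-demo.yaml, written as a dict.
pod_demo = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {
        "name": "pod-demo",
        "namespace": "default",
        "labels": {"app": "pod-demo", "rel": "stable"},
        "annotations": {"ik8s.io/project": "hello"},
    },
    "spec": {
        "containers": [
            {
                "name": "bbox",
                "image": "busybox:latest",
                "imagePullPolicy": "IfNotPresent",
                "command": ["/bin/sh", "-c", "sleep 86400"],
            },
            {"name": "myapp", "image": "ikubernetes/myapp:v1"},
        ]
    },
}

# Assumed format: compact JSON with sorted keys.
last_applied = json.dumps(pod_demo, sort_keys=True, separators=(",", ":"))
print(last_applied[:100])

# The value is full of quotes, braces and colons, which label values may not
# contain, yet it is a perfectly valid annotation value.
annotations = {"kubectl.kubernetes.io/last-applied-configuration": last_applied}
```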

[root@k8smaster data]# vim pod-demo.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-demo
  namespace: default
  labels:
    app: pod-demo
    rel: stable
  annotations:
    ik8s.io/project: hello
spec:
  containers:
  - name: bbox
    image: busybox:latest
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh","-c","sleep 86400"]
  - name: myapp
    image: ikubernetes/myapp:v1

[root@k8smaster data]# kubectl apply -f pod-demo.yaml

pod/pod-demo configured

[root@k8smaster data]# kubectl describe pods pod-demo -n default

Name: pod-demo

Namespace: default

Priority: 0

Node: k8snode1/192.168.43.136

Start Time: Wed, 04 Dec 2019 14:20:01 +0800

Labels: app=pod-demo

rel=stable

tier=frontend

Annotations: ik8s.io/project: hello

kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{"ik8s.io/project":"hello"},"labels":{"app":"pod-demo","rel":"stable"},"name":"p...

Status: Running

IP: 10.244.1.106

IPs:

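Pod lifecycle:

Phases:

- Pending: the Pod has been accepted by the Kubernetes system, but one or more of its containers has not yet been created. The Pod can run only after it has been scheduled and its images have been downloaded.

- Running: the Pod has been bound to a node and all of its containers have been created. At least one container is running, or is starting or restarting.

- Succeeded: all containers in the Pod terminated successfully and will not be restarted.

- Failed: all containers in the Pod have terminated, and at least one of them terminated in failure.

- Unknown: the Pod's state cannot be obtained for some reason, typically because communication with the Pod's host failed.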

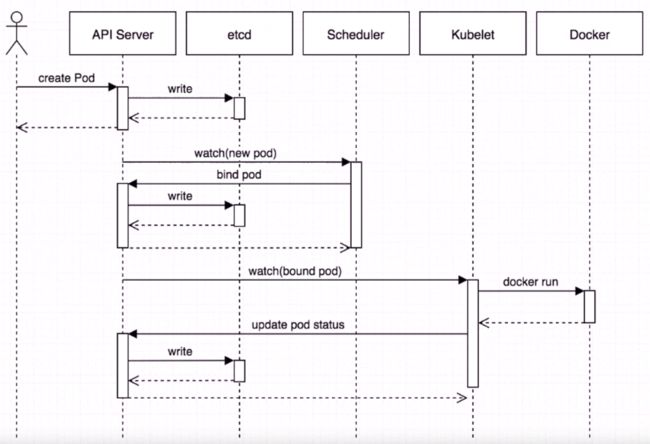
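The phase definitions above can be read as a small decision procedure. Below is a simplified Python model. It is hypothetical: the container-state names and the function are made up for illustration, and the kubelet's real logic handles more cases, such as init containers.

```python
from enum import Enum


class Phase(Enum):
    PENDING = "Pending"
    RUNNING = "Running"
    SUCCEEDED = "Succeeded"
    FAILED = "Failed"
    UNKNOWN = "Unknown"


def will_restart(container, restart_policy):
    """Would the restart policy bring this terminated container back?"""
    if container["state"] != "terminated":
        return False
    if restart_policy == "Always":
        return True
    return restart_policy == "OnFailure" and container["exit_code"] != 0


def pod_phase(containers, scheduled=True, node_reachable=True, restart_policy="Always"):
    """containers: dicts with "state" in {"not_created", "running", "terminated"}
    and, for terminated containers, an "exit_code"."""
    if not node_reachable:
        return Phase.UNKNOWN
    if not scheduled or any(c["state"] == "not_created" for c in containers):
        return Phase.PENDING
    finished = all(
        c["state"] == "terminated" and not will_restart(c, restart_policy)
        for c in containers
    )
    if not finished:
        return Phase.RUNNING  # something is running, or is about to be restarted
    if all(c["exit_code"] == 0 for c in containers):
        return Phase.SUCCEEDED
    return Phase.FAILED


print(pod_phase([{"state": "running"}, {"state": "running"}]).value)  # Running
```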

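Pod startup process:

- The user creates a Pod with a command or a YAML file, and the request is submitted to the API Server.

- The API Server stores the Pod's configuration in etcd.

- The Scheduler, which watches the API Server, is notified of the new, unscheduled Pod.

- The Scheduler selects a target node using its scheduling algorithm.

- The API Server stores the scheduling result (the chosen node) in etcd.

- The kubelet on the chosen node, which also watches the API Server, receives the Pod's configuration and hands it to the container runtime (the Docker engine here).

- The Docker engine starts the containers, and the kubelet reports their status back to the API Server.

- The API Server saves the Pod's actual state in etcd.

- From then on, whenever the actual state and the desired state diverge, these components work together again to reconcile them.

- Container initialization is completed.

- After the main container starts, the postStart hook runs.

- While the main container is running, probes (livenessProbe and readinessProbe) check it.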

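Container probes:

- livenessProbe: indicates whether the container is running. If the liveness probe fails, the kubelet kills the container, and the container is then subject to its restart policy. If restarts keep failing, the kubelet keeps restarting it with an increasing delay between attempts until it succeeds. If a container does not provide a liveness probe, the default state is Success.

- readinessProbe: indicates whether the container is ready to serve requests. If the readiness probe fails, the endpoints controller removes the Pod's IP address from the endpoints of every Service that matches the Pod. The readiness state before the initial delay defaults to Failure. If a container does not provide a readiness probe, the default state is Success.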
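Two details of these bullets can be made concrete. First, according to the Kubernetes documentation, the "increasing delay" between restarts is an exponential back-off. It starts at 10 s, doubles each time, is capped at five minutes, and is reset after a container has run for 10 minutes without problems. Second, a failing readiness probe does not restart anything; it only removes the Pod from Service endpoints. The Python sketch below models both. The function names and the Pod dicts are hypothetical.

```python
def restart_delays(restarts, base=10, cap=300):
    """Back-off delay in seconds before each of the first `restarts` restarts."""
    return [min(base * 2 ** i, cap) for i in range(restarts)]


def service_endpoints(pods, selector):
    """IPs a Service would route to: Pods that match the selector AND are Ready."""
    return [
        pod["ip"]
        for pod in pods
        if all(pod["labels"].get(k) == v for k, v in selector.items()) and pod["ready"]
    ]


print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]

pods = [
    {"ip": "10.244.2.94", "labels": {"app": "myapp"}, "ready": True},
    {"ip": "10.244.2.95", "labels": {"app": "myapp"}, "ready": False},  # readiness failing
    {"ip": "10.244.1.103", "labels": {"app": "nginx"}, "ready": True},
]
print(service_endpoints(pods, {"app": "myapp"}))  # ['10.244.2.94']
```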

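The three probe handlers:

- ExecAction: runs a specified command inside the container. The diagnostic succeeds if the command exits with status code 0.

- HTTPGetAction: performs an HTTP GET request against the container's IP address on a specified port and path. The diagnostic succeeds if the response status code is at least 200 and less than 400.

- TCPSocketAction: performs a TCP check against the container's IP address on a specified port. The diagnostic succeeds if the port is open.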
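The three handlers boil down to simple checks. Below is a Python sketch of their success rules. It runs the checks on the local machine rather than inside a container. The 1-second default timeout mirrors the probe's default timeoutSeconds. The function names are hypothetical.

```python
import socket
import subprocess
import sys


def exec_probe(argv, timeout=1.0):
    """ExecAction: success iff the command exits with status code 0."""
    try:
        return subprocess.run(argv, timeout=timeout).returncode == 0
    except (subprocess.TimeoutExpired, OSError):
        return False  # a hung or missing command counts as a failure here


def http_status_ok(status):
    """HTTPGetAction rule: 200 <= status < 400 counts as success."""
    return 200 <= status < 400


def tcp_probe(host, port, timeout=1.0):
    """TCPSocketAction: success iff a TCP connection can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Demo: exec with a command that exits 0 and one that exits 1.
exec_ok = exec_probe([sys.executable, "-c", "raise SystemExit(0)"], timeout=10)
exec_bad = exec_probe([sys.executable, "-c", "raise SystemExit(1)"], timeout=10)

# Demo: TCP check against a throwaway listener on an ephemeral localhost port.
with socket.socket() as server:
    server.bind(("127.0.0.1", 0))
    server.listen()
    tcp_ok = tcp_probe("127.0.0.1", server.getsockname()[1])

print(exec_ok, exec_bad, http_status_ok(301), http_status_ok(404), tcp_ok)
```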

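Probe results:

- Success: the container passed the diagnostic.

- Failure: the container failed the diagnostic.

- Unknown: the diagnostic itself failed, so no action is taken.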

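Liveness checks with livenessProbe:

ExecAction example:

The container creates a check file with touch, and the probe uses the test command to verify that the file exists. If it exists, the container is considered alive.

After creating the file, the container sleeps for 30 seconds and then deletes it. From then on, test returns 1 and the probe fails. After failureThreshold consecutive failures (3 by default), the kubelet considers the container dead and restarts it according to the restart policy. The restarted container creates the file again, test returns 0, and the restart is confirmed to have succeeded.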
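How soon the restart happens depends on the probe timing fields. The hypothetical Python helper below steps through the probe times (initialDelaySeconds, then every periodSeconds). It returns the moment when failureThreshold consecutive failures have accumulated. It assumes every probe at or after a given moment fails and ignores the jitter the kubelet adds, so real timings differ by a few seconds. This Pod's settings are delay 0 s, period 10 s and threshold 3, as shown by describe below. With the file disappearing 30 s after start, the helper predicts a restart decision at about 50 s.

```python
def restart_decision_time(fail_from, period=10, initial_delay=0, failure_threshold=3):
    """Seconds after container start at which the kubelet would decide to restart.

    Simplified: probes run at initial_delay, initial_delay + period, ...;
    every probe at or after `fail_from` fails, every earlier one succeeds.
    """
    t, consecutive_failures = initial_delay, 0
    while True:
        if t >= fail_from:
            consecutive_failures += 1
            if consecutive_failures >= failure_threshold:
                return t
        else:
            consecutive_failures = 0  # a success resets the failure count
        t += period


# liveness-exec: /tmp/healthy is removed 30 s after start; default probe settings.
print(restart_decision_time(30))  # 50
# liveness-http settings (delay=3s, period=2s, failure=2), file deleted at t=100 s.
print(restart_decision_time(100, period=2, initial_delay=3, failure_threshold=2))  # 103
```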

[root@k8smaster data]# git clone https://github.com/iKubernetes/Kubernetes_Advanced_Practical.git

Cloning into 'Kubernetes_Advanced_Practical'...

remote: Enumerating objects: 489, done.

remote: Total 489 (delta 0), reused 0 (delta 0), pack-reused 489

Receiving objects: 100% (489/489), 148.75 KiB | 110.00 KiB/s, done.

Resolving deltas: 100% (122/122), done.

[root@k8smaster chapter4]# cat liveness-exec.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    test: liveness-exec
  name: liveness-exec
spec:
  containers:
  - name: liveness-demo
    image: busybox
    args:
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
    livenessProbe:
      exec:
        command:
        - test
        - -e
        - /tmp/healthy

[root@k8smaster chapter4]# kubectl describe pods liveness-exec

Name: liveness-exec

Namespace: default

Priority: 0

Node: k8snode2/192.168.43.176

Start Time: Fri, 06 Dec 2019 15:27:55 +0800

Labels: test=liveness-exec

Annotations: kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"labels":{"test":"liveness-exec"},"name":"liveness-exec","namespace":"default...

Status: Running

IP: 10.244.2.100

IPs:

Containers:

liveness-demo:

Container ID: docker://f91cec7c45f5a025e049b2d2e0b1dc15593e9c35f183a9a9aa8e09d40f22df4f

Image: busybox

Image ID: docker-pullable://busybox@sha256:24fd20af232ca4ab5efbf1aeae7510252e2b60b15e9a78947467340607cd2ea2

Port:

Host Port:

Args:

/bin/sh

-c

touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600

State: Running

Started: Fri, 06 Dec 2019 15:28:02 +0800

Ready: True

Restart Count: 0   # the restart count is 0 at this point; wait 30 seconds and check again.

Liveness: exec [test -e /tmp/healthy] delay=0s timeout=1s period=10s #success=1 #failure=3

Environment:

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-kk2fq (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-kk2fq:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-kk2fq

Optional: false

QoS Class: BestEffort

Node-Selectors:

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 43s default-scheduler Successfully assigned default/liveness-exec to k8snode2

Normal Pulling 42s kubelet, k8snode2 Pulling image "busybox"

Normal Pulled 36s kubelet, k8snode2 Successfully pulled image "busybox"

Normal Created 36s kubelet, k8snode2 Created container liveness-demo

Normal Started 36s kubelet, k8snode2 Started container liveness-demo

Warning Unhealthy 4s kubelet, k8snode2 Liveness probe failed:

[root@k8smaster chapter4]# kubectl describe pods liveness-exec

Name: liveness-exec

Namespace: default

Priority: 0

Node: k8snode2/192.168.43.176

Start Time: Fri, 06 Dec 2019 15:27:55 +0800

Labels: test=liveness-exec

Annotations: kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"labels":{"test":"liveness-exec"},"name":"liveness-exec","namespace":"default...

Status: Running

IP: 10.244.2.100

IPs:

Containers:

liveness-demo:

Container ID: docker://96f7dfd4ef1df503152542fdd1336fd0153773fb7dde3ed32f4388566888d6f0

Image: busybox

Image ID: docker-pullable://busybox@sha256:24fd20af232ca4ab5efbf1aeae7510252e2b60b15e9a78947467340607cd2ea2

Port:

Host Port:

Args:

/bin/sh

-c

touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600

State: Running

Started: Fri, 06 Dec 2019 15:29:25 +0800

Last State: Terminated

Reason: Error

Exit Code: 137

Started: Fri, 06 Dec 2019 15:28:02 +0800

Finished: Fri, 06 Dec 2019 15:29:24 +0800

Ready: True

Restart Count: 1   # the restart count has become 1.

Liveness: exec [test -e /tmp/healthy] delay=0s timeout=1s period=10s #success=1 #failure=3

Environment:

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-kk2fq (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-kk2fq:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-kk2fq

Optional: false

QoS Class: BestEffort

Node-Selectors:

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m25s default-scheduler Successfully assigned default/liveness-exec to k8snode2

Normal Killing 86s kubelet, k8snode2 Container liveness-demo failed liveness probe, will be restarted

Normal Pulling 56s (x2 over 2m24s) kubelet, k8snode2 Pulling image "busybox"

Normal Pulled 56s (x2 over 2m18s) kubelet, k8snode2 Successfully pulled image "busybox"

Normal Created 56s (x2 over 2m18s) kubelet, k8snode2 Created container liveness-demo

Normal Started 55s (x2 over 2m18s) kubelet, k8snode2 Started container liveness-demo

Warning Unhealthy 6s (x5 over 106s) kubelet, k8snode2 Liveness probe failed:

[root@k8smaster chapter4]# kubectl delete -f liveness-exec.yaml

pod "liveness-exec" deleted

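HTTPGetAction example:

A postStart hook creates a health-check file in the web root after the nginx container starts, and an HTTP GET probe requests that file. If the request succeeds, the service is considered healthy; otherwise it is considered unhealthy.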
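The same check can be reproduced locally. This Python sketch uses only the standard library, and its names are made up for illustration. It serves a temporary directory over HTTP and creates a healthz file, as the postStart hook does. It then applies the 200-399 success rule before and after deleting the file, mirroring the 404 failure that appears in the events below.

```python
import http.server
import os
import tempfile
import threading
import urllib.error
import urllib.request
from functools import partial


def http_probe(url, timeout=1.0):
    """HTTPGetAction-style check: success iff 200 <= status < 400."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            status = resp.status
    except urllib.error.HTTPError as err:  # 4xx/5xx responses still carry a status
        status = err.code
    except OSError:  # connection refused, timeout, ...
        return False
    return 200 <= status < 400


class QuietHandler(http.server.SimpleHTTPRequestHandler):
    def log_message(self, *args):  # keep the demo output clean
        pass


docroot = tempfile.mkdtemp()
healthz = os.path.join(docroot, "healthz")
with open(healthz, "w") as f:
    f.write("Healthy\n")  # plays the role of the postStart hook

handler = partial(QuietHandler, directory=docroot)
server = http.server.ThreadingHTTPServer(("127.0.0.1", 0), handler)
threading.Thread(target=server.serve_forever, daemon=True).start()
url = f"http://127.0.0.1:{server.server_address[1]}/healthz"

before = http_probe(url)  # file present: 200
os.remove(healthz)        # like `rm -rf healthz` inside the container
after = http_probe(url)   # file gone: 404
server.shutdown()
server.server_close()
print(before, after)      # True False
```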

[root@k8smaster chapter4]# cat liveness-http.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    test: liveness
  name: liveness-http
spec:
  containers:
  - name: liveness-demo
    image: nginx:1.12-alpine
    ports:
    - name: http
      containerPort: 80
    lifecycle:
      postStart:
        exec:
          command:
          - /bin/sh
          - -c
          - 'echo Healty > /usr/share/nginx/html/healthz'
    livenessProbe:
      httpGet:
        path: /healthz
        port: http
        scheme: HTTP
      periodSeconds: 2        # probe every 2 seconds
      failureThreshold: 2     # restart the container after 2 consecutive failures
      initialDelaySeconds: 3  # wait 3 seconds after the container starts before the first probe

[root@k8smaster chapter4]# kubectl get pods

NAME READY STATUS RESTARTS AGE

liveness-http 1/1 Running 0 23s

myapp-deployment-558f94fb55-plk4v 1/1 Running 2 35d

myapp-deployment-558f94fb55-rd8f5 1/1 Running 2 35d

myapp-deployment-558f94fb55-zzmpg 1/1 Running 2 35d

mypod 1/1 Running 1 2d1h

nginx-deployment-6f77f65499-8g24d 1/1 Running 2 35d

pod-demo 2/2 Running 2 2d2h

[root@k8smaster chapter4]# kubectl describe pods liveness-http

Name: liveness-http

Namespace: default

Priority: 0

Node: k8snode2/192.168.43.176

Start Time: Fri, 06 Dec 2019 16:28:26 +0800

Labels: test=liveness

Annotations: kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"labels":{"test":"liveness"},"name":"liveness-http","namespace":"default"},"s...

Status: Running

IP: 10.244.2.101

IPs:

Containers:

liveness-demo:

Container ID: docker://cc2d4ad2e37ec04b0d629c15d3033ecf9d4ab7453349ab40def9eb8cfca28936

Image: nginx:1.12-alpine

Image ID: docker-pullable://nginx@sha256:3a7edf11b0448f171df8f4acac8850a55eff30d1d78c46cd65e7bc8260b0be5d

Port: 80/TCP

Host Port: 0/TCP

State: Running

Started: Fri, 06 Dec 2019 16:28:27 +0800

Ready: True

Restart Count: 0   # the restart count is 0; enter the container and delete the check file by hand to test the probe.

Liveness: http-get http://:http/healthz delay=3s timeout=1s period=2s #success=1 #failure=2

Environment:

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-kk2fq (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-kk2fq:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-kk2fq

Optional: false

QoS Class: BestEffort

Node-Selectors:

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 70s default-scheduler Successfully assigned default/liveness-http to k8snode2

Normal Pulling 70s kubelet, k8snode2 Pulling image "nginx:1.12-alpine"

Normal Pulled 69s kubelet, k8snode2 Successfully pulled image "nginx:1.12-alpine"

Normal Created 69s kubelet, k8snode2 Created container liveness-demo

Normal Started 69s kubelet, k8snode2 Started container liveness-demo

[root@k8smaster chapter4]# kubectl exec -it liveness-http -- /bin/sh

/ # cd /usr/share/nginx/html/

/usr/share/nginx/html # ls

50x.html healthz index.html

/usr/share/nginx/html # rm -rf healthz

/usr/share/nginx/html # exit

[root@k8smaster chapter4]# kubectl describe pods liveness-http

Name: liveness-http

Namespace: default

Priority: 0

Node: k8snode2/192.168.43.176

Start Time: Fri, 06 Dec 2019 16:28:26 +0800

Labels: test=liveness

Annotations: kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"labels":{"test":"liveness"},"name":"liveness-http","namespace":"default"},"s...

Status: Running

IP: 10.244.2.101

IPs:

Containers:

liveness-demo:

Container ID: docker://90f3016f707bcfc0e22c42dac54f4e4691e74db7fcc5d5d395f22c482c9ea704

Image: nginx:1.12-alpine

Image ID: docker-pullable://nginx@sha256:3a7edf11b0448f171df8f4acac8850a55eff30d1d78c46cd65e7bc8260b0be5d

Port: 80/TCP

Host Port: 0/TCP

State: Running

Started: Fri, 06 Dec 2019 16:34:34 +0800

Last State: Terminated

Reason: Completed

Exit Code: 0

Started: Fri, 06 Dec 2019 16:28:27 +0800

Finished: Fri, 06 Dec 2019 16:34:33 +0800

Ready: True

Restart Count: 1   # the restart count has become 1.

Liveness: http-get http://:http/healthz delay=3s timeout=1s period=2s #success=1 #failure=2

Environment:

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-kk2fq (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-kk2fq:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-kk2fq

Optional: false

QoS Class: BestEffort

Node-Selectors:

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 6m24s default-scheduler Successfully assigned default/liveness-http to k8snode2

Normal Pulling 6m24s kubelet, k8snode2 Pulling image "nginx:1.12-alpine"

Normal Pulled 6m23s kubelet, k8snode2 Successfully pulled image "nginx:1.12-alpine"

Warning Unhealthy 17s (x2 over 19s) kubelet, k8snode2 Liveness probe failed: HTTP probe failed with statuscode: 404

Normal Killing 17s kubelet, k8snode2 Container liveness-demo failed liveness probe, will be restarted

Normal Pulled 17s kubelet, k8snode2 Container image "nginx:1.12-alpine" already present on machine

Normal Created 16s (x2 over 6m23s) kubelet, k8snode2 Created container liveness-demo

Normal Started 16s (x2 over 6m23s) kubelet, k8snode2 Started container liveness-demo

[root@k8smaster chapter4]# kubectl exec -it liveness-http -- /bin/sh   # check again: the health-check file has been recreated.

/ # cd /usr/share/nginx/html/

/usr/share/nginx/html # ls

50x.html healthz index.html

/usr/share/nginx/html # exit

[root@k8smaster chapter4]# kubectl delete -f liveness-http.yaml

pod "liveness-http" deleted

Readiness probe (ReadinessProbe):

ExecAction example:

[root@k8smaster chapter4]# cat readiness-exec.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    test: readiness-exec
  name: readiness-exec
spec:
  containers:
  - name: readiness-demo
    image: busybox
    # Loop: delete /tmp/ready, recreate it 30s later, then wait 300s
    args: ["/bin/sh", "-c", "while true; do rm -f /tmp/ready; sleep 30; touch /tmp/ready; sleep 300; done"]
    readinessProbe:
      exec:
        # Succeeds (exit code 0) only while /tmp/ready exists
        command: ["test", "-e", "/tmp/ready"]
      initialDelaySeconds: 5
      periodSeconds: 5

[root@k8smaster chapter4]# kubectl apply -f readiness-exec.yaml

pod/readiness-exec created

[root@k8smaster chapter4]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myapp-deployment-558f94fb55-plk4v 1/1 Running 2 35d

myapp-deployment-558f94fb55-rd8f5 1/1 Running 2 35d

myapp-deployment-558f94fb55-zzmpg 1/1 Running 2 35d

mypod 1/1 Running 1 2d1h

nginx-deployment-6f77f65499-8g24d 1/1 Running 2 35d

pod-demo 2/2 Running 2 2d2h

readiness-exec 1/1 Running 0 57s

[root@k8smaster chapter4]# kubectl exec readiness-exec -- rm -f /tmp/ready # Remove the readiness file by hand so the probe starts failing.

[root@k8smaster chapter4]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myapp-deployment-558f94fb55-plk4v 1/1 Running 2 35d

myapp-deployment-558f94fb55-rd8f5 1/1 Running 2 35d

myapp-deployment-558f94fb55-zzmpg 1/1 Running 2 35d

mypod 1/1 Running 1 2d1h

nginx-deployment-6f77f65499-8g24d 1/1 Running 2 35d

pod-demo 2/2 Running 2 2d2h

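readiness-exec 0/1 Running 0 2m46s # READY drops to 0/1 while RESTARTS stays 0: a failed readiness probe does not restart the container.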

[root@k8smaster chapter4]# kubectl exec readiness-exec -- touch /tmp/ready

[root@k8smaster chapter4]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myapp-deployment-558f94fb55-plk4v 1/1 Running 2 35d

myapp-deployment-558f94fb55-rd8f5 1/1 Running 2 35d

myapp-deployment-558f94fb55-zzmpg 1/1 Running 2 35d

mypod 1/1 Running 1 2d1h

nginx-deployment-6f77f65499-8g24d 1/1 Running 2 35d

pod-demo 2/2 Running 2 2d2h

readiness-exec 1/1 Running 0 3m54s
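Besides ExecAction, a readinessProbe can also use HTTPGetAction (httpGet) or TCPSocketAction (tcpSocket). The manifest below is a minimal sketch of an HTTP readiness probe, assuming an nginx container that serves `/` on port 80; the Pod name `readiness-httpget` and the probe timings are hypothetical values chosen for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: readiness-httpget        # hypothetical name, for illustration only
spec:
  containers:
  - name: readiness-demo
    image: nginx:1.12-alpine
    ports:
    - name: http
      containerPort: 80
    readinessProbe:
      httpGet:                   # HTTPGetAction: a 2xx or 3xx status counts as success
        path: /
        port: http               # refers to the named container port above
      initialDelaySeconds: 5     # hypothetical timing values
      periodSeconds: 5
      failureThreshold: 3        # 3 consecutive failures mark the container not ready
```

A Pod that fails this probe behaves like readiness-exec above: it stays Running, shows READY 0/1, and is not restarted.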

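Container restart policy:

A Pod's containers can be terminated for reasons such as the application crashing or a container using more resources than its limits allow. Whether a terminated container is restarted depends on the Pod's restart policy (restartPolicy). Repeated restarts are delayed by an exponential back-off (10s, 20s, 40s and so on, capped at five minutes).

- Always: restart the container whenever it terminates, for any reason. This is the default.

- OnFailure: restart the container only when it exits with an error (a non-zero exit code).

- Never: never restart the container.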
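restartPolicy is a Pod-level field (spec.restartPolicy) and applies to every container in the Pod. A minimal sketch, assuming a hypothetical one-shot busybox task named `restart-onfailure` that deliberately exits with status 1:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: restart-onfailure        # hypothetical name, for illustration only
spec:
  restartPolicy: OnFailure       # Pod-level field: applies to all containers
  containers:
  - name: task
    image: busybox
    # Exits with status 1, so OnFailure restarts it (with back-off).
    # With "exit 0" instead, the container would complete and not be restarted.
    command: ["/bin/sh", "-c", "sleep 10; exit 1"]
```

With restartPolicy set to Never, the same Pod would simply end up in the Error state after the first failure.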

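Pod termination process:

- A user submits a request to the API Server to delete a Pod.

- The API Server records the deletion in etcd, but because of the grace period (terminationGracePeriodSeconds, 30 seconds by default) the Pod is not removed immediately.

- The API Server marks the Pod as Terminating and notifies the kubelet on the node running the Pod.

- The kubelet works with the Docker engine to terminate the Pod's containers. If a preStop hook is defined, it runs before the main container is stopped; once it finishes, the container's main process is sent a TERM signal to stop it, and the result is reported back to the API Server.

- At the same time, the endpoints controller (Endpoints Controller) removes the Pod from the endpoint lists of the Services that select it, so it stops receiving new traffic. If the containers are still running when the grace period expires, the kubelet sends the container processes a KILL signal and reports back to the API Server once they have been killed.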
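The grace period and the preStop hook from the steps above are both set in the Pod spec. A minimal sketch, assuming an nginx container that should finish in-flight requests before exiting; the Pod name `graceful-nginx` and the 60-second value are hypothetical. Note that the grace period starts counting when the Pod is marked Terminating, so the time spent in preStop counts against it.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: graceful-nginx           # hypothetical name, for illustration only
spec:
  terminationGracePeriodSeconds: 60   # default is 30; KILL is sent once it expires
  containers:
  - name: nginx
    image: nginx:1.12-alpine
    lifecycle:
      preStop:
        exec:
          # Ask nginx to stop gracefully before the TERM signal arrives
          command: ["/usr/sbin/nginx", "-s", "quit"]
```

A one-off override is also possible at delete time, e.g. `kubectl delete pod graceful-nginx --grace-period=10`.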

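Restricting Pod privileges (security context):

The purpose of a Security Context is to restrict what untrusted containers can do, so that the system and the other containers on it are protected from them.

Kubernetes provides three ways to configure a Security Context:

- Container-level Security Context: applies only to the specified container.

- Pod-level Security Context: applies to all containers in the Pod and to its volumes.

- Pod Security Policies: apply to all Pods and volumes in the cluster. (PodSecurityPolicy was deprecated in Kubernetes v1.21 and removed in v1.25; Pod Security Admission replaces it.)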
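The first two levels can be combined in one manifest, with container-level settings overriding Pod-level ones where they overlap. A minimal sketch; the Pod name `security-context-demo` and the UID/GID values 1000, 3000 and 2000 are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: security-context-demo    # hypothetical name, for illustration only
spec:
  securityContext:               # Pod level: applies to all containers and volumes
    runAsUser: 1000              # hypothetical UID
    runAsGroup: 3000             # hypothetical primary GID
    fsGroup: 2000                # supplementary group applied to supported volumes
  containers:
  - name: demo
    image: busybox
    command: ["/bin/sh", "-c", "id; sleep 3600"]
    securityContext:             # container level: only this container
      allowPrivilegeEscalation: false
      readOnlyRootFilesystem: true
      capabilities:
        drop: ["ALL"]
```

Because the container runs `id`, `kubectl logs security-context-demo` can be used to check which user and group IDs actually took effect.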

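Pod resource limits:

Container compute resources:

- CPU is a compressible resource: a container's CPU share can be throttled as needed. Memory is (currently) an incompressible resource: a container that exceeds its memory limit is OOM-killed rather than throttled.

- How CPU is measured:

  - One core equals 1000 millicores, i.e. 1 = 1000m and 0.5 = 500m.

- How memory is measured:

  - The default unit is bytes. You can also use the decimal suffixes E, P, T, G, M and k (powers of 1000), or the binary suffixes Ei, Pi, Ti, Gi, Mi and Ki (powers of 1024).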
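The fragment below is a worked example of the units above. It is only the `resources` part of a container spec, and the values are illustrative: requests must not exceed limits, which holds here because 128M is slightly smaller than 128Mi.

```yaml
# Fragment of a container spec (illustrative values only)
resources:
  requests:
    cpu: "0.5"          # same as "500m", half a core
    memory: "128M"      # 128 * 1000 * 1000 = 128000000 bytes
  limits:
    cpu: "1000m"        # same as "1", one full core
    memory: "128Mi"     # 128 * 1024 * 1024 = 134217728 bytes
```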

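Ways to constrain resources:

- priorityClassName: sets the Pod's priority by naming a PriorityClass; the scheduler uses it to order pending Pods and to preempt lower-priority Pods when resources are short.

- resources: sets the range (lower bound to upper bound) of CPU, memory, disk (ephemeral storage) and other resources that a container may use.

  - limits: the upper bound, the most the container may use.

  - requests: the lower bound, the amount reserved for the container; the scheduler only places the Pod on a node with enough unreserved capacity to satisfy it.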
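priorityClassName only takes effect if the named PriorityClass exists. A minimal sketch, assuming a cluster where the scheduling.k8s.io/v1 API is available (Kubernetes 1.14 or later); the class name `high-priority`, its value and the Pod name `priority-demo` are hypothetical.

```yaml
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: high-priority            # hypothetical class name
value: 1000000                   # a larger value means a higher priority
globalDefault: false             # do not apply to Pods that omit priorityClassName
description: "For important workloads."
---
apiVersion: v1
kind: Pod
metadata:
  name: priority-demo            # hypothetical name, for illustration only
spec:
  priorityClassName: high-priority   # must match an existing PriorityClass
  containers:
  - name: nginx
    image: nginx:1.12-alpine
```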

[root@k8smaster chapter4]# cat stress-pod.yaml

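apiVersion: v1
kind: Pod
metadata:
  name: stress-pod
spec:
  containers:
  - name: stress
    image: ikubernetes/stress-ng
    # One CPU stressor (-c 1) and one memory stressor (-m 1)
    command: ["/usr/bin/stress-ng", "-c 1", "-m 1", "--metrics-brief"]
    resources:
      requests:                  # reserved for the container at scheduling time
        memory: "128Mi"
        cpu: "200m"
      limits:                    # the most the container may use
        memory: "512Mi"
        cpu: "400m"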

[root@k8smaster chapter4]# cat memleak-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: memleak-pod
spec:
  containers:
  - name: simmemleak
    image: saadali/simmemleak    # keeps allocating memory until it hits the limit
    resources:
      requests:                  # requests equal limits for both CPU and memory
        memory: "64Mi"
        cpu: "1"
      limits:
        memory: "64Mi"
        cpu: "1"

[root@k8smaster chapter4]# kubectl apply -f memleak-pod.yaml

pod/memleak-pod created

[root@k8smaster chapter4]# kubectl get pods

NAME READY STATUS RESTARTS AGE

memleak-pod 0/1 ContainerCreating 0 6s

myapp-deployment-558f94fb55-plk4v 1/1 Running 3 39d

myapp-deployment-558f94fb55-rd8f5 1/1 Running 3 39d

myapp-deployment-558f94fb55-zzmpg 1/1 Running 3 39d

mypod 1/1 Running 2 6d

nginx-deployment-6f77f65499-8g24d 1/1 Running 3 39d

pod-demo 2/2 Running 4 6d1h

readiness-exec 1/1 Running 1 3d22h

[root@k8smaster chapter4]# kubectl describe pod memleak-pod

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 76s default-scheduler Successfully assigned default/memleak-pod to k8snode1

Normal Pulling 21s (x4 over 75s) kubelet, k8snode1 Pulling image "saadali/simmemleak"

Normal Pulled 20s (x4 over 64s) kubelet, k8snode1 Successfully pulled image "saadali/simmemleak"

Normal Created 20s (x4 over 64s) kubelet, k8snode1 Created container simmemleak

Normal Started 20s (x4 over 64s) kubelet, k8snode1 Started container simmemleak

Warning BackOff 9s (x7 over 62s) kubelet, k8snode1 Back-off restarting failed container # The memory we granted is too small, so the container keeps failing to start.

[root@k8smaster chapter4]# kubectl describe pod memleak-pod | grep Reason

Reason: CrashLoopBackOff

Reason: OOMKilled # The failure is caused by running out of memory (the container exceeded its 64Mi limit).

Type Reason Age From Message

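Pod quality of service classes:

Based on the requests and limits of its containers, Kubernetes places every Pod in one of three Quality of Service (QoS) classes: BestEffort, Burstable and Guaranteed.

- Guaranteed: Pods in which every container sets CPU requests and limits to the same value, and memory requests and limits to the same value, automatically fall into this class. These Pods have the highest priority and are the last to be killed when a node runs short of memory.

- Burstable: Pods that do not meet the Guaranteed requirements but in which at least one container sets a CPU or memory request or limit automatically fall into this class. They have medium priority.

- BestEffort: Pods in which no container sets any request or limit automatically fall into this class. They have the lowest priority and are the first to be killed when a node runs short of memory.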

[root@k8smaster chapter4]# kubectl get pods

NAME READY STATUS RESTARTS AGE

memleak-pod 0/1 CrashLoopBackOff 7 13m

myapp-deployment-558f94fb55-plk4v 1/1 Running 3 39d

myapp-deployment-558f94fb55-rd8f5 1/1 Running 3 39d

myapp-deployment-558f94fb55-zzmpg 1/1 Running 3 39d

mypod 1/1 Running 2 6d

nginx-deployment-6f77f65499-8g24d 1/1 Running 3 39d

pod-demo 2/2 Running 4 6d1h

readiness-exec 1/1 Running 1 3d23h

[root@k8smaster chapter4]# kubectl describe pod memleak-pod | grep QoS

QoS Class: Guaranteed

[root@k8smaster chapter4]# kubectl describe pod mypod | grep QoS

QoS Class: BestEffort
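memleak-pod and mypod cover the Guaranteed and BestEffort classes. A minimal sketch of a Burstable Pod, assuming the hypothetical name `burstable-demo`: it sets requests, but its memory limit differs from its memory request and it has no CPU limit, so it cannot be Guaranteed.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: burstable-demo           # hypothetical name, for illustration only
spec:
  containers:
  - name: nginx
    image: nginx:1.12-alpine
    resources:
      requests:
        cpu: "100m"              # no matching CPU limit
        memory: "64Mi"
      limits:
        memory: "128Mi"          # differs from the request -> not Guaranteed
```

`kubectl describe pod burstable-demo | grep QoS` should then report Burstable. Conversely, a container that sets only limits gets requests equal to those limits by default, so it can still end up Guaranteed.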

Summary:

apiVersion, kind, metadata, spec, status (read-only) # essential top-level fields

spec: # fields nested in spec
  containers
  nodeSelector
  nodeName
  restartPolicy # restart policy
    Always, Never, OnFailure

containers:
  name
  image
  imagePullPolicy: Always, Never, IfNotPresent # image pull policy
  ports:
    name
    containerPort
  livenessProbe
  readinessProbe
  lifecycle

Handler types used by probes and lifecycle hooks:
  ExecAction: exec
  TCPSocketAction: tcpSocket
  HTTPGetAction: httpGet
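The fields in the summary fit together in a single Pod manifest. The skeleton below is a sketch for orientation, not a recommended configuration: the Pod name `summary-demo`, the node label `disktype: ssd` and the postStart command are hypothetical. Because of the nodeSelector, the Pod stays Pending unless some node carries that label (nodeName, by contrast, would bypass the scheduler and bind the Pod to one named node).

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: summary-demo             # hypothetical name, for illustration only
spec:
  restartPolicy: Always          # Always | OnFailure | Never
  nodeSelector:
    disktype: ssd                # hypothetical node label
  containers:
  - name: web
    image: nginx:1.12-alpine
    imagePullPolicy: IfNotPresent   # Always | Never | IfNotPresent
    ports:
    - name: http
      containerPort: 80
    livenessProbe:
      tcpSocket:                 # TCPSocketAction
        port: http
    readinessProbe:
      httpGet:                   # HTTPGetAction
        path: /
        port: http
    lifecycle:
      postStart:
        exec:                    # ExecAction (hypothetical command)
          command: ["/bin/sh", "-c", "echo started > /tmp/started"]
```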