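Prometheus + Grafana: Monitoring a k8s Cluster (a Fairly Detailed Guide)

Introduction to Prometheus

Prometheus is an open-source system monitoring and alerting system. It has joined the CNCF, becoming the second project hosted there after k8s. Kubernetes container platforms are commonly monitored with Prometheus. It collects data through a wide range of exporters, accepts reported data through the Pushgateway, and performs well enough to support clusters of tens of thousands of machines.

Prometheus features

As a new-generation monitoring framework, Prometheus has the following features: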

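- A powerful multi-dimensional data model:

  - Time series are distinguished by metric name and key/value pairs (labels).

  - Any metric can carry arbitrary multi-dimensional labels.

  - The data model is flexible; names do not have to be encoded as dot-separated strings.

  - Data can be aggregated, sliced, and diced along these dimensions.

  - Sample values are double-precision floats, and label values may be any Unicode string.

- A flexible and powerful query language (PromQL): a single query can multiply, add, join, and take quantiles across multiple metrics.

- Easy to operate: the Prometheus server is a single binary that works locally and does not depend on distributed storage.

- Efficient: each sample takes only about 3.5 bytes on average, and a single Prometheus server can handle millions of metrics.

- Time series are collected with a pull model, which makes local testing easy and keeps a misbehaving server from pushing bad metrics.

- Short-lived jobs can push their time series to a Push Gateway, from which the Prometheus server then scrapes them.

- Monitoring targets are found through service discovery or static configuration.

- Several graphing and dashboard options are available.

- Easy to scale.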

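Note that collected samples can occasionally be lost, so Prometheus is not suited to cases that require 100% accurate data. For recording time series, however, Prometheus offers strong query capabilities, and it fits microservice architectures well.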
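The multi-dimensional data model listed among the features can be illustrated with a small Python sketch. It is not Prometheus code: the metric name, labels, and values are invented for illustration, and `sum_by` only imitates the idea behind a PromQL `sum by (...)` aggregation.

```python
from collections import defaultdict


def series_key(name, labels):
    """A series is identified by its metric name plus its full label set."""
    return (name, tuple(sorted(labels.items())))


# Hypothetical samples: (metric name, labels, value).
samples = [
    ("http_requests_total", {"method": "GET", "code": "200"}, 120.0),
    ("http_requests_total", {"method": "GET", "code": "500"}, 3.0),
    ("http_requests_total", {"method": "POST", "code": "200"}, 40.0),
]


def sum_by(samples, name, by):
    """Sum one metric, keeping only the labels listed in `by` as dimensions."""
    totals = defaultdict(float)
    for metric, labels, value in samples:
        if metric == name:
            group = tuple((key, labels.get(key, "")) for key in by)
            totals[group] += value
    return dict(totals)


by_method = sum_by(samples, "http_requests_total", ["method"])
```

Each distinct combination of name and labels is its own series, and aggregation simply drops the labels that are not asked for.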

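Prometheus components

The Prometheus ecosystem consists of several components, many of which are optional:

- Prometheus Server: scrapes and stores time series data.

- Client Library: instruments the service to be monitored, generating its metrics and exposing them to the Prometheus server. When the server pulls, the library returns the current state of the metrics.

- Push Gateway: mainly for short-lived jobs. Such jobs may exit before Prometheus gets a chance to pull from them, so they push their metrics to the Push Gateway instead, and the Prometheus server scrapes the gateway. This approach is mainly intended for service-level metrics; for machine-level metrics, use node exporter.

- Exporters: expose the metrics of existing third-party services to Prometheus.

- Alertmanager: receives alerts from the Prometheus server, deduplicates and groups them, routes them to the matching receiver, and sends the notification. Common receivers include email, PagerDuty, OpsGenie, and webhooks.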
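As a rough illustration of how a short-lived job hands its metrics to the Push Gateway, the sketch below builds the HTTP request for the gateway's `/metrics/job/<job>` endpoint. The gateway address, job name, and metric are hypothetical, and the request is only constructed here, not sent.

```python
import urllib.parse
import urllib.request


def build_push_request(gateway_url, job, metrics):
    """Build (but do not send) a request that pushes `metrics` for `job`."""
    # Body is the plain-text exposition format: one "name value" pair per line.
    lines = [f"{name} {value}" for name, value in metrics.items()]
    body = ("\n".join(lines) + "\n").encode("utf-8")
    job_path = urllib.parse.quote(job, safe="")
    url = f"{gateway_url.rstrip('/')}/metrics/job/{job_path}"
    # PUT replaces all metrics previously pushed for this job.
    return urllib.request.Request(url, data=body, method="PUT")


# Hypothetical gateway address and job name.
req = build_push_request(
    "http://pushgateway.example:9091",
    "nightly_backup",
    {"backup_duration_seconds": 42.5},
)
# urllib.request.urlopen(req) would send it to a reachable gateway.
```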

Prometheus itself is not covered in more depth here. For more details, see:

https://www.ibm.com/developerworks/cn/cloud/library/cl-lo-prometheus-getting-started-and-practice/index.html

Deploying Prometheus in the k8s cluster

- Create the namespace

[root@k8s-master-01 namespaces]# more prom-grafana-namespaces.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: prom-grafana
  labels:
    name: prom-grafana

[root@k8s-master-01 namespaces]# kubectl create -f prom-grafana-namespaces.yaml

namespace/prom-grafana created

- Create a ServiceAccount

[root@k8s-master-01 prom+grafana]# kubectl create serviceaccount drifter -n prom-grafana

serviceaccount/drifter created

- Bind the ServiceAccount drifter to a ClusterRole through a ClusterRoleBinding

[root@k8s-master-01 prom+grafana]# kubectl create clusterrolebinding drifter-clusterrolebinding -n prom-grafana --clusterrole=cluster-admin --serviceaccount=prom-grafana:drifter

clusterrolebinding.rbac.authorization.k8s.io/drifter-clusterrolebinding created

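- Create the data directory

Run this on any one node of the k8s cluster, or mount OSS or similar storage instead. Here the directory lives on a single node: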

[root@k8s-node-06 ~]# mkdir /data

[root@k8s-node-06 ~]# chmod 777 /data/

Installing the node-exporter component

node-exporter collects monitoring metrics from machines (physical hosts, virtual machines, cloud instances, and so on). The metrics it can collect include CPU, memory, disk, network, and file counts.

Install node-exporter from the master node of the k8s cluster:

[root@k8s-master-01 prom+grafana]# more node-export.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: prom-grafana
  labels:
    name: node-exporter
spec:
  selector:
    matchLabels:
      name: node-exporter
  template:
    metadata:
      labels:
        name: node-exporter
    spec:
      hostPID: true
      hostIPC: true
      hostNetwork: true
      containers:
      - name: node-exporter
        image: prom/node-exporter:v0.16.0
        ports:
        - containerPort: 9100
        resources:
          requests:
            cpu: 0.15
        securityContext:
          privileged: true
        args:
        - --path.procfs
        - /host/proc
        - --path.sysfs
        - /host/sys
        - --collector.filesystem.ignored-mount-points
        # Passed verbatim to node-exporter (no shell), so the regex carries no extra quotes.
        - '^/(sys|proc|dev|host|etc)($|/)'
        volumeMounts:
        - name: dev
          mountPath: /host/dev
        - name: proc
          mountPath: /host/proc
        - name: sys
          mountPath: /host/sys
        - name: rootfs
          mountPath: /rootfs
      tolerations:
      - key: "node-role.kubernetes.io/master"
        operator: "Exists"
        effect: "NoSchedule"
      volumes:
      - name: proc
        hostPath:
          path: /proc
      - name: dev
        hostPath:
          path: /dev
      - name: sys
        hostPath:
          path: /sys
      - name: rootfs
        hostPath:
          path: /

Deploy node-exporter and check its status

[root@k8s-master-01 prom+grafana]# kubectl create -f node-export.yaml

daemonset.apps/node-exporter created

[root@k8s-master-01 prom+grafana]# kubectl get pods -n prom-grafana

NAME READY STATUS RESTARTS AGE

node-exporter-69gdh 1/1 Running 0 20s

node-exporter-6ptnr 1/1 Running 0 20s

node-exporter-7pdgm 1/1 Running 0 20s

node-exporter-fjlq2 1/1 Running 0 20s

node-exporter-jfncm 1/1 Running 0 20s

node-exporter-p86f8 1/1 Running 0 20s

node-exporter-qcp6w 1/1 Running 0 20s

node-exporter-v857r 1/1 Running 0 20s

node-exporter-vclgh 1/1 Running 0 20s

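All pods are in the Running state, so the deployment succeeded. The data collected by node-exporter can now be inspected: node-exporter listens on port 9100 by default, and requesting that port on a host returns all monitoring data currently collected for it (see the figure).

Meaning of some fields in the output:

HELP: explains what the metric means. In the figure, it is the time the node's CPUs spent in each mode, in seconds.

TYPE: gives the metric's data type. In the figure, it is counter.

node_load1: the host's load over the last minute. Load changes with resource usage, so node_load1 reflects the current state and can go up or down. As the TYPE comment shows, this metric is a gauge.

node_cpu_seconds_total{cpu="0",mode="idle"}:

The total time cpu0 has spent in idle mode. CPU time only ever increases, and the TYPE comment confirms that node_cpu_seconds_total is a counter.

counter: a metric whose value only increases (it starts again from zero when the exporting process restarts).

gauge: a metric whose value can go up or down.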
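To make the counter/gauge distinction concrete, here is a simplified Python sketch. It parses one line of node-exporter output and computes the average per-second increase of a counter from a few samples. The sample values are invented, and `counter_rate` is only an approximation of the idea behind PromQL's `rate()`; it does no extrapolation at the window edges.

```python
def parse_sample(line):
    """Split one exposition line 'name{labels} value' into (series, value).

    Simplified: assumes no trailing timestamp on the line.
    """
    series, value = line.rsplit(" ", 1)
    return series, float(value)


def counter_rate(samples):
    """Average per-second increase over (timestamp, value) counter samples.

    A drop in value is treated as a counter reset (process restart), so the
    new value is counted as the increase since the reset.
    """
    increase = 0.0
    for (_, prev), (_, cur) in zip(samples, samples[1:]):
        increase += cur - prev if cur >= prev else cur
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed


# Hypothetical idle-time samples scraped every 15 seconds.
idle = [(0, 1000.0), (15, 1012.0), (30, 1024.0)]
idle_rate = counter_rate(idle)  # idle seconds accumulated per second
```

A gauge such as node_load1 needs no such treatment: its latest value is already the quantity of interest.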

Installing Prometheus

- Create a ConfigMap volume to hold the Prometheus configuration

[root@k8s-master-01 prom+grafana]# more prometheus-cfg.yaml

---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    app: prometheus
  name: prometheus-config
  # Must be the namespace of the Prometheus deployment that mounts this ConfigMap.
  namespace: prom-grafana
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 10s
      evaluation_interval: 1m
    scrape_configs:
    - job_name: 'kubernetes-node'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - source_labels: [__address__]
        regex: '(.*):10250'
        replacement: '${1}:9100'
        target_label: __address__
        action: replace
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
    - job_name: 'kubernetes-node-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
    - job_name: 'kubernetes-apiserver'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name

- Create the ConfigMap with kubectl create

[root@k8s-master-01 prom+grafana]# kubectl create -f prometheus-cfg.yaml

configmap/prometheus-config created
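The relabel_configs in this ConfigMap rewrite target addresses with regular expressions. The Python sketch below imitates what a `replace` step does so the two address rules can be tried by hand. It is an approximation written for illustration, not Prometheus code, and the addresses are made-up examples.

```python
import re


def relabel_replace(source_values, regex, replacement, separator=";"):
    """Imitate a Prometheus `replace` relabel step.

    Returns the new value for the target label, or None when the regex does
    not match (Prometheus then leaves the target label unchanged).
    """
    joined = separator.join(source_values)  # source labels are joined with ';'
    match = re.fullmatch(regex, joined)     # the regex is anchored at both ends
    if match is None:
        return None
    # Expand $1 / ${1} style references to the captured groups.
    return re.sub(
        r"\$\{?(\d+)\}?",
        lambda ref: match.group(int(ref.group(1))) or "",
        replacement,
    )


# 'kubernetes-node' job: swap the kubelet port for the node-exporter port.
node_addr = relabel_replace(["10.10.100.74:10250"], r"(.*):10250", "${1}:9100")

# 'kubernetes-service-endpoints' job: use the port from the service annotation.
svc_addr = relabel_replace(
    ["10.244.1.5:8080", "9102"], r"([^:]+)(?::\d+)?;(\d+)", "$1:$2"
)
```

The first rule is why node-exporter, listening on 9100 with hostNetwork, is scraped on each node's own address even though node discovery returns the kubelet port.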

- Deploy Prometheus with a Deployment

[root@k8s-master-01 prom+grafana]# more prometheus-deployment.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus-server
  namespace: prom-grafana
  labels:
    app: prometheus
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
      component: server
    #matchExpressions:
    #- {key: app, operator: In, values: [prometheus]}
    #- {key: component, operator: In, values: [server]}
  template:
    metadata:
      labels:
        app: prometheus
        component: server
      annotations:
        prometheus.io/scrape: 'false'
    spec:
      nodeName: k8s-node-06
      serviceAccountName: drifter
      containers:
      - name: prometheus
        image: prom/prometheus:v2.2.1
        imagePullPolicy: IfNotPresent
        command:
        - prometheus
        - --config.file=/etc/prometheus/prometheus.yml
        - --storage.tsdb.path=/prometheus
        - --storage.tsdb.retention=720h
        # Enables the /-/reload endpoint used for hot reloading.
        - --web.enable-lifecycle
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/prometheus/prometheus.yml
          name: prometheus-config
          subPath: prometheus.yml
        - mountPath: /prometheus/
          name: prometheus-storage-volume
      volumes:
      - name: prometheus-config
        configMap:
          name: prometheus-config
          items:
          - key: prometheus.yml
            path: prometheus.yml
            mode: 0644
      - name: prometheus-storage-volume
        hostPath:
          path: /data
          type: Directory

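Note: the prometheus-deployment.yaml file above sets the nodeName field, which pins the Prometheus pod to a specific node. Here nodeName is k8s-node-06, because that is the node where the /data directory was created. In short: whichever node of the cluster holds /data is the node the pod must be scheduled to.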

- Deploy prometheus-server with kubectl create -f and check that it was created

[root@k8s-master-01 prom+grafana]# kubectl create -f prometheus-deployment.yaml

deployment.apps/prometheus-server created

[root@k8s-master-01 prom+grafana]# kubectl get pod -n prom-grafana

NAME READY STATUS RESTARTS AGE

node-exporter-69gdh 1/1 Running 0 7h36m

node-exporter-6ptnr 1/1 Running 0 7h36m

node-exporter-7pdgm 1/1 Running 0 7h36m

node-exporter-fjlq2 1/1 Running 0 7h36m

node-exporter-jfncm 1/1 Running 0 7h36m

node-exporter-p86f8 1/1 Running 0 7h36m

node-exporter-qcp6w 1/1 Running 0 7h36m

node-exporter-v857r 1/1 Running 0 7h36m

node-exporter-vclgh 1/1 Running 0 7h36m

prometheus-server-6bf69dddc5-v527d 1/1 Running 0 7m48s

- Create a Service for the Prometheus pod

[root@k8s-master-01 prom+grafana]# more prometheus-svc.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: prometheus-server
  namespace: prom-grafana
  labels:
    app: prometheus
spec:
  # type: NodePort
  type: ClusterIP
  ports:
  - port: 9090
    targetPort: 9090
    # protocol: TCP
  selector:
    app: prometheus
    # Must equal the pod template label in prometheus-deployment.yaml.
    component: server

- Create the Service with kubectl create and check it

[root@k8s-master-01 prom+grafana]# kubectl create -f prometheus-svc.yaml

service/prometheus-server created

[root@k8s-master-01 prom+grafana]# kubectl get svc -n prom-grafana

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

prometheus-server ClusterIP 10.103.172.209 9090/TCP 4m57s
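A Service only forwards traffic to pods whose labels contain every key/value pair of its selector, so the selector has to agree with the labels in the Deployment's pod template. The rule is easy to state as a small Python sketch, using the pod labels from prometheus-deployment.yaml:

```python
def selector_matches(selector, pod_labels):
    """True when every selector pair is present in the pod's labels."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


# Labels from the pod template in prometheus-deployment.yaml.
pod_labels = {"app": "prometheus", "component": "server"}

matching = selector_matches({"app": "prometheus", "component": "server"}, pod_labels)
mismatched = selector_matches(
    {"app": "prometheus", "component": "prometheus-server"}, pod_labels
)
```

A selector that matches no pod leaves the Service without endpoints, which `kubectl get endpoints prometheus-server -n prom-grafana` would show as an empty ENDPOINTS column.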

- Create an Ingress to reach the Prometheus Service

[root@k8s-master-01 prom+grafana]# more prometheus-ing.yaml

---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: prometheus
  namespace: prom-grafana
  annotations:
    nginx.ingress.kubernetes.io/proxy-body-size: "20M"
    # nginx.ingress.kubernetes.io/ssl-redirect: false
    # nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - host: prometheus.drifter.net
    http:
      paths:
      - path: /
        backend:
          serviceName: prometheus-server
          servicePort: 9090
status:
  loadBalancer:
    ingress:
    - ip: 10.10.100.74
    - ip: 10.10.100.75
    - ip: 10.10.100.76
    - ip: 10.10.100.77
    - ip: 10.10.100.78
    - ip: 10.10.100.79

- Create the prometheus Ingress with kubectl create -f and check that it was deployed

[root@k8s-master-01 prom+grafana]# kubectl create -f prometheus-ing.yaml

ingress.extensions/prometheus created

[root@k8s-master-01 prom+grafana]# kubectl get ing -n prom-grafana

NAME CLASS HOSTS ADDRESS PORTS AGE

prometheus prometheus.drifter.net 80 4s

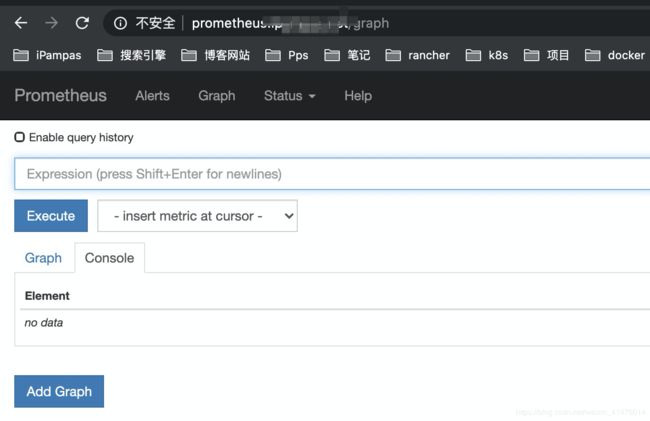

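- Open Status -> Targets in the web UI. The configured jobs and their targets are listed there, which shows that the configured service discovery is scraping data correctly.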
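The same check can be scripted against the Prometheus HTTP API, which exposes the target list at `/api/v1/targets`. The sketch below is a minimal example under the assumption that the installed version serves this endpoint. The sample response is hand-written in the shape of the API output, not captured from this cluster.

```python
import json
import urllib.request


def unhealthy_targets(targets_response):
    """Return (job, scrapeUrl, lastError) for each active target that is not up."""
    active = targets_response["data"]["activeTargets"]
    return [
        (t["labels"].get("job", ""), t["scrapeUrl"], t.get("lastError", ""))
        for t in active
        if t["health"] != "up"
    ]


def fetch_targets(base_url):
    """Fetch the target list from a reachable Prometheus server."""
    with urllib.request.urlopen(f"{base_url}/api/v1/targets") as resp:
        return json.load(resp)


# Hand-written sample response (illustrative values only).
sample = {
    "status": "success",
    "data": {
        "activeTargets": [
            {
                "labels": {"job": "kubernetes-node", "instance": "10.10.100.74:9100"},
                "scrapeUrl": "http://10.10.100.74:9100/metrics",
                "health": "up",
                "lastError": "",
            },
            {
                "labels": {"job": "kubernetes-node", "instance": "10.10.100.75:9100"},
                "scrapeUrl": "http://10.10.100.75:9100/metrics",
                "health": "down",
                "lastError": "connection refused",
            },
        ]
    },
}

problems = unhealthy_targets(sample)
```

Against the live server, `unhealthy_targets(fetch_targets("http://prometheus.drifter.net"))` would return an empty list when every target is up.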

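- Hot-reloading Prometheus

Hot reloading applies a changed configuration without stopping Prometheus. After modifying prometheus-cfg.yaml, for example, the new configuration can be loaded with the command below. Prometheus 2.x only serves this endpoint when it was started with the --web.enable-lifecycle flag, so make sure the container command includes it: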

curl -X POST http://100.119.255.145:9090/-/reload

Here 100.119.255.145 is the IP address of the Prometheus pod, which can be looked up as follows:

[root@k8s-master-01 prom+grafana]# kubectl get pods -n prom-grafana -o wide | grep prometheus

prometheus-server-6bf69dddc5-v527d 1/1 Running 0 10m 100.119.255.145 k8s-node-06
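The reload call can also be issued from a script. The following Python sketch mirrors the curl command above using only the standard library. The pod IP is the one shown in the listing and will differ in other clusters.

```python
import urllib.request


def build_reload_request(pod_ip, port=9090):
    """Build the POST request for the Prometheus reload endpoint."""
    url = f"http://{pod_ip}:{port}/-/reload"
    return urllib.request.Request(url, data=b"", method="POST")


def reload_prometheus(pod_ip, port=9090, timeout=10):
    """Send the reload request; True when Prometheus answers 200 OK."""
    request = build_reload_request(pod_ip, port)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return resp.status == 200


req = build_reload_request("100.119.255.145")
# reload_prometheus("100.119.255.145") performs the call from inside the cluster network.
```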

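- Hot reloading can be slow. As a blunt alternative, Prometheus can be restarted: after modifying prometheus-cfg.yaml as above, force-delete the resources:

kubectl delete -f prometheus-cfg.yaml

kubectl delete -f prometheus-deployment.yaml

- Then recreate them with apply:

kubectl apply -f prometheus-cfg.yaml

kubectl apply -f prometheus-deployment.yaml

- Note: in production, prefer hot reloading. Deleting and recreating can lose monitoring data.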

Installing and configuring Grafana

- Prepare the image Grafana needs

An internally built Grafana image is used here (substitute your own as needed).

[root@k8s-master-01 prom+grafana]# more grafana.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana-server
  namespace: prom-grafana
spec:
  replicas: 1
  selector:
    matchLabels:
      task: monitoring
      k8s-app: grafana
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: grafana
    spec:
      imagePullSecrets:
      - name: registry-pps
      containers:
      - name: grafana-server
        image: registry.drifter.net/grafana:5.0.4
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certificates
          readOnly: true
        - mountPath: /var
          name: grafana-storage
        env:
        - name: INFLUXDB_HOST
          value: monitoring-influxdb
        - name: GF_SERVER_HTTP_PORT
          value: "3000"
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_SERVER_ROOT_URL
          value: /
      volumes:
      - name: ca-certificates
        hostPath:
          path: /etc/ssl/certs
      - name: grafana-storage
        emptyDir: {}

- Create the Grafana deployment with kubectl create

[root@k8s-master-01 prom+grafana]# kubectl create -f grafana.yaml

deployment.apps/grafana-server created

- Check that Grafana was deployed successfully

[root@k8s-master-01 prom+grafana]# kubectl get pods -n prom-grafana

NAME READY STATUS RESTARTS AGE

grafana-server-657495c99d-x5hnn 1/1 Running 0 23s

node-exporter-g747h 1/1 Running 0 6m16s

node-exporter-gbfvk 1/1 Running 0 6m16s

node-exporter-glvvw 1/1 Running 0 6m16s

node-exporter-hpvmj 1/1 Running 0 6m16s

node-exporter-ndp9l 1/1 Running 0 6m16s

node-exporter-nfbdg 1/1 Running 0 6m16s

node-exporter-rjrzw 1/1 Running 0 6m16s

node-exporter-z9c97 1/1 Running 0 6m16s

node-exporter-zcb6h 1/1 Running 0 6m16s

prometheus-server-6bf69dddc5-9m4nw 1/1 Running 0 4m16s

- Create the grafana-svc Service

[root@k8s-master-01 prom+grafana]# more grafana-svc.yaml

---
apiVersion: v1
kind: Service
metadata:
  labels:
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: grafana-server
  name: grafana-server
  namespace: prom-grafana
spec:
  ports:
  - port: 80
    targetPort: 3000
  selector:
    k8s-app: grafana
  # type: NodePort
  type: ClusterIP

- Create it with kubectl create and check it

[root@k8s-master-01 prom+grafana]# kubectl create -f grafana-svc.yaml

service/grafana-server created

[root@k8s-master-01 prom+grafana]# kubectl get svc -n prom-grafana

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

grafana-server ClusterIP 10.102.129.245 3000/TCP 2m3s

prometheus-server ClusterIP 10.96.17.72 9090/TCP 12m

- Create an Ingress to reach the Grafana Service

---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: grafana-server
  namespace: prom-grafana
  annotations:
    nginx.ingress.kubernetes.io/proxy-body-size: "20M"
    # nginx.ingress.kubernetes.io/ssl-redirect: false
    # nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - host: graf.drifter.net
    http:
      paths:
      - path: /
        backend:
          serviceName: grafana-server
          servicePort: 80
status:
  loadBalancer:
    ingress:
    - ip: 10.10.100.74
    - ip: 10.10.100.75
    - ip: 10.10.100.76
    - ip: 10.10.100.77
    - ip: 10.10.100.78
    - ip: 10.10.100.79

- Create it and check it

[root@k8s-master-01 prom+grafana]# kubectl create -f grafana-ing.yaml

ingress.extensions/grafana-server created

[root@k8s-master-01 prom+grafana]# kubectl get ing -n prom-grafana

NAME CLASS HOSTS ADDRESS PORTS AGE

grafana-server graf.drifter.net 80 28m

prometheus prometheus.drifter.net 80 170m

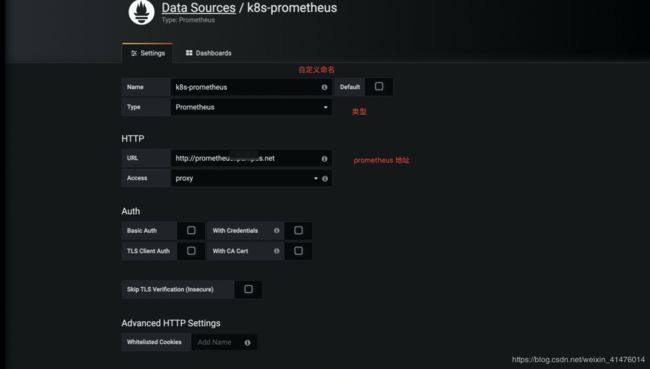

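- Add Prometheus as a data source in the Grafana UI

Open http://graf.drifter.net in a browser.

Start configuring in the Grafana web UI: choose Create your first data source.

Click Save & Test at the bottom left. The message Data source is working shows that Grafana has connected to the Prometheus data source.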
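The data source can also be described as a JSON payload for Grafana's HTTP API (`POST /api/datasources`) instead of being filled in by hand. The sketch below only builds that payload. It assumes Grafana reaches Prometheus through the in-cluster Service created earlier, and the data source name "Prometheus" is an arbitrary choice.

```python
import json


def prometheus_datasource(name, service, namespace, port=9090):
    """Describe a Prometheus data source reached through a k8s Service."""
    return {
        "name": name,
        "type": "prometheus",
        # In-cluster DNS name of the Service: <service>.<namespace>.svc
        "url": f"http://{service}.{namespace}.svc:{port}",
        "access": "proxy",  # Grafana's backend queries Prometheus, not the browser
        "isDefault": True,
    }


payload = prometheus_datasource("Prometheus", "prometheus-server", "prom-grafana")
body = json.dumps(payload)  # request body for POST /api/datasources
```

The same URL, http://prometheus-server.prom-grafana.svc:9090, is what would go into the URL field of the data source form under this assumption.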

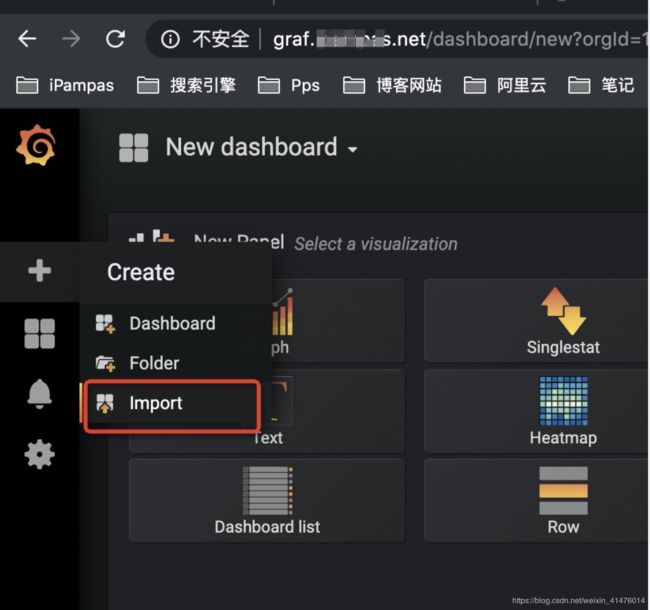

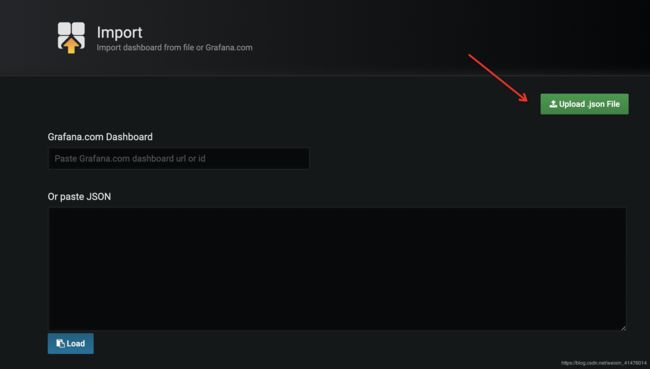

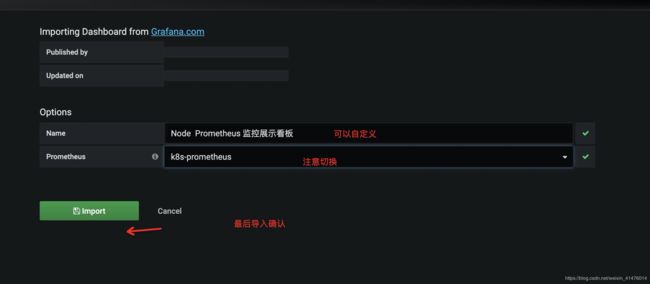

Import dashboards next. They can be searched for at the link below (the dashboards themselves are not shown here):

https://grafana.com/dashboards?dataSource=prometheus&search=kubernetes

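The node_exporter.json dashboard can also be imported directly; it displays the node-level metrics.

- Import the dashboard

- Final result

Further dashboards can be imported the same way. With that, the Prometheus + Grafana monitoring deployment is complete.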