MapReduce programming conventions: WordCount in practice, plus a Hadoop serialization example

I. The program has three parts: Mapper, Reducer, and Driver

This section implements the MapReduce version of the word count example in Java.

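1. Mapper stage

(1) A user-defined Mapper must extend the org.apache.hadoop.mapreduce.Mapper class.

(2) The Mapper's input data takes the form of KV pairs.

(3) The Mapper's business logic is implemented in the map() method.

(4) The Mapper's output data also takes the form of KV pairs.

(5) The map() method (in the MapTask process) is called once for each input KV pair.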

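2. Reducer stage

(1) A user-defined Reducer must extend the org.apache.hadoop.mapreduce.Reducer class.

(2) The Reducer's input types match the Mapper's output types, and are also KV pairs.

(3) The Reducer's business logic is implemented in the reduce() method.

(4) The ReduceTask process calls reduce() once for each group of KV pairs that share the same key.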
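
The rules above can be sketched in plain Java without any Hadoop classes. This is only an illustration of the data flow: the class name WordCountSketch and its methods are made up for this sketch, and the in-memory grouping stands in for the shuffle that the framework performs between the two stages.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java sketch of the map -> shuffle -> reduce flow; no Hadoop classes are used.
class WordCountSketch {

    // "Map" step: called once per input line, emits one (word, 1) pair per word.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String w : line.split(" ")) {
            if (!w.isEmpty()) {
                out.add(Map.entry(w, 1));
            }
        }
        return out;
    }

    // "Reduce" step: called once per key, with all values collected for that key.
    static int reduce(String key, List<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    // Driver: maps every line, groups the pairs by key (the "shuffle"), then reduces each group.
    static Map<String, Integer> run(List<String> lines) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            for (Map.Entry<String, Integer> kv : map(line)) {
                grouped.computeIfAbsent(kv.getKey(), k -> new ArrayList<>()).add(kv.getValue());
            }
        }
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : grouped.entrySet()) {
            result.put(group.getKey(), reduce(group.getKey(), group.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        // prints {hadoop=1, hello=2, world=1}
        System.out.println(run(List.of("hello world", "hello hadoop")));
    }
}
```

In a real job the framework does the grouping and calls map() and reduce() itself; user code only supplies the two methods and a driver.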

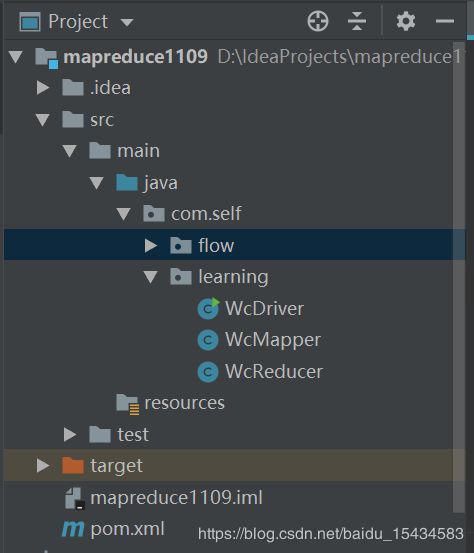

The project layout is as follows.

Create three Java classes: WcDriver, WcMapper, and WcReducer.

1. Create the WcMapper class with the following content

package com.self.learning;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

// Input types: <byte offset of the line within the input file, line content> = <LongWritable, Text>
// Output types: <word, 1> = <Text, IntWritable>
public class WcMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    // Reused across map() calls to avoid allocating new objects for every word
    private Text word = new Text();
    private IntWritable one = new IntWritable(1);

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Get one line of input
        String line = value.toString();
        // Split the line on spaces
        String[] words = line.split(" ");
        // Hand each word to the framework as (word, 1)
        for (String w : words) {
            // context.write(new Text(w), new IntWritable(1)); // creates many new objects, which triggers frequent garbage collection
            this.word.set(w);
            context.write(this.word, this.one);
        }
    }
}

2. Create the WcReducer class

package com.self.learning;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

// The output of the map tasks is the input of reduce,
// so the reduce input is (word, 1) = <Text, IntWritable>
// and the reduce output is (word, n) = <Text, IntWritable>
public class WcReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

    private IntWritable total = new IntWritable();

    // Values arrive grouped by key: one key with many values, so values is an Iterable
    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
        // Sum the values that share this key
        int sum = 0;
        for (IntWritable value : values) {
            sum += value.get();
        }
        // Wrap the result and emit it
        total.set(sum);
        context.write(key, this.total);
    }
}

3. Create the WcDriver class

package com.self.learning;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class WcDriver {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        // 1. Get a Job instance
        Job job = Job.getInstance(new Configuration());

        // 2. Set the jar by locating the one that contains this class
        job.setJarByClass(WcDriver.class);

        // 3. Set the mapper and reducer
        job.setMapperClass(WcMapper.class);
        job.setReducerClass(WcReducer.class);

        // 4. Set the output types of the mapper and of the job (reducer)
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        // 5. Set the input and output paths (taken from the command-line arguments)
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // 6. Submit the job, wait for it to finish, and exit with 0 on success
        boolean b = job.waitForCompletion(true);
        System.exit(b ? 0 : 1);
    }
}

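II. Hadoop serialization

What is serialization?

Serialization converts an in-memory object into a byte sequence (or another data-transfer format) so that it can be stored on disk (persisted) or sent over the network.

Deserialization converts a received byte sequence, or data persisted on disk, back into an in-memory object.

Why not use Java serialization?

Java serialization (Serializable) is a heavyweight framework: a serialized object carries a lot of extra information (checksums, headers, the class hierarchy, and so on), which makes it inefficient to send over the network. Hadoop therefore developed its own serialization mechanism (Writable).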
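
To make the overhead concrete, here is a small sketch using only java.io that compares the two approaches for a record with three long fields. The class SerializedSizeSketch and its nested Flow type are hypothetical names for this illustration; writing the raw fields through DataOutputStream mimics what a Writable-style write() method does, but no Hadoop class is involved.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.Serializable;

class SerializedSizeSketch {

    // Hypothetical record with three long fields, used only for this comparison.
    static class Flow implements Serializable {
        private static final long serialVersionUID = 1L;
        long up;
        long down;
        long sum;

        Flow(long up, long down) {
            this.up = up;
            this.down = down;
            this.sum = up + down;
        }
    }

    // Size in bytes when the object goes through Java's built-in serialization.
    static int javaSerializedSize(Flow f) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(f);
        }
        return bytes.size();
    }

    // Size in bytes when only the field values are written, one after another.
    static int rawFieldSize(Flow f) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeLong(f.up);
            out.writeLong(f.down);
            out.writeLong(f.sum);
        }
        return bytes.size();
    }

    public static void main(String[] args) throws IOException {
        Flow f = new Flow(100L, 200L);
        System.out.println("Java serialization: " + javaSerializedSize(f) + " bytes");
        System.out.println("Raw fields only:    " + rawFieldSize(f) + " bytes");
    }
}
```

The raw form is exactly 24 bytes (three 8-byte longs), while the Java-serialized form also carries a stream header and a class descriptor, so it is larger.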

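Characteristics of Hadoop serialization:

- Compact: makes efficient use of storage space

- Fast: little extra overhead when reading and writing data

- Extensible: can be upgraded as the communication protocol evolves

- Interoperable: supports interaction across multiple languages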

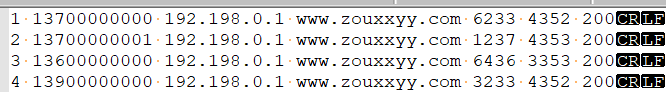

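Suppose we need the total upstream traffic, total downstream traffic, and total traffic for each phone number.

The data file is as follows (note: this is not real data). Fields are separated by spaces; the second column is the user's phone number, the third-from-last column is the upstream traffic, and the second-from-last column is the downstream traffic.

In this case the map output key should be the phone number, and the value should be an object holding (upstream, downstream, and the sum of the two).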
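
As a sketch of how one record turns into that key and value, the plain-Java snippet below extracts the phone number and the traffic figures from a single line. The sample line and the class name FlowRecordSketch are invented for illustration; only the column positions (second column, third-from-last, second-from-last) come from the description above.

```java
class FlowRecordSketch {

    // The key: the phone number in the second column.
    static String parsePhone(String line) {
        String[] fields = line.split(" ");
        return fields[1];
    }

    // The value: {upstream, downstream, upstream + downstream}.
    static long[] parseFlows(String line) {
        String[] fields = line.split(" ");
        long up = Long.parseLong(fields[fields.length - 3]);   // third-from-last column
        long down = Long.parseLong(fields[fields.length - 2]); // second-from-last column
        return new long[] {up, down, up + down};
    }

    public static void main(String[] args) {
        // Made-up sample record; the columns other than phone/up/down are placeholders.
        String line = "1 13700000000 192.168.0.1 www.example.com 1000 2000 200";
        long[] flows = parseFlows(line);
        // prints 13700000000 -> up=1000, down=2000, sum=3000
        System.out.println(parsePhone(line) + " -> up=" + flows[0] + ", down=" + flows[1] + ", sum=" + flows[2]);
    }
}
```

Indexing from the end of the array keeps the parsing correct even if the number of middle columns varies between records.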

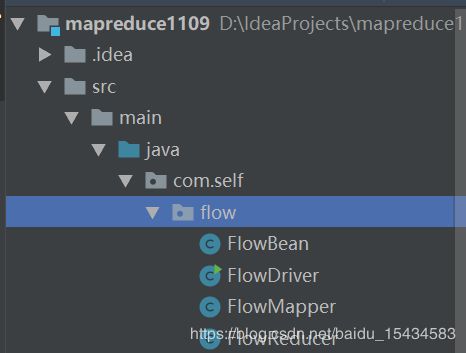

The FlowBean class implements our custom data type.

1. Create the FlowBean class

package com.self.flow;

import org.apache.hadoop.io.Writable;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

// Must implement Hadoop's serialization interface, Writable
public class FlowBean implements Writable {

    private long upFlow;
    private long downFlow;
    private long sumFlow;

    // No-argument constructor, needed so the framework can instantiate the bean when deserializing
    public FlowBean() {
    }

    @Override
    public String toString() {
        return upFlow + "\t" + downFlow + "\t" + sumFlow;
    }

    // Convenience setter: sets upstream and downstream and derives the total
    public void set(long upFlow, long downFlow) {
        this.upFlow = upFlow;
        this.downFlow = downFlow;
        this.sumFlow = upFlow + downFlow;
    }

    public long getUpFlow() {
        return upFlow;
    }

    public void setUpFlow(long upFlow) {
        this.upFlow = upFlow;
    }

    public long getDownFlow() {
        return downFlow;
    }

    public void setDownFlow(long downFlow) {
        this.downFlow = downFlow;
    }

    // Note: fields must be read back in exactly the same order in which they were written

    /**
     * Serialization method.
     *
     * @param dataOutput the stream the fields are written to
     * @throws IOException if writing fails
     */
    @Override
    public void write(DataOutput dataOutput) throws IOException {
        dataOutput.writeLong(upFlow);
        dataOutput.writeLong(downFlow);
        dataOutput.writeLong(sumFlow);
    }

    /**
     * Deserialization method.
     *
     * @param dataInput the stream the fields are read from
     * @throws IOException if reading fails
     */
    @Override
    public void readFields(DataInput dataInput) throws IOException {
        upFlow = dataInput.readLong();
        downFlow = dataInput.readLong();
        sumFlow = dataInput.readLong();
    }
}
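
The write/readFields pair can be exercised without a cluster. The sketch below is a stand-alone approximation using only java.io: its nested Flow class copies the three fields and the two methods but does not implement Hadoop's Writable, and the names RoundTripSketch and roundTrip are made up for this example. It writes an object to a byte array and reads it back into a fresh instance, which is roughly what happens when a value travels from a map task to a reduce task.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;

class RoundTripSketch {

    // Stand-in for FlowBean: same fields and method shapes, but no Hadoop dependency.
    static class Flow {
        long upFlow;
        long downFlow;
        long sumFlow;

        void set(long upFlow, long downFlow) {
            this.upFlow = upFlow;
            this.downFlow = downFlow;
            this.sumFlow = upFlow + downFlow;
        }

        // Fields are written in the order up, down, sum ...
        void write(DataOutput out) throws IOException {
            out.writeLong(upFlow);
            out.writeLong(downFlow);
            out.writeLong(sumFlow);
        }

        // ... and must be read back in that same order.
        void readFields(DataInput in) throws IOException {
            upFlow = in.readLong();
            downFlow = in.readLong();
            sumFlow = in.readLong();
        }
    }

    // Serializes the object to bytes, then deserializes into a new, empty instance.
    static Flow roundTrip(Flow original) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        original.write(new DataOutputStream(bytes));

        Flow copy = new Flow();
        copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
        return copy;
    }

    public static void main(String[] args) throws IOException {
        Flow flow = new Flow();
        flow.set(10L, 32L);
        Flow copy = roundTrip(flow);
        // prints 10 32 42
        System.out.println(copy.upFlow + " " + copy.downFlow + " " + copy.sumFlow);
    }
}
```

If readFields read the fields in a different order than write wrote them, the copy would silently end up with its values swapped, because the byte stream carries no field names.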

2. Create the FlowMapper class

package com.self.flow;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

// Input: <line offset, line content>; output: <phone number, FlowBean>
public class FlowMapper extends Mapper<LongWritable, Text, Text, FlowBean> {

    // Reused across map() calls to avoid allocating new objects for every record
    private Text phone = new Text();
    private FlowBean flow = new FlowBean();

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Split the record on spaces
        String[] fields = value.toString().split(" ");
        // The second column is the phone number
        phone.set(fields[1]);
        // Third-from-last column is upstream traffic, second-from-last is downstream traffic
        long upFlow = Long.parseLong(fields[fields.length - 3]);
        long downFlow = Long.parseLong(fields[fields.length - 2]);
        flow.set(upFlow, downFlow);
        context.write(phone, flow);
    }
}

3. Create the FlowReducer class

package com.self.flow;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

// Input: <phone number, FlowBean>; output: <phone number, FlowBean holding the totals>
public class FlowReducer extends Reducer<Text, FlowBean, Text, FlowBean> {

    private FlowBean sumFlow = new FlowBean();

    @Override
    protected void reduce(Text key, Iterable<FlowBean> values, Context context) throws IOException, InterruptedException {
        // Accumulate upstream and downstream traffic for this phone number
        long sumUpFlow = 0;
        long sumDownFlow = 0;
        for (FlowBean value : values) {
            sumUpFlow += value.getUpFlow();
            sumDownFlow += value.getDownFlow();
        }
        // set() also computes the overall total
        sumFlow.set(sumUpFlow, sumDownFlow);
        context.write(key, sumFlow);
    }
}

4. Create the FlowDriver class

package com.self.flow;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class FlowDriver {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        // Get a Job instance and set the jar by locating the one that contains this class
        Job job = Job.getInstance(new Configuration());
        job.setJarByClass(FlowDriver.class);

        // Set the mapper and reducer
        job.setMapperClass(FlowMapper.class);
        job.setReducerClass(FlowReducer.class);

        // Both the map output and the final output are <Text, FlowBean>
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(FlowBean.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(FlowBean.class);

        // Input and output paths come from the command-line arguments
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // Submit the job, wait for it to finish, and exit with 0 on success
        boolean b = job.waitForCompletion(true);
        System.exit(b ? 0 : 1);
    }
}