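HBase Day 1: HBase Components and Architecture, Installing HBase and Deploying a Cluster, HBase Shell Operations, HBase Data Structures, Namespaces, Internals, Read/Write Flows, Flush and Compaction, hbase-default.xml Configuration Explained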

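Contents

Chapter 1 Introduction to HBase

1.1 What Is HBase

1.2 HBase Features

1.3 HBase Architecture

1.3 Roles in HBase

1.3.1 HMaster

1.3.2 RegionServer

1.3.3 Other Components

Chapter 2 Installing HBase

2.1 Deploying ZooKeeper

2.2 Deploying Hadoop

2.3 Unpacking HBase

2.4 HBase Configuration Files

2.5 Distributing HBase to the Other Cluster Nodes

2.6 Starting the HBase Services

2.7 Viewing the HBase Web UI

Chapter 3 HBase Shell Operations

3.1 Basic Operations

3.2 Table Operations

Chapter 4 HBase Data Structures

4.1 RowKey

4.2 Column Family

4.3 Cell

4.4 Time Stamp

4.5 Namespaces (Comparable to Databases in MySQL)

Chapter 5 HBase Internals

5.1 Read Flow

5.2 Write Flow

5.3 Data Flush Process

5.4 Data Compaction Process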

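Chapter 1 Introduction to HBase

1.1 What Is HBase

HBase grew out of Google's BigTable paper and was inspired by its ideas. It started as a Hadoop subproject, is now a top-level Apache project, and is used to store structured data.

Official website: http://hbase.apache.org

-- 2006: Google published the BigTable paper

-- 2006: development of HBase began

-- 2008: while Beijing successfully hosted the Olympic Games, programmers quietly made HBase a Hadoop subproject

-- 2010: HBase became an Apache top-level project

-- Today: many companies have built their own distributions on top of it, and you have started using it too.

HBase is a highly reliable, high-performance, column-oriented, scalable distributed storage system. With HBase, a large-scale structured storage cluster can be built on inexpensive PC servers.

The goal of HBase is to store and process large data sets. More specifically, it aims to handle large tables made up of vast numbers of rows and columns using only commodity hardware.

HBase is an open-source implementation of Google Bigtable, but the two differ in many respects. For example, Google Bigtable uses GFS as its file storage system, while HBase uses Hadoop HDFS; Google runs MapReduce to process the massive data in Bigtable, and HBase likewise uses Hadoop MapReduce to process the massive data in HBase; Google Bigtable uses Chubby as its coordination service, while HBase uses ZooKeeper as the counterpart.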

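1.2 HBase Features

1) Massive storage

HBase is suited to storing PB-scale data. Even with PB-scale data stored on inexpensive PCs, it can return data within tens to hundreds of milliseconds. This is closely tied to how easily HBase scales: it is precisely because HBase scales so well that storing massive data becomes practical.

2) Column-oriented storage

Column-oriented storage here really means column-family storage: HBase stores data by column family. A column family can hold a very large number of columns, and column families must be specified when the table is created.

3) Easy scaling

HBase scales along two dimensions: the upper processing layer (RegionServer) and the storage layer (HDFS).

Adding RegionServer machines scales the cluster horizontally, raising the processing capacity of the upper layer and the number of Regions HBase can serve.

Note: a RegionServer manages Regions and serves client requests; this is covered in detail later.

Adding DataNode machines expands the storage layer, raising HBase's storage capacity and the read/write throughput of the backend storage.

4) High concurrency

Because most HBase deployments today run on inexpensive PCs, the latency of a single I/O is not small, typically tens to hundreds of milliseconds. High concurrency here mainly means that under concurrent load the latency of a single I/O degrades very little, so the service combines high concurrency with low latency.

5) Sparse

Sparseness refers to the flexibility of HBase columns: within a column family you can define any number of columns, and a column whose data is empty takes up no storage space.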

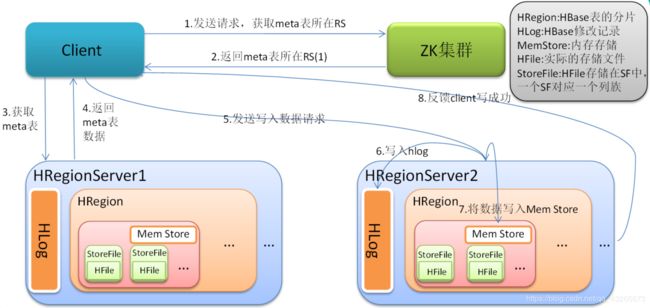

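1.3 HBase Architecture

The HBase architecture is shown in Figure 1:

Figure 1 HBase architecture

As the figure shows, HBase consists of several components: Client, ZooKeeper, Master, HRegionServer, and HDFS. Their functions are described below:

1) Client

The Client contains the interfaces for accessing HBase. It also maintains a cache to speed up access, for example a cache of the .META. metadata (the table is named hbase:meta in HBase 0.96 and later).

2) ZooKeeper

HBase uses ZooKeeper for Master high availability, RegionServer monitoring, the entry point to the metadata, and maintenance of cluster configuration. Specifically:

ZooKeeper ensures that only one Master is running in the cluster; if the Master fails, a new Master is chosen through a competition mechanism to provide service

ZooKeeper monitors the state of the RegionServers; when a RegionServer fails, it notifies the Master through callbacks that a RegionServer has gone online or offline

ZooKeeper stores the unified entry address of the metadata (ZooKeeper holds only the location of the meta table; the meta table itself is served by a RegionServer, as the walkthrough in section 5.1 shows)

3) HMaster

The main responsibilities of the master node are:

Assigning Regions to RegionServers

Maintaining load balance across the cluster

Maintaining the cluster's metadata

Detecting failed Regions and reassigning them to healthy RegionServers (the RegionServer maintains the metadata of the corresponding files on HDFS)

Coordinating the splitting of the corresponding HLog when a RegionServer fails

4) HRegionServer

The HRegionServer handles users' read and write requests directly; it is the node that does the real work. Its functions are:

Managing the Regions assigned to it by the master

Handling read and write requests from clients

Interacting with the underlying HDFS and storing data in HDFS

Splitting Regions once they grow too large

Compacting StoreFiles

Each RegionServer has one HLog. The HLog is a write-ahead log of changes, similar in role to the edits log in HDFS

A Region is like a horizontal slice of a table hosted on a RegionServer; a table can be split horizontally into Regions

A Region can contain multiple Stores, one per column family; each Store holds the data of one column family. After a split, one column family may be stored in Stores under several Regions

Data is first held in memory, and each flush produces one StoreFile. HFile is the storage format; StoreFile is the storage component

Region and table: a Region belongs to exactly one table, while a table can be spread across Regions on different RegionServers

Store and column family: a Store corresponds to exactly one column family, but after splitting, a column family can live in the Stores of Regions on different RegionServers

5) HDFS

HDFS provides the underlying data storage for HBase and supports its high availability (the HLog is stored in HDFS). Specifically:

Provides the underlying distributed storage for metadata and table data

Keeps multiple replicas of the data, ensuring high reliability and high availability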

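1.3 Roles in HBase

1.3.1 HMaster

Functions

1. Monitors RegionServers

2. Handles RegionServer failover

3. Handles metadata changes

4. Handles Region assignment and relocation

5. Balances the data load during idle time

6. Publishes its location to clients through ZooKeeper

1.3.2 RegionServer

Functions

1. Stores the actual HBase data

2. Serves the Regions assigned to it

3. Flushes the cache to HDFS

4. Maintains the HLog

5. Performs compactions

6. Handles Region splits

1.3.3 Other Components

1. Write-Ahead Logs

The log of modifications to HBase. When data is written to HBase, it is not written straight to disk; it stays in memory for a while (the time and size thresholds are configurable). Keeping data only in memory makes loss more likely, so to guard against that the data is first written to a file called the Write-Ahead Log and only then written to memory. If the system fails, the data can be rebuilt from this log.

2. Region

A shard of an HBase table. A table is split by RowKey into different Regions stored on RegionServers, and one RegionServer can host multiple Regions.

3. Store

HFiles are stored in a Store; one Store corresponds to one column family of an HBase table.

4. MemStore

As the name suggests, this is an in-memory store that holds the current data operations: once data has been saved to the WAL, the RegionServer stores the key-value pairs in memory.

5. HFile

The actual physical file that holds the raw data on disk. StoreFiles are stored in HDFS in the HFile format.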
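The Region entry above says that a table is split by RowKey into Regions. As a rough illustration only, the sketch below models each Region as a half-open RowKey range and finds the owning Region with a binary search. The split points, the server assignment, and the `locate_region` helper are hypothetical; they are not part of any HBase API.

```python
import bisect

# Hypothetical split points: each region covers [start_key, next_start_key).
# The first region starts at the empty key, which acts as an open lower bound.
REGION_START_KEYS = [b"", b"1000", b"2000", b"3000"]
REGION_SERVERS = ["hadoop102", "hadoop103", "hadoop104", "hadoop102"]


def locate_region(row_key: bytes) -> tuple:
    """Return (region index, hosting server) for a row key."""
    # bisect_right finds the first start key greater than row_key;
    # the region just before that position contains the key.
    index = bisect.bisect_right(REGION_START_KEYS, row_key) - 1
    return index, REGION_SERVERS[index]


print(locate_region(b"1001"))
print(locate_region(b"2500"))
```

Keys are compared as raw bytes here, which is also how HBase orders RowKeys.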

Chapter 2 Installing HBase

2.1 Deploying ZooKeeper

First make sure the ZooKeeper cluster is deployed correctly, then start it:

[atguigu@hadoop102 zookeeper-3.4.10]$ bin/zkServer.sh start

[atguigu@hadoop103 zookeeper-3.4.10]$ bin/zkServer.sh start

[atguigu@hadoop104 zookeeper-3.4.10]$ bin/zkServer.sh start

2.2 Deploying Hadoop

Make sure the Hadoop cluster is deployed correctly, then start it:

[atguigu@hadoop102 hadoop-2.7.2]$ sbin/start-dfs.sh

[atguigu@hadoop103 hadoop-2.7.2]$ sbin/start-yarn.sh

2.3 Unpacking HBase

Prepare the hadoop-hbase-jar and hbase-bin packages; use the hbase-bin package.

Extract HBase to the target directory:

[atguigu@hadoop102 software]$ tar -zxvf hbase-1.3.1-bin.tar.gz -C /opt/module

重命名

mv hbase-1.3.1 hbase

2.4 HBase的配置文件

修改HBase对应的配置文件。

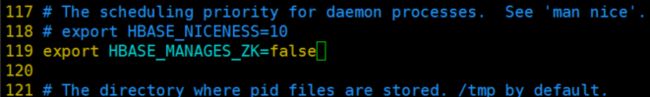

1)hbase-env.sh修改内容:

27行export JAVA_HOME=/opt/module/jdk1.8.0_144

128行export HBASE_MANAGES_ZK=false

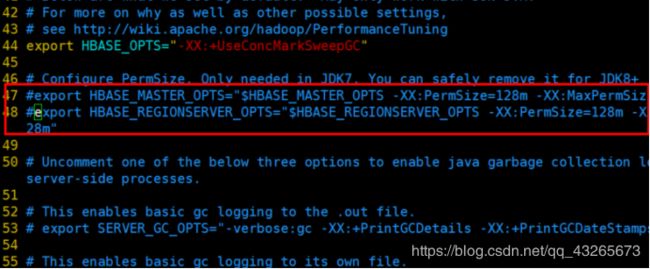

注释掉 46 47两行

2)hbase-site.xml修改内容:

hbase.rootdir

hdfs://hadoop102:9000/hbase

hbase.cluster.distributed

true

hbase.master.port

16000

hbase.zookeeper.quorum

hadoop102:2181,hadoop103:2181,hadoop104:2181

hbase.zookeeper.property.dataDir

/opt/module/zookeeper-3.4.10/zkData

3)regionservers:

| hadoop102 hadoop103 hadoop104 |

4)软连接hadoop配置文件到hbase:

[atguigu@hadoop102 module]$ ln -s /opt/module/hadoop-2.7.2/etc/hadoop/core-site.xml /opt/module/hbase/conf/core-site.xml

[atguigu@hadoop102 module]$ ln -s /opt/module/hadoop-2.7.2/etc/hadoop/hdfs-site.xml /opt/module/hbase/conf/hdfs-site.xml
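For reference, hbase-site.xml uses the Hadoop-style layout of a `<configuration>` root with one `<property>` element (a `<name>` and a `<value>`) per setting. The sketch below renders the properties from step 2 in that layout. The `render_site_xml` helper is a hypothetical name used only for illustration, and the host names and paths are the ones from this tutorial's cluster.

```python
import xml.etree.ElementTree as ET

# Values copied from step 2 above; adjust host names and paths to your cluster.
SETTINGS = {
    "hbase.rootdir": "hdfs://hadoop102:9000/hbase",
    "hbase.cluster.distributed": "true",
    "hbase.master.port": "16000",
    "hbase.zookeeper.quorum": "hadoop102:2181,hadoop103:2181,hadoop104:2181",
    "hbase.zookeeper.property.dataDir": "/opt/module/zookeeper-3.4.10/zkData",
}


def render_site_xml(settings: dict) -> str:
    """Build a <configuration> document with one <property> per setting."""
    root = ET.Element("configuration")
    for name, value in settings.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


print(render_site_xml(SETTINGS))
```

The output is a single unindented line; it is meant to show the element structure, not to replace editing the file by hand.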

2.5 Distributing HBase to the Other Cluster Nodes

[atguigu@hadoop102 module]$ xsync hbase/

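2.6 Starting the HBase Services

1. Startup method 1

[atguigu@hadoop102 hbase]$ bin/hbase-daemon.sh start master

[atguigu@hadoop102 hbase]$ bin/hbase-daemon.sh start regionserver

Start the RegionServer on hadoop103 and hadoop104 as well, then check the processes with jpsall.

Note: if the clocks of the cluster nodes are not synchronized, the RegionServer fails to start and throws ClockOutOfSyncException.

How to fix it:

a. Synchronize the cluster time service

See: Getting Started with Hadoop (Part 2): Pseudo-Distributed Setup, Fully Distributed Setup, Passwordless SSH Login, the xsync Cluster Distribution Script, Cluster Time Synchronization, Running MapReduce on HDFS, Yarn, jpsall Configuration, Compiling Hadoop from Source, Common Errors

b. Set the hbase.master.maxclockskew property to a larger value

2. Startup method 2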

[atguigu@hadoop102 hbase]$ bin/start-hbase.sh

To stop the services:

[atguigu@hadoop102 hbase]$ bin/stop-hbase.sh

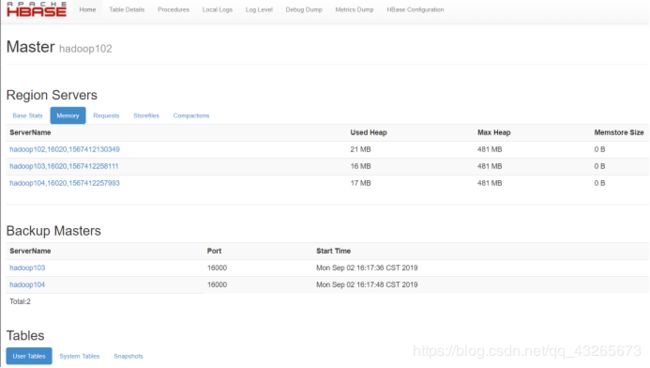

2.7 Viewing the HBase Web UI

Once HBase has started, you can open the HBase management page at "host:port", for example:

http://hadoop102:16010

第3章 HBase Shell操作

3.1 基本操作

注意:HBase中向左删除是用Ctrl + Backspace或Shift + Backspace组合键删除

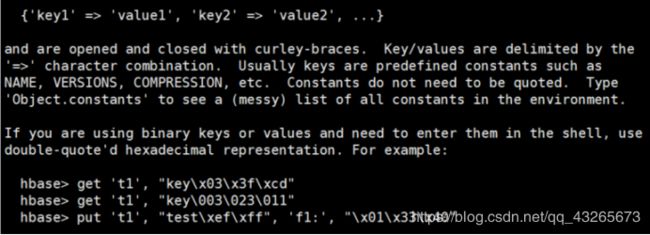

1.进入HBase客户端命令行

[atguigu@hadoop102 hbase]$ bin/hbase shell

2.查看帮助命令

hbase(main):001:0> help

3.查看当前数据库中有哪些表

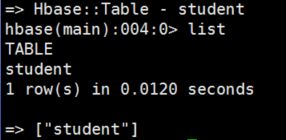

hbase(main):002:0> list

3.2 Table Operations

1. Create a table

hbase(main):002:0> create 'student','info'

2. Insert data into the table

hbase(main):003:0> put 'student','1001','info:sex','male'

hbase(main):004:0> put 'student','1001','info:age','18'

hbase(main):005:0> put 'student','1002','info:name','Janna'

hbase(main):006:0> put 'student','1002','info:sex','female'

hbase(main):007:0> put 'student','1002','info:age','20'

3. Scan the table data (keywords such as STARTROW are case-sensitive)

hbase(main):008:0> scan 'student'

hbase(main):009:0> scan 'student',{STARTROW => '1001', STOPROW => '1001'}

hbase(main):010:0> scan 'student',{STARTROW => '1001'}

4. View the table schema

hbase(main):011:0> describe 'student'

5. Update the data of a given field

hbase(main):012:0> put 'student','1001','info:name','Nick'

hbase(main):013:0> put 'student','1001','info:age','100'

6. View the data of a given row or a given "column family:column"

hbase(main):014:0> get 'student','1001'

hbase(main):015:0> get 'student','1001','info:name'

7. Count the rows in the table

hbase(main):021:0> count 'student'

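8. Delete data

Delete all data for a rowkey:

hbase(main):016:0> deleteall 'student','1001'

Delete one column of a rowkey:

hbase(main):017:0> delete 'student','1002','info:sex'

hbase(main):017:0> delete 'student','1002','info:sex',timestamp

(Replace timestamp with the numeric timestamp of the cell to delete.)

9. Truncate the table

hbase(main):018:0> truncate 'student'

Note: truncate first disables the table and then truncates it.

10. Drop the table

First put the table in the disabled state:

hbase(main):019:0> disable 'student'

Only then can the table be dropped:

hbase(main):020:0> drop 'student'

Note: dropping an enabled table directly fails with: ERROR: Table student is enabled. Disable it first.

11. Alter the table

Keep 3 versions of the data in the info column family:

hbase(main):022:0> alter 'student',{NAME=>'info',VERSIONS=>3}

hbase(main):022:0> get 'student','1001',{COLUMN=>'info:name',VERSIONS=>3}

Note: after changing the number of versions, insert the data again to test.

Now try a delete: if no timestamp is given, all versions are removed.

hbase(main):012:0> delete 'student','1001','info:name'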

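Chapter 4 HBase Data Structures

4.1 RowKey

As in other NoSQL databases, the RowKey is the primary key used to retrieve records. There are only three ways to access rows in an HBase table:

1. By a single RowKey

2. By a range of RowKeys (range scan)

3. By a full table scan

The RowKey can be any string (maximum length 64 KB; in practice usually 10 to 100 bytes). Internally, HBase stores the RowKey as a byte array. Data is stored sorted by RowKey in lexicographic (byte) order. When designing RowKeys, make full use of this sorted storage and place rows that are often read together next to each other (locality).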
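Byte-order sorting has a consequence that is easy to overlook: numeric RowKeys stored as text do not sort numerically. The sketch below shows the effect in plain Python, which compares `bytes` values the same lexicographic way. The zero-padding width of 4 is an arbitrary choice for the example, not an HBase setting.

```python
# Row keys as raw bytes, the way HBase compares them.
raw_keys = [b"9", b"10", b"100", b"2"]

# Plain byte-order sorting: "10" and "100" sort before "2" and "9".
print(sorted(raw_keys))

# Zero-padding to a fixed width (4 here, chosen only for illustration)
# makes byte order agree with numeric order.
padded_keys = [str(n).zfill(4).encode() for n in (9, 10, 100, 2)]
print(sorted(padded_keys))
```

Fixed-width or otherwise order-preserving encodings are a common way to keep related rows adjacent under this ordering.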

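4.2 Column Family

Column family: every column in an HBase table belongs to a column family. Column families are part of the table schema (columns are not) and must be defined before the table is used. Column names are prefixed with the column family; for example, courses:history and courses:math both belong to the courses column family.

4.3 Cell

A cell is the unit uniquely identified by {rowkey, column family:column, version}. The data in a cell has no type; it is all stored as bytes. The version is a timestamp.

Keywords: untyped, bytes

4.4 Time Stamp

In HBase, the storage unit determined by a rowkey and a column is called a cell. Each cell holds multiple versions of the same piece of data, indexed by timestamp. The timestamp is a 64-bit integer. HBase can assign it automatically at write time, in which case it is the current system time in milliseconds, or the client can assign it explicitly. An application that needs to avoid version conflicts must generate unique timestamps itself. Within each cell, versions are sorted by timestamp in descending order, so the newest data comes first.

To limit the storage and indexing burden of keeping too many versions, HBase offers two ways to reclaim versions: keep the last n versions of the data, or keep the versions from a recent period (for example, the last seven days). Both can be set per column family.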
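The version behaviour described above can be pictured with a small in-memory model. This is only a sketch under simplifying assumptions: the `VersionedCell` class is hypothetical, and it trims old versions immediately on every write, which is a simplification of how a real store reclaims them.

```python
import time


class VersionedCell:
    """Toy model of one cell: timestamp -> value, with a version limit."""

    def __init__(self, max_versions: int = 3):
        self.max_versions = max_versions
        self.versions = {}  # timestamp in milliseconds -> value bytes

    def put(self, value: bytes, timestamp: int = None) -> None:
        # Without an explicit timestamp, use the current time in milliseconds.
        if timestamp is None:
            timestamp = int(time.time() * 1000)
        self.versions[timestamp] = value
        # Keep only the newest max_versions entries.
        for old in sorted(self.versions, reverse=True)[self.max_versions:]:
            del self.versions[old]

    def get(self, versions: int = 1) -> list:
        # Newest first, matching the descending timestamp order.
        newest = sorted(self.versions, reverse=True)[:versions]
        return [(ts, self.versions[ts]) for ts in newest]


cell = VersionedCell(max_versions=3)
for ts, name in [(1, b"Nick"), (2, b"Tom"), (3, b"Ann"), (4, b"Lee")]:
    cell.put(name, timestamp=ts)
print(cell.get(versions=3))
```

With a limit of three versions, the oldest write (timestamp 1) is no longer retrievable after the fourth put.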

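4.5 Namespaces (Comparable to Databases in MySQL)

Structure of a namespace:

1) Table: every table is a member of a namespace, that is, a table must belong to some namespace; if none is specified, the table goes into the default namespace.

2) RegionServer group: a namespace contains a default RegionServer group.

3) Permission: a namespace lets us define access control lists (ACLs), for example for creating, reading, deleting, and updating tables.

4) Quota: a namespace can enforce a limit on the number of Regions it may contain.

Operations

Create a namespace:

create_namespace '20190902'

List namespaces:

list_namespace

Create a table in this namespace:

create '20190902:teachers','f1'

Drop the namespace:

disable '20190902:teachers'

drop '20190902:teachers'

drop_namespace '20190902'

Note: the tables in a namespace must be dropped before the namespace itself can be dropped.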

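Chapter 5 HBase Internals

5.1 Read Flow

The HBase read flow is shown in Figure 3

Figure 3 HBase read flow

1) The Client first contacts ZooKeeper and reads the location of the meta table's region;

2) it then reads the data in the meta table, which in turn stores the region information of the user tables;

3) using the namespace, table name, and rowkey, it finds the corresponding region information in the meta table;

4) it locates the RegionServer that hosts this region;

5) it looks up the region on that server;

6) it looks for the data in the MemStore first and, if the data is not there, reads from the BlockCache;

7) if the BlockCache does not have it either, it reads from the StoreFile (this ordering is for read efficiency);

8) data read from a StoreFile is not returned to the client directly; it is first written to the BlockCache and then returned to the client.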
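Steps 6 to 8 above can be sketched as a three-level lookup. This models only the order described in this section, using plain dictionaries; the `ReadPath` class and its attribute names are hypothetical, and a real RegionServer has to reconcile versions across these sources rather than stop at the first hit.

```python
class ReadPath:
    """Toy lookup order: MemStore, then BlockCache, then StoreFile."""

    def __init__(self, store_file: dict):
        self.mem_store = {}
        self.block_cache = {}
        self.store_file = store_file  # stands in for HFiles on HDFS

    def get(self, row_key: str):
        if row_key in self.mem_store:
            return self.mem_store[row_key], "MemStore"
        if row_key in self.block_cache:
            return self.block_cache[row_key], "BlockCache"
        if row_key in self.store_file:
            # Step 8: populate the cache before returning the value.
            value = self.store_file[row_key]
            self.block_cache[row_key] = value
            return value, "StoreFile"
        return None, "miss"


reader = ReadPath(store_file={"1001": "Nick"})
print(reader.get("1001"))  # first read comes from the StoreFile
print(reader.get("1001"))  # second read is served by the BlockCache
```

The second call shows why the cache fill in step 8 matters: repeated reads of the same row avoid going back to disk.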

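Detailed walkthrough:

On hadoop102, go to the ZooKeeper directory and run bin/zkCli.sh

ls / (locate the hbase node)

ls /hbase

get /hbase/meta-region-server (shows that the meta table is on hadoop102)

Stop the HRegionServer on hadoop102

Check meta-region-server again: it has switched to hadoop103

Exit zkCli with quit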

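5.2 Write Flow

The HBase write flow is shown in Figure 2

Figure 2 HBase write flow

1) The Client sends a write request to the HRegionServer;

2) the HRegionServer writes the data to the HLog (write-ahead log), for durability and recovery;

3) the HRegionServer writes the data to memory (MemStore);

4) it reports success to the Client.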
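The four write steps can be sketched as follows. This is a toy model, not HBase code: the `WritePath` class is hypothetical, a Python list stands in for the HLog, and `recover` illustrates why logging before updating memory allows the MemStore to be rebuilt after a crash.

```python
class WritePath:
    """Toy write path: append to the log first, then update memory."""

    def __init__(self):
        self.hlog = []       # stands in for the write-ahead log on HDFS
        self.mem_store = {}  # in-memory key-value store

    def put(self, row_key: str, column: str, value: str) -> bool:
        # Step 2: record the edit in the log so it survives a crash.
        self.hlog.append((row_key, column, value))
        # Step 3: apply the edit to the MemStore.
        self.mem_store[(row_key, column)] = value
        # Step 4: acknowledge the write.
        return True

    def recover(self) -> dict:
        """Rebuild the MemStore contents by replaying the log in order."""
        rebuilt = {}
        for row_key, column, value in self.hlog:
            rebuilt[(row_key, column)] = value
        return rebuilt


writer = WritePath()
writer.put("1001", "info:name", "Nick")
writer.put("1001", "info:age", "18")
writer.mem_store.clear()  # simulate losing memory in a crash
print(writer.recover())
```

Replaying the log in write order means the last edit to a cell wins, which matches what the MemStore held before the simulated crash.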

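5.3 Data Flush Process

1) When the MemStore reaches its threshold (128 MB by default, 64 MB in older versions), the data is flushed to disk, removed from memory, and the corresponding historical data in the HLog is deleted;

2) the data is stored in HDFS;

When the total MemStore size reaches 40% of the RegionServer's heap, a RegionServer-level flush is triggered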
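The two thresholds above can be written out as simple checks. The constants are the defaults quoted in this document (hbase.hregion.memstore.flush.size and hbase.regionserver.global.memstore.size); the function names and the 8 GB heap are assumptions made only for the example, and the comparison uses "reaches" as stated in the text.

```python
FLUSH_SIZE = 134217728        # hbase.hregion.memstore.flush.size (128 MB)
GLOBAL_MEMSTORE_RATIO = 0.4   # hbase.regionserver.global.memstore.size


def should_flush_region(memstore_bytes: int) -> bool:
    """Region-level check: one MemStore has reached the flush size."""
    return memstore_bytes >= FLUSH_SIZE


def should_flush_server(total_memstore_bytes: int, heap_bytes: int) -> bool:
    """Server-level check: all MemStores together reach 40% of the heap."""
    return total_memstore_bytes >= heap_bytes * GLOBAL_MEMSTORE_RATIO


heap = 8 * 1024**3  # hypothetical 8 GB RegionServer heap
print(should_flush_region(130 * 1024**2))
print(should_flush_server(3 * 1024**3, heap))
print(should_flush_server(4 * 1024**3, heap))
```

On an 8 GB heap the server-level limit works out to about 3.2 GB, so 3 GB of MemStore data stays below it and 4 GB exceeds it.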

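5.4 Data Compaction Process

1) When the number of store files reaches 4 (at most three are kept uncompacted), the RegionServer loads the files and merges them;

2) when the merged data exceeds the Region size limit (hbase.hregion.max.filesize: 256 MB in old versions, 10737418240 bytes in the hbase-default.xml listed below), the Region is split and the resulting Regions are assigned to different HRegionServers;

3) when an HRegionServer goes down, its HLog is split and handed to different HRegionServers to load, and .META. is updated;

4) Note: the HLog is synced to HDFS.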
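Step 1 amounts to a merge of several sorted files into one. The sketch below shows that idea with `heapq.merge`, keeping only the newest value per RowKey. It assumes each file is already sorted by RowKey and then by descending timestamp; the tuple layout, function names, and sample data are hypothetical, and the threshold of 3 is the hbase.hstore.compactionThreshold value from the listing below.

```python
import heapq

COMPACTION_THRESHOLD = 3  # hbase.hstore.compactionThreshold in the listing below


def needs_compaction(store_files: list) -> bool:
    """A Store with more files than the threshold is due for compaction."""
    return len(store_files) > COMPACTION_THRESHOLD


def compact(store_files: list) -> list:
    """Merge sorted (row_key, timestamp, value) files, keeping the newest value."""
    # Merge order: row key ascending, then timestamp descending.
    merged = heapq.merge(*store_files, key=lambda kv: (kv[0], -kv[1]))
    result = []
    for row_key, timestamp, value in merged:
        # The first entry seen for a row key is its newest version.
        if not result or result[-1][0] != row_key:
            result.append((row_key, timestamp, value))
    return result


files = [
    [("1001", 1, "Nick"), ("1003", 1, "Ann")],
    [("1001", 2, "Nicky")],
    [("1002", 3, "Janna")],
    [("1003", 4, "Anna")],
]
print(needs_compaction(files))
print(compact(files))
```

Because every input is already sorted, the merge streams through the files once instead of sorting all entries again.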

Appendix: annotated hbase-default.xml (hbase-default.xml explained)

hbase.tmp.dir

${java.io.tmpdir}/hbase-${user.name}

Temporary directory on the local filesystem.

Change this setting to point to a location more permanent

than '/tmp', the usual resolve for java.io.tmpdir, as the

'/tmp' directory is cleared on machine restart.

hbase.rootdir

${hbase.tmp.dir}/hbase

The directory shared by region servers and into

which HBase persists. The URL should be 'fully-qualified'

to include the filesystem scheme. For example, to specify the

HDFS directory '/hbase' where the HDFS instance's namenode is

running at namenode.example.org on port 9000, set this value to:

hdfs://namenode.example.org:9000/hbase. By default, we write

to whatever ${hbase.tmp.dir} is set to -- usually /tmp --

so change this configuration or else all data will be lost on

machine restart.

hbase.fs.tmp.dir

/user/${user.name}/hbase-staging

A staging directory in default file system (HDFS)

for keeping temporary data.

hbase.bulkload.staging.dir

${hbase.fs.tmp.dir}

A staging directory in default file system (HDFS)

for bulk loading.

hbase.cluster.distributed

false

The mode the cluster will be in. Possible values are

false for standalone mode and true for distributed mode. If

false, startup will run all HBase and ZooKeeper daemons together

in the one JVM.

hbase.zookeeper.quorum

localhost

Comma separated list of servers in the ZooKeeper ensemble

(This config. should have been named hbase.zookeeper.ensemble).

For example, "host1.mydomain.com,host2.mydomain.com,host3.mydomain.com".

By default this is set to localhost for local and pseudo-distributed

modes

of operation. For a fully-distributed setup, this should be set to a

full

list of ZooKeeper ensemble servers. If HBASE_MANAGES_ZK is set in

hbase-env.sh

this is the list of servers which hbase will start/stop ZooKeeper on as

part of cluster start/stop. Client-side, we will take this list of

ensemble members and put it together with the

hbase.zookeeper.clientPort

config. and pass it into zookeeper constructor as the connectString

parameter.

hbase.local.dir

${hbase.tmp.dir}/local/

Directory on the local filesystem to be used

as a local storage.

hbase.master.port

16000

The port the HBase Master should bind to.

hbase.master.info.port

16010

The port for the HBase Master web UI.

Set to -1 if you do not want a UI instance run.

hbase.master.info.bindAddress

0.0.0.0

The bind address for the HBase Master web UI

hbase.master.logcleaner.plugins

org.apache.hadoop.hbase.master.cleaner.TimeToLiveLogCleaner

A comma-separated list of BaseLogCleanerDelegate invoked

by

the LogsCleaner service. These WAL cleaners are called in order,

so put the cleaner that prunes the most files in front. To

implement your own BaseLogCleanerDelegate, just put it in HBase's classpath

and add the fully qualified class name here. Always add the above

default log cleaners in the list.

hbase.master.logcleaner.ttl

600000

Maximum time a WAL can stay in the .oldlogdir directory,

after which it will be cleaned by a Master thread.

hbase.master.hfilecleaner.plugins

org.apache.hadoop.hbase.master.cleaner.TimeToLiveHFileCleaner

A comma-separated list of BaseHFileCleanerDelegate

invoked by

the HFileCleaner service. These HFiles cleaners are called in order,

so put the cleaner that prunes the most files in front. To

implement your own BaseHFileCleanerDelegate, just put it in HBase's classpath

and add the fully qualified class name here. Always add the above

default log cleaners in the list as they will be overwritten in

hbase-site.xml.

hbase.master.catalog.timeout

600000

Timeout value for the Catalog Janitor from the master to

META.

hbase.master.infoserver.redirect

true

Whether or not the Master listens to the Master web

UI port (hbase.master.info.port) and redirects requests to the web

UI server shared by the Master and RegionServer.

hbase.regionserver.port

16020

The port the HBase RegionServer binds to.

hbase.regionserver.info.port

16030

The port for the HBase RegionServer web UI

Set to -1 if you do not want the RegionServer UI to run.

hbase.regionserver.info.bindAddress

0.0.0.0

The address for the HBase RegionServer web UI

hbase.regionserver.info.port.auto

false

Whether or not the Master or RegionServer

UI should search for a port to bind to. Enables automatic port

search if hbase.regionserver.info.port is already in use.

Useful for testing, turned off by default.

hbase.regionserver.handler.count

30

Count of RPC Listener instances spun up on RegionServers.

Same property is used by the Master for count of master handlers.

hbase.ipc.server.callqueue.handler.factor

0.1

Factor to determine the number of call queues.

A value of 0 means a single queue shared between all the handlers.

A value of 1 means that each handler has its own queue.

hbase.ipc.server.callqueue.read.ratio

0

Split the call queues into read and write queues.

The specified interval (which should be between 0.0 and 1.0)

will be multiplied by the number of call queues.

A value of 0 indicates that the call queues are not split, meaning that both

read and write

requests will be pushed to the same set of queues.

A value lower than 0.5 means that there will be less read queues than

write queues.

A value of 0.5 means there will be the same number of read and write

queues.

A value greater than 0.5 means that there will be more read queues

than write queues.

A value of 1.0 means that all the queues except one are used to

dispatch read requests.

Example: Given the total number of call queues being 10

a read.ratio of 0 means that: the 10 queues will contain both

read/write requests.

a read.ratio of 0.3 means that: 3 queues will contain only read

requests

and 7 queues will contain only write requests.

a read.ratio of 0.5 means that: 5 queues will contain only read

requests

and 5 queues will contain only write requests.

a read.ratio of 0.8 means that: 8 queues will contain only read

requests

and 2 queues will contain only write requests.

a read.ratio of 1 means that: 9 queues will contain only read requests

and 1 queue will contain only write requests.

hbase.ipc.server.callqueue.scan.ratio

0

Given the number of read call queues, calculated from the

total number

of call queues multiplied by the callqueue.read.ratio, the scan.ratio

property

will split the read call queues into small-read and long-read queues.

A value lower than 0.5 means that there will be less long-read queues

than short-read queues.

A value of 0.5 means that there will be the same number of short-read

and long-read queues.

A value greater than 0.5 means that there will be more long-read

queues than short-read queues

A value of 0 or 1 indicates that the same set of queues is used for gets and

scans.

Example: Given the total number of read call queues being 8

a scan.ratio of 0 or 1 means that: 8 queues will contain both long and

short read requests.

a scan.ratio of 0.3 means that: 2 queues will contain only long-read

requests

and 6 queues will contain only short-read requests.

a scan.ratio of 0.5 means that: 4 queues will contain only long-read

requests

and 4 queues will contain only short-read requests.

a scan.ratio of 0.8 means that: 6 queues will contain only long-read

requests

and 2 queues will contain only short-read requests.
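The read.ratio and scan.ratio examples above can be reproduced with a few lines of arithmetic. The sketch below is written to match those documented examples only; the rounding rules, the `split_call_queues` name, and the returned dictionary keys are assumptions made for illustration and are not taken from the HBase source.

```python
import math


def split_call_queues(total_queues: int, read_ratio: float, scan_ratio: float = 0.0) -> dict:
    """Split call queues into write, short-read, and long-read (scan) queues."""
    if read_ratio <= 0:
        # No split: every queue takes both reads and writes.
        return {"shared": total_queues, "write": 0, "short_read": 0, "long_read": 0}
    # Round to the nearest queue, but always leave at least one write queue.
    read_queues = min(total_queues - 1, math.floor(total_queues * read_ratio + 0.5))
    write_queues = total_queues - read_queues
    long_read = math.floor(read_queues * scan_ratio)
    if long_read >= read_queues:
        # A scan ratio of 1 behaves like 0: gets and scans share the read queues.
        long_read = 0
    return {
        "shared": 0,
        "write": write_queues,
        "short_read": read_queues - long_read,
        "long_read": long_read,
    }


print(split_call_queues(10, 0.3))
print(split_call_queues(10, 0.8, 0.3))
```

When `long_read` is 0, the `short_read` count is the number of read queues shared by gets and scans.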

hbase.regionserver.msginterval

3000

Interval between messages from the RegionServer to Master

in milliseconds.

hbase.regionserver.logroll.period

3600000

Period at which we will roll the commit log regardless

of how many edits it has.

hbase.regionserver.logroll.errors.tolerated

2

The number of consecutive WAL close errors we will allow

before triggering a server abort. A setting of 0 will cause the

region server to abort if closing the current WAL writer fails during

log rolling. Even a small value (2 or 3) will allow a region server

to ride over transient HDFS errors.

hbase.regionserver.hlog.reader.impl

org.apache.hadoop.hbase.regionserver.wal.ProtobufLogReader

The WAL file reader implementation.

hbase.regionserver.hlog.writer.impl

org.apache.hadoop.hbase.regionserver.wal.ProtobufLogWriter

The WAL file writer implementation.

hbase.regionserver.global.memstore.size

Maximum size of all memstores in a region server before

new

updates are blocked and flushes are forced. Defaults to 40% of heap (0.4).

Updates are blocked and flushes are forced until size of all

memstores

in a region server hits

hbase.regionserver.global.memstore.size.lower.limit.

The default value in this configuration has been intentionally left

empty in order to

honor the old hbase.regionserver.global.memstore.upperLimit property if

present.

hbase.regionserver.global.memstore.size.lower.limit

Maximum size of all memstores in a region server before

flushes are forced.

Defaults to 95% of hbase.regionserver.global.memstore.size (0.95).

A 100% value for this value causes the minimum possible flushing to

occur when updates are

blocked due to memstore limiting.

The default value in this configuration has been intentionally left

empty in order to

honor the old hbase.regionserver.global.memstore.lowerLimit property if

present.

hbase.regionserver.optionalcacheflushinterval

3600000

Maximum amount of time an edit lives in memory before being automatically

flushed.

Default 1 hour. Set it to 0 to disable automatic flushing.

hbase.regionserver.catalog.timeout

600000

Timeout value for the Catalog Janitor from the

regionserver to META.

hbase.regionserver.dns.interface

default

The name of the Network Interface from which a region

server

should report its IP address.

hbase.regionserver.dns.nameserver

default

The host name or IP address of the name server (DNS)

which a region server should use to determine the host name used by

the

master for communication and display purposes.

hbase.regionserver.region.split.policy

org.apache.hadoop.hbase.regionserver.IncreasingToUpperBoundRegionSplitPolicy

A split policy determines when a region should be split. The various

other split policies that

are available currently are ConstantSizeRegionSplitPolicy,

DisabledRegionSplitPolicy,

DelimitedKeyPrefixRegionSplitPolicy, KeyPrefixRegionSplitPolicy etc.

hbase.regionserver.regionSplitLimit

1000

Limit for the number of regions after which no more region splitting

should take place.

This is not a hard limit for the number of regions but acts as a guideline

for the regionserver

to stop splitting after a certain limit. Default is set to 1000.

zookeeper.session.timeout

90000

ZooKeeper session timeout in milliseconds. It is used in

two different ways.

First, this value is used in the ZK client that HBase uses to connect to

the ensemble.

It is also used by HBase when it starts a ZK server and it is passed as

the 'maxSessionTimeout'. See

http://hadoop.apache.org/zookeeper/docs/current/zookeeperProgrammers.html#ch_zkSessions.

For example, if a HBase region server connects to a ZK ensemble

that's also managed by HBase, then the

session timeout will be the one specified by this configuration. But, a

region server that connects

to an ensemble managed with a different configuration will be subjected

to that ensemble's maxSessionTimeout. So,

even though HBase might propose using 90 seconds, the ensemble can have a

max timeout lower than this and

it will take precedence. The current default that ZK ships with is 40

seconds, which is lower than HBase's.

zookeeper.znode.parent

/hbase

Root ZNode for HBase in ZooKeeper. All of HBase's

ZooKeeper

files that are configured with a relative path will go under this node.

By default, all of HBase's ZooKeeper file paths are configured with a

relative path, so they will all go under this directory unless

changed.

zookeeper.znode.rootserver

root-region-server

Path to ZNode holding root region location. This is

written by

the master and read by clients and region servers. If a relative path is

given, the parent folder will be ${zookeeper.znode.parent}. By

default,

this means the root location is stored at /hbase/root-region-server.

zookeeper.znode.acl.parent

acl

Root ZNode for access control lists.

hbase.zookeeper.dns.interface

default

The name of the Network Interface from which a ZooKeeper

server

should report its IP address.

hbase.zookeeper.dns.nameserver

default

The host name or IP address of the name server (DNS)

which a ZooKeeper server should use to determine the host name used

by the

master for communication and display purposes.

hbase.zookeeper.peerport

2888

Port used by ZooKeeper peers to talk to each other.

See

http://hadoop.apache.org/zookeeper/docs/r3.1.1/zookeeperStarted.html#sc_RunningReplicatedZooKeeper

for more information.

hbase.zookeeper.leaderport

3888

Port used by ZooKeeper for leader election.

See

http://hadoop.apache.org/zookeeper/docs/r3.1.1/zookeeperStarted.html#sc_RunningReplicatedZooKeeper

for more information.

hbase.zookeeper.useMulti

true

Instructs HBase to make use of ZooKeeper's multi-update

functionality.

This allows certain ZooKeeper operations to complete more quickly and

prevents some issues

with rare Replication failure scenarios (see the release note of

HBASE-2611 for an example).

IMPORTANT: only set this to true if all ZooKeeper servers in the cluster are on

version 3.4+

and will not be downgraded. ZooKeeper versions before 3.4 do not support

multi-update and

will not fail gracefully if multi-update is invoked (see ZOOKEEPER-1495).

hbase.config.read.zookeeper.config

false

Set to true to allow HBaseConfiguration to read the

zoo.cfg file for ZooKeeper properties. Switching this to true

is not recommended, since the functionality of reading ZK

properties from a zoo.cfg file has been deprecated.

hbase.zookeeper.property.initLimit

10

Property from ZooKeeper's config zoo.cfg.

The number of ticks that the initial synchronization phase can take.

hbase.zookeeper.property.syncLimit

5

Property from ZooKeeper's config zoo.cfg.

The number of ticks that can pass between sending a request and getting

an

acknowledgment.

hbase.zookeeper.property.dataDir

${hbase.tmp.dir}/zookeeper

Property from ZooKeeper's config zoo.cfg.

The directory where the snapshot is stored.

hbase.zookeeper.property.clientPort

2181

Property from ZooKeeper's config zoo.cfg.

The port at which the clients will connect.

hbase.zookeeper.property.maxClientCnxns

300

Property from ZooKeeper's config zoo.cfg.

Limit on number of concurrent connections (at the socket level) that a

single client, identified by IP address, may make to a single member

of

the ZooKeeper ensemble. Set high to avoid zk connection issues running

standalone and pseudo-distributed.

hbase.client.write.buffer

2097152

Default size of the HTable client write buffer in bytes.

A bigger buffer takes more memory -- on both the client and server

side since server instantiates the passed write buffer to process

it -- but a larger buffer size reduces the number of RPCs made.

For an estimate of server-side memory-used, evaluate

hbase.client.write.buffer * hbase.regionserver.handler.count
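With the defaults listed in this file, the estimate above works out as follows. The function name is hypothetical; the two constants are the default values of hbase.client.write.buffer and hbase.regionserver.handler.count shown in this listing.

```python
WRITE_BUFFER_BYTES = 2097152   # hbase.client.write.buffer default (2 MB)
HANDLER_COUNT = 30             # hbase.regionserver.handler.count default


def server_side_buffer_bytes(write_buffer: int, handlers: int) -> int:
    """Estimated memory held by write buffers when every handler is busy."""
    return write_buffer * handlers


estimate = server_side_buffer_bytes(WRITE_BUFFER_BYTES, HANDLER_COUNT)
print(estimate, "bytes =", estimate // (1024 * 1024), "MB")
```

Raising either setting scales the estimate linearly, which is worth checking against the RegionServer heap before tuning.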

hbase.client.pause

100

General client pause value. Used mostly as value to wait

before running a retry of a failed get, region lookup, etc.

See hbase.client.retries.number for description of how we backoff from

this initial pause amount and how this pause works w/ retries.

hbase.client.retries.number

35

Maximum retries. Used as maximum for all retryable

operations such as the getting of a cell's value, starting a row

update,

etc. Retry interval is a rough function based on hbase.client.pause. At

first we retry at this interval but then with backoff, we pretty

quickly reach

retrying every ten seconds. See HConstants#RETRY_BACKOFF for how the backoff

ramps up. Change this setting and hbase.client.pause to suit your

workload.

hbase.client.max.total.tasks

100

The maximum number of concurrent tasks a single HTable

instance will

send to the cluster.

hbase.client.max.perserver.tasks

5

The maximum number of concurrent tasks a single HTable

instance will

send to a single region server.

hbase.client.max.perregion.tasks

1

The maximum number of concurrent connections the client

will

maintain to a single Region. That is, if there is already

hbase.client.max.perregion.tasks writes in progress for this region,

new puts

won't be sent to this region until some writes finish.

hbase.client.scanner.caching

2147483647

Number of rows that we try to fetch when calling next

on a scanner if it is not served from (local, client) memory. This

configuration

works together with hbase.client.scanner.max.result.size to try and use

the

network efficiently. The default value is Integer.MAX_VALUE so

that

the network will fill the chunk size defined by

hbase.client.scanner.max.result.size

rather than be limited by a particular number of rows since the size of

rows varies

table to table. If you know ahead of time that you will not require more

than a certain

number of rows from a scan, this configuration should be set to that row

limit via

Scan#setCaching. Higher caching values will enable faster scanners but will eat up

more

memory and some calls of next may take longer and longer times when the

cache is empty.

Do not set this value such that the time between invocations is greater

than the scanner

timeout; i.e. hbase.client.scanner.timeout.period

hbase.client.keyvalue.maxsize

10485760

Specifies the combined maximum allowed size of a KeyValue

instance. This is to set an upper boundary for a single entry saved

in a

storage file. Since they cannot be split it helps avoiding that a region

cannot be split any further because the data is too large. It seems

wise

to set this to a fraction of the maximum region size. Setting it to

zero

or less disables the check.

hbase.client.scanner.timeout.period

60000

Client scanner lease period in milliseconds.

hbase.client.localityCheck.threadPoolSize

2

hbase.bulkload.retries.number

10

Maximum retries. This is the maximum number of times

an atomic bulk load is attempted in the face of splitting operations.

0 means never give up.

hbase.balancer.period

300000

Period at which the region balancer runs in the Master.

hbase.normalizer.period

1800000

Period at which the region normalizer runs in the Master.

hbase.regions.slop

0.2

Rebalance if any regionserver has average + (average *

slop) regions.

hbase.server.thread.wakefrequency

10000

Time to sleep in between searches for work (in

milliseconds).

Used as sleep interval by service threads such as log roller.

hbase.server.versionfile.writeattempts

3

How many times to retry attempting to write a version file

before just aborting. Each attempt is separated by the

hbase.server.thread.wakefrequency milliseconds.

hbase.hregion.memstore.flush.size

134217728

Memstore will be flushed to disk if size of the memstore

exceeds this number of bytes. Value is checked by a thread that runs

every hbase.server.thread.wakefrequency.

hbase.hregion.percolumnfamilyflush.size.lower.bound

16777216

If FlushLargeStoresPolicy is used, then every time that we hit the

total memstore limit, we find out all the column families whose

memstores

exceed this value, and only flush them, while retaining the others whose

memstores are lower than this limit. If none of the families have

their

memstore size more than this, all the memstores will be flushed

(just as usual). This value should be less than half of the total memstore

threshold (hbase.hregion.memstore.flush.size).

hbase.hregion.preclose.flush.size

5242880

If the memstores in a region are this size or larger when we go

to close, run a "pre-flush" to clear out memstores before we put up

the region closed flag and take the region offline. On close,

a flush is run under the close flag to empty memory. During

this time the region is offline and we are not taking on any writes.

If the memstore content is large, this flush could take a long time to

complete. The preflush is meant to clean out the bulk of the memstore

before putting up the close flag and taking the region offline so the

flush that runs under the close flag has little to do.

hbase.hregion.memstore.block.multiplier

4

Block updates if memstore has hbase.hregion.memstore.block.multiplier

times hbase.hregion.memstore.flush.size bytes. Useful for preventing

runaway memstore during spikes in update traffic. Without an

upper-bound, memstore fills such that when it flushes the

resultant flush files take a long time to compact or split, or

worse, we OOME.

hbase.hregion.memstore.mslab.enabled

true

Enables the MemStore-Local Allocation Buffer,

a feature which works to prevent heap fragmentation under

heavy write loads. This can reduce the frequency of stop-the-world

GC pauses on large heaps.

hbase.hregion.max.filesize

10737418240

Maximum HStoreFile size. If any one of a column families' HStoreFiles has

grown to exceed this value, the hosting HRegion is split in two.

hbase.hregion.majorcompaction

604800000

The time (in milliseconds) between 'major' compactions of

all

HStoreFiles in a region. Default: Set to 7 days. Major compactions tend to

happen exactly when you need them least so enable them such that they

run at

off-peak for your deploy; or, since this setting is on a periodicity that is

unlikely to match your loading, run the compactions via an external

invocation out of a cron job or some such.

hbase.hregion.majorcompaction.jitter

0.50

Jitter outer bound for major compactions.

On each regionserver, we multiply the hbase.hregion.majorcompaction

interval by some random fraction that is inside the bounds of this

maximum. We then add this + or - product to when the next

major compaction is to run. The idea is that major compaction

does not happen on every regionserver at exactly the same time. The

smaller this number, the closer the compactions come together.
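
To make the jitter arithmetic concrete, here is a minimal sketch using the two defaults listed above. It follows the description only; the function names are hypothetical and the actual HBase code may pick the random fraction differently.

```python
import random

MAJOR_COMPACTION_MS = 604800000  # hbase.hregion.majorcompaction (7 days)
JITTER = 0.50                    # hbase.hregion.majorcompaction.jitter


def jitter_bounds(interval_ms: int, jitter: float) -> tuple:
    """Earliest and latest interval allowed by the jitter outer bound."""
    delta = int(interval_ms * jitter)
    return interval_ms - delta, interval_ms + delta


def next_interval(interval_ms: int, jitter: float, rng: random.Random) -> int:
    """Draw one jittered interval: base interval plus or minus a random fraction of it."""
    fraction = rng.uniform(-jitter, jitter)
    return int(interval_ms + interval_ms * fraction)


low, high = jitter_bounds(MAJOR_COMPACTION_MS, JITTER)
print(low / 86400000, "to", high / 86400000, "days")
print(next_interval(MAJOR_COMPACTION_MS, JITTER, random.Random()))
```

With the defaults, each regionserver ends up with a major compaction interval somewhere between 3.5 and 10.5 days.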

hbase.hstore.compactionThreshold

3

If more than this number of HStoreFiles exist in any one HStore

(one HStoreFile is written per flush of memstore) then a compaction

is run to rewrite all HStoreFiles as one. Larger numbers

put off compaction but when it runs, it takes longer to complete.

hbase.hstore.flusher.count

2

The number of flush threads. With fewer threads, the memstore flushes

will be queued. With

more threads, the flush will be executed in parallel, increasing the hdfs

load. This can

also lead to more compactions.

hbase.hstore.blockingStoreFiles

10

If more than this number of StoreFiles exist in any one Store

(one StoreFile is written per flush of MemStore) then updates are

blocked for this HRegion until a compaction is completed, or

until hbase.hstore.blockingWaitTime has been exceeded.

hbase.hstore.blockingWaitTime

90000

The time an HRegion will block updates for after hitting the StoreFile

limit defined by hbase.hstore.blockingStoreFiles.

After this time has elapsed, the HRegion will stop blocking updates even

if a compaction has not been completed.
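
The interaction between hbase.hstore.blockingStoreFiles and hbase.hstore.blockingWaitTime can be sketched as a small decision function. This is an illustration of the two descriptions above, not HBase code; the function and parameter names are hypothetical.

```python
def updates_blocked(store_file_count: int, blocked_for_ms: int,
                    blocking_store_files: int = 10,
                    blocking_wait_ms: int = 90000) -> bool:
    """Return True while updates to the region would still be held back."""
    if store_file_count <= blocking_store_files:
        # At or under the StoreFile limit: updates are not blocked.
        return False
    # Over the limit: block until the wait time has elapsed,
    # even if no compaction has completed by then.
    return blocked_for_ms < blocking_wait_ms


print(updates_blocked(11, 1000))   # over the limit, still inside the wait window
print(updates_blocked(11, 90000))  # over the limit, wait time exhausted
```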

hbase.hstore.compaction.max

10

Max number of HStoreFiles to compact per 'minor'

compaction.

hbase.hstore.compaction.kv.max

10

How many KeyValues to read and then write in a batch when

flushing

or compacting. Use a smaller value if KeyValues are big and OOME is a problem.

Use a larger value if rows are wide and small.

hbase.hstore.time.to.purge.deletes

0

The amount of time to delay purging of delete markers

with future timestamps. If

unset, or set to 0, all delete markers, including those with future

timestamps, are purged

during the next major compaction. Otherwise, a delete marker is kept until

the major compaction

which occurs after the marker's timestamp plus the value of this setting,

in milliseconds.
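
The purge rule for delete markers can be written as a single comparison. The sketch below follows the description above; the function and argument names are hypothetical.

```python
def purged_at_major_compaction(marker_ts_ms: int, compaction_ts_ms: int,
                               time_to_purge_ms: int = 0) -> bool:
    """Decide whether a delete marker is dropped by a given major compaction."""
    if time_to_purge_ms == 0:
        # Unset or 0: every delete marker is purged, even one with a future timestamp.
        return True
    # Otherwise the marker survives until a major compaction that runs
    # after marker timestamp + hbase.hstore.time.to.purge.deletes.
    return compaction_ts_ms > marker_ts_ms + time_to_purge_ms


# A marker stamped 1 hour in the future, with a 2 hour purge delay configured.
now = 1_700_000_000_000
print(purged_at_major_compaction(now + 3_600_000, now, 7_200_000))
```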

hbase.storescanner.parallel.seek.enable

false

Enables StoreFileScanner parallel-seeking in StoreScanner,

a feature which can reduce response latency under special conditions.

hbase.storescanner.parallel.seek.threads

10

The default thread pool size if parallel-seeking feature enabled.

hfile.block.cache.size

0.4

Percentage of maximum heap (-Xmx setting) to allocate to

block cache

used by HFile/StoreFile. Default of 0.4 means allocate 40%.

Set to 0 to disable but it's not recommended; you need at least

enough cache to hold the storefile indices.

hfile.block.index.cacheonwrite

false

This allows putting non-root multi-level index blocks into

the block

cache at the time the index is being written.

hfile.index.block.max.size

131072

When the size of a leaf-level, intermediate-level, or

root-level

index block in a multi-level block index grows to this size, the

block is written out and a new block is started.

hbase.bucketcache.ioengine

Where to store the contents of the bucketcache. One of:

heap,

offheap, or file. If a file, set it to file:PATH_TO_FILE. See

http://hbase.apache.org/book.html#offheap.blockcache for more

information.

hbase.bucketcache.combinedcache.enabled

true

Whether or not the bucketcache is used in league with the

LRU

on-heap block cache. In this mode, indices and blooms are kept in the LRU

blockcache and the data blocks are kept in the bucketcache.

hbase.bucketcache.size

A float that EITHER represents a percentage of total heap

memory

size to give to the cache (if < 1.0) OR, it is the total capacity in

megabytes of BucketCache. Default: 0.0
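
Because hbase.bucketcache.size has two meanings depending on its magnitude, a short sketch may help. It implements only the rule stated above; the function name and the 8 GB heap are hypothetical.

```python
def bucket_cache_bytes(size_setting: float, max_heap_bytes: int) -> int:
    """Translate hbase.bucketcache.size into a byte count."""
    if size_setting < 1.0:
        # Below 1.0 the value is a fraction of the total heap.
        return int(max_heap_bytes * size_setting)
    # Otherwise it is the total capacity in megabytes.
    return int(size_setting * 1024 * 1024)


heap = 8 * 1024 ** 3  # hypothetical 8 GB heap
print(bucket_cache_bytes(0.25, heap))  # fraction of heap
print(bucket_cache_bytes(4096, heap))  # capacity given in MB
```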

hbase.bucketcache.sizes

A comma-separated list of sizes for buckets for the

bucketcache.

Can be multiple sizes. List block sizes in order from smallest to

largest.

The sizes you use will depend on your data access patterns.

Must be a multiple of 1024 else you will run into

'java.io.IOException: Invalid HFile block magic' when you go to read from cache.

If you specify no values here, then you pick up the default bucketsizes

set

in code (See BucketAllocator#DEFAULT_BUCKET_SIZES).
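
Before putting a value into hbase.bucketcache.sizes, the two constraints above (multiples of 1024, smallest to largest) can be checked with a few lines. This is a hypothetical pre-flight check, not part of HBase, and the sample sizes are made up for illustration.

```python
def validate_bucket_sizes(setting: str) -> list:
    """Parse a comma-separated size list and enforce the documented constraints."""
    sizes = [int(part) for part in setting.split(",") if part.strip()]
    for size in sizes:
        if size % 1024 != 0:
            raise ValueError(f"{size} is not a multiple of 1024")
    if sizes != sorted(sizes):
        raise ValueError("sizes must be listed from smallest to largest")
    return sizes


print(validate_bucket_sizes("5120,9216,17408"))
```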

hfile.format.version

3

The HFile format version to use for new files.

Version 3 adds support for tags in hfiles (See

http://hbase.apache.org/book.html#hbase.tags).

Distributed Log Replay requires that tags are enabled. Also see the

configuration

'hbase.replication.rpc.codec'.

hfile.block.bloom.cacheonwrite

false

Enables cache-on-write for inline blocks of a compound

Bloom filter.

io.storefile.bloom.block.size

131072

The size in bytes of a single block ("chunk") of a

compound Bloom

filter. This size is approximate, because Bloom blocks can only be

inserted at data block boundaries, and the number of keys per data

block varies.

hbase.rs.cacheblocksonwrite

false

Whether an HFile block should be added to the block cache

when the

block is finished.

hbase.rpc.timeout

60000

Defines how long (in milliseconds) the RPC layer lets HBase client applications

wait for a remote call before it times out. It uses pings to check connections

but will eventually throw a TimeoutException.

hbase.client.operation.timeout

1200000

Operation timeout is a top-level restriction

(millisecond) that makes sure a

blocking operation in Table will not be blocked more than this. In each

operation, if rpc

request fails because of timeout or other reason, it will retry until

success or throw

RetriesExhaustedException. But if the total blocking time reaches the operation timeout

before retries exhausted, it will break early and throw

SocketTimeoutException.

hbase.cells.scanned.per.heartbeat.check

10000

The number of cells scanned in between heartbeat checks.

Heartbeat

checks occur during the processing of scans to determine whether or not the

server should stop scanning in order to send back a heartbeat message

to the

client. Heartbeat messages are used to keep the client-server connection

alive

during long running scans. Small values mean that the heartbeat checks will

occur more often and thus will provide a tighter bound on the

execution time of

the scan. Larger values mean that the heartbeat checks occur less

frequently.

hbase.rpc.shortoperation.timeout

10000

This is another version of "hbase.rpc.timeout". For RPC operations

within the cluster, this setting applies a shorter timeout to short operations.

For example, a short RPC timeout when a region server tries to report to the

active master can speed up the master failover process.

hbase.ipc.client.tcpnodelay

true

Set no delay on rpc socket connections. See

http://docs.oracle.com/javase/1.5.0/docs/api/java/net/Socket.html#getTcpNoDelay()

hbase.regionserver.hostname

This config is for experts: don't set its value unless

you really know what you are doing.

When set to a non-empty value, this represents the (external facing)

hostname for the underlying server.

See https://issues.apache.org/jira/browse/HBASE-12954 for details.

hbase.master.keytab.file

Full path to the kerberos keytab file to use for logging

in

the configured HMaster server principal.

hbase.master.kerberos.principal

Ex. "hbase/_HOST@EXAMPLE.COM". The kerberos principal

name

that should be used to run the HMaster process. The principal name should

be in the form: user/hostname@DOMAIN. If "_HOST" is used as the

hostname

portion, it will be replaced with the actual hostname of the running

instance.
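
The "_HOST" substitution described above can be illustrated with a small sketch. It only mirrors the description (replace the hostname portion when it is exactly "_HOST"); the function name and hostnames are hypothetical, and the real Hadoop/HBase logic may do more, such as normalizing the hostname.

```python
def resolve_principal(principal: str, hostname: str) -> str:
    """Replace a "_HOST" hostname portion in user/hostname@DOMAIN with the given host."""
    user_host, at, realm = principal.partition("@")
    user, slash, host = user_host.partition("/")
    if host == "_HOST":
        host = hostname
    return f"{user}{slash}{host}{at}{realm}"


# "rs1.example.com" is a made-up hostname for illustration.
print(resolve_principal("hbase/_HOST@EXAMPLE.COM", "rs1.example.com"))
```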

hbase.regionserver.keytab.file

Full path to the kerberos keytab file to use for logging

in

the configured HRegionServer server principal.

hbase.regionserver.kerberos.principal

Ex. "hbase/_HOST@EXAMPLE.COM". The kerberos principal

name

that should be used to run the HRegionServer process. The principal name

should be in the form: user/hostname@DOMAIN. If "_HOST" is used as

the

hostname portion, it will be replaced with the actual hostname of the

running instance. An entry for this principal must exist in the file

specified in hbase.regionserver.keytab.file

hadoop.policy.file

hbase-policy.xml

The policy configuration file used by RPC servers to make

authorization decisions on client requests. Only used when HBase

security is enabled.

hbase.superuser

List of users or groups (comma-separated), who are

allowed

full privileges, regardless of stored ACLs, across the cluster.

Only used when HBase security is enabled.

hbase.auth.key.update.interval

86400000

The update interval for master key for authentication

tokens

in servers in milliseconds. Only used when HBase security is enabled.

hbase.auth.token.max.lifetime

604800000

The maximum lifetime in milliseconds after which an

authentication token expires. Only used when HBase security is

enabled.

hbase.ipc.client.fallback-to-simple-auth-allowed

false

When a client is configured to attempt a secure

connection, but attempts to

connect to an insecure server, that server may instruct the client to

switch to SASL SIMPLE (unsecure) authentication. This setting controls

whether or not the client will accept this instruction from the

server.

When false (the default), the client will not allow the fallback to

SIMPLE

authentication, and will abort the connection.

hbase.ipc.server.fallback-to-simple-auth-allowed

false

When a server is configured to require secure

connections, it will

reject connection attempts from clients using SASL SIMPLE (unsecure)

authentication.

This setting allows secure servers to accept SASL SIMPLE connections from

clients

when the client requests. When false (the default), the server will not

allow the fallback

to SIMPLE authentication, and will reject the connection. WARNING: This

setting should ONLY

be used as a temporary measure while converting clients over to secure

authentication. It

MUST BE DISABLED for secure operation.

hbase.coprocessor.enabled

true

Enables or disables coprocessor loading. If 'false'

(disabled), any other coprocessor related configuration will be

ignored.

hbase.coprocessor.user.enabled

true

Enables or disables user (aka. table) coprocessor

loading.

If 'false' (disabled), any table coprocessor attributes in table

descriptors will be ignored. If "hbase.coprocessor.enabled" is

'false'

this setting has no effect.

hbase.coprocessor.region.classes

A comma-separated list of Coprocessors that are loaded by

default on all tables. For any override coprocessor method, these

classes

will be called in order. After implementing your own Coprocessor, just

put

it in HBase's classpath and add the fully qualified class name here.

A coprocessor can also be loaded on demand by setting

HTableDescriptor.

hbase.rest.port

8080

The port for the HBase REST server.

hbase.rest.readonly

false

Defines the mode the REST server will be started in.

Possible values are:

false: All HTTP methods are permitted - GET/PUT/POST/DELETE.

true: Only the GET method is permitted.

hbase.rest.threads.max

100

The maximum number of threads of the REST server thread

pool.

Threads in the pool are reused to process REST requests. This

controls the maximum number of requests processed concurrently.

It may help to control the memory used by the REST server to

avoid OOM issues. If the thread pool is full, incoming requests

will be queued up and wait for some free threads.

hbase.rest.threads.min

2

The minimum number of threads of the REST server thread

pool.

The thread pool always has at least this number of threads so

the REST server is ready to serve incoming requests.

hbase.rest.support.proxyuser

false

Enables running the REST server to support proxy-user

mode.

hbase.defaults.for.version

1.2.3

This defaults file was compiled for version

${project.version}. This variable is used

to make sure that a user doesn't have an old version of

hbase-default.xml on the

classpath.

hbase.defaults.for.version.skip

false

Set to true to skip the 'hbase.defaults.for.version'

check.

Setting this to true can be useful in contexts other than

the other side of a maven generation; i.e. running in an

ide. You'll want to set this boolean to true to avoid

seeing the RuntimeException complaint: "hbase-default.xml file

seems to be for an old version of HBase (\${hbase.version}), this

version is X.X.X-SNAPSHOT"

hbase.coprocessor.master.classes

A comma-separated list of

org.apache.hadoop.hbase.coprocessor.MasterObserver coprocessors that

are

loaded by default on the active HMaster process. For any implemented

coprocessor methods, the listed classes will be called in order.

After

implementing your own MasterObserver, just put it in HBase's classpath

and add the fully qualified class name here.

hbase.coprocessor.abortonerror

true

Set to true to cause the hosting server (master or

regionserver)

to abort if a coprocessor fails to load, fails to initialize, or throws

an

unexpected Throwable object. Setting this to false will allow the server to

continue execution but the system wide state of the coprocessor in

question

will become inconsistent as it will be properly executing in only a

subset

of servers, so this is most useful for debugging only.

hbase.online.schema.update.enable

true

Set true to enable online schema changes.

hbase.table.lock.enable

true

Set to true to enable locking the table in zookeeper for

schema change operations.

Table locking from master prevents concurrent schema modifications from

corrupting table

state.

hbase.table.max.rowsize

1073741824

Maximum size of a single row in bytes (default is 1 GB) for Get'ting

or Scan'ning without in-row scan flag set. If row size exceeds this

limit

RowTooBigException is thrown to client.

hbase.thrift.minWorkerThreads

16

The "core size" of the thread pool. New threads are

created on every

connection until this many threads are created.

hbase.thrift.maxWorkerThreads

1000

The maximum size of the thread pool. When the pending

request queue

overflows, new threads are created until their number reaches this number.

After that, the server starts dropping connections.

hbase.thrift.maxQueuedRequests

1000

The maximum number of pending Thrift connections waiting

in the queue. If

there are no idle threads in the pool, the server queues requests. Only

when the queue overflows, new threads are added, up to

hbase.thrift.maxWorkerThreads threads.

hbase.thrift.htablepool.size.max

1000

The upper bound for the table pool used in the Thrift

gateways server.

Since this is per table name, we assume a single table and so with 1000

default

worker threads max this is set to a matching number. For other workloads

this number

can be adjusted as needed.

hbase.regionserver.thrift.framed

false

Use Thrift TFramedTransport on the server side.

This is the recommended transport for thrift servers and requires a

similar setting

on the client side. Changing this to false will select the default

transport,

vulnerable to DoS when malformed requests are issued due to THRIFT-601.

hbase.regionserver.thrift.framed.max_frame_size_in_mb

2

Default frame size when using framed transport

hbase.regionserver.thrift.compact

false

Use Thrift TCompactProtocol binary serialization

protocol.

hbase.rootdir.perms

700

FS Permissions for the root directory in a

secure(kerberos) setup.

When master starts, it creates the rootdir with these permissions or sets

the permissions

if they do not match.

hbase.data.umask.enable

false

If true, file permissions are assigned

to the files written by the regionserver.

hbase.data.umask

000

File permissions that should be used to write data

files when hbase.data.umask.enable is true

hbase.metrics.showTableName

true

Whether to include the prefix "tbl.tablename" in

per-column family metrics.

If true, for each metric M, per-cf metrics will be reported for

tbl.T.cf.CF.M, if false,

per-cf metrics will be aggregated by column-family across tables, and

reported for cf.CF.M.

In both cases, the aggregated metric M across tables and cfs will be

reported.
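
The two naming schemes for per-column-family metrics can be sketched as follows. The function name and the sample table, family, and metric names are hypothetical; only the `tbl.T.cf.CF.M` and `cf.CF.M` patterns come from the description above.

```python
def per_cf_metric_name(metric: str, cf: str, table: str,
                       show_table_name: bool = True) -> str:
    """Build the per-CF metric name for either value of hbase.metrics.showTableName."""
    if show_table_name:
        return f"tbl.{table}.cf.{cf}.{metric}"
    # Without the table prefix, metrics are aggregated by column family across tables.
    return f"cf.{cf}.{metric}"


print(per_cf_metric_name("getTime", "info", "student", True))
print(per_cf_metric_name("getTime", "info", "student", False))
```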

hbase.metrics.exposeOperationTimes

true

Whether to report metrics about time taken performing an

operation on the region server. Get, Put, Delete, Increment, and

Append can all

have their times exposed through Hadoop metrics per CF and per region.

hbase.snapshot.enabled

true

Set to true to allow snapshots to be taken / restored /

cloned.

hbase.snapshot.restore.take.failsafe.snapshot

true

Set to true to take a snapshot before the restore

operation.

The snapshot taken will be used in case of failure, to restore the

previous state.

At the end of the restore operation this snapshot will be deleted

hbase.snapshot.restore.failsafe.name

hbase-failsafe-{snapshot.name}-{restore.timestamp}

Name of the failsafe snapshot taken by the restore

operation.

You can use the {snapshot.name}, {table.name} and {restore.timestamp}

variables

to create a name based on what you are restoring.
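
To show how the template variables expand, here is a minimal sketch that substitutes the three placeholders named above. The function name, snapshot name, table name, and timestamp are all hypothetical.

```python
def failsafe_name(template: str, snapshot_name: str,
                  table_name: str, restore_ts: int) -> str:
    """Expand {snapshot.name}, {table.name} and {restore.timestamp} in the template."""
    return (template
            .replace("{snapshot.name}", snapshot_name)
            .replace("{table.name}", table_name)
            .replace("{restore.timestamp}", str(restore_ts)))


default_template = "hbase-failsafe-{snapshot.name}-{restore.timestamp}"
print(failsafe_name(default_template, "snap1", "student", 1700000000000))
```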

hbase.server.compactchecker.interval.multiplier

1000

The number that determines how often we scan to see if

compaction is necessary.

Normally, compactions are done after some events (such as memstore flush), but

if

region didn't receive a lot of writes for some time, or due to different

compaction

policies, it may be necessary to check it periodically. The interval between

checks is

hbase.server.compactchecker.interval.multiplier multiplied by

hbase.server.thread.wakefrequency.
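
As a worked example of the interval formula above, the sketch below multiplies the two settings. The wake frequency of 10000 ms is an assumed value for hbase.server.thread.wakefrequency, and the function name is hypothetical.

```python
COMPACT_CHECKER_MULTIPLIER = 1000  # hbase.server.compactchecker.interval.multiplier
# Assumed value for hbase.server.thread.wakefrequency; not taken from this section.
WAKE_FREQUENCY_MS = 10000


def compaction_check_interval_ms(multiplier: int, wake_frequency_ms: int) -> int:
    """Time between periodic checks for whether a compaction is necessary."""
    return multiplier * wake_frequency_ms


interval = compaction_check_interval_ms(COMPACT_CHECKER_MULTIPLIER, WAKE_FREQUENCY_MS)
print(interval, "ms =", round(interval / 3600000, 2), "hours")
```

Under these assumptions a quiet region is checked for pending compactions a little less often than once every three hours.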

hbase.lease.recovery.timeout

900000

How long we wait on dfs lease recovery in total before

giving up.

hbase.lease.recovery.dfs.timeout

64000

How long between dfs recover lease invocations. Should be

larger than the sum of

the time it takes for the namenode to issue a block recovery command as

part of the

datanode heartbeat (dfs.heartbeat.interval) and the time it takes for the primary

datanode performing block recovery to time out on a dead datanode

(usually

dfs.client.socket-timeout). See the end of HBASE-8389 for more.

hbase.column.max.version

1

New column family descriptors will use this value as the

default number of versions

to keep.

hbase.dfs.client.read.shortcircuit.buffer.size

131072

If the DFSClient configuration

dfs.client.read.shortcircuit.buffer.size is unset, we will

use what is configured here as the short circuit read default

direct byte buffer size. DFSClient native default is 1MB; HBase

keeps its HDFS files open so number of file blocks * 1MB soon

starts to add up and threaten OOME because of a shortage of

direct memory. So, we set it down from the default. Make

it > the default hbase block size set in the HColumnDescriptor

which is usually 64k.

hbase.regionserver.checksum.verify

true

If set to true (the default), HBase verifies the checksums for hfile

blocks. HBase writes checksums inline with the data when it writes

out

hfiles. HDFS (as of this writing) writes checksums to a separate file

than the data file necessitating extra seeks. Setting this flag saves

some on i/o. Checksum verification by HDFS will be internally

disabled

on hfile streams when this flag is set. If the hbase-checksum

verification

fails, we will switch back to using HDFS checksums (so do not disable HDFS

checksums! And besides this feature applies to hfiles only, not to

WALs).

If this parameter is set to false, then hbase will not verify any

checksums,

instead it will depend on checksum verification being done in the HDFS

client.

hbase.hstore.bytes.per.checksum

16384

Number of bytes in a newly created checksum chunk for HBase-level

checksums in hfile blocks.

hbase.hstore.checksum.algorithm

CRC32C

Name of an algorithm that is used to compute checksums. Possible values

are NULL, CRC32, CRC32C.

hbase.client.scanner.max.result.size

2097152

Maximum number of bytes returned when calling a scanner's

next method.

Note that when a single row is larger than this limit the row is still

returned completely.

The default value is 2MB, which is good for 1GbE networks.

With faster and/or high latency networks this value should be increased.

hbase.server.scanner.max.result.size

104857600

Maximum number of bytes returned when calling a scanner's

next method.

Note that when a single row is larger than this limit the row is still

returned completely.

The default value is 100MB.

This is a safety setting to protect the server from OOM situations.

hbase.status.published

false

This setting activates the publication by the master of the status of the

region server.

When a region server dies and its recovery starts, the master will push

this information

to the client application, to let them cut the connection immediately

instead of waiting

for a timeout.

hbase.status.publisher.class

org.apache.hadoop.hbase.master.ClusterStatusPublisher$MulticastPublisher

Implementation of the status publication with a multicast message.

hbase.status.listener.class

org.apache.hadoop.hbase.client.ClusterStatusListener$MulticastListener

Implementation of the status listener with a multicast message.

hbase.status.multicast.address.ip

226.1.1.3

Multicast address to use for the status publication by multicast.

hbase.status.multicast.address.port

16100

Multicast port to use for the status publication by multicast.

hbase.dynamic.jars.dir

${hbase.rootdir}/lib

The directory from which the custom filter/co-processor jars can be

loaded

dynamically by the region server without the need to restart. However,

an already loaded filter/co-processor class would not be un-loaded. See

HBASE-1936 for more details.

hbase.security.authentication

simple

Controls whether or not secure authentication is enabled for HBase.

Possible values are 'simple' (no authentication), and 'kerberos'.

hbase.rest.filter.classes

org.apache.hadoop.hbase.rest.filter.GzipFilter

Servlet filters for REST service.

hbase.master.loadbalancer.class

org.apache.hadoop.hbase.master.balancer.StochasticLoadBalancer

Class used to execute the regions balancing when the period occurs.

See the class comment for more on how it works

http://hbase.apache.org/devapidocs/org/apache/hadoop/hbase/master/balancer/StochasticLoadBalancer.html

It replaces the DefaultLoadBalancer as the default (since renamed

as the SimpleLoadBalancer).

hbase.security.exec.permission.checks

false

If this setting is enabled and ACL based access control is active (the

AccessController coprocessor is installed either as a system

coprocessor

or on a table as a table coprocessor) then you must grant all relevant

users EXEC privilege if they require the ability to execute

coprocessor

endpoint calls. EXEC privilege, like any other permission, can be

granted globally to a user, or to a user on a per table or per namespace

basis. For more information on coprocessor endpoints, see the

coprocessor

section of the HBase online manual. For more information on granting or

revoking permissions using the AccessController, see the security

section of the HBase online manual.

hbase.procedure.regionserver.classes

A comma-separated list of

org.apache.hadoop.hbase.procedure.RegionServerProcedureManager

procedure managers that are

loaded by default on the active HRegionServer process. The lifecycle

methods (init/start/stop)

will be called by the active HRegionServer process to perform the

specific globally barriered

procedure. After implementing your own RegionServerProcedureManager, just put

it in

HBase's classpath and add the fully qualified class name here.

hbase.procedure.master.classes

A comma-separated list of

org.apache.hadoop.hbase.procedure.MasterProcedureManager procedure

managers that are

loaded by default on the active HMaster process. A procedure is identified

by its signature and

users can use the signature and an instant name to trigger an execution of

a globally barriered

procedure. After implementing your own MasterProcedureManager, just put it in

HBase's classpath

and add the fully qualified class name here.

hbase.coordinated.state.manager.class

org.apache.hadoop.hbase.coordination.ZkCoordinatedStateManager

Fully qualified name of class implementing coordinated

state manager.

hbase.regionserver.storefile.refresh.period

0

The period (in milliseconds) for refreshing the store files for the

secondary regions. 0

means this feature is disabled. Secondary regions see new files (from

flushes and

compactions) from primary once the secondary region refreshes the list of files

in the

region (there is no notification mechanism). But too frequent refreshes

might cause

extra Namenode pressure. If the files cannot be refreshed for longer than

HFile TTL

(hbase.master.hfilecleaner.ttl) the requests are rejected. Configuring HFile TTL to a larger

value is also recommended with this setting.

hbase.region.replica.replication.enabled

false

Whether asynchronous WAL replication to the secondary region replicas is

enabled or not.

If this is enabled, a replication peer named

"region_replica_replication" will be created

which will tail the logs and replicate the mutations to region replicas

for tables that

have region replication > 1. If this is enabled once, disabling this

replication also

requires disabling the replication peer using shell or ReplicationAdmin java

class.

Replication to secondary region replicas works over standard inter-cluster

replication.

So replication, if disabled explicitly, also has to be enabled by

setting "hbase.replication"

to true for this feature to work.

hbase.http.filter.initializers

org.apache.hadoop.hbase.http.lib.StaticUserWebFilter

A comma separated list of class names. Each class in the list must

extend

org.apache.hadoop.hbase.http.FilterInitializer. The corresponding Filter will

be initialized. Then, the Filter will be applied to all user facing jsp

and servlet web pages.

The ordering of the list defines the ordering of the filters.

The default StaticUserWebFilter adds a user principal as defined by the

hbase.http.staticuser.user property.

hbase.security.visibility.mutations.checkauths

false

If enabled, this property checks whether the labels in the visibility

expression are associated

with the user issuing the mutation.

hbase.http.max.threads

10

The maximum number of threads that the HTTP Server will create in its

ThreadPool.

hbase.replication.rpc.codec

org.apache.hadoop.hbase.codec.KeyValueCodecWithTags

The codec that is to be used when replication is enabled so that

the tags are also replicated. This is used along with HFileV3 which

supports tags in them. If tags are not used or if the hfile version

used

is HFileV2 then KeyValueCodec can be used as the replication codec.

Note that

using KeyValueCodecWithTags for replication when there are no tags causes

no harm.

hbase.replication.source.maxthreads

10

The maximum number of threads any replication source will use for

shipping edits to the sinks in parallel. This also limits the number

of

chunks each replication batch is broken into.

Larger values can improve the replication throughput between the master and

slave clusters. The default of 10 will rarely need to be changed.

hbase.http.staticuser.user

dr.stack

The user name to filter as, on static web filters

while rendering content. An example use is the HDFS

web UI (user to be used for browsing files).

hbase.master.normalizer.class

org.apache.hadoop.hbase.master.normalizer.SimpleRegionNormalizer

Class used to execute the region normalization when the period occurs.

See the class comment for more on how it works

http://hbase.apache.org/devapidocs/org/apache/hadoop/hbase/master/normalizer/SimpleRegionNormalizer.html

hbase.regionserver.handler.abort.on.error.percent

0.5

The percentage of region server RPC handler threads that must fail before the

RS aborts.

-1 Disable aborting; 0 Abort if even a single handler has died;

0.x Abort only when this percent of handlers have died;

1 Abort only when all of the handlers have died.
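
The four cases listed above can be folded into one decision function. This is a sketch of the description, not the regionserver's code: the function name is hypothetical, and whether HBase compares with "greater than" or "greater than or equal" at the boundary is an assumption here.

```python
def should_abort(failed_handlers: int, total_handlers: int,
                 abort_percent: float = 0.5) -> bool:
    """Decide whether the region server aborts, given how many RPC handlers have died."""
    if abort_percent < 0:
        return False            # -1: aborting is disabled
    if failed_handlers == 0:
        return False            # nothing has died yet
    if abort_percent == 0:
        return True             # 0: a single dead handler is enough
    # 0.x or 1: abort once the dead fraction reaches the configured percent
    # (assumed to be an inclusive comparison).
    return failed_handlers / total_handlers >= abort_percent


print(should_abort(15, 30))        # half of the handlers have died, default 0.5
print(should_abort(29, 30, 1))     # not all handlers have died yet
```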

hbase.snapshot.master.timeout.millis

300000

Timeout for master for the snapshot procedure execution

hbase.snapshot.region.timeout

300000

Timeout for regionservers to keep threads in snapshot request pool waiting