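- DPDK (25.03) Configuration Notes for Absolute Beginners

_Chipen

DPDK, computer networks

DPDK configuration notes for beginners. DPDK (Data Plane Development Kit) is a high-performance packet-processing library. It bypasses the Linux kernel network stack and works in user space directly on the packets the NIC sends and receives, which allows very high throughput. Its core value is the combination of polling, hugepage memory, and user-space drivers to raise network I/O performance. Typical use cases are systems with extreme network-performance requirements, such as high-frequency trading, software routers, firewalls, and load balancers. Brief explanation of basic terms: igb_…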

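- Python Applications in Manufacturing

Python编程之道

Python编程之道, python, programming languages, ai

Python in Manufacturing: From Automation to Smart Manufacturing. Keywords: Python, manufacturing, industrial automation, data analysis, machine learning, IoT, smart manufacturing. Abstract: This article takes an in-depth look at how widely Python is used in manufacturing. From basic automation scripts to complex smart-manufacturing systems, Python is reshaping modern manufacturing thanks to its rich library ecosystem and ease of use. We analyze Python's core application scenarios in manufacturing, including equipment monitoring, quality control, predictive maintenance, and supply-chain optimization, and through real-world cases…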

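- [SRC Vulnerabilities] Chapter 4: Business Logic (Supplement)

Note: this chapter mainly explains scenario-based approaches. Tip: if you cannot tell which packet to intercept, try the packets one by one. Contents: e-commerce vulnerabilities; payment vulnerabilities. E-commerce vulnerabilities. Coupon concurrency: when claiming a coupon, send the claim requests concurrently. Coupon reuse: place orders concurrently so that a single coupon is used several times. Swapping the coupon ID: a product accepts only a particular coupon, so capture the request and modify it. For example, an item costing 1000 can only use a 20-yuan coupon, but you hold a 50-yuan coupon: change the 20-yuan coupon's ID to the 50-yuan coupon's ID. Time-limited coupons: modify profile information such as the birth date so that the coupon can be used every day. …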
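The coupon-concurrency and coupon-reuse items exploit a check-then-act race on the server. Several concurrent requests all pass the "coupon not yet used" check before any of them records the use. The Java sketch below is a defensive illustration added here, not code from the article, and its class and method names are hypothetical. It contrasts the racy pattern with an atomic compare-and-set, under which exactly one of many simultaneous redemptions can succeed.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.BooleanSupplier;

class CouponRedemption {
    // Vulnerable check-then-act: several concurrent orders can all pass the
    // "not used yet" check before any of them marks the coupon as used.
    static final class RacyCoupon {
        private volatile boolean used = false;

        boolean redeem() {
            if (used) return false;
            used = true; // another thread may already be past the check above
            return true;
        }
    }

    // Fix: a single atomic compare-and-set, so exactly one redemption succeeds.
    static final class AtomicCoupon {
        private final AtomicBoolean used = new AtomicBoolean(false);

        boolean redeem() { return used.compareAndSet(false, true); }
    }

    // Fire `threads` redemption attempts at the same moment and count the successes.
    static int concurrentRedemptions(BooleanSupplier redeem, int threads) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        CountDownLatch start = new CountDownLatch(1);
        AtomicInteger successes = new AtomicInteger();
        for (int i = 0; i < threads; i++) {
            pool.execute(() -> {
                try {
                    start.await(); // hold every worker until all are queued
                    if (redeem.getAsBoolean()) successes.incrementAndGet();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        start.countDown(); // release all workers together
        pool.shutdown();
        pool.awaitTermination(10, TimeUnit.SECONDS);
        return successes.get();
    }

    public static void main(String[] args) throws InterruptedException {
        AtomicCoupon safe = new AtomicCoupon();
        System.out.println(concurrentRedemptions(safe::redeem, 16)); // always 1
        RacyCoupon racy = new RacyCoupon();
        System.out.println(concurrentRedemptions(racy::redeem, 16)); // may be greater than 1
    }
}
```

In a real service the coupon state lives in a database. The equivalent fix there is usually a single conditional update (for example, an `UPDATE` whose `WHERE` clause requires the coupon to be unused) followed by a check of the affected-row count, rather than an in-memory flag.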

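- Python Web Scraping in Practice: Automatically Collecting Academic Conference Data (Schedules, Paper Submissions, etc.)

Python爬虫项目

python, web scraping, automation, smart home, data analysis, programming languages, operations

1. Introduction. Academic conferences are an important venue for researchers to learn about the latest results, publish papers, and exchange ideas. Researchers need to track conference schedules, paper-submission deadlines, agendas, and keynote speakers. However, this information is usually scattered across different official websites, and finding and organizing it by hand is time-consuming and error-prone. To work more efficiently, we can use a Python crawler to collect academic conference data automatically, including: conference name, dates, and location; paper-submission deadlines; agenda and speaker information; paper acceptance results; important notices and related…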

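- A Comprehensive Taxonomy of Modern AI: Architectures, Modalities, and Ecosystems in the Era of Large Models

司南锤

economics, artificial intelligence, classification, data mining

Contents. Introduction: the fourth wave of AI and the need for a new taxonomy. Section 1: The foundational pillars of the large-model paradigm. 1.1 The scaling hypothesis: the trinity of compute, data, and algorithms. 1.2 The puzzle of "emergent abilities": when "more" becomes "different". 1.3 The self-supervised learning (SSL) revolution. Section 2: A technical taxonomy of large models. 2.1 Transformer: the architectural cornerstone of modern AI. 2.2 Architectural divergence: a functional taxonomy. 2.3 Improving efficiency and scale: Mixture of Experts (MoE). 2.4 Beyond Transformer: exploring next-generation architectures…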

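- 28. [.NET8 in Practice--Spore Bookkeeping--From Monolith to Microservices--Moving to Microservices]--Monolith to Microservices--Currency Service (Part 2)

喵叔哟

.NET8, .net, microservices, java

A currency service alone is not enough. The bookkeeping app also has to convert between currencies, and for that it first needs the exchange rates between them. This article therefore explains how to synchronize exchange rates. 1. Where does exchange-rate data come from? Exchange rates change constantly, so the app needs a reliable source of up-to-date rates. Common options include: Third-party data services: some professional financial data providers offer exchange-rate data by subscription. Manual entry: small applications can enter rates by hand, but this does not suit large-scale applications or those that need real-time updates…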

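- Judging Statements About a Heap

秋说

PTA data-structures problem set, algorithms, data structures, C language

This column regularly publishes solutions to data-structure problem sets; subscriptions are welcome. Contents: Problem, Code. Problem: insert a given sequence of numbers, in order, into an initially empty min-heap. Then decide whether each statement in a series of related statements is true. The statements take the following forms: `x is the root`: x is the root node; `x and y are siblings`: x and y are sibling nodes; `x is the parent of y`: x is the parent of y; `x is a child of y`: x is a child of y. Input format: the first line of each test case contains 2 positive integers n (≤1000) and m (≤20…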
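A complete binary heap is stored in an array, so every statement in this problem reduces to index arithmetic. With 1-based indices the root is at 1 and the parent of i is i/2. The Java sketch below is an illustration, not the column's own solution. It assumes the inserted keys are distinct, so that a value identifies a single node, and its method names are hypothetical. The sample sequence in `main` is chosen for illustration.

```java
// Array-based (1-based) min-heap that answers the four kinds of statements.
class HeapStatements {
    private final int[] heap; // heap[1] is the root; children of i are 2i and 2i+1
    private int size = 0;

    HeapStatements(int capacity) { heap = new int[capacity + 1]; }

    // Standard min-heap insertion: start at the end and sift the new value up.
    void insert(int x) {
        int i = ++size;
        while (i > 1 && heap[i / 2] > x) {
            heap[i] = heap[i / 2]; // move the larger parent down one level
            i /= 2;
        }
        heap[i] = x;
    }

    // Array index of value x, or -1 if it is absent (assumes distinct keys).
    private int indexOf(int x) {
        for (int i = 1; i <= size; i++) if (heap[i] == x) return i;
        return -1;
    }

    boolean isRoot(int x) { return size > 0 && heap[1] == x; }

    boolean areSiblings(int x, int y) {
        int i = indexOf(x), j = indexOf(y);
        return i > 1 && j > 1 && i != j && i / 2 == j / 2;
    }

    boolean isParentOf(int x, int y) {
        int i = indexOf(x), j = indexOf(y);
        return i > 0 && j > 1 && j / 2 == i;
    }

    boolean isChildOf(int x, int y) { return isParentOf(y, x); }

    public static void main(String[] args) {
        HeapStatements h = new HeapStatements(5);
        for (int v : new int[]{46, 23, 26, 24, 10}) h.insert(v); // array: 10 23 26 46 24
        System.out.println(h.isRoot(24) ? "T" : "F");          // F
        System.out.println(h.areSiblings(26, 23) ? "T" : "F"); // T
        System.out.println(h.isParentOf(46, 23) ? "T" : "F");  // F
        System.out.println(h.isChildOf(23, 10) ? "T" : "F");   // T
    }
}
```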

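- The Four Pillars of Basic Java Syntax: Variables, Data Types, Operators, and Control Flow (A Must-Read for Beginners)

杨凯凡

java, programming languages

Preface. Just started learning Java and already confused by variables and if-else? Don't worry! This post uses plain language plus code demos to help you fully understand the following. How do you declare a variable? What is the difference between `int a = 10;` and `final double PI = 3.14;`? Why does `10 / 3` give 3 instead of 3.333? Should you use a `for` loop or a `while` loop? After reading this, your code will graduate from Hello World to programs that can calculate, make decisions, and loop! Summary of the core content: ✅ Variables and constants: declaration, scope, the `final` keyword…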
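The `10 / 3` question comes down to operand types. When both operands are `int`, Java performs integer division and discards the fractional part, truncating toward zero. The minimal sketch below shows this together with the `final` distinction; the class and method names are illustrative.

```java
class DivisionDemo {
    // int / int is integer division: the fractional part is discarded (truncated toward zero).
    static int intDivide(int a, int b) { return a / b; }

    // Casting one operand to double turns the whole expression into floating-point division.
    static double realDivide(int a, int b) { return (double) a / b; }

    public static void main(String[] args) {
        int a = 10;             // ordinary variable: may be reassigned later
        a = a + 1;
        final double PI = 3.14; // final: assigning PI again would be a compile error
        System.out.println(a);                 // 11
        System.out.println(intDivide(10, 3));  // 3
        System.out.println(realDivide(10, 3)); // 3.3333333333333335
        System.out.println(-7 / 2);            // -3 (truncation toward zero, not floor)
        System.out.println(PI > 3);            // true
    }
}
```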

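- Introduction to the UDP Protocol

不想写bug呀

javaEE, udp, network protocols, networking

Contents. I. Basic UDP concepts: 1. Definition; 2. Characteristics: (1) connectionless; (2) unreliable delivery; (3) datagram-oriented; (4) full-duplex. II. UDP packet format: 1. UDP datagram structure; 2. Field details: (1) source port; (2) destination port; (3) UDP length; (4) checksum. III. Caveats when using UDP. IV. Application-layer protocols built on UDP. V. Summary. I. Basic UDP concepts. 1. Definition: UDP (User Datagram Protocol) is a protocol in the TCP/IP suite that sits at…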
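Each of the four header fields listed above occupies 16 bits, so a UDP header is always 8 bytes, stored in network (big-endian) byte order. The Java sketch below illustrates this by building and parsing that header with `java.nio.ByteBuffer`. The class name `UdpHeader` is hypothetical, and the checksum is simply left at 0 instead of being computed over the IP pseudo-header.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Builds and parses the fixed 8-byte UDP header: four unsigned 16-bit fields.
class UdpHeader {
    final int srcPort, dstPort, length, checksum;

    UdpHeader(int srcPort, int dstPort, int length, int checksum) {
        this.srcPort = srcPort;
        this.dstPort = dstPort;
        this.length = length;
        this.checksum = checksum;
    }

    static UdpHeader parse(byte[] datagram) {
        if (datagram.length < 8) throw new IllegalArgumentException("UDP header is 8 bytes");
        ByteBuffer buf = ByteBuffer.wrap(datagram); // big-endian by default = network byte order
        return new UdpHeader(
                Short.toUnsignedInt(buf.getShort()),  // source port
                Short.toUnsignedInt(buf.getShort()),  // destination port
                Short.toUnsignedInt(buf.getShort()),  // length: header + data, in bytes
                Short.toUnsignedInt(buf.getShort())); // checksum
    }

    // A checksum of 0 means "not computed", which UDP over IPv4 allows (IPv6 requires one).
    static byte[] build(int srcPort, int dstPort, byte[] payload) {
        int length = 8 + payload.length;
        ByteBuffer buf = ByteBuffer.allocate(length);
        buf.putShort((short) srcPort).putShort((short) dstPort)
           .putShort((short) length).putShort((short) 0)
           .put(payload);
        return buf.array();
    }

    public static void main(String[] args) {
        byte[] datagram = build(53000, 53, "hello".getBytes(StandardCharsets.US_ASCII));
        UdpHeader h = parse(datagram);
        System.out.println(h.srcPort + " -> " + h.dstPort + ", length " + h.length); // 53000 -> 53, length 13
    }
}
```

`Short.toUnsignedInt` matters here: ports above 32767, such as 53000, do not fit in a signed `short` and would otherwise be read back as negative numbers.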

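- Can a Game Shield Protect Your Business from DDoS Attacks?

上海云盾第一敬业销售

gaming, ddos, networking

Cyberattacks are increasingly frequent, and DDoS attacks have become one of the biggest threats that enterprises face. A game shield is an advanced network-security solution widely used to protect online games from attack, but how effective is it at protecting enterprise businesses? This article examines whether a game shield can protect a business from DDoS attacks and analyzes its role and importance in enterprise network security. As attack techniques keep evolving, enterprises must adopt more effective defenses to ensure business continuity and data security. 1. What is a game shield? A game shield was originally designed for online ga…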

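- Java List Collections Explained: From Basics to Practice, a Complete Picture of Java List Operations

大葱白菜

java collection, java, programming languages, backend, learning, personal development

As a Java developer, you have almost certainly used List collections often in your projects. List is one of the most commonly used and most flexible data structures in the Java Collections Framework. Whether the data comes from a database query or is a parameter list sent by the front end, List is the go-to structure for handling it. This article will give you a full grasp of: the core methods and characteristics of the List interface; common implementations (such as ArrayList, LinkedList, Vector, and CopyOnWriteArrayList); List traversal, CRUD operations, sorting, thread…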

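- What Is a Gateway? What Does a Gateway Do?

肉胎凡体

IoT, networking, servers, tcp/ip

A gateway is also called an inter-network connector or a protocol converter. A gateway interconnects networks above the transport layer. It is the most complex network-interconnection device and is used only to connect two networks whose higher-layer protocols differ. Its structure is similar to a router's; the difference lies in the layer at which it interconnects networks. Gateways can interconnect both wide-area and local-area networks. A gateway is a computer system or device that takes on the heavy task of conversion: between two systems that use different communication protocols, data formats, or languages, or even entirely different architectures, the gateway acts as a translator. Unlike a bridge, which simply passes information along, a gateway…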

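- Python Features: Using a Decorator to Handle Long Database Disconnections

超龄超能程序猿

databases, python

Preface. In Python-based web application development, the database connection is a critical piece. However, factors such as network fluctuations and database-server maintenance can cause long database disconnections, which in turn disrupt the application's normal operation. This article explains in detail how to use a `retry_on_failure` decorator to solve the problem of long database disconnections. 1. Background. In real-world development, the connection between an application and its database can be interrupted for various reasons. For example, the system goes unused for a long time, and on the next access the database connection has timed out. When the application attempts a database operation…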
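The article's `retry_on_failure` is a Python decorator. Java has no equivalent language feature, so the sketch below expresses the same idea as a higher-order helper that wraps a `Callable`. The name `retryOnFailure` and its parameters are assumptions made for illustration, and the simulated "connection lost" failure stands in for a real database error. In practice you would catch only connection-related exceptions (for example `java.sql.SQLTransientConnectionException`) and re-open the connection before retrying.

```java
import java.util.concurrent.Callable;

class Retry {
    // Java counterpart of a retry_on_failure decorator: run the action, and if it
    // throws, wait and try again with exponential backoff (base, 2*base, 4*base, ...).
    static <T> T retryOnFailure(Callable<T> action, int maxAttempts, long baseDelayMillis) throws Exception {
        if (maxAttempts < 1) throw new IllegalArgumentException("maxAttempts must be >= 1");
        Exception last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return action.call();
            } catch (Exception e) {
                last = e; // a real app would reconnect here and retry only connection errors
                if (attempt < maxAttempts) Thread.sleep(baseDelayMillis << (attempt - 1));
            }
        }
        throw last; // every attempt failed: surface the most recent error
    }

    public static void main(String[] args) throws Exception {
        int[] calls = {0};
        String result = retryOnFailure(() -> {
            if (++calls[0] < 3) throw new IllegalStateException("connection lost"); // simulated outage
            return "query ok";
        }, 5, 10);
        System.out.println(result + " after " + calls[0] + " attempts"); // query ok after 3 attempts
    }
}
```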

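- A Detailed Introduction to the HTTP Protocol

HTTP (HyperText Transfer Protocol) is the foundational protocol for distributing hypertext over computer networks. It is one of the core protocols of the World Wide Web and enables communication among browsers, servers, and other applications. HTTP is a stateless application-layer protocol. It was originally used to transfer HTML documents, but it can now carry almost any type of data. 1. Basic HTTP concepts. 1.1 Stateless protocol. HTTP is a stateless protocol, which means every request and response is…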

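- Determining Whether Two Trees Are Isomorphic

weixin_33681778

data structures and algorithms

Source: a programming exercise from a university MOOC (Chen Yue, *Data Structures*). 03-Tree 1: Tree Isomorphism (25 points). Two trees T1 and T2 are called "isomorphic" if T1 can be turned into T2 by swapping the left and right children some number of times. For example, the two trees in Figure 1 are isomorphic, because swapping the left and right children of nodes A, B, and G in one tree yields the other. The trees in Figure 2 are not isomorphic. Given two trees, determine whether they are isomorphic. Input format: the input gives the information for 2 binary trees. For each tree, first…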

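- Android NFC Technology Explained, with an IC Card Reading Implementation

Monkey-旭

microsoft, NFC, IC cards, android, java

NFC (Near Field Communication) is a short-range, high-frequency wireless communication technology widely used in mobile payments, identity verification, data transfer, and similar scenarios. On Android devices, NFC enables interaction with IC cards, tags, and other NFC devices, and reading IC cards is one of the most common requirements. This article covers Android NFC and IC card reading comprehensively, from the technical principles to actual development. 1. Android NFC basics. 1.1 What is NFC? NFC is…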

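- Multi-Lane FPGA Communication: The FPGA High-Speed PCIe Interface Explained

weixin_39597636

multi-lane fpga communication

In high-speed interconnects, the clear trend is to replace parallel buses with high-speed differential buses. Single-ended parallel signaling (the PCI bus) is limited in clock rate. High-speed differential signaling (the PCIe bus) can run at much higher clock rates, so it reaches with fewer signal lines the bus bandwidth that previously required many single-ended parallel data signals. PCIe protocol basics: the PCI bus uses a parallel bus structure in which all peripherals on the same bus share its bandwidth. PCIe instead uses high-speed differential buses with point-to-point connections, so each PCIe link can connect only…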

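- iOS BLE Bluetooth SDK Development: A Personal Summary (Basics)

大灰狼ios

For some time now I have been building my company's BLE Bluetooth SDK. The SDK mainly handles connecting peripherals to the phone and exchanging data with them. I ran into some fairly instructive problems along the way, so I am summarizing them here. Bluetooth development uses the system CoreBluetooth framework (imported with `#import`). Initialize a CBCentralManager object with `[[CBCentralManager alloc] initWithDelegate:self queue:nil]`. This sets the CBCentralManagerDelegate to self; ni…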

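- What Is a Gateway and What Is It For?

红客597

smart routers, networking

1. Gateways. 1.1 What is a gateway? A gateway is also called an inter-network connector or a protocol converter. A gateway interconnects networks above the network layer. It is the most complex network-interconnection device and is used only to connect two networks whose higher-layer protocols differ. Gateways can interconnect both wide-area and local-area networks. A gateway is a computer system or device that takes on the heavy task of conversion. Placed between two systems with different communication protocols, data formats, or languages, or even entirely different architectures, a gateway acts as a translator. Unlike a bridge, which simply passes information along, a gateway…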

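- 03-Tree 1: Tree Isomorphism

CO₂

PTA, tree isomorphism

03-Tree 1: Tree Isomorphism (25 points). Given two trees, determine whether they are isomorphic. Input format: the input gives the information for 2 binary trees. For each tree, the first line gives a non-negative integer N (≤10), the number of nodes in the tree (nodes are assumed to be numbered from 0 to N−1). N lines follow; line i corresponds to node i and gives the single uppercase English letter stored in that node, the index of its left child, and the index of its right child. If a child is empty, "-" appears in that position. Values are separated by a single space. Note: the problem guarantees…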
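The input format maps directly onto an array of nodes, each holding a letter and two child indices. A common approach is sketched below in Java; it is not taken from either post. The sketch first locates each root, the one index that never appears as a child. It then compares the trees recursively: two subtrees are isomorphic if their roots carry the same letter and their children match either as-is or after one swap. The helper names are hypothetical, and the trees in `main` are illustrative inputs written in the problem's format. Trying both orderings at every node is fine for N ≤ 10.

```java
// Isomorphism check for the static-array trees of PTA 03-Tree 1.
class TreeIsomorphism {
    static final class Node {
        final char letter;
        final int left, right; // child indices; -1 when the input gives "-"

        Node(char letter, int left, int right) {
            this.letter = letter;
            this.left = left;
            this.right = right;
        }
    }

    // Parse lines such as "A 1 2" or "D - -" into nodes.
    static Node[] parse(String... lines) {
        Node[] t = new Node[lines.length];
        for (int i = 0; i < lines.length; i++) {
            String[] f = lines[i].trim().split(" ");
            t[i] = new Node(f[0].charAt(0), child(f[1]), child(f[2]));
        }
        return t;
    }

    private static int child(String s) { return s.equals("-") ? -1 : Integer.parseInt(s); }

    // The root is the only index never referenced as a child; -1 for an empty tree.
    static int root(Node[] t) {
        boolean[] isChild = new boolean[t.length];
        for (Node n : t) {
            if (n.left >= 0) isChild[n.left] = true;
            if (n.right >= 0) isChild[n.right] = true;
        }
        for (int i = 0; i < t.length; i++) if (!isChild[i]) return i;
        return -1;
    }

    static boolean isomorphic(Node[] t1, Node[] t2) { return iso(t1, root(t1), t2, root(t2)); }

    private static boolean iso(Node[] t1, int a, Node[] t2, int b) {
        if (a < 0 && b < 0) return true;  // both subtrees empty
        if (a < 0 || b < 0) return false; // exactly one is empty
        if (t1[a].letter != t2[b].letter) return false;
        // Children must match either directly or after swapping left and right.
        return (iso(t1, t1[a].left, t2, t2[b].left) && iso(t1, t1[a].right, t2, t2[b].right))
            || (iso(t1, t1[a].left, t2, t2[b].right) && iso(t1, t1[a].right, t2, t2[b].left));
    }

    public static void main(String[] args) {
        Node[] t1 = parse("A 1 2", "B 3 4", "C 5 -", "D - -", "E 6 -", "G 7 -", "F - -", "H - -");
        Node[] t2 = parse("G - 4", "B 7 6", "F - -", "A 5 1", "H - -", "C 0 -", "D - -", "E 2 -");
        System.out.println(isomorphic(t1, t2) ? "Yes" : "No"); // Yes
    }
}
```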

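- HBase: Exporting Table Data to CSV

kikiki1

A fresh article, validated in production just yesterday: I used it to export 300 million user records, which took half an hour, and performance held steady. Enough bragging; straight to the code. MR: I looked into HBase's various MapReduce jobs and found none that could do this (if there is one, let me know and I'll send you a red envelope). So we have to write our own MR job. The official documentation has examples of writing an HBase MR job, and we can dress one of those up to get what we want. The exported CSV format is: `admin,22,Beijing` `admin,23,Tianjin`. Dependencies…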

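- Ten Common Data Analysis Models

耐思nice~

data analysis, artificial intelligence, machine learning, mathematical modeling

1. Linear Regression. Scenario: forecasting product sales. Pros: simple to use, and the results are easy to interpret. Cons: assumes a linear relationship and is sensitive to outliers. Concept: a model that establishes a linear relationship between the independent variables and the dependent variable. Formula: $y = b_0 + b_1 x_1 + b_2 x_2 + \dots + b_n x_n$. Code example: `import pandas as pd`, `from sklearn.linear_model import LinearRegression`, `from sklea…`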
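For a single feature ($n = 1$) the formula reduces to $y = b_0 + b_1 x$, and the least-squares coefficients have a closed form: $b_1 = \sum_i (x_i - \bar{x})(y_i - \bar{y}) / \sum_i (x_i - \bar{x})^2$ and $b_0 = \bar{y} - b_1 \bar{x}$. The Java sketch below implements only that one-variable case. It is an illustration, not a port of scikit-learn's `LinearRegression`, and the data in `main` is made up.

```java
// Ordinary least squares for one feature: y = b0 + b1 * x.
class SimpleLinearRegression {
    final double b0, b1; // intercept and slope

    SimpleLinearRegression(double[] x, double[] y) {
        if (x.length != y.length || x.length < 2) throw new IllegalArgumentException("need >= 2 paired points");
        double meanX = 0, meanY = 0;
        for (int i = 0; i < x.length; i++) {
            meanX += x[i];
            meanY += y[i];
        }
        meanX /= x.length;
        meanY /= y.length;
        double sxy = 0, sxx = 0; // co-deviation and squared deviation around the means
        for (int i = 0; i < x.length; i++) {
            sxy += (x[i] - meanX) * (y[i] - meanY);
            sxx += (x[i] - meanX) * (x[i] - meanX);
        }
        if (sxx == 0) throw new IllegalArgumentException("x values must not all be equal");
        b1 = sxy / sxx;
        b0 = meanY - b1 * meanX;
    }

    double predict(double x) { return b0 + b1 * x; }

    public static void main(String[] args) {
        // Illustrative data lying exactly on y = 1 + 2x.
        SimpleLinearRegression m = new SimpleLinearRegression(new double[]{1, 2, 3, 4}, new double[]{3, 5, 7, 9});
        System.out.println("y = " + m.b0 + " + " + m.b1 + "x"); // y = 1.0 + 2.0x
        System.out.println(m.predict(5));                       // 11.0
    }
}
```

The multi-feature formula above is usually solved with matrix methods (the normal equations or QR decomposition), which is what libraries do internally.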

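- How to Get a 1000-Yuan JD.com Coupon? A Guide to Claiming Hidden Coupons!

氧惠好项目

In today's online shopping, coupons have become an important tool for consumers looking for good deals. JD.com, one of China's leading e-commerce platforms, frequently offers all kinds of coupon benefits, and its 1000-yuan coupon attracts particular attention. So how can you successfully claim a 1000-yuan JD coupon? This article reveals how to claim hidden coupons! 1. Understand the issuance rules for the 1000-yuan coupon. Before claiming it, we need to understand how it is issued. Issuance conditions: the 1000-yuan coupon usually comes with a threshold, such as reaching a certain spending amount or…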

- 2021-12-01

慢品清茶细读书

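This woman is something special. I had long heard that this woman was something special, but I didn't believe it at all. She was plain-looking with an ordinary figure, didn't like makeup, and dressed very ordinarily; nothing about her appearance was pleasing to the eye. She was promoted from the workshop assembly line to the inspection team and immediately took the post of inspection team leader. At the time, that post carried a lot of weight across the whole production process. Upward, it dealt directly with the sales department; downward, it coordinated with every workshop, every process, and every stage. It had to hold the quality line for sales while also inspecting, supervising, and promptly reporting problems that arose during production. It was an important position. Could she…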

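- What Is a Gateway, and What Functions Does It Serve?

oneday&oneday

microservices, interview experience, networking

A gateway is a device in a computer network that connects two independent networks and can pass data between two networks that use different protocols. On the Internet, a gateway is a device that can connect networks using different protocols, for example a LAN and the Internet. It can translate the LAN's internal network addresses into valid Internet addresses so that hosts on the LAN can communicate with the outside world. In computer systems, gateways can implement load balancing, security filtering, protocol conversion, and other functions. Specifically, gateways can be divided into the following types: Application gateways: used for applic…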

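- C++ Arrays Explained: From Basics to Practice

光の

java, jvm, frontend

1. Definition and core properties of arrays. (1) What is an array? In C++, an array is a contiguous block of memory that stores a group of elements of the same type. Each element is accessed through a single name (the array name) and an index (subscript). Arrays are the basic tool for managing data in bulk. (2) Core properties:

| Property | Description |
| --- | --- |
| Same type | All elements must be of the same data type (e.g. int, double) |
| Contiguity | Elements are stored contiguously in memory at increasing addresses: `&arr[i+1] == &arr[i] + 1` in pointer arithmetic, so the byte address grows by `sizeof(T)` |
| Fixed size | The size must be specified when the array is declared… |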

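- Tap-to-Post-Video and Tap-to-Write-a-Review: Source-Code Setup and Development, with OEM White-Labeling

18538162800余+

audio/video, matrices, linear algebra

In the mobile Internet era, a convenient interaction experience is key to attracting users. The "tap to post a video" and "tap to write a review" features use Near Field Communication (NFC) and related technologies to give users a brand-new way to interact. They also open efficient promotion and engagement channels for merchants and content creators. This article explores the key technical points behind these two features. 1. Core technical foundations. 1. NFC (Near Field Communication). NFC is the cornerstone of tap-based interaction. Based on ISO 14443 and other protocols, it lets devices exchange data securely at short range (typically within 10 cm)…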

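- HTTPS: The Indispensable Lock on Data Security

Arwen303

SSL certificates, https, network protocols, http

1. HTTPS: the "invisible guardian" of the digital age. Entering bank-card details when shopping online, logging in to a social platform to send private messages, uploading files through an enterprise OA system: behind all these everyday operations sits an invisible "security lock", HTTPS. It acts like a "safe" for data in transit, between the client…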

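- SSL Certificate Validity Cut to 47 Days? How Should Enterprises Respond?

Wake up one morning and the sky might be falling? On April 12, the Server Certificate Working Group of the CA/Browser Forum (the industry body that governs SSL/TLS certificates) voted to pass ballot SC-081v3. Beginning in March 2026, the maximum validity of SSL/TLS certificates will be shortened in stages from the current 398 days, reaching 47 days in March 2029. The reuse period for SAN (domain/IP) validation data will likewise shrink from 398 days to 10 days. 1. Analysis of possible impacts. Security risks. Browser warnings: once a certificate expires, visitors to the site see a "Not secure" warning, causing traffic…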

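- Still Figuring Out What an SSL Certificate Is? One Article to Understand SSL Clearly and Concisely

1. SSL basics. 1. Definition of SSL: SSL (Secure Sockets Layer) is a security protocol for establishing encrypted communication between a client (such as a browser) and a server. It secures network transmission through data encryption, authentication, and integrity protection. 2. SSL vs. TLS: SSL was developed by Netscape in 1994, and its last version is SSL 3.0. TLS (Transport Layer Security) is the successor to SSL and is standardized by the IETF. The current mainstream versions are T…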

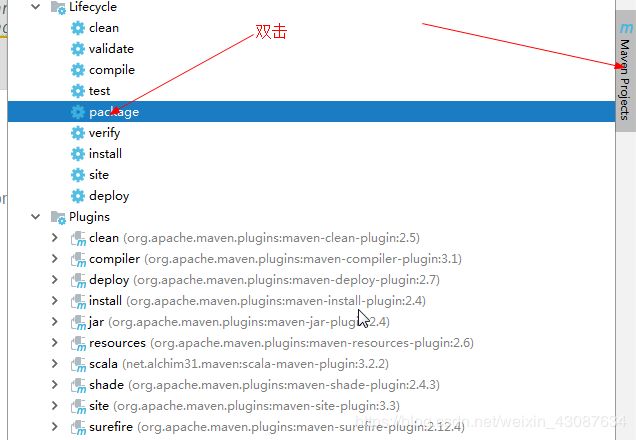

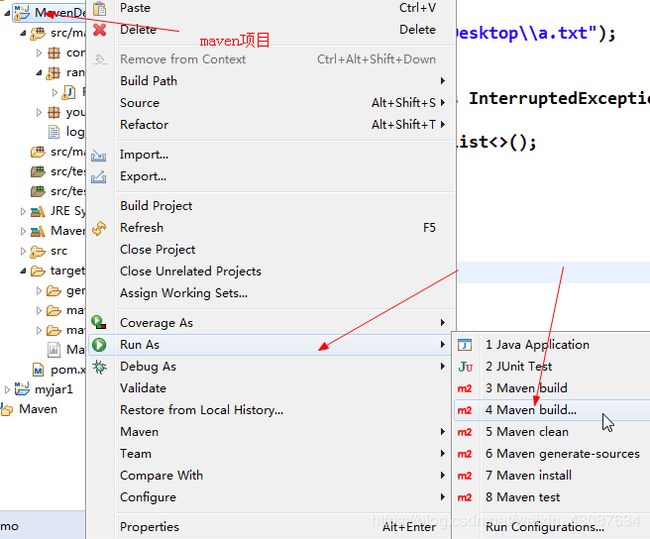

- Maven

Array_06

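eclipse, jdk, maven

Maven

Maven is a software project management tool that manages a project's build, reporting, and documentation from a central description of the project, the Project Object Model (POM).

Beyond its build capabilities, Maven also provides advanced project-management tools. Maven's default build rules are highly reusable, so a simple project can often be built with just two or three lines of Maven build script. Thanks to Maven's project-oriented approach, many Apache Jakarta projects use Maven when publishing releases, and companies…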

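- The Difference Between queryForList and queryForMap in iBatis

bijian1013

java, ibatis

1. Explanation

iBatis also has two kinds of return-value types, resultMap and resultClass. The choice between them can be summarized in two sentences:

1. When the result-set column names correspond exactly to the class's property names, you can use resultClass to specify the query result class directly.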

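- LeetCode [Bit Manipulation] - #191 Hamming Weight

Cwind

java, bit manipulation, LeetCode, Algorithm, solutions

Original problem: #191 Number of 1 Bits

Requirement:

Write a function that takes an unsigned integer as its argument and returns its Hamming weight. For example, the 32-bit binary representation of 11 is `00000000000000000000000000001011`, so the function should return 3.

Hamming weight: the number of nonzero symbols in a string; for a binary string, that is the number of 1s.

Difficulty: Easy

Analysis:

Look at the argument's binary representation and count how many 1 bits it contains.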
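The count does not require converting the number to a string. The Java sketch below swaps in a common bit trick: `n & (n - 1)` clears the lowest set bit, so the loop runs once per 1 bit. It is an illustration rather than the post's own solution. Java has no unsigned `int`, so the argument is treated as 32 raw bits, and the JDK's `Integer.bitCount` returns the same result.

```java
class HammingWeight {
    // Each n &= n - 1 clears the lowest 1 bit, so the loop body runs once per set bit.
    // Negative ints work too: they are just 32-bit patterns with the top bit set.
    static int hammingWeight(int n) {
        int count = 0;
        while (n != 0) {
            n &= n - 1;
            count++;
        }
        return count;
    }

    public static void main(String[] args) {
        System.out.println(hammingWeight(11));    // 3  (binary 1011)
        System.out.println(hammingWeight(-1));    // 32 (0xFFFFFFFF read as unsigned)
        System.out.println(Integer.bitCount(11)); // 3, the built-in equivalent
    }
}
```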


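- A Brief Discussion of Java Classes and Objects

15700786134

java

Java is an object-oriented programming language, and classes and objects are its most fundamental concepts. An object is a concrete thing: a person or a computer, for example, is an object. A class is an abstraction over objects. It collects what many objects have in common, namely attributes and methods, which are the shared characteristics of every object belonging to that class. When an object is created from a class, it has all of that class's attributes and methods. Compared with structured programming, object orientation fits more naturally with the way people think…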

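- Two NICs with the Same IP on Linux

被触发

linux

Reposted from:

http://q2482696735.blog.163.com/blog/static/250606077201569029441/

I needed one machine with two NICs. At first I gave them IPs in the same subnet, but found that traffic always left through one NIC while the other carried no traffic at all. Searching online, I found many people with the same problem:

1.

On the strange behavior when two NICs are given IPs in the same subnet and then connected to a switch. At the time I didn't think it through and assumed it was the spanning tree…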

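- Android App Cannot Be Reopened After Pressing the Home Button

肆无忌惮_

Android

I ran into a strange problem. After SplashActivity navigated to MainActivity, I pressed the Home button and then tried to reopen the app, but it would not open (it just flashed briefly). Killing the task and reopening gave the same result; only uninstalling and reinstalling helped. Each time, the log printed the message "进入主程序" ("entering the main program"). It turned out that SplashActivity has to be finished after the navigation.

Original code:

// Destroy this Activity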

fin

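- Example: Saving and Reading User Login Information with Cookies

知了ing

JavaScript, html

The request object's getCookies() method returns an array of all Cookie objects. A Cookie's getName() method returns its name, and its getValue() method returns its value. To send a Cookie object to the client, use the response object's addCookie() method.

The following example, which saves and reads user login information with cookies, deepens this understanding.

(1) Create the index.jsp file. In this…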

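- Java Object Pools

矮蛋蛋

java, ObjectPool

Original article:

http://www.blogjava.net/baoyaer/articles/218460.html

Jakarta object pools

☆ Why use an object pool

Used appropriately, object pooling can effectively reduce the cost of creating and initializing objects and make the system run more efficiently. The Jakarta Commons Pool component provides a complete set of facilities for implementing object pooling…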
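The core idea of pooling is to hand out an idle object if one exists and to create a new one only when the pool is empty. The sketch below is a hand-rolled illustration of that idea. It does not use or reproduce the Commons Pool API, and the class name, the `reset` hook, and the `maxIdle` limit are assumptions.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.Consumer;
import java.util.function.Supplier;

// Minimal thread-safe object pool (illustrative; not the Commons Pool API).
class SimplePool<T> {
    private final Deque<T> idle = new ArrayDeque<>();
    private final Supplier<T> factory; // creates an object when no idle one is available
    private final Consumer<T> reset;   // clears an object's state before it is reused
    private final int maxIdle;         // at most this many idle objects are kept
    private int created = 0;

    SimplePool(Supplier<T> factory, Consumer<T> reset, int maxIdle) {
        this.factory = factory;
        this.reset = reset;
        this.maxIdle = maxIdle;
    }

    synchronized T borrow() {
        T obj = idle.pollFirst();
        if (obj == null) {
            obj = factory.get(); // pool empty: pay the creation cost
            created++;
        }
        return obj;
    }

    synchronized void release(T obj) {
        reset.accept(obj);
        if (idle.size() < maxIdle) idle.offerFirst(obj); // beyond maxIdle, let the GC reclaim it
    }

    synchronized int createdCount() { return created; }

    public static void main(String[] args) {
        SimplePool<StringBuilder> pool = new SimplePool<>(StringBuilder::new, sb -> sb.setLength(0), 4);
        StringBuilder first = pool.borrow();
        first.append("report #1");
        pool.release(first);
        StringBuilder second = pool.borrow(); // the same instance, already cleared
        System.out.println((first == second) + " " + second.length() + " " + pool.createdCount()); // true 0 1
    }
}
```

Pooling pays off mainly for objects that are expensive to create, such as connections or large buffers. For small, cheap objects, modern JVM allocation is usually fast enough that a pool adds only complexity.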

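- Bulk-Removing Elements from an ArrayList by Condition in a for Loop

alleni123

java

The scenario:

ArrayList<Obj> list

Obj-> createTime, sid.

The list must be cleaned up periodically based on each Obj's createTime (to free memory).

-------------------------

The first approach that comes to mind is: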

for(Obj o:list){

if(o.createTime-currentT>xxx){
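Calling `list.remove(o)` inside a for-each loop like the one above typically fails with `ConcurrentModificationException`. The Java sketch below shows two safe alternatives: `removeIf` (Java 8+) and removal through an explicit `Iterator`. It assumes a hypothetical `Obj` with a millisecond `createTime`, and it measures an element's age as `now - createTime`.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

class ExpiryCleanup {
    // Hypothetical stand-in for the article's Obj (createTime, sid).
    static final class Obj {
        final long createTime; // creation time in epoch milliseconds
        final String sid;

        Obj(long createTime, String sid) {
            this.createTime = createTime;
            this.sid = sid;
        }
    }

    // Option 1 (Java 8+): removeIf deletes every matching element in one pass.
    static void purgeWithRemoveIf(List<Obj> list, long now, long maxAgeMillis) {
        list.removeIf(o -> now - o.createTime > maxAgeMillis);
    }

    // Option 2: remove through the Iterator itself, which keeps the iteration valid.
    static void purgeWithIterator(List<Obj> list, long now, long maxAgeMillis) {
        for (Iterator<Obj> it = list.iterator(); it.hasNext(); ) {
            if (now - it.next().createTime > maxAgeMillis) it.remove();
        }
    }

    public static void main(String[] args) {
        List<Obj> list = new ArrayList<>();
        list.add(new Obj(0, "a"));
        list.add(new Obj(900, "b"));
        list.add(new Obj(50, "c"));
        purgeWithRemoveIf(list, 1000, 500); // ages 1000, 100, 950: "a" and "c" expire
        System.out.println(list.size() + " " + list.get(0).sid); // 1 b
    }
}
```

For a large `ArrayList`, `removeIf` is also the cheaper choice. It compacts the array in a single pass, whereas each `Iterator.remove()` shifts every later element.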

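- Alibaba's "Gengdibao" Takes On All the Other "-baos"

百合不是茶

platform strategy

The "Gengdibao" platform, launched jointly by Alibaba and farmers in Anhui, is billed as "the first Internet-customized private farm". Alibaba invested 100 million yuan in Gengdibao, mainly for agriculture. It consolidates the scattered plots in farmers' hands, which not only strengthens the farmers' collective voice over the land but also increases land circulation and utilization and raises yields. This favors the development of large-scale, industrialized high-tech agriculture, and Alibaba's exploration of agriculture will trigger a new round of industrial adjustment. However, after collectivization, individual farmers will have even less say, and the state should introduce corresponding laws and regulations to protect…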

- Injecting Classes with an Inheritance Relationship in Spring (1)

bijian1013

java, spring

Injecting the classes one at a time

1. The AClass class

package com.bijian.spring.test2;

public class AClass {

String a;

String b;

public String getA() {

return a;

}

public void setA(Strin

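- Can You Become Successful During the Career Transition at 30?

bijian1013

success

Many people took detours when they were young and have accomplished nothing by 30; such examples abound. But there are also people whose whole careers have gone very well and who have joined the professional elite by 30. As headhunters, we meet many managers around 30 and find that they often have serious problems on their career paths. Before 30 their careers look excellent, but from 30 to 40, many…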

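- [Velocity 3] A Web Application Based on Servlet + Velocity

bit1129

velocity

What is VelocityViewServlet

org.apache.velocity.tools.view.VelocityViewServlet integrates Velocity into Servlet-based web applications, so a web application can be built in the Servlet + Velocity style.

General steps for Servlet + Velocity

1. Write a custom Servlet that extends VelocityViewServl…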

- [Kafka 12] On Kafka Being a Commit Log Service

bit1129

service

Kafka is a distributed, partitioned, replicated commit log service. How should "commit log" be understood here?

A message is considered "committed" when all in sync replicas for that partition have applied i
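One way to picture this definition is through the high watermark. A message at offset o is committed once every in-sync replica has it, which is the case exactly when o is below the smallest log end offset in the ISR. The Java sketch below is a simplified toy model of that rule, not Kafka code, and the replica names and offsets are invented for illustration. In real Kafka, the leader advances the high watermark based on its followers' fetch positions, and consumers can only read messages below it.

```java
import java.util.Map;

// Toy model of "committed" in a replicated log (not Kafka source code).
class CommitModel {
    // Each in-sync replica reports its log end offset (LEO), the offset of the next
    // message it would write. The high watermark is the minimum LEO across the ISR.
    static long highWatermark(Map<String, Long> isrLogEndOffsets) {
        if (isrLogEndOffsets.isEmpty()) throw new IllegalArgumentException("ISR must not be empty");
        long hw = Long.MAX_VALUE;
        for (long leo : isrLogEndOffsets.values()) hw = Math.min(hw, leo);
        return hw;
    }

    // A message is committed when every in-sync replica has applied it.
    static boolean isCommitted(long offset, Map<String, Long> isrLogEndOffsets) {
        return offset < highWatermark(isrLogEndOffsets);
    }

    public static void main(String[] args) {
        // The leader has written offsets 0..9; the followers lag behind.
        Map<String, Long> isr = Map.of("leader", 10L, "follower-1", 8L, "follower-2", 9L);
        System.out.println(highWatermark(isr));  // 8
        System.out.println(isCommitted(7, isr)); // true
        System.out.println(isCommitted(8, isr)); // false: follower-1 does not have offset 8 yet
    }
}
```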

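- Implementing Complex Control with NGINX + Lua

ronin47

lua, nginx, control

Installing lua_nginx_module

lua_nginx_module can be installed step by step, or you can simply use Taobao's OpenResty.

Installation on CentOS and Debian is straightforward.

Here is how to install it on FreeBSD: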

fetch http://www.lua.org/ftp/lua-5.1.4.tar.gz

tar zxvf lua-5.1.4.tar.gz

cd lua-5.1.4

ma

- java-14. Given an Array Sorted in Ascending Order and a Number, Find Two Numbers in the Array Whose Sum Is Exactly That Number

bylijinnan

java

public class TwoElementEqualSum {

/**

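* Problem 14:

Problem: given an array sorted in ascending order and a number,

find two numbers in the array whose sum is exactly the given number.

The time complexity must be O(n). If several pairs sum to the given number, output any one of them.

For example, the input is the array 1, 2, 4, 7, 11, 15 and the number 15. Since…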
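The O(n) requirement rules out checking every pair. The Java sketch below uses a standard technique, two pointers that start at both ends of the sorted array; it is not the remainder of the truncated code above. If the current sum is too small, the left pointer advances; if it is too large, the right pointer moves left. Each step discards one candidate, so at most n − 1 steps are needed. The class and method names are illustrative.

```java
class TwoSumSorted {
    // Two pointers over an ascending array; returns {a, b} with a + b == target, or null.
    static int[] findPair(int[] sorted, int target) {
        int i = 0, j = sorted.length - 1;
        while (i < j) {
            long sum = (long) sorted[i] + sorted[j]; // long avoids int overflow
            if (sum == target) return new int[]{sorted[i], sorted[j]};
            if (sum < target) i++; // need a larger sum: move the left pointer right
            else j--;              // need a smaller sum: move the right pointer left
        }
        return null;
    }

    public static void main(String[] args) {
        int[] pair = findPair(new int[]{1, 2, 4, 7, 11, 15}, 15);
        System.out.println(pair[0] + " + " + pair[1]); // 4 + 11
    }
}
```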

- Netty源码学习-HttpChunkAggregator-HttpRequestEncoder-HttpResponseDecoder

bylijinnan

java, netty

Today we look at how Netty implements an HTTP server.

org.jboss.netty.example.http.file.HttpStaticFileServerPipelineFactory:

pipeline.addLast("decoder", new HttpRequestDecoder());

pipeline.addLast("

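- Java Sensitive-Word Filtering Based on a Multi-Way Tree

cngolon

banned-word filtering, banned-word replacement, sensitive-word filtering, multi-way tree

A toolkit for filtering sensitive words and keywords based on a multi-way tree, used for sensitive-word filtering in Java.

1. The toolkit ships with its own sensitive-word dictionary, which is loaded on the first call, so the first call may take longer. Once the class has loaded, filtering 5,000 characters on an ordinary PC takes about 80 ms for HTML and about 35 ms for plain text.

2. To use a custom dictionary, copy the jar into the project's WEB-INF/lib directory and create a UTF-8 text file named words.dict in the WEB-INF/classes directory,…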
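The multi-way tree here is essentially a trie: each node maps a character to a child, and a flag marks where a dictionary word ends. The Java sketch below illustrates that structure and is not the toolkit's code or API. Loading words from `words.dict` is omitted, and the names are hypothetical. At each position it masks the longest dictionary match. Because it works on Java `char`s, it handles common Chinese characters (which fit in a single `char`) as well as English words.

```java
import java.util.HashMap;
import java.util.Map;

// Trie-based sensitive-word filter (illustrative sketch).
class SensitiveWordFilter {
    private static final class Node {
        final Map<Character, Node> children = new HashMap<>();
        boolean isEnd; // true if a dictionary word ends at this node
    }

    private final Node root = new Node();

    void addWord(String word) {
        Node cur = root;
        for (char c : word.toCharArray()) cur = cur.children.computeIfAbsent(c, k -> new Node());
        cur.isEnd = true;
    }

    // Replace every dictionary word with the mask character, preferring the longest match.
    String filter(String text, char mask) {
        StringBuilder out = new StringBuilder(text);
        int i = 0;
        while (i < text.length()) {
            Node cur = root;
            int matchEnd = -1;
            for (int j = i; j < text.length(); j++) {
                cur = cur.children.get(text.charAt(j));
                if (cur == null) break;            // no dictionary word continues this way
                if (cur.isEnd) matchEnd = j;       // remember the longest match so far
            }
            if (matchEnd >= 0) {
                for (int k = i; k <= matchEnd; k++) out.setCharAt(k, mask);
                i = matchEnd + 1;                  // continue after the masked word
            } else {
                i++;
            }
        }
        return out.toString();
    }

    public static void main(String[] args) {
        SensitiveWordFilter f = new SensitiveWordFilter();
        f.addWord("bad");
        f.addWord("badword");
        System.out.println(f.filter("a badword here, bad!", '*')); // a ******* here, ***!
    }
}
```

Each scan from position i can walk up to the length of the longest dictionary word, so filtering costs roughly O(text length × longest word), independent of dictionary size.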

- Multithreading Notes

cuishikuan

multithreading

Three threads T1, T2, and T3 must run in order: first T1, then T2, then T3.

public class T1 implements Runnable{

@Override
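The snippet above stops at the start of `T1`. The Java sketch below shows one way to enforce the T1, T2, T3 order; it uses its own naming and is not the author's code. Each thread waits on a `CountDownLatch` that its predecessor counts down when it finishes. A common alternative is to have T2 call `t1.join()` and T3 call `t2.join()`. With that approach, the threads must be started in order, because `join()` on a thread that has not yet started returns immediately.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;

class OrderedThreads {
    // Each thread waits for the latch its predecessor counts down when done, so the
    // order T1 -> T2 -> T3 holds regardless of the order in which threads are started.
    static List<String> runInOrder() throws InterruptedException {
        List<String> log = new CopyOnWriteArrayList<>();
        CountDownLatch t1Done = new CountDownLatch(1);
        CountDownLatch t2Done = new CountDownLatch(1);

        Thread t1 = new Thread(() -> { log.add("T1"); t1Done.countDown(); }, "T1");
        Thread t2 = new Thread(() -> { awaitLatch(t1Done); log.add("T2"); t2Done.countDown(); }, "T2");
        Thread t3 = new Thread(() -> { awaitLatch(t2Done); log.add("T3"); }, "T3");

        t3.start(); // deliberately started in reverse order
        t2.start();
        t1.start();
        t1.join();
        t2.join();
        t3.join();
        return log;
    }

    private static void awaitLatch(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // restore the flag; this sketch does not handle cancellation
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runInOrder()); // [T1, T2, T3]
    }
}
```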

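- Integrating Spring with ActiveMQ

dalan_123

java spring jms

To integrate Spring and ActiveMQ, you need to understand the following: 1. ConnectionFactory, which comes in two kinds: a. the ConnectionFactory that Spring manages for connecting to the ActiveMQ server, that is, the one that produces connections to the JMS server; b. the ConnectionFactory that actually produces connections to the JMS server also has to…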

- Should MySQL Time Columns Use INT or DateTime?

dcj3sjt126com

mysql

Environment: Windows XP, PHP Version 5.2.9, MySQL Server 5.1

Step 1: create a table date_test (variable-length rows, INT time column)

CREATE TABLE `test`.`date_test` (`id` INT NOT NULL AUTO_INCREMENT ,`start_time` INT NOT NULL ,`some_content`

- Parcel: unable to marshal value

dcj3sjt126com

marshal

When a List<xxInfo> was passed directly between two activities, a "Parcel: unable to marshal value" exception occurred. In MainActivity (MainActivity passes a List<xxInfo> to NextActivity): Intent intent = new Intent(this, Next…

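- Viewing Linux Processes, Part 1 (ps)

eksliang

linux ps, linux ps -l, linux ps aux

ps: takes a snapshot of process activity at a single point in time

When reposting, please credit the source: http://eksliang.iteye.com/admin/blogs/2119469

http://eksliang.iteye.com

The man page for ps is hard to read. Many different Unix systems use ps to check process status, and to meet the needs of all these versions, this…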

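- Why Third-Party Apps Can Start Before System Apps

gqdy365

System

There is plenty of material online about the startup sequence of Android apps, so I won't go into detail here, and I won't cover ROM boot or the bootloader. The rough software startup flow is: start the kernel -> run servicemanager and launch a number of native services by command (including wifi, power, rild, surfaceflinger, mediaserver, and so on) -> start the first process in Dalvik, Zygot…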

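- Sending JSONP Requests with App Framework (3)

hw1287789687

jsonp, cross-domain requests, sending jsonp, ajax requests, jailbreak requests

How do you send a JSONP request in App Framework?

Use JSONP; for details, see http://json-p.org/

How do you send an Ajax request?

(1) Login

/***

* Member login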

* @param username

* @param password

*/

var user_login=function(username,password){

// aler

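- A Freebie: A Curated Collection of "Resource Collections"

justjavac

resources

If you find it useful, follow it on GitHub: https://github.com/justjavac/awesome-awesomeness-zh_CN

General

free-programming-books-zh_CN: free Chinese-language programming books

A collection of great blogs: hacke2/hacke2.github.io#2

ResumeSample: programmer résumés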

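- Creating RESTful Web Services with Java Technology

macroli

java, programming, Web, REST

Reposted from: http://www.ibm.com/developerworks/cn/web/wa-jaxrs/

JAX-RS (JSR-311, Java API for RESTful Web Services) is a Java™ API that makes developing RESTful services in Java fast and easy. The API provides an annotation-based model for describing distributed resources. Annotations are used to provide the resource loc…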

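- Installing Oracle 11g on 64-bit CentOS 6.5 (x86_64): Detailed Steps and Caveats

超声波

oracle, linux

Preface:

Our project goes live in the next couple of days, and I am responsible for deploying all of it to the server, so the first step is installing Oracle on it. The server runs 64-bit CentOS 6.5, and the database version is 11g. I hit many pitfalls during the installation, but in the end I worked through them one way or another and got it installed. So I am writing this post to record the complete installation process and the caveats along the way, in the hope that it helps newcomers in the future.

Problems you may encounter during installation (note…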

- How to Set Keep-Alive and Timeout in HttpClient 4.3

supben

httpclient

ConnectionKeepAliveStrategy kaStrategy = new DefaultConnectionKeepAliveStrategy() {

@Override

public long getKeepAliveDuration(HttpResponse response, HttpContext context) {

long keepAlive

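- New in Spring 4.2: Upgrades to the @Import Annotation

wiselyman

spring 4

3.1 @Import

Before 4.2, the @Import annotation only supported importing configuration classes.

In 4.2, the @Import annotation also supports importing plain Java classes and declares them as beans.

3.2 Example

Demo Java class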

package com.wisely.spring4_2.imp;

public class DemoService {

public void doSomethin