Customizing Flume to Integrate with HBase

I. Collecting data into HBase with Flume

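1. What the custom Flume HBase sink does:

1. Lets you specify an arbitrary field separator

2. Splits each line into whatever set of columns you configure

3. Uses a custom row key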
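Features 1 and 2 boil down to pairing each separator-delimited field with the configured column name at the same position. Below is a minimal, dependency-free sketch of that mapping. It assumes, as the serializer later in this article does, that the separator is a regular expression (it is passed to `String.split`) and that lines whose field count differs from the column count are skipped. `FieldMapper` and `mapFields` are hypothetical names used only for illustration.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

final class FieldMapper {
    private FieldMapper() {}

    /**
     * Splits a line with the given separator (a regex, as in String.split) and
     * pairs each field with the column name at the same position.
     * Returns an empty map when the counts differ, i.e. the line is skipped.
     */
    static Map<String, String> mapFields(String line, String separator, String columnList) {
        String[] columns = columnList.split(",");
        String[] fields = line.split(separator);
        if (columns.length != fields.length) {
            return Collections.emptyMap();
        }
        Map<String, String> row = new LinkedHashMap<>(); // keeps column order
        for (int i = 0; i < columns.length; i++) {
            row.put(columns[i], fields[i]);
        }
        return row;
    }

    public static void main(String[] args) {
        // Made-up sample line with three tab-separated fields
        Map<String, String> row = mapFields("20240101\tu42\tflume", "\t", "datetime,userid,searchname");
        System.out.println(row); // {datetime=20240101, userid=u42, searchname=flume}
    }
}
```

One design detail worth knowing: `String.split(regex)` drops trailing empty strings, so a record whose last field is empty (for example `a,b,`) yields fewer fields than columns and is treated as malformed. `split(separator, -1)` would keep those empty fields.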

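2. Problems the custom Flume HBase sink has to solve:

1. Find out which column names we have configured

2. Know how to split each incoming record

To read a custom configuration item inside the serializer class, the item must be specified after the `serializer.` prefix in the sink configuration (that is, as `serializer.*`).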
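Why the `serializer.` prefix? Flume's HBase sink collects the sink properties that start with `serializer.` (via `Context.getSubProperties`), removes that prefix, and hands the result to the serializer's `configure(Context)`. So `a1.sinks.k1.serializer.payloadColumn` is read in the serializer as `payloadColumn`, while a sink-level key such as `columnFamily` is not visible there at all. The sketch below reproduces only that prefix stripping with plain Java maps; it is not Flume's code, and `SubProperties` / `withPrefixStripped` are hypothetical names.

```java
import java.util.HashMap;
import java.util.Map;

final class SubProperties {
    private SubProperties() {}

    /** Returns the entries whose key starts with prefix, with the prefix removed. */
    static Map<String, String> withPrefixStripped(Map<String, String> props, String prefix) {
        Map<String, String> result = new HashMap<>();
        for (Map.Entry<String, String> e : props.entrySet()) {
            if (e.getKey().startsWith(prefix)) {
                result.put(e.getKey().substring(prefix.length()), e.getValue());
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Keys as the sink sees them (the "a1.sinks.k1." part is already removed)
        Map<String, String> sinkProps = new HashMap<>();
        sinkProps.put("table", "news_log_1805");
        sinkProps.put("columnFamily", "cf");
        sinkProps.put("serializer.payloadColumn", "datetime,userid");
        Map<String, String> serializerProps = withPrefixStripped(sinkProps, "serializer.");
        System.out.println(serializerProps); // {payloadColumn=datetime,userid}
    }
}
```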

II. Implementation steps

1. Download and extract the Flume source package:

Download: https://pan.baidu.com/s/1IUGOTDRC2xZEqx9lHC90YQ (extraction code: tom1)

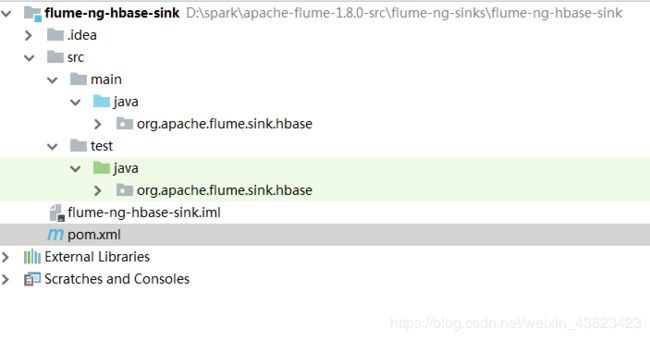

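2. Locate the flume-ng-sinks folder and open the flume-ng-hbase-sink module in IntelliJ IDEA.

3. Write the implementation under `src/main/java`, in the `org.apache.flume.sink.hbase` package.

The code is as follows: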

```java
package org.apache.flume.sink.hbase;

import com.google.common.base.Charsets;
import org.apache.flume.Context;
import org.apache.flume.Event;
import org.apache.flume.FlumeException;
import org.apache.flume.conf.ComponentConfiguration;
import org.apache.hadoop.hbase.client.Increment;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Row;

import java.util.Arrays;
import java.util.LinkedList;
import java.util.List;

/**
 * Splits each event body on a configurable separator and writes every field
 * to the matching configured column, under a row key built from userid and time.
 */
public class MySimpleHbaseEventSerializer implements HbaseEventSerializer {

    private byte[] incrementRow;
    private byte[] columnFamily; // optional override from serializer.columnFamily
    private byte[] cf;           // column family of the sink (a1.sinks.k1.columnFamily)
    private String plCol;        // comma-separated column names
    private byte[] incCol;
    private byte[] payload;      // body of the current event
    private String separator;    // regular expression passed to String.split

    public MySimpleHbaseEventSerializer() {
    }

    /**
     * Reads the configuration items. Only the sink's "serializer.*" properties
     * arrive here, with the "serializer." prefix removed.
     *
     * @param context serializer configuration
     */
    @Override
    public void configure(Context context) {
        incrementRow = context.getString("incrementRow", "incRow").getBytes(Charsets.UTF_8);
        // e.g. datetime,userid,searchname,retorder,cliorder,cliurl
        String payloadColumn = context.getString("payloadColumn", "pCol");
        String incColumn = context.getString("incrementColumn", "iCol");
        // The key must match the config file: a1.sinks.k1.serializer.separator
        String separator = context.getString("separator", ",");
        // Optional; if absent, the sink's own columnFamily is used
        String family = context.getString("columnFamily");

        if (payloadColumn != null && !payloadColumn.isEmpty()) {
            plCol = payloadColumn;
            System.out.println("==============>payloadColumn: " + plCol);
        }
        if (incColumn != null && !incColumn.isEmpty()) {
            incCol = incColumn.getBytes(Charsets.UTF_8);
        }
        if (separator != null && !separator.isEmpty()) {
            this.separator = separator;
            System.out.println("==============>separator: " + separator);
        }
        if (family != null && !family.isEmpty()) {
            columnFamily = family.getBytes(Charsets.UTF_8);
            System.out.println("==============>columnFamily: " + family);
        }
    }

    @Override
    public void configure(ComponentConfiguration conf) {
    }

    @Override
    public void initialize(Event event, byte[] cf) {
        this.payload = event.getBody(); // the data read from the channel
        this.cf = cf;
    }

    /**
     * Builds the HBase mutations that write the current event into HBase.
     *
     * @return one Put holding all columns of the event (empty if the line is malformed)
     * @throws FlumeException if the row key cannot be built
     */
    @Override
    public List<Row> getActions() throws FlumeException {
        List<Row> actions = new LinkedList<Row>();
        if (plCol == null) {
            return actions;
        }
        try {
            // The data is in payload and the column names in plCol; they must correspond one to one
            String[] columns = plCol.split(",");
            String[] dataArray = new String(payload, Charsets.UTF_8).split(separator);
            System.out.println("---------------->columns: " + Arrays.toString(columns));
            System.out.println("---------------->data: " + Arrays.toString(dataArray));
            if (columns.length != dataArray.length || dataArray.length < 2) {
                return actions; // skip lines that do not match the configured columns
            }
            String time = dataArray[0];
            String userid = dataArray[1];
            byte[] family = (columnFamily != null) ? columnFamily : cf;
            // getUserIdAndTimeKey is not part of stock Flume's SimpleRowKeyGenerator;
            // it has to be added to that class.
            Put put = new Put(SimpleRowKeyGenerator.getUserIdAndTimeKey(time, userid));
            for (int i = 0; i < columns.length; i++) {
                put.addColumn(family, columns[i].getBytes(Charsets.UTF_8),
                        dataArray[i].getBytes(Charsets.UTF_8));
            }
            actions.add(put);
        } catch (Exception e) {
            throw new FlumeException("Could not get row key!", e);
        }
        return actions;
    }

    @Override
    public List<Increment> getIncrements() {
        List<Increment> incs = new LinkedList<Increment>();
        if (incCol != null) {
            // Adds 1 to column incCol of row incrementRow for every event (an event counter)
            Increment inc = new Increment(incrementRow);
            inc.addColumn(cf, incCol, 1);
            incs.add(inc);
        }
        return incs;
    }

    @Override
    public void close() {
    }
}
```
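The serializer builds its row key with `SimpleRowKeyGenerator.getUserIdAndTimeKey(time, userid)`. Stock Flume's `SimpleRowKeyGenerator` only offers UUID, random, timestamp and nanosecond-timestamp keys, so this method has to be added to that class, and its body is not shown here. The following is one possible implementation, assuming a key of the form `userid_time` encoded as UTF-8; the key layout and the wrapper class `UserIdTimeKeySketch` are hypothetical, and the static method can be pasted into `SimpleRowKeyGenerator` as-is.

```java
import java.nio.charset.StandardCharsets;

final class UserIdTimeKeySketch {
    private UserIdTimeKeySketch() {}

    /**
     * Hypothetical body for SimpleRowKeyGenerator.getUserIdAndTimeKey:
     * row key = userid + "_" + time, encoded as UTF-8.
     */
    public static byte[] getUserIdAndTimeKey(String time, String userid) {
        if (time == null || userid == null) {
            throw new IllegalArgumentException("time and userid must not be null");
        }
        return (userid + "_" + time).getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // Made-up values for illustration
        byte[] key = getUserIdAndTimeKey("12:00:01", "u42");
        System.out.println(new String(key, StandardCharsets.UTF_8)); // u42_12:00:01
    }
}
```

Putting the userid first keeps all rows of one user adjacent and ordered by time, which suits prefix scans per user. Two caveats of this assumed layout: it relies on userids not containing `_`, and two records with the same userid and time map to the same row, so the later one overwrites the earlier one's columns.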

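4. Package the module as a jar and use it to replace the flume-ng-hbase-sink jar in the `lib` directory of the Flume installation on the Linux server.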

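5. Write the Flume configuration file:

Its purpose is to pick up content newly appended to the log file and write it into HBase.

Note: running a Flume agent mainly comes down to configuring a source, a channel and a sink.

In the configuration below, a1 is the name of the agent; the source is called r1, the channel c1 and the sink k1.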
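To make the source, channel and sink roles concrete, here is a toy in-memory model: the source puts events into a bounded queue that plays the channel (sized like `capacity`), and the sink drains them in batches, which in Flume may not exceed the channel's `transactionCapacity`. This is only an analogy, not how Flume implements channels: real channels are transactional, and the file channel configured below also persists events to disk. `ToyAgent` and its methods are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

final class ToyAgent {
    private final BlockingQueue<String> channel; // stands in for c1
    private final int batchSize;                 // stands in for the sink's batch limit

    ToyAgent(int capacity, int batchSize) {
        this.channel = new ArrayBlockingQueue<>(capacity);
        this.batchSize = batchSize;
    }

    /** Source side (r1): returns false when the channel is full. */
    boolean sourcePut(String line) {
        return channel.offer(line);
    }

    /** Sink side (k1): removes up to batchSize events to be written together. */
    List<String> sinkTakeBatch() {
        List<String> batch = new ArrayList<>(batchSize);
        channel.drainTo(batch, batchSize);
        return batch;
    }

    public static void main(String[] args) {
        ToyAgent agent = new ToyAgent(4, 3);
        for (int i = 1; i <= 5; i++) {
            // line1..line4 are accepted; line5 is rejected because the channel is full
            System.out.println("put line" + i + ": " + agent.sourcePut("line" + i));
        }
        System.out.println(agent.sinkTakeBatch()); // [line1, line2, line3]
        System.out.println(agent.sinkTakeBatch()); // [line4]
    }
}
```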

```properties
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Source: follow new lines appended to the log file
a1.sources.r1.type = exec
a1.sources.r1.command = tail -F /home/bigdata/data/projects/news/data/news-logs.log

# Sink: write the data into HBase.
# MySimpleHbaseEventSerializer implements HbaseEventSerializer, which is used by the
# "hbase" sink; the "asynchbase" sink expects an AsyncHbaseEventSerializer instead.
a1.sinks.k1.type = hbase
a1.sinks.k1.table = news_log_1805
a1.sinks.k1.columnFamily = cf
a1.sinks.k1.serializer = org.apache.flume.sink.hbase.MySimpleHbaseEventSerializer
# Map the fields collected by Flume to these columns of the HBase table
a1.sinks.k1.serializer.payloadColumn = datetime,userid,searchname,retorder,cliorder,cliurl
a1.sinks.k1.serializer.separator = \\t

# Channel: buffer events temporarily in a file channel (on disk)
a1.channels.c1.type = file
a1.channels.c1.checkpointDir = /home/bigdata/data/projects/news/checkpoint
a1.channels.c1.dataDirs = /home/bigdata/data/projects/news/channel
a1.channels.c1.capacity = 100000
a1.channels.c1.transactionCapacity = 10000

# Bind source r1 and sink k1 through channel c1
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

6. Run Flume in the background:

```bash
nohup bin/flume-ng agent -n a1 -c conf -f conf/flume-hbase-sink.conf >/dev/null 2>&1 &
```
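A note on `serializer.separator = \\t` in the configuration: Flume's properties-file configuration provider loads the file with `java.util.Properties`, whose loader turns `\\` into a single backslash. The serializer therefore receives the two-character string `\t`, and `String.split` interprets that as a regular expression matching a tab. The sketch below checks this chain with the JDK alone; `SeparatorEscapeCheck` and `loadValue` are hypothetical helper names, and the property line is the one from the configuration.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Arrays;
import java.util.Properties;

final class SeparatorEscapeCheck {
    private SeparatorEscapeCheck() {}

    /** Parses properties text the way Properties.load does and returns one value. */
    static String loadValue(String propertiesText, String key) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e); // not expected for an in-memory reader
        }
        return props.getProperty(key);
    }

    public static void main(String[] args) {
        // The Java literal "\\\\t" is the file text \\t
        String sep = loadValue("a1.sinks.k1.serializer.separator = \\\\t\n",
                "a1.sinks.k1.serializer.separator");
        System.out.println(sep.length());                          // 2 (backslash and 't')
        System.out.println(Arrays.toString("a\tb\tc".split(sep))); // [a, b, c]
    }
}
```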