OpenCV dnn: face recognition

With the rise of deep learning, OpenCV 3.1 can also load Caffe and Torch models directly. Below, OpenCV's dnn module is used for face recognition:

1: Build OpenCV 3.1

First, download the OpenCV source code: https://github.com/opencv/opencv

Download CMake: https://cmake.org/download/

Download OpenCV's …

For the detailed CMake steps, see this blog post:

http://www.cnblogs.com/jliangqiu2016/p/5597501.html

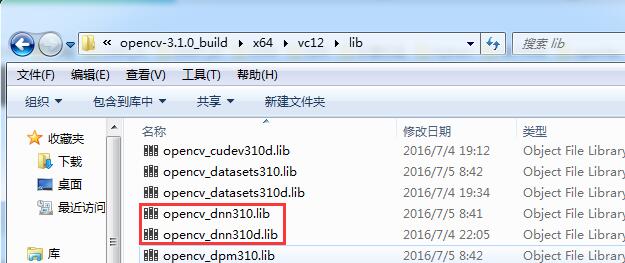

After the build finishes, you can delete the files you don't need and keep only the include, bin, and lib directories.

The compiled OpenCV 3.1 is configured just like a regular OpenCV installation; the libraries to link are:

```text
opencv_aruco310.lib
opencv_bgsegm310.lib
opencv_bioinspired310.lib
opencv_calib3d310.lib
opencv_ccalib310.lib
opencv_core310.lib
opencv_cudaarithm310.lib
opencv_cudabgsegm310.lib
opencv_cudacodec310.lib
opencv_cudafeatures2d310.lib
opencv_cudafilters310.lib
opencv_cudaimgproc310.lib
opencv_cudalegacy310.lib
opencv_cudaobjdetect310.lib
opencv_cudaoptflow310.lib
opencv_cudastereo310.lib
opencv_cudawarping310.lib
opencv_cudev310.lib
opencv_datasets310.lib
opencv_dnn310.lib
opencv_dpm310.lib
opencv_face310.lib
opencv_features2d310.lib
opencv_flann310.lib
opencv_fuzzy310.lib
opencv_highgui310.lib
opencv_imgcodecs310.lib
opencv_imgproc310.lib
opencv_line_descriptor310.lib
opencv_ml310.lib
opencv_objdetect310.lib
opencv_optflow310.lib
opencv_photo310.lib
opencv_plot310.lib
opencv_reg310.lib
opencv_rgbd310.lib
opencv_saliency310.lib
opencv_shape310.lib
opencv_stereo310.lib
opencv_stitching310.lib
opencv_structured_light310.lib
opencv_superres310.lib
opencv_surface_matching310.lib
opencv_text310.lib
opencv_tracking310.lib
opencv_ts310.lib
opencv_video310.lib
opencv_videoio310.lib
opencv_videostab310.lib
opencv_viz310.lib
opencv_xfeatures2d310.lib
opencv_ximgproc310.lib
opencv_xobjdetect310.lib
opencv_xphoto310.lib
```

The OpenCV source tree provides a test.cpp for the dnn module.

The code is analyzed step by step below:

/* Find best class for the blob (i. e. class with maximal probability) */

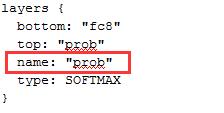

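Get the output of the prob layer, i.e. the probability that the test image belongs to each label. The blob is reshaped into a single-row matrix, and minMaxLoc returns the maximum probability and its position (no sorting is needed); the column index of that position is the class ID.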
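As a standard-library-only illustration (not the sample's code), the same argmax step can be sketched as follows. It assumes the probabilities have already been copied into a `std::vector<float>`; `max_class` is a hypothetical helper, not an OpenCV function.

```cpp
#include <algorithm>
#include <cassert>
#include <iterator>
#include <utility>
#include <vector>

// Hypothetical helper (not an OpenCV API): returns {class index, probability}
// of the largest entry, i.e. the same information minMaxLoc reports for the 1 x N "prob" row.
std::pair<int, double> max_class(const std::vector<float>& probs)
{
    assert(!probs.empty());
    // std::max_element returns the first maximum when several entries tie
    auto best = std::max_element(probs.begin(), probs.end());
    return { static_cast<int>(std::distance(probs.begin(), best)),
             static_cast<double>(*best) };
}
```

A single linear pass is enough to find the maximum, which is why sorting the probabilities is unnecessary.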

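```cpp
void getMaxClass(dnn::Blob &probBlob, int *classId, double *classProb)
{
    Mat probMat = probBlob.matRefConst().reshape(1, 1); // reshape the blob to a 1x1000 matrix (one row)
    Point classNumber;

    minMaxLoc(probMat, NULL, classProb, NULL, &classNumber); // maximum probability and its location
    *classId = classNumber.x;                               // column index = class ID
}
```

Correlation coefficient functions: a similarity measure used to judge how similar two people's feature vectors are.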
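Written out, for two feature vectors $x$ and $y$ of length $n$, the three functions that follow compute the sample mean, the sample covariance (dividing by $n-1$), and the Pearson correlation coefficient:

$$
\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i,\qquad
\operatorname{cov}(x,y) = \frac{1}{n-1}\sum_{i=1}^{n}(x_i-\bar{x})(y_i-\bar{y}),\qquad
r(x,y) = \frac{\operatorname{cov}(x,y)}{\sqrt{\operatorname{cov}(x,x)\,\operatorname{cov}(y,y)}}
$$

The $1/(n-1)$ factors cancel in $r$, so $r$ always lies in $[-1, 1]$; values close to 1 mean the two feature vectors vary together.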

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Arithmetic mean of a vector
float mean(const std::vector<float>& v)
{
    assert(v.size() != 0);
    float ret = 0.0f;
    for (std::vector<float>::size_type i = 0; i != v.size(); ++i)
    {
        ret += v[i];
    }
    return ret / v.size();
}

// Sample covariance (divides by n - 1)
float cov(const std::vector<float>& v1, const std::vector<float>& v2)
{
    assert(v1.size() == v2.size() && v1.size() > 1);
    float ret = 0.0f;
    float v1a = mean(v1), v2a = mean(v2);
    for (std::vector<float>::size_type i = 0; i != v1.size(); ++i)
    {
        ret += (v1[i] - v1a) * (v2[i] - v2a);
    }
    return ret / (v1.size() - 1);
}

// Pearson correlation coefficient, in [-1, 1]; closer to 1 means more similar
float coefficient(const std::vector<float>& v1, const std::vector<float>& v2)
{
    assert(v1.size() == v2.size());
    return cov(v1, v2) / std::sqrt(cov(v1, v1) * cov(v2, v2));
}
```

Cosine similarity function:
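For feature vectors $a$ and $b$ of length $n$, the function that follows computes the cosine of the angle between them; despite the name `cos_distance`, it is a similarity (larger means more alike), not a distance:

$$
\cos\theta = \frac{\sum_{i=1}^{n} a_i b_i}{\sqrt{\sum_{i=1}^{n} a_i^2}\,\sqrt{\sum_{i=1}^{n} b_i^2}}
$$

Pearson's correlation coefficient (the `coefficient` function above) is exactly this cosine applied to the mean-centered vectors $a-\bar{a}$ and $b-\bar{b}$, so the two measures differ only in whether the feature means are subtracted first.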

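```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Cosine similarity: 1 means same direction; larger means more similar
float cos_distance(const std::vector<float>& vecfeature1, const std::vector<float>& vecfeature2)
{
    assert(vecfeature1.size() == vecfeature2.size());
    float dot = 0, norm1 = 0, norm2 = 0;
    for (std::vector<float>::size_type i = 0; i < vecfeature1.size(); i++)
    {
        dot   += vecfeature1[i] * vecfeature2[i];
        norm1 += vecfeature1[i] * vecfeature1[i];
        norm2 += vecfeature2[i] * vecfeature2[i];
    }
    norm1 = std::sqrt(norm1);
    norm2 = std::sqrt(norm2);
    return dot / (norm1 * norm2); // not meaningful if either vector is all zeros
}
```

Here is the main function: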

```cpp
#include <opencv2/dnn.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/highgui.hpp>
#include <cstdlib>
#include <iostream>
using namespace cv;

// The following runs inside main(). modelTxt (.prototxt) and modelBin (.caffemodel)
// hold the model file paths; their definitions are not part of this excerpt.

Ptr<dnn::Importer> importer;
try // Try to import Caffe GoogleNet model
{
    importer = dnn::createCaffeImporter(modelTxt, modelBin);
}
catch (const cv::Exception &err) // Importer can throw errors, we will catch them
{
    std::cerr << err.msg << std::endl;
}
//! [Create the importer of Caffe model]

if (!importer)
{
    std::cerr << "Can't load network by using the following files: " << std::endl;
    std::cerr << "prototxt: " << modelTxt << std::endl;
    std::cerr << "caffemodel: " << modelBin << std::endl;
    std::cerr << "bvlc_googlenet.caffemodel can be downloaded here:" << std::endl;
    std::cerr << "http://dl.caffe.berkeleyvision.org/bvlc_googlenet.caffemodel" << std::endl;
    exit(-1);
}

//! [Initialize network]
dnn::Net net;
importer->populateNet(net);
importer.release(); // We don't need importer anymore
//! [Initialize network]

//! [Prepare blob]
// =============== Extract features from the training samples (modifiable) ===============
// =============== Five people, one photo each ===============
// ... (continued in the screenshot below)
```

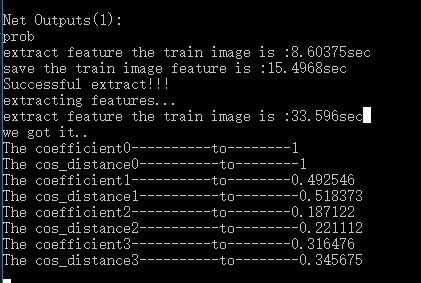

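I modified the part that fills the std::vector: it originally extracted the fc8 layer output, and I changed it to extract the 4096-dimensional fc7 features.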
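The step that compares the test image with the five enrolled people appears only in the screenshot. A minimal sketch of such a matching step, assuming one fc7 feature vector per person and choosing the person with the highest cosine similarity; `GalleryEntry` and `identify` are hypothetical names, not the author's code:

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <string>
#include <vector>

// Cosine similarity between two equal-length feature vectors (accumulated in double)
double cosine_similarity(const std::vector<float>& a, const std::vector<float>& b)
{
    assert(a.size() == b.size() && !a.empty());
    double dot = 0.0, na = 0.0, nb = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        dot += static_cast<double>(a[i]) * b[i];
        na  += static_cast<double>(a[i]) * a[i];
        nb  += static_cast<double>(b[i]) * b[i];
    }
    return dot / (std::sqrt(na) * std::sqrt(nb));
}

// Hypothetical gallery entry: one enrolled person and the feature of their photo
struct GalleryEntry {
    std::string name;
    std::vector<float> feature;
};

// Returns the index of the most similar gallery entry; optionally reports its score
std::size_t identify(const std::vector<GalleryEntry>& gallery,
                     const std::vector<float>& probe,
                     double* bestScore = nullptr)
{
    assert(!gallery.empty());
    std::size_t best = 0;
    double bestSim = cosine_similarity(gallery[0].feature, probe);
    for (std::size_t i = 1; i < gallery.size(); ++i) {
        double s = cosine_similarity(gallery[i].feature, probe);
        if (s > bestSim) { bestSim = s; best = i; }
    }
    if (bestScore) *bestScore = bestSim;
    return best;
}
```

Returning the best score makes it easy to add a rejection threshold for unknown faces; such a threshold would have to be tuned on your own data.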

The speed is truly "delightful": extracting a single feature takes 8 seconds!
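To see where such time goes, the extraction call can be timed with `std::chrono`. A minimal sketch; `time_ms` is a hypothetical helper, and in the real program the callable would wrap the network's forward pass:

```cpp
#include <cassert>
#include <chrono>
#include <utility>

// Hypothetical helper: runs a callable once and returns the elapsed wall-clock time in milliseconds.
template <typename F>
double time_ms(F&& fn)
{
    const auto start = std::chrono::steady_clock::now();  // monotonic clock, unaffected by system time changes
    std::forward<F>(fn)();
    const auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(stop - start).count();
}
```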

2: Directions for improving the program:

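1: Save the extracted features to a .dat file, so the features of the enrolled faces can be extracted in advance (the "training" step) and testing only has to process the test image.

2: The program outputs its data as a Blob, and saving it takes a relatively long time; this part could be changed.

3: Or just use Caffe for Windows instead!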
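Improvement 1 could look roughly like the sketch below: write all gallery features to a binary .dat file once, then read them back at test time. The file layout (two uint32 headers for count and dimension, followed by raw float32 values in native byte order) is an assumption made for this example, not a format used by OpenCV or Caffe:

```cpp
#include <cassert>
#include <cstdint>
#include <fstream>
#include <string>
#include <vector>

// Assumed layout: uint32 count, uint32 dim, then count * dim float32 values (native byte order).
bool save_features(const std::string& path, const std::vector<std::vector<float>>& feats)
{
    std::ofstream out(path, std::ios::binary);
    if (!out) return false;
    const std::uint32_t count = static_cast<std::uint32_t>(feats.size());
    const std::uint32_t dim = count ? static_cast<std::uint32_t>(feats[0].size()) : 0;
    out.write(reinterpret_cast<const char*>(&count), sizeof count);
    out.write(reinterpret_cast<const char*>(&dim), sizeof dim);
    for (const auto& f : feats) {
        if (f.size() != dim) return false;  // all vectors must share one dimension
        out.write(reinterpret_cast<const char*>(f.data()),
                  static_cast<std::streamsize>(dim * sizeof(float)));
    }
    out.close();
    return !out.fail();
}

// Reads the file written by save_features; returns false on any I/O error.
bool load_features(const std::string& path, std::vector<std::vector<float>>& feats)
{
    std::ifstream in(path, std::ios::binary);
    if (!in) return false;
    std::uint32_t count = 0, dim = 0;
    in.read(reinterpret_cast<char*>(&count), sizeof count);
    in.read(reinterpret_cast<char*>(&dim), sizeof dim);
    if (!in) return false;
    feats.assign(count, std::vector<float>(dim));
    for (auto& f : feats)
        in.read(reinterpret_cast<char*>(f.data()),
                static_cast<std::streamsize>(dim * sizeof(float)));
    return static_cast<bool>(in);
}
```

A raw binary layout avoids text formatting and parsing, so loading a few thousand floats per person is essentially one read per vector.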

Some reference links:

http://blog.csdn.net/mr_curry/article/details/52183263

http://docs.opencv.org/trunk/d5/de7/tutorial_dnn_googlenet.html

http://docs.opencv.org/trunk/de/d25/tutorial_dnn_build.html