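III. Big Data Practice: Building New Feature Indicators and a Risk-Control Model

I. Establishing a new credit-indicator evaluation system

II. Computing the new indicator values

1. Example: the new indicator "annual total spending"

annual total spending = transCnt_mean * transAmt_mean

The result is then added to data as a new column so that it can be used as a new feature.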

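# Compute each customer's annual total spending.

trans_total = data['transCnt_mean']*data['transAmt_mean']

# Round the result to six decimal places.

trans_total = round(trans_total,6)

# Append the result to data as the last column, named trans_total.

data['trans_total'] = trans_total

print(data['trans_total'].head(20))

The other new indicators are computed in the same way.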
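Since the same multiply, round, and append pattern repeats for each indicator, it can be wrapped in a small helper. The following is only a sketch: the function name `add_product_feature` and the toy values are made up for illustration, and only the `trans_total` formula comes from the text above.

```python
import pandas as pd

def add_product_feature(df, left, right, name, ndigits=6):
    """Append df[left] * df[right], rounded to ndigits, as column `name`."""
    df[name] = (df[left] * df[right]).round(ndigits)
    return df

# Toy stand-in for `data`; the numbers are invented for this example.
toy = pd.DataFrame({
    "transCnt_mean": [2.5, 0.0, 10.0],
    "transAmt_mean": [100.0, 50.0, 33.333333],
})

toy = add_product_feature(toy, "transCnt_mean", "transAmt_mean", "trans_total")
print(toy)
```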

三、构建风控模型

1、构建风控模型流程

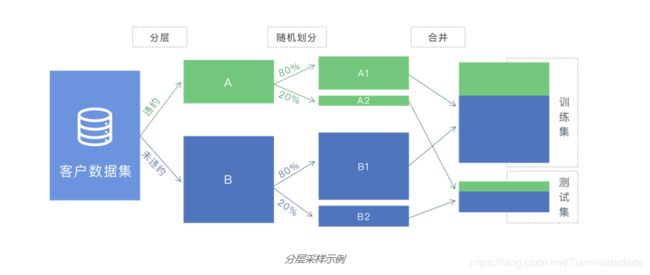

2、分层采样

由于目标标签属于非平衡数据,数据集中违约客户远少于未违约的客户,为了更客观构建风控模型和评估效果,应该尽量使训练集和测试集中违约客户的比例一致,因此需要采用分层采样方法来划分训练集与测试集。

划分过程: 分层、随即划分、合并

可见,经过分层采样后,训练集与测试集中违约样本比例会保持一致。
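The three steps can be written out by hand to see why the proportions end up equal. This is a minimal NumPy sketch on made-up labels, not the routine that scikit-learn runs internally; `stratified_split` is a hypothetical helper name.

```python
import numpy as np

def stratified_split(y, test_size=0.2, seed=33):
    """Return (train_idx, test_idx) with per-class proportions preserved."""
    rng = np.random.default_rng(seed)
    train_parts, test_parts = [], []
    for label in np.unique(y):                 # 1. stratify by class
        idx = np.flatnonzero(y == label)
        rng.shuffle(idx)                       # 2. random split within the class
        n_test = int(round(len(idx) * test_size))
        test_parts.append(idx[:n_test])
        train_parts.append(idx[n_test:])
    # 3. merge the per-class pieces
    return np.concatenate(train_parts), np.concatenate(test_parts)

# Made-up labels: 10% defaulters (1), 90% non-defaulters (0).
y_toy = np.array([1] * 100 + [0] * 900)
train_idx, test_idx = stratified_split(y_toy)
print(y_toy[train_idx].mean(), y_toy[test_idx].mean())
```

In the tutorial code the same effect is obtained by passing stratify=y to train_test_split.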

from sklearn.model_selection import train_test_split

# Take the values of the Default column of data as the label y

y = data['Default'].values

# Take the values of all columns except Default as the features x

x = data.drop(['Default'], axis=1).values

# Split x and y into training and test sets with train_test_split

x_train, x_test, y_train, y_test = train_test_split(x,y,test_size=0.2,random_state=33,stratify=y)

# Check the lengths of x_train and x_test after the split

len_x_train = len(x_train)

len_x_test = len(x_test)

print('x_train length: %d, x_test length: %d'%(len_x_train,len_x_test))

# Proportion of defaulting customers in the training set after stratified sampling

train_ratio = y_train.sum()/len(y_train)

print(train_ratio)

# Proportion of defaulting customers in the test set after stratified sampling

test_ratio = y_test.sum()/len(y_test)

print(test_ratio)

3. Building the risk-assessment model with LogisticRegression

from sklearn.linear_model import LogisticRegression

# Create a new model object

lr = LogisticRegression()

# Train on the training set x_train, y_train

lr.fit(x_train,y_train)

# Call predict on the trained lr model to predict on the test set x_test

y_predict = lr.predict(x_test)

# Inspect the predictions

print(y_predict[:10])

print(len(y_predict))

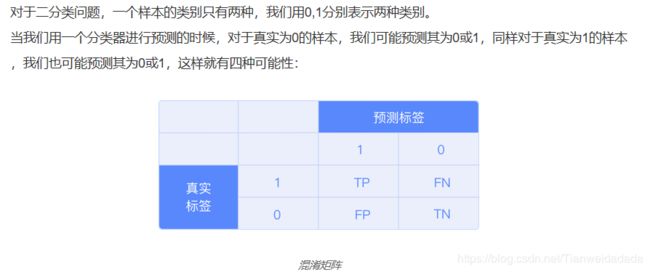

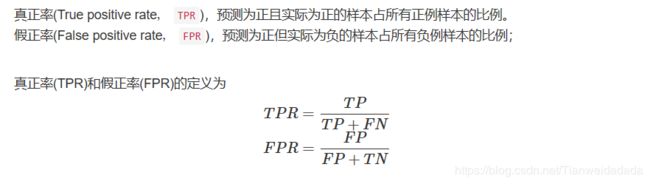
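predict returns hard 0/1 labels. For a binary logistic regression these correspond to thresholding the positive-class probability at 0.5, which the sketch below checks on synthetic data (the dataset and the names `X_toy`, `y_toy`, `clf` are made up for this example and are not the credit data).

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic binary classification data, for illustration only.
X_toy, y_toy = make_classification(n_samples=500, n_features=8, random_state=0)
clf = LogisticRegression(max_iter=1000).fit(X_toy, y_toy)

# Probability of the positive class (column 1 of predict_proba).
proba_pos = clf.predict_proba(X_toy)[:, 1]

# Hard labels rebuilt by thresholding at 0.5, compared with predict().
labels_from_proba = (proba_pos > 0.5).astype(int)
labels = clf.predict(X_toy)
print("agreement:", np.mean(labels == labels_from_proba))
```

In a risk-control setting the probabilities are often more useful than the hard labels, because the cut-off can then be chosen to suit the cost of missing a defaulter.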

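4. Evaluating the LogisticRegression risk-control model

Once the model is built, its performance needs to be evaluated. Here we use the AUC value as the evaluation metric.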
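AUC can be read as the probability that a randomly chosen defaulter receives a higher score than a randomly chosen non-defaulter, with ties counted as one half. A small sketch of that pairwise definition, checked against roc_auc_score on made-up scores (`pairwise_auc` is a hypothetical helper, not part of scikit-learn):

```python
import numpy as np
from sklearn.metrics import roc_auc_score

def pairwise_auc(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = scores[y_true == 1]
    neg = scores[y_true == 0]
    diff = pos[:, None] - neg[None, :]          # every positive vs every negative
    wins = (diff > 0).sum() + 0.5 * (diff == 0).sum()
    return wins / (len(pos) * len(neg))

# Made-up labels and scores, for illustration only.
y_toy = np.array([0, 0, 1, 1, 0, 1])
s_toy = np.array([0.1, 0.4, 0.35, 0.8, 0.2, 0.7])

print(pairwise_auc(y_toy, s_toy), roc_auc_score(y_toy, s_toy))
```

Because AUC depends only on the ranking of the scores, it is less affected by class imbalance than plain accuracy.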

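from sklearn.metrics import roc_auc_score

'''

predict_proba returns an n*k array;

entry (i, j) is the predicted probability that sample i belongs to class j

'''

y_predict_proba = lr.predict_proba(x_test)

# Inspect the first ten rows of the probability estimates

print(y_predict_proba[:10])

# Use the probability of the positive class (1) as the score

y_predict = y_predict_proba[:,1]

# Evaluate the model with roc_auc_score

test_auc = roc_auc_score(y_test,y_predict)

print('Logistic regression test_auc:',test_auc)

5. LogisticRegression parameter optimization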
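Three parameters are adjusted in this step: C is the inverse of the regularization strength, class_weight='balanced' reweights the classes inversely to their frequency, and penalty='l1' selects L1 regularization, which can drive some coefficients exactly to zero. The sketch below illustrates this on synthetic, imbalanced data (not the credit dataset; all names are made up). It passes solver='liblinear' explicitly because the default lbfgs solver in current scikit-learn versions does not support the L1 penalty.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic imbalanced data: about 10% positives, 20 features, few informative.
X_toy, y_toy = make_classification(
    n_samples=1000, n_features=20, n_informative=4,
    weights=[0.9, 0.1], random_state=0,
)

zero_counts = {}
for C in (0.01, 0.6):
    clf = LogisticRegression(
        penalty='l1', C=C, class_weight='balanced', solver='liblinear'
    )
    clf.fit(X_toy, y_toy)
    # Count coefficients that L1 regularization set exactly to zero.
    zero_counts[C] = int(np.sum(clf.coef_ == 0))

print(zero_counts)
```

A smaller C means stronger regularization, so more coefficients are typically zeroed out.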

from sklearn.metrics import roc_auc_score

from sklearn.linear_model import LogisticRegression

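# Create a LogisticRegression object named lr

'''

Use L1 regularization.

solver='liblinear' is set explicitly because the default solver (lbfgs)
in current scikit-learn versions does not support penalty='l1'.

'''

lr = LogisticRegression(C=0.6,class_weight='balanced',penalty='l1',solver='liblinear')

# Call fit on lr to train on the training set x_train, y_train

lr.fit(x_train,y_train)

# Call predict_proba on the trained lr model

y_predict = lr.predict_proba(x_test)[:,1]

# Call roc_auc_score

test_auc = roc_auc_score(y_test,y_predict)

print('Logistic regression test auc:')

print(test_auc)

6. Standardizing the data to improve the logistic regression model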

continuous_columns = ['age','cashTotalAmt','cashTotalCnt','monthCardLargeAmt','onlineTransAmt','onlineTransCnt','publicPayAmt','publicPayCnt','transTotalAmt','transTotalCnt','transCnt_non_null_months','transAmt_mean','transAmt_non_null_months','cashCnt_mean','cashCnt_non_null_months','cashAmt_mean','cashAmt_non_null_months','card_age', 'trans_total','total_withdraw', 'avg_per_withdraw','avg_per_online_spend', 'avg_per_public_spend', 'bad_record']

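# Apply Z-score standardization to every continuous column in data (important)

data[continuous_columns]=data[continuous_columns].apply(lambda x:(x-x.mean())/x.std())

# Check the mean and standard deviation after standardization, using cashAmt_mean as an example

print('cashAmt_mean mean after standardization:',data['cashAmt_mean'].mean())

print('cashAmt_mean standard deviation after standardization:',data['cashAmt_mean'].std())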
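One caveat: the mean and standard deviation above are computed on the full dataset before the split, so test rows influence the scaling. A common variant, sketched here on synthetic data with scikit-learn's StandardScaler (which the tutorial itself does not use), fits the scaler on the training rows only and reuses those statistics for the test rows. All variable names are made up for this example.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Synthetic features and about 10% positive labels, for illustration only.
rng = np.random.default_rng(0)
X_toy = rng.normal(loc=50.0, scale=10.0, size=(1000, 3))
y_toy = (rng.random(1000) < 0.1).astype(int)

X_tr, X_te, y_tr, y_te = train_test_split(
    X_toy, y_toy, test_size=0.2, random_state=33, stratify=y_toy
)

# Learn mean and std from the training rows only, then apply to both sets.
scaler = StandardScaler().fit(X_tr)
X_tr_std = scaler.transform(X_tr)
X_te_std = scaler.transform(X_te)

print(X_tr_std.mean(axis=0), X_tr_std.std(axis=0))
```

StandardScaler divides by the population standard deviation (ddof=0), whereas pandas' .std() defaults to the sample standard deviation (ddof=1), so the two approaches differ very slightly in scale.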

# 查看标准化后对模型的效果提升

y = data['Default'].values

x = data.drop(['Default'], axis=1).values

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.2,random_state = 33,stratify=y)

from sklearn.metrics import roc_auc_score

from sklearn.linear_model import LogisticRegression

lr = LogisticRegression(penalty='l1',C=0.6,class_weight='balanced')

lr.fit(x_train, y_train)

# 查看模型预测结果

y_predict = lr.predict_proba(x_test)[:,1]

auc_score =roc_auc_score(y_test,y_predict)

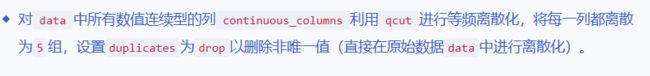

print('score:',auc_score)7、使用(对连续型特征数值)离散化提升LogisticRegression回归模型效果

# The newly derived features are included here and discretized together with the original features

continuous_columns = ['age','cashTotalAmt','cashTotalCnt','monthCardLargeAmt','onlineTransAmt','onlineTransCnt','publicPayAmt','publicPayCnt','transTotalAmt','transTotalCnt','transCnt_non_null_months','transAmt_mean','transAmt_non_null_months','cashCnt_mean','cashCnt_non_null_months','cashAmt_mean','cashAmt_non_null_months','card_age', 'trans_total', 'total_withdraw', 'avg_per_withdraw','avg_per_online_spend', 'avg_per_public_spend', 'bad_record']

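# Apply equal-frequency binning to the continuous columns of data, splitting each column into 5 groups
# (duplicates='drop' merges identical bin edges, so a column may end up with fewer than 5 groups).

data[continuous_columns] = data[continuous_columns].apply(lambda x : pd.qcut(x,5,duplicates='drop'))

# Inspect the discretized data

print(data.head())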
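To make the behaviour of pd.qcut concrete, here is a small sketch on made-up values (the series `ages` and `sparse` are invented for illustration). It shows equal-frequency bins, the effect of duplicates='drop' on a column dominated by one value, and the one-hot encoding applied in the next step.

```python
import pandas as pd

# Ten distinct made-up ages: five equal-frequency bins hold two values each.
ages = pd.Series([18, 22, 25, 31, 38, 44, 52, 60, 67, 75], name="age")
age_bins = pd.qcut(ages, 5, duplicates="drop")
print(age_bins.value_counts(sort=False))

# A column dominated by zeros: several quantile edges coincide, so
# duplicates="drop" leaves fewer than five bins instead of raising an error.
sparse = pd.Series([0] * 8 + [1, 2])
sparse_bins = pd.qcut(sparse, 5, duplicates="drop")
n_sparse_bins = sparse_bins.cat.categories.size
print(n_sparse_bins)

# One-hot encode the interval categories, one indicator column per bin.
one_hot = pd.get_dummies(age_bins, prefix="age")
print(one_hot.shape)
```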

# Check how discretization affects the model

# First one-hot encode the discretized groups

data=pd.get_dummies(data)

#print(data.columns)

y = data['Default'].values

# Take all columns except the label

x = data.drop(['Default'], axis=1).values

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.2,random_state = 33,stratify=y)

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import roc_auc_score

# solver='liblinear' is required for penalty='l1' (the default lbfgs solver does not support it)

lr = LogisticRegression(penalty='l1',C=0.6,class_weight='balanced',solver='liblinear')

lr.fit(x_train, y_train)

# Inspect the model's predictions

y_predict = lr.predict_proba(x_test)[:,1]

score_auc = roc_auc_score(y_test,y_predict)

print('score:',score_auc)

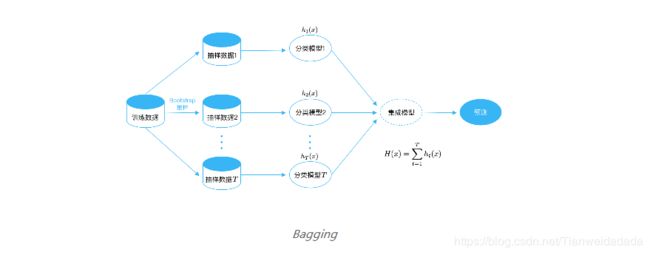

8. Building the risk-assessment model with a random forest

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import roc_auc_score

rf_clf = RandomForestClassifier()

rf_clf.fit(x_train,y_train)

y_predict = rf_clf.predict_proba(x_test)[:,1]

# Evaluate the model

test_auc = roc_auc_score(y_test,y_predict)

print ("AUC Score (Test): %f" % test_auc)

9. Random forest parameter tuning

n_estimators is the number of decision trees in the random forest.
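As a small illustration on synthetic data (not the credit dataset; all names are made up), the fitted trees are available through the estimators_ attribute, and the forest's predicted probabilities are the average of the individual trees' probabilities:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic data, for illustration only.
X_toy, y_toy = make_classification(n_samples=300, n_features=10, random_state=0)

forest = RandomForestClassifier(n_estimators=25, random_state=0).fit(X_toy, y_toy)

# One fitted decision tree per unit of n_estimators.
print(len(forest.estimators_))

# Average the per-tree class probabilities and compare with the forest's output.
tree_avg = np.mean([tree.predict_proba(X_toy) for tree in forest.estimators_], axis=0)
print(np.allclose(tree_avg, forest.predict_proba(X_toy)))
```

More trees make this average more stable, at the cost of longer training and prediction time.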

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import roc_auc_score

# Try setting the n_estimators parameter

rf_clf1 = RandomForestClassifier(n_estimators=100)

rf_clf1.fit(x_train, y_train)

y_predict1 = rf_clf1.predict_proba(x_test)[:,1]

# Evaluate the model

test_auc = roc_auc_score(y_test,y_predict1)

print ("AUC Score (Test): %f" % test_auc)

10. Random forest parameter tuning

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import roc_auc_score

import matplotlib.pyplot as plt

# Lists for storing the AUC scores

scores_train=[]

scores_test=[]

# List for storing the n_estimators values that were tried

estimators=[]

# Step n_estimators from 100 to 200 in increments of 20

for i in range(100,210,20):

    estimators.append(i)

    rf = RandomForestClassifier(n_estimators=i, random_state=12)

    rf.fit(x_train,y_train)

    y_predict = rf.predict_proba(x_test)[:,1]

    scores_test.append(roc_auc_score(y_test,y_predict))

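# Show the n_estimators values that were used

print("estimators =", estimators)

# Show the best test-set score among the models above

print("best_scores_test =",max(scores_test))

# Font settings, needed only if the labels contain Chinese characters (SimHei must be installed);
# set before the labels are created

plt.rcParams['font.sans-serif']=['SimHei']

plt.rcParams['font.family']=['sans-serif']

# Plot AUC against n_estimators

fig,ax = plt.subplots()

# Set the x and y axis labels

ax.set_xlabel('estimators')

ax.set_ylabel('AUC score')

plt.plot(estimators,scores_test, label='test set')

# Add the legend

plt.legend(loc="lower right")

plt.show()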

We tried n_estimators values from 100 to 200 in steps of 20. The score is best at n_estimators = 180, a slight improvement over the previous result.

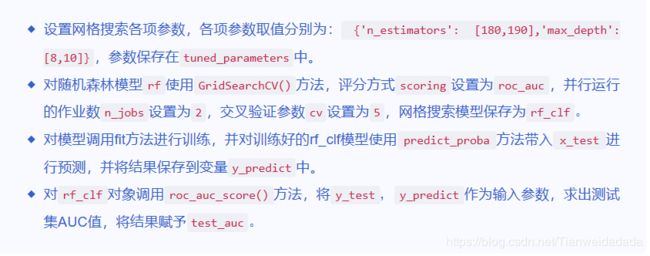
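Choosing n_estimators by its test-set score, as in the loop above, tends to give a slightly optimistic estimate, because the test set is then used for both selection and evaluation. One alternative, sketched here on synthetic data with cross_val_score (the dataset, the candidate values, and the variable names are assumptions made for this example), selects the value by cross-validated AUC on the training data only.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

# Synthetic imbalanced data standing in for the training set.
X_toy, y_toy = make_classification(
    n_samples=600, n_features=12, weights=[0.85, 0.15], random_state=0
)

cv_auc = {}
for n in (20, 60, 100):
    rf = RandomForestClassifier(n_estimators=n, random_state=12)
    # Mean AUC over 5 folds for this candidate.
    cv_auc[n] = cross_val_score(rf, X_toy, y_toy, cv=5, scoring='roc_auc').mean()

best_n = max(cv_auc, key=cv_auc.get)
print(cv_auc)
print("best n_estimators:", best_n)
```

The test set can then be kept for a single final evaluation of the selected model.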

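11. Optimizing random forest parameters with grid search (GridSearchCV)

GridSearchCV scores every parameter combination by cross-validation on the training set (cv=5 below).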

from sklearn.model_selection import GridSearchCV

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import roc_auc_score

rf = RandomForestClassifier()

# 设置需要调试的参数

tuned_parameters = {

'n_estimators':[180,190],

'criterion':['entropy','gini'],

'max_depth':[8,10],

'min_samples_split':[2,3]

}

# 调用网格搜索函数

rf_clf = GridSearchCV(rf,tuned_parameters,cv=5,n_jobs=12,scoring='roc_auc')

rf_clf.fit(x_train, y_train)

y_predict = rf_clf.predict_proba(x_test)[:,1]

test_auc = roc_auc_score(y_test,y_predict)

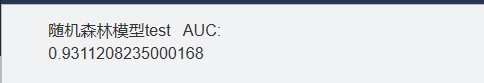

print ('随机森林模型test AUC:')

print (test_auc)
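After fitting, a GridSearchCV object also records which combination won and how it scored during cross-validation. A minimal sketch on synthetic data with a deliberately small grid (the data, grid values, and names are made up for this example):

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Synthetic data, for illustration only.
X_toy, y_toy = make_classification(n_samples=400, n_features=10, random_state=0)

param_grid = {'max_depth': [3, 6], 'min_samples_split': [2, 4]}
grid = GridSearchCV(
    RandomForestClassifier(n_estimators=30, random_state=0),
    param_grid, cv=3, scoring='roc_auc',
)
grid.fit(X_toy, y_toy)

# Best combination and its mean cross-validated AUC.
print(grid.best_params_)
print(grid.best_score_)

# Number of combinations evaluated: 2 * 2 = 4.
print(len(grid.cv_results_['params']))
```

With the default refit=True, the best combination is refitted on all the data passed to fit, which is why predict_proba can be called directly on the search object.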