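Hands-on: Building a DevOps Platform on Kubernetes

Network, host, and related planning

Plan the cluster network

Plan the host and cluster architecture (e.g., spreading nodes across multiple data centers for disaster recovery)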

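Host system initialization

Hostname

Package repositories

Disable swap

Disable the firewall

Disable SELinux

Configure Chrony

Install dependency packages

Load the required kernel modules

Kernel tuning

Configure the data disk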
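The article does not reproduce the playbooks. As a rough sketch only, a few of the initialization steps above could be written as Ansible tasks, for example in a hypothetical `roles/init/tasks/main.yml`. The sketch assumes:

- a systemd host running firewalld;
- the `ansible.posix` and `community.general` collections are installed;
- kube-proxy runs in IPVS mode alongside Calico IPIP.

The module list and sysctl values are illustrative, not the author's.

```yaml
# Hypothetical init tasks (illustrative sketch, not the author's playbook).
- name: Turn off swap for the running system
  ansible.builtin.command: swapoff -a
  when: ansible_swaptotal_mb > 0

- name: Comment out swap entries in /etc/fstab so swap stays off after reboot
  ansible.builtin.replace:
    path: /etc/fstab
    regexp: '^([^#].*\sswap\s.*)$'
    replace: '# \1'

- name: Stop and disable firewalld
  ansible.builtin.systemd:
    name: firewalld
    state: stopped
    enabled: false

- name: Disable SELinux (fully effective after a reboot)
  ansible.posix.selinux:
    state: disabled

# Loads the modules now; persisting them across reboots
# (e.g. via /etc/modules-load.d) is not shown here.
- name: Load kernel modules assumed for IPVS kube-proxy and Calico IPIP
  community.general.modprobe:
    name: "{{ item }}"
    state: present
  loop:
    - br_netfilter
    - ip_vs
    - ipip

- name: Kernel parameters commonly set for Kubernetes nodes (assumed values)
  ansible.posix.sysctl:
    name: "{{ item.name }}"
    value: "{{ item.value }}"
    sysctl_file: /etc/sysctl.d/k8s.conf
    state: present
    reload: true
  loop:
    - { name: net.bridge.bridge-nf-call-iptables, value: "1" }
    - { name: net.bridge.bridge-nf-call-ip6tables, value: "1" }
    - { name: net.ipv4.ip_forward, value: "1" }
    - { name: vm.swappiness, value: "0" }
```

The module-loading task runs before the sysctl task on purpose. The `net.bridge.*` keys only exist once `br_netfilter` is loaded.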

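Issue the cluster certificates

Issue the CA certificate

Issue the etcd certificate

Issue the admin certificate

Issue the apiserver certificate

Issue the metrics-server certificate

Issue the controller-manager certificate

Issue the scheduler certificate

Issue the kube-proxy certificate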

Deploy a highly available etcd cluster

Deploy the etcd cluster

Back up etcd data
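Below is a minimal sketch of one member's etcd configuration file. It uses the three etcd endpoints that the backup job later in this article points at (172.16.70.131, .132 and .133). The member names, data directory, and certificate paths are assumptions. etcd reads this file when started with `--config-file`.

```yaml
# Hypothetical /etc/etcd/etcd.conf.yml for the first member (172.16.70.131).
# Start with: etcd --config-file=/etc/etcd/etcd.conf.yml
name: etcd-1                                   # assumed member name
data-dir: /data/etcd                           # assumed path on the data disk
listen-peer-urls: https://172.16.70.131:2380
listen-client-urls: https://172.16.70.131:2379
initial-advertise-peer-urls: https://172.16.70.131:2380
advertise-client-urls: https://172.16.70.131:2379
initial-cluster: etcd-1=https://172.16.70.131:2380,etcd-2=https://172.16.70.132:2380,etcd-3=https://172.16.70.133:2380
initial-cluster-token: etcd-cluster
initial-cluster-state: new
client-transport-security:                     # TLS for clients (apiserver, etcdctl)
  cert-file: /etc/etcd/ssl/etcd.pem
  key-file: /etc/etcd/ssl/etcd.key
  trusted-ca-file: /etc/etcd/ssl/ca.pem
  client-cert-auth: true                       # reject clients without a CA-signed cert
peer-transport-security:                       # TLS between members
  cert-file: /etc/etcd/ssl/etcd.pem
  key-file: /etc/etcd/ssl/etcd.key
  trusted-ca-file: /etc/etcd/ssl/ca.pem
  client-cert-auth: true
```

Members 2 and 3 differ only in `name` and their own IP in the `listen-*` and `*-advertise-*` URLs. The etcd certificate's hosts list must include every member IP.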

High availability for kube-apiserver

HAProxy + Keepalived

Deploy the master nodes

Generate the kubectl kubeconfig and configure kubectl

Install and configure kube-apiserver

Install and configure kube-controller-manager

Install and configure kube-scheduler
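For reference, the kubectl kubeconfig is an ordinary YAML file. The sketch below assumes the admin certificate issued earlier. It points at a hypothetical HAProxy/Keepalived VIP, `172.16.70.100:8443`; the address, port, and file paths are placeholders.

```yaml
# Hypothetical ~/.kube/config for the admin user.
apiVersion: v1
kind: Config
clusters:
- name: kubernetes
  cluster:
    certificate-authority: /etc/kubernetes/ssl/ca.pem
    server: https://172.16.70.100:8443     # assumed VIP fronting all apiservers
users:
- name: admin
  user:
    client-certificate: /etc/kubernetes/ssl/admin.pem
    client-key: /etc/kubernetes/ssl/admin.key
contexts:
- name: admin@kubernetes
  context:
    cluster: kubernetes
    user: admin
current-context: admin@kubernetes
```

The same file can be generated with the following commands:

- `kubectl config set-cluster`
- `kubectl config set-credentials`
- `kubectl config set-context`
- `kubectl config use-context`

Passing `--embed-certs=true` inlines the certificates as `certificate-authority-data`, `client-certificate-data` and `client-key-data` instead of referencing file paths.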

Deploy the worker (Node) nodes

Install and configure Docker

Install and configure kubelet

Install and configure kube-proxy
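kube-proxy can read its settings from a `KubeProxyConfiguration` file instead of flags. This minimal sketch assumes:

- IPVS mode;
- a kube-proxy kubeconfig built from the kube-proxy certificate issued earlier;
- a pod CIDR of `10.244.0.0/16`.

All paths, the CIDR, and the node name are assumptions.

```yaml
# Hypothetical /etc/kubernetes/kube-proxy.yaml
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
bindAddress: 0.0.0.0
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig   # uses the kube-proxy certificate
clusterCIDR: 10.244.0.0/16    # assumed pod CIDR; must match the Calico IP pool
hostnameOverride: node-1      # hypothetical; must match the kubelet's node name
mode: ipvs                    # requires the ip_vs kernel modules
ipvs:
  scheduler: rr               # round-robin
```

Start it with `kube-proxy --config=/etc/kubernetes/kube-proxy.yaml`. If IPVS is not wanted, `mode: iptables` works as well.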

Deploy Calico

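Disable the insecure port and turn off anonymous requests with --anonymous-auth=false. All communication is authenticated with certificates to prevent requests from being tampered with, and access is controlled by authentication and authorization policies (x509, token, RBAC).

Enable bootstrap token authentication and create tokens dynamically instead of configuring them statically in the apiserver.

Use the TLS bootstrap mechanism to generate the kubelet client and server certificates automatically and rotate them automatically before they expire. (Some deployments sign kubelet.kubeconfig with the admin certificate, which makes the cluster very insecure.)

List the specific node IPs in the etcd and master certificates (their hosts/SAN list) to restrict access. Even if one node's certificate leaks, it cannot be used on other machines.

Networking uses Calico in IPIP mode.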
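With Calico, IPIP is a property of the IP pool. Below is a minimal sketch of a pool with IPIP always on. It assumes `calicoctl` is configured against the cluster and uses an assumed pod CIDR of `10.244.0.0/16`, which should match kube-proxy's `clusterCIDR`.

```yaml
# Hypothetical ippool.yaml, applied with: calicoctl apply -f ippool.yaml
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: default-ipv4-ippool
spec:
  cidr: 10.244.0.0/16     # assumed pod CIDR
  ipipMode: Always        # encapsulate all cross-node pod traffic in IPIP
  natOutgoing: true       # SNAT pod traffic leaving the pool
  nodeSelector: all()     # pool usable on every node
```

When Calico is installed from the standard `calico.yaml` manifest, the default pool is typically created from two environment variables of the `calico-node` DaemonSet: `CALICO_IPV4POOL_CIDR` and `CALICO_IPV4POOL_IPIP`. Setting `ipipMode: CrossSubnet` limits encapsulation to traffic that crosses a subnet boundary.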

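Submit a machine request ticket

Select the purpose: a new Kubernetes cluster or a scale-out

Select the corresponding role

Once the ticket is approved, the machines are provisioned automatically

Run the Ansible playbook to initialize the system

Run the Ansible playbook to issue the certificates

Run the Ansible playbook to deploy the etcd cluster

Run the Ansible playbook to add the nodes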

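Deploy the whole cluster in one run:

```bash
ansible-playbook k8s.yml -i inventory
```

Or run the same playbook stage by stage, selecting steps with tags (`-t`) and hosts with limits (`-l`):

```bash
ansible-playbook k8s.yml -i inventory -t init -l master
ansible-playbook k8s.yml -i inventory -t cert,install_master
ansible-playbook k8s.yml -i inventory -t init -l node
ansible-playbook k8s.yml -i inventory -t cert,install_node
```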
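The `-l master` and `-l node` limits imply an inventory with `master` and `node` groups. Below is a hypothetical YAML inventory, assuming `inventory` is a directory holding this file as `inventory/hosts.yml`. Only the three master/etcd addresses come from this article; the `etcd` group, the node IPs, and `ansible_user` are assumptions.

```yaml
# Hypothetical inventory/hosts.yml
all:
  children:
    etcd:                  # assumed group for the etcd play
      hosts:
        172.16.70.131:
        172.16.70.132:
        172.16.70.133:
    master:                # matched by -l master
      hosts:
        172.16.70.131:
        172.16.70.132:
        172.16.70.133:
    node:                  # matched by -l node; scale-out adds hosts here
      hosts:
        172.16.70.141:     # hypothetical worker IPs
        172.16.70.142:
  vars:
    ansible_user: root     # assumption
```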

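nodeclient: the CSR a kubelet submits for its first client certificate, with O=system:nodes and CN=system:node:(node name)

selfnodeclient: the CSR a kubelet submits to renew its own client certificate (with the same O and CN as the previous certificate)

selfnodeserver: the CSR a kubelet submits to renew its own serving certificate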
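The Kubernetes kubelet TLS bootstrapping documentation binds these CSR types to built-in ClusterRoles so that kube-controller-manager approves them automatically. This sketch assumes bootstrap tokens authenticate in the `system:bootstrappers` group and that kubelets holding issued certificates are in `system:nodes`. The binding names are arbitrary.

There is no built-in ClusterRole for `selfnodeserver`, so the sketch defines a hypothetical custom one. Whether the controller auto-approves serving-certificate CSRs depends on the Kubernetes version. Recent versions do not, and those CSRs are approved with `kubectl certificate approve`.

```yaml
# Allow bootstrap-token users to create CSRs.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubelet-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node-bootstrapper
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:bootstrappers
---
# nodeclient: auto-approve the first client certificate.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: auto-approve-csrs-for-group
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:bootstrappers
---
# selfnodeclient: auto-approve client certificate renewals.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: auto-approve-renewals-for-nodes
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:nodes
---
# selfnodeserver: hypothetical custom role (no built-in equivalent).
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: approve-node-server-renewal-csr
rules:
- apiGroups: ["certificates.k8s.io"]
  resources: ["certificatesigningrequests/selfnodeserver"]
  verbs: ["create"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: auto-approve-server-renewals-for-nodes
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: approve-node-server-renewal-csr
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:nodes
```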

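Configure the apiserver to use BootstrapTokenSecret (bootstrap tokens stored as Secrets) instead of the old token.csv file

Create two sets of ClusterRoles in the cluster, one for the initial TLS bootstrap certificate request and one for later kubelet client/server certificate renewals. Create the matching ClusterRoleBindings and bind them to the appropriate groups or users

Configure controller-manager so that it signs these certificates automatically and cleans up expired TLS bootstrapping tokens

Generate a bootstrap.kubeconfig containing the TLS bootstrapping token for the kubelet to use at startup

Configure the kubelet to load bootstrap.kubeconfig on first start and use the TLS bootstrapping token in it to request its first certificate

controller-manager approves and signs the certificate; the kubelet then writes kubelet.kubeconfig and reloads it automatically, completing the bootstrap

From then on, the kubelet renews its certificates automatically

Delete the BootstrapTokenSecret as soon as the cluster is built, or let controller-manager delete it once it expires, so that it cannot be abused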
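A bootstrap token is stored as a Secret of type `bootstrap.kubernetes.io/token` in `kube-system`. The sketch uses a hypothetical token `abcdef.0123456789abcdef` and a hypothetical expiry.

Two component settings are needed:

- The apiserver must be started with `--enable-bootstrap-token-auth=true`.
- The expired-token cleanup mentioned above is done by controller-manager's `tokencleaner` controller. It is off by default and is enabled with `--controllers=*,bootstrapsigner,tokencleaner`.

```yaml
# Hypothetical bootstrap token Secret; token = "<token-id>.<token-secret>".
apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-abcdef          # must be bootstrap-token-<token-id>
  namespace: kube-system
type: bootstrap.kubernetes.io/token
stringData:
  description: "TLS bootstrap token for new nodes"
  token-id: abcdef                      # 6 characters, [a-z0-9]
  token-secret: 0123456789abcdef        # 16 characters, [a-z0-9]
  expiration: "2030-01-01T00:00:00Z"    # hypothetical; keep it short-lived
  usage-bootstrap-authentication: "true"
  auth-extra-groups: system:bootstrappers:worker   # must start with system:bootstrappers:
```

In bootstrap.kubeconfig, the user entry carries the token. For example: `kubectl config set-credentials kubelet-bootstrap --token=abcdef.0123456789abcdef --kubeconfig=bootstrap.kubeconfig`. The kubelet then authenticates as `system:bootstrap:abcdef` in the `system:bootstrappers` group.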


apiVersion: batch/v1beta1

kind: CronJob

metadata:

name: etcd-backup

spec:

schedule: "10 1 * * *"

concurrencyPolicy: Allow

failedJobsHistoryLimit: 3

successfulJobsHistoryLimit: 3

jobTemplate:

spec:

template:

spec:

containers:

- name: etcd-backup

imagePullPolicy: IfNotPresent

image: k8sre/etcd:v3.3.15

env:

- name: ENDPOINTS

value: "https://172.16.70.131:2379,https://172.16.70.132:2379,https://172.16.70.133:2379"

command:

- /bin/bash

- -c

- |

set -ex

ETCDCTL_API=3 /opt/etcd/etcdctl \

--endpoints=$ENDPOINTS \

--cacert=/certs/ca.pem \

--cert=/certs/etcd.pem \

--key=/certs/etcd.key \

snapshot save /data/backup/snapshot-$(date +"%Y%m%d").db

volumeMounts:

- name: etcd-backup

mountPath: /data/backup

- name: etcd-certs

mountPath: /certs

restartPolicy: OnFailure

volumes:

- name: etcd-backup

persistentVolumeClaim:

claimName: etcd-backup

- name: etcd-certs

secret:

secretName: etcd-certsapiVersion: v1

kind: ServiceAccount

metadata:

name: jenkins-master

namespace: jenkins

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: jenkins-master

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: jenkins-master

namespace: jenkins

---

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: jenkins-master

namespace: jenkins

spec:

serviceName: "jenkins-master"

replicas: 1

selector:

matchLabels:

app: jenkins-master

template:

metadata:

labels:

app: jenkins-master

spec:

serviceAccount: jenkins-master

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: app

operator: In

values:

- jenkins

containers:

- name: jenkins

image: k8sre/jenkins:master

imagePullPolicy: Always

securityContext:

runAsUser: 0

privileged: true

ports:

- containerPort: 8080

name: http

protocol: TCP

- containerPort: 50000

name: agentport

protocol: TCP

resources:

limits:

cpu: 2

memory: 4Gi

requests:

cpu: 2

memory: 4Gi

livenessProbe:

tcpSocket:

port: 8080

initialDelaySeconds: 10

timeoutSeconds: 3

periodSeconds: 5

readinessProbe:

tcpSocket:

port: 8080

initialDelaySeconds: 10

timeoutSeconds: 3

periodSeconds: 5

volumeMounts:

- name: jenkins-data

mountPath: /data/jenkins

- name: sshkey

mountPath: /root/.ssh/id_rsa

subPath: id_rsa

readOnly: true

- name: jenkins-docker

mountPath: /var/run/docker.sock

- name: jenkins-maven

mountPath: /usr/share/java/maven-3/conf/settings.xml

subPath: settings.xml

readOnly: true

volumes:

- name: sshkey

configMap:

defaultMode: 0600

name: sshkey

- name: jenkins-docker

hostPath:

path: /var/run/docker.sock

type: Socket

- name: jenkins-maven

configMap:

name: jenkins-maven

- name: jenkins-data

persistentVolumeClaim:

claimName: jenkins

---

apiVersion: v1

kind: Service

metadata:

name: jenkins-master

namespace: jenkins

spec:

ports:

- name: web

port: 8080

protocol: TCP

targetPort: 8080

- name: agent

port: 50000

protocol: TCP

targetPort: 50000

selector:

app: jenkins-masterapiVersion: v1

kind: ServiceAccount

metadata:

name: agent-go

namespace: jenkins

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: agent-go

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: agent-go

namespace: jenkins

---

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: agent-go

namespace: jenkins

spec:

serviceName: "agent-go"

replicas: 1

selector:

matchLabels:

app: agent-go

template:

metadata:

labels:

app: agent-go

spec:

serviceAccount: agent-go

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: role

operator: In

values:

- jenkins

containers:

- name: jenkins

image: k8sre/jenkins:go

imagePullPolicy: Always

securityContext:

runAsUser: 600

privileged: true

env:

- name: "ENV_NAME"

value: "go"

- name: "JKS_SECRET"

value: "xxxxxxxxxxxxxx"

- name: "JKS_DOMAINNAME"

value: "https://ci.k8sre.com"

- name: "JKS_ARGS"

value: "-Xmx4g -Xms4g"

- name: "JKS_DIR"

value: "/data/jenkins"

volumeMounts:

- mountPath: /data/jenkins

name: jenkins-data

volumes:

- name: jenkins-data

persistentVolumeClaim:

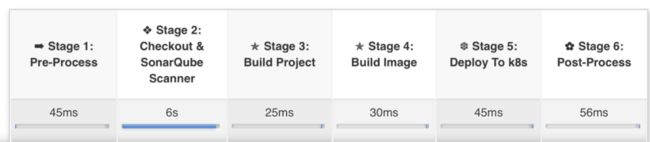

claimName: jenkins---> 在DevOps平台创建项目(DevOps平台提供项目管理、配置管理、JOB处理、日志查看、监控信息、Kubernetes容器Web Terminal等管理功能,CICD后续流程全部为Jenkins处理)

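---> Choose container deployment or VM deployment

---> Fill in the required configuration as prompted: language, Git repository, build command, domain name, writable directories inside the container, run command, port, resource limits, health checks, etc.

---> Save the configuration

---> Trigger the job

---> Run pre-release checks and fetch the project configuration, validating that it follows the conventions

---> Pull the application code

---> Run a vulnerability scan

---> Compile and build

---> Select the build node for the project's language based on its configuration

---> Decide between VM and container deployment and follow the corresponding deployment flow

---> For container deployment, generate a Dockerfile from the project configuration, then build and push the image. Dockerfile generation covers all applications, and custom Dockerfiles are supported

---> Choose the environment (prd, pre, qa, or dev)

---> Deploy with Helm, substituting the project configuration into the chart template. The Helm chart template covers all application requirements and supports customization; by default it deploys a Deployment, Service, Ingress, and HPA

---> After deployment, send a notification with the deployment details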
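The article does not show the chart itself. As an illustration only, the platform could render each project's settings into a `values.yaml` like the one below: port, run command, resources, health checks, domain, and writable directories. The template would consume it through expressions such as `.Values.containerPort`. Every key name, the registry, and the domain are hypothetical; they depend entirely on how the chart is written.

```yaml
# Hypothetical values.yaml rendered from one project's configuration.
image:
  repository: registry.example.com/demo/demo-api   # hypothetical registry/image
  tag: "20191101-abc1234"                          # hypothetical build tag
replicaCount: 2
containerPort: 8080                                # "run port"
command: ["/app/demo-api", "--port=8080"]          # "run command"
resources:                                         # "resource limits"
  requests:
    cpu: 500m
    memory: 512Mi
  limits:
    cpu: "1"
    memory: 1Gi
livenessProbe:                                     # "health checks"
  httpGet:
    path: /health
    port: 8080
  initialDelaySeconds: 10
readinessProbe:
  httpGet:
    path: /health
    port: 8080
writableDirs:                                      # "writable directories in the container"
  - /app/logs
ingress:                                           # "domain name"
  enabled: true
  host: demo-api.qa.example.com
hpa:
  enabled: true
  minReplicas: 2
  maxReplicas: 10
  targetCPUUtilizationPercentage: 70
```

A deployment to the `qa` environment could then be `helm upgrade --install demo-api ./chart -f values.yaml --namespace qa`. The release name, chart path, and namespace are placeholders.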


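Kubernetes now recommends configuring components through YAML configuration files, and the equivalent command-line flags are being deprecated for removal. We will migrate our flags to configuration files step by step.

apiserver health checks: the current layer-4 proxy cannot verify whether the service is actually healthy, so real service health checks need to be added later.

Split CI and CD and add an artifact repository. Rework the build nodes so that each build runs in a dynamically created Pod that is deleted when the job finishes. This improves resource utilization and build concurrency.

Use a Helm repo to store the charts and simplify deployment.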
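As an example of moving from flags to a configuration file, the kubelet settings behind the earlier security points can be expressed as a `KubeletConfiguration`. In this sketch the CA path, cluster DNS IP, and cgroup driver are placeholders. The bootstrap-related paths stay as flags: `--bootstrap-kubeconfig`, `--kubeconfig`, and `--config` pointing at this file.

```yaml
# Hypothetical /etc/kubernetes/kubelet-config.yaml (kubelet --config=...).
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
address: 0.0.0.0
port: 10250
readOnlyPort: 0                  # close the unauthenticated read-only port
authentication:
  anonymous:
    enabled: false               # equivalent of --anonymous-auth=false
  webhook:
    enabled: true                # validate bearer tokens via the apiserver
  x509:
    clientCAFile: /etc/kubernetes/ssl/ca.pem   # assumed CA path
authorization:
  mode: Webhook                  # authorize requests via SubjectAccessReview
rotateCertificates: true         # renew the client certificate before it expires
serverTLSBootstrap: true         # request the serving certificate through a CSR
cgroupDriver: systemd            # assumption; must match Docker's cgroup driver
clusterDNS:
  - 10.96.0.10                   # assumed cluster DNS Service IP
clusterDomain: cluster.local
```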


https://github.com/k8sre/k8s_init.git

https://github.com/k8sre/docs/blob/master/kubernetes/kubernetes高可用集群之二进制部署.md
