O-RAN notes(2)---Bronze SMO deployment (1)

O-RAN SC Bronze (or release 'B') was released on 2020/6/21.

Follow link: https://wiki.o-ran-sc.org/pages/viewpage.action?pageId=14221635

(1) SMO deployment

https://wiki.o-ran-sc.org/display/GS/SMO+Installation

First of all, you need a VM. The recommended OS is Ubuntu 18.04 LTS (Bionic Beaver).

dabs@smobronze:~/oran/dep/tools/k8s/bin$ uname -a

Linux smobronze 5.3.0-28-generic #30~18.04.1-Ubuntu SMP Fri Jan 17 06:14:09 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

2020/7/4 update:

Minimum requirements on RAM and HD for SMO deployment:

- 12GB for VM RAM

- 30GB for VM hard disk

(10:46 dabs@smobronze smo) > top | grep Mem

KiB Mem : 12241356 total, 197140 free, 11103664 used, 940552 buff/cache

KiB Swap: 0 total, 0 free, 0 used. 847688 avail Mem

(10:46 dabs@smobronze smo) > df -hT /dev/sda1

Filesystem Type Size Used Avail Use% Mounted on

/dev/sda1 ext4 49G 24G 23G 52% /
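The two minimums above can be checked before starting. The following is a minimal sketch, not part of the O-RAN SC scripts: the function names are made up for illustration, and it assumes "12GB" and "30GB" mean decimal gigabytes (MemTotal is always somewhat below the nominal VM size, so a binary 12 GiB threshold would reject the VM shown above).

```shell
#!/usr/bin/env bash
# Assumption: the quoted minimums are decimal gigabytes, converted to KiB
# because /proc/meminfo and `df -Pk` both report KiB.
MIN_RAM_KIB=$((12 * 1000 * 1000 * 1000 / 1024))
MIN_DISK_KIB=$((30 * 1000 * 1000 * 1000 / 1024))

# Succeeds when the first value (what the host has) is at least the second.
meets_minimum() {
    [ "$1" -ge "$2" ]
}

# Total RAM in KiB, read from /proc/meminfo (or a file given as $1).
ram_kib() {
    awk '/^MemTotal:/ {print $2}' "${1:-/proc/meminfo}"
}

# Total size in KiB of the filesystem holding the given path (default /).
disk_kib() {
    df -Pk "${1:-/}" | awk 'NR == 2 {print $2}'
}

# Print one verdict line; a missing value is treated as 0.
report() {
    local name=$1 have=${2:-0} need=$3
    if meets_minimum "$have" "$need"; then
        echo "$name: ok ($have KiB)"
    else
        echo "$name: too small ($have KiB, need $need KiB)"
    fi
}

report RAM "$(ram_kib)" "$MIN_RAM_KIB"
report disk "$(disk_kib /)" "$MIN_DISK_KIB"
```

With the values from the `top` output above (12241356 KiB), the RAM check passes.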

[Step 1: Obtaining the Deployment Scripts and Charts] is very simple:

dabs@smobronze:~$ mkdir oran

dabs@smobronze:~$ cd oran

dabs@smobronze:~/oran$ git clone http://gerrit.o-ran-sc.org/r/it/dep -b bronze

Cloning into 'dep'...

warning: redirecting to https://gerrit.o-ran-sc.org/r/it/dep/

remote: Counting objects: 3, done

remote: Total 4412 (delta 0), reused 4412 (delta 0)

Receiving objects: 100% (4412/4412), 2.21 MiB | 45.00 KiB/s, done.

Resolving deltas: 100% (1784/1784), done.

dabs@smobronze:~/oran$ cd dep/

dabs@smobronze:~/oran/dep$ git submodule update --init --recursive --remote

Submodule 'ric-dep' (https://gerrit.o-ran-sc.org/r/ric-plt/ric-dep) registered for path 'ric-dep'

Cloning into '/home/dabs/oran/dep/ric-dep'...

Submodule path 'ric-dep': checked out 'f13294efad66831d8140c35d21fb59d19160493d'

[Step 2: Generation of cloud-init Script]:

Update dep/tools/k8s/etc/infra.rc so that only the ONAP Frankfurt versions are uncommented:

dabs@smobronze:~/oran/dep/tools/k8s/etc$ cat infra.rc

# modify below for RIC infrastructure (docker-k8s-helm) component versions

# RIC tested

#INFRA_DOCKER_VERSION=""

#INFRA_HELM_VERSION="2.12.3"

#INFRA_K8S_VERSION="1.16.0"

#INFRA_CNI_VERSION="0.7.5"

# older RIC tested

#INFRA_DOCKER_VERSION=""

#INFRA_HELM_VERSION="2.12.3"

#INFRA_K8S_VERSION="1.13.3"

#INFRA_CNI_VERSION="0.6.0"

# ONAP Frankfurt

INFRA_DOCKER_VERSION="18.09.7"

INFRA_K8S_VERSION="1.15.9"

INFRA_CNI_VERSION="0.7.5"

INFRA_HELM_VERSION="2.16.6"

run gen-cloud-init.sh to generate the k8s-1node-cloud-init-k_1_15-h_2_16-d_18_09.sh script:

dabs@smobronze:~/oran/dep/tools/k8s/bin$ ./gen-cloud-init.sh

find: warning: you have specified the global option -maxdepth after the argument -type, but global options are not positional, i.e., -maxdepth affects tests specified before it as well as those specified after it. Please specify global options before other arguments.

reading in values in /home/dabs/oran/dep/tools/k8s/bin/../etc/env.rc

reading in values in /home/dabs/oran/dep/tools/k8s/bin/../etc/infra.rc

reading in values in /home/dabs/oran/dep/tools/k8s/bin/../etc/openstack.rc
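The generated file name appears to encode the major.minor part of the Kubernetes, Helm and Docker versions set in infra.rc. The sketch below only reproduces that observed pattern; it is not taken from gen-cloud-init.sh, whose real logic may differ, and the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Values as set in the ONAP Frankfurt block of infra.rc.
INFRA_DOCKER_VERSION="18.09.7"
INFRA_K8S_VERSION="1.15.9"
INFRA_HELM_VERSION="2.16.6"

# Keep major.minor only and turn the dot into an underscore, e.g. 1.15.9 -> 1_15.
major_minor() {
    echo "$1" | cut -d. -f1,2 | tr . _
}

# Assumed naming pattern, inferred from the file name seen in this post.
script_name() {
    echo "k8s-1node-cloud-init-k_$(major_minor "$1")-h_$(major_minor "$2")-d_$(major_minor "$3").sh"
}

script_name "$INFRA_K8S_VERSION" "$INFRA_HELM_VERSION" "$INFRA_DOCKER_VERSION"
```

This is handy for predicting which file to edit after changing infra.rc.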

dabs@smobronze:~/oran/dep/tools/k8s/bin$ ls

deploy-stack.sh gen-ric-heat-yaml.sh k8s-1node-cloud-init-k_1_15-h_2_16-d_18_09.sh uninstall

gen-cloud-init.sh install undeploy-stack.sh

[Step 3: Installation of Kubernetes, Helm, Docker, etc.]

First, make changes to the script: k8s-1node-cloud-init-k_1_15-h_2_16-d_18_09.sh

(1) As I was using debian-testing, update the DOCKERVERSION part as below:

2020/7/2 update: I have since switched to an Ubuntu 18.04 LTS VM.

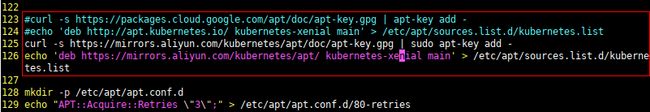

(2) update k8s mirror:

#avoid the NO_PUBKEY error when 'sudo apt update'

$ sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys 6A030B21BA07F4FB

(3) update docker mirror:

(4) comment out the last line to avoid a sudden VM reboot (O_O, I thought a system failure had happened!!!):

(5) edit /etc/hosts:

(6) update docker apt repository

$ sudo apt-key fingerprint 0EBFCD88

#for ubuntu

$ sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

#avoid the NO_PUBKEY error when 'sudo apt update'

$ sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys 7EA0A9C3F273FCD8
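If other repositories trigger the same NO_PUBKEY error, the key IDs can be pulled out of the `apt update` output instead of being copied by hand. This is a small sketch with a hypothetical helper name; the sample input line is a stand-in, not output captured from this VM.

```shell
#!/usr/bin/env bash
# Read text on stdin and print each distinct key ID that follows "NO_PUBKEY".
missing_keys() {
    grep -o 'NO_PUBKEY [0-9A-F]*' | awk '{print $2}' | sort -u
}

# Stand-in input: only the "NO_PUBKEY <id>" token matters to the parser.
printf '%s\n' 'sample line: NO_PUBKEY 7EA0A9C3F273FCD8' | missing_keys

# Intended use on the VM (not run here):
#   sudo apt update 2>&1 | missing_keys | while read -r key; do
#       sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys "$key"
#   done
```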

Now run the script for the first time. You should hit a problem: the script loops forever on 'waiting for 0/8 pods running' followed by a short sleep, so press CTRL+C to stop it:

dabs@smobronze:~/oran/dep/tools/k8s/bin$ sudo ./k8s-1node-cloud-init-k_1_15-h_2_16-d_18_09.sh

+ NUMPODS=0

+ echo '> waiting for 0/8 pods running in namespace [kube-system] with keyword [Running]'

> waiting for 0/8 pods running in namespace [kube-system] with keyword [Running]

+ '[' 0 -lt 8 ']'

+ sleep 5
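The trace above shows a loop that never gives up. As a sketch only (this is not the loop from the generated script, and the function names are hypothetical), a bounded variant could stop after a timeout so that a blocked image pull shows up as a clear failure:

```shell
#!/usr/bin/env bash
# wait_for_count EXPECTED TIMEOUT_SECONDS INTERVAL_SECONDS COMMAND [ARGS...]
# Re-runs COMMAND, which must print a number, until that number reaches
# EXPECTED (returns 0) or TIMEOUT_SECONDS have passed (returns 1).
wait_for_count() {
    local expected=$1 timeout=$2 interval=$3
    shift 3
    local waited=0 count
    while :; do
        count=$("$@")
        if [ "${count:-0}" -ge "$expected" ]; then
            return 0
        fi
        if [ "$waited" -ge "$timeout" ]; then
            echo "gave up after ${waited}s at ${count:-0}/${expected}" >&2
            return 1
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done
}

# Number of kube-system pods whose line contains the keyword Running.
running_pods() {
    kubectl get pods -n kube-system --no-headers 2>/dev/null | grep -c Running
}

# Intended use on the VM (not run here): wait up to 10 minutes for 8 pods.
#   wait_for_count 8 600 5 running_pods || echo "check docker images"
```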

This is caused by docker pulls being blocked by the firewall. You will need the following docker images for [Step 3]:

For the k8s.gcr.io/* images, solution:

$ sudo kubeadm config images list | sed -e 's/^/docker pull /g' -e 's#k8s.gcr.io#registry.aliyuncs.com/google_containers#g' | sudo sh -x

$ sudo docker images | grep registry.aliyuncs.com/google_containers | awk '{print "docker tag ",$1":"$2,$1":"$2}' | sed -e 's#registry.aliyuncs.com/google_containers#k8s.gcr.io#2' | sudo sh -x

$ sudo docker images | grep registry.aliyuncs.com/google_containers | awk '{print "docker rmi ", $1":"$2}' | sudo sh -x

For the flannel image, solution:

Manually download flannel:v0.12.0 from GitHub or from the Baidu Netdisk link:

https://github.com/coreos/flannel/releases/download/v0.12.0/flanneld-v0.12.0-amd64.docker

Link: https://pan.baidu.com/s/1_3H6_0kaJwkmse1v6dYt1w Extraction code: jdmc

$ sudo docker load < ~/Downloads/flanneld-v0.12.0-amd64.docker

For the tiller image, solution:

dabs@smobronze:/tmp$ docker pull sapcc/tiller:v2.16.6

v2.16.6: Pulling from sapcc/tiller

aad63a933944: Pull complete

5a5f2e29be4f: Pull complete

d2bd87496312: Pull complete

27aa33a05387: Pull complete

Digest: sha256:e88aaf190da692e7a31d825e3e88a3264a1c03b430f995b8f58d2cb55cb434a8

Status: Downloaded newer image for sapcc/tiller:v2.16.6

dabs@smobronze:/tmp$ docker tag sapcc/tiller:v2.16.6 gcr.io/kubernetes-helm/tiller:v2.16.6

dabs@smobronze:/tmp$ docker rmi sapcc/tiller:v2.16.6

Untagged: sapcc/tiller:v2.16.6

Untagged: sapcc/tiller@sha256:e88aaf190da692e7a31d825e3e88a3264a1c03b430f995b8f58d2cb55cb434a8
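Both the k8s.gcr.io workaround and the tiller workaround follow the same pattern: pull from a reachable registry, tag the image with the name the installer expects, then remove the mirror tag. A sketch of that pattern with hypothetical helper names is below. It only prints the docker commands, in the same spirit as the `| sudo sh -x` pipelines above, so nothing is changed until the output is piped to a shell.

```shell
#!/usr/bin/env bash
# Print the three docker commands that fetch SRC and leave it on the host
# under the name DST.
retag_cmds() {
    local src=$1 dst=$2
    printf 'docker pull %s\n' "$src"
    printf 'docker tag %s %s\n' "$src" "$dst"
    printf 'docker rmi %s\n' "$src"
}

# Map a k8s.gcr.io image name to the aliyun mirror used in this post.
mirror_of() {
    echo "$1" | sed 's#^k8s\.gcr\.io/#registry.aliyuncs.com/google_containers/#'
}

# Dry run for the tiller image.
retag_cmds sapcc/tiller:v2.16.6 gcr.io/kubernetes-helm/tiller:v2.16.6

# Intended use for the kubeadm images (not run here):
#   kubeadm config images list | while read -r img; do
#       retag_cmds "$(mirror_of "$img")" "$img"
#   done | sudo sh -x
```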

With all the docker images ready, you can run the script for the 2nd time. Eventually, you will end up with a one-node k8s cluster and 9 Running pods.

dabs@smobronze:~/oran/dep/tools/k8s/bin$ sudo kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

smobronze Ready master 115s v1.15.9 172.16.99.132 Ubuntu 18.04.4 LTS 5.3.0-62-generic docker://18.9.7

dabs@smobronze:~/oran/dep/tools/k8s/bin$ sudo kubectl get pods --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-5d4dd4b4db-fdfqd 1/1 Running 0 100s 10.244.0.2 smobronze

kube-system coredns-5d4dd4b4db-rzn6z 1/1 Running 0 100s 10.244.0.3 smobronze

kube-system etcd-smobronze 1/1 Running 0 49s 172.16.99.132 smobronze

kube-system kube-apiserver-smobronze 1/1 Running 0 52s 172.16.99.132 smobronze

kube-system kube-controller-manager-smobronze 1/1 Running 0 60s 172.16.99.132 smobronze

kube-system kube-flannel-ds-amd64-zv4pj 1/1 Running 0 100s 172.16.99.132 smobronze

kube-system kube-proxy-vml6p 1/1 Running 0 100s 172.16.99.132 smobronze

kube-system kube-scheduler-smobronze 1/1 Running 0 62s 172.16.99.132 smobronze

kube-system tiller-deploy-666f9c57f4-8xjwj 1/1 Running 0 21s 10.244.0.4 smobronze

dabs@smobronze:~/oran/dep/tools/k8s/bin$ sudo kubectl get service --all-namespaces -o wide

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

default kubernetes ClusterIP 10.96.0.1 443/TCP 3m31s

kube-system kube-dns ClusterIP 10.96.0.10 53/UDP,53/TCP,9153/TCP 3m30s k8s-app=kube-dns

kube-system tiller-deploy ClusterIP 10.96.189.79 44134/TCP 114s app=helm,name=tiller

Manually reboot the VM and you are ready for [Step 4: Deploy SMO].

dabs@RICBronze:~/oran/dep$ cd smo/bin

dabs@RICBronze:~/oran/dep/smo/bin$ ls

install uninstall

dabs@RICBronze:~/oran/dep/smo/bin$ sudo ./install initlocalrepo

[Step 4] really does take hours, as I had been told...

2020/7/3 update: I finally got ONAP deployed successfully, and will add the details later.

2020/7/4 update: ONAP/NONRTRIC/RICAUX for SMO are all successfully deployed.

- 9 pods of ns kube-system

- 27 pods of ns onap (excluding the two Init:Error sdnc-sdnrdb-init-job pods)

- 8 pods of ns nonrtric

- 2 pods of ns ricaux
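Counts like these can be tallied from the `kubectl get pods --all-namespaces` listing rather than by eye. The sketch below groups pods by namespace and status, which also makes the Init:Error and Completed jobs stand out. The helper name is hypothetical, and it assumes STATUS is the fourth column, as it is in the listing in this post.

```shell
#!/usr/bin/env bash
# Read `kubectl get pods --all-namespaces` output on stdin and print
# "namespace status count" lines; the header row is skipped.
pods_by_status() {
    awk 'NF >= 4 && $4 != "STATUS" {n[$1 " " $4]++}
         END {for (k in n) print k, n[k]}' | sort
}

# A few rows copied from the listing in this post, as a small demonstration.
pods_by_status <<'EOF'
NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE
kube-system etcd-smobronze 1/1 Running 10 16h 192.168.43.172 smobronze
onap dev-sdnc-0 2/2 Running 0 58m 10.244.0.74 smobronze
onap dev-sdnc-sdnrdb-init-job-f89zj 0/1 Init:Error 0 58m 10.244.0.75 smobronze
onap dev-sdnc-sdnrdb-init-job-frs5s 0/1 Completed 0 29m 10.244.0.84 smobronze
ricaux deployment-ricaux-ves-65db844758-jv9b5 1/1 Running 0 15m 10.244.0.95 smobronze
EOF

# Intended use on the VM (not run here):
#   sudo kubectl get pods --all-namespaces | pods_by_status
```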

(10:42 dabs@smobronze smo) > sudo kubectl get pods --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-5d4dd4b4db-7g8b5 1/1 Running 10 16h 10.244.0.24 smobronze

kube-system coredns-5d4dd4b4db-jwg2g 1/1 Running 12 16h 10.244.0.31 smobronze

kube-system etcd-smobronze 1/1 Running 10 16h 192.168.43.172 smobronze

kube-system kube-apiserver-smobronze 1/1 Running 38 16h 192.168.43.172 smobronze

kube-system kube-controller-manager-smobronze 1/1 Running 26 16h 192.168.43.172 smobronze

kube-system kube-flannel-ds-amd64-68dxh 1/1 Running 13 16h 192.168.43.172 smobronze

kube-system kube-proxy-cxprf 1/1 Running 10 16h 192.168.43.172 smobronze

kube-system kube-scheduler-smobronze 1/1 Running 25 16h 192.168.43.172 smobronze

kube-system tiller-deploy-666f9c57f4-9stnz 1/1 Running 12 16h 10.244.0.34 smobronze

nonrtric a1-sim-osc-0 1/1 Running 0 17m 10.244.0.88 smobronze

nonrtric a1-sim-osc-1 1/1 Running 0 16m 10.244.0.93 smobronze

nonrtric a1-sim-std-0 1/1 Running 0 17m 10.244.0.86 smobronze

nonrtric a1-sim-std-1 1/1 Running 0 16m 10.244.0.92 smobronze

nonrtric a1controller-6484f5dbc4-6fx9z 1/1 Running 1 17m 10.244.0.90 smobronze

nonrtric controlpanel-8bd4748f4-m2sx9 1/1 Running 7 17m 10.244.0.91 smobronze

nonrtric db-549ff9b4d5-5ddtm 1/1 Running 0 17m 10.244.0.89 smobronze

nonrtric policymanagementservice-5ffc4cf5f6-qskp8 1/1 Running 8 17m 10.244.0.87 smobronze

onap dev-consul-68d576d55c-xptxr 1/1 Running 0 58m 10.244.0.56 smobronze

onap dev-consul-server-0 1/1 Running 0 58m 10.244.0.57 smobronze

onap dev-consul-server-1 1/1 Running 0 58m 10.244.0.71 smobronze

onap dev-consul-server-2 1/1 Running 0 58m 10.244.0.78 smobronze

onap dev-kube2msb-9fc58c48-sl57k 1/1 Running 0 58m 10.244.0.66 smobronze

onap dev-mariadb-galera-0 1/1 Running 0 58m 10.244.0.68 smobronze

onap dev-mariadb-galera-1 1/1 Running 0 44m 10.244.0.80 smobronze

onap dev-mariadb-galera-2 1/1 Running 0 38m 10.244.0.83 smobronze

onap dev-message-router-0 1/1 Running 0 58m 10.244.0.59 smobronze

onap dev-message-router-kafka-0 1/1 Running 0 58m 10.244.0.79 smobronze

onap dev-message-router-kafka-1 1/1 Running 0 58m 10.244.0.63 smobronze

onap dev-message-router-kafka-2 1/1 Running 0 58m 10.244.0.64 smobronze

onap dev-message-router-zookeeper-0 1/1 Running 0 58m 10.244.0.58 smobronze

onap dev-message-router-zookeeper-1 1/1 Running 0 58m 10.244.0.60 smobronze

onap dev-message-router-zookeeper-2 1/1 Running 0 58m 10.244.0.61 smobronze

onap dev-msb-consul-65b9697c8b-gvxk2 1/1 Running 0 58m 10.244.0.70 smobronze

onap dev-msb-discovery-54b76c4898-zzdqg 2/2 Running 0 58m 10.244.0.65 smobronze

onap dev-msb-eag-76d4b9b9d7-n5z92 2/2 Running 0 58m 10.244.0.69 smobronze

onap dev-msb-iag-65c59cb86b-wxjr9 2/2 Running 0 58m 10.244.0.67 smobronze

onap dev-sdnc-0 2/2 Running 0 58m 10.244.0.74 smobronze

onap dev-sdnc-db-0 1/1 Running 0 58m 10.244.0.77 smobronze

onap dev-sdnc-dmaap-listener-5c77848759-r5nnb 1/1 Running 0 58m 10.244.0.73 smobronze

onap dev-sdnc-sdnrdb-init-job-f89zj 0/1 Init:Error 0 58m 10.244.0.75 smobronze

onap dev-sdnc-sdnrdb-init-job-frs5s 0/1 Completed 0 29m 10.244.0.84 smobronze

onap dev-sdnc-sdnrdb-init-job-hbnkr 0/1 Init:Error 0 43m 10.244.0.81 smobronze

onap dev-sdnrdb-coordinating-only-9b9956fc-rdtjp 2/2 Running 0 58m 10.244.0.72 smobronze

onap dev-sdnrdb-master-0 1/1 Running 0 58m 10.244.0.76 smobronze

onap dev-sdnrdb-master-1 1/1 Running 0 40m 10.244.0.82 smobronze

onap dev-sdnrdb-master-2 1/1 Running 0 25m 10.244.0.85 smobronze

ricaux bronze-infra-kong-68657d8dfd-vgxzb 2/2 Running 3 15m 10.244.0.94 smobronze

ricaux deployment-ricaux-ves-65db844758-jv9b5 1/1 Running 0 15m 10.244.0.95 smobronze

Good luck!