【技术向】目标跟踪UAV123数据集下载及使用指南

文章目录

- 1. 下载

- 2.使用指南

- 2.1 主要文件

- 2.2 预处理

- 2.3 跑库与评测

- 2.3.1 跑库

- 2.3.2 评测

- 2.3.3 注意事项

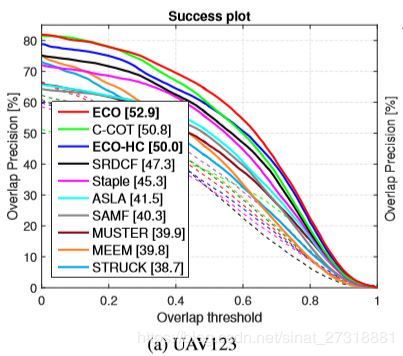

今天推一篇普及性文章,大家知道目标跟踪常用数据集有OTB和VOT系列,现在还有更大的数据集LASOT和TrackingNet出现,此外有一个叫做UAV123的数据集,是一个专门场景的数据集,都是用无人机拍摄,特点是背景干净,视角变化较多,包含123个视频,总大小在13.5G左右。一些知名的跟踪算法会使用UAV进行评测,比如ECO等。可以查看UAV论文看看详细介绍? UAV123论文ECCV16。本文将提供该数据集的下载及使用方法,自跑自评,不用其他工具。有问题欢迎讨论~

1. 下载

下载渠道1:?UAV123数据集主页

下载渠道2:?百度网盘,提取码:7lwk。其中包含5个压缩包,一起解压就行。

2.使用指南

2.1 主要文件

anno文件夹包含UAV20L和UAV123的groundtruth标注,格式为左上角坐标和长宽四个值一行/帧,我们一般使用的是UAV123,其中子文件夹att包含每个视频的属性标注,具体可以查看主目录下的DatasetAnnotation.pdf文件;

第二个是data_seq文件夹,包含数据集的所有图片文件命名方式为06i.jpg;

还有一个configSeqs.m文件,是模仿OTB工具的视频信息文件,后面我们将使用它进行简单的跑库。

2.2 预处理

写了一个utils_UAV.m文件,里面有一些实用的程序,可以在网盘中下载。

我们的目标是做成OTB数据集那样的格式便于跑库,每个视频还缺一个frame文件,即指定起始帧和终止帧的文件,可以通过configSeqs.m生成,首先在主目录新建frame文件夹,然后运行下面程序。

%% achieve frame files like OTB

base_path = '主目录/anno/UAV123/';

seqs = configSeqs;

for i = 1:numel(seqs)

frame_name = [seqs{i}.name '_frames.txt'];

file_name = ['./frame/' frame_name];

fid = fopen(file_name, 'wt');

fprintf(fid, '%d,%d\n',seqs{i}.startFrame, seqs{i}.endFrame);

fclose(fid);

end

下面我们打印一下每个视频的名称,便于后面跑库使用

%% print seq names

fid = fopen('seq_names.txt','wt');

for i = 1:numel(seqs)

fprintf(fid, '''%s'',',seqs{i}.name);

end

fclose(fid);

当然你也可以检查一下是不是都是彩色视频

base_path = '主目录/data_seq/UAV123/';

for i = 1:numel(seqs)

img = imread([base_path seqs{i}.name '/' num2str(seqs{i}.startFrame, '%06i.jpg')]);

if size(img,3) == 1

disp([seqs{i}.name]);

end

end

2.3 跑库与评测

2.3.1 跑库

我们以ECO为例,跑ECO-HC跟踪算法,你也可以跑SRDCF算法,设置都一样。你可以下载我用来跑库的ECO的示例?代码

首先在ECO代码文件中,utils文件夹中增加一个类似load_video_info.m的文件用于提取UAV数据集的信息,起名为load_video_info_UAV.m,内容如下

function [seq, ground_truth] = load_video_info_UAV(gt_path, img_path, frame_path, testSet, seqs)

ground_truth = load([gt_path testSet '.txt']);

seq.format = 'otb';

seq.len = size(ground_truth, 1);

seq.init_rect = ground_truth(1,:);

f = fopen([frame_path testSet '_frames.txt']);

frames = textscan(f, '%f,%f');

fclose(f);

img_path = seqs.path;

img_files = num2str((frames{1}:frames{2})', [img_path '%06i.jpg']);

seq.s_frames = cellstr(img_files);

end

然后ECO代码主目录新建一个跑库主函数demo_UAV.m,并把UAV123数据集中的configSeqs.m文件复制进来。demo_UAV.m内容如下

% Add paths

setup_paths();

seqs = configSeqs;

% Load video information

gt_path = 'UAV123主目录/anno/UAV123/';

img_path = 'UAV123主目录/data_seq/UAV123/';

frame_path = 'UAV123主目录/frame/';

UAV123 = {'bike1','bike2','bike3','bird1_1','bird1_2','bird1_3','boat1','boat2','boat3','boat4','boat5','boat6','boat7','boat8','boat9','building1','building2','building3','building4',...

'building5','car1_1','car1_2','car1_3','car2','car3','car4','car5','car6_1','car6_2','car6_3','car6_4','car6_5','car7','car8_1','car8_2','car9','car10','car11','car12','car13',...

'car14','car15','car16_1','car16_2','car17','car18','group1_1','group1_2','group1_3','group1_4','group2_1','group2_2','group2_3','group3_1','group3_2','group3_3','group3_4',...

'person1','person2_1','person2_2','person3','person4_1','person4_2','person5_1','person5_2','person6','person7_1','person7_2','person8_1','person8_2','person9','person10',...

'person11','person12_1','person12_2','person13','person14_1','person14_2','person14_3','person15','person16','person17_1','person17_2','person18','person19_1','person19_2',...

'person19_3','person20','person21','person22','person23','truck1','truck2','truck3','truck4_1','truck4_2','uav1_1','uav1_2','uav1_3','uav2','uav3','uav4','uav5','uav6','uav7',...

'uav8','wakeboard1','wakeboard2','wakeboard3','wakeboard4','wakeboard5','wakeboard6','wakeboard7','wakeboard8','wakeboard9','wakeboard10','car1_s','car2_s','car3_s','car4_s',...

'person1_s','person2_s','person3_s'};

testSet = UAV123;

if ~exist('res', 'dir')

mkdir('res');

end

%% run UAV

for i = 1:length(testSet)

[seq, ground_truth] = load_video_info_UAV(gt_path, img_path, frame_path, testSet{i}, seqs{i});

results = testing_ECO_HC(seq);

results.res = [results.res(:,[2,1]) + (results.res(:,[4,3]) - 1) / 2 , results.res(:,[4,3])];

res = results.res;

save(['./res/' testSet{i} '_UAV.mat'], 'res');

end

2.3.2 评测

注意我们把结果的形式保存成中心坐标和长宽的形式,以便于下面的评测。评测主要指标是AUC和精度,和OTB报告的一样,只不过没有曲线图,想要可以自己加。

评测时把run UAV下面都注释掉,增加评测代码如下

average_center_location_error_sum=0;

distance_precision_sum=0;

PASCAL_precision_sum=0;

Overlap_sum=0;

fps_sum=0;

fid = fopen('results_UAV.txt', 'wt');

for i = 1:length(testSet)

[seq, ground_truth] = load_video_info_UAV(gt_path, img_path, frame_path, testSet{i}, seqs{i});

ground_truth = [ground_truth(:,[2,1]) + (ground_truth(:,[4,3])-1)/2 , ground_truth(:,[4,3])];

results.fps = 0;

load(['./res/' testSet{i} '_UAV.mat'], 'res');

ground_truth2 = [];

positions2 = [];

for j = 1:size(ground_truth,1)

if isnan(ground_truth(j,1))

continue;

else

ground_truth2 = [ground_truth2;ground_truth(j,1:4)];

positions2 = [positions2; res(j,1:4)];

end

end

[distance_precision, PASCAL_precision, average_center_location_error, Overlap] = ...

compute_performance_measures(positions2, ground_truth2, 20, 0.5);

disp([num2str(Overlap) testSet{i}]);

fprintf(fid, '%.5g %%, %s\n', 100*Overlap, testSet{i});

average_center_location_error_sum=average_center_location_error_sum+average_center_location_error;

distance_precision_sum=distance_precision_sum+distance_precision;

PASCAL_precision_sum=PASCAL_precision_sum+PASCAL_precision;

Overlap_sum=Overlap_sum+Overlap;

fps_sum=fps_sum+results.fps;

end

average_center_location_error=average_center_location_error_sum/length(testSet);

distance_precision=distance_precision_sum/length(testSet);

PASCAL_precision=PASCAL_precision_sum/length(testSet);

Overlap=Overlap_sum/length(testSet);

fps=fps_sum/length(testSet);

fprintf('Center Location Error: %.3g pixels\nDistance Precision: %.3g %%\nOverlap Precision: %.5g %%\nOverlap: %.5g%%\nSpeed: %.5g fps\n', ...

average_center_location_error, 100*distance_precision, 100*PASCAL_precision,100*Overlap, fps);

fprintf(fid, '%.5g %%, %s\n', 100*Overlap, 'All');

fclose(fid);

其中compute_performance_measures函数如下

function [distance_precision, PASCAL_precision, average_center_location_error,Overlap] = ...

compute_performance_measures(positions, ground_truth, distance_precision_threshold, PASCAL_threshold)

% [distance_precision, PASCAL_precision, average_center_location_error] = ...

% compute_performance_measures(positions, ground_truth, distance_precision_threshold, PASCAL_threshold)

%

% For the given tracker output positions and ground truth it computes the:

% * Distance Precision at the specified threshold (20 pixels as default if

% omitted)

% * PASCAL Precision at the specified threshold (0.5 as default if omitted)

% * Average Center Location error (CLE).

%

% The tracker positions and ground truth must be Nx4-matrices where N is

% the number of time steps in the tracking. Each row has to be on the form

% [c1, c2, s1, s2] where (c1, c2) is the center coordinate and s1 and s2

% are the size in the first and second dimension respectively (the order of

% x and y does not matter here).

if nargin < 3 || isempty(distance_precision_threshold)

distance_precision_threshold = 20;

end

if nargin < 4 || isempty(PASCAL_threshold)

PASCAL_threshold = 0.5;

end

if size(positions,1) ~= size(ground_truth,1),

disp('Could not calculate precisions, because the number of ground')

disp('truth frames does not match the number of tracked frames.')

return

end

%calculate distances to ground truth over all frames

distances = sqrt((positions(:,1) - ground_truth(:,1)).^2 + ...

(positions(:,2) - ground_truth(:,2)).^2);

distances(isnan(distances)) = [];

%calculate distance precision

distance_precision = nnz(distances < distance_precision_threshold) / numel(distances);

%calculate average center location error (CLE)

average_center_location_error = mean(distances);

%calculate the overlap in each dimension

overlap_height = min(positions(:,1) + positions(:,3)/2, ground_truth(:,1) + ground_truth(:,3)/2) ...

- max(positions(:,1) - positions(:,3)/2, ground_truth(:,1) - ground_truth(:,3)/2);

overlap_width = min(positions(:,2) + positions(:,4)/2, ground_truth(:,2) + ground_truth(:,4)/2) ...

- max(positions(:,2) - positions(:,4)/2, ground_truth(:,2) - ground_truth(:,4)/2);

% if no overlap, set to zero

overlap_height(overlap_height < 0) = 0;

overlap_width(overlap_width < 0) = 0;

% remove NaN values (should not exist any)

valid_ind = ~isnan(overlap_height) & ~isnan(overlap_width);

% calculate area

overlap_area = overlap_height(valid_ind) .* overlap_width(valid_ind);

tracked_area = positions(valid_ind,3) .* positions(valid_ind,4);

ground_truth_area = ground_truth(valid_ind,3) .* ground_truth(valid_ind,4);

% calculate PASCAL overlaps

overlaps = overlap_area ./ (tracked_area + ground_truth_area - overlap_area);

% calculate PASCAL precision

Overlap = mean(overlaps);

PASCAL_precision = nnz(overlaps >= PASCAL_threshold) / numel(overlaps);

end

2.3.3 注意事项

- UAV的视频部分有目标完全离开视野的情况,这种情况的groundtruth标注为NaN,NaN,NaN,NaN,所以在评测时我们先把这部分帧剔除再进行计算;

- 这种自跑自评的方法不限于UAV库,OTB和VOT都适用。