Jsoup - 使用详解与爬虫

【1】简介

jsoup is a Java library for working with real-world HTML. It provides a very convenient API for extracting and manipulating data, using the best of DOM, CSS, and jquery-like methods.

jsoup implements the WHATWG HTML5 specification, and parses HTML to the same DOM as modern browsers do.

- scrape and parse HTML from a URL, file, or string

- find and extract data, using DOM traversal or CSS selectors

- manipulate the HTML elements, attributes, and text

- clean user-submitted content against a safe white-list, to prevent XSS attacks

- output tidy HTML

jsoup is designed to deal with all varieties of HTML found in the wild; from pristine and validating, to invalid tag-soup; jsoup will create a sensible parse tree.

教程地址如下 :

the website: https://jsoup.org/。

中文地址:http://www.open-open.com/jsoup/

翻译过来也就是说,通过jsoup你可以很方便的从url或者文件或者仅仅只是一段html中使用DOM/CSS/JS的知识解析出来你想要得到的东西。也可以使用它来防止XSS攻击。

至于为什么,则是jsoup会将得到的html解析为DOM Tree。拿到了DOM Tree,则一切就变得简单起来。

【2】Jsoup类

Jsoup源码如下:

- 一向认为,研究技术,首先看源码。

/**

The core public access point to the jsoup functionality.

@author Jonathan Hedley */

public class Jsoup {

private Jsoup() {}

/**

Parse HTML into a Document. The parser will make a sensible, balanced document tree out of any HTML.

@param html HTML to parse

@param baseUri The URL where the HTML was retrieved from. Used to resolve relative URLs to absolute URLs, that occur before the HTML declares a {@code

* Use examples:

*

* Document doc = Jsoup.connect("http://example.com").userAgent("Mozilla").data("name", "jsoup").get();Document doc = Jsoup.connect("http://example.com").cookie("auth", "token").post();

* @param url URL to connect to. The protocol must be {@code http} or {@code https}.

* @return the connection. You can add data, cookies, and headers; set the user-agent, referrer, method; and then execute.

*/

public static Connection connect(String url) {

return HttpConnection.connect(url);

}

/**

Parse the contents of a file as HTML.

@param in file to load HTML from

@param charsetName (optional) character set of file contents. Set to {@code null} to determine from {@code http-equiv} meta tag, if

present, or fall back to {@code UTF-8} (which is often safe to do).

@param baseUri The URL where the HTML was retrieved from, to resolve relative links against.

@return sane HTML

@throws IOException if the file could not be found, or read, or if the charsetName is invalid.

*/

public static Document parse(File in, String charsetName, String baseUri) throws IOException {

return DataUtil.load(in, charsetName, baseUri);

}

/**

Parse the contents of a file as HTML. The location of the file is used as the base URI to qualify relative URLs.

@param in file to load HTML from

@param charsetName (optional) character set of file contents. Set to {@code null} to determine from {@code http-equiv} meta tag, if

present, or fall back to {@code UTF-8} (which is often safe to do).

@return sane HTML

@throws IOException if the file could not be found, or read, or if the charsetName is invalid.

@see #parse(File, String, String)

*/

public static Document parse(File in, String charsetName) throws IOException {

return DataUtil.load(in, charsetName, in.getAbsolutePath());

}

/**

Read an input stream, and parse it to a Document.

@param in input stream to read. Make sure to close it after parsing.

@param charsetName (optional) character set of file contents. Set to {@code null} to determine from {@code http-equiv} meta tag, if

present, or fall back to {@code UTF-8} (which is often safe to do).

@param baseUri The URL where the HTML was retrieved from, to resolve relative links against.

@return sane HTML

@throws IOException if the file could not be found, or read, or if the charsetName is invalid.

*/

public static Document parse(InputStream in, String charsetName, String baseUri) throws IOException {

return DataUtil.load(in, charsetName, baseUri);

}

/**

Read an input stream, and parse it to a Document. You can provide an alternate parser, such as a simple XML

(non-HTML) parser.

@param in input stream to read. Make sure to close it after parsing.

@param charsetName (optional) character set of file contents. Set to {@code null} to determine from {@code http-equiv} meta tag, if

present, or fall back to {@code UTF-8} (which is often safe to do).

@param baseUri The URL where the HTML was retrieved from, to resolve relative links against.

@param parser alternate {@link Parser#xmlParser() parser} to use.

@return sane HTML

@throws IOException if the file could not be found, or read, or if the charsetName is invalid.

*/

public static Document parse(InputStream in, String charsetName, String baseUri, Parser parser) throws IOException {

return DataUtil.load(in, charsetName, baseUri, parser);

}

/**

Parse a fragment of HTML, with the assumption that it forms the {@code body} of the HTML.

@param bodyHtml body HTML fragment

@param baseUri URL to resolve relative URLs against.

@return sane HTML document

@see Document#body()

*/

public static Document parseBodyFragment(String bodyHtml, String baseUri) {

return Parser.parseBodyFragment(bodyHtml, baseUri);

}

/**

Parse a fragment of HTML, with the assumption that it forms the {@code body} of the HTML.

@param bodyHtml body HTML fragment

@return sane HTML document

@see Document#body()

*/

public static Document parseBodyFragment(String bodyHtml) {

return Parser.parseBodyFragment(bodyHtml, "");

}

/**

Fetch a URL, and parse it as HTML. Provided for compatibility; in most cases use {@link #connect(String)} instead.

The encoding character set is determined by the content-type header or http-equiv meta tag, or falls back to {@code UTF-8}.

@param url URL to fetch (with a GET). The protocol must be {@code http} or {@code https}.

@param timeoutMillis Connection and read timeout, in milliseconds. If exceeded, IOException is thrown.

@return The parsed HTML.

@throws java.net.MalformedURLException if the request URL is not a HTTP or HTTPS URL, or is otherwise malformed

@throws HttpStatusException if the response is not OK and HTTP response errors are not ignored

@throws UnsupportedMimeTypeException if the response mime type is not supported and those errors are not ignored

@throws java.net.SocketTimeoutException if the connection times out

@throws IOException if a connection or read error occurs

@see #connect(String)

*/

public static Document parse(URL url, int timeoutMillis) throws IOException {

Connection con = HttpConnection.connect(url);

con.timeout(timeoutMillis);

return con.get();

}

/**

Get safe HTML from untrusted input HTML, by parsing input HTML and filtering it through a white-list of permitted

tags and attributes.

@param bodyHtml input untrusted HTML (body fragment)

@param baseUri URL to resolve relative URLs against

@param whitelist white-list of permitted HTML elements

@return safe HTML (body fragment)

@see Cleaner#clean(Document)

*/

public static String clean(String bodyHtml, String baseUri, Whitelist whitelist) {

Document dirty = parseBodyFragment(bodyHtml, baseUri);

Cleaner cleaner = new Cleaner(whitelist);

Document clean = cleaner.clean(dirty);

return clean.body().html();

}

/**

Get safe HTML from untrusted input HTML, by parsing input HTML and filtering it through a white-list of permitted

tags and attributes.

@param bodyHtml input untrusted HTML (body fragment)

@param whitelist white-list of permitted HTML elements

@return safe HTML (body fragment)

@see Cleaner#clean(Document)

*/

public static String clean(String bodyHtml, Whitelist whitelist) {

return clean(bodyHtml, "", whitelist);

}

/**

* Get safe HTML from untrusted input HTML, by parsing input HTML and filtering it through a white-list of

* permitted tags and attributes.

*

The HTML is treated as a body fragment; it's expected the cleaned HTML will be used within the body of an

* existing document. If you want to clean full documents, use {@link Cleaner#clean(Document)} instead, and add

* structural tags (html, head, body etc) to the whitelist.

*

* @param bodyHtml input untrusted HTML (body fragment)

* @param baseUri URL to resolve relative URLs against

* @param whitelist white-list of permitted HTML elements

* @param outputSettings document output settings; use to control pretty-printing and entity escape modes

* @return safe HTML (body fragment)

* @see Cleaner#clean(Document)

*/

public static String clean(String bodyHtml, String baseUri, Whitelist whitelist, Document.OutputSettings outputSettings) {

Document dirty = parseBodyFragment(bodyHtml, baseUri);

Cleaner cleaner = new Cleaner(whitelist);

Document clean = cleaner.clean(dirty);

clean.outputSettings(outputSettings);

return clean.body().html();

}

/**

Test if the input body HTML has only tags and attributes allowed by the Whitelist. Useful for form validation.

The input HTML should still be run through the cleaner to set up enforced attributes, and to tidy the output.

Assumes the HTML is a body fragment (i.e. will be used in an existing HTML document body.)

@param bodyHtml HTML to test

@param whitelist whitelist to test against

@return true if no tags or attributes were removed; false otherwise

@see #clean(String, org.jsoup.safety.Whitelist)

*/

public static boolean isValid(String bodyHtml, Whitelist whitelist) {

return new Cleaner(whitelist).isValidBodyHtml(bodyHtml);

}

}

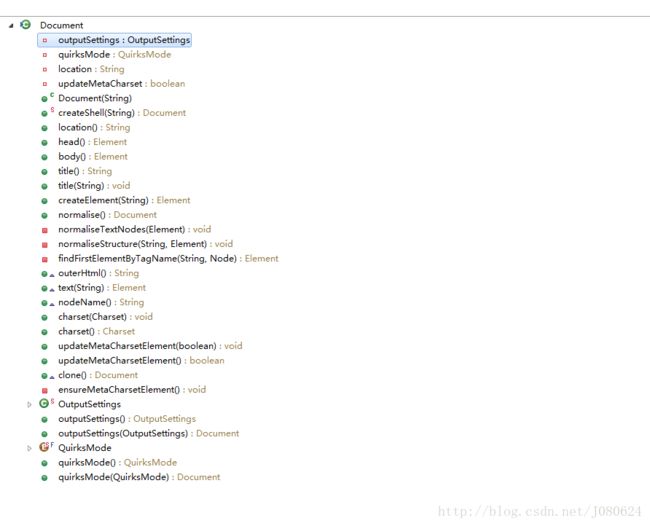

其方法如下图所示:

方法名parse的多个重载方法,基本就是解析不同状况下的html。如有的提供了baseUrl,有的提供了parser。

最后四个方法在防止用户恶意输入html脚本或者过滤HTML时使用。

connet则是从url获取Document。示例如下:

Connection conn = Jsoup.connect(url );

String userAgent="Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.108 Safari/537.36";

// 修改http包中的header,伪装成浏览器进行抓取

conn.header("User-Agent", userAgent);

Document doc = null;

try {

doc = conn.get();

} catch (IOException e) {

e.printStackTrace();

}拿到Document之后,接下来我们需要做的就是获取具体Element,Node,

【3】Document

什么是Document?

W3C解释:

每个载入浏览器的 HTML 文档都会成为 Document 对象。

Document 对象使我们可以从脚本中对 HTML 页面中的所有元素进行访问。

提示:Document 对象是 Window 对象的一部分,可通过 window.document 属性对其进行访问。

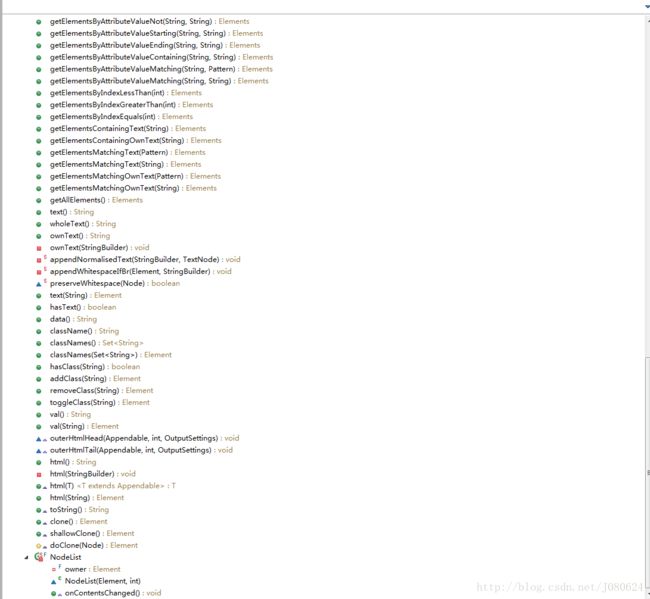

源码方法如下图:

需要注意的是,Document是Element的子类。

/**

A HTML Document.

@author Jonathan Hedley, [email protected] */

public class Document extends Element {

private OutputSettings outputSettings = new OutputSettings();

private QuirksMode quirksMode = QuirksMode.noQuirks;

private String location;

private boolean updateMetaCharset = false;

/**

Create a new, empty Document.

@param baseUri base URI of document

@see org.jsoup.Jsoup#parse

@see #createShell

*/

public Document(String baseUri) {

super(Tag.valueOf("#root", ParseSettings.htmlDefault), baseUri);

this.location = baseUri;

}

/**

Create a valid, empty shell of a document, suitable for adding more elements to.

@param baseUri baseUri of document

@return document with html, head, and body elements.

*/

public static Document createShell(String baseUri) {

Validate.notNull(baseUri);

Document doc = new Document(baseUri);

Element html = doc.appendElement("html");

html.appendElement("head");

html.appendElement("body");

return doc;

}

//...

}也可以这样理解,Document是许多个Element组成的。

【4】Element

什么是Element ?

W3C:

在 HTML DOM 中,Element 对象表示 HTML 元素。

Element 对象可以拥有类型为元素节点、文本节点、注释节点的子节点。

NodeList 对象表示节点列表,比如 HTML 元素的子节点集合。

元素也可以拥有属性。属性是属性节点。源码如下:

- Element继承自Node

/**

* A HTML element consists of a tag name, attributes, and child nodes (including text nodes and

* other elements).

*

* From an Element, you can extract data, traverse the node graph, and manipulate the HTML.

*

* @author Jonathan Hedley, [email protected]

*/

public class Element extends Node {

private static final List EMPTY_NODES = Collections.emptyList();

private static final Pattern classSplit = Pattern.compile("\\s+");

private Tag tag;

private WeakReference> shadowChildrenRef; // points to child elements shadowed from node children

List childNodes;

private Attributes attributes;

private String baseUri;

/**

* Create a new, standalone element.

* @param tag tag name

*/

public Element(String tag) {

this(Tag.valueOf(tag), "", new Attributes());

}

/**

* Create a new, standalone Element. (Standalone in that is has no parent.)

*

* @param tag tag of this element

* @param baseUri the base URI

* @param attributes initial attributes

* @see #appendChild(Node)

* @see #appendElement(String)

*/

public Element(Tag tag, String baseUri, Attributes attributes) {

Validate.notNull(tag);

Validate.notNull(baseUri);

childNodes = EMPTY_NODES;

this.baseUri = baseUri;

this.attributes = attributes;

this.tag = tag;

}

/**

* Create a new Element from a tag and a base URI.

*

* @param tag element tag

* @param baseUri the base URI of this element. It is acceptable for the base URI to be an empty

* string, but not null.

* @see Tag#valueOf(String, ParseSettings)

*/

public Element(Tag tag, String baseUri) {

this(tag, baseUri, null);

}

//...

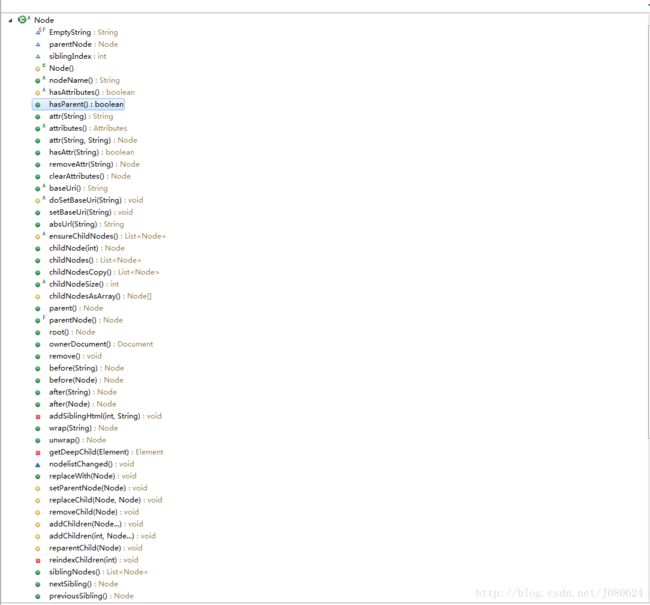

} 其方法如下:

so many !

还没完,继续看Node类。

【5】Node

什么是Node ?

W3C:

在 HTML DOM (文档对象模型)中,每个部分都是节点:

文档本身是文档节点

所有 HTML 元素是元素节点

所有 HTML 属性是属性节点

HTML 元素内的文本是文本节点

注释是注释节点源码如下:

/**

The base, abstract Node model. Elements, Documents, Comments etc are all Node instances.

@author Jonathan Hedley, [email protected] */

public abstract class Node implements Cloneable {

static final String EmptyString = "";

Node parentNode;

int siblingIndex;

/**

* Default constructor. Doesn't setup base uri, children, or attributes; use with caution.

*/

protected Node() {

}

/**

Get the node name of this node. Use for debugging purposes and not logic switching (for that, use instanceof).

@return node name

*/

public abstract String nodeName();

/**

* Check if this Node has an actual Attributes object.

*/

protected abstract boolean hasAttributes();

public boolean hasParent() {

return parentNode != null;

}

/**

* Get an attribute's value by its key. Case insensitive

*

* To get an absolute URL from an attribute that may be a relative URL, prefix the key with abs,

* which is a shortcut to the {@link #absUrl} method.

*

* E.g.:

* String url = a.attr("abs:href");

*

* @param attributeKey The attribute key.

* @return The attribute, or empty string if not present (to avoid nulls).

* @see #attributes()

* @see #hasAttr(String)

* @see #absUrl(String)

*/

public String attr(String attributeKey) {

Validate.notNull(attributeKey);

if (!hasAttributes())

return EmptyString;

String val = attributes().getIgnoreCase(attributeKey);

if (val.length() > 0)

return val;

else if (attributeKey.startsWith("abs:"))

return absUrl(attributeKey.substring("abs:".length()));

else return "";

}

/**

* Get all of the element's attributes.

* @return attributes (which implements iterable, in same order as presented in original HTML).

*/

public abstract Attributes attributes();

/**

* Set an attribute (key=value). If the attribute already exists, it is replaced. The attribute key comparison is

* case insensitive.

* @param attributeKey The attribute key.

* @param attributeValue The attribute value.

* @return this (for chaining)

*/

public Node attr(String attributeKey, String attributeValue) {

attributes().putIgnoreCase(attributeKey, attributeValue);

return this;

}

//...

}到这里可以归纳一下,document,element,comment etc are all Node instance。

你甚至可以认为,一个Html Document中,一切皆为节点!

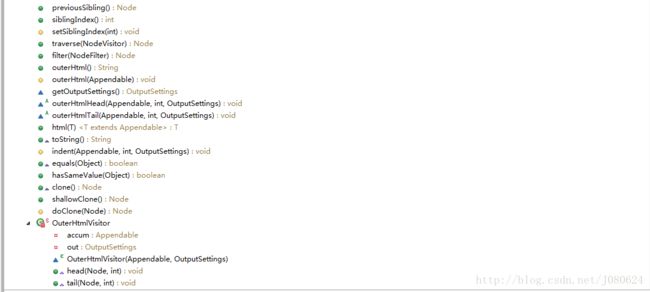

其方法如下:

至此,拥有了这些方法,只要拿到Document,就可以得到任何你想要的Document中的内容。

【6】使用Jsoup进行爬虫

爬虫,听起来很高大上的一个技术。其实不然,尤其是使用Jsoup,稍微研究一下就可以很容易掌握。

主要思路如下:

获取模板url

添加头部用户代理伪装浏览器

很多网站可能会做了防爬虫处理

拿到Document

建立自己的过滤规则

获取自己想要的数据持久化

这里有一个爬虫实战,爬取模板url并进行自动翻页获取指定内容操作。

点击下载:http://download.csdn.net/download/j080624/10214159