Diagnostic Tools

The Java Development Kit (JDK) provides diagnostic tools and troubleshooting tools specific to various operating systems. Custom diagnostic tools can also be developed using the APIs provided by the JDK.

This chapter contains the following sections:

- Diagnostic Tools Overview
- What Are Java Flight Recordings
- How to Produce a Flight Recording
- Inspect a Flight Recording
- The jcmd Utility
- Native Memory Tracking
- JConsole
- The jdb Utility
- The jinfo Utility
- The jmap Utility
- The jps Utility
- The jrunscript Utility
- The jstack Utility
- The jstat Utility
- The visualgc Tool
- Control+Break Handler
- Native Operating System Tools
- Custom Diagnostic Tools
- The jstatd Daemon

Diagnostic Tools Overview

Most of the command-line utilities described in this section are either included in the JDK or native operating system tools and utilities.

Although the JDK command-line utilities are included in the JDK download, it is important to consider that they can be used to diagnose issues and monitor applications that are deployed with the Java Runtime Environment (JRE).

In general, the diagnostic tools and options use various mechanisms to get the information they report. The mechanisms are specific to the virtual machine (VM) implementation, operating systems, and release. Frequently, only a subset of the tools is applicable to a given issue at a particular time. Command-line options that are prefixed with -XX are specific to Java HotSpot VM. See Java HotSpot VM Command-Line Options.

Note:

The -XX options are not part of the Java API and can vary from one release to the next.

The tools and options are divided into several categories, depending on the type of problem that you are troubleshooting. Certain tools and options might fall into more than one category.

- Postmortem diagnostics: These tools and options can be used to diagnose a problem after an application crashes. See Postmortem Diagnostic Tools.

- Hung processes: These tools can be used to investigate a hung or deadlocked process. See Hung Processes Tools.

- Monitoring: These tools can be used to monitor a running application. See Monitoring Tools.

- Other: These tools and options can be used to help diagnose other issues. See Other Tools, Options, Variables, and Properties.

Note:

Some command-line utilities described in this section are experimental, for example the jstack, jinfo, and jmap utilities. Use the newer jcmd utility instead of the earlier jstack, jinfo, and jmap utilities.

Java Mission Control

The Java Mission Control (JMC) is a JDK profiling and diagnostics tools platform for HotSpot JVM.

It is a tool suite for basic monitoring, management, production-time profiling, and diagnostics. Java Mission Control minimizes the performance overhead that is usually an issue with profiling tools.

Java Mission Control (JMC) consists of the JMX Console, Java Flight Recorder (JFR), and several other plug-ins that can be downloaded from within the tool. The JMX Console is a tool for monitoring and managing Java applications, and JFR is a profiling tool. Java Mission Control is also available as a set of plug-ins for the Eclipse IDE.

The following topic describes how to troubleshoot with Java Mission Control.

- Troubleshoot with Java Mission Control

Troubleshoot with Java Mission Control

Troubleshooting activities that you can perform with Java Mission Control.

Java Mission Control allows you to perform the following troubleshooting activities:

- The JMX Console connects to a running JVM, and collects and displays key characteristics in real time.

- Triggers run user-provided custom actions and rules for the JVM.

- Experimental plug-ins such as WLS, DTrace, JOverflow, and others available from the JMC tool provide additional troubleshooting activities. The DTrace plug-in uses an extended DScript language to produce self-describing events, and provides visualization similar to Java Flight Recorder. JOverflow is another plug-in for analyzing heap waste (empty or sparse collections). Use JDK 8 or later for optimal use of the JOverflow plug-in.

- Java Flight Recorder (JFR) in Java Mission Control is available to analyze events. The preconfigured tabs enable you to easily drill down into various areas of common interest, such as code, memory and GC, threads, and I/O. The General Events tab and Operative Events tab together allow drilling down further and rapidly homing in on a set of events with certain properties. The Events tab usually has check boxes to show only events in the Operative set.

- JFR, when used as a plug-in for the JMC client, presents diagnostic information in logically grouped tables, charts, and dials. It enables you to select the range of time and level of detail necessary to focus on the problem.

- The Java Mission Control plug-ins connect to the JVM using the Java Management Extensions (JMX) agent. JMX is a standard API for the management and monitoring of resources such as applications, devices, services, and the Java Virtual Machine.

What Are Java Flight Recordings

The Java Flight Recorder records detailed information about the Java runtime and the Java application running in the Java runtime.

The recording process is done with little overhead. The data is recorded as time-stamped data points called events. Typical events can be threads waiting for locks, garbage collections, periodic CPU usage data, etc.

When creating a flight recording, you select which events to save by choosing a recording template. Some templates save only very basic events and have virtually no impact on performance. Other templates may come with a slight performance overhead, and may also trigger GCs in order to gather additional information. In general, it is rare to see more than a few percent of overhead.

Flight Recordings can be used to debug a wide range of issues from performance problems to memory leaks or heavy lock contention.
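To make the idea of events as time-stamped data points more concrete, the following is a minimal sketch that defines a custom event with the jdk.jfr API, records a few instances, and reads them back from the dumped file. It assumes JDK 11 or later, where the jdk.jfr module is generally available. The class name FlightEventSketch, the event name example.OrderProcessed, and its orderId field are hypothetical and exist only for illustration.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;

import jdk.jfr.Event;
import jdk.jfr.Label;
import jdk.jfr.Name;
import jdk.jfr.Recording;
import jdk.jfr.consumer.RecordingFile;

public class FlightEventSketch {

    // Hypothetical application event; the name and field are illustrative only.
    @Name("example.OrderProcessed")
    @Label("Order Processed")
    static class OrderProcessed extends Event {
        @Label("Order Id")
        long orderId;
    }

    // Records `orders` custom events, dumps them to a temporary file,
    // and returns how many of them can be read back from that file.
    static long recordAndCount(int orders) {
        try {
            Path file = Files.createTempFile("flight-event-sketch", ".jfr");
            try (Recording recording = new Recording()) {
                recording.enable(OrderProcessed.class);
                recording.start();
                for (int i = 0; i < orders; i++) {
                    OrderProcessed event = new OrderProcessed();
                    event.begin();      // start time stamp of the event
                    event.orderId = i;
                    event.commit();     // end time stamp; hands the event to JFR
                }
                recording.stop();
                recording.dump(file);
            }
            long count = RecordingFile.readAllEvents(file).stream()
                    .filter(e -> e.getEventType().getName().equals("example.OrderProcessed"))
                    .count();
            Files.deleteIfExists(file);
            return count;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("Events read back: " + recordAndCount(3));
    }
}
```

The built-in events mentioned above (lock waits, garbage collections, CPU usage) are produced by the JVM itself; a custom event like this one simply ends up in the same recording next to them.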

The following topic describes the types of flight recordings that you can produce.

- Types of Recordings

Types of Recordings

The two types of flight recordings are continuous recordings and profiling recordings.

- Continuous recordings: A continuous recording is a recording that is always on and saves, for example, the last 6 hours of data. If your application runs into any issues, then you can dump the data from, for example, the last hour and see what happened at the time of the problem.

  The default setting for a continuous recording is to use a recording profile with low overhead. This profile does not collect heap statistics or allocation profiling, but still gathers a lot of useful data.

  A continuous recording is well suited to run at all times, and is very helpful when debugging issues that happen rarely. The recording can be dumped manually using either jcmd or JMC. You can also set a trigger in JMC to dump the flight recording when specific criteria are fulfilled.

- Profiling recordings: A profiling recording is a recording that is turned on, runs for a set amount of time, and then stops. Usually, a profiling recording has more events enabled and may have a slightly bigger performance effect. The events that are turned on can be modified depending on your use of the profiling recording.

  Typical use cases for profiling recordings are as follows:

  - Profile which methods are run the most and where most objects are created.

  - Look for classes that use more and more heap, which indicates a memory leak.

  - Look for bottlenecks due to synchronization, among many other such use cases.

  A profiling recording gives a lot of information even when you are not troubleshooting a specific issue. It gives you a good view of the application and can help you find bottlenecks or areas that need improvement.

Note:

The typical overhead is around 2 percent, so you can run a profiling recording in your production environment (which is one of the main use cases for JFR), unless you are extremely sensitive to performance or latencies.
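The difference between the two recording types can also be sketched programmatically. The example below is an illustration only, assuming JDK 11 or later with the jdk.jfr API and the two predefined configurations named default and profile. The class name RecordingTypesSketch and the recording name are hypothetical; the 6-hour and 60-second values mirror the examples in this chapter.

```java
import java.io.IOException;
import java.text.ParseException;
import java.time.Duration;

import jdk.jfr.Configuration;
import jdk.jfr.Recording;

public class RecordingTypesSketch {

    // Continuous recording: low-overhead settings, keeps roughly the last 6 hours on disk.
    static Recording continuous() {
        Recording recording = new Recording(load("default"));
        recording.setName("continuous-sketch");   // hypothetical name
        recording.setToDisk(true);
        recording.setMaxAge(Duration.ofHours(6));
        return recording;
    }

    // Profiling recording: more events enabled, stops by itself after 60 seconds.
    static Recording profiling() {
        Recording recording = new Recording(load("profile"));
        recording.setDuration(Duration.ofSeconds(60));
        return recording;
    }

    // Loads one of the predefined configurations shipped with the JDK.
    private static Configuration load(String name) {
        try {
            return Configuration.getConfiguration(name);
        } catch (IOException | ParseException e) {
            throw new IllegalStateException("Cannot load JFR configuration " + name, e);
        }
    }

    public static void main(String[] args) {
        try (Recording c = continuous(); Recording p = profiling()) {
            System.out.println("continuous max age: " + c.getMaxAge());
            System.out.println("profiling duration: " + p.getDuration());
        }
    }
}
```

Neither recording is started here; calling start() on the returned object would begin collecting data with the chosen settings.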

How to Produce a Flight Recording

The following sections describe three ways to produce a flight recording.

- Use Java Mission Control to Produce a Flight Recording
- Use Startup Flags at the Command Line to Produce a Flight Recording
- Use Triggers for Automatic Recordings

Use Java Mission Control to Produce a Flight Recording

Use Java Mission Control (JMC) to easily manage flight recordings.

Prerequisites:

To start, find your server in the JVM Browser in the leftmost frame, as shown in Figure 2-1.

Figure 2-1 Java Mission Control - Find Server


By default, any local running JVMs will be listed. Remote JVMs (running as the same effective user as the user running JMC) must be set up to use a remote JMX agent. Then, click the New JVM Connection button, and enter the network details.

The Java Flight Recorder can be enabled during runtime.

The following are three ways to use Java Mission Control to produce a flight recording:

- Inspect running recordings: Expand the node in the JVM Browser to the recordings that are running. Figure 2-2 shows both a running continuous recording (with the infinity sign) and a timed profiling recording.

Figure 2-2 Java Mission Control - Running Recordings

Right-click any of the recordings to dump, edit, or stop the recording. Stopping a profiling recording still produces a recording file, whereas closing a profiling recording discards the recording.

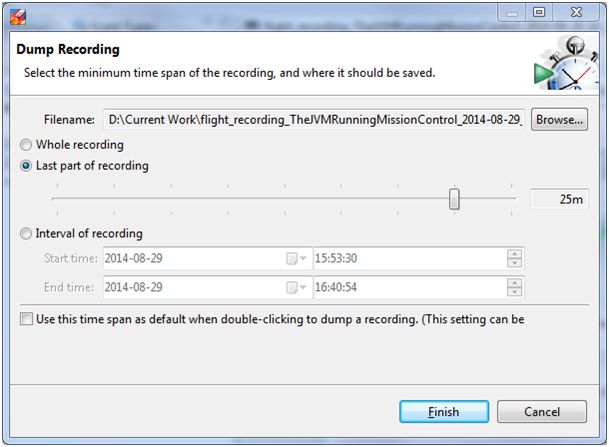

- Dump continuous recordings: Right-click a continuous recording in the JVM Browser and then select to dump it to a file. In the dialog box that comes up, select to dump all available data or only the last part of the recording, as shown in Figure 2-3.

Figure 2-3 Java Mission Control - Dump Continuous Recordings

- Start a new recording: To start a new recording, right-click the JVM you want to record on and select Start Flight Recording. A window is then displayed, as shown in Figure 2-4.

Figure 2-4 Java Mission Control - Start Flight Recordings

Select either Time fixed recording (profiling recording) or Continuous recording, as shown in Figure 2-4. For continuous recordings, you also specify the maximum size or age of events you want to save.

You can also select Event settings. There is an option to create your own templates, but for 99 percent of all use cases you want to select either the Continuous template (for very low overhead recordings) or the Profiling template (for more data and slightly more overhead). Note: The typical overhead for a profiling recording is about 2 percent.

When done, click Next. The next screen, as shown in Figure 2-5, gives you a chance to modify the template for different use cases.

Figure 2-5 Java Mission Control - Event Options for Profiling

The default settings give a good balance between data and performance. In some cases, you may want to add extra events. For example, if you are investigating a memory leak or want to see the objects that take up the most Java heap, enable Heap Statistics. This triggers two old collections, one at the start and one at the end of the recording, which adds some extra latency. You can also select to show all exceptions being thrown, even the ones that are caught. For some applications, this generates a lot of events.

The Threshold value is the minimum duration an event must have in order to be recorded. For example, by default, synchronization events above 10 ms are gathered. This means that if a thread waits for a lock for more than 10 ms, an event is saved. You can lower this value to get more detailed data for short contentions.

The Thread Dump setting gives you an option to do periodic thread dumps. These are normal textual thread dumps, like the ones you would get using the diagnostic command Thread.print, or by using the jstack tool. The thread dumps complement the events.

Use Startup Flags at the Command Line to Produce a Flight Recording

Use startup flags at the command line to start a profiling recording or a continuous recording, or use diagnostic commands to control recordings.

For a complete description of JFR flags, see Advanced Runtime Options in the Java Platform, Standard Edition Tools Reference.

The following are three ways to produce a flight recording from the command line.

- Start a profiling recording: You can configure a time fixed recording at the start of the application using the -XX:StartFlightRecording option. The following example illustrates how to run the MyApp application and start a 60-second recording 20 seconds after starting the JVM, which will be saved to a file named myrecording.jfr:

    java -XX:StartFlightRecording=delay=20s,duration=60s,name=myrecording,filename=C:\TEMP\myrecording.jfr,settings=profile MyApp

  The settings parameter takes either the path to or the name of a template. Default templates are located in the jre/lib/jfr folder. The two standard profiles are:

  - default: a low overhead setting made primarily for continuous recordings.

  - profile: gathers more data and is primarily for profiling recordings.

- Start a continuous recording: You can also start a continuous recording from the command line using -XX:FlightRecorderOptions. These flags start a continuous recording that can later be dumped if needed. The following example illustrates a continuous recording. The temporary data will be saved to disk, to the /tmp folder, and 6 hours of data will be stored.

    java -XX:FlightRecorderOptions=defaultrecording=true,disk=true,repository=/tmp,maxage=6h,settings=default MyApp

  Note:

  When you actually dump the recording, you specify a new location for the dumped file, so the files in the repository are only temporary.

- Use diagnostic commands: You can also control recordings by using Java command-line diagnostic commands. The simplest way to execute a diagnostic command is to use the jcmd tool located in the Java installation directory. For more details, see The jcmd Utility.
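The -XX:StartFlightRecording value is a comma-separated list of key=value pairs, which is easy to get wrong when launch commands are assembled by scripts or tools. The following small sketch builds the option string from a map. The class and method names are hypothetical, and the parameters are the ones from the profiling example above; it only formats a string and does not validate that the JVM accepts the keys.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

public class StartFlightRecordingOption {

    // Joins the parameters as key=value pairs, in insertion order,
    // behind the -XX:StartFlightRecording= prefix.
    static String build(Map<String, String> parameters) {
        return parameters.entrySet().stream()
                .map(e -> e.getKey() + "=" + e.getValue())
                .collect(Collectors.joining(",", "-XX:StartFlightRecording=", ""));
    }

    // The parameters used in the profiling example of this section.
    static Map<String, String> profilingExample() {
        Map<String, String> parameters = new LinkedHashMap<>();
        parameters.put("delay", "20s");
        parameters.put("duration", "60s");
        parameters.put("name", "myrecording");
        parameters.put("filename", "C:\\TEMP\\myrecording.jfr");
        parameters.put("settings", "profile");
        return parameters;
    }

    public static void main(String[] args) {
        // Prints the option that would be placed between "java" and "MyApp".
        System.out.println(build(profilingExample()));
    }
}
```

A LinkedHashMap is used so that the parameters appear in the order in which they were added, which keeps the generated command line readable and stable.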

Use Triggers for Automatic Recordings

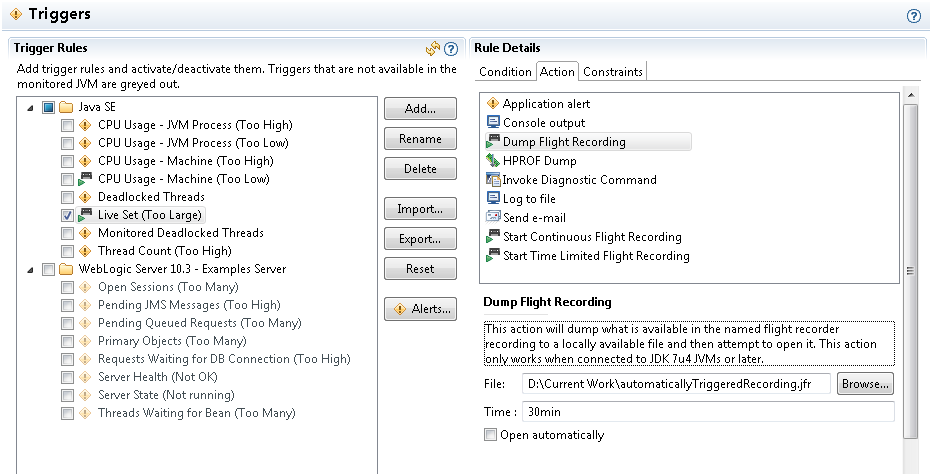

You can set up Java Mission Control to automatically start or dump a flight recording if a condition is met. This is done from the JMX console. To start the JMX console, find your application in the JVM Browser, right-click it, and select Start JMX Browser.

Select the Triggers tab at the bottom of the screen, as shown in Figure 2-6.

Figure 2-6 Java Mission Control - Automatic Recordings


You can choose to create a trigger on any MBean in the application. There are several default triggers set up for common conditions such as high CPU usage, deadlocked threads, or a live set that is too large. Select Add to choose any MBean in the application, including your own application-specific ones. When you select your trigger, you can also select the conditions that must be met. For more information, click the question mark in the top right corner to see the built-in help.

Click the boxes next to the triggers to have several triggers running.

Once you have selected your condition, click the Action tab. Then, select what to do when the condition is met. Finally, choose to either dump a continuous recording or to start a time-limited flight recording as shown in Figure 2-7.

Figure 2-7 Java Mission Control - Use Triggers

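A trigger is essentially a condition evaluated against an MBean attribute. The following sketch shows what such a check could look like in plain Java, using the standard platform MBean java.lang:type=Memory and its HeapMemoryUsage attribute. It is not how JMC implements triggers; the class name, method names, and the threshold are hypothetical, and the action to take when the condition is met is left to the caller.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;

import javax.management.JMException;
import javax.management.MBeanServer;
import javax.management.ObjectName;
import javax.management.openmbean.CompositeData;

public class HeapTriggerSketch {

    // Reads the used heap size, in bytes, from the platform Memory MBean.
    static long usedHeapBytes() {
        try {
            MBeanServer server = ManagementFactory.getPlatformMBeanServer();
            ObjectName memory = new ObjectName("java.lang:type=Memory");
            CompositeData data = (CompositeData) server.getAttribute(memory, "HeapMemoryUsage");
            return MemoryUsage.from(data).getUsed();
        } catch (JMException e) {
            throw new IllegalStateException("Cannot read HeapMemoryUsage", e);
        }
    }

    // The trigger condition: true when used heap exceeds the given threshold.
    static boolean conditionMet(long thresholdBytes) {
        return usedHeapBytes() > thresholdBytes;
    }

    public static void main(String[] args) {
        long threshold = 512L * 1024 * 1024; // hypothetical 512 MB threshold
        if (conditionMet(threshold)) {
            System.out.println("Condition met: this is where a recording would be dumped or started.");
        } else {
            System.out.println("Used heap is below the threshold: " + usedHeapBytes() + " bytes");
        }
    }
}
```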

Inspect a Flight Recording

This section describes how to get a sample JFR recording to inspect, and the tabs in Java Mission Control that you can use to analyze flight recordings.

This section contains the following topics:

- How to Get a Sample JFR to Inspect
- Range Navigator
- General Tab
- Memory Tab
- Code Tab
- Threads Tab
- I/O Tab
- System Tab
- Events Tab

How to Get a Sample JFR to Inspect

After you create a flight recording, you can open it in Mission Control. An easy way to look at a flight recording is:

- Open Mission Control and select the JVM Browser tab.

- Select The JVM Running Mission Control option to create a short recording.

Open a flight recording to see several main tabs such as General, Memory, Code, Threads, I/O, System, and Events. You can also have other main tabs if any plug-ins are installed. Each of these main tabs has subtabs. Click the question mark to view the built-in help section for the main tabs and subtabs.

Range Navigator

Inspect the flight recordings using the range navigator.

Each tab has a range navigator at the top of the view.

Figure 2-8 Inspect Flight Recordings - Range Navigator


The vertical bars in Figure 2-8 represent the events in the recording. The higher the bar, the more events there are at that time. You can drag the edges of the selected time range to zoom in or out in the recording. Double-click the range navigator to zoom out and view the entire recording. Select the Synchronize Selection check box to make all the subtabs use the same zoom level.

See Using the Range Navigator in the built-in help for more information. The events are named as per the tab name.

General Tab

Inspect flight recordings in the General tab.

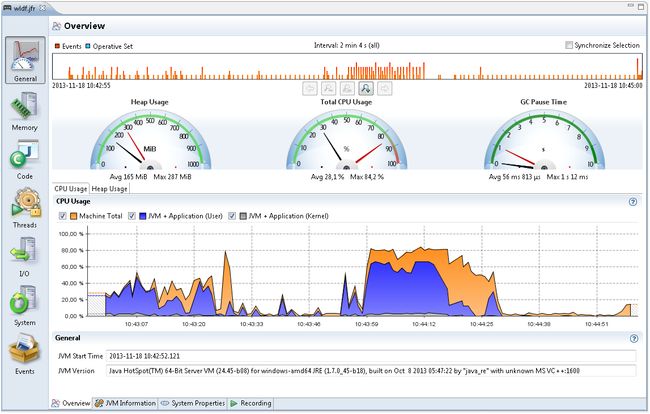

The General Tab contains a few subtabs that describe the general application. The first subtab is Overview, which shows some basic information such as the maximum heap usage, total CPU usage, and GC pause time, as shown in Figure 2-9.

Figure 2-9 Inspect Flight Recordings - General Tab


Also, look at the CPU Usage over time, for both the Application Usage and the Machine Total. This tab is a good place to start when something is obviously wrong in the application. For example, watch for CPU usage spiking near 100 percent, CPU usage that is too low, or garbage collection pauses that are too long.

Note: A profiling recording started with Heap Statistics gets two old collections, one at the start and one at the end of the recording, which may take longer than the other collections.

The other subtabs are JVM Information, which shows information about the JVM and its start parameters; System Properties, which shows all system properties set; and Recording, which shows information about the specific recording, such as the events that are turned on. Click the question marks for built-in detailed information about all tabs and subtabs.

Memory Tab

Inspect the flight recordings in the Memory tab.

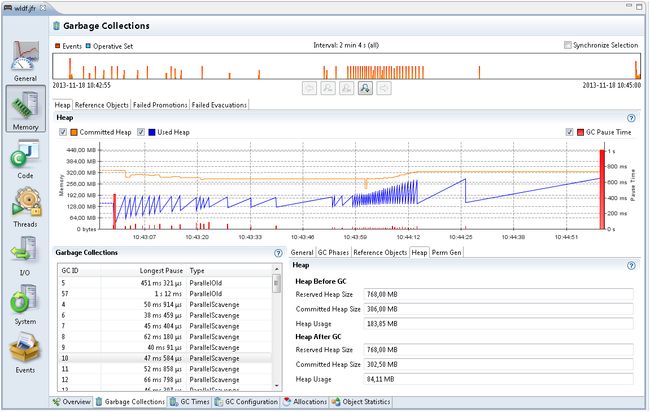

The Memory tab contains information about Garbage Collections, Allocation patterns and Object Statistics. This tab is specifically helpful to debug memory leaks as well as for tuning the GC.

The Overview tab shows some general information about the memory usage and some statistics over garbage collections. Note: The graph scale in the Overview tab goes up to the available physical memory in the machine; therefore, in some cases the Java heap may take up only a small section at the bottom.

The following three subtabs of the Memory tab are described:

- Garbage Collection tab: The Garbage Collection tab shows memory usage over time and information about all garbage collections.

Figure 2-10 Inspect Flight Recordings - Garbage Collections

As shown in Figure 2-10, the spiky pattern of the heap usage is perfectly normal. In most applications, temporary objects are allocated all the time. Once a condition is met, a Garbage Collection (GC) is triggered and all the objects no longer used are removed. Therefore, the heap usage increases steadily until a GC is triggered, then it drops suddenly.

Most garbage collectors in Java also perform some kind of smaller garbage collection. An old GC goes through the entire Java heap, while the other GCs might look at only part of the heap. The heap usage after an old collection is the memory the application is actually using, which is called the live set.

A flight recording generated with Heap Statistics enabled starts and ends with an old GC. Select that old GC in the list of GCs, and then choose the General tab to see the GC Reason: Heap Inspection Initiated GC. These GCs usually take slightly longer than other GCs.

To check for memory leaks, compare the Heap After GC value of the first and the last old GC. There could be a memory leak when this value increases over time.

The GC Times tab has information about the time spent doing GCs and time when the application is completely paused due to GCs. The GC Configuration tab has GC configuration information. For more details about these tabs, click the question mark in the top right corner to see the built-in help.

- Allocations tab: Figure 2-11 shows a selection of all memory allocations made. Small objects in Java are allocated in a TLAB (Thread Local Allocation Buffer). A TLAB is a small memory area where new objects are allocated. Once a TLAB is full, the thread gets a new one. Logging all memory allocations would add overhead; therefore, only the allocations that triggered a new TLAB are logged. Larger objects are allocated outside TLABs, and these allocations are also logged.

Figure 2-11 Inspect Flight Recordings - Allocations Tab

To estimate the memory allocation for each class, select the Allocation in new TLAB tab and then select the Allocations tab. These allocations are object allocations that happen to trigger the new TLABs. The char arrays trigger the most new TLABs. How much memory is allocated as char arrays is not known. The size of the TLABs is a good estimate for the memory allocated by char arrays.

Figure 2-11 is an example of char arrays allocating the most memory. Click one of the classes to see the Stack Trace of these allocations. The example recording shows that 44 percent of all allocation pressure comes from char arrays and 27 percent comes from Arrays.copyOfRange, which is called from StringBuilder.toString. StringBuilder.toString is in turn usually called by Throwable.printStackTrace and StackTraceElement.toString. Expand further to see how these methods are called.

Note: The more temporary objects the application allocates, the more the application must garbage collect. The Allocations tab helps you find the most allocations and reduce the GC pressure in your application. Look at the Allocation outside TLAB tab to see large memory allocations, which usually cause less memory pressure than the allocations in the Allocation in new TLAB tab.

- Object Statistics tab: The Object Statistics tab shows the classes that account for most of the live set. Read the description of the Garbage Collection subtab of the Memory tab to understand what a live set is. Figure 2-12 shows heap statistics for a flight recording. Enable Heap Statistics for a flight recording to show the data. The Top Growers tab at the bottom shows how each object type increased in size during a flight recording. An object type that increases a lot in size may indicate a memory leak; however, a small variance is normal. In particular, investigate the top growers among non-standard Java classes.

Figure 2-12 Inspect Flight Recordings - Object Statistics Tab


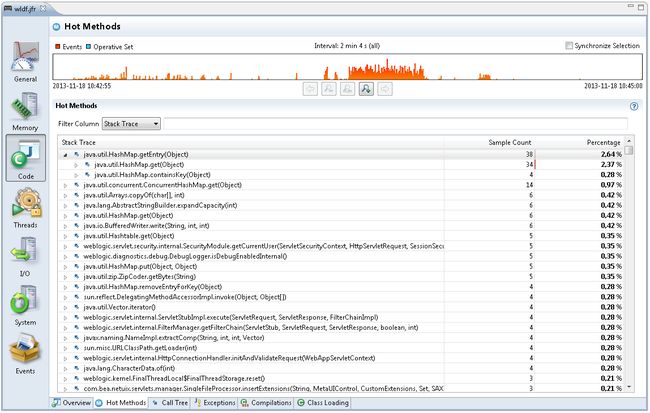
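The comparison of Heap After GC values described for the Garbage Collection tab can be expressed as a small calculation. The sketch below is a simplified heuristic, not something JMC computes in this form: it compares the live set after the first and the last old GC and flags growth above a tolerance. The class name, method names, tolerance, and sample values are all hypothetical.

```java
public class LiveSetTrend {

    // Relative growth, in percent, between the first and last Heap After GC values.
    static double growthPercent(long firstHeapAfterGc, long lastHeapAfterGc) {
        if (firstHeapAfterGc <= 0) {
            throw new IllegalArgumentException("first value must be positive");
        }
        return 100.0 * (lastHeapAfterGc - firstHeapAfterGc) / firstHeapAfterGc;
    }

    // Flags a possible leak when the live set grew by more than the tolerance.
    // A small variance is normal, so the tolerance should not be zero.
    static boolean looksLikeLeak(long[] heapAfterOldGc, double tolerancePercent) {
        if (heapAfterOldGc.length < 2) {
            return false; // not enough old GCs to compare
        }
        long first = heapAfterOldGc[0];
        long last = heapAfterOldGc[heapAfterOldGc.length - 1];
        return growthPercent(first, last) > tolerancePercent;
    }

    public static void main(String[] args) {
        long[] samplesMb = {100, 120, 150}; // hypothetical Heap After GC values in MB
        System.out.println("growth: " + growthPercent(samplesMb[0], samplesMb[2]) + " percent");
        System.out.println("possible leak: " + looksLikeLeak(samplesMb, 10.0));
    }
}
```

A positive result is only a hint; the Top Growers data in the Object Statistics tab is what points to the object types involved.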

Code Tab

Inspect flight recordings in the Code tab.

The Code tab contains information about where the application spends most of its time. The Overview subtab shows the packages and classes that spent the most execution time. This data comes from sampling. JFR takes samples of threads running at intervals. Only the threads running actual code are sampled; the threads that are sleeping, waiting for locks or I/O are not shown.

To see more details about the application time for running the actual code, look at the Hot Methods subtab.

Figure 2-13 Inspect Flight Recordings - Code Tab

Description of "Figure 2-13 Inspect Flight Recordings - Code Tab"

Figure 2-13 shows the methods that are sampled the most. Expand the samples to see from where they are called. If HashMap.getEntry is called a lot, then expand this node until you find the method that calls it the most. This is the best tab to use to find bottlenecks in the application.

The Call Tree subtab shows the same events, but starts from the bottom; for example, from Thread.run.

The Exceptions subtab shows any exceptions thrown. By default, only Errors are logged, but you can change this setting to include All Exceptions when starting a new recording.

The Compilations subtab shows the methods compiled over time as the application was running.

The Class Loading subtab shows the number of loaded classes, actual loaded classes, and unloaded classes over time. This subtab shows information only when Class Loading events were enabled at the start of the recording.

For more details about these tabs, click the question mark in the top right corner to see the built-in help.
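The sampling idea behind the Hot Methods data can be illustrated with a rough sketch that periodically looks at the top stack frame of each runnable thread and counts how often each method is seen. This is only an approximation of the concept using Thread.getAllStackTraces, not the mechanism JFR uses: among other differences, the RUNNABLE state also covers threads blocked in native I/O, which JFR would not report. The class and method names are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

public class HotMethodSampler {

    // Takes `rounds` samples, `intervalMillis` apart, and counts the top frame
    // (class.method) of every thread that is in the RUNNABLE state.
    static Map<String, Integer> sample(int rounds, long intervalMillis) {
        Map<String, Integer> counts = new HashMap<>();
        for (int round = 0; round < rounds; round++) {
            for (Map.Entry<Thread, StackTraceElement[]> entry : Thread.getAllStackTraces().entrySet()) {
                StackTraceElement[] stack = entry.getValue();
                if (entry.getKey().getState() == Thread.State.RUNNABLE && stack.length > 0) {
                    String top = stack[0].getClassName() + "." + stack[0].getMethodName();
                    counts.merge(top, 1, Integer::sum);
                }
            }
            try {
                Thread.sleep(intervalMillis);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt and stop sampling
                break;
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        // Print the sampled methods with their hit counts.
        sample(20, 10).forEach((method, hits) -> System.out.println(hits + "  " + method));
    }
}
```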

Threads Tab

Inspect flight recordings in the Threads tab.

The Threads tab contains information about threads, lock contention and other latencies.

The Overview subtab shows CPU usage and the number of threads over time.

The Hot Threads sub tab shows the threads that do most of the code execution. This information is based on the same sampling data as the Hot Methods subtab in the Code tab.

The Contention tab is useful for finding bottlenecks due to lock contention.

Figure 2-14 Inspect Flight Recordings - Contention Tab

Figure 2-14 shows objects that are the most waited for due to synchronization. Select a Class to see the Stack Trace of the wait time for each object. These pauses are generally caused by synchronized methods, where another thread holds the lock.

Note:

By default, only synchronization events longer than 10 ms will be recorded, but you can lower this threshold when starting a recording.

The Latencies subtab shows other sources of latencies; for example, calling sleep or wait, reading from sockets, or waiting for file I/O.

The Thread Dumps subtab shows the periodic thread dumps that can be triggered in the recording.

The Lock Instances subtab shows the exact instances of objects that are waited upon the most due to synchronization.

For more details about these tabs, click the question mark in the top right corner to see the built-in help.

I/O Tab

The I/O tab shows information on file reads, file writes, socket reads, and socket writes.

How helpful this tab is depends on the application; it is especially useful when an I/O operation takes a long time.

Note:

By default, only events longer than 10 ms are shown. The thresholds can be modified when creating a new recording.

System Tab

The System tab gives detailed information about the CPU, Memory and OS of the machine running the application.

It also shows environment variables and any other processes running at the same time as the JVM.

Events Tab

The Events tab shows all the events in the recording.

This is an advanced tab that can be used in many different ways. For more details about this tab, click the question mark in the top right corner to see the built-in help.

The jcmd Utility

The jcmd utility is used to send diagnostic command requests to the JVM. These requests are useful for controlling Java Flight Recordings, and for troubleshooting and diagnosing the JVM and Java applications.

jcmd must be used on the same machine where the JVM is running, and must have the same effective user and group identifiers that were used to launch the JVM.

A special command, jcmd <process id/main class> PerfCounter.print, prints all performance counters in the process.

The command jcmd <process id/main class> <command> [options] sends the command to the JVM.

The following example shows diagnostic command requests to the JVM using the jcmd utility.

    > jcmd
    5485 sun.tools.jcmd.JCmd
    2125 MyProgram

    > jcmd MyProgram help (or "jcmd 2125 help")
    2125:
    The following commands are available:
    JFR.configure
    JFR.stop
    JFR.start
    JFR.dump
    JFR.check
    VM.log
    VM.native_memory
    VM.check_commercial_features
    VM.unlock_commercial_features
    ManagementAgent.status
    ManagementAgent.stop
    ManagementAgent.start_local
    ManagementAgent.start
    Compiler.directives_clear
    Compiler.directives_remove
    Compiler.directives_add
    Compiler.directives_print
    VM.print_touched_methods
    Compiler.codecache
    Compiler.codelist
    Compiler.queue
    VM.classloader_stats
    Thread.print
    JVMTI.data_dump
    JVMTI.agent_load
    VM.stringtable
    VM.symboltable
    VM.class_hierarchy
    VM.systemdictionary
    GC.class_stats
    GC.class_histogram
    GC.heap_dump
    GC.finalizer_info
    GC.heap_info
    GC.run_finalization
    GC.run
    VM.info
    VM.uptime
    VM.dynlibs
    VM.set_flag
    VM.flags
    VM.system_properties
    VM.command_line
    VM.version
    help

    For more information about a specific command use 'help <command>'.

    > jcmd MyProgram help Thread.print
    2125:
    Thread.print
    Print all threads with stacktraces.

    Impact: Medium: Depends on the number of threads.

    Permission: java.lang.management.ManagementPermission(monitor)

    Syntax : Thread.print [options]

    Options: (options must be specified using the <key> or <key>=<value> syntax)
            -l : [optional] print java.util.concurrent locks (BOOLEAN, false)

    > jcmd MyProgram Thread.print
    2125:
    2018-07-06 12:43:46
    Full thread dump Java HotSpot(TM) 64-Bit Server VM (10.0.1+10 mixed mode):
    ...

The following sections describe some useful commands and troubleshooting techniques with the jcmd utility:

- Useful Commands for the jcmd Utility
- Troubleshoot with the jcmd Utility
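Diagnostic commands can also be reached from Java code, which may be useful when writing a custom diagnostic tool. The following sketch runs the equivalent of Thread.print inside the current JVM through the HotSpot-specific MBean com.sun.management:type=DiagnosticCommand. Both the MBean name and the operation name threadPrint are assumptions that hold for HotSpot-based JDKs and may not exist on other virtual machines; the class name DiagnosticCommandSketch is hypothetical.

```java
import java.lang.management.ManagementFactory;

import javax.management.JMException;
import javax.management.ObjectName;

public class DiagnosticCommandSketch {

    // Runs the Thread.print diagnostic command in this JVM and returns its text output.
    // Options are passed as they would be on the jcmd command line, for example "-l".
    static String threadPrint(String... options) {
        try {
            // HotSpot-specific MBean (assumption: running on a HotSpot-based JDK).
            ObjectName name = new ObjectName("com.sun.management:type=DiagnosticCommand");
            Object result = ManagementFactory.getPlatformMBeanServer().invoke(
                    name,
                    "threadPrint",                               // MBean operation for Thread.print
                    new Object[] { options },                    // single argument: the option array
                    new String[] { String[].class.getName() });  // signature: one String[] parameter
            return (String) result;
        } catch (JMException e) {
            throw new IllegalStateException("Diagnostic command failed", e);
        }
    }

    public static void main(String[] args) {
        // Roughly comparable to running "jcmd <pid> Thread.print" against this process.
        System.out.println(threadPrint());
    }
}
```

Unlike jcmd, this approach runs in the target process itself, so it needs no attach step but also cannot be used against a process that is already hung.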

Useful Commands for the jcmd Utility

The available diagnostic commands depend on the JVM being used. Use jcmd <process id/main class> help to see all available options.

The following are some of the most useful commands of the jcmd tool:

- Print full HotSpot and JDK version ID.

    jcmd <process id/main class> VM.version

- Print all the system properties set for a VM.

  There can be several hundred lines of information displayed.

    jcmd <process id/main class> VM.system_properties

- Print all the flags used for a VM.

  Even if you have provided no flags, some of the default values will be printed, for example initial and maximum heap size.

    jcmd <process id/main class> VM.flags

- Print the uptime in seconds.

    jcmd <process id/main class> VM.uptime

- Create a class histogram.

  The results can be rather verbose, so you can redirect the output to a file. Both internal and application-specific classes are included in the list. Classes are listed in descending order of memory use, with the classes taking the most memory at the top.

    jcmd <process id/main class> GC.class_histogram

- Create a heap dump.

    jcmd <process id/main class> GC.heap_dump filename=Myheapdump

  This is the same as using jmap -dump:file=<file> <pid>, but jcmd is the recommended tool to use.

- Create a heap histogram.

    jcmd <process id/main class> GC.class_histogram filename=Myheaphistogram

  This is the same as using jmap -histo <pid>, but jcmd is the recommended tool to use.

- Print all threads with stack traces.

    jcmd <process id/main class> Thread.print
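Some of the information printed by these commands is also available inside the JVM through the standard java.lang.management API. The sketch below gathers a few values comparable to VM.version, VM.uptime, VM.command_line, and VM.system_properties. It is an in-process approximation rather than a replacement for jcmd, and the class name and map keys are hypothetical.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.RuntimeMXBean;
import java.util.LinkedHashMap;
import java.util.Map;

public class RuntimeInfoSketch {

    // Collects basic runtime facts about the current JVM.
    static Map<String, String> snapshot() {
        RuntimeMXBean runtime = ManagementFactory.getRuntimeMXBean();
        Map<String, String> info = new LinkedHashMap<>();
        info.put("vm.name", runtime.getVmName());
        info.put("vm.version", runtime.getVmVersion());
        // getUptime() reports milliseconds; convert to seconds as VM.uptime does.
        info.put("uptime.seconds", String.valueOf(runtime.getUptime() / 1000.0));
        info.put("input.arguments", String.join(" ", runtime.getInputArguments()));
        info.put("system.properties.count", String.valueOf(runtime.getSystemProperties().size()));
        return info;
    }

    public static void main(String[] args) {
        snapshot().forEach((key, value) -> System.out.println(key + " = " + value));
    }
}
```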

Troubleshoot with the jcmd Utility

Use the jcmd utility to troubleshoot.

The jcmd utility provides the following troubleshooting options:

- Start a recording.

For example, to start a 2-minute recording on the running Java process with the identifier 7060 and save it to C:\TEMP\myrecording.jfr, use the following:

jcmd 7060 JFR.start name=MyRecording settings=profile delay=20s duration=2m filename=C:\TEMP\myrecording.jfr

- Check a recording.

The JFR.check diagnostic command checks a running recording. For example:

jcmd 7060 JFR.check

- Stop a recording.

The JFR.stop diagnostic command stops a running recording and has the option to discard the recording data. For example:

jcmd 7060 JFR.stop

- Dump a recording.

The JFR.dump diagnostic command writes the data collected so far by a running recording to a file, without stopping the recording. For example:

jcmd 7060 JFR.dump name=MyRecording filename=C:\TEMP\myrecording.jfr

- Create a heap dump.

The preferred way to create a heap dump is

jcmd <process id/main class> GC.heap_dump filename=Myheapdump

- Create a heap histogram.

The preferred way to create a heap histogram is

jcmd <process id/main class> GC.class_histogram filename=Myheaphistogram
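A recording can also be controlled from inside the application through the jdk.jfr API instead of jcmd. The following is a rough sketch under stated assumptions: it assumes JDK 11 or later with the jdk.jfr module available, the class name InProcessRecording is hypothetical, and Thread.sleep only stands in for the workload you want to observe.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import jdk.jfr.Configuration;
import jdk.jfr.Recording;

/** Hypothetical example class: start, stop, and dump a flight recording in-process. */
final class InProcessRecording {

    /** Records for roughly the given number of milliseconds and writes the data to target. */
    static Path record(Path target, long millis) throws Exception {
        // "profile" is a predefined configuration shipped with the JDK,
        // the same one selected by settings=profile on the jcmd command line.
        Configuration profile = Configuration.getConfiguration("profile");
        try (Recording recording = new Recording(profile)) {
            recording.setName("MyRecording");
            recording.start();        // comparable to JFR.start
            Thread.sleep(millis);     // stand-in for the workload being observed
            recording.stop();         // comparable to JFR.stop
            recording.dump(target);   // write the collected data to a file
        }
        return target;
    }

    public static void main(String[] args) throws Exception {
        Path file = record(Files.createTempFile("myrecording", ".jfr"), 1000);
        System.out.println("Wrote " + Files.size(file) + " bytes to " + file);
    }
}
```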

Native Memory Tracking

Native Memory Tracking (NMT) is a Java HotSpot VM feature that tracks the internal memory usage of the VM.

Since NMT doesn't track memory allocations by non-JVM code, you may have to use tools supported by the operating system to detect memory leaks in native code.

The following sections describe how to monitor VM internal memory allocations and diagnose VM memory leaks.

-

Use NMT to Detect a Memory Leak

-

How to Monitor VM Internal Memory

-

NMT Memory Categories

Use NMT to Detect a Memory Leak

Procedure to use Native Memory Tracking to detect memory leaks.

Follow these steps to detect a memory leak:

- Start the JVM with summary or detail tracking using the command line option: -XX:NativeMemoryTracking=summary or -XX:NativeMemoryTracking=detail.

- Establish an early baseline. Use the NMT baseline feature to get a baseline to compare against during development and maintenance by running:

jcmd <pid> VM.native_memory baseline

- Monitor memory changes using:

jcmd <pid> VM.native_memory detail.diff

- If the application leaks a small amount of memory, then it may take a while to show up.
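When the diff output is collected repeatedly, it can help to extract only the categories whose committed memory has grown since the baseline. The following is a minimal sketch of that idea. The class NmtDiffScanner is hypothetical, and the regular expression assumes category lines of the form shown in the summary diff sample in this chapter, for example "- Class (reserved=6578KB +3KB, committed=4530KB +3KB)". Other JDK releases may format these lines differently.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper that scans NMT summary.diff text for growing categories. */
final class NmtDiffScanner {

    // Matches a category line such as
    // "- Class (reserved=6578KB +3KB, committed=4530KB +3KB)".
    // The "+3KB" style deltas are absent when a category has not changed.
    private static final Pattern CATEGORY = Pattern.compile(
            "^-\\s*(.+?)\\s*\\(reserved=\\d+KB(?:\\s+[+-]\\d+KB)?,"
            + "\\s*committed=\\d+KB(?:\\s+([+-]\\d+)KB)?\\)");

    /** Returns category name -> committed delta in KB, for categories that grew. */
    static Map<String, Long> growingCategories(String summaryDiff) {
        Map<String, Long> growth = new LinkedHashMap<>();
        for (String line : summaryDiff.split("\\R")) {
            Matcher m = CATEGORY.matcher(line.trim());
            if (m.find() && m.group(2) != null) {
                long delta = Long.parseLong(m.group(2));
                if (delta > 0) {
                    growth.put(m.group(1), delta);
                }
            }
        }
        return growth;
    }

    public static void main(String[] args) {
        String sample = String.join("\n",
                "- Java Heap (reserved=516096KB, committed=204800KB)",
                "- Class (reserved=6578KB +3KB, committed=4530KB +3KB)",
                "- Thread (reserved=60KB -1129KB, committed=60KB -1129KB)",
                "- Code (reserved=102328KB +133KB, committed=6640KB +133KB)");
        System.out.println(growingCategories(sample));
    }
}
```

A category that keeps appearing in this map across successive diffs is a candidate for closer inspection with detail.diff.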

How to Monitor VM Internal Memory

Native Memory Tracking can be set up to monitor memory and ensure that an application does not start to use increasing amounts of memory during development or maintenance.

See Table 2-1 for details about NMT memory categories.

The following sections describe how to get summary or detail data for NMT and how to interpret the sample output.

- Interpret sample output: From the following sample output, you will see reserved and committed memory. Note that only committed memory is actually used. For example, if you run with -Xms100m -Xmx1000m, then the JVM will reserve 1000 MB for the Java heap. Because the initial heap size is only 100 MB, only 100 MB will be committed to begin with. On a 64-bit machine, where address space is almost unlimited, there is no problem if a JVM reserves a lot of memory. The problem arises if more and more memory gets committed, which may lead to swapping or native out of memory (OOM) situations.

An arena is a chunk of memory allocated using malloc. Memory is freed from these chunks in bulk, when exiting a scope or leaving an area of code. These chunks can be reused in other subsystems to hold temporary memory, for example, per-thread allocations. An arena malloc policy ensures no memory leakage, so an arena is tracked as a whole and not as individual objects. Some initial memory cannot be tracked.

Enabling NMT will result in a 5-10 percent JVM performance drop, and memory usage for NMT adds 2 machine words to all malloc memory as a malloc header. NMT memory usage is also tracked by NMT.

Total: reserved=664192KB, committed=253120KB      <--- total memory tracked by Native Memory Tracking

- Java Heap (reserved=516096KB, committed=204800KB)      <--- Java Heap
    (mmap: reserved=516096KB, committed=204800KB)

- Class (reserved=6568KB, committed=4140KB)      <--- class metadata
    (classes #665)      <--- number of loaded classes
    (malloc=424KB, #1000)      <--- malloc'd memory, #number of malloc
    (mmap: reserved=6144KB, committed=3716KB)

- Thread (reserved=6868KB, committed=6868KB)
    (thread #15)      <--- number of threads
    (stack: reserved=6780KB, committed=6780KB)      <--- memory used by thread stacks
    (malloc=27KB, #66)
    (arena=61KB, #30)      <--- resource and handle areas

- Code (reserved=102414KB, committed=6314KB)
    (malloc=2574KB, #74316)
    (mmap: reserved=99840KB, committed=3740KB)

- GC (reserved=26154KB, committed=24938KB)
    (malloc=486KB, #110)
    (mmap: reserved=25668KB, committed=24452KB)

- Compiler (reserved=106KB, committed=106KB)
    (malloc=7KB, #90)
    (arena=99KB, #3)

- Internal (reserved=586KB, committed=554KB)
    (malloc=554KB, #1677)
    (mmap: reserved=32KB, committed=0KB)

- Symbol (reserved=906KB, committed=906KB)
    (malloc=514KB, #2736)
    (arena=392KB, #1)

- Memory Tracking (reserved=3184KB, committed=3184KB)
    (malloc=3184KB, #300)

- Pooled Free Chunks (reserved=1276KB, committed=1276KB)
    (malloc=1276KB)

- Unknown (reserved=33KB, committed=33KB)
    (arena=33KB, #1)

- Get detail data: To get a more detailed view of native memory usage, start the JVM with command line option: -XX:NativeMemoryTracking=detail. This will track exactly what methods allocate the most memory. Enabling NMT will result in a 5-10 percent JVM performance drop, and memory usage for NMT adds 2 words to all malloc memory as a malloc header. NMT memory usage is also tracked by NMT.

The following example shows a sample output for virtual memory for track level set to detail. One way to get this sample output is to run:

jcmd <pid> VM.native_memory detail

Virtual memory map:

[0x8f1c1000 - 0x8f467000] reserved 2712KB for Thread Stack
        from [Thread::record_stack_base_and_size()+0xca]
    [0x8f1c1000 - 0x8f467000] committed 2712KB from [Thread::record_stack_base_and_size()+0xca]

[0x8f585000 - 0x8f729000] reserved 1680KB for Thread Stack
        from [Thread::record_stack_base_and_size()+0xca]
    [0x8f585000 - 0x8f729000] committed 1680KB from [Thread::record_stack_base_and_size()+0xca]

[0x8f930000 - 0x90100000] reserved 8000KB for GC
        from [ReservedSpace::initialize(unsigned int, unsigned int, bool, char*, unsigned int, bool)+0x555]
    [0x8f930000 - 0x90100000] committed 8000KB from [PSVirtualSpace::expand_by(unsigned int)+0x95]

[0x902dd000 - 0x9127d000] reserved 16000KB for GC
        from [ReservedSpace::initialize(unsigned int, unsigned int, bool, char*, unsigned int, bool)+0x555]
    [0x902dd000 - 0x9127d000] committed 16000KB from [os::pd_commit_memory(char*, unsigned int, unsigned int, bool)+0x36]

[0x9127d000 - 0x91400000] reserved 1548KB for Thread Stack
        from [Thread::record_stack_base_and_size()+0xca]
    [0x9127d000 - 0x91400000] committed 1548KB from [Thread::record_stack_base_and_size()+0xca]

[0x91400000 - 0xb0c00000] reserved 516096KB for Java Heap      <--- reserved memory range
        from [ReservedSpace::initialize(unsigned int, unsigned int, bool, char*, unsigned int, bool)+0x190]      <--- callsite that reserves the memory
    [0x91400000 - 0x93400000] committed 32768KB from [VirtualSpace::initialize(ReservedSpace, unsigned int)+0x3e8]      <--- committed memory range and its callsite
    [0xa6400000 - 0xb0c00000] committed 172032KB from [PSVirtualSpace::expand_by(unsigned int)+0x95]      <--- committed memory range and its callsite

[0xb0c61000 - 0xb0ce2000] reserved 516KB for Thread Stack
        from [Thread::record_stack_base_and_size()+0xca]
    [0xb0c61000 - 0xb0ce2000] committed 516KB from [Thread::record_stack_base_and_size()+0xca]

[0xb0ce2000 - 0xb0e83000] reserved 1668KB for GC
        from [ReservedSpace::initialize(unsigned int, unsigned int, bool, char*, unsigned int, bool)+0x555]
    [0xb0ce2000 - 0xb0cf0000] committed 56KB from [PSVirtualSpace::expand_by(unsigned int)+0x95]
    [0xb0d88000 - 0xb0d96000] committed 56KB from [CardTableModRefBS::resize_covered_region(MemRegion)+0xebf]
    [0xb0e2e000 - 0xb0e83000] committed 340KB from [CardTableModRefBS::resize_covered_region(MemRegion)+0xebf]

[0xb0e83000 - 0xb7003000] reserved 99840KB for Code
        from [ReservedSpace::initialize(unsigned int, unsigned int, bool, char*, unsigned int, bool)+0x555]
    [0xb0e83000 - 0xb0e92000] committed 60KB from [VirtualSpace::initialize(ReservedSpace, unsigned int)+0x3e8]
    [0xb1003000 - 0xb139b000] committed 3680KB from [VirtualSpace::initialize(ReservedSpace, unsigned int)+0x37a]

[0xb7003000 - 0xb7603000] reserved 6144KB for Class
        from [ReservedSpace::initialize(unsigned int, unsigned int, bool, char*, unsigned int, bool)+0x555]
    [0xb7003000 - 0xb73a4000] committed 3716KB from [VirtualSpace::initialize(ReservedSpace, unsigned int)+0x37a]

[0xb7603000 - 0xb760b000] reserved 32KB for Internal
        from [PerfMemory::create_memory_region(unsigned int)+0x8ba]

[0xb770b000 - 0xb775c000] reserved 324KB for Thread Stack
        from [Thread::record_stack_base_and_size()+0xca]
    [0xb770b000 - 0xb775c000] committed 324KB from [Thread::record_stack_base_and_size()+0xca]

- Get diff from NMT baseline: For both summary and detail level tracking, you can set a baseline after the application is up and running. Do this by running jcmd <pid> VM.native_memory baseline after the application warms up. Then, you can run jcmd <pid> VM.native_memory summary.diff or jcmd <pid> VM.native_memory detail.diff.

The following example shows sample output for the summary difference in native memory usage since the baseline was set and is a great way to find memory leaks.

Total: reserved=664624KB -20610KB, committed=254344KB -20610KB      <--- total memory changes vs. earlier baseline. '+'=increase '-'=decrease

- Java Heap (reserved=516096KB, committed=204800KB)
    (mmap: reserved=516096KB, committed=204800KB)

- Class (reserved=6578KB +3KB, committed=4530KB +3KB)
    (classes #668 +3)      <--- 3 more classes loaded
    (malloc=434KB +3KB, #930 -7)      <--- malloc'd memory increased by 3KB, but number of malloc count decreased by 7
    (mmap: reserved=6144KB, committed=4096KB)

- Thread (reserved=60KB -1129KB, committed=60KB -1129KB)
    (thread #16 +1)      <--- one more thread
    (stack: reserved=7104KB +324KB, committed=7104KB +324KB)
    (malloc=29KB +2KB, #70 +4)
    (arena=31KB -1131KB, #32 +2)      <--- 2 more arenas (one more resource area and one more handle area)

- Code (reserved=102328KB +133KB, committed=6640KB +133KB)
    (malloc=2488KB +133KB, #72694 +4287)
    (mmap: reserved=99840KB, committed=4152KB)

- GC (reserved=26154KB, committed=24938KB)
    (malloc=486KB, #110)
    (mmap: reserved=25668KB, committed=24452KB)

- Compiler (reserved=106KB, committed=106KB)
    (malloc=7KB, #93)
    (arena=99KB, #3)

- Internal (reserved=590KB +35KB, committed=558KB +35KB)
    (malloc=558KB +35KB, #1699 +20)
    (mmap: reserved=32KB, committed=0KB)

- Symbol (reserved=911KB +5KB, committed=911KB +5KB)
    (malloc=519KB +5KB, #2921 +180)
    (arena=392KB, #1)

- Memory Tracking (reserved=2073KB -887KB, committed=2073KB -887KB)
    (malloc=2073KB -887KB, #84 -210)

- Pooled Free Chunks (reserved=2624KB -15876KB, committed=2624KB -15876KB)
    (malloc=2624KB -15876KB)

The following example is a sample output that shows the detail difference in native memory usage since the baseline and is a great way to find memory leaks.

Details:

[0x01195652] ChunkPool::allocate(unsigned int)+0xe2
    (malloc=482KB -481KB, #8 -8)

[0x01195652] ChunkPool::allocate(unsigned int)+0xe2
    (malloc=2786KB -19742KB, #134 -618)

[0x013bd432] CodeBlob::set_oop_maps(OopMapSet*)+0xa2
    (malloc=591KB +6KB, #681 +37)

[0x013c12b1] CodeBuffer::block_comment(int, char const*)+0x21      <--- [callsite address] method name + offset
    (malloc=562KB +33KB, #35940 +2125)      <--- malloc'd amount, increased by 33KB #malloc count, increased by 2125

[0x0145f172] ConstantPool::ConstantPool(Array*)+0x62
    (malloc=69KB +2KB, #610 +15)

...

[0x01aa3ee2] Thread::allocate(unsigned int, bool, unsigned short)+0x122
    (malloc=21KB +2KB, #13 +1)

[0x01aa73ca] Thread::record_stack_base_and_size()+0xca
    (mmap: reserved=7104KB +324KB, committed=7104KB +324KB)
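The gap between reserved and committed memory discussed in this section can also be observed for the Java heap from inside a running application. The following is a small sketch that uses the standard java.lang.management API. The class name HeapCommitReport is hypothetical. Treating the reported maximum as a rough counterpart of NMT's reserved figure is an approximation made for illustration, not an exact equivalence.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;

/** Hypothetical example class: prints heap max, committed, and used sizes. */
final class HeapCommitReport {

    /** Formats a byte count in KB, or "undefined" when the JVM reports -1. */
    static String kb(long bytes) {
        return bytes < 0 ? "undefined" : (bytes / 1024) + "KB";
    }

    public static void main(String[] args) {
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        // max is the upper bound set for the heap (for example by -Xmx);
        // committed is the amount currently guaranteed to be available to the JVM.
        System.out.println("Java Heap (max=" + kb(heap.getMax())
                + ", committed=" + kb(heap.getCommitted())
                + ", used=" + kb(heap.getUsed()) + ")");
    }
}
```

Running this with -Xms100m -Xmx1000m should show a committed value far below the maximum shortly after startup, which mirrors the explanation given for the NMT summary.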

JConsole

Another useful tool included in the JDK download is the JConsole monitoring tool. This tool is compliant with JMX. The tool uses the built-in JMX instrumentation in the JVM to provide information about the performance and resource consumption of running applications.

Although the tool is included in the JDK download, it can also be used to monitor and manage applications deployed with the JRE.

The JConsole tool can attach to any Java application in order to display useful information such as thread usage, memory consumption, and details about class loading, runtime compilation, and the operating system.

This output helps with the high-level diagnosis of problems such as memory leaks, excessive class loading, and running threads. It can also be useful for tuning and heap sizing.

In addition to monitoring, JConsole can be used to dynamically change several parameters in the running system. For example, the setting of the -verbose:gc option can be changed so that the garbage collection trace output can be dynamically enabled or disabled for a running application.

The following sections describe troubleshooting techniques with the JConsole tool.

-

Troubleshoot with the JConsole Tool

-

Monitor Local and Remote Applications with JConsole

Troubleshoot with the JConsole Tool

Use the JConsole tool to monitor data.

The following list provides an idea of the data that can be monitored using the JConsole tool. Each heading corresponds to a tab pane in the tool.

-

Overview

This pane displays graphs that show the heap memory usage, number of threads, number of classes, and CPU usage over time. This overview allows you to visualize the activity of several resources at once.

-

Memory

-

For a selected memory area (heap, non-heap, various memory pools):

-

Graph showing memory usage over time

-

Current memory size

-

Amount of committed memory

-

Maximum memory size

-

-

Garbage collector information, including the number of collections performed, and the total time spent performing garbage collection

-

Graph showing the percentage of heap and non-heap memory currently used

In addition, on this pane you can request garbage collection to be performed.

-

-

Threads

-

Graph showing thread usage over time.

-

Live threads: Current number of live threads.

-

Peak: Highest number of live threads since the JVM started.

-

For a selected thread, the name, state, and stack trace. For a blocked thread, the pane also shows the synchronizer that the thread is waiting to acquire and the thread that owns the lock.

-

The Deadlock Detection button sends a request to the target application to perform deadlock detection and displays each deadlock cycle in a separate tab.

-

-

Classes

-

Graph showing the number of loaded classes over time

-

Number of classes currently loaded into memory

-

Total number of classes loaded into memory since the JVM started, including those subsequently unloaded

-

Total number of classes unloaded from memory since the JVM started

-

-

VM Summary

-

General information, such as the JConsole connection data, uptime for the JVM, CPU time consumed by the JVM, compiler name, total compile time, and so on.

-

Thread and class summary information

-

Memory and garbage collection information, including number of objects pending finalization, and so on

-

Information about the operating system, including physical characteristics, the amount of virtual memory for the running process, and swap space

-

Information about the JVM itself, such as the arguments and class path

-

-

MBeans

This pane displays a tree structure that shows all platform and application MBeans that are registered in the connected JMX agent. When you select an MBean in the tree, its attributes, operations, notifications, and other information are displayed.

-

You can invoke operations, if any. For example, the operation dumpHeap for the HotSpotDiagnostic MBean, which is in the com.sun.management domain, performs a heap dump. The input parameter for this operation is the path name of the heap dump file on the machine where the target VM is running.

-

You can set the value of writable attributes. For example, you can set, unset, or change the value of certain VM flags by invoking the setVMOption operation of the HotSpotDiagnostic MBean. The flags are indicated by the list of values of the DiagnosticOptions attribute.

-

You can subscribe to notifications, if any, by using the Subscribe and Unsubscribe buttons.
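The HotSpotDiagnostic MBean operations that JConsole exposes on the MBeans pane can also be called directly from Java code. The following is a minimal sketch that reads the DiagnosticOptions attribute and changes one flag in the current JVM. The class name DiagnosticOptionsDemo is hypothetical, and the choice of HeapDumpOnOutOfMemoryError as the flag to change is an assumption made for illustration. It relies on the com.sun.management API, which is specific to HotSpot-based JDKs.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import com.sun.management.VMOption;
import java.lang.management.ManagementFactory;

/** Hypothetical example class: list and change manageable VM flags. */
final class DiagnosticOptionsDemo {

    /** Sets a manageable flag on the current JVM and returns the value read back. */
    static String setAndRead(String flag, String value) {
        HotSpotDiagnosticMXBean bean =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        bean.setVMOption(flag, value);
        return bean.getVMOption(flag).getValue();
    }

    public static void main(String[] args) {
        HotSpotDiagnosticMXBean bean =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        // The DiagnosticOptions attribute lists the flags that can be changed at run time.
        for (VMOption option : bean.getDiagnosticOptions()) {
            System.out.println(option.getName() + " = " + option.getValue());
        }
        System.out.println(setAndRead("HeapDumpOnOutOfMemoryError", "true"));
    }
}
```

The same bean also offers dumpHeap(String outputFile, boolean live), which corresponds to the dumpHeap operation described above. It is not called here so that the sketch does not write a large file as a side effect.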

-

Monitor Local and Remote Applications with JConsole

JConsole can monitor both local applications and remote applications. If you start the tool with an argument specifying a JMX agent to connect to, then the tool will automatically start monitoring the specified application.

To monitor a local application, execute the command jconsole pid, where pid is the process ID of the application.

To monitor a remote application, execute the command jconsole hostname:portnumber, where hostname is the name of the host running the application, and portnumber is the port number you specified when you enabled the JMX agent.

If you execute the jconsole command without arguments, the tool will start by displaying the New Connection window, where you specify the local or remote process to be monitored. You can connect to a different host at any time by using the Connection menu.

With the latest JDK releases, no option is necessary when you start the application to be monitored.

As an example of the output of the monitoring tool, Figure 2-15 shows a chart of the heap memory usage.

Figure 2-15 Sample Output from JConsole


The jdb Utility

The jdb utility is included in the JDK as an example command-line debugger. The jdb utility uses the Java Debug Interface (JDI) to launch or connect to the target JVM.

The JDI is a high-level Java API that provides information useful for debuggers and similar systems that need access to the running state of a (usually remote) virtual machine. JDI is a component of the Java Platform Debugger Architecture (JPDA). See Java Platform Debugger Architecture.

The following section provides troubleshooting techniques for the jdb utility.

-

Troubleshoot with the jdb Utility

Troubleshoot with the jdb Utility

The jdb utility is used to monitor the debugger connectors used for remote debugging.

In JDI, a connector is the way that the debugger connects to the target JVM. The JDK traditionally ships with connectors that launch and establish a debugging session with a target JVM, as well as connectors that are used for remote debugging (using TCP/IP or shared memory transports).

These connectors are generally used with enterprise debuggers, such as the NetBeans integrated development environment (IDE) or commercial IDEs.

The command jdb -listconnectors prints a list of the available connectors. The command jdb -help prints the command usage help.
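The list of connectors is also available programmatically through JDI. The following is a minimal sketch, assuming a full JDK in which the jdk.jdi module is available. The class name ListConnectors is hypothetical, and the output format is chosen for illustration and does not match what jdb prints.

```java
import com.sun.jdi.Bootstrap;
import com.sun.jdi.connect.Connector;
import com.sun.jdi.connect.Transport;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical example class: enumerate the JDI connectors known to this JDK. */
final class ListConnectors {

    /** Returns "name (transport)" for every available connector. */
    static List<String> connectorNames() {
        List<String> names = new ArrayList<>();
        for (Connector c : Bootstrap.virtualMachineManager().allConnectors()) {
            Transport t = c.transport();
            names.add(c.name() + " (" + (t == null ? "n/a" : t.name()) + ")");
        }
        return names;
    }

    public static void main(String[] args) {
        connectorNames().forEach(System.out::println);
    }
}
```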

See jdb Utility in the Java Platform, Standard Edition Tools Reference

The jinfo Utility

The jinfo command-line utility gets configuration information from a running Java process or crash dump, and prints the system properties or the command-line flags that were used to start the JVM.

Java Mission Control, Java Flight Recorder, and the jcmd utility can be used for diagnosing problems with JVM and Java applications. Use the latest utility, jcmd, instead of the previous jinfo utility for enhanced diagnostics and reduced performance overhead.

With the -flag option, the jinfo utility can dynamically set, unset, or change the value of certain JVM flags for the specified Java process. See Java HotSpot VM Command-Line Options.

The output for the jinfo utility for a Java process with PID number 29620 is shown in the following example.

$ jinfo 29620

Attaching to process ID 29620, please wait...

Debugger attached successfully.

Client compiler detected.

JVM version is 1.6.0-rc-b100

Java System Properties:

java.runtime.name = Java(TM) SE Runtime Environment

sun.boot.library.path = /usr/jdk/instances/jdk1.6.0/jre/lib/sparc

java.vm.version = 1.6.0-rc-b100

java.vm.vendor = Sun Microsystems Inc.

java.vendor.url = http://java.sun.com/

path.separator = :

java.vm.name = Java HotSpot(TM) Client VM

file.encoding.pkg = sun.io

sun.java.launcher = SUN_STANDARD

sun.os.patch.level = unknown

java.vm.specification.name = Java Virtual Machine Specification

user.dir = /home/js159705

java.runtime.version = 1.6.0-rc-b100

java.awt.graphicsenv = sun.awt.X11GraphicsEnvironment

java.endorsed.dirs = /usr/jdk/instances/jdk1.6.0/jre/lib/endorsed

os.arch = sparc

java.io.tmpdir = /var/tmp/

line.separator =

java.vm.specification.vendor = Sun Microsystems Inc.

os.name = SunOS

sun.jnu.encoding = ISO646-US

java.library.path = /usr/jdk/instances/jdk1.6.0/jre/lib/sparc/client:/usr/jdk/instances/jdk1.6.0/jre/lib/sparc:

/usr/jdk/instances/jdk1.6.0/jre/../lib/sparc:/net/gtee.sfbay/usr/sge/sge6/lib/sol-sparc64:

/usr/jdk/packages/lib/sparc:/lib:/usr/lib

java.specification.name = Java Platform API Specification

java.class.version = 50.0

sun.management.compiler = HotSpot Client Compiler

os.version = 5.10

user.home = /home/js159705

user.timezone = US/Pacific

java.awt.printerjob = sun.print.PSPrinterJob

file.encoding = ISO646-US

java.specification.version = 1.6

java.class.path = /usr/jdk/jdk1.6.0/demo/jfc/Java2D/Java2Demo.jar

user.name = js159705

java.vm.specification.version = 1.0

java.home = /usr/jdk/instances/jdk1.6.0/jre

sun.arch.data.model = 32

user.language = en

java.specification.vendor = Sun Microsystems Inc.

java.vm.info = mixed mode, sharing

java.version = 1.6.0-rc

java.ext.dirs = /usr/jdk/instances/jdk1.6.0/jre/lib/ext:/usr/jdk/packages/lib/ext

sun.boot.class.path = /usr/jdk/instances/jdk1.6.0/jre/lib/resources.jar:

/usr/jdk/instances/jdk1.6.0/jre/lib/rt.jar:/usr/jdk/instances/jdk1.6.0/jre/lib/sunrsasign.jar:

/usr/jdk/instances/jdk1.6.0/jre/lib/jsse.jar:

/usr/jdk/instances/jdk1.6.0/jre/lib/jce.jar:/usr/jdk/instances/jdk1.6.0/jre/lib/charsets.jar:

/usr/jdk/instances/jdk1.6.0/jre/classes

java.vendor = Sun Microsystems Inc.

file.separator = /

java.vendor.url.bug = http://java.sun.com/cgi-bin/bugreport.cgi

sun.io.unicode.encoding = UnicodeBig

sun.cpu.endian = big

sun.cpu.isalist =

VM Flags:

The following topic describes the troubleshooting technique with the jinfo utility.

-

Troubleshooting with the jinfo Utility

Troubleshooting with the jinfo Utility

The output from jinfo provides the settings for java.class.path and sun.boot.class.path.

If you start the target JVM with the -classpath and -Xbootclasspath arguments, then the output from jinfo provides the settings for java.class.path and sun.boot.class.path. This information might be needed when investigating class loader issues.
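When the application can be modified, the same settings can be logged from inside the process, which is sometimes easier than attaching jinfo afterwards. The following is a minimal sketch using only standard APIs. The class name LaunchSettings is hypothetical. It reads java.class.path and the JVM input arguments. It does not read sun.boot.class.path, because that property is not guaranteed to exist on every release.

```java
import java.lang.management.ManagementFactory;
import java.util.List;

/** Hypothetical example class: report how the current JVM was launched. */
final class LaunchSettings {

    /** Returns the class path the current JVM was started with. */
    static String classPath() {
        return System.getProperty("java.class.path");
    }

    /** Returns the JVM arguments (such as -Xmx or -XX flags), excluding main-method arguments. */
    static List<String> vmArguments() {
        return ManagementFactory.getRuntimeMXBean().getInputArguments();
    }

    public static void main(String[] args) {
        System.out.println("java.class.path = " + classPath());
        System.out.println("VM arguments    = " + vmArguments());
    }
}
```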

In addition to getting information from a process, the jhsdb jinfo tool can use a core file as input. On the Oracle Solaris operating system, for example, the gcore utility can be used to get a core file of the process in the preceding example. The core file will be named core.29620 and will be generated in the working directory of the process. The path to the Java executable file and the core file must be specified as arguments to the jhsdb jinfo utility, as shown in the following example.

$ jhsdb jinfo --exe java-home/bin/java --core core.29620

Sometimes, the binary name will not be java. This happens when the VM is created using the JNI invocation API. The jhsdb jinfo tool requires the binary from which the core file was generated.

The jmap Utility

The jmap command-line utility prints memory-related statistics for a running VM or core file. For a core file, use jhsdb jmap.

Java Mission Control, Java Flight Recorder, and the jcmd utility can be used for diagnosing problems with JVM and Java applications. It is suggested to use the latest utility, jcmd, instead of the previous jmap utility for enhanced diagnostics and reduced performance overhead.

If jmap is used with a process or core file without any command-line options, then it prints the list of shared objects loaded (the output is similar to the pmap utility on Oracle Solaris operating system). For more specific information, you can use the options -heap, -histo, or -permstat. These options are described in the subsections that follow.

In addition, the JDK 7 release introduced the -dump:format=b,file=filename option, which causes jmap to dump the Java heap in binary format to a specified file.

If the jmap pid command does not respond because of a hung process, then use the jhsdb jmap utility to run the Serviceability Agent.

The following sections describe troubleshooting techniques with examples that print memory-related statistics for a running VM or a core file.

-

Heap Configuration and Usage

-

Heap Histogram

Heap Configuration and Usage

Use the jhsdb jmap --heap command to get the Java heap information.

The --heap option is used to get the following Java heap information:

-

Information specific to the garbage collection (GC) algorithm, including the name of the GC algorithm (for example, parallel GC) and algorithm-specific details (such as the number of threads for parallel GC).

-

Heap configuration that might have been specified as command-line options or selected by the VM based on the machine configuration.

-

Heap usage summary: For each generation (area of the heap), the tool prints the total heap capacity, in-use memory, and available free memory. If a generation is organized as a collection of spaces (for example, the new generation), then a space-specific memory size summary is included.

The following example shows output from the jhsdb jmap --heap command.

$ jhsdb jmap --heap --pid 29620

Attaching to process ID 29620, please wait...

Debugger attached successfully.

Client compiler detected.

JVM version is 12-ea+30

using thread-local object allocation.

Mark Sweep Compact GC

Heap Configuration:

MinHeapFreeRatio = 40

MaxHeapFreeRatio = 70

MaxHeapSize = 67108864 (64.0MB)

NewSize = 2228224 (2.125MB)

MaxNewSize = 4294901760 (4095.9375MB)

OldSize = 4194304 (4.0MB)

NewRatio = 8

SurvivorRatio = 8

PermSize = 12582912 (12.0MB)

MaxPermSize = 67108864 (64.0MB)

Heap Usage:

New Generation (Eden + 1 Survivor Space):

capacity = 2031616 (1.9375MB)

used = 70984 (0.06769561767578125MB)

free = 1960632 (1.8698043823242188MB)

3.4939673639112905% used

Eden Space:

capacity = 1835008 (1.75MB)

used = 36152 (0.03447723388671875MB)

free = 1798856 (1.7155227661132812MB)

1.9701276506696428% used

From Space:

capacity = 196608 (0.1875MB)

used = 34832 (0.0332183837890625MB)

free = 161776 (0.1542816162109375MB)

17.716471354166668% used

To Space:

capacity = 196608 (0.1875MB)

used = 0 (0.0MB)

free = 196608 (0.1875MB)

0.0% used

tenured generation:

capacity = 15966208 (15.2265625MB)

used = 9577760 (9.134063720703125MB)

free = 6388448 (6.092498779296875MB)

59.98769400974859% used

Perm Generation:

capacity = 12582912 (12.0MB)

used = 1469408 (1.401336669921875MB)

free = 11113504 (10.598663330078125MB)

11.677805582682291% used

Heap Histogram

The jmap command with the -histo option or the jhsdb jmap --histo command can be used to get a class-specific histogram of the heap.

The jmap -histo command can print the heap histogram for a running process. Use jhsdb jmap --histo to print the heap histogram for a core file.

When the jmap -histo command is executed on a running process, the tool prints the number of objects, memory size in bytes, and fully qualified class name for each class. Internal classes in the Java HotSpot VM are enclosed within angle brackets. The histogram is useful to understand how the heap is used. To get the size of an object, you must divide the total size by the count of that object type.

The following example shows output from the jmap -histo command when it is executed on a process with PID number 29620.

$ jmap -histo 29620

num #instances #bytes class name

--------------------------------------

1: 1414 6013016 [I

2: 793 482888 [B

3: 2502 334928

4: 280 274976

5: 324 227152 [D

6: 2502 200896

7: 2094 187496 [C

8: 280 172248

9: 3767 139000 [Ljava.lang.Object;

10: 260 122416

11: 3304 112864

12: 160 72960 java2d.Tools$3

13: 192 61440

14: 219 55640 [F

15: 2114 50736 java.lang.String

16: 2079 49896 java.util.HashMap$Entry

17: 528 48344 [S

18: 1940 46560 java.util.Hashtable$Entry

19: 481 46176 java.lang.Class

20: 92 43424 javax.swing.plaf.metal.MetalScrollButton

... more lines removed here to reduce output...

1118: 1 8 java.util.Hashtable$EmptyIterator

1119: 1 8 sun.java2d.pipe.SolidTextRenderer

Total 61297 10152040
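The division mentioned earlier, total bytes divided by instance count, can be sketched as follows. The class name HistogramLine is hypothetical, and the parser assumes rows in the "num: #instances #bytes class name" layout shown in the sample output above. The row used in main is the java.lang.String entry from that sample.

```java
/** Hypothetical helper: average instance size from one jmap -histo row. */
final class HistogramLine {

    /**
     * Parses one data row of jmap -histo output ("num: #instances #bytes class name")
     * and returns the average number of bytes per instance (integer division).
     */
    static long averageBytes(String row) {
        String[] fields = row.trim().split("\\s+");
        long instances = Long.parseLong(fields[1]);
        long bytes = Long.parseLong(fields[2]);
        return bytes / instances;
    }

    public static void main(String[] args) {
        // 50736 bytes across 2114 instances is 24 bytes per java.lang.String object.
        System.out.println(averageBytes("15: 2114 50736 java.lang.String")); // prints 24
    }
}
```

For array types such as [I the result is only an average, because individual arrays can differ widely in length.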

When the jhsdb jmap --histo command is executed on a core file, the tool prints the serial number, number of instances, bytes, and class name for each class. Internal classes in the Java HotSpot VM are prefixed with an asterisk (*).

The following example shows output of the jhsdb jmap --histo command when it is executed on a core file.

$ jhsdb jmap --exe /usr/java/jdk_12/bin/java --core core.16395 --histo

Attaching to core core.16395 from executable /usr/java/jdk_12/bin/java please wait...

Debugger attached successfully.

Server compiler detected.

JVM version is 12-ea+30

Iterating over heap. This may take a while...

Object Histogram:

num #instances #bytes Class description

--------------------------------------------------------------------------

1: 11102 564520 byte[]

2: 10065 241560 java.lang.String

3: 1421 163392 java.lang.Class

4: 26403 2997816 * ConstMethodKlass

5: 26403 2118728 * MethodKlass

6: 39750 1613184 * SymbolKlass

7: 2011 1268896 * ConstantPoolKlass

8: 2011 1097040 * InstanceKlassKlass

9: 1906 882048 * ConstantPoolCacheKlass

10: 1614 125752 java.lang.Object[]

11: 1160 64960 jdk.internal.org.objectweb.asm.Item

12: 1834 58688 java.util.HashMap$Node

13: 359 40880 java.util.HashMap$Node[]

14: 1189 38048 java.util.concurrent.ConcurrentHashMap$Node

15: 46 37280 jdk.internal.org.objectweb.asm.Item[]

16: 29 35600 char[]

17: 968 32320 int[]

18: 650 26000 java.lang.invoke.MethodType

19: 475 22800 java.lang.invoke.MemberName

The jps Utility

The jps utility lists every instrumented Java HotSpot VM for the current user on the target system.

The utility is very useful in environments where the VM is embedded, that is, where it is started using the JNI Invocation API rather than the java launcher. In these environments, it is not always easy to recognize the Java processes in the process list.

The following example shows the use of the jps utility.

$ jps

16217 MyApplication

16342 jps

The jps utility lists the virtual machines for which the user has access rights. This is determined by access-control mechanisms specific to the operating system. On the Oracle Solaris operating system, for example, if a non-root user executes the jps utility, then the output is a list of the virtual machines that were started with that user's UID.

In addition to listing the PID, the utility provides options to output the arguments passed to the application's main method, the complete list of VM arguments, and the full package name of the application's main class. The jps utility can also list processes on a remote system if the remote system is running the jstatd daemon.

The jstack Utility

Use the jcmd or jhsdb jstack utility, instead of the jstack utility, to diagnose problems with JVM and Java applications.

Java Mission Control, Java Flight Recorder, and the jcmd utility can be used to diagnose problems with JVM and Java applications. It is suggested to use the latest utility, jcmd, instead of the previous jstack utility for enhanced diagnostics and reduced performance overhead.

The following sections describe troubleshooting techniques with the jstack and jhsdb jstack utilities.

-

Troubleshoot with the jstack Utility

-

Stack Trace from a Core Dump

-

Mixed Stack

Troubleshoot with the jstack Utility

The jstack command-line utility attaches to the specified process, and prints the stack traces of all threads that are attached to the virtual machine, including Java threads and VM internal threads, and optionally native stack frames. The utility also performs deadlock detection. For core files, use jhsdb jstack.

A stack trace of all threads can be useful in diagnosing a number of issues, such as deadlocks or hangs.

The -l option instructs the utility to look for ownable synchronizers in the heap and print information about java.util.concurrent.locks. Without this option, the thread dump includes information only on monitors.

The output from the jstack pid command is the same as that obtained by pressing Ctrl+\ at the application console (standard input) or by sending the process a quit signal. See Control+Break Handler for an example of the output.

Thread dumps can also be obtained programmatically using the Thread.getAllStackTraces method, or in the debugger using the debugger option to print all thread stacks (the where command in the case of the jdb sample debugger).
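As an illustration of the programmatic route, the following minimal sketch builds a thread dump with Thread.getAllStackTraces. The class name ThreadDump is hypothetical, and the text layout is chosen for readability and is not identical to jstack output. Unlike jstack, this approach reports only Java threads and carries no lock or deadlock information.

```java
import java.util.Map;

/** Hypothetical example class: render the stack traces of all live Java threads. */
final class ThreadDump {

    /** Returns a text dump with one block per thread: name, state, then frames. */
    static String dump() {
        StringBuilder sb = new StringBuilder();
        for (Map.Entry<Thread, StackTraceElement[]> e : Thread.getAllStackTraces().entrySet()) {
            Thread t = e.getKey();
            sb.append('"').append(t.getName()).append("\" state=")
              .append(t.getState()).append('\n');
            for (StackTraceElement frame : e.getValue()) {
                sb.append("    at ").append(frame).append('\n');
            }
            sb.append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.print(dump());
    }
}
```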

Stack Trace from a Core Dump

Use the jhsdb jstack command to obtain stack traces from a core dump.

To get stack traces from a core dump, execute the jhsdb jstack command on a core file, as shown in the following example.

$ jhsdb jstack --exe java-home/bin/java --core core-file

Mixed Stack

The jhsdb jstack utility can also be used to print a mixed stack; that is, it can print native stack frames in addition to the Java stack. Native frames are the C/C++ frames associated with VM code and JNI/native code.

To print a mixed stack, use the --mixed option, as shown in the following example.

$ jhsdb jstack --mixed --pid 21177

Attaching to process ID 21177, please wait...

Debugger attached successfully.

Client compiler detected.

JVM version is 12-30+ea

Deadlock Detection:

Found one Java-level deadlock:

=============================

"Thread1":

waiting to lock Monitor@0x0005c750 (Object@0xd4405938, a java/lang/String),

which is held by "Thread2"

"Thread2":

waiting to lock Monitor@0x0005c6e8 (Object@0xd4405900, a java/lang/String),

which is held by "Thread1"

Found a total of 1 deadlock.

----------------- t@1 -----------------

0xff2c0fbc __lwp_wait + 0x4

0xff2bc9bc _thrp_join + 0x34

0xff2bcb28 thr_join + 0x10

0x00018a04 ContinueInNewThread + 0x30

0x00012480 main + 0xeb0

0x000111a0 _start + 0x108

----------------- t@2 -----------------

0xff2c1070 ___lwp_cond_wait + 0x4

0xfec03638 bool Monitor::wait(bool,long) + 0x420

0xfec9e2c8 bool Threads::destroy_vm() + 0xa4

0xfe93ad5c jni_DestroyJavaVM + 0x1bc

0x00013ac0 JavaMain + 0x1600

0xff2bfd9c _lwp_start

----------------- t@3 -----------------

0xff2c1070 ___lwp_cond_wait + 0x4

0xff2ac104 _lwp_cond_timedwait + 0x1c

0xfec034f4 bool Monitor::wait(bool,long) + 0x2dc

0xfece60bc void VMThread::loop() + 0x1b8

0xfe8b66a4 void VMThread::run() + 0x98

0xfec139f4 java_start + 0x118

0xff2bfd9c _lwp_start

----------------- t@4 -----------------

0xff2c1070 ___lwp_cond_wait + 0x4

0xfec195e8 void os::PlatformEvent::park() + 0xf0

0xfec88464 void ObjectMonitor::wait(long long,bool,Thread*) + 0x548

0xfe8cb974 void ObjectSynchronizer::wait(Handle,long long,Thread*) + 0x148

0xfe8cb508 JVM_MonitorWait + 0x29c

0xfc40e548 * java.lang.Object.wait(long) bci:0 (Interpreted frame)

0xfc40e4f4 * java.lang.Object.wait(long) bci:0 (Interpreted frame)

0xfc405a10 * java.lang.Object.wait() bci:2 line:485 (Interpreted frame)

... more lines removed here to reduce output...

----------------- t@12 -----------------

0xff2bfe3c __lwp_park + 0x10

0xfe9925e4 AttachOperation*AttachListener::dequeue() + 0x148

0xfe99115c void attach_listener_thread_entry(JavaThread*,Thread*) + 0x1fc

0xfec99ad8 void JavaThread::thread_main_inner() + 0x48

0xfec139f4 java_start + 0x118

0xff2bfd9c _lwp_start

----------------- t@13 -----------------

0xff2c1500 _door_return + 0xc

----------------- t@14 -----------------

0xff2c1500 _door_return + 0xc

Frames that are prefixed with an asterisk (*) are Java frames, whereas frames that are not prefixed with an asterisk are native C/C++ frames.

The output of the utility can be piped through c++filt to demangle C++ mangled symbol names. Because the Java HotSpot VM is developed in the C++ language, the jhsdb jstack utility prints C++ mangled symbol names for the Java HotSpot internal functions.

The c++filt utility is delivered with the native C++ compiler suite: SUNWspro on the Oracle Solaris operating system and GNU on Linux.

The jstat Utility

The jstat utility uses the built-in instrumentation in the Java HotSpot VM to provide information about performance and resource consumption of running applications.

The tool can be used when diagnosing performance issues, and in particular issues related to heap sizing and garbage collection. The jstat utility does not require the VM to be started with any special options. The built-in instrumentation in the Java HotSpot VM is enabled by default. This utility is included in the JDK download for all operating system platforms supported by Oracle.

Note:

The instrumentation is not accessible on a FAT32 file system.

See jstat in the Java Platform, Standard Edition Tools Reference.

The jstat utility uses the virtual machine identifier (VMID) to identify the target process. The documentation describes the syntax of the VMID, but its only required component is the local virtual machine identifier (LVMID). The LVMID is typically (but not always) the operating system's PID for the target JVM process.
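When the target is an application you control, the application itself can report the PID that typically serves as its LVMID. The following is a small sketch that assumes JDK 9 or later (for ProcessHandle); the class name LvmidSketch and the method name currentPid are hypothetical.

```java
public class LvmidSketch {

    /** Returns the operating system PID of the current JVM process. */
    static long currentPid() {
        return ProcessHandle.current().pid();
    }

    public static void main(String[] args) {
        // Print a jstat command line that targets this JVM.
        System.out.println("jstat -gcutil " + currentPid() + " 250 7");
    }
}
```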

The jstat utility provides data similar to the data provided by the vmstat and iostat utilities on Oracle Solaris and Linux operating systems.

For a graphical representation of the data, you can use the visualgc tool. See The visualgc Tool.

The following example illustrates the use of the -gcutil option, where the jstat utility attaches to LVMID number 2834 and takes 7 samples at 250-millisecond intervals.

$ jstat -gcutil 2834 250 7

S0 S1 E O M YGC YGCT FGC FGCT GCT

0.00 99.74 13.49 7.86 95.82 3 0.124 0 0.000 0.124

0.00 99.74 13.49 7.86 95.82 3 0.124 0 0.000 0.124

0.00 99.74 13.49 7.86 95.82 3 0.124 0 0.000 0.124

0.00 99.74 13.49 7.86 95.82 3 0.124 0 0.000 0.124

0.00 99.74 13.49 7.86 95.82 3 0.124 0 0.000 0.124

0.00 99.74 13.49 7.86 95.82 3 0.124 0 0.000 0.124

0.00 99.74 13.49 7.86 95.82 3 0.124 0 0.000 0.124

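In this output, all seven samples are identical, so no collection occurred during the sampling period. The YGC column shows that 3 young generation collections had already occurred, taking a total of 0.124 seconds (YGCT), and the FGC column shows that no full collections had occurred. If a young generation collection occurs between two samples, YGC and YGCT increase from one row to the next, and old space utilization (O) typically rises as surviving objects are promoted to the old space.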

The following example illustrates the use of the -gcnew option where the jstat utility attaches to LVMID number 2834, takes samples at 250-millisecond intervals, and displays the output. In addition, it uses the -h3 option to display the column headers after every 3 lines of data.

$ jstat -gcnew -h3 2834 250

S0C S1C S0U S1U TT MTT DSS EC EU YGC YGCT

192.0 192.0 0.0 0.0 15 15 96.0 1984.0 942.0 218 1.999

192.0 192.0 0.0 0.0 15 15 96.0 1984.0 1024.8 218 1.999

192.0 192.0 0.0 0.0 15 15 96.0 1984.0 1068.1 218 1.999

S0C S1C S0U S1U TT MTT DSS EC EU YGC YGCT

192.0 192.0 0.0 0.0 15 15 96.0 1984.0 1109.0 218 1.999

192.0 192.0 0.0 103.2 1 15 96.0 1984.0 0.0 219 2.019

192.0 192.0 0.0 103.2 1 15 96.0 1984.0 71.6 219 2.019

S0C S1C S0U S1U TT MTT DSS EC EU YGC YGCT

192.0 192.0 0.0 103.2 1 15 96.0 1984.0 73.7 219 2.019

192.0 192.0 0.0 103.2 1 15 96.0 1984.0 78.0 219 2.019

192.0 192.0 0.0 103.2 1 15 96.0 1984.0 116.1 219 2.019

In addition to showing the repeating header string, this example shows that between the fourth and fifth samples, a young generation collection occurred, whose duration was 0.02 seconds. The collection found enough live data that the survivor space 1 utilization (S1U) would have exceeded the desired survivor size (DSS). As a result, objects were promoted to the old generation (not visible in this output), and the tenuring threshold (TT) was lowered from 15 to 1.
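The reasoning above (a collection is visible where YGC increases, and its duration is the change in YGCT) can be sketched in code. The following example is an illustration only: the class name GcNewDeltaSketch and the method name describeCollections are hypothetical, and the column positions are taken from the -gcnew header shown in the sample output. It expects data rows only, with the header rows already removed.

```java
import java.util.List;
import java.util.Locale;

public class GcNewDeltaSketch {

    // Zero-based column positions in the -gcnew data rows:
    // S0C S1C S0U S1U TT MTT DSS EC EU YGC YGCT
    private static final int TT = 4;
    private static final int YGC = 9;
    private static final int YGCT = 10;

    /** Reports each young collection visible between consecutive data rows. */
    static String describeCollections(List<String> dataRows) {
        StringBuilder report = new StringBuilder();
        String[] previous = null;

        for (String row : dataRows) {
            String[] current = row.trim().split("\\s+");
            if (previous != null
                    && Long.parseLong(current[YGC]) > Long.parseLong(previous[YGC])) {
                // Duration of the collection(s) = change in cumulative YGCT.
                double seconds = Double.parseDouble(current[YGCT])
                        - Double.parseDouble(previous[YGCT]);
                report.append(String.format(Locale.ROOT,
                        "YGC %s -> %s: %.3f s, TT %s -> %s%n",
                        previous[YGC], current[YGC], seconds,
                        previous[TT], current[TT]));
            }
            previous = current;
        }
        return report.toString();
    }

    public static void main(String[] args) {
        // The fourth and fifth samples from the output above.
        List<String> rows = List.of(
                "192.0 192.0 0.0 0.0 15 15 96.0 1984.0 1109.0 218 1.999",
                "192.0 192.0 0.0 103.2 1 15 96.0 1984.0 0.0 219 2.019");
        System.out.print(describeCollections(rows));
    }
}
```

Applied to the fourth and fifth samples, the sketch reports one collection of about 0.020 seconds and the tenuring threshold change from 15 to 1, which matches the interpretation given above.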

The following example illustrates the use of the -gcoldcapacity option, where the jstat utility attaches to LVMID number 21891 and takes 3 samples at 250-millisecond intervals. The -t option is used to generate a time stamp for each sample in the first column.

$ jstat -gcoldcapacity -t 21891 250 3

Timestamp OGCMN OGCMX OGC OC YGC FGC FGCT GCT

150.1 1408.0 60544.0 11696.0 11696.0 194 80 2.874 3.799

150.4 1408.0 60544.0 13820.0 13820.0 194 81 2.938 3.863

150.7 1408.0 60544.0 13820.0 13820.0 194 81 2.938 3.863

The Timestamp column reports the elapsed time in seconds since the start of the target JVM. In addition, the -gcoldcapacity output shows the old generation capacity (OGC) and the old space capacity (OC) increasing as the heap expands to meet the allocation or promotion demands. The OGC has grown from 11696 KB to 13820 KB after the 81st full garbage collection, as counted in the FGC column. The maximum capacity of the generation (and space) is 60544 KB (OGCMX), so it still has room to expand.
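Cumulative collection counts and times comparable to the YGC, FGC, and GCT columns can also be read from inside the application through the java.lang.management API. The following is a minimal sketch of that alternative; it is not the mechanism that jstat uses, and the class name GcCountersSketch and the method name snapshot are hypothetical. The collector names it prints depend on which garbage collector the JVM is running.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.Locale;

public class GcCountersSketch {

    /** Returns one line per collector with its cumulative count and time. */
    static String snapshot() {
        StringBuilder out = new StringBuilder();
        for (GarbageCollectorMXBean gc
                : ManagementFactory.getGarbageCollectorMXBeans()) {
            // getCollectionCount() and getCollectionTime() return -1 when
            // the value is undefined for a collector; the time is an
            // approximate accumulated value in milliseconds.
            out.append(String.format(Locale.ROOT,
                    "%s count=%d time=%.3f s%n",
                    gc.getName(),
                    gc.getCollectionCount(),
                    gc.getCollectionTime() / 1000.0));
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.print(snapshot());
    }
}
```

Calling snapshot periodically and comparing successive values gives a rough in-process equivalent of watching the counters change between jstat samples.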

The visualgc Tool

The visualgc tool provides a graphical view of the garbage collection (GC) system.

The visualgc tool is related to the jstat tool. See The jstat Utility. As with jstat, it uses the built-in instrumentation of the Java HotSpot VM.

The visualgc tool is not included in the JDK release, but is available as a separate download from the jvmstat technology page.

Figure 2-16 shows how the GC and heap are visualized.

Figure 2-16 Sample Output from visualgc


Control+Break Handler

Pressing the Control key and the backslash (\) key at the application console on Oracle Solaris or Linux, or the Control and Break keys on Windows, causes the Java HotSpot VM to print a thread dump.

On Oracle Solaris or Linux operating systems, the combination of pressing the Control key and the backslash (\) key at the application console (standard input) causes the Java HotSpot VM to print a thread dump to the application's standard output. On Windows, the equivalent key sequence is the Control and Break keys. The general term for these key combinations is the Control+Break handler.

On Oracle Solaris and Linux operating systems, a thread dump is printed if the Java process receives a quit signal. Therefore, the kill -QUIT pid command causes the process with the ID pid to print a thread dump to standard output.

The following sections describe the data traced by the Control+Break handler:

-

Thread Dump

-

Detect Deadlocks

-

Heap Summary

Thread Dump

The thread dump consists of the thread stack, including the thread state, for all Java threads in the virtual machine.

The thread dump does not terminate the application: it continues after the thread information is printed.

The following example illustrates a thread dump.

Full thread dump Java HotSpot(TM) Client VM (1.6.0-rc-b100 mixed mode):

"DestroyJavaVM" prio=10 tid=0x00030400 nid=0x2 waiting on condition [0x00000000..0xfe77fbf0]

java.lang.Thread.State: RUNNABLE

"Thread2" prio=10 tid=0x000d7c00 nid=0xb waiting for monitor entry [0xf36ff000..0xf36ff8c0]

java.lang.Thread.State: BLOCKED (on object monitor)

at Deadlock$DeadlockMakerThread.run(Deadlock.java:32)

- waiting to lock <0xf819a938> (a java.lang.String)

- locked <0xf819a970> (a java.lang.String)

"Thread1" prio=10 tid=0x000d6c00 nid=0xa waiting for monitor entry [0xf37ff000..0xf37ffbc0]

java.lang.Thread.State: BLOCKED (on object monitor)

at Deadlock$DeadlockMakerThread.run(Deadlock.java:32)

- waiting to lock <0xf819a970> (a java.lang.String)

- locked <0xf819a938> (a java.lang.String)

"Low Memory Detector" daemon prio=10 tid=0x000c7800 nid=0x8 runnable [0x00000000..0x00000000]

java.lang.Thread.State: RUNNABLE

"CompilerThread0" daemon prio=10 tid=0x000c5400 nid=0x7 waiting on condition [0x00000000..0x00000000]

java.lang.Thread.State: RUNNABLE

"Signal Dispatcher" daemon prio=10 tid=0x000c4400 nid=0x6 waiting on condition [0x00000000..0x00000000]

java.lang.Thread.State: RUNNABLE

"Finalizer" daemon prio=10 tid=0x000b2800 nid=0x5 in Object.wait() [0xf3f7f000..0xf3f7f9c0]

java.lang.Thread.State: WAITING (on object monitor)

at java.lang.Object.wait(Native Method)

- waiting on <0xf4000b40> (a java.lang.ref.ReferenceQueue$Lock)

at java.lang.ref.ReferenceQueue.remove(ReferenceQueue.java:116)

- locked <0xf4000b40> (a java.lang.ref.ReferenceQueue$Lock)

at java.lang.ref.ReferenceQueue.remove(ReferenceQueue.java:132)

at java.lang.ref.Finalizer$FinalizerThread.run(Finalizer.java:159)