canal1.1.5 同步mysql数据到ES7.X

目录

- 安装:canal.deployer服务端

- 配置mysql

- 授权canal链接 MySQL 账号具有作为 MySQL slave的权限

- 跑example

- 启动

- 客户端

- 依赖

- 客户端测试代码

- 测试

- 使用`canal.adapter`客户端适配器同步数据到`ES`:

- 数组:

- 多`instance`:

- 实例:

https://blog.csdn.net/qq_32254003/article/details/76837638

https://github.com/alibaba/canal/wiki/QuickStart

安装:canal.deployer服务端

版本:1.1.5

下载:https://github.com/alibaba/canal/releases

注意:canal1.1.5开始才支持同步数据到ES7,哭唧唧,忙活半天,才发现

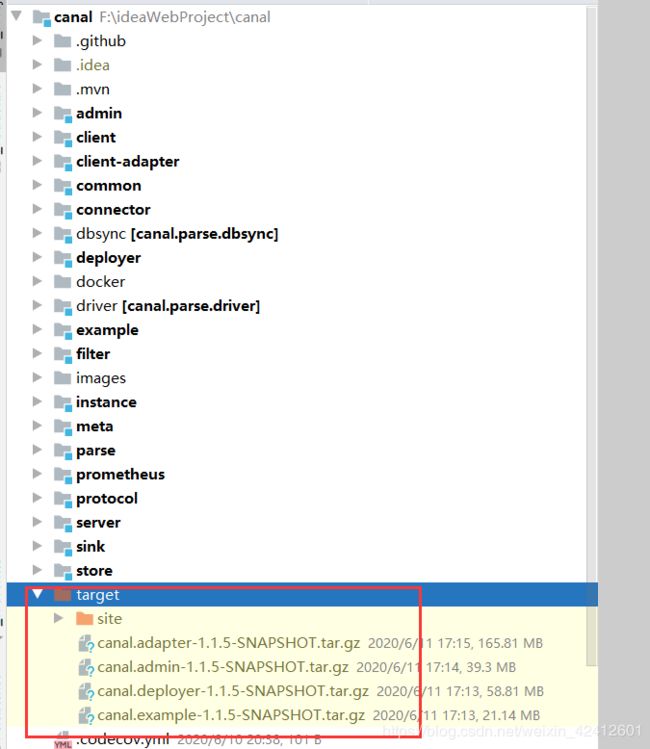

自己编译:

git clone git@github.com:alibaba/canal.git

git co canal-$version #切换到对应的版本上

mvn clean install -Denv=release -Dmaven.test.skip=true --settings G:\.m2\settings-sxw.xml

执行完成后,会在canal工程根目录下生成一个target目录,里面会包含一个 canal.deployer-$verion.tar.gz

配置mysql

[mysqld]

#开启binlog

log-bin=mysql-bin

#选择ROW模式

binlog-format=ROW

# 配置 MySQL replaction 需要定义,不要和 canal 的 slaveId 重复

server_id=1

重启mysql服务

如果mysql没有配置远程连接需要配置一下:https://blog.csdn.net/qq_38257857/article/details/103700314

授权canal链接 MySQL 账号具有作为 MySQL slave的权限

如果已有账户可直接grant,如果使用root就可以省略这一步。

CREATE USER canal IDENTIFIED BY 'canal';

GRANT SELECT, REPLICATION SLAVE, REPLICATION CLIENT ON *.* TO 'canal'@'%';

-- GRANT ALL PRIVILEGES ON *.* TO 'canal'@'%' ;

FLUSH PRIVILEGES;

跑example

解压canal.deployer-1.1.5-SNAPSHOT.tar.gz,deployer/conf/example目录下:vi instance.properties

需要修改的配置:

#canal示例的slaveId

canal.instance.mysql.slaveId=1234

#mysql地址

canal.instance.master.address= ip:3306

#用户名

canal.instance.dbUsername=root

#密码

canal.instance.dbPassword=123456

#指定需要同步的数据库

canal.instance.defaultDatabaseName =item

#指定编码方式

canal.instance.connectionCharset = UTF-8

#监控的是所有数据库,所有的表改动都会监控到,这样可能会浪费不少性能,可能我只想监控的是某一个数据库下的表。

# .*\\..*表示监控所有数据库,canal\\..*表示监控canal数据库

#如果要在官方给例子中看到效果,connector.subscribe("canal\\..*"); 和这里要一致

canal.instance.filter.regex=item\\..*

启动

bin下: ./startup.sh

如果执行sh文件出现:没有那个文件或目录的问题

解决:http://blog.sina.com.cn/s/blog_70a150570102ys0o.html

或出现-bash: ./startup.sh: /bin/bash^M: 坏的解释器: 没有那个文件或目录

解决:[root@localhost bin]# sed -i ‘s/\r$//’ startup.sh

查看 server 日志:

deployer/logs/canal目录下:tail -50f canal.log

2020-06-11 02:35:03.785 [main] INFO com.alibaba.otter.canal.deployer.CanalLauncher - ## set default uncaught exception handler

2020-06-11 02:35:03.829 [main] INFO com.alibaba.otter.canal.deployer.CanalLauncher - ## load canal configurations

2020-06-11 02:35:03.839 [main] INFO com.alibaba.otter.canal.deployer.CanalStarter - ## start the canal server.

2020-06-11 02:35:03.874 [main] INFO com.alibaba.otter.canal.deployer.CanalController - ## start the canal server[192.168.63.129(192.168.63.129):11111]

2020-06-11 02:35:04.984 [main] INFO com.alibaba.otter.canal.deployer.CanalStarter - ## the canal server is running now ...... ......

查看 instance 的日志:

deployer/logs/example目录下:tail -550f example.log

2020-06-11 03:03:50.299 [main] INFO c.a.o.c.i.spring.support.PropertyPlaceholderConfigurer - Loading properties file from class path resource [example/instance.properties]

2020-06-11 03:03:50.790 [main] INFO c.a.otter.canal.instance.spring.CanalInstanceWithSpring - start CannalInstance for 1-example

2020-06-11 03:03:50.806 [main] WARN c.a.o.canal.parse.inbound.mysql.dbsync.LogEventConvert - --> init table filter : ^item\..*$

2020-06-11 03:03:50.806 [main] WARN c.a.o.canal.parse.inbound.mysql.dbsync.LogEventConvert - --> init table black filter :

2020-06-11 03:03:50.816 [main] INFO c.a.otter.canal.instance.core.AbstractCanalInstance - start successful....

客户端

创建一个maven工程。

依赖

<!--canal-->

<dependency>

<groupId>com.alibaba.otter</groupId>

<artifactId>canal.client</artifactId>

<version>1.1.0</version>

</dependency>

客户端测试代码

import java.net.InetSocketAddress;

import java.util.List;

import com.alibaba.otter.canal.client.CanalConnectors;

import com.alibaba.otter.canal.client.CanalConnector;

import com.alibaba.otter.canal.common.utils.AddressUtils;

import com.alibaba.otter.canal.protocol.Message;

import com.alibaba.otter.canal.protocol.CanalEntry.Column;

import com.alibaba.otter.canal.protocol.CanalEntry.Entry;

import com.alibaba.otter.canal.protocol.CanalEntry.EntryType;

import com.alibaba.otter.canal.protocol.CanalEntry.EventType;

import com.alibaba.otter.canal.protocol.CanalEntry.RowChange;

import com.alibaba.otter.canal.protocol.CanalEntry.RowData;

public class SimpleCanalClientExample {

public static void main(String args[]) {

// 创建链接

CanalConnector connector = CanalConnectors.newSingleConnector(

new InetSocketAddress("canal服务器ip", 11111), "example", "", "");

int batchSize = 1000;

int emptyCount = 0;

try {

connector.connect();

connector.subscribe(".*\\..*");

connector.rollback();

int totalEmptyCount = 120;

while (emptyCount < totalEmptyCount) {

Message message = connector.getWithoutAck(batchSize); // 获取指定数量的数据

long batchId = message.getId();

int size = message.getEntries().size();

if (batchId == -1 || size == 0) {

emptyCount++;

System.out.println("empty count : " + emptyCount);

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

}

} else {

emptyCount = 0;

// System.out.printf("message[batchId=%s,size=%s] \n", batchId, size);

printEntry(message.getEntries());

}

connector.ack(batchId); // 提交确认

// connector.rollback(batchId); // 处理失败, 回滚数据

}

System.out.println("empty too many times, exit");

} finally {

connector.disconnect();

}

}

private static void printEntry(List<Entry> entrys) {

for (Entry entry : entrys) {

if (entry.getEntryType() == EntryType.TRANSACTIONBEGIN || entry.getEntryType() == EntryType.TRANSACTIONEND) {

continue;

}

RowChange rowChage = null;

try {

rowChage = RowChange.parseFrom(entry.getStoreValue());

} catch (Exception e) {

throw new RuntimeException("ERROR ## parser of eromanga-event has an error , data:" + entry.toString(),

e);

}

EventType eventType = rowChage.getEventType();

System.out.println(String.format("================> binlog[%s:%s] , name[%s,%s] , eventType : %s",

entry.getHeader().getLogfileName(), entry.getHeader().getLogfileOffset(),

entry.getHeader().getSchemaName(), entry.getHeader().getTableName(),

eventType));

for (RowData rowData : rowChage.getRowDatasList()) {

if (eventType == EventType.DELETE) {

printColumn(rowData.getBeforeColumnsList());

} else if (eventType == EventType.INSERT) {

printColumn(rowData.getAfterColumnsList());

} else {

System.out.println("-------> before");

printColumn(rowData.getBeforeColumnsList());

System.out.println("-------> after");

printColumn(rowData.getAfterColumnsList());

}

}

}

}

private static void printColumn(List<Column> columns) {

for (Column column : columns) {

System.out.println(column.getName() + " : " + column.getValue() + " update=" + column.getUpdated());

}

}

}

测试

启动canal服务端deployer,启动Canal Client后,可以从控制台从看到类似消息:

empty count : 1

empty count : 2

empty count : 3

empty count : 4

注意:这个客户端会有一个问题,之前在配置服务端的时候,指定了只监控canal.instance.filter.regex=item\\..*,item这个数据库,但是测试的时候,修改别的库,也会被监听到。

只需要修改客户端代码:之所以会出现配置不生效的原因,是因为代码配的把配置文件里配的给覆盖了。

connector.subscribe("item\\..*");

使用canal.adapter客户端适配器同步数据到ES:

解压canal.adapter-1.1.5-SNAPSHOT.tar.gz;

adapter/conf目录下,修改配置文件application.yml同时启动zookeeper。

zookeeperHosts: ip:2181

canal.tcp.zookeeper.hosts: ip:2181

srcDataSources:

defaultDS:

url: jdbc:mysql://ip:3306/item?useUnicode=true

username: root

password: 123456

canalAdapters:

- instance: example # canal instance Name or mq topic name

groups:

- groupId: g1

outerAdapters:

- name: es7

key: exampleKey

hosts: ip:9200 # 127.0.0.1:9200 for rest mode

properties:

mode: rest # or rest

# security.auth: test:123456 # only used for rest mode

cluster.name: test

es7目录下:

vi item.yml item是我的索引的名字,注意:我这里,同步之前,索引已经事先在ES里创建好了

dataSourceKey: defaultDS

outerAdapterKey: exampleKey # 对应application.yml中es配置的key

destination: example

groupId: g1

esMapping:

_index: item

_id: _id

upsert: true

sql: "select id as _id,title,brand,category,images,price from item"

commitBatch: 3000

这里还有一个小小的问题:因为这里是使用id as _id作为es文档的主键,数据同步过去后,就会发现id字段没了,因为id字段拿去充当文档主键_id了。

解决:

select id as _id,id,title,brand,category,images,price from item

启动:bin目录下启动

20-06-12 16:37:42.964 [main] INFO c.a.o.canal.adapter.launcher.loader.CanalAdapterService - ## the canal client adapters are running now ......

2020-06-12 16:37:42.970 [main] INFO org.apache.coyote.http11.Http11NioProtocol - Starting ProtocolHandler ["http-nio-8081"]

2020-06-12 16:37:42.985 [Thread-4] INFO c.a.otter.canal.adapter.launcher.loader.AdapterProcessor - =============> Start to connect destination: example <=============

2020-06-12 16:37:43.189 [main] INFO org.apache.tomcat.util.net.NioSelectorPool - Using a shared selector for servlet write/read

2020-06-12 16:37:44.006 [main] INFO o.s.boot.web.embedded.tomcat.TomcatWebServer - Tomcat started on port(s): 8081 (http) with context path ''

2020-06-12 16:37:44.036 [main] INFO c.a.otter.canal.adapter.launcher.CanalAdapterApplication - Started CanalAdapterApplication in 8.485 seconds (JVM running for 9.213)

2020-06-12 16:37:44.096 [Thread-4] INFO c.a.otter.canal.adapter.launcher.loader.AdapterProcessor - =============> Subscribe destination: example succeed <=============

测试:

1.全量导入数据

//端口是`adapter`的端口,有key加上key,没有就不用

curl http://127.0.0.1:9182/etl/es7/item.yml -X POST

curl http://127.0.0.1:8081/etl/es7/exampleKey/item.yml -X POST

[root@localhost ~]# curl http://127.0.0.1:8081/etl/es7/exampleKey/item.yml -X POST

{"succeeded":true,"resultMessage":"导入ES 数据:6 条"}[root@localhost ~]#

2.数据库修改数据,然后到ES查看,有没有同时修改过来。

如果测试通过的话,就大功告成啦!

下面这些不用看。这些是我自己备份的东西以及踩过的一些坑…

数组:

正确的方式:先用子查询把数组字段聚合,再left join

select a.catalog_id as _id,a.catalog_name as catalogName,a.catalog_status as catalogStatus,a.page_views as pageViews,a.data_provide as dataProvide,a.update_time as updateTime,b.dicValList

from kf_data_catalog a left JOIN

(SELECT catalog_id,group_concat(dic_val order by catalog_id desc separator ';') as dicValList

from kf_catalog_tag GROUP BY catalog_id) b on a.catalog_id=b.catalog_id;

尝试把数组拼接操作放到前面:查询结果错误

SELECT a.catalog_id,a.catalog_name,a.catalog_status,a.page_views,a.data_provide,a.update_time,

group_concat(b.dic_val order by a.catalog_id desc separator ';')

from kf_data_catalog a LEFT JOIN kf_catalog_tag b on a.catalog_id=b.catalog_id;

子查询把数组字段聚合

SELECT catalog_id,group_concat(dic_val order by catalog_id desc separator ';') as dicValList

from kf_catalog_tag GROUP BY catalog_id

[search@yunqi002 es]$ curl http://127.0.0.1:8081/etl/es/exampleKey/datainterface.yml -X POST

{"succeeded":true,"resultMessage":"导入ES 数据:9032 条"}[search@yunqi002 es]$ curl http://127.0.0.1:8081/etl/es/exampleKey/datainterface.yml -X POST^C

[search@yunqi002 es]$ curl http://127.0.0.1:8081/etl/es/exampleKey/datacatalog.yml -X POST

{"succeeded":true,"resultMessage":"导入ES 数据:20002 条"}[search@yunqi002 es]$ curl http://127.0.0.1:8081/etl/es/exampleKey/datacatalog.yml -X POST^C

[search@yunqi002 es]$ curl http://127.0.0.1:8081/etl/es/exampleKey/dataset.yml -X POST

{"succeeded":true,"resultMessage":"导入ES 数据:14028 条"}[search@yunqi002 es]$

多instance:

https://blog.csdn.net/javaee_sunny/article/details/91349907

实例:

我的配置文件:canal.adapter-1.1.4 的

application.yml:

server:

port: 8081

spring:

jackson:

date-format: yyyy-MM-dd HH:mm:ss

time-zone: GMT+8

default-property-inclusion: non_null

canal.conf:

mode: tcp # kafka rocketMQ

canalServerHost: 127.0.0.1:11111

# zookeeperHosts: slave1:2181

# mqServers: 127.0.0.1:9092 #or rocketmq

# flatMessage: true

batchSize: 500

syncBatchSize: 1000

retries: 0

timeout:

accessKey:

secretKey:

srcDataSources:

defaultDS:

url: jdbc:mysql://ip:3306/kf_data_open?useUnicode=true

username: canal

password: canal

canalAdapters:

- instance: example # canal instance Name or mq topic name

groups:

- groupId: g1

outerAdapters:

- name: es

key: exampleKey

hosts: ip:9200 # 127.0.0.1:9200 for rest mode

properties:

mode: rest # transport or rest

# security.auth: test:123456 # only used for rest mode

cluster.name: dataopendev

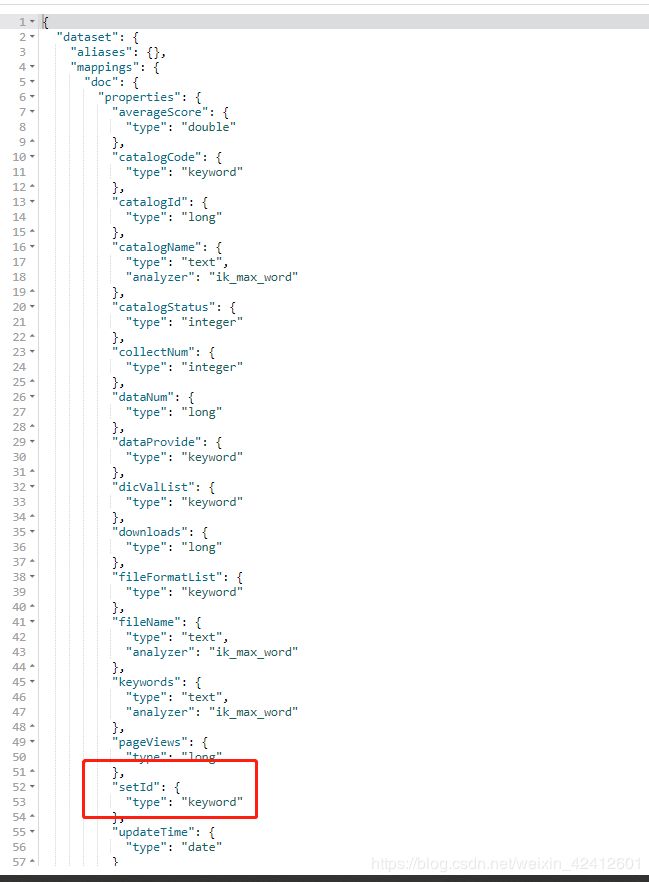

es目录下的yml:

dataset.yml:

dataSourceKey: defaultDS

destination: example

outerAdapterKey: exampleKey #对应上文中的key 这个key很重要 再强调一次

groupId: g1

esMapping:

_index: dataset

_type: doc

_id: _id

#pk: catalog_id

upsert: true

sql: "SELECT a.set_id as _id,a.set_id as setId,a.page_views as pageViews,a.downloads,a.data_num as dataNum,a.average_score as averageScore,a.collect_num as collectNum,a.update_time as updateTime,b.fileFormatList,b.fileName,c.catalog_id as catalogId,c.keywords,c.catalog_code as catalogCode,c.data_provide as dataProvide,c.catalog_status as catalogStatus,d.dicValList,c.catalog_name as catalogName from kf_data_set a left JOIN (SELECT set_id,group_concat( file_name order by set_id desc separator ';' ) as fileName ,group_concat( file_format order by set_id desc separator ';' ) as fileFormatList from kf_files_info GROUP BY set_id)b on a.set_id=b.set_id left JOIN kf_data_catalog c on a.catalog_id=c.catalog_id left JOIN (select catalog_id,group_concat( dic_val order by catalog_id desc separator ';' ) as dicValList from kf_catalog_tag group by catalog_id ) d on a.catalog_id=d.catalog_id"

objFields:

dicValList: array:;

fileFormatList: array:;

fileName: array:;

# etlCondition: "where a.c_time>={}"

commitBatch: 3000

这里第一个a.set_id as _id 用来映射文档主键_id,a.set_id as setId 用来映射文档的setId 字段。