动手学深度学习--线性回归、softmax与分类模型、多层感知机

说明:

该笔记是学习课程《动手学深度学习-pytorch实现》的笔记。pytorch零基础

1. 线性回归

1.1. 线性回归的基本要素

模型

y = X W + b y = XW + b y=XW+b

损失函数

l ( i ) ( w , b ) = 1 2 ( y ^ ( i ) − y ( i ) ) 2 , l^{(i)}(\mathbf{w}, b) = \frac{1}{2} \left(\hat{y}^{(i)} - y^{(i)}\right)^2, l(i)(w,b)=21(y^(i)−y(i))2,

L ( w , b ) = 1 n ∑ i = 1 n l ( i ) ( w , b ) = 1 n ∑ i = 1 n 1 2 ( w ⊤ x ( i ) + b − y ( i ) ) 2 . L(\mathbf{w}, b) =\frac{1}{n}\sum_{i=1}^n l^{(i)}(\mathbf{w}, b) =\frac{1}{n} \sum_{i=1}^n \frac{1}{2}\left(\mathbf{w}^\top \mathbf{x}^{(i)} + b - y^{(i)}\right)^2. L(w,b)=n1i=1∑nl(i)(w,b)=n1i=1∑n21(w⊤x(i)+b−y(i))2.

优化函数–随机梯度下降

在深度学习算法中常用小批量随机梯度下降。它的算法很简单:先选取一组模型参数的初始值,如随机选取;接下来对参数进行多次迭代,使每次迭代都可能降低损失函数的值。在每次迭代中,先随机均匀采样一个由固定数目训练数据样本所组成的小批量(mini-batch) B \mathcal{B} B,然后求小批量中数据样本的平均损失有关模型参数的导数(梯度),最后用此结果与预先设定的一个正数的乘积作为模型参数在本次迭代的减小量。

( w , b ) ← ( w , b ) − η ∣ B ∣ ∑ i ∈ B ∂ ( w , b ) l ( i ) ( w , b ) (\mathbf{w},b) \leftarrow (\mathbf{w},b) - \frac{\eta}{|\mathcal{B}|} \sum_{i \in \mathcal{B}} \partial_{(\mathbf{w},b)} l^{(i)}(\mathbf{w},b) (w,b)←(w,b)−∣B∣ηi∈B∑∂(w,b)l(i)(w,b)

学习率: η \eta η代表在每次优化中,能够学习的步长的大小

批量大小: B \mathcal{B} B是小批量计算中的批量大小batch size

总结一下,优化函数的有以下两个步骤:

- (i)初始化模型参数,一般来说使用随机初始化;

- (ii)我们在数据上迭代多次,通过在负梯度方向移动参数来更新每个参数。

1.2 线性回归模型从零开始实现

# import packages and modules

%matplotlib inline

import torch

from IPython import display

from matplotlib import pyplot as plt

import numpy as np

import random

print(torch.__version__)

生成数据集

使用线性模型来生成数据集,生成一个1000个样本的数据集,下面是用来生成数据的线性关系:

p r i c e = w a r e a ⋅ a r e a + w a g e ⋅ a g e + b \mathrm{price} = w_{\mathrm{area}} \cdot \mathrm{area} + w_{\mathrm{age}} \cdot \mathrm{age} + b price=warea⋅area+wage⋅age+b

# set input feature number

num_inputs = 2

# set example number

num_examples = 1000

# set true weight and bias in order to generate corresponded label

true_w = [2, -3.4]

true_b = 4.2

features = torch.randn(num_examples, num_inputs,dtype=torch.float32)

labels = true_w[0] * features[:,0] + true_w[1] * features[:,1] + true_b

labels += torch.tensor(np.random.normal(0,0.01,size=labels.size()),dtype=torch.float32) # 添加噪声

使用图像来展示生成的数据

plt.scatter(features[:,1].numpy(),labels.numpy(),1)

读取数据

def data_iter(batch_size, features, labels):

num_examples = len(features)

indices = list(range(num_examples))

random.shuffle(indices) # random read 10 samples

for i in range(0, num_examples, batch_size):

j = torch.LongTensor(indices[i: min(i + batch_size, num_examples)]) # the last time may be not enough for a whole batch

yield features.index_select(0, j), labels.index_select(0, j)

# index_select(dim,index):dim 表示从第几维挑选数据;index 表示从第一个参数维度中的哪些位置挑选数据。

batch_size = 10

for X, y in data_iter(batch_size, features, labels):

print(X, '\n', y)

break

初始化模型参数

w = torch.tensor(np.random.normal(0, 0.01, (num_inputs, 1)), dtype=torch.float32)

b = torch.zeros(1, dtype=torch.float32)

w.requires_grad_(requires_grad=True)

b.requires_grad_(requires_grad=True)

定义模型

def linreg(X,m,b):

return torch.mm(X,w) + b

定义损失函数

这里我们采用均方误差损失函数:

def squared_loss(y_hat,y):

return (y_hat - y.view(y_hat.size())) ** 2 /2

定义优化函数

def sgd(params, lr, batch_size):

for param in params:

param.data -= lr * param.grad/batch_size # ues .data to operate param without gradient track

训练

# super parameters init

lr = 0.03

num_epochs = 5

net = linreg

loss = squared_loss

# training

for epoch in range(num_epochs): # training repeats num_epochs times

# in each epoch, all the samples in dataset will be used once

# X is the feature and y is the label of a batch sample

for X, y in data_iter(batch_size, features, labels):

l = loss(net(X, w, b), y).sum()

# calculate the gradient of batch sample loss

l.backward()

# using small batch random gradient descent to iter model parameters

sgd([w, b], lr, batch_size)

# reset parameter gradient

w.grad.data.zero_()

b.grad.data.zero_()

train_l = loss(net(features, w, b), labels)

print('epoch %d, loss %f' % (epoch + 1, train_l.mean().item()))

w, true_w, b, true_b

1.3 线性回归模型使用pytorch的简洁实现

import torch

from torch import nn

import numpy as np

torch.manual_seed(1)

print(torch.__version__)

torch.set_default_tensor_type('torch.FloatTensor')

生成数据集

在这里生成数据集跟从零开始的实现中是完全一样的。

num_inputs = 2

num_examples = 1000

true_w = [2, -3.4]

true_b = 4.2

features = torch.tensor(np.random.normal(0,1,(num_examples,num_inputs)), dtype=torch.float)

labels = true_w[0] * features[:,0] + true_w[1] * features[:,1] + true_b

labels += torch.tensor(np.random.normal(0,0.01, size=labels.size()), dtype=torch.float)

读取数据集

import torch.utils.data as Data

batch_size = 10

# combine featues and labels of dataset

dataset = Data.TensorDataset(features, labels)

# put dataset into DataLoader

data_iter = Data.DataLoader(

dataset=dataset, # torch TensorDataset format

batch_size=batch_size, # mini batch size

shuffle=True, # whether shuffle the data or not

num_workers=2, # read data in multithreading

)

for X,y in data_iter:

print(X, '\n',y)

break

定义模型

class LinearNet(nn.Module):

def __init__(self, n_feature):

super(LinearNet, self).__init__() # call father function to init

self.linear = nn.Linear(n_feature, 1) # function prototype: `torch.nn.Linear(in_features, out_features, bias=True)`

def forward(self, x):

y = self.linear(x)

return y

net = LinearNet(num_inputs)

print(net)

# ways to init a multilayer network

# method one

net = nn.Sequential(

nn.Linear(num_inputs, 1)

# other layers can be added here

)

# method two

net = nn.Sequential()

net.add_module('linear', nn.Linear(num_inputs, 1))

# net.add_module ......

# method three

from collections import OrderedDict

net = nn.Sequential(OrderedDict([

('linear', nn.Linear(num_inputs, 1))

# ......

]))

print(net)

print(net[0])

初始化模型参数

from torch.nn import init

init.normal_(net[0].weight, mean=0.0, std=0.01)

init.constant_(net[0].bias, val=0.0) # or you can use `net[0].bias.data.fill_(0)` to modify it directly

for param in net.parameters():

print(param)

定义损失函数

loss = nn.MSELoss() # nn built-in squared loss function

# function prototype: `torch.nn.MSELoss(size_average=None, reduce=None, reduction='mean')`

定义优化函数

import torch.optim as optim

optimizer = optim.SGD(net.parameters(), lr=0.03) # built-in random gradient descent function

print(optimizer) # function prototype: `torch.optim.SGD(params, lr=, momentum=0, dampening=0, weight_decay=0, nesterov=False)`

训练

num_epochs = 3

for epoch in range(1, num_epochs + 1):

for X, y in data_iter:

output = net(X)

l = loss(output, y.view(-1, 1))

optimizer.zero_grad() # reset gradient, equal to net.zero_grad()

l.backward()

optimizer.step()

print('epoch %d, loss: %f' % (epoch, l.item()))

# result comparision

dense = net[0]

print(true_w, dense.weight.data)

print(true_b, dense.bias.data)

2 softmax与分类模型

2.1 softmax从零开始实现

import torch

import torchvision

import numpy as np

import sys

sys.path.append("/home/kesci/input")

import d2lzh1981 as d2l

print(torch.__version__)

print(torchvision.__version__)

获取训练集数据和测试集数据

batch_size = 256

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size, root='/home/kesci/input/FashionMNIST2065')

模型参数初始化

num_inputs = 784

print(28*28)

num_outputs = 10

W = torch.tensor(np.random.normal(0, 0.01, (num_inputs, num_outputs)), dtype=torch.float)

b = torch.zeros(num_outputs, dtype=torch.float)

W.requires_grad_(requires_grad=True)

b.requires_grad_(requires_grad=True)

定义softmax操作

def softmax(X):

X_exp = X.exp()

partition = X_exp.sum(dim=1, keepdim=True)

# print("X size is ", X_exp.size())

# print("partition size is ", partition, partition.size())

return X_exp / partition # 这里应用了广播机制

X = torch.rand((2, 5))

X_prob = softmax(X)

print(X_prob, '\n', X_prob.sum(dim=1))

softmax回归模型

def net(X):

return softmax(torch.mm(X.view((-1, num_inputs)), W) + b)

定义损失函数

H ( y ( i ) , y ^ ( i ) ) = − ∑ j = 1 q y j ( i ) log y ^ j ( i ) , H\left(\boldsymbol y^{(i)}, \boldsymbol {\hat y}^{(i)}\right ) = -\sum_{j=1}^q y_j^{(i)} \log \hat y_j^{(i)}, H(y(i),y^(i))=−j=1∑qyj(i)logy^j(i),

ℓ ( Θ ) = 1 n ∑ i = 1 n H ( y ( i ) , y ^ ( i ) ) , \ell(\boldsymbol{\Theta}) = \frac{1}{n} \sum_{i=1}^n H\left(\boldsymbol y^{(i)}, \boldsymbol {\hat y}^{(i)}\right ), ℓ(Θ)=n1i=1∑nH(y(i),y^(i)),

ℓ ( Θ ) = − ( 1 / n ) ∑ i = 1 n log y ^ y ( i ) ( i ) \ell(\boldsymbol{\Theta}) = -(1/n) \sum_{i=1}^n \log \hat y_{y^{(i)}}^{(i)} ℓ(Θ)=−(1/n)i=1∑nlogy^y(i)(i)

y_hat = torch.tensor([[0.1, 0.3, 0.6], [0.3, 0.2, 0.5]])

y = torch.LongTensor([0, 2])

y_hat.gather(1, y.view(-1, 1))

def cross_entropy(y_hat, y):

return - torch.log(y_hat.gather(1, y.view(-1, 1)))

定义准确率

def accuracy(y_hat, y):

return (y_hat.argmax(dim=1) == y).float().mean().item()

print(accuracy(y_hat, y))

#本函数已保存在d2lzh_pytorch包中方便以后使用。该函数将被逐步改进:它的完整实现将在“图像增广”一节中描述

def evaluate_accuracy(data_iter, net):

acc_sum, n = 0.0, 0

for X, y in data_iter:

acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()

n += y.shape[0]

return acc_sum / n

print(evaluate_accuracy(test_iter, net))

训练模型

num_epochs, lr = 5, 0.1

# 本函数已保存在d2lzh_pytorch包中方便以后使用

def train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size,

params=None, lr=None, optimizer=None):

for epoch in range(num_epochs):

train_l_sum, train_acc_sum, n = 0.0, 0.0, 0

for X, y in train_iter:

y_hat = net(X)

l = loss(y_hat, y).sum()

# 梯度清零

if optimizer is not None:

optimizer.zero_grad()

elif params is not None and params[0].grad is not None:

for param in params:

param.grad.data.zero_()

l.backward()

if optimizer is None:

d2l.sgd(params, lr, batch_size)

else:

optimizer.step()

train_l_sum += l.item()

train_acc_sum += (y_hat.argmax(dim=1) == y).sum().item()

n += y.shape[0]

test_acc = evaluate_accuracy(test_iter, net)

print('epoch %d, loss %.4f, train acc %.3f, test acc %.3f'

% (epoch + 1, train_l_sum / n, train_acc_sum / n, test_acc))

train_ch3(net, train_iter, test_iter, cross_entropy, num_epochs, batch_size, [W, b], lr)

模型预测

X, y = iter(test_iter).next()

true_labels = d2l.get_fashion_mnist_labels(y.numpy())

pred_labels = d2l.get_fashion_mnist_labels(net(X).argmax(dim=1).numpy())

titles = [true + '\n' + pred for true, pred in zip(true_labels, pred_labels)]

d2l.show_fashion_mnist(X[0:9], titles[0:9])

2.2 softmax简洁实现

# 加载各种包或者模块

import torch

from torch import nn

from torch.nn import init

import numpy as np

import sys

sys.path.append("/home/kesci/input")

import d2lzh1981 as d2l

print(torch.__version__)

初始化

batch_size = 256

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size, root='/home/kesci/input/FashionMNIST2065')

定义网络模型

num_inputs = 784

num_outputs = 10

class LinearNet(nn.Module):

def __init__(self, num_inputs, num_outputs):

super(LinearNet, self).__init__()

self.linear = nn.Linear(num_inputs, num_outputs)

def forward(self, x): # x 的形状: (batch, 1, 28, 28)

y = self.linear(x.view(x.shape[0], -1))

return y

# net = LinearNet(num_inputs, num_outputs)

class FlattenLayer(nn.Module):

def __init__(self):

super(FlattenLayer, self).__init__()

def forward(self, x): # x 的形状: (batch, *, *, ...)

return x.view(x.shape[0], -1)

from collections import OrderedDict

net = nn.Sequential(

# FlattenLayer(),

# LinearNet(num_inputs, num_outputs)

OrderedDict([

('flatten', FlattenLayer()),

('linear', nn.Linear(num_inputs, num_outputs))]) # 或者写成我们自己定义的 LinearNet(num_inputs, num_outputs) 也可以

)

初始化模型参数

init.normal_(net.linear.weight, mean=0, std=0.01)

init.constant_(net.linear.bias, val=0)

定义损失函数

loss = nn.CrossEntropyLoss() # 下面是他的函数原型

# class torch.nn.CrossEntropyLoss(weight=None, size_average=None, ignore_index=-100, reduce=None, reduction='mean')

定义优化函数

optimizer = torch.optim.SGD(net.parameters(), lr=0.1) # 下面是函数原型

# class torch.optim.SGD(params, lr=, momentum=0, dampening=0, weight_decay=0, nesterov=False)

训练

num_epochs = 5

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size, None, None, optimizer)

3. 多层感知机

3.1 多层感知机的基本知识

深度学习主要关注多层模型。在这里,我们将以多层感知机(multilayer perceptron,MLP)为例,介绍多层神经网络的概念。

隐藏层

下图展示了一个多层感知机的神经网络图,它含有一个隐藏层,该层中有5个隐藏单元。

![]()

表达公式

具体来说,给定一个小批量样本 X ∈ R n × d \boldsymbol{X} \in \mathbb{R}^{n \times d} X∈Rn×d,其批量大小为 n n n,输入个数为 d d d。假设多层感知机只有一个隐藏层,其中隐藏单元个数为 h h h。记隐藏层的输出(也称为隐藏层变量或隐藏变量)为 H \boldsymbol{H} H,有 H ∈ R n × h \boldsymbol{H} \in \mathbb{R}^{n \times h} H∈Rn×h。因为隐藏层和输出层均是全连接层,可以设隐藏层的权重参数和偏差参数分别为 W h ∈ R d × h \boldsymbol{W}_h \in \mathbb{R}^{d \times h} Wh∈Rd×h和 b h ∈ R 1 × h \boldsymbol{b}_h \in \mathbb{R}^{1 \times h} bh∈R1×h,输出层的权重和偏差参数分别为 W o ∈ R h × q \boldsymbol{W}_o \in \mathbb{R}^{h \times q} Wo∈Rh×q和 b o ∈ R 1 × q \boldsymbol{b}_o \in \mathbb{R}^{1 \times q} bo∈R1×q。

我们先来看一种含单隐藏层的多层感知机的设计。其输出 O ∈ R n × q \boldsymbol{O} \in \mathbb{R}^{n \times q} O∈Rn×q的计算为

H = X W h + b h , O = H W o + b o , \begin{aligned} \boldsymbol{H} &= \boldsymbol{X} \boldsymbol{W}_h + \boldsymbol{b}_h,\\ \boldsymbol{O} &= \boldsymbol{H} \boldsymbol{W}_o + \boldsymbol{b}_o, \end{aligned} HO=XWh+bh,=HWo+bo,

也就是将隐藏层的输出直接作为输出层的输入。如果将以上两个式子联立起来,可以得到

O = ( X W h + b h ) W o + b o = X W h W o + b h W o + b o . \boldsymbol{O} = (\boldsymbol{X} \boldsymbol{W}_h + \boldsymbol{b}_h)\boldsymbol{W}_o + \boldsymbol{b}_o = \boldsymbol{X} \boldsymbol{W}_h\boldsymbol{W}_o + \boldsymbol{b}_h \boldsymbol{W}_o + \boldsymbol{b}_o. O=(XWh+bh)Wo+bo=XWhWo+bhWo+bo.

从联立后的式子可以看出,虽然神经网络引入了隐藏层,却依然等价于一个单层神经网络:其中输出层权重参数为 W h W o \boldsymbol{W}_h\boldsymbol{W}_o WhWo,偏差参数为 b h W o + b o \boldsymbol{b}_h \boldsymbol{W}_o + \boldsymbol{b}_o bhWo+bo。不难发现,即便再添加更多的隐藏层,以上设计依然只能与仅含输出层的单层神经网络等价。

激活函数

上述问题的根源在于全连接层只是对数据做仿射变换(affine transformation),而多个仿射变换的叠加仍然是一个仿射变换。解决问题的一个方法是引入非线性变换,例如对隐藏变量使用按元素运算的非线性函数进行变换,然后再作为下一个全连接层的输入。这个非线性函数被称为激活函数(activation function)。

下面我们介绍几个常用的激活函数:

ReLU函数

ReLU(rectified linear unit)函数提供了一个很简单的非线性变换。给定元素 x x x,该函数定义为

ReLU ( x ) = max ( x , 0 ) . \text{ReLU}(x) = \max(x, 0). ReLU(x)=max(x,0).

可以看出,ReLU函数只保留正数元素,并将负数元素清零。为了直观地观察这一非线性变换,我们先定义一个绘图函数xyplot。

%matplotlib inline

import torch

import numpy as np

import matplotlib.pyplot as plt

import sys

sys.path.append("/home/kesci/input")

import d2lzh1981 as d2l

print(torch.__version__)

def xyplot(x_vals, y_vals, name):

# d2l.set_figsize(figsize=(5, 2.5))

plt.plot(x_vals.detach().numpy(), y_vals.detach().numpy())

plt.xlabel('x')

plt.ylabel(name + '(x)')

x = torch.arange(-8.0, 8.0, 0.1, requires_grad=True)

y = x.relu()

xyplot(x, y, 'relu') # 展示relu函数

y.sum().backward()

xyplot(x, x.grad, 'grad of relu') # 梯度图像

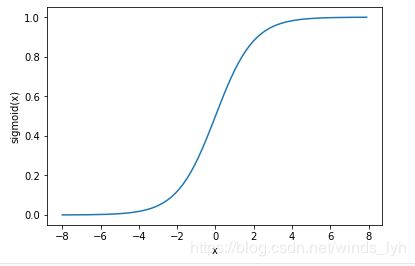

Sigmoid函数

sigmoid函数可以将元素的值变换到0和1之间:

sigmoid ( x ) = 1 1 + exp ( − x ) . \text{sigmoid}(x) = \frac{1}{1 + \exp(-x)}. sigmoid(x)=1+exp(−x)1.

y = x.sigmoid()

xyplot(x, y, 'sigmoid')

sigmoid ′ ( x ) = sigmoid ( x ) ( 1 − sigmoid ( x ) ) . \text{sigmoid}'(x) = \text{sigmoid}(x)\left(1-\text{sigmoid}(x)\right). sigmoid′(x)=sigmoid(x)(1−sigmoid(x)).

下面绘制了sigmoid函数的导数。当输入为0时,sigmoid函数的导数达到最大值0.25;当输入越偏离0时,sigmoid函数的导数越接近0。

x.grad.zero_()

y.sum().backward()

xyplot(x, x.grad, 'grad of sigmoid')

tanh函数

tanh(双曲正切)函数可以将元素的值变换到-1和1之间:

tanh ( x ) = 1 − exp ( − 2 x ) 1 + exp ( − 2 x ) . \text{tanh}(x) = \frac{1 - \exp(-2x)}{1 + \exp(-2x)}. tanh(x)=1+exp(−2x)1−exp(−2x).

我们接着绘制tanh函数。当输入接近0时,tanh函数接近线性变换。虽然该函数的形状和sigmoid函数的形状很像,但tanh函数在坐标系的原点上对称。

y = x.tanh()

xyplot(x, y, 'tanh')

依据链式法则,tanh函数的导数

tanh ′ ( x ) = 1 − tanh 2 ( x ) . \text{tanh}'(x) = 1 - \text{tanh}^2(x). tanh′(x)=1−tanh2(x).

下面绘制了tanh函数的导数。当输入为0时,tanh函数的导数达到最大值1;当输入越偏离0时,tanh函数的导数越接近0。

x.grad.zero_()

y.sum().backward()

xyplot(x, x.grad, 'grad of tanh')

关于激活函数的选择

ReLu函数是一个通用的激活函数,目前在大多数情况下使用。但是,ReLU函数只能在隐藏层中使用。

用于分类器时,sigmoid函数及其组合通常效果更好。由于梯度消失问题,有时要避免使用sigmoid和tanh函数。

在神经网络层数较多的时候,最好使用ReLu函数,ReLu函数比较简单计算量少,而sigmoid和tanh函数计算量大很多。

在选择激活函数的时候可以先选用ReLu函数如果效果不理想可以尝试其他激活函数。

多层感知机

多层感知机就是含有至少一个隐藏层的由全连接层组成的神经网络,且每个隐藏层的输出通过激活函数进行变换。多层感知机的层数和各隐藏层中隐藏单元个数都是超参数。以单隐藏层为例并沿用本节之前定义的符号,多层感知机按以下方式计算输出:

H = ϕ ( X W h + b h ) , O = H W o + b o , \begin{aligned} \boldsymbol{H} &= \phi(\boldsymbol{X} \boldsymbol{W}_h + \boldsymbol{b}_h),\\ \boldsymbol{O} &= \boldsymbol{H} \boldsymbol{W}_o + \boldsymbol{b}_o, \end{aligned} HO=ϕ(XWh+bh),=HWo+bo,

其中 ϕ \phi ϕ表示激活函数。

3.2 多层感知机从零开始实现

import torch

import numpy as np

import sys

sys.path.append("/home/kesci/input")

import d2lzh1981 as d2l

print(torch.__version__)

获取训练集

batch_size = 256

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size,root='/home/kesci/input/FashionMNIST2065')

定义模型参数

num_inputs, num_outputs, num_hiddens = 784, 10, 256

W1 = torch.tensor(np.random.normal(0, 0.01, (num_inputs, num_hiddens)), dtype=torch.float)

b1 = torch.zeros(num_hiddens, dtype=torch.float)

W2 = torch.tensor(np.random.normal(0, 0.01, (num_hiddens, num_outputs)), dtype=torch.float)

b2 = torch.zeros(num_outputs, dtype=torch.float)

params = [W1, b1, W2, b2]

for param in params:

param.requires_grad_(requires_grad=True)

定义激活函数

def relu(X):

return torch.max(input=X, other=torch.tensor(0.0))

定义网络

def net(X):

X = X.view((-1, num_inputs))

H = relu(torch.matmul(X, W1) + b1)

return torch.matmul(H, W2) + b2

定义损失函数

loss = torch.nn.CrossEntropyLoss()

训练

num_epochs, lr = 5, 100.0

# def train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size,

# params=None, lr=None, optimizer=None):

# for epoch in range(num_epochs):

# train_l_sum, train_acc_sum, n = 0.0, 0.0, 0

# for X, y in train_iter:

# y_hat = net(X)

# l = loss(y_hat, y).sum()

#

# # 梯度清零

# if optimizer is not None:

# optimizer.zero_grad()

# elif params is not None and params[0].grad is not None:

# for param in params:

# param.grad.data.zero_()

#

# l.backward()

# if optimizer is None:

# d2l.sgd(params, lr, batch_size)

# else:

# optimizer.step() # “softmax回归的简洁实现”一节将用到

#

#

# train_l_sum += l.item()

# train_acc_sum += (y_hat.argmax(dim=1) == y).sum().item()

# n += y.shape[0]

# test_acc = evaluate_accuracy(test_iter, net)

# print('epoch %d, loss %.4f, train acc %.3f, test acc %.3f'

# % (epoch + 1, train_l_sum / n, train_acc_sum / n, test_acc))

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size, params, lr)

3.3 多层感知机pytorch实现

导入包

import torch

from torch import nn

from torch.nn import init

import numpy as np

import sys

sys.path.append("/home/kesci/input")

import d2lzh1981 as d2l

print(torch.__version__)

初始化模型和各个参数

num_inputs, num_outputs, num_hiddens = 784, 10, 256

net = nn.Sequential(

d2l.FlattenLayer(),

nn.Linear(num_inputs, num_hiddens),

nn.ReLU(),

nn.Linear(num_hiddens, num_outputs),

)

for params in net.parameters():

init.normal_(params, mean=0, std=0.01)

训练

batch_size = 256

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size,root='/home/kesci/input/FashionMNIST2065')

loss = torch.nn.CrossEntropyLoss()

optimizer = torch.optim.SGD(net.parameters(), lr=0.5)

num_epochs = 5

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size, None, None, optimizer)