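A Simple Attempt at Android-to-PC Screen Mirroring: Final Chapter, Part 1

In the previous articles of this series, we implemented screen mirroring in two ways: over the RTMP protocol, and by sending Base64-encoded Bitmap images over a plain Socket.

The idea for this series came from Vysor, which connects over USB. Later I also came across the scrcpy project.

Hence the final chapter of this series: imitating scrcpy (Vysor).

PS: in practice, I simply worked through the scrcpy source code and rewrote it myself.

Preview

Simple recording demo.gif

Source code: https://github.com/deepsadness/AppRemote

Table of contents

This article covers:

- Communicating with the phone over a USB connection and adb

- Sending H.264 NALUs of the recorded screen from the Android side

- Using SDL2 and FFmpeg to write a mirroring window that runs on a PC (Windows, Mac)

1. USB Socket Connection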

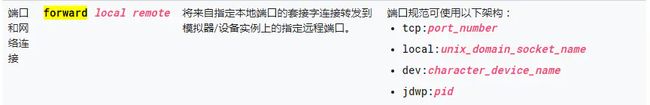

- Get familiar with the adb forward and adb reverse commands

Because adb reverse is not supported on Android versions below 5.0, this article uses adb forward.

adb forward.png

forward --list           list all forward socket connections
forward [--no-rebind] LOCAL REMOTE
    forward socket connection using:
      tcp:<port> (<local> may be "tcp:0" to pick any open port)
      localabstract:<unix domain socket name>
      localreserved:<unix domain socket name>
      localfilesystem:<unix domain socket name>
      dev:<character device name>
      jdwp:<process pid> (remote only)
forward --remove LOCAL   remove specific forward socket connection
forward --remove-all     remove all forward socket connections

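This command forwards a port on the PC (LOCAL) to the Android phone (REMOTE).

This way, we can set up a server on the Android side that listens on REMOTE, while the PC side connects to LOCAL, and the socket connection is established.

Note also that, besides TCP ports, LOCAL and REMOTE can also be UNIX domain sockets (an IPC mechanism).

Typically we use adb forward tcp:8888 tcp:8888 to forward the port between the two ends.

Below is some simple debugging code.

Debugging code

We communicate in two ways below.

Using a TCP port

- Command line

adb forward tcp:8888 tcp:8888

- Socket server (Android side)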

public class PortServer {

public static void start() {

new Thread(new Runnable() {

@Override

public void run() {

// Listen on TCP port 8888, the REMOTE end of the adb forward rule

ServerSocket serverSocket = null;

try {

serverSocket = new ServerSocket(8888);

//blocking

Socket accept = serverSocket.accept();

Log.d("ZZX", "serverSocket connected");

while (true) {

if (!accept.isConnected()) {

return;

}

InputStream inputStream = accept.getInputStream();

String result = IO.streamToString(inputStream);

Log.d("ZZX", "serverSocket recv =" + result);

}

} catch (IOException e) {

e.printStackTrace();

} catch (Exception e) {

e.printStackTrace();

}

}

}).start();

}

}

- Socket client side

Java version

private static void socket() {
    // Create the socket client
    Socket client = new Socket();
    // adb forwards local port 8888 to the device
    try {
        // blocking
        client.connect(new InetSocketAddress("127.0.0.1", 8888));
        System.out.println("Connected!");
        OutputStream outputStream = client.getOutputStream();
        outputStream.write("Hello World".getBytes());
        outputStream.flush();
        outputStream.close();
    } catch (IOException e) {
        e.printStackTrace();
    }
}

Using localabstract

- Command line

adb forward tcp:8888 localabstract:local

Only the server side needs to change, because the server (Android side) now listens on a localabstract socket.

- Android

public class LocalServer {

public static void start() {

new Thread(new Runnable() {

@Override

public void run() {

// An abstract name can be used directly as the socket name

LocalServerSocket serverSocket = null;

try {

serverSocket = new LocalServerSocket("local");

//blocking

LocalSocket client = serverSocket.accept();

Log.d("ZZX", "serverSocket connected");

while (true) {

if (!client.isConnected()) {

return;

}

InputStream inputStream = client.getInputStream();

String result = IO.streamToString(inputStream);

Log.d("ZZX", "serverSocket recv =" + result);

}

} catch (IOException e) {

e.printStackTrace();

} catch (Exception e) {

e.printStackTrace();

}

}

}).start();

}

}

With this, the phone and the PC can communicate over USB via adb. From here on, we pass data through the socket established here.
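Both servers above call IO.streamToString, which the article does not show. The following is a minimal sketch of what such a helper might look like, assuming it reads until the peer closes its end of the stream and decodes the bytes as UTF-8. The class name StreamText is hypothetical and not part of the original project.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Hypothetical stand-in for the article's IO.streamToString helper.
final class StreamText {
    private StreamText() {
    }

    // Reads until end of stream (read() returns -1), then decodes the bytes as UTF-8.
    static String streamToString(InputStream in) {
        try {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] chunk = new byte[4096];
            int n;
            while ((n = in.read(chunk)) != -1) {
                out.write(chunk, 0, n);
            }
            return new String(out.toByteArray(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        InputStream in = new ByteArrayInputStream("Hello World".getBytes(StandardCharsets.UTF_8));
        System.out.println(streamToString(in));
    }
}
```

Under this assumption the server only logs the message once the client has closed its output stream, which matches the client code calling outputStream.close() right after writing.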

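2. Running a Background Program on Android

We want a background program that starts sending us screen capture data as soon as the PC side connects.

This raises a couple of problems.

First, as the earlier articles showed, capturing the screen requires requesting the corresponding permission.

Second, how do we run our own Java program directly on Android without going through an Activity?

Running the program with app_process

This command fits our needs perfectly.

Not only can it run Java classes from a dex file directly in the background, it also runs with fairly high privileges (when started from adb shell, those of the shell user).

Debugging code

Let's try it with a simple class first.

- Write a simple Java file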

public class HelloWorld {

public static void main(String... args) {

System.out.println("Hello, world!");

}

}

- Compile it into a dex file

javac -source 1.7 -target 1.7 HelloWorld.java

"$ANDROID_HOME"/build-tools/27.0.2/dx \

--dex --output classes.dex HelloWorld.class

- Push it to the device and run it

adb push classes.dex /data/local/tmp/

adb shell CLASSPATH=/data/local/tmp/classes.dex app_process / HelloWorld

And it runs successfully.

Note that app_process can only execute dex files, not plain .class files.

- adb_forward

task adb_forward() {

doLast {

println "adb_forward"

def cmd = "adb forward --list"

ProcessBuilder builder = new ProcessBuilder(cmd.split(" "))

Process adbpb = builder.start()

if (adbpb.waitFor() == 0) {

def result = adbpb.inputStream.text

println result.length()

if (result.length() <= 1) {

def forward_recorder = "adb forward tcp:9000 localabstract:recorder"

ProcessBuilder forward_pb = new ProcessBuilder(forward_recorder.split(" "))

Process forward_ps = forward_pb.start()

if (forward_ps.waitFor() == 0) {

println "forward success!!"

}

} else {

println result

}

} else {

println "error = " + adbpb.errorStream.text

}

}

}

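Building in Android Studio

Above, we compiled from the command line. In practice we develop in Android Studio, which provides a good build environment, so there is no need to type these commands by hand.

A few custom Gradle tasks are enough to do the job.

- Simply copy the dex file

Since what we run is a dex file, we can just copy it.

This task must depend on the assembleDebug task, because the dex files only exist once that task has finished.

// Copy the dex file. dependsOn declares the dependency.

// The task type is Gradle's built-in Copy, so we only need to configure its properties.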

task class_cls(type: Copy, dependsOn: "assembleDebug") {

from "build/intermediates/transforms/dexMerger/debug/0/"

destinationDir = file('build/libs')

}

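- Package as a jar

If you would rather not use the dex file directly, you can package it into a jar instead. It makes no practical difference compared with using the dex directly; pick whichever you prefer.

// Package the compiled dex file into a jar.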

task classex_jar(type: Jar, dependsOn: "assembleDebug") {

from "build/intermediates/transforms/dexMerger/debug/0/"

destinationDir = file('build/libs')

archiveName 'class.jar'

}

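- Push the build output directly to the phone

Next we write a push task and make it depend on classex_jar, so that running it first runs classex_jar to package the jar.

// Push the build output to the phone

// This is a custom task without a built-in type, so the code to execute must go inside an Action

// A closure placed directly after the task declaration runs during the configuration phase

task adb_push(dependsOn: "classex_jar") {

// doLast defines an Action; Actions run during the task execution phase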

doLast {

File file = new File("./app/build/libs/class.jar")

def jarDir = file.getAbsolutePath()

def cmd = "adb push $jarDir /data/local/tmp"

ProcessBuilder builder = new ProcessBuilder(cmd.split(" "))

Process push = builder.start()

if (push.waitFor() == 0) {

println "result = " + push.inputStream.text

} else {

println "error = " + push.errorStream.text

}

}

}

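- Run and debug directly from the project

We can also run the debugging code straight from the project.

// Run it directly and check the result

task adb_exc(dependsOn: "adb_push") {

// This declares an Action that runs at the end of this task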

doLast {

println "adb_exc"

def cmd = "adb shell CLASSPATH=/data/local/tmp/class.jar app_process /data/local/tmp com.cry.cry.appprocessdemo.HelloWorld"

ProcessBuilder builder = new ProcessBuilder(cmd.split(" "))

Process adbpb = builder.start()

println "start adb "

if (adbpb.waitFor() == 0) {

println "result = " + adbpb.inputStream.text

} else {

println "error = " + adbpb.errorStream.text

}

}

}

Result

Running the task.png

Quick debugging with Gradle tasks.png

With this, we can quickly run and debug our code from within Android Studio.

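3. Getting Device Information and Recording Data from the Android Program

3.1 Getting device information

- Getting the ServiceManager

Normally we call getSystemService on a Context to obtain the relevant service and query device information.

Because our program runs in the background, there is no Context. So what do we do?

In Android's architecture, every such lookup ultimately goes through ServiceManager, a proxy class that returns the remote proxy object of the real service registered with the system's service manager.

So here we use reflection to reach ServiceManager and, through it, obtain the remote proxy objects of the services we need.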

@SuppressLint("PrivateApi")

public final class ServiceManager {

private final Method getServiceMethod;

private DisplayManager displayManager;

private PowerManager powerManager;

private InputManager inputManager;

public ServiceManager() {

try {

getServiceMethod = Class.forName("android.os.ServiceManager").getDeclaredMethod("getService", String.class);

} catch (Exception e) {

e.printStackTrace();

throw new AssertionError(e);

}

}

private IInterface getService(String service, String type) {

try {

IBinder binder = (IBinder) getServiceMethod.invoke(null, service);

Method asInterface = Class.forName(type + "$Stub").getMethod("asInterface", IBinder.class);

return (IInterface) asInterface.invoke(null, binder);

} catch (Exception e) {

e.printStackTrace();

throw new AssertionError(e);

}

}

public DisplayManager getDisplayManager() {

if (displayManager == null) {

IInterface service = getService(Context.DISPLAY_SERVICE, "android.hardware.display.IDisplayManager");

displayManager = new DisplayManager(service);

}

return displayManager;

}

public PowerManager getPowerManager() {

if (powerManager == null) {

IInterface service = getService(Context.POWER_SERVICE, "android.os.IPowerManager");

powerManager = new PowerManager(service);

}

return powerManager;

}

public InputManager getInputManager() {

if (inputManager == null) {

IInterface service = getService(Context.INPUT_SERVICE, "android.hardware.input.IInputManager");

inputManager = new InputManager(service);

}

return inputManager;

}

}

After getService returns the Binder object, the asInterface method of the corresponding Stub class converts it into the matching remote proxy.
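The lookup pattern used above (Class.forName, then getMethod, then invoke with a null receiver for a static method) can be tried on a desktop JVM. Since android.os.ServiceManager only exists on a device, this sketch applies the same pattern to java.lang.Integer as a stand-in; the class and method names here are illustrative and not part of the original project.

```java
import java.lang.reflect.Method;

// Desktop stand-in for the reflective ServiceManager.getService lookup.
final class StaticReflectionDemo {
    private StaticReflectionDemo() {
    }

    // Looks up a public static method that takes one String and invokes it.
    static Object callStatic(String className, String methodName, String arg) {
        try {
            Method method = Class.forName(className).getMethod(methodName, String.class);
            // A null receiver means the method is static, as with getService above.
            return method.invoke(null, arg);
        } catch (ReflectiveOperationException e) {
            throw new AssertionError(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(callStatic("java.lang.Integer", "valueOf", "42"));
    }
}
```

As in the article's code, any reflection failure is turned into an AssertionError, because a missing hidden class or method means the approach cannot work on that platform version at all.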

- Getting screen information

Next, with the remote proxy object in hand, we can call its methods.

public class DisplayManager {

/**

* This service corresponds to final class BinderService extends IDisplayManager.Stub

*/

private final IInterface service;

public DisplayManager(IInterface service) {

this.service = service;

}

public DisplayInfo getDisplayInfo() {

try {

Object displayInfo = service.getClass().getMethod("getDisplayInfo", int.class)

.invoke(service, 0);

Class<?> cls = displayInfo.getClass();

// width and height already take the rotation into account

int width = cls.getDeclaredField("logicalWidth").getInt(displayInfo);

int height = cls.getDeclaredField("logicalHeight").getInt(displayInfo);

int rotation = cls.getDeclaredField("rotation").getInt(displayInfo);

return new DisplayInfo(new Size(width, height), rotation);

} catch (Exception e) {

e.printStackTrace();

throw new AssertionError(e);

}

}

/*

This method exists in DisplayManager but not in DisplayManagerService, so it cannot be called here.

public DisplayInfo getDisplay() {

try {

Object display = service.getClass().getMethod("getDisplay", int.class)

.invoke(service, 0);

Point point = new Point();

Method getSize = display.getClass().getMethod("getSize", Point.class);

Method getRotation = display.getClass().getMethod("getRotation");

getSize.invoke(display, point);

int rotation = (int) getRotation.invoke(display);

return new DisplayInfo(new Size(point.x, point.y), rotation);

} catch (Exception e) {

e.printStackTrace();

throw new AssertionError(e);

}

}

*/

}

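3.2 Recording the screen

In the previous articles, we always recorded the screen through MediaProjection.

That is because screen capture requires MediaProjection, which is in fact backed by a system service.

// In an Activity, the VirtualDisplay is obtained like this

MediaProjectionManager systemService = (MediaProjectionManager) getSystemService(MEDIA_PROJECTION_SERVICE);

// resultCode and resultData come from onActivityResult after the user grants permission

MediaProjection mediaProjection = systemService.getMediaProjection(resultCode, resultData);

mediaProjection.createVirtualDisplay("activity-request", width, height, 1, DisplayManager.VIRTUAL_DISPLAY_FLAG_PUBLIC, surface, null, null);

This is what the code looks like in an Activity: obtain a MediaProjection from MediaProjectionManager, then create a VirtualDisplay from our surface.

The whole procedure needs a Context to get MediaProjectionManager, and the VirtualDisplay can only be created after the user grants permission.

Here, however, we can neither ask the user for permission nor get a Context. So what do we do?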

SurfaceControl

We can follow what the source of the screenrecord command (adb shell screenrecord) does:

/*

* Configures the virtual display. When this completes, virtual display

* frames will start arriving from the buffer producer.

*/

static status_t prepareVirtualDisplay(const DisplayInfo& mainDpyInfo,

const sp<IGraphicBufferProducer>& bufferProducer,

sp<IBinder>* pDisplayHandle) {

sp<IBinder> dpy = SurfaceComposerClient::createDisplay(

String8("ScreenRecorder"), false /*secure*/);

SurfaceComposerClient::Transaction t;

t.setDisplaySurface(dpy, bufferProducer);

setDisplayProjection(t, dpy, mainDpyInfo);

t.setDisplayLayerStack(dpy, 0); // default stack

t.apply();

*pDisplayHandle = dpy;

return NO_ERROR;

}

On the Java layer, the counterpart of the native SurfaceComposerClient is SurfaceControl.

We just need to call SurfaceControl in the same way.

- Getting SurfaceControl

Again, we can reach it through reflection.

@SuppressLint("PrivateApi")

public class SurfaceControl {

private static final Class<?> CLASS;

static {

try {

CLASS = Class.forName("android.view.SurfaceControl");

} catch (ClassNotFoundException e) {

throw new AssertionError(e);

}

}

private SurfaceControl() {

// only static methods

}

public static void openTransaction() {

try {

CLASS.getMethod("openTransaction").invoke(null);

} catch (Exception e) {

throw new AssertionError(e);

}

}

public static void closeTransaction() {

try {

CLASS.getMethod("closeTransaction").invoke(null);

} catch (Exception e) {

throw new AssertionError(e);

}

}

public static void setDisplayProjection(IBinder displayToken, int orientation, Rect layerStackRect, Rect displayRect) {

try {

CLASS.getMethod("setDisplayProjection", IBinder.class, int.class, Rect.class, Rect.class)

.invoke(null, displayToken, orientation, layerStackRect, displayRect);

} catch (Exception e) {

throw new AssertionError(e);

}

}

public static void setDisplayLayerStack(IBinder displayToken, int layerStack) {

try {

CLASS.getMethod("setDisplayLayerStack", IBinder.class, int.class).invoke(null, displayToken, layerStack);

} catch (Exception e) {

throw new AssertionError(e);

}

}

public static void setDisplaySurface(IBinder displayToken, Surface surface) {

try {

CLASS.getMethod("setDisplaySurface", IBinder.class, Surface.class).invoke(null, displayToken, surface);

} catch (Exception e) {

throw new AssertionError(e);

}

}

public static IBinder createDisplay(String name, boolean secure) {

try {

return (IBinder) CLASS.getMethod("createDisplay", String.class, boolean.class).invoke(null, name, secure);

} catch (Exception e) {

throw new AssertionError(e);

}

}

public static void destroyDisplay(IBinder displayToken) {

try {

CLASS.getMethod("destroyDisplay", IBinder.class).invoke(null, displayToken);

} catch (Exception e) {

e.printStackTrace();

throw new AssertionError(e);

}

}

}

- Starting the capture

public void streamScreen(){

IBinder display = createDisplay();

//...

setDisplaySurface(display, surface, contentRect, videoRect);

}

private static IBinder createDisplay() {

return SurfaceControl.createDisplay("scrcpy", false);

}

private static void setDisplaySurface(IBinder display, Surface surface, Rect deviceRect, Rect displayRect) {

SurfaceControl.openTransaction();

try {

SurfaceControl.setDisplaySurface(display, surface);

SurfaceControl.setDisplayProjection(display, 0, deviceRect, displayRect);

SurfaceControl.setDisplayLayerStack(display, 0);

} finally {

SurfaceControl.closeTransaction();

}

}

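- The recording code

We have been through this many times already.

The captured frames are fed into MediaCodec's input Surface, and the encoded data comes out the other end.

That data is then sent over the socket.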

package com.cry.cry.appprocessdemo;

import android.graphics.Rect;

import android.media.MediaCodec;

import android.media.MediaCodecInfo;

import android.media.MediaFormat;

import android.os.IBinder;

import android.view.Surface;

import com.cry.cry.appprocessdemo.refect.SurfaceControl;

import java.io.FileDescriptor;

import java.io.IOException;

import java.nio.ByteBuffer;

public class ScreenRecorder {

private static final int DEFAULT_FRAME_RATE = 60; // fps

private static final int DEFAULT_I_FRAME_INTERVAL = 10; // seconds

private static final int DEFAULT_BIT_RATE = 8000000; // 8Mbps

private static final int DEFAULT_TIME_OUT = 2 * 1000; // 2s

private static final int REPEAT_FRAME_DELAY = 6; // repeat after 6 frames

private static final int MICROSECONDS_IN_ONE_SECOND = 1_000_000;

private static final int NO_PTS = -1;

private boolean sendFrameMeta = false;

private final ByteBuffer headerBuffer = ByteBuffer.allocate(12);

private long ptsOrigin;

private volatile boolean stop;

private MediaCodec encoder;

public void setStop(boolean stop) {

this.stop = stop;

// encoder.signalEndOfInputStream();

}

// The recording loop; all recorded data is written to fd

public void record(int width, int height, FileDescriptor fd) {

// Configure MediaCodec

boolean alive;

try {

do {

MediaFormat mediaFormat = createMediaFormat(DEFAULT_BIT_RATE, DEFAULT_FRAME_RATE, DEFAULT_I_FRAME_INTERVAL);

mediaFormat.setInteger(MediaFormat.KEY_WIDTH, width);

mediaFormat.setInteger(MediaFormat.KEY_HEIGHT, height);

encoder = MediaCodec.createEncoderByType(MediaFormat.MIMETYPE_VIDEO_AVC);

// No output Surface is passed here; the input Surface is created below

encoder.configure(mediaFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);

Surface inputSurface = encoder.createInputSurface();

IBinder surfaceClient = setDisplaySurface(width, height, inputSurface);

encoder.start();

try {

alive = encode(encoder, fd);

alive = alive && !stop;

System.out.println("alive =" + alive + ", stop=" + stop);

} finally {

System.out.println("encoder.stop");

// Open question: why does calling stop() block here?

// encoder.stop();

System.out.println("destroyDisplaySurface");

destroyDisplaySurface(surfaceClient);

System.out.println("encoder release");

encoder.release();

System.out.println("inputSurface release");

inputSurface.release();

System.out.println("end");

}

} while (alive);

} catch (IOException e) {

e.printStackTrace();

}

System.out.println("end record");

}

// Create the virtual display that feeds the recording Surface

private IBinder setDisplaySurface(int width, int height, Surface inputSurface) {

Rect deviceRect = new Rect(0, 0, width, height);

Rect displayRect = new Rect(0, 0, width, height);

IBinder surfaceClient = SurfaceControl.createDisplay("recorder", false);

// Set up and configure the capture Surface

SurfaceControl.openTransaction();

try {

SurfaceControl.setDisplaySurface(surfaceClient, inputSurface);

SurfaceControl.setDisplayProjection(surfaceClient, 0, deviceRect, displayRect);

SurfaceControl.setDisplayLayerStack(surfaceClient, 0);

} finally {

SurfaceControl.closeTransaction();

}

return surfaceClient;

}

private void destroyDisplaySurface(IBinder surfaceClient) {

SurfaceControl.destroyDisplay(surfaceClient);

}

// Create the MediaFormat

private MediaFormat createMediaFormat(int bitRate, int frameRate, int iFrameInterval) {

MediaFormat mediaFormat = new MediaFormat();

mediaFormat.setString(MediaFormat.KEY_MIME, MediaFormat.MIMETYPE_VIDEO_AVC);

mediaFormat.setInteger(MediaFormat.KEY_BIT_RATE, bitRate);

mediaFormat.setInteger(MediaFormat.KEY_FRAME_RATE, frameRate);

mediaFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, MediaCodecInfo.CodecCapabilities.COLOR_FormatSurface);

mediaFormat.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, iFrameInterval);

mediaFormat.setLong(MediaFormat.KEY_REPEAT_PREVIOUS_FRAME_AFTER, MICROSECONDS_IN_ONE_SECOND * REPEAT_FRAME_DELAY / frameRate);//us

return mediaFormat;

}

// Run the encode loop

private boolean encode(MediaCodec codec, FileDescriptor fd) throws IOException {

System.out.println("encode");

boolean eof = false;

MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();

while (!eof) {

System.out.println("dequeueOutputBuffer outputBufferId before");

int outputBufferId = codec.dequeueOutputBuffer(bufferInfo, DEFAULT_TIME_OUT);

System.out.println("dequeueOutputBuffer outputBufferId =" + outputBufferId);

eof = (bufferInfo.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) != 0;

System.out.println("encode eof =" + eof);

try {

// if (consumeRotationChange()) {

// // must restart encoding with new size

// break;

// }

if (stop) {

// stop was requested: leave the encode loop

break;

}

// Send all the encoded data to fd

if (outputBufferId >= 0) {

ByteBuffer codecBuffer = codec.getOutputBuffer(outputBufferId);

System.out.println("dequeueOutputBuffer getOutputBuffer");

if (sendFrameMeta) {

writeFrameMeta(fd, bufferInfo, codecBuffer.remaining());

}

IO.writeFully(fd, codecBuffer);

System.out.println("writeFully");

}

} finally {

if (outputBufferId >= 0) {

codec.releaseOutputBuffer(outputBufferId, false);

System.out.println("releaseOutputBuffer");

}

}

}

return !eof;

}

private void writeFrameMeta(FileDescriptor fd, MediaCodec.BufferInfo bufferInfo, int packetSize) throws IOException {

headerBuffer.clear();

long pts;

if ((bufferInfo.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0) {

pts = NO_PTS; // non-media data packet

} else {

if (ptsOrigin == 0) {

ptsOrigin = bufferInfo.presentationTimeUs;

}

pts = bufferInfo.presentationTimeUs - ptsOrigin;

}

headerBuffer.putLong(pts);

headerBuffer.putInt(packetSize);

headerBuffer.flip();

IO.writeFully(fd, headerBuffer);

}

}
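When sendFrameMeta is enabled, writeFrameMeta prefixes every packet with a 12-byte header: an 8-byte PTS followed by a 4-byte packet size. The sketch below reproduces that layout on a desktop JVM. The encode method mirrors the putLong/putInt calls above; the decode method and the FrameHeader class itself are hypothetical additions that show how a receiver might read the header back.

```java
import java.nio.ByteBuffer;

// Sketch of the 12-byte frame header: 8-byte PTS, then 4-byte packet size.
// ByteBuffer is big-endian by default, so a receiver must read in that byte order.
final class FrameHeader {
    static final int SIZE = 12;
    static final long NO_PTS = -1; // marks codec config packets, as in writeFrameMeta

    final long pts;
    final int packetSize;

    FrameHeader(long pts, int packetSize) {
        this.pts = pts;
        this.packetSize = packetSize;
    }

    // Mirrors writeFrameMeta: putLong(pts) followed by putInt(packetSize).
    static byte[] encode(long pts, int packetSize) {
        ByteBuffer buffer = ByteBuffer.allocate(SIZE);
        buffer.putLong(pts);
        buffer.putInt(packetSize);
        return buffer.array();
    }

    // Hypothetical receiver-side counterpart (not part of the article's code).
    static FrameHeader decode(byte[] header) {
        ByteBuffer buffer = ByteBuffer.wrap(header, 0, SIZE);
        return new FrameHeader(buffer.getLong(), buffer.getInt());
    }

    public static void main(String[] args) {
        FrameHeader header = decode(encode(100_000L, 4096));
        System.out.println("pts=" + header.pts + " size=" + header.packetSize);
    }
}
```

Because the header is big-endian, a C or C++ receiver on a little-endian PC would have to byte-swap both fields before using them.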

- Sending over the socket

The data is sent with Os.write.

public static void writeFully(FileDescriptor fd, ByteBuffer from) throws IOException {

// ByteBuffer position is not updated as expected by Os.write() on old Android versions, so

// count the remaining bytes manually.

// See .

int remaining = from.remaining();

while (remaining > 0) {

try {

int w = Os.write(fd, from);

if (BuildConfig.DEBUG && w < 0) {

// w should not be negative, since an exception is thrown on error

System.out.println("Os.write() returned a negative value (" + w + ")");

throw new AssertionError("Os.write() returned a negative value (" + w + ")");

}

remaining -= w;

} catch (ErrnoException e) {

e.printStackTrace();

if (e.errno != OsConstants.EINTR) {

throw new IOException(e);

}

}

}

}
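The core idea of writeFully is that a single write may accept fewer bytes than requested, so the call has to be repeated until the buffer is drained. Os.write is Android-only, so the sketch below uses a java.nio WritableByteChannel as a stand-in for the file descriptor. Unlike the original, it relies on the channel advancing the buffer position rather than counting the remaining bytes manually. ShortWriteChannel is a hypothetical test double that simulates short writes.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.WritableByteChannel;

// Desktop sketch of the "write fully" loop.
final class WriteFully {
    private WriteFully() {
    }

    // Keeps writing until the buffer has no bytes left.
    static void writeFully(WritableByteChannel channel, ByteBuffer from) {
        try {
            while (from.hasRemaining()) {
                channel.write(from); // may consume fewer bytes than remain
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Test double that accepts at most `limit` bytes per call.
    static final class ShortWriteChannel implements WritableByteChannel {
        private final ByteArrayOutputStream sink = new ByteArrayOutputStream();
        private final int limit;
        int calls;

        ShortWriteChannel(int limit) {
            this.limit = limit;
        }

        @Override
        public int write(ByteBuffer src) {
            int n = Math.min(limit, src.remaining());
            for (int i = 0; i < n; i++) {
                sink.write(src.get());
            }
            calls++;
            return n;
        }

        @Override
        public boolean isOpen() {
            return true;
        }

        @Override
        public void close() {
        }

        byte[] written() {
            return sink.toByteArray();
        }
    }
}
```

With a limit of 3 bytes per call, an 8-byte buffer takes three calls (3 + 3 + 2) before all of it has been written.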

4. Building a Socket Client on the PC to Receive and Display the Data

4.1 Creating the socket

// Create the socket
client = socket(PF_INET, SOCK_STREAM, 0);
if (client < 0) {
    // socket() returns -1 on failure
    perror("can not create socket");
    return -1;
}

struct sockaddr_in in_addr;
memset(&in_addr, 0, sizeof(in_addr));
in_addr.sin_port = htons(9000);
in_addr.sin_family = AF_INET;
in_addr.sin_addr.s_addr = inet_addr("127.0.0.1");

int ret = connect(client, (struct sockaddr *) &in_addr, sizeof(in_addr));
if (ret < 0) {
    perror("socket connect error");
    return -1;
}
printf("Connected\n");

Because we forwarded port 9000 on the PC to the server on Android, connecting to local port 9000 is equivalent to connecting to the server on the device.

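4.2 Decoding with FFmpeg and displaying with SDL2

Earlier articles in other series introduced both SDL2 and FFmpeg and also walked through the ffplay source. What we do here is essentially ffplay's video playback, except that no audio is transmitted.

FFmpeg decoding

This differs from our earlier FFmpeg decoding in the following ways.

- Reading data from memory

We do not get the data from a URL; instead we read it from the socket into a memory buffer.

So we have to construct the AVIOContext ourselves and hand it to the AVFormatContext.

// This callback reads from the socket into the buffer
int read_socket_buffer(void *opaque, uint8_t *buf, int buf_size) {
    int count = recv(client, buf, buf_size, 0);
    if (count <= 0) {
        // Connection closed or error: report end of stream to FFmpeg
        return AVERROR_EOF;
    }
    return count;
}

int play() {
    avformat_network_init();
    AVFormatContext *format_ctx = avformat_alloc_context();
    unsigned char *buffer = static_cast<unsigned char *>(av_malloc(BUF_SIZE));
    // Pass the buffer and the read callback to avio_alloc_context
    AVIOContext *avio_ctx = avio_alloc_context(buffer, BUF_SIZE, 0, NULL, read_socket_buffer, NULL, NULL);
    // Then attach it to format_ctx
    format_ctx->pb = avio_ctx;
    // Finally, open the input the usual way
    int ret = avformat_open_input(&format_ctx, NULL, NULL, NULL);
    if (ret < 0) {
        printf("avformat_open_input error:%s\n", av_err2str(ret));
        return -1;
    }
    //...
}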

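- Skipping avformat_find_stream_info

Because we send raw H.264 NALUs and the stream metadata is not resent with every packet, avformat_find_stream_info blocks indefinitely and never gets the information it needs.

So here we just create the decoder directly and call av_read_frame.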
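To make the term concrete: an H.264 byte stream of the kind MediaCodec encoders typically produce (Annex B format) is a sequence of NAL units, each introduced by a start code of 00 00 01 or 00 00 00 01. The low five bits of the byte after the start code give the NAL unit type, for example 7 for SPS, 8 for PPS, and 5 for an IDR slice. The sketch below lists the types found in a buffer; NalScanner is a hypothetical helper written for illustration and is not part of the original project.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch: list the NAL unit types found in an Annex B H.264 byte stream.
final class NalScanner {
    private NalScanner() {
    }

    // Returns nal_unit_type (low 5 bits of the byte following each start code).
    static List<Integer> nalTypes(byte[] data) {
        List<Integer> types = new ArrayList<>();
        for (int i = 0; i + 3 < data.length; i++) {
            // Match the 3-byte start code 00 00 01; the 4-byte form 00 00 00 01 ends with it.
            if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 1) {
                types.add(data[i + 3] & 0x1F);
                i += 3; // skip past the start code and the NAL header byte
            }
        }
        return types;
    }

    public static void main(String[] args) {
        byte[] sample = {0, 0, 0, 1, 0x67, 0x42, 0, 0, 0, 1, 0x68, 0, 0, 1, 0x65, (byte) 0x88};
        System.out.println(nalTypes(sample));
    }
}
```

The SPS and PPS units carry the stream parameters, including the resolution. The encoder delivers them once, in the buffer flagged BUFFER_FLAG_CODEC_CONFIG, rather than with every frame, which is the metadata the probing step waits for.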

AVCodec *codec = avcodec_find_decoder(AV_CODEC_ID_H264);

if (!codec) {

printf("Did not find a video codec \n");

return -1;

}

AVCodecContext *codec_ctx = avcodec_alloc_context3(codec);

if (!codec_ctx) {

printf("Did not alloc AVCodecContext \n");

return -1;

}

// avcodec_parameters_to_context(codec_ctx, video_stream->codecpar);

// The decoder's width and height are only known for certain after av_read_frame, so they are hard-coded here for now
// (for a 1080x1920 device, use 1080 / 2 and 1920 / 2 instead).
// In the finished project, the Android side should send the screen size first.
codec_ctx->width = 720 / 2;
codec_ctx->height = 1280 / 2;

ret = avcodec_open2(codec_ctx, codec, NULL);
if (ret < 0) {
    printf("avcodec_open2 error:%s\n", av_err2str(ret));
    return -1;
}
printf("Decoder opened successfully\n");

Displaying with SDL2

We hand the decoded frames to SDL2 for display.

- Creating the SDL_Screen

// Create the SDL_Screen

SDL_Screen *sc = new SDL_Screen("memory", codec_ctx->width, codec_ctx->height);

ret = sc->init();

if (ret < 0) {

printf("SDL_Screen init error\n");

return -1;

}

Note that the window size should really be computed as well; here it is simply hard-coded.
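One way such a computation might look is sketched below: scale the device size so the longer side fits a maximum, keep the aspect ratio, and round each side down to a multiple of 8. The rounding is an assumption made here as a common precaution for video encoders, not something the article specifies. The sketch is in Java for consistency with the device-side code; the same integer arithmetic carries over directly to the C++ player. WindowSize and fit are hypothetical names.

```java
// Sketch: derive a display size from the device size instead of hard-coding it.
final class WindowSize {
    private WindowSize() {
    }

    // Scales (width, height) so the longer side is at most maxSide, keeps the
    // aspect ratio, and rounds each side down to a multiple of 8.
    static int[] fit(int width, int height, int maxSide) {
        int longer = Math.max(width, height);
        if (longer > maxSide) {
            width = width * maxSide / longer;
            height = height * maxSide / longer;
        }
        return new int[] {width & ~7, height & ~7};
    }

    public static void main(String[] args) {
        int[] size = fit(1080, 1920, 960);
        System.out.println(size[0] + "x" + size[1]);
    }
}
```

In a complete version, the device side would send its real width and height over the socket before the video stream starts, and the PC side would feed those values into a function like this one.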

- A simple decode loop that feeds the display

AVFrame *pFrame = av_frame_alloc();
// Unlike av_init_packet, which only sets default values on a packet whose memory already exists, av_packet_alloc also allocates the packet.
AVPacket *packet = av_packet_alloc();

while (av_read_frame(format_ctx, packet) >= 0) {
    ret = avcodec_send_packet(codec_ctx, packet);
    av_packet_unref(packet);
    if (ret < 0 && ret != AVERROR(EAGAIN)) {
        printf("avcodec_send_packet error:%s\n", av_err2str(ret));
        goto quit;
    }

    ret = avcodec_receive_frame(codec_ctx, pFrame);
    if (ret == 0) {
        // Got a decoded frame: send it to the display
        sc->send_frame(pFrame);
    } else if (ret != AVERROR(EAGAIN)) {
        printf("avcodec_receive_frame error:%s\n", av_err2str(ret));
        goto quit;
    }

    // Stop once the input has been fully read
    if (avio_ctx->eof_reached) {
        break;
    }
}

quit:
    if (client >= 0) {
        close(client);
        client = -1;
    }
    av_frame_free(&pFrame);
    av_packet_free(&packet);
    avformat_close_input(&format_ctx);
    sc->destroy();
    return 0;
}

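With this, we have a first working version of mirroring to the PC.

Extra: development environment

- Setting up the SDL2 and FFmpeg development environment on macOS

Since development is done in CLion, a simple CMakeLists.txt is all the configuration needed.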

cmake_minimum_required(VERSION 3.13)

project(SDLDemo)

set(CMAKE_CXX_STANDARD 14)

include_directories(/usr/local/Cellar/ffmpeg/4.0.3/include/)

link_directories(/usr/local/Cellar/ffmpeg/4.0.3/lib/)

include_directories(/usr/local/Cellar/sdl2/2.0.8/include/)

link_directories(/usr/local/Cellar/sdl2/2.0.8/lib/)

set(SOURCE_FILES main.cpp MSPlayer.cpp MSPlayer.h )

add_executable(SDLDemo ${SOURCE_FILES})

target_link_libraries(

SDLDemo

avcodec

avdevice

avfilter

avformat

avresample

avutil

postproc

swresample

swscale

SDL2

)

Running

- Connect the Android phone to the computer over USB and enable USB debugging

- In the Android Studio project, run the adb_forward and adb_push Gradle tasks.

adb forward result.png

adb_push result.png

- Run app_process through adb shell

adb shell CLASSPATH=/data/local/tmp/class.jar app_process /data/local/tmp com.cry.cry.appprocessdemo.HelloWorld

app_process.png

- Then run the project on the PC, and the mirrored screen window pops up.

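Summary

Through the steps above, we used USB and adb commands together with the SDL2 API and FFmpeg decoding to display the phone's screen, which gives us a basic PC mirroring implementation.

There are still shortcomings, though:

- The screen size is hard-coded. On phones with different resolutions it has to be adjusted each time to display correctly.

- We would also like to be able to control the phone from the PC.

- Decoding and display currently run on the main thread, and because of decoding latency the PC window soon lags further and further behind the phone.

Articles in the screen mirroring series

- A Simple Attempt at Android-to-PC Screen Mirroring: Custom Protocol Chapter (Socket+Bitmap)

- A Simple Attempt at Android-to-PC Screen Mirroring (Screen Recording Live Stream) 2: Hardware Codec Chapter (MediaCodec+RTMP)

- A Simple Attempt at Android-to-PC Screen Mirroring (Screen Recording Live Stream) 3: Software Codec Chapter (ImageReader+FFmpeg with X264)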

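Author: deep_sadness

Link: https://www.jianshu.com/p/06f4b8919991

Source: Jianshu (简书)

Copyright belongs to the author. For any form of reproduction, please contact the author for permission and credit the source.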