Starting a Spark 2.3.0 SparkSession from Spring Boot 2.0.4 and querying Hive with SQL

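I ran into a lot of pitfalls when I first set this up, so I am posting my code here for others to reference. I will not go over how to create a Spring Boot project.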

一 项目代码

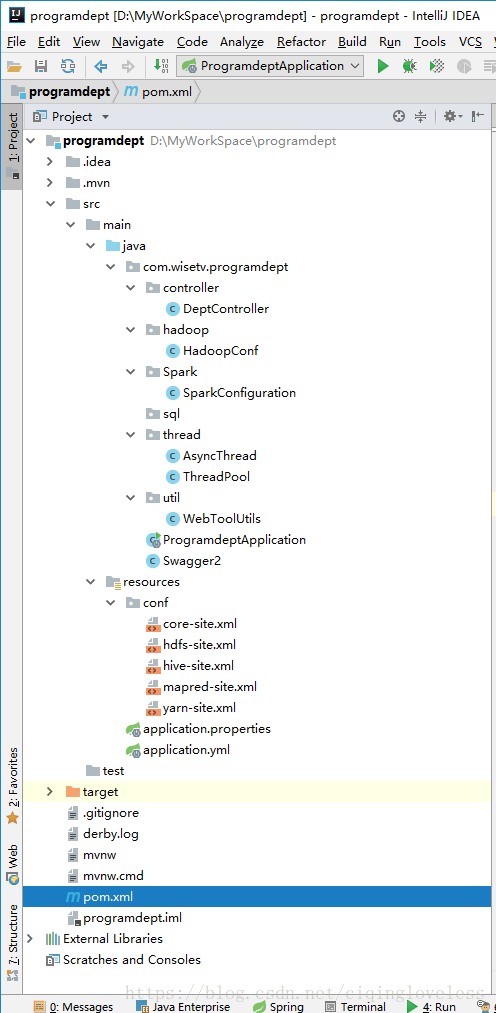

1 项目结构

2 pom.xml

部分可精简,我就不调优了

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <groupId>com.wisetv</groupId>
  <artifactId>programdept</artifactId>
  <version>0.0.1-SNAPSHOT</version>
  <packaging>jar</packaging>
  <name>sparkroute</name>
  <description>Demo project for Spring Boot</description>
  <parent>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-parent</artifactId>
    <version>2.0.0.RELEASE</version>
    <relativePath/>
  </parent>
  <properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
    <java.version>1.8</java.version>
    <hadoop.version>2.9.0</hadoop.version>
    <springboot.version>1.5.9.RELEASE</springboot.version>
    <org.apache.hive.version>1.2.1</org.apache.hive.version>
    <swagger.version>2.7.0</swagger.version>
    <ojdbc6.version>11.2.0.1.0</ojdbc6.version>
    <scala.version>2.11.12</scala.version>
    <spark.version>2.3.0</spark.version>
  </properties>
  <dependencies>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-jdbc</artifactId>
      <exclusions>
        <exclusion>
          <groupId>org.apache.tomcat</groupId>
          <artifactId>tomcat-jdbc</artifactId>
        </exclusion>
      </exclusions>
    </dependency>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-test</artifactId>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>com.google.code.gson</groupId>
      <artifactId>gson</artifactId>
      <version>2.8.2</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hive</groupId>
      <artifactId>hive-jdbc</artifactId>
      <version>${org.apache.hive.version}</version>
      <exclusions>
        <exclusion>
          <groupId>org.eclipse.jetty.aggregate</groupId>
          <artifactId>jetty-all</artifactId>
        </exclusion>
        <exclusion>
          <groupId>org.apache.hive</groupId>
          <artifactId>hive-shims</artifactId>
        </exclusion>
        <exclusion>
          <groupId>org.apache.parquet</groupId>
          <artifactId>parquet-hadoop-bundle</artifactId>
        </exclusion>
      </exclusions>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-client</artifactId>
      <version>${hadoop.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-yarn-client</artifactId>
      <version>${hadoop.version}</version>
    </dependency>
    <dependency>
      <groupId>com.alibaba</groupId>
      <artifactId>druid</artifactId>
      <version>1.1.8</version>
    </dependency>
    <dependency>
      <groupId>com.oracle</groupId>
      <artifactId>ojdbc6</artifactId>
      <version>${ojdbc6.version}</version>
    </dependency>
    <dependency>
      <groupId>io.springfox</groupId>
      <artifactId>springfox-swagger2</artifactId>
      <version>${swagger.version}</version>
      <exclusions>
        <exclusion>
          <groupId>org.springframework</groupId>
          <artifactId>spring-core</artifactId>
        </exclusion>
        <exclusion>
          <groupId>org.springframework</groupId>
          <artifactId>spring-beans</artifactId>
        </exclusion>
        <exclusion>
          <groupId>org.springframework</groupId>
          <artifactId>spring-context</artifactId>
        </exclusion>
        <exclusion>
          <groupId>org.springframework</groupId>
          <artifactId>spring-context-support</artifactId>
        </exclusion>
        <exclusion>
          <groupId>org.springframework</groupId>
          <artifactId>spring-aop</artifactId>
        </exclusion>
        <exclusion>
          <groupId>org.springframework</groupId>
          <artifactId>spring-tx</artifactId>
        </exclusion>
        <exclusion>
          <groupId>org.springframework</groupId>
          <artifactId>spring-orm</artifactId>
        </exclusion>
        <exclusion>
          <groupId>org.springframework</groupId>
          <artifactId>spring-jdbc</artifactId>
        </exclusion>
        <exclusion>
          <groupId>org.springframework</groupId>
          <artifactId>spring-web</artifactId>
        </exclusion>
        <exclusion>
          <groupId>org.springframework</groupId>
          <artifactId>spring-webmvc</artifactId>
        </exclusion>
        <exclusion>
          <groupId>org.springframework</groupId>
          <artifactId>spring-oxm</artifactId>
        </exclusion>
      </exclusions>
    </dependency>
    <dependency>
      <groupId>io.springfox</groupId>
      <artifactId>springfox-swagger-ui</artifactId>
      <version>${swagger.version}</version>
    </dependency>
    <dependency>
      <groupId>org.scala-lang</groupId>
      <artifactId>scala-library</artifactId>
      <version>${scala.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-core_2.11</artifactId>
      <version>${spark.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-graphx_2.11</artifactId>
      <version>${spark.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-hive_2.11</artifactId>
      <version>${spark.version}</version>
      <exclusions>
        <exclusion>
          <groupId>com.twitter</groupId>
          <artifactId>parquet-hadoop-bundle</artifactId>
        </exclusion>
      </exclusions>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-mesos_2.11</artifactId>
      <version>${spark.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-yarn_2.11</artifactId>
      <version>${spark.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-sql_2.11</artifactId>
      <version>${spark.version}</version>
    </dependency>
    <dependency>
      <groupId>com.google.guava</groupId>
      <artifactId>guava</artifactId>
      <version>24.0-jre</version>
    </dependency>
    <dependency>
      <groupId>com.alibaba</groupId>
      <artifactId>fastjson</artifactId>
      <version>1.2.46</version>
    </dependency>
    <dependency>
      <groupId>mysql</groupId>
      <artifactId>mysql-connector-java</artifactId>
      <version>6.0.6</version>
    </dependency>
    <dependency>
      <groupId>com.hadoop.compression.lzo</groupId>
      <artifactId>LzoCodec</artifactId>
      <version>0.4.20</version>
      <scope>system</scope>
      <systemPath>${basedir}/src/main/resources/lib/hadoop-lzo-0.4.20-SNAPSHOT.jar</systemPath>
    </dependency>
  </dependencies>
  <build>
    <plugins>
      <plugin>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-maven-plugin</artifactId>
      </plugin>
    </plugins>
  </build>
</project>

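3 Hadoop configuration files

The Hadoop configuration files go under the resources folder: create a conf folder there and copy the Hadoop configuration files into it. One thing to note: the parameters that refer to compression classes need to be commented out, otherwise the project throws errors.

Hadoop configuration files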
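As a rough aid for the step of commenting out compression-related parameters, the sketch below lists the properties in a `*-site.xml` file whose names suggest compression settings, so you can review them by hand. It is not part of the original project: the class name `CompressionPropertyFinder` and the rule of matching "compress" or "codec" in the property name are assumptions, and the sample XML in `main` is only an illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper, not part of the original project.
class CompressionPropertyFinder {

    // Returns the names of <property> entries that look compression-related.
    // Matching on "compress" or "codec" is an assumption, not a Hadoop rule.
    static List<String> find(InputStream siteXml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance()
                .newDocumentBuilder()
                .parse(siteXml);
        NodeList props = doc.getElementsByTagName("property");
        List<String> hits = new ArrayList<>();
        for (int i = 0; i < props.getLength(); i++) {
            Element prop = (Element) props.item(i);
            NodeList names = prop.getElementsByTagName("name");
            if (names.getLength() == 0) {
                continue; // property without a <name> child, nothing to check
            }
            String name = names.item(0).getTextContent().trim();
            String lower = name.toLowerCase();
            if (lower.contains("compress") || lower.contains("codec")) {
                hits.add(name);
            }
        }
        return hits;
    }

    public static void main(String[] args) throws Exception {
        // Illustrative sample only; point this at your own conf/*.xml files instead.
        String sample = "<configuration>"
                + "<property><name>fs.defaultFS</name><value>hdfs://sparkdis1:8020</value></property>"
                + "<property><name>io.compression.codecs</name>"
                + "<value>com.hadoop.compression.lzo.LzoCodec</value></property>"
                + "</configuration>";
        InputStream in = new ByteArrayInputStream(sample.getBytes(StandardCharsets.UTF_8));
        System.out.println(find(in)); // prints [io.compression.codecs]
    }
}
```

The helper only reports candidates. Whether a given property actually has to be commented out still depends on which codec classes are on your application's classpath.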

3.1 core-site.xml

<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/app/hadoop/tmp/hadoop</value>
    <description>A base for other temporary directories.</description>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://sparkdis1:8020</value>
  </property>
  <property>
    <name>dfs.client.failover.max.attempts</name>
    <value>15</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hadoop.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hadoop.groups</name>
    <value>*</value>
  </property>
</configuration>

3.2 hdfs-site.xml

<configuration>
  <property>
    <name>dfs.namenode.rpc-address</name>
    <value>sparkdis1:8020</value>
  </property>
  <property>
    <name>dfs.namenode.http-address</name>
    <value>sparkdis1:50070</value>
  </property>
  <property>
    <name>dfs.ha.fencing.methods</name>
    <value>sshfence</value>
  </property>
  <property>
    <name>dfs.ha.fencing.ssh.private-key-files</name>
    <value>/home/hadoop/.ssh/id_rsa</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/hadoop/dfs/name,file:/app/hadoop/dfs/name</value>
  </property>
  <property>
    <name>dfs.namenode.edits.dir</name>
    <value>file:/hadoop/dfs/name,file:/app/hadoop/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/app/hadoop/dfs/data</value>
  </property>
  <property>
    <name>dfs.datanode.handler.count</name>
    <value>100</value>
  </property>
  <property>
    <name>dfs.namenode.handler.count</name>
    <value>1024</value>
  </property>
  <property>
    <name>dfs.datanode.max.xcievers</name>
    <value>8096</value>
  </property>
  <property>
    <name>dfs.client.block.write.replace-datanode-on-failure.enable</name>
    <value>false</value>
  </property>
  <property>
    <name>dfs.client.block.write.replace-datanode-on-failure.policy</name>
    <value>NEVER</value>
  </property>
</configuration>

3.3 hive-site.xml

This file is too large to post here; just remember to copy your own hive-site.xml into the conf folder.

3.4 mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>sparkdis1:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>sparkdis1:19888</value>
  </property>
  <property>
    <name>mapreduce.reduce.shuffle.parallelcopies</name>
    <value>50</value>
  </property>
  <property>
    <name>mapreduce.map.java.opts</name>
    <value>-Xmx4096M</value>
  </property>
  <property>
    <name>mapreduce.reduce.java.opts</name>
    <value>-Xmx8192M</value>
  </property>
  <property>
    <name>mapreduce.map.memory.mb</name>
    <value>4096</value>
  </property>
  <property>
    <name>mapreduce.reduce.memory.mb</name>
    <value>8192</value>
  </property>
  <property>
    <name>mapreduce.map.output.compress</name>
    <value>true</value>
  </property>
  <property>
    <name>mapred.child.env</name>
    <value>JAVA_LIBRARY_PATH=/app/hadoop-2.9.0/lib/native</value>
  </property>
  <property>
    <name>mapreduce.task.io.sort.mb</name>
    <value>512</value>
  </property>
  <property>
    <name>mapreduce.task.io.sort.factor</name>
    <value>100</value>
  </property>
  <property>
    <name>mapred.reduce.tasks</name>
    <value>4</value>
  </property>
  <property>
    <name>mapred.map.tasks</name>
    <value>20</value>
  </property>
  <property>
    <name>mapred.child.java.opts</name>
    <value>-Xmx4096m</value>
  </property>
  <property>
    <name>mapreduce.reduce.shuffle.memory.limit.percent</name>
    <value>0.1</value>
  </property>
  <property>
    <name>mapred.job.shuffle.input.buffer.percent</name>
    <value>0.6</value>
  </property>
</configuration>

3.5 yarn-site.xml

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>sparkdis1:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>sparkdis1:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>sparkdis1:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>sparkdis1:8031</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>sparkdis1:8088</value>
  </property>
  <property>
    <name>yarn.scheduler.minimum-allocation-mb</name>
    <value>1024</value>
  </property>
  <property>
    <name>yarn.scheduler.maximum-allocation-mb</name>
    <value>15360</value>
  </property>
  <property>
    <name>yarn.nodemanager.resource.memory-mb</name>
    <value>122880</value>
  </property>
  <property>
    <name>yarn.nodemanager.local-dirs</name>
    <value>/app/hadoop/hadoop_data/local</value>
  </property>
  <property>
    <name>yarn.nodemanager.log-dirs</name>
    <value>/app/hadoop/hadoop_data/logs</value>
  </property>
  <property>
    <name>yarn.nodemanager.resource.cpu-vcores</name>
    <value>60</value>
  </property>
  <property>
    <name>yarn.scheduler.maximum-allocation-vcores</name>
    <value>10</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-pmem-ratio</name>
    <value>6</value>
  </property>
  <property>
    <name>yarn.nodemanager.pmem-check-enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-check-enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle,spark_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.spark_shuffle.class</name>
    <value>org.apache.spark.network.yarn.YarnShuffleService</value>
  </property>
</configuration>

4 Custom port

application.yml

server:
  port: 9706
  max-http-header-size: 100000
spring:
  application:
    name: service-programdept
  servlet:
    multipart:
      max-file-size: 50000Mb
      max-request-size: 50000Mb
      enabled: true

5 Project code

5.1 Swagger2

I added Swagger2 to the project for testing. It is optional; include it or not as you prefer.

ProgramdeptApplication.java

package com.wisetv.programdept;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.EnableAutoConfiguration;

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.context.annotation.Bean;

import org.springframework.web.cors.CorsConfiguration;

import org.springframework.web.cors.UrlBasedCorsConfigurationSource;

import org.springframework.web.filter.CorsFilter;

import springfox.documentation.swagger2.annotations.EnableSwagger2;

@SpringBootApplication

@EnableSwagger2

@EnableAutoConfiguration

public class ProgramdeptApplication {

public static void main(String[] args) {

SpringApplication.run(ProgramdeptApplication.class, args);

}

@Bean

public CorsFilter corsFilter() {

final UrlBasedCorsConfigurationSource source = new UrlBasedCorsConfigurationSource();

final CorsConfiguration config = new CorsConfiguration();

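config.setAllowCredentials(true); // allow cookies on cross-origin requests

config.addAllowedOrigin("*"); // origins allowed to send requests to this server; * allows all. In Spring MVC, * is automatically converted to the Origin of the current request header

config.addAllowedHeader("*"); // allowed request headers; * allows all

config.setMaxAge(18000L); // cache time for preflight requests, in seconds; identical cross-origin requests are not preflighted again within this period

config.addAllowedMethod("OPTIONS"); // allowed request methods; * would allow all

config.addAllowedMethod("HEAD");

config.addAllowedMethod("GET"); // allow GET requests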

config.addAllowedMethod("PUT");

config.addAllowedMethod("POST");

config.addAllowedMethod("DELETE");

config.addAllowedMethod("PATCH");

source.registerCorsConfiguration("/**", config);

return new CorsFilter(source);

}

}

Swagger2.java

package com.wisetv.programdept;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import springfox.documentation.builders.ApiInfoBuilder;

import springfox.documentation.builders.PathSelectors;

import springfox.documentation.builders.RequestHandlerSelectors;

import springfox.documentation.service.ApiInfo;

import springfox.documentation.spi.DocumentationType;

import springfox.documentation.spring.web.plugins.Docket;

@Configuration

public class Swagger2 {

@Bean

public Docket createRestApi() {

return new Docket(DocumentationType.SWAGGER_2)

.apiInfo(apiInfo())

.select()

.apis(RequestHandlerSelectors.basePackage("com.wisetv.programdept")) // must match the package that contains the controllers

.paths(PathSelectors.any())

.build();

}

private ApiInfo apiInfo() {

return new ApiInfoBuilder()

.title("Spark API documentation")

.description("Simple and elegant RESTful style")

// .termsOfServiceUrl("http://blog.csdn.net/saytime")

.version("1.0")

.build();

}

}

5.2 SparkConfiguration.java

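These are the Spark startup parameters. There are many pitfalls here, so unless you are sure of what you are doing, try not to comment out any of my parameters.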

package com.wisetv.programdept.Spark;

import com.wisetv.programdept.util.WebToolUtils;

import org.apache.hadoop.conf.Configuration;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.JavaSparkContext;

import org.apache.spark.sql.SparkSession;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.context.annotation.Bean;

import org.springframework.stereotype.Service;

import java.net.SocketException;

import java.net.UnknownHostException;

import java.util.Iterator;

import java.util.Map;

@Service

public class SparkConfiguration {

@Bean

public SparkConf getSparkConf() {

SparkConf sparkConf = new SparkConf().setAppName("programdept")

.setMaster("yarn-client")

.set("spark.executor.uri", "hdfs://sparkdis1:8020/spark/binary/spark-sql.tar.gz")

.set("spark.testing.memory", "2147480000")

.set("spark.sql.hive.verifyPartitionPath", "true")

.set("spark.yarn.executor.memoryOverhead", "2048m")

.set("spark.dynamicAllocation.enabled", "true")

.set("spark.shuffle.service.enabled", "true")

.set("spark.dynamicAllocation.executorIdleTimeout", "60")

.set("spark.dynamicAllocation.cachedExecutorIdleTimeout", "18000")

.set("spark.dynamicAllocation.initialExecutors", "3")

.set("spark.dynamicAllocation.maxExecutors", "10")

.set("spark.dynamicAllocation.minExecutors", "3")

.set("spark.dynamicAllocation.schedulerBacklogTimeout", "10")

.set("spark.eventLog.enabled", "true")

.set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")

.set("spark.hadoop.yarn.resourcemanager.hostname", "sparkdis1")

.set("spark.hadoop.yarn.resourcemanager.address", "sparkdis1:8032")

.set("spark.hadoop.fs.defaultFS", "hdfs://sparkdis1:8020")

.set("spark.yarn.access.namenodes", "hdfs://sparkdis1:8020")

.set("spark.history.fs.logDirectory", "hdfs://sparkdis1:8020/spark/historyserverforSpark")

.set("spark.cores.max","10")

.set("spark.mesos.coarse","true")

.set("spark.executor.cores","2")

.set("spark.executor.memory","4g")

.set("spark.eventLog.dir", "hdfs://sparkdis1:8020/spark/eventLog")

.set("spark.sql.parquet.cacheMetadata","false")

.set("spark.sql.hive.verifyPartitionPath", "true")

// Uncomment the line below after packaging. setJars is required: build a jar without bundled dependencies so that Spark can ship it to YARN

// .setJars(new String[]{"/app/springserver/addjars/sparkroute.jar","/app/springserver/addjars/fastjson-1.2.46.jar"})

.set("spark.yarn.stagingDir", "hdfs://sparkdis1/user/hadoop/");

// .set("spark.sql.hive.metastore.version","2.1.0");

String hostname = null;

try {

hostname = WebToolUtils.getLocalIP();

} catch (SocketException e) {

e.printStackTrace();

} catch (UnknownHostException e) {

e.printStackTrace();

}

sparkConf.set("spark.driver.host", hostname);

return sparkConf;

}

@Bean

public SparkSession getSparkSession(@Autowired SparkConf sparkConf, @Autowired Configuration conf) {

SparkSession.Builder config = SparkSession.builder();

Iterator<Map.Entry<String, String>> iterator = conf.iterator();

while (iterator.hasNext()) {

Map.Entry<String, String> next = iterator.next();

config.config(next.getKey(), next.getValue());

}

SparkSession sparkSession = config.config(sparkConf).enableHiveSupport().getOrCreate();

return sparkSession;

}

@Bean

public JavaSparkContext getSparkContext(@Autowired SparkSession sparkSession){

JavaSparkContext javaSparkContext = new JavaSparkContext(sparkSession.sparkContext());

return javaSparkContext;

}

}
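Several of the settings in this class depend on each other. In Spark 2.3, dynamic allocation needs the external shuffle service (which is why yarn-site.xml registers `spark_shuffle`), and the initial executor count should lie between the minimum and the maximum. The following is a minimal sketch of a sanity check over those keys. The class `DynamicAllocationCheck` is hypothetical and works on a plain `Map` rather than a real `SparkConf`, so it runs without Spark on the classpath.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper, not part of the original project.
class DynamicAllocationCheck {

    // Reads an integer setting, falling back to the given default when absent.
    private static int intOf(Map<String, String> conf, String key, int fallback) {
        String value = conf.get(key);
        return value == null ? fallback : Integer.parseInt(value.trim());
    }

    // Returns human-readable problems; an empty list means nothing was found.
    static List<String> problems(Map<String, String> conf) {
        List<String> out = new ArrayList<>();
        if (!"true".equals(conf.get("spark.dynamicAllocation.enabled"))) {
            return out; // dynamic allocation is off, nothing to check
        }
        if (!"true".equals(conf.get("spark.shuffle.service.enabled"))) {
            out.add("spark.shuffle.service.enabled should be true");
        }
        // Defaults mirror Spark's: min 0, max unbounded, initial equal to min.
        int min = intOf(conf, "spark.dynamicAllocation.minExecutors", 0);
        int max = intOf(conf, "spark.dynamicAllocation.maxExecutors", Integer.MAX_VALUE);
        int initial = intOf(conf, "spark.dynamicAllocation.initialExecutors", min);
        if (min > max) {
            out.add("minExecutors is greater than maxExecutors");
        }
        if (initial < min || initial > max) {
            out.add("initialExecutors is outside [minExecutors, maxExecutors]");
        }
        return out;
    }

    public static void main(String[] args) {
        // The values used in SparkConfiguration above.
        Map<String, String> conf = new HashMap<>();
        conf.put("spark.dynamicAllocation.enabled", "true");
        conf.put("spark.shuffle.service.enabled", "true");
        conf.put("spark.dynamicAllocation.initialExecutors", "3");
        conf.put("spark.dynamicAllocation.maxExecutors", "10");
        conf.put("spark.dynamicAllocation.minExecutors", "3");
        System.out.println(problems(conf)); // prints []
    }
}
```

A check like this could run before the SparkSession bean is built, so a bad edit to the parameters fails at startup instead of after the job has been submitted to YARN.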

5.3 HadoopConf.java

Hadoop configuration class

package com.wisetv.programdept.hadoop;

import org.apache.hadoop.conf.Configuration;

import org.springframework.context.annotation.Bean;

@org.springframework.context.annotation.Configuration

public class HadoopConf {

@Bean

public Configuration getHadoopConf() {

Configuration conf = new Configuration();

conf.addResource("conf/core-site.xml");

conf.addResource("conf/hdfs-site.xml");

conf.addResource("conf/hive-site.xml");

conf.addResource("conf/yarn-site.xml");

conf.addResource("conf/mapred-site.xml");

return conf;

}

}

5.4 WebToolUtils.java

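Older versions of Spark used the local machine's IP address, while newer versions use the machine's hostname. There are two ways to handle this: configure your machine's hostname on every Spark server, or do what my code does and set the parameter with sparkConf.set("spark.driver.host", hostname);. The class below is a utility for obtaining the IP address. It may misbehave when the machine has more than one IP; in that case, hard-code the parameter above or work out your own solution.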
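For the multi-IP case, one possible approach (a different technique from the interface scan used in WebToolUtils) is to ask the operating system which local address it would use to reach a cluster host. The sketch below does this by connecting a UDP socket, which only fixes the remote peer and sends no packet. The class name `DriverHostResolver` is hypothetical, and the result can vary by platform and JDK, so treat it as a starting point and verify the address it returns on your own machines.

```java
import java.net.DatagramSocket;
import java.net.InetAddress;

// Hypothetical helper, not part of the original project.
class DriverHostResolver {

    // Returns the local address the OS would route through to reach remoteHost.
    // connect() on a DatagramSocket does not transmit anything; it only binds
    // the socket to a peer, which lets us read back the chosen local address.
    static String localAddressTowards(String remoteHost, int port) throws Exception {
        try (DatagramSocket socket = new DatagramSocket()) {
            socket.connect(InetAddress.getByName(remoteHost), port);
            return socket.getLocalAddress().getHostAddress();
        }
    }

    public static void main(String[] args) throws Exception {
        // Pass a cluster host (for example the ResourceManager) as the first argument;
        // loopback is used here only so the sketch runs anywhere.
        String target = args.length > 0 ? args[0] : "127.0.0.1";
        System.out.println(localAddressTowards(target, 8032));
    }
}
```

The returned string could then be passed to `spark.driver.host` in place of the value from `WebToolUtils.getLocalIP()`.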

package com.wisetv.programdept.util;

import org.apache.http.HttpEntity;

import org.apache.http.NameValuePair;

import org.apache.http.client.entity.UrlEncodedFormEntity;

import org.apache.http.client.methods.CloseableHttpResponse;

import org.apache.http.client.methods.HttpPost;

import org.apache.http.impl.client.CloseableHttpClient;

import org.apache.http.impl.client.HttpClients;

import org.apache.http.message.BasicNameValuePair;

import org.apache.http.util.EntityUtils;

import javax.servlet.http.HttpServletRequest;

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.io.OutputStream;

import java.net.*;

import java.util.ArrayList;

import java.util.Enumeration;

import java.util.List;

/**

* Common utility class

*

* @author 席红蕾

* @date 2016-09-27

* @version 1.0

*/

public class WebToolUtils {

/**

* Get the local IP address

*

* @throws SocketException

*/

public static String getLocalIP() throws UnknownHostException, SocketException {

if (isWindowsOS()) {

return InetAddress.getLocalHost().getHostAddress();

} else {

return getLinuxLocalIp();

}

}

/**

* Determine whether the operating system is Windows

*

* @return

*/

public static boolean isWindowsOS() {

boolean isWindowsOS = false;

String osName = System.getProperty("os.name");

if (osName.toLowerCase().indexOf("windows") > -1) {

isWindowsOS = true;

}

return isWindowsOS;

}

/**

* Get the local host name

*/

public static String getLocalHostName() throws UnknownHostException {

return InetAddress.getLocalHost().getHostName();

}

/**

* Get the IP address on Linux

*

* @return the IP address

* @throws SocketException

*/

private static String getLinuxLocalIp() throws SocketException {

String ip = "";

try {

for (Enumeration<NetworkInterface> en = NetworkInterface.getNetworkInterfaces(); en.hasMoreElements();) {

NetworkInterface intf = en.nextElement();

String name = intf.getName();

if (!name.contains("docker") && !name.contains("lo")) {

for (Enumeration<InetAddress> enumIpAddr = intf.getInetAddresses(); enumIpAddr.hasMoreElements();) {

InetAddress inetAddress = enumIpAddr.nextElement();

if (!inetAddress.isLoopbackAddress()) {

String ipaddress = inetAddress.getHostAddress().toString();

if (!ipaddress.contains("::") && !ipaddress.contains("0:0:") && !ipaddress.contains("fe80")) {

ip = ipaddress;

}

}

}

}

}

} catch (SocketException ex) {

System.out.println("Failed to get the IP address");

ip = "127.0.0.1";

ex.printStackTrace();

}

return ip;

}

/**

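* Get the user's real IP address. request.getRemoteAddr() is not used directly because the user may be behind a proxy that hides the real IP address.

*

* However, when the request has passed through several reverse proxies, X-Forwarded-For holds a list of IP values rather than a single one. Which of them is the real client IP?

* The answer is the first valid IP string in X-Forwarded-For that is not "unknown".

*

* Example: X-Forwarded-For: 192.168.1.110, 192.168.1.120, 192.168.1.130,

* 192.168.1.100

*

* The user's real IP is: 192.168.1.110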

*

* @param request

* @return

*/

public static String getIpAddress(HttpServletRequest request) {

String ip = request.getHeader("x-forwarded-for");

if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {

ip = request.getHeader("Proxy-Client-IP");

}

if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {

ip = request.getHeader("WL-Proxy-Client-IP");

}

if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {

ip = request.getHeader("HTTP_CLIENT_IP");

}

if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {

ip = request.getHeader("HTTP_X_FORWARDED_FOR");

}

if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {

ip = request.getRemoteAddr();

}

return ip;

}

/**

* Send a GET request to the given URL

*

* @param url

* the URL to send the request to

* @param param

* request parameters, in the form name1=value1&name2=value2

* @return the response of the remote resource the URL points to

*/

// public static String sendGet(String url, String param) {

// String result = "";

// BufferedReader in = null;

// try {

// String urlNameString = url + "?" + param;

// URL realUrl = new URL(urlNameString);

// // open a connection to the URL

// URLConnection connection = realUrl.openConnection();

// // set the common request properties

// connection.setRequestProperty("accept", "*/*");

// connection.setRequestProperty("connection", "Keep-Alive");

// connection.setRequestProperty("user-agent", "Mozilla/4.0 (compatible;

// MSIE 6.0; Windows NT 5.1;SV1)");

// // establish the actual connection

// connection.connect();

// // get all response header fields

// Map<String, List<String>> map = connection.getHeaderFields();

// // iterate over all response header fields

// for (String key : map.keySet()) {

// System.out.println(key + "--->" + map.get(key));

// }

// // define a BufferedReader input stream to read the URL's response

// in = new BufferedReader(new

// InputStreamReader(connection.getInputStream()));

// String line;

// while ((line = in.readLine()) != null) {

// result += line;

// }

// } catch (Exception e) {

// System.out.println("Exception while sending the GET request! " + e);

// e.printStackTrace();

// }

// // close the input stream in a finally block

// finally {

// try {

// if (in != null) {

// in.close();

// }

// } catch (Exception e2) {

// e2.printStackTrace();

// }

// }

// return result;

// }

/**

* Send a POST request to the given URL

*

* @param pathUrl the URL to send the request to

* @param name value of the "account" request header

* @param pwd value of the "passwd" request header

* @param phone value of the "phone" request header

* @param content value of the "content" request header

*/

public static void sendPost(String pathUrl, String name, String pwd, String phone, String content) {

// PrintWriter out = null;

// BufferedReader in = null;

// String result = "";

// try {

// URL realUrl = new URL(url);

// // open a connection to the URL

// URLConnection conn = realUrl.openConnection();

// // set the common request properties

// conn.setRequestProperty("accept", "*/*");

// conn.setRequestProperty("connection", "Keep-Alive");

// conn.setRequestProperty("user-agent",

// "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1;SV1)");

// // the following two lines are required to send a POST request

// conn.setDoOutput(true);

// conn.setDoInput(true);

// // get the output stream of the URLConnection

// out = new PrintWriter(conn.getOutputStream());

// // send the request parameters

// out.print(param);

// // flush the output stream buffer

// out.flush();

// // define a BufferedReader input stream to read the URL's response

// in = new BufferedReader(

// new InputStreamReader(conn.getInputStream()));

// String line;

// while ((line = in.readLine()) != null) {

// result += line;

// }

// } catch (Exception e) {

// System.out.println("Exception while sending the POST request! "+e);

// e.printStackTrace();

// }

// // close the output and input streams in a finally block

// finally{

// try{

// if(out!=null){

// out.close();

// }

// if(in!=null){

// in.close();

// }

// }

// catch(IOException ex){

// ex.printStackTrace();

// }

// }

// return result;

try {

// open the connection

URL url = new URL(pathUrl);

HttpURLConnection httpConn = (HttpURLConnection) url.openConnection();

// set the connection properties

httpConn.setDoOutput(true);// use the URL connection for output

httpConn.setDoInput(true);// use the URL connection for input

httpConn.setUseCaches(false);// ignore caches

httpConn.setRequestMethod("POST");// set the request method

String requestString = "Data the client sends to the server as a stream...";

// set the request properties

// get the request bytes; the request encoding must match the encoding the server uses to read the request stream

byte[] requestStringBytes = requestString.getBytes("utf-8");

httpConn.setRequestProperty("Content-length", "" + requestStringBytes.length);

httpConn.setRequestProperty("Content-Type", " application/x-www-form-urlencoded");

httpConn.setRequestProperty("Connection", "Keep-Alive");// keep the connection alive

httpConn.setRequestProperty("Accept", "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8");

httpConn.setRequestProperty("Accept-Encoding", "gzip, deflate");

httpConn.setRequestProperty("Accept-Language", "zh-CN,zh;q=0.8,en-US;q=0.5,en;q=0.3");

httpConn.setRequestProperty("User-Agent", "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:49.0) Gecko/20100101 Firefox/49.0");

httpConn.setRequestProperty("Upgrade-Insecure-Requests", "1");

httpConn.setRequestProperty("account", name);

httpConn.setRequestProperty("passwd", pwd);

httpConn.setRequestProperty("phone", phone);

httpConn.setRequestProperty("content", content);

// open the output stream and write the data

OutputStream outputStream = httpConn.getOutputStream();

outputStream.write(requestStringBytes);

outputStream.close();

// get the response status

int responseCode = httpConn.getResponseCode();

if (HttpURLConnection.HTTP_OK == responseCode) {// connection succeeded

// process the data when the response is OK

StringBuffer sb = new StringBuffer();

String readLine;

BufferedReader responseReader;

// read the response stream; the encoding must match the encoding of the server's response stream

responseReader = new BufferedReader(new InputStreamReader(httpConn.getInputStream(), "utf-8"));

while ((readLine = responseReader.readLine()) != null) {

sb.append(readLine).append("\n");

}

responseReader.close();

}

} catch (Exception ex) {

ex.printStackTrace();

}

}

/**

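* Execute an HTTP POST request and print the response body

*

* @param url the URL to request

* @param name value of the "account" form field

* @param pwd value of the "passwd" form field

* @param phone value of the "phone" form field

* @param content value of the "content" form field

*/

public static void doPost(String url, String name, String pwd, String phone, String content) {

// create the default httpClient instance

CloseableHttpClient httpclient = HttpClients.createDefault();

// create the HttpPost

HttpPost httppost = new HttpPost(url);

// create the list of form parameters

List<NameValuePair> formparams = new ArrayList<>();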

formparams.add(new BasicNameValuePair("account", name));

formparams.add(new BasicNameValuePair("passwd", pwd));

formparams.add(new BasicNameValuePair("phone", phone));

formparams.add(new BasicNameValuePair("content", content));

UrlEncodedFormEntity uefEntity;

try {

uefEntity = new UrlEncodedFormEntity(formparams, "UTF-8");

httppost.setEntity(uefEntity);

System.out.println("executing request " + httppost.getURI());

CloseableHttpResponse response = httpclient.execute(httppost);

try {

HttpEntity entity = response.getEntity();

if (entity != null) {

System.out.println("--------------------------------------");

System.out.println("Response content: " + EntityUtils.toString(entity, "UTF-8"));

System.out.println("--------------------------------------");

}

} finally {

response.close();

}

} catch (Exception e) {

e.printStackTrace();

} finally {

// close the connection and release resources

try {

httpclient.close();

} catch (IOException e) {

e.printStackTrace();

}

}

}

}

5.5 DeptController.java

Controller class

package com.wisetv.programdept.controller;

import com.google.gson.Gson;

import io.swagger.annotations.Api;

import io.swagger.annotations.ApiImplicitParam;

import io.swagger.annotations.ApiImplicitParams;

import io.swagger.annotations.ApiOperation;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

import org.apache.spark.sql.SparkSession;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RequestMethod;

import org.springframework.web.bind.annotation.ResponseBody;

import org.springframework.web.bind.annotation.RestController;

import java.util.List;

import java.util.stream.Collectors;

@RestController

@ResponseBody

@Api(value = "API controller class")

public class DeptController {

@Autowired

SparkSession sparkSession;

Gson gson = new Gson();

@ApiOperation(value = "Test endpoint", notes = "Test endpoint")

@RequestMapping(value = {"/test"}, method = {RequestMethod.GET, RequestMethod.POST})

public String test(){

Dataset<Row> sql = sparkSession.sql("select data from mobile.noreplace limit 1");

List

II Testing

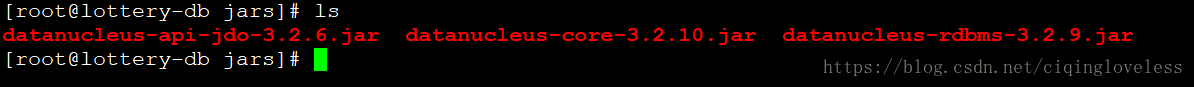

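III Deployment

Tuning the parameters above involves plenty of pitfalls, but deploying the jar involves even more. Here is the java command I use for deployment.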

#!/bin/sh

nohup java -cp sparkroute-0.0.1-SNAPSHOT.jar:jars/* org.springframework.boot.loader.JarLauncher > sparkroute.log &
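Once the application is packaged, the commented-out `setJars` line in SparkConfiguration has to be enabled with the paths of the jars that Spark should ship to YARN. Rather than hard-coding each path, a sketch like the following could collect them from one directory. The class `JarCollector` is hypothetical, and it assumes the directory (for example the author's `/app/springserver/addjars`) contains only the jars you actually want shipped.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.stream.Stream;

// Hypothetical helper, not part of the original project.
class JarCollector {

    // Returns the absolute paths of all *.jar files directly inside dir, sorted by path.
    static String[] jarsIn(Path dir) throws IOException {
        try (Stream<Path> files = Files.list(dir)) {
            return files
                    .filter(p -> p.getFileName().toString().endsWith(".jar"))
                    .map(p -> p.toAbsolutePath().toString())
                    .sorted()
                    .toArray(String[]::new);
        }
    }

    public static void main(String[] args) throws IOException {
        // Directory to scan; defaults to ./addjars when no argument is given.
        Path dir = Paths.get(args.length > 0 ? args[0] : "addjars");
        if (!Files.isDirectory(dir)) {
            System.out.println("Not a directory: " + dir);
            return;
        }
        for (String jar : jarsIn(dir)) {
            System.out.println(jar);
        }
    }
}
```

The resulting array has the shape `setJars` expects, so it could be passed as `sparkConf.setJars(JarCollector.jarsIn(dir))` after handling the `IOException`.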